Overall plan

| Host | IP | Port | Description |
|---|---|---|---|
| - | 192.168.100.30 | 10088 | Keepalived VIP |
| Proxysql-21 | 192.168.100.21 | 6032, 6033 | ProxySQL proxy |
| Proxysql-22 | 192.168.100.22 | 6032, 6033 | ProxySQL proxy |
| mysql-master | 192.168.100.11 | 3306, 33060 (MGR port) | MySQL master node |
| mysql-slave1 | 192.168.100.12 | 3306, 33060 (MGR port) | MySQL slave node |
| mysql-slave2 | 192.168.100.13 | 3306, 33060 (MGR port) | MySQL slave node |

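Basic workflow

Configure MGR

Step1 Write the configuration files for the 3 MySQL containers

Step2 Create the 3 MySQL containers

Step3 Configure the master node

Step4 Configure the slave nodes

Configure the ProxySQL cluster

Step5 Configure the node accounts

Step6 Install ProxySQL

Step7 Configure the second ProxySQL

Configure keepalived

Step8 Configure passwordless SSH between the two ProxySQL containers

Step9 Configure keepalived on 100.21

Step10 Configure keepalived on 100.22

Configure port forwarding on the Docker host

Step11 Configure port forwarding on the Docker host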

Configure MGR

Step1 Write the configuration files for the 3 MySQL containers

#mkdir -p /opt/mysql/{master,slave1,slave2}/{conf,logs,data}

my.cnf for the master:

#vi /opt/mysql/master/conf/my.cnf

[client]

default-character-set=utf8

[mysql]

default-character-set=utf8

[mysqld]

skip-name-resolve

character-set-server=utf8

collation-server=utf8_general_ci

log_bin=binlog

binlog_format=row

relay-log=bogn-relay-bin

innodb_buffer_pool_size=2048M

innodb_log_file_size=128M

query_cache_size=64M

max_connections=128

max_allowed_packet = 50M

log_timestamps=SYSTEM

symbolic-links=0

server-id=1

pid-file=/var/run/mysqld/mysqld.pid

#for use gtid

gtid_mode=on

enforce_gtid_consistency=on

log_slave_updates=on

#add for group replication

master_info_repository=table

relay_log_info_repository=table

binlog_checksum=none

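transaction_write_set_extraction=XXHASH64 # hashes the primary keys of the rows a transaction touches to identify it uniquely; must be enabled

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa" # uniquely identifies the group

loose-group_replication_allow_local_disjoint_gtids_join=on

# Start group replication automatically on restart. This can be off for the first initialization; otherwise run stop group_replication before doing the initial setup.

loose-group_replication_start_on_boot=on

loose-group_replication_unreachable_majority_timeout=10 # timeout (in seconds) once the majority becomes unreachable

loose-group_replication_local_address="192.168.100.11:33060" # address used for communication within the group

# seed (donor) addresses

loose-group_replication_group_seeds="192.168.100.11:33060,192.168.100.12:33060,192.168.100.13:33060"

loose-group_replication_bootstrap_group=off # whether this node bootstraps the group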

[mysqld_safe]

default-character-set=utf8

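my.cnf for slave1:

#vi /opt/mysql/slave1/conf/my.cnf (only the following two lines differ from the master's file; everything else is identical):

server-id=2

loose-group_replication_local_address="192.168.100.12:33060"

my.cnf for slave2:

#vi /opt/mysql/slave2/conf/my.cnf (only the following two lines differ from the master's file; everything else is identical):

server-id=3

loose-group_replication_local_address="192.168.100.13:33060"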
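Since the three files differ only in those two lines, the slave files can be derived from the master's copy instead of being edited by hand. The following is a minimal sketch, not part of the original procedure; `make_node_cnf` is a hypothetical helper name, and it assumes the master's file contains `server-id=` and `loose-group_replication_local_address=` at the start of a line, as shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive a node's my.cnf from the master's copy.
# Only server-id and group_replication_local_address are rewritten;
# any trailing comment on the local_address line is dropped.
#   $1 = source file, $2 = destination file, $3 = server-id, $4 = node IP
make_node_cnf() {
  local src="$1" dst="$2" server_id="$3" ip="$4"
  mkdir -p "$(dirname "$dst")"
  sed -E \
    -e "s/^server-id=.*/server-id=${server_id}/" \
    -e "s/^(loose-group_replication_local_address=).*/\1\"${ip}:33060\"/" \
    "$src" > "$dst"
}

# Example calls (paths as used in this article):
#   make_node_cnf /opt/mysql/master/conf/my.cnf /opt/mysql/slave1/conf/my.cnf 2 192.168.100.12
#   make_node_cnf /opt/mysql/master/conf/my.cnf /opt/mysql/slave2/conf/my.cnf 3 192.168.100.13
```

It is still worth opening the generated files once to confirm that nothing else was changed.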

Step2 Create the 3 MySQL containers

Before creating the containers, create a Docker network first:

docker network create --subnet=192.168.100.0/24 mysqlnet

Then create the 3 MySQL containers with docker:

docker run -d --restart=always -p 10001:3306 -p 10011:33060 --name master --hostname=mysql-master --net=mysqlnet --ip=192.168.100.11 --add-host mysql-master:192.168.100.11 --add-host mysql-slave1:192.168.100.12 --add-host mysql-slave2:192.168.100.13 -v /opt/mysql/master/conf/my.cnf:/etc/mysql/conf.d/my.cnf -v /opt/mysql/master/logs:/var/log/mysql -v /opt/mysql/master/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 mysql:5.7.23

docker run -d --restart=always -p 10002:3306 -p 10012:33060 --name slave1 --hostname=mysql-slave1 --net=mysqlnet --ip=192.168.100.12 --add-host mysql-master:192.168.100.11 --add-host mysql-slave1:192.168.100.12 --add-host mysql-slave2:192.168.100.13 -v /opt/mysql/slave1/conf/my.cnf:/etc/mysql/conf.d/my.cnf -v /opt/mysql/slave1/logs:/var/log/mysql -v /opt/mysql/slave1/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 mysql:5.7.23

docker run -d --restart=always -p 10003:3306 -p 10013:33060 --name slave2 --hostname=mysql-slave2 --net=mysqlnet --ip=192.168.100.13 --add-host mysql-master:192.168.100.11 --add-host mysql-slave1:192.168.100.12 --add-host mysql-slave2:192.168.100.13 -v /opt/mysql/slave2/conf/my.cnf:/etc/mysql/conf.d/my.cnf -v /opt/mysql/slave2/logs:/var/log/mysql -v /opt/mysql/slave2/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 mysql:5.7.23
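The three commands differ only in the container name and in one digit of the IP address and host ports, so they can be generated from a single template. The sketch below is illustrative only: `mgr_run_cmd` is a hypothetical name, and the function merely prints each command so it can be reviewed before being run.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original post): print, but do not run,
# the docker run command for one MGR node.
#   $1 = container name (master, slave1, slave2)
#   $2 = node index 1..3 -> IP 192.168.100.1N, host ports 1000N and 1001N
mgr_run_cmd() {
  local name="$1" idx="$2" dir="/opt/mysql/$1"
  printf '%s ' docker run -d --restart=always \
    -p "1000${idx}:3306" -p "1001${idx}:33060" \
    --name "$name" "--hostname=mysql-${name}" \
    --net=mysqlnet "--ip=192.168.100.1${idx}" \
    --add-host mysql-master:192.168.100.11 \
    --add-host mysql-slave1:192.168.100.12 \
    --add-host mysql-slave2:192.168.100.13 \
    -v "${dir}/conf/my.cnf:/etc/mysql/conf.d/my.cnf" \
    -v "${dir}/logs:/var/log/mysql" \
    -v "${dir}/data:/var/lib/mysql" \
    -e MYSQL_ROOT_PASSWORD=123456 mysql:5.7.23
  echo
}

# Print the three commands for review.
mgr_run_cmd master 1
mgr_run_cmd slave1 2
mgr_run_cmd slave2 3
```

Once the output looks right, a single command can be executed with, for example, `mgr_run_cmd master 1 | sh`.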

Step3 Configure the master node

#docker exec -it master /bin/bash

First: create the user used for replication

root@mysql-master:/# mysql -uroot -p123456

mysql> set sql_log_bin=0;

mysql> grant replication slave,replication client on *.* to 'mgruser'@'%' identified by '123456';

mysql> grant replication slave,replication client on *.* to 'mgruser'@'127.0.0.1' identified by '123456';

mysql> grant replication slave,replication client on *.* to 'mgruser'@'localhost' identified by '123456';

mysql> set sql_log_bin=1;

mysql> flush privileges;

Second: configure the user that replication will use

mysql> CHANGE MASTER TO MASTER_USER='mgruser', MASTER_PASSWORD='123456' FOR CHANNEL 'group_replication_recovery';

Third: install the MySQL Group Replication plugin

mysql> install plugin group_replication soname 'group_replication.so';

Fourth: bootstrap the replication group

mysql> set global group_replication_bootstrap_group=on;

mysql> start group_replication;

mysql> set global group_replication_bootstrap_group=off;

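After a node is started for the first time, the group_replication plugin has to be installed and replication configured as the initial setup. Every node must perform the first three steps above.

Once that is done, the bootstrap parameter must be set when group_replication is started on the first node of the cluster. The other nodes simply run START GROUP_REPLICATION;.

After the whole cluster has been configured, a node that goes down only needs to be restarted with the normal MySQL start command. No manual reconfiguration is required; the node rejoins the replication group automatically after it restarts.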
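The difference between the first node and the remaining nodes can be captured in a few lines. This is only a sketch under the assumptions of this article; `mgr_start_sql` is a hypothetical helper that prints the SQL for a given role rather than executing it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SQL needed to start group replication.
#   $1 = "bootstrap" for the first node of the group, anything else to join
mgr_start_sql() {
  local role="$1"
  if [ "$role" = "bootstrap" ]; then
    # Only the very first node may bootstrap the group.
    echo "SET GLOBAL group_replication_bootstrap_group=ON;"
  fi
  echo "START GROUP_REPLICATION;"
  if [ "$role" = "bootstrap" ]; then
    # Switch bootstrapping off again so a restart cannot create a second group.
    echo "SET GLOBAL group_replication_bootstrap_group=OFF;"
  fi
}

# Example (container names and password as used in this article):
#   mgr_start_sql bootstrap | docker exec -i master mysql -uroot -p123456
#   mgr_start_sql join      | docker exec -i slave1 mysql -uroot -p123456
```

Printing the statements first makes it harder to bootstrap a second group on a joining node by accident.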

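Step4 Configure the slave nodes

The first three steps are the same as on the master above; the last step only needs start group_replication;

Once it succeeds, check the current group members:

mysql> SELECT * FROM performance_schema.replication_group_members;

At this point the one-master, two-slave replication setup is complete. Next, test whether a slave takes over when the master is shut down.

On a slave, check which node is currently the primary:

mysql> show global status like 'group_replication_primary_member';

Shut down the primary:

#docker stop master

On a slave, check again which node is the primary:

mysql> show global status like 'group_replication_primary_member';

The primary is now slave1.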

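Bring the original primary back into the cluster:

#docker start master

The group membership now shows three nodes again, and the primary is still slave1.

If the original primary has not rejoined the cluster, try running start group_replication; on it.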

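If the nodes have not joined the replication group after the Docker service or the host has been restarted, first run the following on the primary:

mysql> set global group_replication_bootstrap_group=on;

mysql> start group_replication;

mysql> set global group_replication_bootstrap_group=off;

Then run the following on the slaves: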

mysql> start group_replication;

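Configure the ProxySQL cluster

Earlier ProxySQL versions needed a third-party script to health-check group replication and adjust the configuration automatically in order to support MySQL Group Replication (MGR). Starting with ProxySQL v1.4.0, proxying MGR is supported natively, and the main database provides the mysql_group_replication_hostgroups table to control the read and write groups of a group replication cluster.

Although MGR is supported natively, an additional system view, sys.gr_member_routing_candidate_status, still has to be created on the MGR nodes to provide monitoring metrics to ProxySQL. The script that creates this view can be found on github.com.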

Step5 Configure the node accounts

On the backend databases, run show global status like 'group_replication_primary_member'; to confirm which node is the writer, then create the application account and the monitoring account on that node (the original writer, master, was stopped earlier, so the writer is now slave1):

First, create the function and view that check MGR node status on the writer node:

#cd /opt/mysql/slave1/data

#vi addition_to_sys.sql (paste in the SQL from https://github.com/lefred/mysql_gr_routing_check/blob/master/addition_to_sys.sql)

#docker exec -it slave1 /bin/bash

root@mysql-slave1:/# mysql -uroot -p < /var/lib/mysql/addition_to_sys.sql

Second, create the accounts on the writer node:

root@mysql-slave1:/# mysql -uroot -p

mysql> grant select on *.* to 'monitor'@'%' identified by 'monitor';

mysql> grant all privileges on *.* to 'proxysql'@'%' identified by 'proxysql';

mysql> flush privileges;

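Step6 Install ProxySQL

I could not find an official ProxySQL image, so a centos image is used here and ProxySQL is installed inside it.

First, create the containers from the centos image

#mkdir -p /opt/{proxysql-1,proxysql-2}/home (used to hold the keepalived check script)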

docker run -d --restart=always --privileged --name proxysql-21 --hostname=proxysql-21 --net=mysqlnet --ip=192.168.100.21 --add-host mysql-proxy-22:192.168.100.22 -v /opt/proxysql-1/home:/home centos /usr/sbin/init

docker run -d --restart=always --privileged --name proxysql-22 --hostname=proxysql-22 --net=mysqlnet --ip=192.168.100.22 --add-host mysql-proxy-21:192.168.100.21 -v /opt/proxysql-2/home:/home centos /usr/sbin/init

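No ports are published here, because the port to expose is 6033 on the VIP 192.168.100.30, which cannot be bound to either ProxySQL container; it has to be added to iptables separately.

Second, install the MySQL client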

#docker exec -it proxysql-21 /bin/bash

[root@proxysql-21 /]# yum install -y https://repo.mysql.com/mysql57-community-release-el7.rpm

[root@proxysql-21 /]# yum install mysql -y

Third, install ProxySQL

Install it following the documentation at http://repo.proxysql.com/

[root@proxysql-21 /]# vi /etc/yum.repos.d/proxysql.repo

[proxysql_repo]

name= ProxySQL YUM repository

baseurl=http://repo.proxysql.com/ProxySQL/proxysql-1.4.x/centos/$releasever

gpgcheck=1

gpgkey=http://repo.proxysql.com/ProxySQL/repo_pub_key

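Install:

[root@proxysql-21 /]# yum install -y proxysql

Do not start proxysql yet. Writing the cluster-related settings into /etc/proxysql.cnf is more convenient than inserting them through the admin database.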

[root@proxysql-21 /]# vi /etc/proxysql.cnf

datadir="/var/lib/proxysql"

admin_variables=

{

admin_credentials="admin:admin;cluster:cluster"

mysql_ifaces="0.0.0.0:6032"

cluster_username="cluster"

cluster_password="cluster"

cluster_check_interval_ms=200

cluster_check_status_frequency=100

cluster_mysql_query_rules_save_to_disk=true

cluster_mysql_servers_save_to_disk=true

cluster_mysql_users_save_to_disk=true

cluster_proxysql_servers_save_to_disk=true

cluster_mysql_query_rules_diffs_before_sync=3

cluster_mysql_servers_diffs_before_sync=3

cluster_mysql_users_diffs_before_sync=3

cluster_proxysql_servers_diffs_before_sync=3

}

mysql_variables=

{

threads=4

max_connections=2048

default_query_delay=0

default_query_timeout=36000000

have_compress=true

poll_timeout=2000

interfaces="0.0.0.0:6033"

default_schema="information_schema"

stacksize=1048576

server_version="5.5.30"

connect_timeout_server=3000

monitor_username="monitor"

monitor_password="monitor"

monitor_history=600000

monitor_connect_interval=60000

monitor_ping_interval=10000

monitor_read_only_interval=1500

monitor_read_only_timeout=500

ping_interval_server_msec=120000

ping_timeout_server=500

commands_stats=true

sessions_sort=true

connect_retries_on_failure=10

}

proxysql_servers =

(

{

hostname="192.168.100.21"

port=6032

comment="primary"

},

{

hostname="192.168.100.22"

port=6032

comment="secondary"

}

)

mysql_servers =

(

{

address = "192.168.100.11" # no default, required .

port = 3306 # no default, required .

hostgroup = 1 # no default, required

status = "ONLINE" # default: ONLINE

weight = 1 # default: 1

compression = 0 # default: 0

max_replication_lag = 10 # default 0 .

},

{

address = "192.168.100.12" # no default, required .

port = 3306 # no default, required .

hostgroup = 3 # no default, required

status = "ONLINE" # default: ONLINE

weight = 1 # default: 1

compression = 0 # default: 0

max_replication_lag = 10 # default 0 .

},

{

address = "192.168.100.13" # no default, required .

port = 3306 # no default, required .

hostgroup = 3 # no default, required

status = "ONLINE" # default: ONLINE

weight = 1 # default: 1

compression = 0 # default: 0

max_replication_lag = 10 # default 0 .

}

)

mysql_users:

(

{

username = "proxysql" # no default , required

password = "proxysql" # default: ''

default_hostgroup = 1 # default: 0

active = 1 # default: 1

}

)

#defines MySQL Query Rules

mysql_query_rules:

(

{

rule_id=1

active=1

match_pattern="^SELECT .* FOR UPDATE$"

destination_hostgroup=1

apply=1

},

{

rule_id=2

active=1

match_pattern="^SELECT"

destination_hostgroup=3

apply=1

}

)

scheduler=()

mysql_replication_hostgroups=()
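To make the effect of the two query rules in the configuration above easier to see, here is a rough bash approximation of the routing decision. It is a sketch, not ProxySQL's actual implementation: `route_query` is a hypothetical function, it assumes that locking reads are meant to go to the writer hostgroup 1 and plain reads to the reader hostgroup 3, and it assumes case-insensitive matching, which I believe is ProxySQL's default for query rules.

```shell
#!/usr/bin/env bash
# Hypothetical illustration of the two mysql_query_rules entries.
# Prints the hostgroup a query would be sent to:
#   1 = writer hostgroup (also the user's default_hostgroup), 3 = reader hostgroup
# The body runs in a subshell so that nocasematch does not leak to the caller.
route_query() (
  shopt -s nocasematch
  query="$1"
  re_locking_read='^SELECT .* FOR UPDATE$'   # rule_id=1
  re_plain_read='^SELECT'                    # rule_id=2
  if [[ $query =~ $re_locking_read ]]; then
    echo 1
  elif [[ $query =~ $re_plain_read ]]; then
    echo 3
  else
    echo 1   # no rule matched: fall back to default_hostgroup
  fi
)

route_query 'SELECT * FROM t WHERE id = 1'             # reader hostgroup
route_query 'SELECT * FROM t WHERE id = 1 FOR UPDATE'  # writer hostgroup
route_query 'UPDATE t SET c = 2 WHERE id = 1'          # writer hostgroup
```

Rule order matters: the stricter FOR UPDATE pattern has the lower rule_id, so it is evaluated before the general SELECT pattern.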

Start proxysql:

[root@proxysql-21 /]# systemctl start proxysql

Add the MySQL Group Replication hostgroup information (adding it in /etc/proxysql.cnf causes a syntax error):

#mysql -uadmin -padmin -h127.0.0.1 -P6032

insert into mysql_group_replication_hostgroups (writer_hostgroup,backup_writer_hostgroup,reader_hostgroup, offline_hostgroup,active,max_writers,writer_is_also_reader,max_transactions_behind) values (1,2,3,4,1,1,0,100);

load mysql servers to runtime;

save mysql servers to disk;

至此,ProxySQL-21配置完成,可以在某个客户端使用:

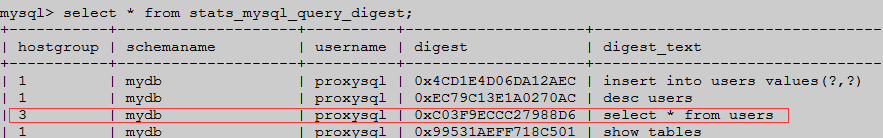

mysql -uproxysql -pproxysql -h192.168.100.21 -P6033 进入数据库,更新一条记录,然后查看读写是否分离:

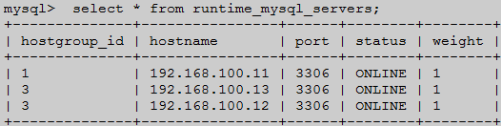

Check how the backend databases are grouped at runtime:

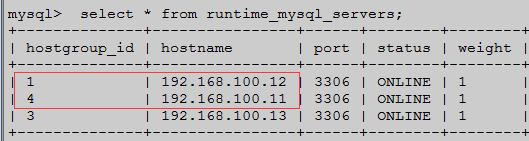

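Stop the writer container, then check the runtime grouping of the backend databases again:

As shown, the original writer has been moved to the offline hostgroup with hostgroup_id 4, and the reader 192.168.100.12 has been promoted to writer. Connect again with mysql -uproxysql -pproxysql -h192.168.100.21 -P6033, update a record, and check that read/write splitting still works correctly.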

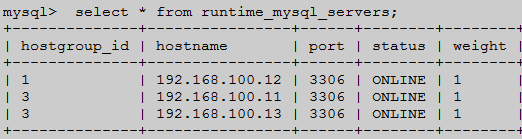

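Start the original writer container, then check the runtime grouping of the backend databases again:

As shown, the original writer 192.168.100.11 has rejoined the cluster, but in the reader group; it does not take the writer role back.

Step7 Configure the second ProxySQL

Configure ProxySQL-22 in the same way, then run queries and updates to check that read/write splitting is correct. Once both ProxySQL instances are configured, the next step is to turn them into a Keepalived cluster.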

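Configure keepalived

Check whether the ip_vs and xt_set modules are loaded on the Docker host. If they are not, load them; otherwise keepalived fails to start inside the container with the following errors:

IPVS: Can't initialize ipvs: Protocol not available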

Unable to load module xt_set - not using ipsets

#lsmod | grep ip_vs

#lsmod | grep xt_set

If these commands print nothing, do the following:

#vi /etc/sysconfig/modules/ip_vs.modules

#!/bin/sh

/sbin/modinfo -F filename ip_vs > /dev/null 2>&1

if [ $? -eq 0 ]; then

/sbin/modprobe ip_vs

fi

#chmod 755 /etc/sysconfig/modules/ip_vs.modules

#vi /etc/sysconfig/modules/xt_set.modules

#!/bin/sh

/sbin/modinfo -F filename xt_set > /dev/null 2>&1

if [ $? -eq 0 ]; then

/sbin/modprobe xt_set

fi

#chmod 755 /etc/sysconfig/modules/xt_set.modules

#reboot

Verify:

#lsmod | grep ip_vs

#lsmod | grep xt_set

On RHEL6/CentOS6, use echo "modprobe ip_vs" >> /etc/rc.sysinit instead.

In addition, the following setting is needed: # echo 'net.ipv4.ip_nonlocal_bind=1' >> /etc/sysctl.conf

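Step8 Configure passwordless SSH between the two ProxySQL containers

The check script uses ssh to connect to the peer and determine whether the peer's keepalived service is active, so the SSH service and passwordless login have to be set up.

First: enable the SSH service

#docker exec -it proxysql-21 /bin/bash

[root@proxysql-21 /]# mkdir /var/run/sshd/

[root@proxysql-21 /]# yum install -y openssh-server openssh-clients passwd

[root@proxysql-21 /]# echo "123456" | passwd --stdin root

Next, create the host key pairs. After entering each command, just press Enter twice to confirm.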

[root@proxysql-21 /]# ssh-keygen -t rsa -f /etc/ssh/ssh_host_rsa_key

[root@proxysql-21 /]# ssh-keygen -t ecdsa -f /etc/ssh/ssh_host_ecdsa_key

[root@proxysql-21 /]# ssh-keygen -t ed25519 -f /etc/ssh/ssh_host_ed25519_key

[root@proxysql-21 /]# sed -i "s/UsePAM yes/UsePAM no/g" /etc/ssh/sshd_config

[root@proxysql-21 /]# systemctl start sshd

#docker exec -it proxysql-22 /bin/bash

The configuration is exactly the same as on proxysql-21.

Second, configure key-based (passwordless) authentication

proxysql-21:

[root@proxysql-21 /]# ssh-keygen

[root@proxysql-21 /]# ssh-copy-id 192.168.100.22

root@192.168.100.22's password: 123456

proxysql-22:

[root@proxysql-22 /]# ssh-keygen

[root@proxysql-22 /]# ssh-copy-id 192.168.100.21

root@192.168.100.21's password: 123456

Step9 Configure keepalived on 100.21

[root@proxysql-21 /]# yum install -y iproute keepalived

[root@proxysql-21 /]# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL_21

# vrrp_strict   # keep this commented out, otherwise the VIP cannot be pinged

}

vrrp_script chk_proxysql

{ # this { must be on a line of its own

script "/home/check_proxysql.sh"

interval 1

}

vrrp_instance proxysql {

state BACKUP

interface eth0

virtual_router_id 55

priority 100

advert_int 1

nopreempt # non-preemptive; used together with state BACKUP

authentication {

auth_type PASS

auth_pass XXXX

}

track_script {

chk_proxysql

}

virtual_ipaddress {

192.168.100.30/24

}

}

The content of /home/check_proxysql.sh is as follows:

#!/bin/sh

vip='192.168.100.30'

ip a | grep $vip

if [ $? -ne 0 ];then

exit 0

fi

peer_ip='192.168.100.22'

peer_port=22

proxysql='proxysql-21'

log=/home/keepalived.log

alias date='date +"%y-%m-%d_%H:%M:%S"'

#echo "`date` enter script." >> $log

#check if this keepalived is MASTER

#echo "`date` after check keepalived master script." >> $log

#check if data port(6033) is alive

data_port_stats=$(timeout 2 bash -c 'cat < /dev/null > /dev/tcp/0.0.0.0/6033' &> /dev/null;echo $?)

if [ $data_port_stats -eq 0 ];then

exit 0

else

#check if the other keepalived is running

peer_keepalived=$(ssh -p$peer_port $peer_ip 'systemctl is-active keepalived.service')

if [ "$peer_keepalived" != "active" ];then

echo "`date` data port:6033 of $proxysql is not available, but the BACKUP keepalived is not running, so can't do the failover" >> $log

else

echo "`date` data port:6033 of $proxysql is not available, now SHUTDOWN keepalived." >> $log

systemctl stop keepalived.service

fi

fi

[root@proxysql-21 /]# chmod +x /home/check_proxysql.sh

[root@proxysql-21 /]# systemctl enable keepalived

[root@proxysql-21 /]# systemctl start keepalived

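Bash treats paths of the form /dev/[tcp|udp]/host/port specially in redirections: reading from or writing to such a path makes bash try to connect to the given port on host, and if the host and port are reachable a socket connection is established. The /dev/[tcp|udp] directory does not actually exist in the filesystem; the path is interpreted by bash itself.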
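The port probe used in the check script can be isolated into a small reusable function. This is a minimal sketch assuming bash and the coreutils timeout command are available; `port_open` is a hypothetical name and is not part of the original script.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) if a TCP connection to
# host:port can be opened within 2 seconds, fail otherwise.
port_open() {
  local host="$1" port="$2"
  # The redirection asks bash to open a TCP socket on file descriptor 3.
  # It runs in a child bash, so the socket is closed when that shell exits.
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Example: probe the local ProxySQL data port.
if port_open 127.0.0.1 6033; then
  echo "data port 6033 is reachable"
else
  echo "data port 6033 is NOT reachable"
fi
```

The function only proves that something accepts TCP connections on the port. It does not verify that ProxySQL can actually serve queries.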

Step10 Configure keepalived on 100.22

#docker exec -it proxysql-22 /bin/bash

[root@proxysql-22 /]# yum install -y iproute openssh-clients keepalived

[root@proxysql-22 /]# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL_22

# vrrp_strict   # keep this commented out, otherwise the VIP cannot be pinged

}

vrrp_script chk_proxysql

{ # must be on a line of its own

script "/home/check_proxysql.sh"

interval 1

}

vrrp_instance proxysql {

state BACKUP

interface eth0

virtual_router_id 55

priority 90

advert_int 1

nopreempt

authentication {

auth_type PASS

auth_pass XXXX

}

track_script {

chk_proxysql

}

virtual_ipaddress {

192.168.100.30/24

}

}

The content of /home/check_proxysql.sh is as follows:

#!/bin/sh

vip='192.168.100.30'

ip a | grep $vip

if [ $? -ne 0 ];then

exit 0

fi

peer_ip='192.168.100.21'

peer_port=22

proxysql='proxysql-22'

log=/home/keepalived.log

alias date='date +"%y-%m-%d_%H:%M:%S"'

#echo "`date` enter script." >> $log

#check if this keepalived is MASTER

#echo "`date` after check keepalived master script." >> $log

#check if data port(6033) is alive

data_port_stats=$(timeout 2 bash -c 'cat < /dev/null > /dev/tcp/0.0.0.0/6033' &> /dev/null;echo $?)

if [ $data_port_stats -eq 0 ];then

exit 0

else

#check if the other keepalived is running

peer_keepalived=$(ssh -p$peer_port $peer_ip 'systemctl is-active keepalived.service')

if [ "$peer_keepalived" != "active" ];then

echo "`date` data port:6033 of $proxysql is not available, but the BACKUP keepalived is not running, so can't do the failover" >> $log

else

echo "`date` data port:6033 of $proxysql is not available, now SHUTDOWN keepalived." >> $log

systemctl stop keepalived.service

fi

fi

[root@proxysql-22 /]# chmod +x /home/check_proxysql.sh

[root@proxysql-22 /]# systemctl enable keepalived

[root@proxysql-22 /]# systemctl start keepalived

Configure port forwarding on the Docker host

Step11 Configure port forwarding on the Docker host

#docker network ls | grep mysqlnet

6a9cfeb216f9 mysqlnet bridge local

#brctl show (lists the bridge devices)

bridge name bridge id STP enabled interfaces

br-6a9cfeb216f9 8000.024233a036ff no veth78b39b9

veth942efcb

vethe5fb8b6

vethe86e1c7

vethffd913e

br0 8000.08606e577285 no enp3s0

docker0 8000.0242115d2745 no

#ifconfig br-6a9cfeb216f9 (the output shows that its subnet is 192.168.100.0/24)

#iptables -t nat -A DOCKER ! -i br-6a9cfeb216f9 -p tcp -m tcp --dport 10088 -j DNAT --to-destination 192.168.100.30:6033

#iptables -t nat -nL | grep 6033

Finally, test by connecting a MySQL client to port 10088 on the Docker host's IP; the username and password are both proxysql.
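The bridge name and the DNAT rule above can also be assembled from the network ID rather than copied by hand. This is a sketch only: both function names are hypothetical, and it assumes Docker's default naming of user-defined bridges ("br-" plus the first 12 characters of the network ID), which does not hold if a custom bridge name was set on the network.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; the names are illustrative only.

# Derive the bridge interface name from a (possibly full-length) network ID.
bridge_for_net_id() {
  printf 'br-%s\n' "${1:0:12}"
}

# Print (not run) the DNAT rule that forwards a host port to the VIP.
#   $1 = bridge interface, $2 = host port, $3 = destination ip:port
dnat_rule() {
  local bridge="$1" host_port="$2" dest="$3"
  echo "iptables -t nat -A DOCKER ! -i ${bridge} -p tcp -m tcp --dport ${host_port} -j DNAT --to-destination ${dest}"
}

# Example with the network ID shown in this article:
dnat_rule "$(bridge_for_net_id 6a9cfeb216f9)" 10088 192.168.100.30:6033
```

On a live host the ID could be taken from `docker network inspect -f '{{.Id}}' mysqlnet`, and the printed rule piped to `sh` after review.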

Reference:

https://blog.csdn.net/yingziisme/article/details/83044146