Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/wm5920/article/details/81058465

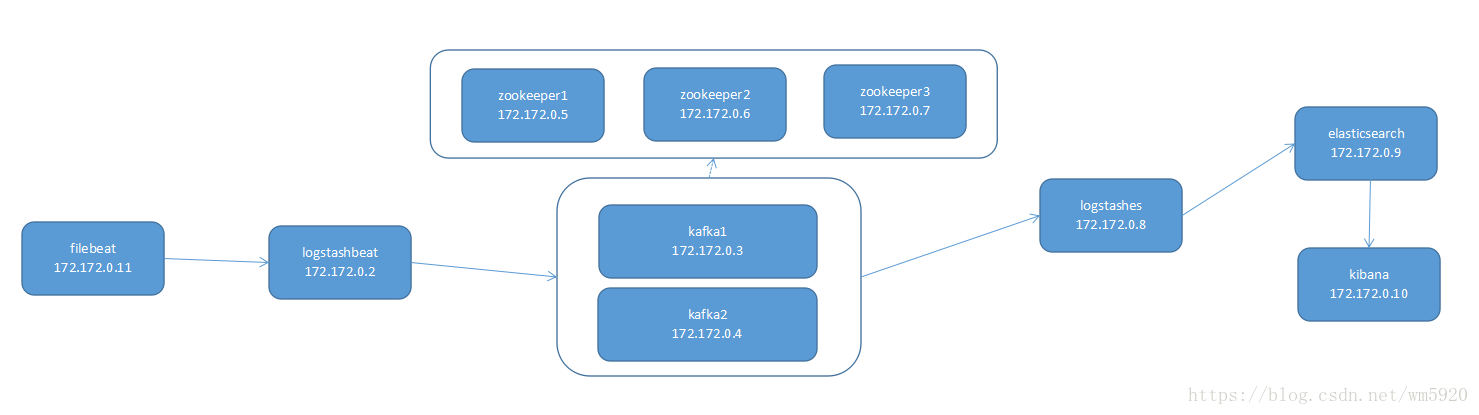

Main technologies

filebeat

logstash

zookeeper

kafka

elasticsearch

kibana

docker

centos7

Architecture diagram

Detailed setup

Server directory layout

/home/log-script/lib

Contents:

elasticsearch-6.3.0.tar.gz

filebeat-6.3.0-linux-x86_64.tar.gz

jdk-8u171-linux-x64.tar.gz

kafka_2.11-1.1.0.tgz

kibana-6.3.0-linux-x86_64.tar.gz

lc-centos7-ssh.tar

logstash-6.3.0.tar.gz

zookeeper-3.4.12.tar.gz

Extract the JDK into the same directory (every container mounts it as /home/lib):

tar -zxvf jdk-8u171-linux-x64.tar.gz -C /home/log-script/lib

Create the Docker network

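]# docker network create --subnet=172.172.0.0/24 elknet

This step is what makes fixed container IPs possible. `--ip` only works on a user-defined network with its own subnet. Without it, Docker may assign a different address every time the containers start.

Tips:

docker network rm elknet (deletes the network)

docker network ls (lists the existing networks)

For other servers to reach the containers on 192.168.62.133, add a route on each of those servers:

route add -net 172.172.0.0 netmask 255.255.255.0 gw 192.168.62.133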
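`docker network create` fails if `elknet` already exists, so re-running the setup stops at this step. Below is a minimal sketch that creates the network only when it is missing. It assumes bash and the network name and subnet used above. The function name `ensure_elknet` is hypothetical.

```shell
# Hypothetical helper: create the user-defined bridge network only if it is
# not there yet, so the setup can be re-run safely.
ensure_elknet() {
  local name="${1:-elknet}" subnet="${2:-172.172.0.0/24}"
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    # A configured subnet is what allows --ip to pin container addresses
    docker network create --subnet="$subnet" "$name"
  fi
}
```

Usage: `ensure_elknet` uses the defaults above. `ensure_elknet othernet 10.10.0.0/24` passes a different name and subnet.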

Set up and start Elasticsearch

]# docker run --name=elasticsearch --net elknet --ip 172.172.0.9 --privileged=true \
-e TZ=Asia/Shanghai -v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash
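Every container in this guide is started with the same `docker run` options. Only the container name and the fixed IP change. Below is a sketch of a hypothetical wrapper, `run_node`, for that pattern. It assumes the `lc-centos7-ssh` image is already available locally and the `elknet` network exists.

```shell
# Hypothetical wrapper for the docker run pattern used for every node:
# same image, network, timezone and shared /home/lib mount; only the
# container name and fixed IP differ.
run_node() {
  local name="$1" ip="$2"
  docker run --name="$name" --net elknet --ip "$ip" --privileged=true \
    -e TZ=Asia/Shanghai -v /home/log-script/lib/:/home/lib \
    -itd lc-centos7-ssh /bin/bash
}
```

Usage: `run_node elasticsearch 172.172.0.9`, `run_node kibana 172.172.0.10`, and so on for each container below.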

]# docker exec -it elasticsearch bin/bash

/]# tar -zxvf /home/lib/elasticsearch-6.3.0.tar.gz -C /home

/]# mkdir /home/es_data

/]# mkdir /home/es_logs

/]# vi /home/elasticsearch-6.3.0/config/elasticsearch.yml

Add and save:

node.name: es

path.data: /home/es_data

path.logs: /home/es_logs

network.host: 172.172.0.9

http.port: 9200
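Elasticsearch cannot parse an entry written as `key:value` without a space after the colon (see the note that follows). Below is a sketch of a hypothetical check that lists such lines before the node is started. It assumes POSIX `grep -E`. Commented lines starting with `#` are ignored.

```shell
# Hypothetical check: print "key:value" lines (no space after the colon),
# which Elasticsearch rejects with a START_OBJECT/VALUE_STRING parse error.
check_yml_spacing() {
  local file="$1"
  if grep -nE '^[[:space:]]*[A-Za-z0-9_.-]+:[^[:space:]]' "$file"; then
    echo "fix the lines above: add a space after the colon" >&2
    return 1
  fi
  echo "ok: $file"
}
```

Usage: `check_yml_spacing /home/elasticsearch-6.3.0/config/elasticsearch.yml`.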

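(Check the format of every entry in the YAML file: `name: value`, with a space after the colon. Otherwise Elasticsearch fails with the parse error `expecting token of type [START_OBJECT] but found [VALUE_STRING]`.)

Next, raise the virtual memory map count and the file-handle limits. Otherwise startup fails with `max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]`.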

/]# vi /etc/security/limits.conf

Add and save:

* soft nofile 65536

* hard nofile 131072

* soft nproc 4096

* hard nproc 4096

/]# vi /etc/security/limits.d/90-nproc.conf

Add and save:

* soft nproc 4096

/]# vi /etc/sysctl.conf

Add and save:

vm.max_map_count=655360

/]# sysctl -p

Next: Elasticsearch refuses to run as root, so a dedicated user is needed.
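Before creating the user, it is worth confirming that `sysctl -p` took effect. `vm.max_map_count` is a kernel-wide setting, not a per-container one, so the value written from this privileged container also applies to the host. Below is a sketch of a hypothetical check against the 262144 minimum from the error message above. The function name is illustrative.

```shell
# Hypothetical check: Elasticsearch's bootstrap check requires
# vm.max_map_count >= 262144. With no argument, read the live kernel value.
check_max_map_count() {
  local min=262144 current
  current="${1:-$(cat /proc/sys/vm/max_map_count)}"
  if [ "$current" -ge "$min" ]; then
    echo "ok: vm.max_map_count=$current"
  else
    echo "too low: vm.max_map_count=$current (need >= $min)" >&2
    return 1
  fi
}
```

Usage: run `check_max_map_count` with no argument inside the container or on the host.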

/]# yum install -y which

/]# adduser es

/]# passwd es

/]# chown -R es /home/es_*

/]# vi /home/startes.sh

Add and save:

#!/bin/bash

export JAVA_HOME=/home/lib/jdk1.8.0_171/

export PATH=$JAVA_HOME/bin:$PATH

/home/elasticsearch-6.3.0/bin/elasticsearch -d

/]# chmod 777 /home/startes.sh

Exit the container and start Elasticsearch as the es user:

]# docker exec -i -u es elasticsearch /home/startes.sh

Open the following address in a browser. A JSON page with the node information means Elasticsearch is up:

http://172.172.0.9:9200/
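`elasticsearch -d` returns immediately while the node is still starting, so the page may not answer for a few seconds. Below is a sketch of a hypothetical polling helper. It assumes `curl` is installed wherever it runs. The attempt count and delay defaults are arbitrary.

```shell
# Hypothetical helper: poll a URL until curl gets a non-error HTTP status,
# or give up. Args: URL, attempts (default 30), seconds between tries (default 2).
wait_for_http() {
  local url="$1" tries="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$tries" ]; do
    if curl -fsS -o /dev/null "$url" 2>/dev/null; then
      echo "up: $url"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "no response from $url after $tries attempts" >&2
  return 1
}
```

Usage: `wait_for_http http://172.172.0.9:9200/ && curl -s 'http://172.172.0.9:9200/_cluster/health?pretty'`. The same helper works for Kibana on port 5601.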

Start Kibana

]# docker run --name=kibana --net elknet --ip 172.172.0.10 --privileged=true -e TZ=Asia/Shanghai \
-v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it kibana bin/bash

/]# tar -zxvf /home/lib/kibana-6.3.0-linux-x86_64.tar.gz -C /home

/]# vi /home/kibana-6.3.0-linux-x86_64/config/kibana.yml

Add and save:

server.port: 5601

server.host: "172.172.0.10"

elasticsearch.url: "http://172.172.0.9:9200"

/]# nohup /home/kibana-6.3.0-linux-x86_64/bin/kibana > /dev/null &

Open in a browser:

http://172.172.0.10:5601/

Start ZooKeeper

Configure zookeeper1

]# docker run --name=zookeeper1 --net elknet --ip 172.172.0.5 --privileged=true \
-e TZ=Asia/Shanghai -v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it zookeeper1 bin/bash

/]# tar -zxvf /home/lib/zookeeper-3.4.12.tar.gz -C /home

/]# cp /home/zookeeper-3.4.12/conf/zoo_sample.cfg /home/zookeeper-3.4.12/conf/zoo.cfg

/]# mkdir /home/zoodata

/]# vi /home/zookeeper-3.4.12/conf/zoo.cfg

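Add and save. Note: the sample config already contains a default dataDir line. Change that line rather than adding a second one: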

dataDir=/home/zoodata

server.1=172.172.0.5:12888:13888

server.2=172.172.0.6:12888:13888

server.3=172.172.0.7:12888:13888

/]# echo 1 >/home/zoodata/myid

/]# vi /home/zookeeper-3.4.12/bin/zkServer.sh

Add and save:

export JAVA_HOME=/home/lib/jdk1.8.0_171/

export PATH=$JAVA_HOME/bin:$PATH
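The three ZooKeeper nodes use an identical zoo.cfg. Only the number in myid differs. Below is a sketch of a hypothetical helper that writes both files for node N. It assumes it runs inside the matching container, that `zoo_sample.cfg` is at the default path, and the 172.172.0.5 to 172.172.0.7 layout used here.

```shell
# Hypothetical helper: build zoo.cfg from the sample and write myid for node $1.
# Args: node id (1-3), ZooKeeper home (optional), data dir (optional).
write_zk_node() {
  local id="$1" zk="${2:-/home/zookeeper-3.4.12}" data="${3:-/home/zoodata}" n
  mkdir -p "$data"
  # Point the sample's existing dataDir line at our directory
  sed "s|^dataDir=.*|dataDir=$data|" "$zk/conf/zoo_sample.cfg" >"$zk/conf/zoo.cfg"
  for n in 1 2 3; do
    echo "server.$n=172.172.0.$((n + 4)):12888:13888" >>"$zk/conf/zoo.cfg"
  done
  # myid must match this node's server.N entry
  echo "$id" >"$data/myid"
}
```

Usage: run `write_zk_node 1`, `write_zk_node 2` and `write_zk_node 3` in zookeeper1, zookeeper2 and zookeeper3 respectively. The JAVA_HOME lines in zkServer.sh still need to be added by hand.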

Press Ctrl+D to exit the container.

Configure zookeeper2

]# docker run --name=zookeeper2 --net elknet --ip 172.172.0.6 --privileged=true \
-e TZ=Asia/Shanghai -v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it zookeeper2 bin/bash

/]# tar -zxvf /home/lib/zookeeper-3.4.12.tar.gz -C /home

/]# cp /home/zookeeper-3.4.12/conf/zoo_sample.cfg /home/zookeeper-3.4.12/conf/zoo.cfg

/]# mkdir /home/zoodata

/]# vi /home/zookeeper-3.4.12/conf/zoo.cfg

Add and save:

dataDir=/home/zoodata

server.1=172.172.0.5:12888:13888

server.2=172.172.0.6:12888:13888

server.3=172.172.0.7:12888:13888

/]# echo 2 >/home/zoodata/myid

/]# vi /home/zookeeper-3.4.12/bin/zkServer.sh

Add and save:

export JAVA_HOME=/home/lib/jdk1.8.0_171/

export PATH=$JAVA_HOME/bin:$PATH

Press Ctrl+D to exit the container.

Configure zookeeper3

]# docker run --name=zookeeper3 --net elknet --ip 172.172.0.7 --privileged=true \
-e TZ=Asia/Shanghai -v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it zookeeper3 bin/bash

/]# tar -zxvf /home/lib/zookeeper-3.4.12.tar.gz -C /home

/]# cp /home/zookeeper-3.4.12/conf/zoo_sample.cfg /home/zookeeper-3.4.12/conf/zoo.cfg

/]# mkdir /home/zoodata

/]# vi /home/zookeeper-3.4.12/conf/zoo.cfg

Add and save:

dataDir=/home/zoodata

server.1=172.172.0.5:12888:13888

server.2=172.172.0.6:12888:13888

server.3=172.172.0.7:12888:13888

/]# echo 3 >/home/zoodata/myid

/]# vi /home/zookeeper-3.4.12/bin/zkServer.sh

Add and save:

export JAVA_HOME=/home/lib/jdk1.8.0_171/

export PATH=$JAVA_HOME/bin:$PATH

Press Ctrl+D to exit the container, then start all three nodes and check the status:

]# docker exec -i zookeeper1 /home/zookeeper-3.4.12/bin/zkServer.sh start

]# docker exec -i zookeeper2 /home/zookeeper-3.4.12/bin/zkServer.sh start

]# docker exec -i zookeeper3 /home/zookeeper-3.4.12/bin/zkServer.sh start
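Once all three nodes are started, `zkServer.sh status` should report `Mode: leader` on one node and `Mode: follower` on the other two. Below is a sketch of a hypothetical helper that checks every node through `docker exec` instead of just one. It assumes the container names and paths used above.

```shell
# Hypothetical helper: print each node's mode and succeed only if exactly
# one node reports "Mode: leader" (the others should be followers).
zk_check_modes() {
  local n mode leaders=0
  for n in 1 2 3; do
    mode=$(docker exec -i "zookeeper$n" /home/zookeeper-3.4.12/bin/zkServer.sh status 2>&1 |
      awk -F': ' '/^Mode/ {print $2}')
    echo "zookeeper$n: ${mode:-unknown}"
    [ "$mode" = leader ] && leaders=$((leaders + 1))
  done
  [ "$leaders" -eq 1 ]
}
```

Usage: `zk_check_modes && echo "ensemble OK"`.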

]# docker exec -i zookeeper2 /home/zookeeper-3.4.12/bin/zkServer.sh status

Start Kafka

Configure kafka1

]# docker run --name=kafka1 --net elknet --ip 172.172.0.3 --privileged=true -e TZ=Asia/Shanghai \
-v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it kafka1 bin/bash

/]# tar -zxvf /home/lib/kafka_2.11-1.1.0.tgz -C /home

/]# vi /home/kafka_2.11-1.1.0/config/server.properties

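Edit and save. `port` and `host.name` are new entries. `broker.id` and `zookeeper.connect` already exist and only need changing: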

broker.id=1

port = 9092

host.name = 172.172.0.3

zookeeper.connect=172.172.0.5:2181,172.172.0.6:2181,172.172.0.7:2181
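The two brokers differ only in `broker.id` and address, so the edit can be scripted. Below is a sketch of a hypothetical helper that applies GNU `sed -i` to the stock server.properties and writes the same keys as above. In Kafka 1.1, `port` and `host.name` are deprecated in favour of `listeners` (for example `listeners=PLAINTEXT://172.172.0.3:9092`). The sketch keeps the guide's keys.

```shell
# Hypothetical helper: set broker-specific values in server.properties.
# Args: broker id, broker IP, path to server.properties (optional).
set_kafka_props() {
  local id="$1" ip="$2"
  local props="${3:-/home/kafka_2.11-1.1.0/config/server.properties}"
  local zk="172.172.0.5:2181,172.172.0.6:2181,172.172.0.7:2181"
  # Rewrite the two keys that already exist in the stock file
  sed -i -e "s/^broker\.id=.*/broker.id=$id/" \
         -e "s/^zookeeper\.connect=.*/zookeeper.connect=$zk/" "$props"
  # Append the two new keys (deprecated in 1.1, kept to match the guide)
  printf 'port=9092\nhost.name=%s\n' "$ip" >>"$props"
}
```

Usage: `set_kafka_props 1 172.172.0.3` in kafka1 and `set_kafka_props 2 172.172.0.4` in kafka2. Run it only once per file, because the two new keys are appended.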

/]# vi /home/kafka_2.11-1.1.0/bin/kafka-server-start.sh

Add and save:

export JAVA_HOME=/home/lib/jdk1.8.0_171/

export PATH=$JAVA_HOME/bin:$PATH

Exit the container and start kafka1:

]# docker exec -i kafka1 /home/kafka_2.11-1.1.0/bin/kafka-server-start.sh -daemon \
/home/kafka_2.11-1.1.0/config/server.properties

Configure kafka2

]# docker run --name=kafka2 --net elknet --ip 172.172.0.4 --privileged=true -e TZ=Asia/Shanghai \
-v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it kafka2 bin/bash

/]# tar -zxvf /home/lib/kafka_2.11-1.1.0.tgz -C /home

/]# vi /home/kafka_2.11-1.1.0/config/server.properties

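Edit and save. `port` and `host.name` are new entries. `broker.id` and `zookeeper.connect` already exist and only need changing: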

broker.id=2

port = 9092

host.name = 172.172.0.4

zookeeper.connect=172.172.0.5:2181,172.172.0.6:2181,172.172.0.7:2181

/]# vi /home/kafka_2.11-1.1.0/bin/kafka-server-start.sh

Add and save:

export JAVA_HOME=/home/lib/jdk1.8.0_171/

export PATH=$JAVA_HOME/bin:$PATH

Exit the container and start kafka2:

]# docker exec -i kafka2 /home/kafka_2.11-1.1.0/bin/kafka-server-start.sh -daemon \
/home/kafka_2.11-1.1.0/config/server.properties

Test: type asd in the producer. If the consumer prints asd, the cluster works.

Create a topic on kafka2:

]# docker exec -it kafka2 bin/bash

/]# export JAVA_HOME=/home/lib/jdk1.8.0_171/;export PATH=$JAVA_HOME/bin:$PATH

/]# /home/kafka_2.11-1.1.0/bin/kafka-topics.sh --create --zookeeper 172.172.0.5:2181 \
--replication-factor 1 --partitions 2 --topic ecplogs
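Besides the interactive producer/consumer test that follows, the same round trip can run without typing anything. Below is a sketch of a hypothetical smoke test that pipes one unique message into the console producer on kafka2 and reads it back on kafka1. The reader uses the console consumer's `--bootstrap-server` and `--timeout-ms` options, both available in Kafka 1.1. As in the manual steps, JAVA_HOME is exported inside each `docker exec`.

```shell
# Hypothetical smoke test: send one unique message through the cluster
# (produce via kafka2, consume via kafka1) and check that it comes back.
kafka_roundtrip() {
  local topic="${1:-ecplogs}" msg="smoke-$$-$(date +%s)"
  local bin=/home/kafka_2.11-1.1.0/bin
  local setup='export JAVA_HOME=/home/lib/jdk1.8.0_171/; export PATH=$JAVA_HOME/bin:$PATH'
  # The console producer sends each stdin line and exits at EOF
  echo "$msg" | docker exec -i kafka2 bash -c \
    "$setup; $bin/kafka-console-producer.sh --broker-list 172.172.0.3:9092 --topic $topic"
  # Read from the beginning and stop after 10 s without new messages
  docker exec -i kafka1 bash -c \
    "$setup; $bin/kafka-console-consumer.sh --bootstrap-server 172.172.0.3:9092 --topic $topic --from-beginning --timeout-ms 10000" \
    2>/dev/null | grep -qx "$msg"
}
```

Usage: `kafka_roundtrip && echo "kafka OK"`.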

Produce on kafka2:

/]# /home/kafka_2.11-1.1.0/bin/kafka-console-producer.sh --broker-list 172.172.0.3:9092 --topic ecplogs

>asd

Consume on kafka1:

]# docker exec -it kafka1 bin/bash

/]# export JAVA_HOME=/home/lib/jdk1.8.0_171/;export PATH=$JAVA_HOME/bin:$PATH

/]# /home/kafka_2.11-1.1.0/bin/kafka-console-consumer.sh --zookeeper 172.172.0.5:2181 \
--topic ecplogs --from-beginning

asd

Start logstashes (the Logstash instance that reads from Kafka and writes to Elasticsearch)

]# docker run --name=logstashes --net elknet --ip 172.172.0.8 --privileged=true \
-e TZ=Asia/Shanghai -v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it logstashes bin/bash

/]# tar -zxvf /home/lib/logstash-6.3.0.tar.gz -C /home

/]# vi /home/logstash-6.3.0/config/logstash_to_es.conf

input {
  kafka {
    bootstrap_servers => "172.172.0.3:9092,172.172.0.4:9092"
    topics => ["ecplogs"]
  }
}
output {
  elasticsearch {
    hosts => ["172.172.0.9:9200"]
    index => "ecp-log-%{+YYYY.MM.dd}"
  }
}
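Logstash can validate a pipeline file without running it, using `--config.test_and_exit`. Below is a sketch of a hypothetical wrapper that runs this check inside a container. It assumes the paths above. It is meant to be run before the start script, since a second Logstash process using the same data directory may refuse to start.

```shell
# Hypothetical wrapper: syntax-check a Logstash pipeline inside a container.
# --config.test_and_exit parses the config, reports the result and exits.
logstash_check_conf() {
  local container="$1" conf="$2"
  # Single quotes keep $JAVA_HOME literal so it expands inside the container
  local setup='export JAVA_HOME=/home/lib/jdk1.8.0_171/; export PATH=$JAVA_HOME/bin:$PATH'
  docker exec -i "$container" bash -c \
    "$setup; /home/logstash-6.3.0/bin/logstash --config.test_and_exit -f $conf"
}
```

Usage: `logstash_check_conf logstashes /home/logstash-6.3.0/config/logstash_to_es.conf`. The same call works for logstashbeat with beat_to_logstash.conf.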

/]# vi /home/startlogstash.sh

#!/bin/bash

export JAVA_HOME=/home/lib/jdk1.8.0_171/;export PATH=$JAVA_HOME/bin:$PATH

nohup /home/logstash-6.3.0/bin/logstash -f /home/logstash-6.3.0/config/logstash_to_es.conf >/dev/null &

/]# chmod 777 /home/startlogstash.sh

Exit the container and start it:

]# docker exec -i logstashes /home/startlogstash.sh

Start logstashbeat (the Logstash instance that receives events from Filebeat and writes them to Kafka)

]# docker run --name=logstashbeat --net elknet --ip 172.172.0.2 --privileged=true \
-e TZ=Asia/Shanghai -v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it logstashbeat bin/bash

/]# tar -zxvf /home/lib/logstash-6.3.0.tar.gz -C /home

/]# vi /home/logstash-6.3.0/config/beat_to_logstash.conf

input {
  beats {
    port => 5044
  }
}
output {
  kafka {
    bootstrap_servers => "172.172.0.3:9092,172.172.0.4:9092"
    topic_id => "ecplogs"
  }
}

/]# vi /home/startlogstash.sh

#!/bin/bash

export JAVA_HOME=/home/lib/jdk1.8.0_171/

export PATH=$JAVA_HOME/bin:$PATH

nohup /home/logstash-6.3.0/bin/logstash -f /home/logstash-6.3.0/config/beat_to_logstash.conf >/dev/null &

/]# chmod 777 /home/startlogstash.sh

Exit the container and start it:

]# docker exec -i logstashbeat /home/startlogstash.sh

Start Filebeat

]# docker run --name=filebeat --net elknet --ip 172.172.0.11 --privileged=true \
-e TZ=Asia/Shanghai -v /home/log-script/lib/:/home/lib -itd lc-centos7-ssh bin/bash

]# docker exec -it filebeat bin/bash

/]# tar -zxvf /home/lib/filebeat-6.3.0-linux-x86_64.tar.gz -C /home

Edit the configuration file:

/]# vi /home/filebeat-6.3.0-linux-x86_64/filebeat.yml

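Change the filebeat.inputs section.

Comment out the Elasticsearch output section (Filebeat allows only one enabled output).

Change (uncomment) the output.logstash section: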

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /home/log/*.log
output.logstash:
  hosts: ["172.172.0.2:5044"]
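Before starting Filebeat, two things are worth checking: that filebeat.yml parses, and that the Beats input on logstashbeat is reachable. Below is a sketch of a hypothetical pre-check. It uses Filebeat's `test config` subcommand and bash's `/dev/tcp` redirection, and assumes `timeout` (coreutils) and bash exist in the filebeat container.

```shell
# Hypothetical pre-check: validate filebeat.yml, then confirm that the
# Beats input on logstashbeat (172.172.0.2:5044) accepts TCP connections.
filebeat_precheck() {
  local fb=/home/filebeat-6.3.0-linux-x86_64
  # Parse the config without starting any harvesters
  docker exec -i filebeat "$fb/filebeat" test config -c "$fb/filebeat.yml" || return 1
  # bash opens /dev/tcp/HOST/PORT as a TCP connection; fail after 3 seconds
  docker exec -i filebeat timeout 3 bash -c '</dev/tcp/172.172.0.2/5044' ||
    { echo "cannot reach 172.172.0.2:5044" >&2; return 1; }
}
```

Usage: `filebeat_precheck && echo "ready to start filebeat"`.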

Start it:

/]# mkdir /home/log

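/]# nohup /home/filebeat-6.3.0-linux-x86_64/filebeat -c \
/home/filebeat-6.3.0-linux-x86_64/filebeat.yml >/dev/null 2>&1 &

(Filebeat's own console output is discarded here rather than written to a file under /home/log, which Filebeat itself harvests.)

Test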

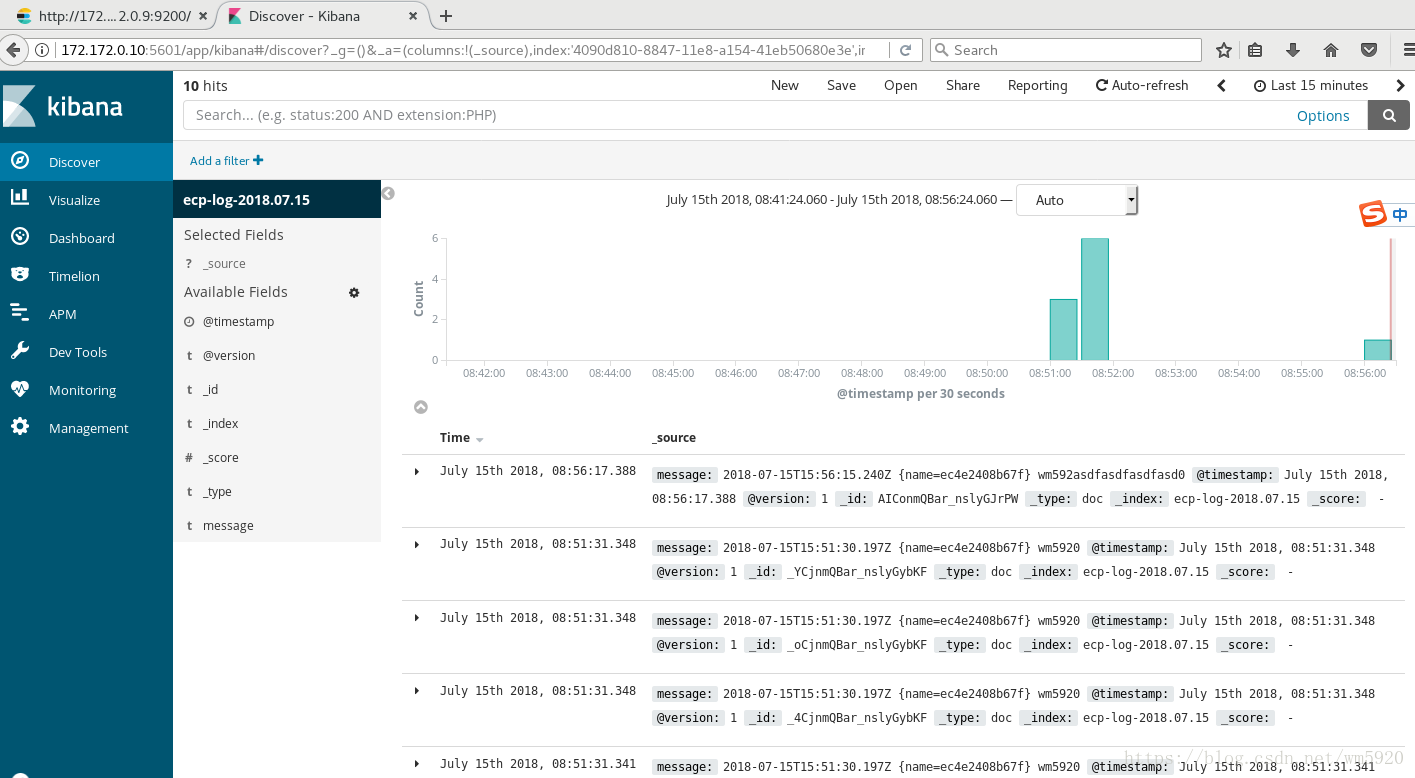

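/]# echo 'wm5920'>>/home/log/beat.log

In Kibana, create an index pattern such as ecp-log-*, which matches the index name set in logstash_to_es.conf. The entry can then be viewed in Discover.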
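Besides Kibana, the test entry can be looked up directly in Elasticsearch. Below is a sketch of a hypothetical check that uses the URI search API against the daily `ecp-log-*` indices. It assumes `curl` runs on a host that can reach 172.172.0.9 and that the search word needs no URL encoding. It relies on Elasticsearch 6.x returning `hits.total` as a plain number.

```shell
# Hypothetical check: does any ecp-log-* document contain the given word?
# size=0 returns only the hit count; succeed if the count is non-zero.
es_has_log() {
  local word="$1" es="${2:-http://172.172.0.9:9200}"
  curl -s "$es/ecp-log-*/_search?q=$word&size=0" |
    grep -q '"hits":{"total":[1-9]'
}
```

Usage: `es_has_log wm5920 && echo "log line reached Elasticsearch"`.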