Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/qq_41185868/article/details/84559637

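TF NN: Using a neural network (NN) in TensorFlow to solve a regression problem that predicts one dependent variable from three independent variables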

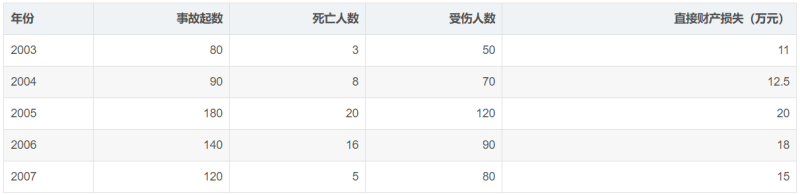
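The model named in the title is a small feed-forward network. Here is a minimal pure-Python sketch of its forward pass. It assumes the sizes used in the implementation code (3 inputs, 20 ReLU hidden units, 1 linear output). The random initialization and the names `init_params`, `forward` and `count_params` are illustrative only. They are neither TensorFlow's defaults nor its API.

```python
import random

N_INPUTS = 3   # three independent variables
N_HIDDEN = 20  # hidden width used in the implementation code
N_OUTPUTS = 1  # one dependent variable


def relu(v):
    """Rectified linear unit: max(v, 0)."""
    return v if v > 0.0 else 0.0


def init_params(seed=0):
    """Hypothetical small uniform initialization (not TensorFlow's default)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(N_HIDDEN)] for _ in range(N_INPUTS)]
    b1 = [0.0] * N_HIDDEN
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(N_HIDDEN)]
    b2 = 0.0
    return w1, b1, w2, b2


def forward(x, w1, b1, w2, b2):
    """Prediction for one sample x = [x0, x1, x2]: ReLU hidden layer, then a linear output."""
    hidden = [relu(sum(x[i] * w1[i][j] for i in range(N_INPUTS)) + b1[j])
              for j in range(N_HIDDEN)]
    return sum(h * w for h, w in zip(hidden, w2)) + b2


def count_params():
    """Weights plus biases of both layers."""
    return N_INPUTS * N_HIDDEN + N_HIDDEN + N_HIDDEN * N_OUTPUTS + N_OUTPUTS


w1, b1, w2, b2 = init_params()
print(count_params())  # 101
print(forward([120, 5, 85], w1, b1, w2, b2))
```

The network has 3x20 + 20 + 20 + 1 = 101 trainable parameters but only four training rows, so it is heavily over-parameterized. Also, if every hidden unit outputs zero, `forward` returns only the output bias `b2`, whatever the input.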

Experimental data

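Note: the data from the first four years are used to fit the regression model, and the model is then used to predict the fifth year.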
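The three inputs are on very different scales. In the implementation code the first column runs from 80 to 180 and the second from 3 to 20. A common preprocessing step is to standardize each column using statistics from the four training years only, then apply the same transform to the fifth-year input. The original code does not do this, so treat the step as an added assumption. The sketch below uses the values hard-coded in the implementation code. The helper names `fit_standardizer` and `transform` are hypothetical.

```python
import statistics

# Four training years (three independent variables each), copied from the implementation code
x_train = [[80, 3, 50], [90, 8, 70], [180, 20, 120], [140, 16, 90]]
x_query = [120, 5, 85]  # fifth year to be predicted


def fit_standardizer(rows):
    """Per-column mean and population standard deviation, from training rows only."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) for c in cols]
    return means, stds


def transform(row, means, stds):
    """Z-score one row with previously fitted statistics."""
    return [(v - m) / s for v, m, s in zip(row, means, stds)]


means, stds = fit_standardizer(x_train)
x_train_std = [transform(r, means, stds) for r in x_train]
x_query_std = transform(x_query, means, stds)  # same transform, not refitted
print(means)  # [122.5, 11.75, 82.5]
```

The query row is deliberately transformed with the training means and standard deviations. Refitting on the fifth year would leak information and put the query on a different scale.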

Output

```
loss is: 913.6623
loss is: 781206160000.0
loss is: 9006693000.0
loss is: 103840136.0
loss is: 1197209.2
loss is: 13816.644
loss is: 173.0564
loss is: 15.756571
loss is: 13.9430275
loss is: 13.922119
loss is: 13.921878
loss is: 13.921875
loss is: 13.921875
loss is: 13.921875
loss is: 13.921875
input is:[120, 5, 85]
output is:15.375002
```

Implementation code

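```python
import numpy as np
import tensorflow as tf  # written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session)

# Training data: four years, three independent variables per year
x = [[80, 3, 50], [90, 8, 70], [180, 20, 120], [140, 16, 90]]
# Dependent variable, shaped [4, 1] to match the tf_y placeholder
y = [[11], [12.5], [20], [18]]
# y = [11,12.5,20,18]  # 1-D form; would not match the [None, 1] placeholder
# Fifth year to predict
x_pred = [[120, 5, 85]]

# Unused alternative: load a 17-feature dataset from CSV
# (the placeholder width 3 would then have to become 17)
# dataset = np.loadtxt("data/20181127test04.csv", delimiter=",")
# # split into input (X) and output (Y) variables
# x = dataset[0:7,0:17]
# print(x)
# y = dataset[0:7,17]
# print(y)
# x_pred = dataset[7,0:17]
# print(x_pred)

tf_x = tf.placeholder(tf.float32, [None, 3])  # input x
tf_y = tf.placeholder(tf.float32, [None, 1])  # input y
print(tf_x)  # prints the placeholder tensor, not data

# neural network layers
l1 = tf.layers.dense(tf_x, 20, tf.nn.relu)  # hidden layer: 20 ReLU units (3x20 weights)
output = tf.layers.dense(l1, 1)             # output layer: one linear unit
loss = tf.losses.mean_squared_error(tf_y, output)  # compute cost
# NOTE: the inputs are fed unscaled (values up to 180); with learning_rate=0.1
# the loss blows up in the first steps, as the output above shows
optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1)
train_op = optimizer.minimize(loss)

sess = tf.Session()  # control training and others
sess.run(tf.global_variables_initializer())  # initialize var in graph

for step in range(150):
    # train and net output
    _, l, pred = sess.run([train_op, loss, output], {tf_x: x, tf_y: y})
    if step % 10 == 0:
        print('loss is: ' + str(l))
        # print('prediction is:' + str(pred))

output_pred = sess.run(output, {tf_x: x_pred})
print('input is:' + str(x_pred[0][:]))
print('output is:' + str(output_pred[0][0]))
```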
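The last lines of the output can be checked by hand. The converged loss, 13.921875, is exactly the population variance of the four targets. The fifth-year prediction, 15.375002, matches their mean, 15.375, up to float32 rounding. A model that ignores its inputs and always predicts the mean would produce exactly this loss and this output. The snippet reproduces both numbers with Python's standard `statistics` module.

```python
import statistics

# Targets copied from the implementation code (one per training year)
y = [11, 12.5, 20, 18]

# Under mean squared error, the best constant prediction is the mean of y ...
mean_y = statistics.fmean(y)
# ... and the MSE of that constant prediction is the population variance of y
mse_constant = sum((v - mean_y) ** 2 for v in y) / len(y)

print(mean_y)                   # 15.375
print(mse_constant)             # 13.921875
print(statistics.pvariance(y))  # 13.921875
```

The trained network is therefore very likely acting as a constant predictor. One plausible explanation is that the blow-up in the first training steps pushed every ReLU hidden unit into its zero region, which leaves only the output bias to fit. This is an inference from the log, not something the original states. Scaling the inputs or lowering the learning rate are the remedies commonly tried first.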