1. Preparation:

- chromedriver. Download link (mirror in China): http://npm.taobao.org/mirrors/chromedriver/ ; over VPN:

- selenium

- BeautifulSoup4 (nicknamed "tasty soup")

pip3 install selenium

pip3 install BeautifulSoup4

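For installing chromedriver, please search online yourself. Let's get straight to the point.

Before takeoff, please make sure the preparation is complete...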

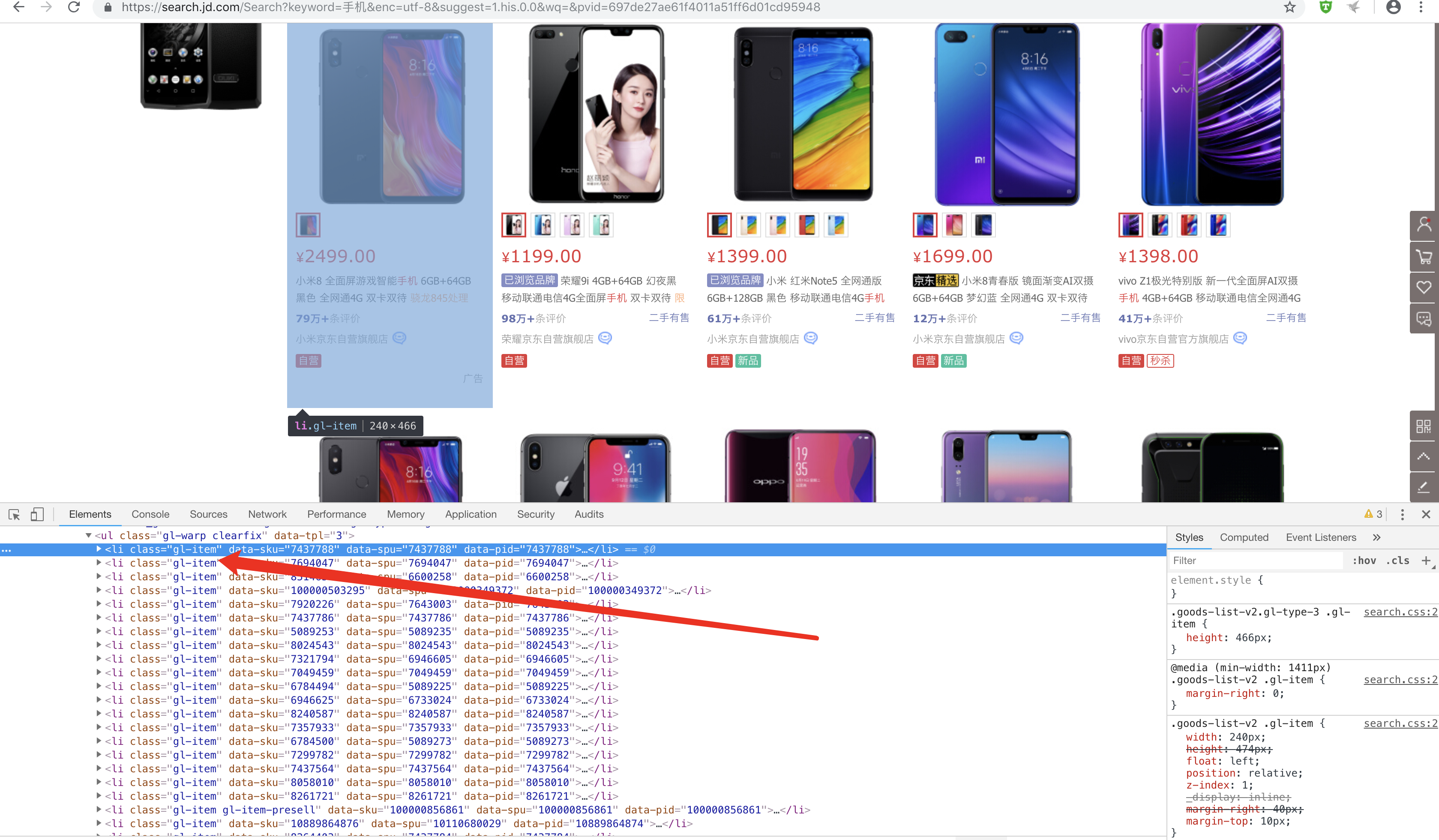

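2. Analyzing the page:

Target URL: https://www.jd.com/

Every item on the results page sits in an element with class="gl-item".

Requirements:

- Use selenium to drive the browser, locate the search input box, type "手机" (mobile phone) into it, and click the search button.

- Use selenium to read the total page count from the returned results page, and simulate manual paging.

- Use BeautifulSoup to parse the pages and extract the phone information.

Start the search from the home page: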

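    from selenium import webdriver
    from selenium.common.exceptions import TimeoutException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    # Shared browser and explicit wait used by the functions in this post
    # (the 10-second timeout is an assumed value)
    browser = webdriver.Chrome()
    wait = WebDriverWait(browser, 10)

    # Entry point: run the search from the home page
    def search():
        browser.get('https://www.jd.com/')
        try:
            # Find the search box and the search button, type the keyword, click
            search_box = wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, "#key")))
            submit = wait.until(EC.element_to_be_clickable((By.CSS_SELECTOR, "#search > div > div.form > button")))
            search_box.send_keys('手机')
            submit.click()
            # Read the total number of pages
            page = wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, '#J_bottomPage > span.p-skip > em:nth-child(1) > b')))
            return page.text
        # On timeout, call this function again and pass its result back
        except TimeoutException:
            return search()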

Once the search has finished, simulate paging:

    import sys
    import time

    # Paging
    def next_page(page_number):
        try:
            # Scroll to the bottom so that all products on the page load
            browser.execute_script("window.scrollTo(0, document.body.scrollHeight);")
            time.sleep(4)
            html = browser.page_source
            # At page 100 the "next page" button stops working, so end the program
            if page_number == 101:
                sys.exit()
            # Find the "next page" button and click it
            button = wait.until(EC.element_to_be_clickable((By.CSS_SELECTOR, '#J_bottomPage > span.p-num > a.pn-next > em')))
            button.click()
            # Wait for the last product item on the page (60 products per page)
            wait.until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, "#J_goodsList > ul > li:nth-child(60)")))
            # Confirm that the page actually turned
            wait.until(EC.text_to_be_present_in_element((By.CSS_SELECTOR, "#J_bottomPage > span.p-num > a.curr"), str(page_number)))
            return html
        except TimeoutException:
            return next_page(page_number)
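Retrying by calling the same function again never gives up if the page keeps timing out. As an alternative, here is a minimal sketch of a bounded retry loop. The helper name `retry` and its `attempts` and `delay` defaults are my own assumptions, not part of the original script, and `TimeoutError` stands in for selenium's `TimeoutException` so the sketch runs without a browser.

```python
import time


def retry(action, retry_on, attempts=3, delay=0.0):
    """Call action() until it succeeds or `attempts` tries are used up.

    retry_on is the exception type that triggers another try;
    attempts and delay are illustrative defaults.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return action()
        except retry_on as error:
            last_error = error
            time.sleep(delay)
    # Every try failed: surface the last error instead of looping forever.
    raise last_error


if __name__ == "__main__":
    calls = {"count": 0}

    def flaky():
        # Stand-in for a page action that times out twice, then succeeds.
        calls["count"] += 1
        if calls["count"] < 3:
            raise TimeoutError("simulated timeout")
        return "page html"

    print(retry(flaky, TimeoutError))
```

A page action that raises `TimeoutException` could be wrapped the same way, as `retry(action, TimeoutException)`.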

Parse the a tags on the page:

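    from bs4 import BeautifulSoup

    # Collect the a links on each result page
    def parse_html(html):
        """
        Parse a product list page and yield the detail-page links
        """
        soup = BeautifulSoup(html, 'html.parser')
        items = soup.select('.gl-item')
        for item in items:
            a = item.select('.p-name.p-name-type-2 a')
            if not a:
                # No title link inside this item
                continue
            link = str(a[0].attrs['href'])
            if 'https:' in link:
                # Links that already carry the scheme are skipped
                continue
            link = "https:" + link
            yield link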
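The links on the list page start with `//`, which is why "https:" gets glued on the front. The standard library can do the same thing with `urljoin`; a small sketch follows. The helper name `absolute_link` and the sample href are made up for illustration. Note that this only covers the prefixing step: a link that is already absolute comes back unchanged, so whether to skip such links is still a separate decision.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.jd.com/"  # the target site of this post


def absolute_link(href, base=BASE_URL):
    """Resolve href against base, so '//host/path' inherits the https scheme."""
    return urljoin(base, href)


if __name__ == "__main__":
    # Hypothetical href, used only to show the behaviour.
    print(absolute_link("//item.jd.com/123456.html"))
```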

Cut the product id out of the URL and fetch the price information:

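    import requests
    import ujson

    # Fetch the phone's price. The price comes from a separate endpoint:
    # https://p.3.cn/prices/mgets?skuIds=J_ + product_id
    def get_price(product_id):
        url = 'https://p.3.cn/prices/mgets?skuIds=J_' + product_id
        # `headers` is the request-header dict, assumed to be defined elsewhere in the script
        response = requests.get(url, headers=headers)
        result = ujson.loads(response.text)
        return result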
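The step that cuts the product id out of the link is not shown above, so here is a minimal sketch of it. It assumes the detail links look like `https://item.jd.com/<digits>.html`; the function name `extract_product_id` and the sample URL are mine, not from the original script.

```python
import re

# Assumed URL shape: https://item.jd.com/<digits>.html
PRODUCT_ID_PATTERN = re.compile(r"/(\d+)\.html")


def extract_product_id(link):
    """Return the numeric id from a detail-page link, or None if absent."""
    match = PRODUCT_ID_PATTERN.search(link)
    return match.group(1) if match else None


if __name__ == "__main__":
    # Hypothetical link, used only to show the behaviour.
    print(extract_product_id("https://item.jd.com/123456.html"))
```

The id comes back as a string, so it can be concatenated straight onto the price URL.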

Open the item's detail page:

    # Open the detail page
    def detail_page(link):
        """
        Open an item's detail page
        :param link: item link
        :return: html
        """
        try:
            # The page load sits inside the try so that a load timeout triggers the retry
            browser.get(link)
            browser.execute_script("window.scrollTo(0, document.body.scrollHeight);")
            time.sleep(3)
            html = browser.page_source
            return html
        except TimeoutException:
            return detail_page(link)

    # Extract the phone information from the detail page
    def get_detail(html, result):
        """
        Collect the data on the detail page
        :param html: detail page html
        :param result: price data returned by get_price
        :return: dict of specifications and prices
        """
        dic = {}
        soup = BeautifulSoup(html, 'html.parser')
        item_list = soup.find_all('div', class_='Ptable-item')
        for item in item_list:
            contents1 = item.find_all('dt')
            contents2 = item.find_all('dd')
            for i in range(len(contents1)):
                dic[contents1[i].string] = contents2[i].string

        dic['price_jd'] = result[0]['p']
        dic['price_mk'] = result[0]['m']
        print(dic)
        return dic
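Pairing dt and dd by index breaks if a row is missing its dd, and `result[0]` breaks if the price lookup returns nothing. A slightly more forgiving sketch of the same assembly step, on plain lists so it runs without a browser, might look like this. The function name `build_record` and the sample values are invented for illustration, and the empty price list is an assumed failure case rather than observed behaviour.

```python
def build_record(labels, values, price_result):
    """Pair spec labels with values and attach the two price fields."""
    # zip stops at the shorter list, so a missing value cannot raise IndexError.
    record = dict(zip(labels, values))
    # price_result mirrors the list returned by the price lookup; it is
    # assumed here that it may be empty when the request fails.
    first = price_result[0] if price_result else {}
    record["price_jd"] = first.get("p")
    record["price_mk"] = first.get("m")
    return record


if __name__ == "__main__":
    # Made-up sample data, only to show the shape of the result.
    print(build_record(["Brand", "Model"], ["ExampleBrand"], [{"p": "1.00", "m": "2.00"}]))
```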

Beep beep.. and that is basically the whole idea.. The portal is open as usual, straight to GitHub: https://github.com/shinefairy/spider/tree/master/Crawler

end~