Having covered DistributedFileSystem, we follow the code into the DFSClient constructor, shown below:

/**

* Create a new DFSClient connected to the given nameNodeUri or rpcNamenode.

* If HA is enabled and a positive value is set for

* {@link DFSConfigKeys#DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_KEY} in the

* configuration, the DFSClient will use {@link LossyRetryInvocationHandler}

* as its RetryInvocationHandler. Otherwise one of nameNodeUri or rpcNamenode

* must be null.

*/

@VisibleForTesting

public DFSClient(URI nameNodeUri, ClientProtocol rpcNamenode,Configuration conf, FileSystem.Statistics stats)

throws IOException {

// Copy only the required DFSClient configuration

this.dfsClientConf = new Conf(conf);

if (this.dfsClientConf.useLegacyBlockReaderLocal) {

LOG.debug("Using legacy short-circuit local reads.");

}

this.conf = conf; // the HDFS configuration

this.stats = stats; // client statistics, including the bytes read and written by the client

// getSocketFactory is described below

this.socketFactory = NetUtils.getSocketFactory(conf, ClientProtocol.class);

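// dtpReplaceDatanodeOnFailure is the policy for handling a DataNode that fails while the

// client is transferring data. If replacement is disabled, a failed DataNode is not replaced;

// if it is enabled, the client obtains another DataNode and substitutes it for the failed one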

this.dtpReplaceDatanodeOnFailure = ReplaceDatanodeOnFailure.get(conf);

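// Get the current user, used for permission checks: for example whether the user may read,

// write or delete an HDFS file, or may use the requested resources when submitting a job.

// For details see https://blog.csdn.net/u011491148/article/details/45868467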

this.ugi = UserGroupInformation.getCurrentUser();

// authority part of the NameNode URI (user info, host and port)

this.authority = nameNodeUri == null? "null": nameNodeUri.getAuthority();

// client name (not a user name): built from the task id, a random int and the thread id

this.clientName = "DFSClient_" + dfsClientConf.taskId + "_" +

DFSUtil.getRandom().nextInt() + "_" + Thread.currentThread().getId();

// KeyProvider, used for decrypting encrypted data

provider = DFSUtil.createKeyProvider(conf);

if (LOG.isDebugEnabled()) {

if (provider == null) {

LOG.debug("No KeyProvider found.");

} else {

LOG.debug("Found KeyProvider: " + provider.toString());

}

}

int numResponseToDrop = conf.getInt(

DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_KEY,

DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_DEFAULT);

NameNodeProxies.ProxyAndInfo<ClientProtocol> proxyInfo = null;

AtomicBoolean nnFallbackToSimpleAuth = new AtomicBoolean(false);

if (numResponseToDrop > 0) {

// This case is used for testing.

LOG.warn(DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_KEY

+ " is set to " + numResponseToDrop

+ ", this hacked client will proactively drop responses");

proxyInfo = NameNodeProxies.createProxyWithLossyRetryHandler(conf,

nameNodeUri, ClientProtocol.class, numResponseToDrop,

nnFallbackToSimpleAuth);

}

if (proxyInfo != null) {

this.dtService = proxyInfo.getDelegationTokenService();

this.namenode = proxyInfo.getProxy();

} else if (rpcNamenode != null) {

// This case is used for testing.

Preconditions.checkArgument(nameNodeUri == null);

this.namenode = rpcNamenode;

dtService = null;

} else {

Preconditions.checkArgument(nameNodeUri != null,

"null URI");

// create the RPC proxy via NameNodeProxies.createProxy(); described in detail below

proxyInfo = NameNodeProxies.createProxy(conf, nameNodeUri,

ClientProtocol.class, nnFallbackToSimpleAuth);

this.dtService = proxyInfo.getDelegationTokenService();

this.namenode = proxyInfo.getProxy();

}

String localInterfaces[] =

conf.getTrimmedStrings(DFSConfigKeys.DFS_CLIENT_LOCAL_INTERFACES);

localInterfaceAddrs = getLocalInterfaceAddrs(localInterfaces);

if (LOG.isDebugEnabled() && 0 != localInterfaces.length) {

LOG.debug("Using local interfaces [" +

Joiner.on(',').join(localInterfaces)+ "] with addresses [" +

Joiner.on(',').join(localInterfaceAddrs) + "]");

}

Boolean readDropBehind = (conf.get(DFS_CLIENT_CACHE_DROP_BEHIND_READS) == null) ?

null : conf.getBoolean(DFS_CLIENT_CACHE_DROP_BEHIND_READS, false);

Long readahead = (conf.get(DFS_CLIENT_CACHE_READAHEAD) == null) ?

null : conf.getLong(DFS_CLIENT_CACHE_READAHEAD, 0);

Boolean writeDropBehind = (conf.get(DFS_CLIENT_CACHE_DROP_BEHIND_WRITES) == null) ?

null : conf.getBoolean(DFS_CLIENT_CACHE_DROP_BEHIND_WRITES, false);

this.defaultReadCachingStrategy =

new CachingStrategy(readDropBehind, readahead);

this.defaultWriteCachingStrategy =

new CachingStrategy(writeDropBehind, readahead);

this.clientContext = ClientContext.get(

conf.get(DFS_CLIENT_CONTEXT, DFS_CLIENT_CONTEXT_DEFAULT),

dfsClientConf);

this.hedgedReadThresholdMillis = conf.getLong(

DFSConfigKeys.DFS_DFSCLIENT_HEDGED_READ_THRESHOLD_MILLIS,

DFSConfigKeys.DEFAULT_DFSCLIENT_HEDGED_READ_THRESHOLD_MILLIS);

int numThreads = conf.getInt(

DFSConfigKeys.DFS_DFSCLIENT_HEDGED_READ_THREADPOOL_SIZE,

DFSConfigKeys.DEFAULT_DFSCLIENT_HEDGED_READ_THREADPOOL_SIZE);

if (numThreads > 0) {

this.initThreadsNumForHedgedReads(numThreads);

}

this.saslClient = new SaslDataTransferClient(

conf, DataTransferSaslUtil.getSaslPropertiesResolver(conf),

TrustedChannelResolver.getInstance(conf), nnFallbackToSimpleAuth);

}

Next we step into the call this.socketFactory = NetUtils.getSocketFactory(conf, ClientProtocol.class); the code of getSocketFactory is as follows:

/**

* Get the socket factory for the given class according to its

* configuration parameter

* <tt>hadoop.rpc.socket.factory.class.<ClassName></tt>. When no

* such parameter exists then fall back on the default socket factory as

* configured by <tt>hadoop.rpc.socket.factory.class.default</tt>. If

* this default socket factory is not configured, then fall back on the JVM

* default socket factory.

*

* @param conf the configuration

* @param clazz the class (usually a {@link VersionedProtocol})

* @return a socket factory

*/

public static SocketFactory getSocketFactory(Configuration conf,

Class<?> clazz) {

SocketFactory factory = null;

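// clazz is ClientProtocol.class, so the key read here is

// hadoop.rpc.socket.factory.class.ClientProtocol. While resolving it, conf.get() does two things:

// (1) if the key is deprecated, it is replaced by the new key;

// (2) ${...} variable references in the value are replaced by the corresponding strings.

// The result is the fully-qualified class name of the socket factory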

String propValue =

conf.get("hadoop.rpc.socket.factory.class." + clazz.getSimpleName());

if ((propValue != null) && (propValue.length() > 0))

// load the class with that name and create an instance of it (a SocketFactory)

factory = getSocketFactoryFromProperty(conf, propValue);

if (factory == null)

// if factory is still null, fall back on the default socket factory

factory = getDefaultSocketFactory(conf);

return factory;

}

Next, the call factory = getSocketFactoryFromProperty(conf, propValue); is executed:

/**

* Get the socket factory corresponding to the given proxy URI. If the

* given proxy URI corresponds to an absence of configuration parameter,

* returns null. If the URI is malformed raises an exception.

*

* @param propValue the property which is the class name of the

* SocketFactory to instantiate; assumed non null and non empty.

* @return a socket factory as defined in the property value.

*/

public static SocketFactory getSocketFactoryFromProperty(

Configuration conf, String propValue) {

try {

// get the Class object for the given class name

Class<?> theClass = conf.getClassByName(propValue);

// create an instance from the Class object

return (SocketFactory) ReflectionUtils.newInstance(theClass, conf);

} catch (ClassNotFoundException cnfe) {

throw new RuntimeException("Socket Factory class not found: " + cnfe);

}

}

We now step into newInstance in ReflectionUtils, which creates an object of the given class and initialises it. The code is as follows:

/** Create an object for the given class and initialize it from conf

*

* @param theClass class of which an object is created

* @param conf Configuration

* @return a new object

*/

@SuppressWarnings("unchecked")

public static <T> T newInstance(Class<T> theClass, Configuration conf) {

T result;

try {

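// first look up the class's constructor in the cache

Constructor<T> meth = (Constructor<T>) CONSTRUCTOR_CACHE.get(theClass);

if (meth == null) {

// not cached: get the no-argument constructor declared by the class

meth = theClass.getDeclaredConstructor(EMPTY_ARRAY);

// suppress access checks so that a non-public constructor can also be invoked

meth.setAccessible(true);

// store the constructor in the cache

CONSTRUCTOR_CACHE.put(theClass, meth);

}

// create the object through the constructor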

result = meth.newInstance();

} catch (Exception e) {

throw new RuntimeException(e);

}

setConf(result, conf);

return result;

}

We now return to the DFSClient constructor and analyse the following code:

// read the value of dfs.client.test.drop.namenode.response.number from the configuration

int numResponseToDrop = conf.getInt(

DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_KEY,

DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_DEFAULT);

NameNodeProxies.ProxyAndInfo<ClientProtocol> proxyInfo = null;

AtomicBoolean nnFallbackToSimpleAuth = new AtomicBoolean(false);

// a value greater than 0 enables the test-only path below

if (numResponseToDrop > 0) {

// This case is used for testing.

LOG.warn(DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_KEY

+ " is set to " + numResponseToDrop

+ ", this hacked client will proactively drop responses");

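// test-only path: builds a proxy that deliberately drops NameNode responses ("hacked" here

// means rigged for testing, not attacked); it requires an HA configuration and is covered later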

proxyInfo = NameNodeProxies.createProxyWithLossyRetryHandler(conf,

nameNodeUri, ClientProtocol.class, numResponseToDrop,

nnFallbackToSimpleAuth);

}

// the case where proxyInfo is not null is not covered for now; we only analyse the final else branch

if (proxyInfo != null) {

this.dtService = proxyInfo.getDelegationTokenService();

this.namenode = proxyInfo.getProxy();

} else if (rpcNamenode != null) {

// This case is used for testing.

Preconditions.checkArgument(nameNodeUri == null);

this.namenode = rpcNamenode;

dtService = null;

} else {

// argument check: throws an exception if nameNodeUri is null

Preconditions.checkArgument(nameNodeUri != null,

"null URI");

// create the proxy

proxyInfo = NameNodeProxies.createProxy(conf, nameNodeUri,

ClientProtocol.class, nnFallbackToSimpleAuth);

this.dtService = proxyInfo.getDelegationTokenService();

this.namenode = proxyInfo.getProxy();

}

Appended 2018-08-30 23:26 (start)

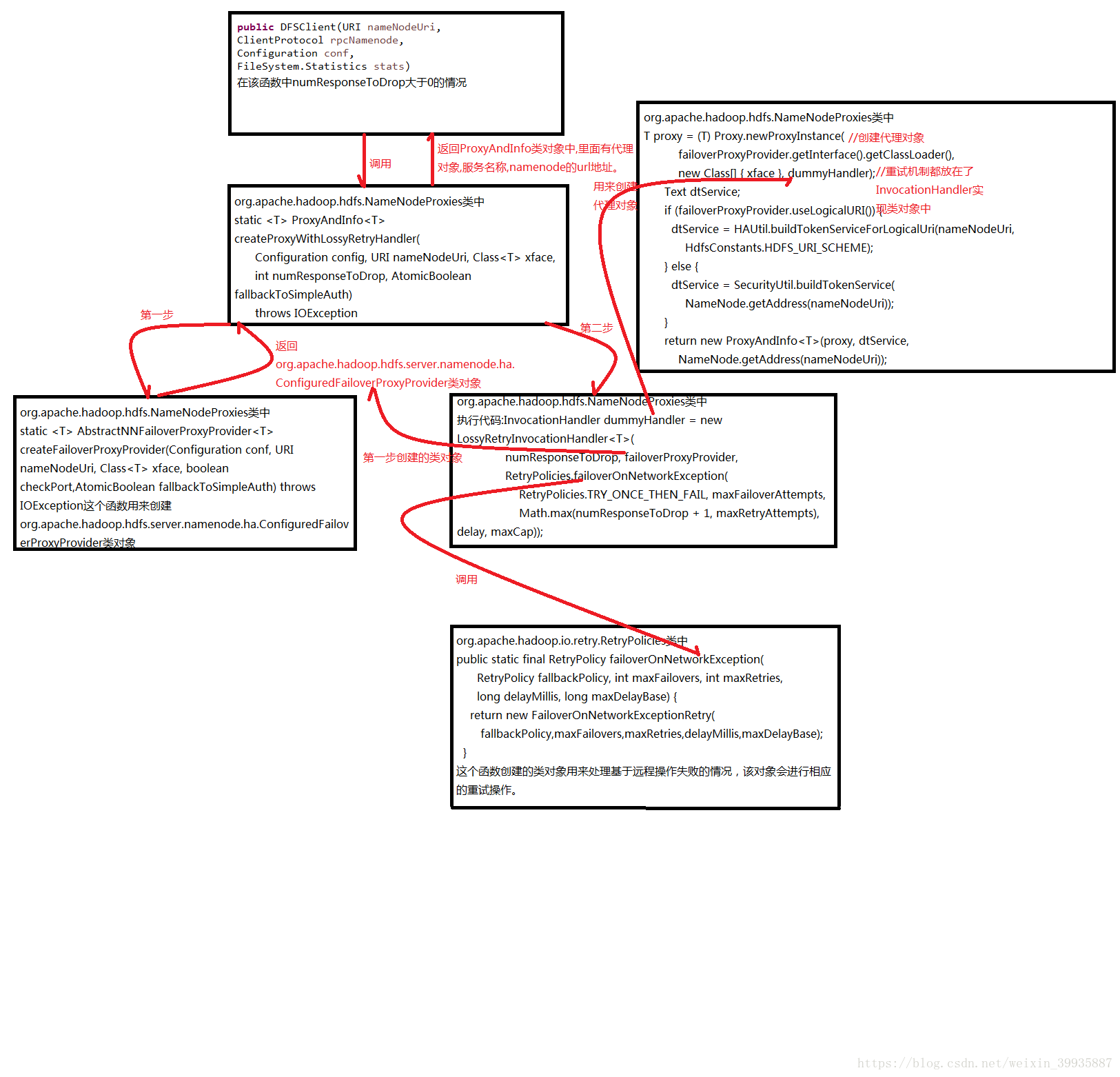

Let us analyse the statement proxyInfo = NameNodeProxies.createProxyWithLossyRetryHandler(conf, nameNodeUri, ClientProtocol.class, numResponseToDrop, nnFallbackToSimpleAuth);. The code of createProxyWithLossyRetryHandler is as follows:

/**

* Generate a dummy namenode proxy instance that utilizes our hacked

* {@link LossyRetryInvocationHandler}. Proxy instance generated using this

* method will proactively drop RPC responses. Currently this method only

* support HA setup. null will be returned if the given configuration is not

* for HA.

*

* @param config the configuration containing the required IPC

* properties, client failover configurations, etc.

* @param nameNodeUri the URI pointing either to a specific NameNode

* or to a logical nameservice.

* @param xface the IPC interface which should be created

* @param numResponseToDrop The number of responses to drop for each RPC call

* @param fallbackToSimpleAuth set to true or false during calls to indicate if

* a secure client falls back to simple auth

* @return an object containing both the proxy and the associated

* delegation token service it corresponds to. Will return null if the

* given configuration does not support HA.

* @throws IOException if there is an error creating the proxy

*/

@SuppressWarnings("unchecked")

public static <T> ProxyAndInfo<T> createProxyWithLossyRetryHandler(

Configuration config, URI nameNodeUri, Class<T> xface,

int numResponseToDrop, AtomicBoolean fallbackToSimpleAuth)

throws IOException {

// throws an exception if numResponseToDrop is less than or equal to 0

Preconditions.checkArgument(numResponseToDrop > 0);

// Create a failover proxy provider. When the active NameNode fails, the provider switches

// automatically to the standby NameNode and treats it as the new active node; this safeguard is the HA mode

AbstractNNFailoverProxyProvider<T> failoverProxyProvider =

createFailoverProxyProvider(config, nameNodeUri, xface, true,

fallbackToSimpleAuth);

// a non-null provider means HA mode

if (failoverProxyProvider != null) { // HA case

int delay = config.getInt(

DFS_CLIENT_FAILOVER_SLEEPTIME_BASE_KEY,

DFS_CLIENT_FAILOVER_SLEEPTIME_BASE_DEFAULT);

int maxCap = config.getInt(

DFS_CLIENT_FAILOVER_SLEEPTIME_MAX_KEY,

DFS_CLIENT_FAILOVER_SLEEPTIME_MAX_DEFAULT);

int maxFailoverAttempts = config.getInt(

DFS_CLIENT_FAILOVER_MAX_ATTEMPTS_KEY,

DFS_CLIENT_FAILOVER_MAX_ATTEMPTS_DEFAULT);

int maxRetryAttempts = config.getInt(

DFS_CLIENT_RETRY_MAX_ATTEMPTS_KEY,

DFS_CLIENT_RETRY_MAX_ATTEMPTS_DEFAULT);

// create an object of a class that implements the InvocationHandler interface

InvocationHandler dummyHandler = new LossyRetryInvocationHandler<T>(

numResponseToDrop, failoverProxyProvider,

RetryPolicies.failoverOnNetworkException(

RetryPolicies.TRY_ONCE_THEN_FAIL, maxFailoverAttempts,

Math.max(numResponseToDrop + 1, maxRetryAttempts), delay,

maxCap));

T proxy = (T) Proxy.newProxyInstance(

failoverProxyProvider.getInterface().getClassLoader(),

new Class[] { xface }, dummyHandler);

Text dtService;

if (failoverProxyProvider.useLogicalURI()) {

dtService = HAUtil.buildTokenServiceForLogicalUri(nameNodeUri,

HdfsConstants.HDFS_URI_SCHEME);

} else {

dtService = SecurityUtil.buildTokenService(

NameNode.getAddress(nameNodeUri));

}

return new ProxyAndInfo<T>(proxy, dtService,

NameNode.getAddress(nameNodeUri));

} else {

LOG.warn("Currently creating proxy using " +

"LossyRetryInvocationHandler requires NN HA setup");

return null;

}

}

Stepping into createFailoverProxyProvider, the code is as follows:

/** Creates the Failover proxy provider instance*/

@VisibleForTesting

public static <T> AbstractNNFailoverProxyProvider<T> createFailoverProxyProvider(

Configuration conf, URI nameNodeUri, Class<T> xface, boolean checkPort,

AtomicBoolean fallbackToSimpleAuth) throws IOException {

Class<FailoverProxyProvider<T>> failoverProxyProviderClass = null;

AbstractNNFailoverProxyProvider<T> providerNN;

Preconditions.checkArgument(

xface.isAssignableFrom(NamenodeProtocols.class),

"Interface %s is not a NameNode protocol", xface);

try {

// Obtain the class of the proxy provider

// Using conf and nameNodeUri, read the configuration key

// dfs.client.failover.proxy.provider.<host> and load its value as a Class object.

// In a typical HA setup the fully-qualified name of that class is

// org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

failoverProxyProviderClass = getFailoverProxyProviderClass(conf,

nameNodeUri);

// if it is null, return null directly

if (failoverProxyProviderClass == null) {

return null;

}

// Create a proxy provider instance.

// otherwise get the constructor of that class

Constructor<FailoverProxyProvider<T>> ctor = failoverProxyProviderClass

.getConstructor(Configuration.class, URI.class, Class.class);

// create an object of that class

FailoverProxyProvider<T> provider = ctor.newInstance(conf, nameNodeUri,

xface);

// If the proxy provider is of an old implementation, wrap it.

if (!(provider instanceof AbstractNNFailoverProxyProvider)) {

providerNN = new WrappedFailoverProxyProvider<T>(provider);

} else {

providerNN = (AbstractNNFailoverProxyProvider<T>)provider;

}

} catch (Exception e) {

String message = "Couldn't create proxy provider " + failoverProxyProviderClass;

if (LOG.isDebugEnabled()) {

LOG.debug(message, e);

}

if (e.getCause() instanceof IOException) {

throw (IOException) e.getCause();

} else {

throw new IOException(message, e);

}

}

// Check the port in the URI, if it is logical.

if (checkPort && providerNN.useLogicalURI()) {

int port = nameNodeUri.getPort();

if (port > 0 && port != NameNode.DEFAULT_PORT) {

// Throwing here without any cleanup is fine since we have not

// actually created the underlying proxies yet.

throw new IOException("Port " + port + " specified in URI "

+ nameNodeUri + " but host '" + nameNodeUri.getHost()

+ "' is a logical (HA) namenode"

+ " and does not use port information.");

}

}

providerNN.setFallbackToSimpleAuth(fallbackToSimpleAuth);

return providerNN;

}

The flow chart is as follows:

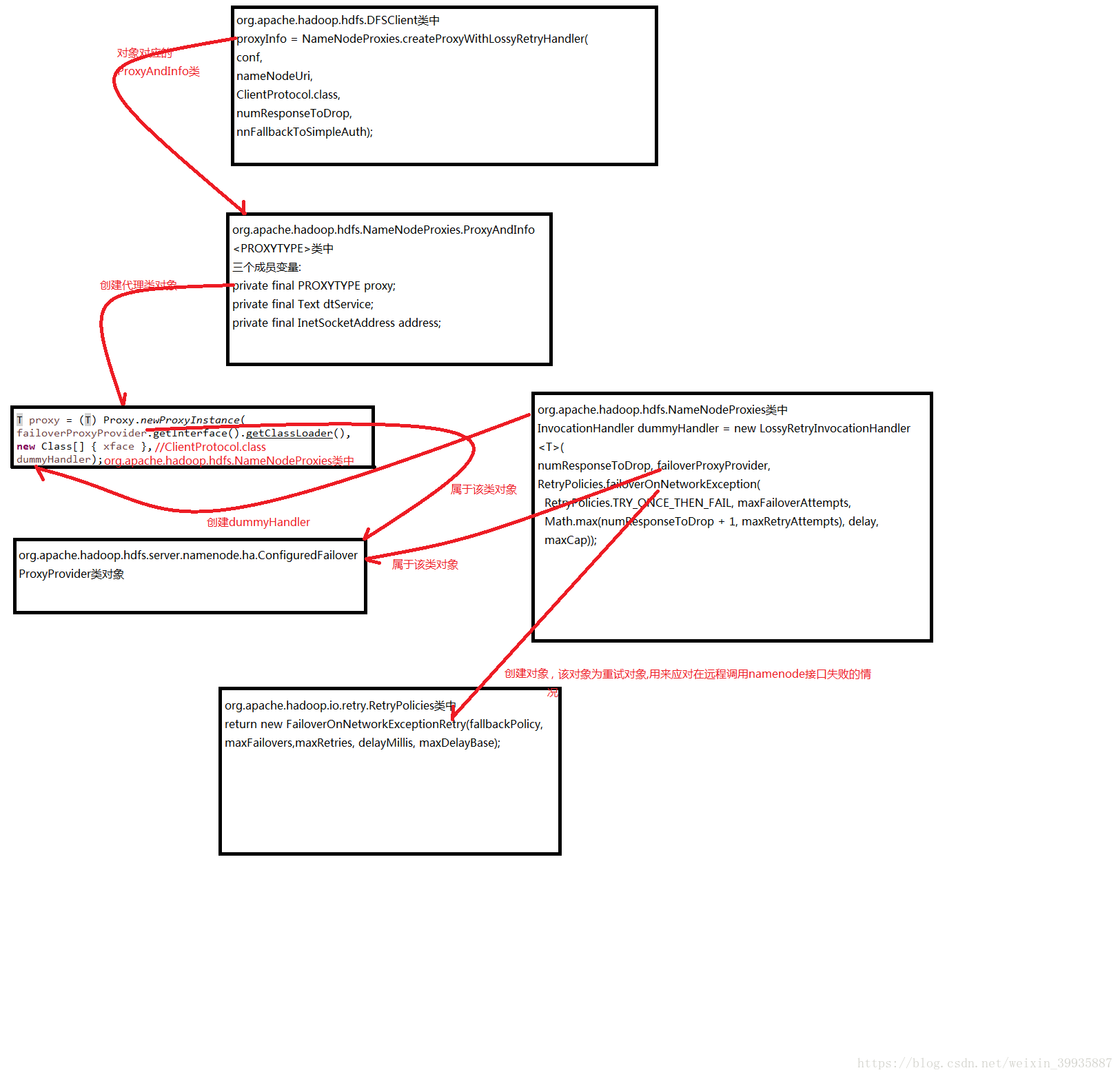

Structure diagram of the proxy classes:

Appended 2018-08-30 23:26 (end)

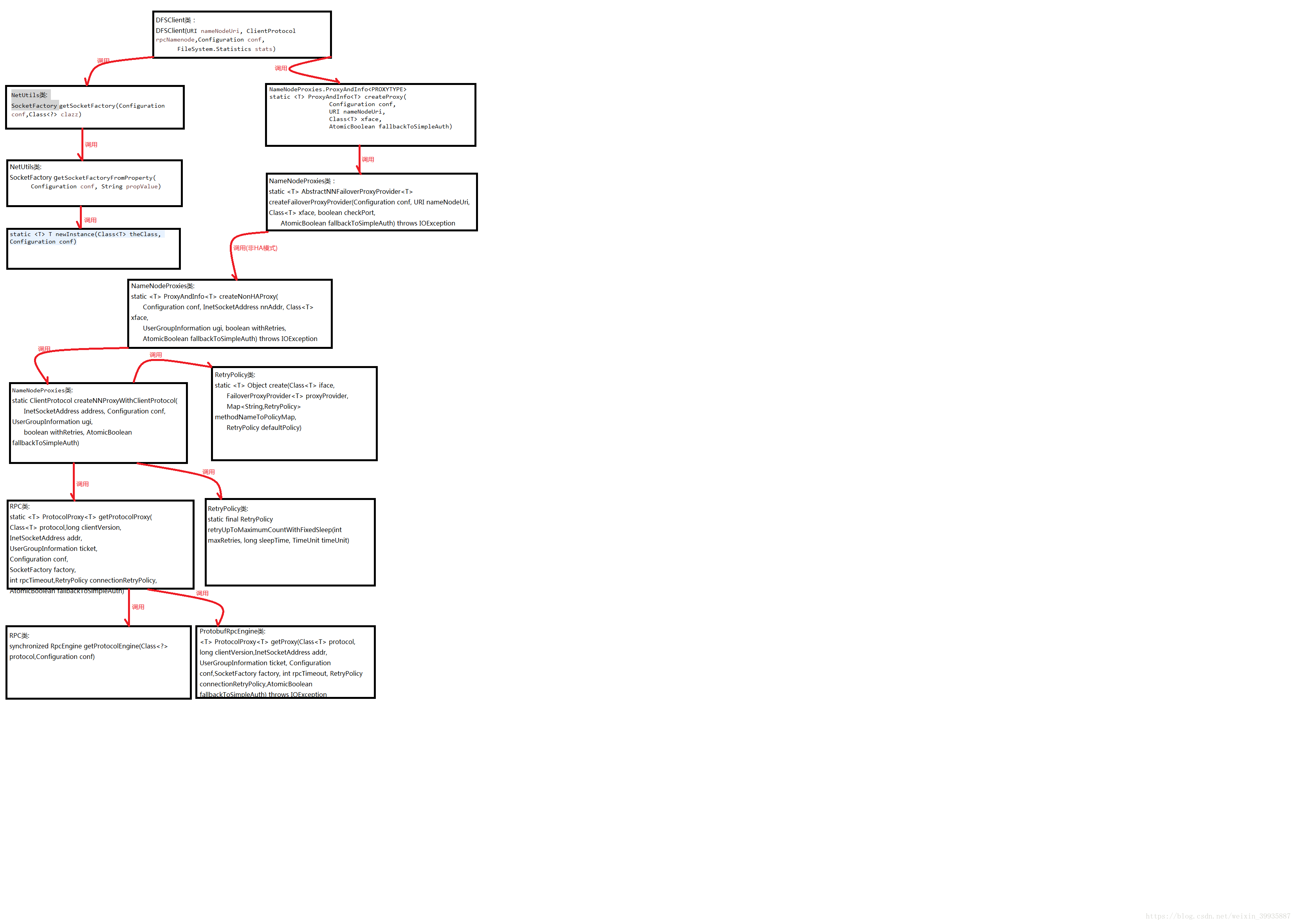
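The reflective construction performed by createFailoverProxyProvider can be illustrated with a small, self-contained sketch. Everything in it is a hypothetical stand-in rather than Hadoop code: a plain Map replaces Configuration, and Provider, AbstractProvider, WrappedProvider, LegacyProvider and ProviderFactorySketch are invented names. Only the key prefix dfs.client.failover.proxy.provider and the overall shape follow the walkthrough above: look up a class name by host, return null when nothing is configured, build the object through a three-argument constructor, and wrap old-style implementations.

```java
import java.lang.reflect.Constructor;
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-ins for FailoverProxyProvider and its newer abstract base class.
interface Provider {
  String describe();
}

abstract class AbstractProvider implements Provider {
  boolean useLogicalUri() { return true; }
}

// Adapter that lets an old-style Provider be used where an AbstractProvider is expected.
class WrappedProvider extends AbstractProvider {
  private final Provider inner;
  WrappedProvider(Provider inner) { this.inner = inner; }
  @Override public String describe() { return "wrapped(" + inner.describe() + ")"; }
}

// An "old-style" implementation: it implements only the interface.
class LegacyProvider implements Provider {
  private final URI uri;
  public LegacyProvider(Map<String, String> conf, URI uri, Class<?> iface) { this.uri = uri; }
  @Override public String describe() { return "legacy@" + uri.getHost(); }
}

class ProviderFactorySketch {
  static final String PREFIX = "dfs.client.failover.proxy.provider";

  /** Returns null when no provider is configured for the URI's host (the non-HA case). */
  static AbstractProvider create(Map<String, String> conf, URI uri, Class<?> iface) {
    String className = conf.get(PREFIX + "." + uri.getHost());
    if (className == null) {
      return null;
    }
    try {
      // Load the configured class and call its (conf, uri, iface) constructor by reflection.
      Class<?> cls = Class.forName(className);
      Constructor<?> ctor = cls.getConstructor(Map.class, URI.class, Class.class);
      Provider provider = (Provider) ctor.newInstance(conf, uri, iface);
      // Old implementations are wrapped so callers always see the newer type.
      return (provider instanceof AbstractProvider)
          ? (AbstractProvider) provider
          : new WrappedProvider(provider);
    } catch (ReflectiveOperationException e) {
      throw new IllegalStateException("Couldn't create provider " + className, e);
    }
  }

  public static void main(String[] args) {
    Map<String, String> conf = new HashMap<String, String>();
    conf.put(PREFIX + ".mycluster", LegacyProvider.class.getName());
    System.out.println(create(conf, URI.create("hdfs://mycluster"), Runnable.class).describe());
    System.out.println(create(conf, URI.create("hdfs://other:8020"), Runnable.class));
  }
}
```

The second lookup finds no entry for the host other, so the sketch returns null, which is the signal the caller uses to choose the non-HA path.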

We go straight into createProxy in the else branch. This function creates the proxy for the NameNode; the code is as follows:

/**

* Creates the namenode proxy with the passed protocol. This will handle

* creation of either HA- or non-HA-enabled proxy objects, depending upon

* if the provided URI is a configured logical URI.

* In other words, whether an HA or a non-HA proxy object is created depends on the

* related settings in the configuration.

*

* @param conf the configuration containing the required IPC

* properties, client failover configurations, etc.

* @param nameNodeUri the URI pointing either to a specific NameNode

* or to a logical nameservice.

* @param xface the IPC interface which should be created

* @param fallbackToSimpleAuth set to true or false during calls to indicate if

* a secure client falls back to simple auth

* @return an object containing both the proxy and the associated

* delegation token service it corresponds to

* @throws IOException if there is an error creating the proxy

**/

@SuppressWarnings("unchecked")

public static <T> ProxyAndInfo<T> createProxy(Configuration conf,

URI nameNodeUri, Class<T> xface, AtomicBoolean fallbackToSimpleAuth)

throws IOException {

AbstractNNFailoverProxyProvider<T> failoverProxyProvider = createFailoverProxyProvider(conf, nameNodeUri, xface, true,

fallbackToSimpleAuth);

if (failoverProxyProvider == null) {

// Non-HA case

return createNonHAProxy(conf, NameNode.getAddress(nameNodeUri), xface,

UserGroupInformation.getCurrentUser(), true, fallbackToSimpleAuth);

} else {

// HA case

Conf config = new Conf(conf);

T proxy = (T) RetryProxy.create(xface, failoverProxyProvider,

RetryPolicies.failoverOnNetworkException(

RetryPolicies.TRY_ONCE_THEN_FAIL, config.maxFailoverAttempts,

config.maxRetryAttempts, config.failoverSleepBaseMillis,

config.failoverSleepMaxMillis));

Text dtService;

if (failoverProxyProvider.useLogicalURI()) {

dtService = HAUtil.buildTokenServiceForLogicalUri(nameNodeUri,

HdfsConstants.HDFS_URI_SCHEME);

} else {

dtService = SecurityUtil.buildTokenService(

NameNode.getAddress(nameNodeUri));

}

return new ProxyAndInfo<T>(proxy, dtService,

NameNode.getAddress(nameNodeUri));

}

}

Let us first analyse the first statement of createProxy:

AbstractNNFailoverProxyProvider<T> failoverProxyProvider = createFailoverProxyProvider(conf, nameNodeUri, xface, true, fallbackToSimpleAuth);

This calls createFailoverProxyProvider in class org.apache.hadoop.hdfs.NameNodeProxies, whose code is as follows:

/** Creates the Failover proxy provider instance*/

@VisibleForTesting

public static <T> AbstractNNFailoverProxyProvider<T> createFailoverProxyProvider(

Configuration conf, URI nameNodeUri, Class<T> xface, boolean checkPort,

AtomicBoolean fallbackToSimpleAuth) throws IOException {

Class<FailoverProxyProvider<T>> failoverProxyProviderClass = null;

AbstractNNFailoverProxyProvider<T> providerNN;

Preconditions.checkArgument(

xface.isAssignableFrom(NamenodeProtocols.class),

"Interface %s is not a NameNode protocol", xface);

try {

// Obtain the class of the proxy provider

// Using conf and nameNodeUri, read the configuration key

// dfs.client.failover.proxy.provider.<host> and load its value as a Class object

failoverProxyProviderClass = getFailoverProxyProviderClass(conf,

nameNodeUri);

// if it is null, return null directly

if (failoverProxyProviderClass == null) {

return null;

}

// Create a proxy provider instance.

// otherwise get the constructor of that class

Constructor<FailoverProxyProvider<T>> ctor = failoverProxyProviderClass

.getConstructor(Configuration.class, URI.class, Class.class);

// create an object of that class

FailoverProxyProvider<T> provider = ctor.newInstance(conf, nameNodeUri,

xface);

// If the proxy provider is of an old implementation, wrap it.

if (!(provider instanceof AbstractNNFailoverProxyProvider)) {

providerNN = new WrappedFailoverProxyProvider<T>(provider);

} else {

providerNN = (AbstractNNFailoverProxyProvider<T>)provider;

}

} catch (Exception e) {

String message = "Couldn't create proxy provider " + failoverProxyProviderClass;

if (LOG.isDebugEnabled()) {

LOG.debug(message, e);

}

if (e.getCause() instanceof IOException) {

throw (IOException) e.getCause();

} else {

throw new IOException(message, e);

}

}

// Check the port in the URI, if it is logical.

if (checkPort && providerNN.useLogicalURI()) {

int port = nameNodeUri.getPort();

if (port > 0 && port != NameNode.DEFAULT_PORT) {

// Throwing here without any cleanup is fine since we have not

// actually created the underlying proxies yet.

throw new IOException("Port " + port + " specified in URI "

+ nameNodeUri + " but host '" + nameNodeUri.getHost()

+ "' is a logical (HA) namenode"

+ " and does not use port information.");

}

}

providerNN.setFallbackToSimpleAuth(fallbackToSimpleAuth);

return providerNN;

}

Back to createProxy, in class org.apache.hadoop.hdfs.NameNodeProxies.

Continuing, createProxy uses the value failoverProxyProvider returned by createFailoverProxyProvider to decide whether HA is in use: if failoverProxyProvider is null the non-HA path is taken, otherwise the HA path.
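As a rough illustration of this branch, the sketch below returns a target directly when there is only one (standing in for the non-HA path) and otherwise wraps the targets in a dynamic proxy that moves to the next target after a simulated network error (standing in for the HA path). NameService, FailoverHandler and HaDecisionSketch are hypothetical names, and using UncheckedIOException as the "network error" is an assumption made for brevity. The real HA path goes through RetryProxy with RetryPolicies.failoverOnNetworkException, which also handles sleeping and retry limits that are omitted here.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.Arrays;
import java.util.List;

// Hypothetical service interface standing in for ClientProtocol.
interface NameService {
  String whoAmI();
}

// On a simulated network error, switch to the next target and try again.
class FailoverHandler implements InvocationHandler {
  private final List<NameService> targets;
  private final int maxFailovers;
  private int current = 0;

  FailoverHandler(List<NameService> targets, int maxFailovers) {
    this.targets = targets;
    this.maxFailovers = maxFailovers;
  }

  @Override
  public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
    int failovers = 0;
    while (true) {
      try {
        return method.invoke(targets.get(current), args);
      } catch (InvocationTargetException e) {
        Throwable cause = e.getCause();
        // Give up on non-network errors or once the failover budget is used up.
        if (!(cause instanceof UncheckedIOException) || failovers >= maxFailovers) {
          throw cause;
        }
        failovers++;
        current = (current + 1) % targets.size();
      }
    }
  }
}

class HaDecisionSketch {
  // One target: return it directly (non-HA). Several: wrap them in a failover proxy (HA).
  static NameService createProxy(List<NameService> targets, int maxFailovers) {
    if (targets.size() == 1) {
      return targets.get(0);
    }
    return (NameService) Proxy.newProxyInstance(
        NameService.class.getClassLoader(),
        new Class<?>[] { NameService.class },
        new FailoverHandler(targets, maxFailovers));
  }

  public static void main(String[] args) {
    NameService down = () -> {
      throw new UncheckedIOException(new IOException("nn1 unreachable"));
    };
    NameService up = () -> "nn2";
    System.out.println(createProxy(Arrays.asList(down, up), 3).whoAmI());
  }
}
```

In main the first target always fails, so the call is answered by the second one after a single failover.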

We analyse the non-HA path first. It calls createNonHAProxy, also in class org.apache.hadoop.hdfs.NameNodeProxies:

/**

* Creates an explicitly non-HA-enabled proxy object. Most of the time you

* don't want to use this, and should instead use {@link NameNodeProxies#createProxy}.

*

* @param conf the configuration object

* @param nnAddr address of the remote NN to connect to

* @param xface the IPC interface which should be created

* @param ugi the user who is making the calls on the proxy object

* @param withRetries certain interfaces have a non-standard retry policy

* @param fallbackToSimpleAuth - set to true or false during this method to

* indicate if a secure client falls back to simple auth

* @return an object containing both the proxy and the associated

* delegation token service it corresponds to

* @throws IOException

*/

@SuppressWarnings("unchecked")

public static <T> ProxyAndInfo<T> createNonHAProxy(

Configuration conf, InetSocketAddress nnAddr, Class<T> xface,

UserGroupInformation ugi, boolean withRetries,

AtomicBoolean fallbackToSimpleAuth) throws IOException {

Text dtService = SecurityUtil.buildTokenService(nnAddr);

T proxy;

if (xface == ClientProtocol.class) {

// proxy through which the client interacts with the NameNode

proxy = (T) createNNProxyWithClientProtocol(nnAddr, conf, ugi,

withRetries, fallbackToSimpleAuth);

} else if (xface == JournalProtocol.class) {

// proxy for JournalProtocol, used between the active NameNode and a backup NameNode

proxy = (T) createNNProxyWithJournalProtocol(nnAddr, conf, ugi);

} else if (xface == NamenodeProtocol.class) {

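// proxy for NamenodeProtocol, used by the SecondaryNameNode (and tools such as the Balancer)

// to talk to the NameNode, not by ordinary clients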

proxy = (T) createNNProxyWithNamenodeProtocol(nnAddr, conf, ugi,

withRetries);

} else if (xface == GetUserMappingsProtocol.class) {

proxy = (T) createNNProxyWithGetUserMappingsProtocol(nnAddr, conf, ugi);

} else if (xface == RefreshUserMappingsProtocol.class) {

proxy = (T) createNNProxyWithRefreshUserMappingsProtocol(nnAddr, conf, ugi);

} else if (xface == RefreshAuthorizationPolicyProtocol.class) {

proxy = (T) createNNProxyWithRefreshAuthorizationPolicyProtocol(nnAddr,

conf, ugi);

} else if (xface == RefreshCallQueueProtocol.class) {

proxy = (T) createNNProxyWithRefreshCallQueueProtocol(nnAddr, conf, ugi);

} else {

String message = "Unsupported protocol found when creating the proxy " +

"connection to NameNode: " +

((xface != null) ? xface.getClass().getName() : "null");

LOG.error(message);

throw new IllegalStateException(message);

}

return new ProxyAndInfo<T>(proxy, dtService, nnAddr);

}

Here we analyse proxy = (T) createNNProxyWithClientProtocol(nnAddr, conf, ugi, withRetries, fallbackToSimpleAuth);. The code is as follows:

private static ClientProtocol createNNProxyWithClientProtocol(

InetSocketAddress address, Configuration conf, UserGroupInformation ugi,

boolean withRetries, AtomicBoolean fallbackToSimpleAuth)

throws IOException {

// Stores in conf an entry whose key is "rpc.engine." + ClientNamenodeProtocolPB.class.getName()

// and whose value is ProtobufRpcEngine.class.getName(). This requires ProtobufRpcEngine to

// implement or extend RpcEngine (or to be RpcEngine itself). After this call, reading that key

// from conf yields ProtobufRpcEngine

RPC.setProtocolEngine(conf, ClientNamenodeProtocolPB.class, ProtobufRpcEngine.class);

// DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_ENABLED_KEY is dfs.client.retry.policy.enabled

// DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_ENABLED_DEFAULT is false

// DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_SPEC_KEY is dfs.client.retry.policy.spec

// DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_SPEC_DEFAULT is 10000,6,60000,10

// This call reads these settings from conf and builds the matching RetryPolicy object;

// see getDefaultRetryPolicy for details, which are not expanded here

final RetryPolicy defaultPolicy =

RetryUtils.getDefaultRetryPolicy(

conf,

DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_ENABLED_KEY,

DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_ENABLED_DEFAULT,

DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_SPEC_KEY,

DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_SPEC_DEFAULT,

SafeModeException.class);

// get the protocol version

final long version = RPC.getProtocolVersion(ClientNamenodeProtocolPB.class);

// build the ClientNamenodeProtocolPB proxy object

ClientNamenodeProtocolPB proxy = RPC.getProtocolProxy(

ClientNamenodeProtocolPB.class, version, address, ugi, conf,

NetUtils.getDefaultSocketFactory(conf),

org.apache.hadoop.ipc.Client.getTimeout(conf), defaultPolicy,

fallbackToSimpleAuth).getProxy();

if (withRetries) { // create the proxy with retries

RetryPolicy createPolicy = RetryPolicies

.retryUpToMaximumCountWithFixedSleep(5,

HdfsConstants.LEASE_SOFTLIMIT_PERIOD, TimeUnit.MILLISECONDS);

Map<Class<? extends Exception>, RetryPolicy> remoteExceptionToPolicyMap

= new HashMap<Class<? extends Exception>, RetryPolicy>();

remoteExceptionToPolicyMap.put(AlreadyBeingCreatedException.class,

createPolicy);

RetryPolicy methodPolicy = RetryPolicies.retryByRemoteException(

defaultPolicy, remoteExceptionToPolicyMap);

Map<String, RetryPolicy> methodNameToPolicyMap

= new HashMap<String, RetryPolicy>();

methodNameToPolicyMap.put("create", methodPolicy);

ClientProtocol translatorProxy = new ClientNamenodeProtocolTranslatorPB(proxy);

return (ClientProtocol) RetryProxy.create(

ClientProtocol.class,

new DefaultFailoverProxyProvider<ClientProtocol>(

ClientProtocol.class, translatorProxy),

methodNameToPolicyMap,

defaultPolicy);

} else {

// build a ClientNamenodeProtocolTranslatorPB object and return it;

// note that ClientNamenodeProtocolTranslatorPB holds a ClientNamenodeProtocolPB object

return new ClientNamenodeProtocolTranslatorPB(proxy);

}

}

We continue into getProtocolProxy; the code is as follows:

/**

* Get a protocol proxy that contains a proxy connection to a remote server

* and a set of methods that are supported by the server

* In other words, the protocol proxy wraps a proxy through which the methods offered by the

* remote server can be called as if they were local methods.

*

* @param protocol protocol

* @param clientVersion client's version

* @param addr server address

* @param ticket security ticket

* @param conf configuration

* @param factory socket factory

* @param rpcTimeout max time for each rpc; 0 means no timeout

* @param connectionRetryPolicy retry policy

* @param fallbackToSimpleAuth set to true or false during calls to indicate if

* a secure client falls back to simple auth

* @return the proxy

* @throws IOException if any error occurs

*/

public static <T> ProtocolProxy<T> getProtocolProxy(Class<T> protocol,

long clientVersion,

InetSocketAddress addr,

UserGroupInformation ticket,

Configuration conf,

SocketFactory factory,

int rpcTimeout,

RetryPolicy connectionRetryPolicy,

AtomicBoolean fallbackToSimpleAuth)

throws IOException {

// check whether security (authentication) is enabled for UserGroupInformation

if (UserGroupInformation.isSecurityEnabled()) {

SaslRpcServer.init(conf);

}

/* First getProtocolEngine() obtains from the configuration the engine for protocol, that is,

a ProtobufRpcEngine object; then that object's getProxy function is called.

*/

return getProtocolEngine(protocol, conf).getProxy(protocol, clientVersion,

addr, ticket, conf, factory, rpcTimeout, connectionRetryPolicy,

fallbackToSimpleAuth);

}

Let us look inside getProtocolEngine(protocol, conf); the code is as follows:

// return the RpcEngine configured to handle a protocol

static synchronized RpcEngine getProtocolEngine(Class<?> protocol,

Configuration conf) {

// look up protocol in PROTOCOL_ENGINES, a Map<Class<?>,RpcEngine>; if found, that

// RpcEngine object is returned

RpcEngine engine = PROTOCOL_ENGINES.get(protocol);

// if no engine is cached for this class

if (engine == null) {

// look up the engine class in conf under ENGINE_PROP + "." + protocol.getName(). ENGINE_PROP is

// rpc.engine and protocol.getName() is the fully-qualified name of ClientNamenodeProtocolPB, so

// the key is rpc.engine.org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolPB.

// createNNProxyWithClientProtocol set this key with

// RPC.setProtocolEngine(conf, ClientNamenodeProtocolPB.class, ProtobufRpcEngine.class);

// so conf.getClass returns the Class object of ProtobufRpcEngine. If the key is absent from

// conf, the default is used, namely the Class object of WritableRpcEngine

Class<?> impl = conf.getClass(ENGINE_PROP+"."+protocol.getName(),

WritableRpcEngine.class);

// create an object of that class by reflection

engine = (RpcEngine)ReflectionUtils.newInstance(impl, conf);

// cache it in the static PROTOCOL_ENGINES map for fast lookup later

PROTOCOL_ENGINES.put(protocol, engine);

}

return engine;

}

Back in getProtocolProxy: as analysed above, getProtocolEngine returns a ProtobufRpcEngine object. We continue with that class's getProxy function, shown below:

@Override

@SuppressWarnings("unchecked")

public <T> ProtocolProxy<T> getProxy(Class<T> protocol, long clientVersion,

InetSocketAddress addr, UserGroupInformation ticket, Configuration conf,

SocketFactory factory, int rpcTimeout, RetryPolicy connectionRetryPolicy,

AtomicBoolean fallbackToSimpleAuth) throws IOException {

// first build the InvocationHandler object

final Invoker invoker = new Invoker(protocol, addr, ticket, conf, factory,

rpcTimeout, connectionRetryPolicy, fallbackToSimpleAuth);

// then call Proxy.newProxyInstance() to obtain the dynamic proxy and return it wrapped in a ProtocolProxy

return new ProtocolProxy<T>(protocol, (T) Proxy.newProxyInstance(

protocol.getClassLoader(), new Class[]{protocol}, invoker), false);

}

Let us first look at the Invoker class, a nested class of ProtobufRpcEngine, declared as:

private static class Invoker implements RpcInvocationHandler

RpcInvocationHandler in turn is declared as:

public interface RpcInvocationHandler extends InvocationHandler, Closeable

So Invoker implements InvocationHandler, the interface used by JDK dynamic proxies. Next the following code is executed:

return new ProtocolProxy<T>(protocol, (T) Proxy.newProxyInstance(

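protocol.getClassLoader(), new Class[]{protocol}, invoker), false);

This statement creates a ProtocolProxy object: the protocol argument is assigned to ProtocolProxy's protocol field, the dynamic proxy object to its proxy field, and false to its supportServerMethodCheck field. Here protocol is the Class object of ClientNamenodeProtocolPB and proxy is a dynamic proxy implementing the ClientNamenodeProtocolPB interface.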

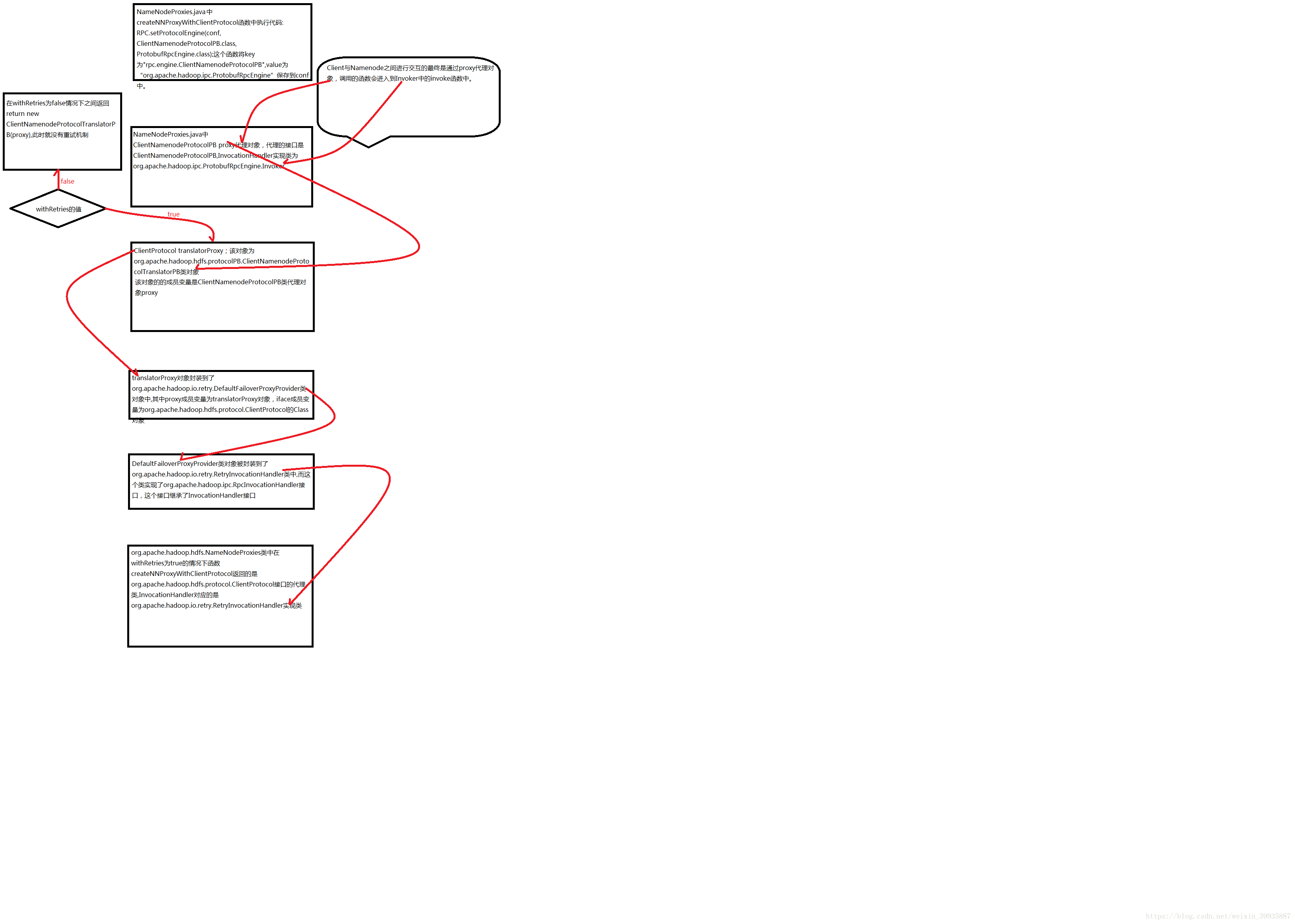
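The mechanism used by getProxy is the standard JDK dynamic proxy, which the following minimal sketch reproduces without any Hadoop dependency. EchoProtocol, RecordingInvoker, ProxyHolder and DynamicProxySketch are hypothetical names: RecordingInvoker plays the role of Invoker and ProxyHolder the role of ProtocolProxy. The handler here only records the method name and fabricates a reply, whereas a real RPC engine would serialise the call and send it to the server at that point.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

// Hypothetical protocol interface standing in for ClientNamenodeProtocolPB.
interface EchoProtocol {
  String echo(String msg);
}

// Plays the role of Invoker: every call made on the proxy lands in invoke().
class RecordingInvoker implements InvocationHandler {
  final List<String> calls = new ArrayList<String>();

  @Override
  public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
    // toString/hashCode/equals also arrive here; answer them with the handler itself.
    if (method.getDeclaringClass() == Object.class) {
      return method.invoke(this, args);
    }
    calls.add(method.getName());
    // A real RPC engine would send the request to the remote server here.
    return "echo:" + args[0];
  }
}

// Plays the role of ProtocolProxy: holds the interface class and the proxy object.
class ProxyHolder<T> {
  private final Class<T> protocol;
  private final T proxy;

  ProxyHolder(Class<T> protocol, T proxy) {
    this.protocol = protocol;
    this.proxy = proxy;
  }

  Class<T> getProtocol() { return protocol; }
  T getProxy() { return proxy; }
}

class DynamicProxySketch {
  static <T> ProxyHolder<T> getProxy(Class<T> protocol, InvocationHandler handler) {
    Object p = Proxy.newProxyInstance(
        protocol.getClassLoader(), new Class<?>[] { protocol }, handler);
    return new ProxyHolder<T>(protocol, protocol.cast(p));
  }

  public static void main(String[] args) {
    RecordingInvoker invoker = new RecordingInvoker();
    EchoProtocol proxy = getProxy(EchoProtocol.class, invoker).getProxy();
    System.out.println(proxy.echo("hi"));   // handled by RecordingInvoker.invoke
    System.out.println(invoker.calls);      // method names seen by the handler
  }
}
```

The caller only ever sees the interface type; which handler sits behind it is decided entirely at proxy creation time, which is why the RPC engine can be swapped through configuration.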

We now return to createNNProxyWithClientProtocol. The code analysed so far is:

ClientNamenodeProtocolPB proxy = RPC.getProtocolProxy(

ClientNamenodeProtocolPB.class, version, address, ugi, conf,

NetUtils.getDefaultSocketFactory(conf),

org.apache.hadoop.ipc.Client.getTimeout(conf), defaultPolicy,

fallbackToSimpleAuth).getProxy();

getProtocolProxy returns a ProtocolProxy object, and calling getProxy() on it returns the dynamic proxy for the ClientNamenodeProtocolPB interface. With proxy now holding that object, execution continues with the following code:

if (withRetries) { // create the proxy with retries

RetryPolicy createPolicy = RetryPolicies

.retryUpToMaximumCountWithFixedSleep(5,

HdfsConstants.LEASE_SOFTLIMIT_PERIOD, TimeUnit.MILLISECONDS);

Map<Class<? extends Exception>, RetryPolicy> remoteExceptionToPolicyMap

= new HashMap<Class<? extends Exception>, RetryPolicy>();

remoteExceptionToPolicyMap.put(AlreadyBeingCreatedException.class,

createPolicy);

RetryPolicy methodPolicy = RetryPolicies.retryByRemoteException(

defaultPolicy, remoteExceptionToPolicyMap);

Map<String, RetryPolicy> methodNameToPolicyMap

= new HashMap<String, RetryPolicy>();

methodNameToPolicyMap.put("create", methodPolicy);

ClientProtocol translatorProxy =

new ClientNamenodeProtocolTranslatorPB(proxy);

return (ClientProtocol) RetryProxy.create(

ClientProtocol.class,

new DefaultFailoverProxyProvider<ClientProtocol>(

ClientProtocol.class, translatorProxy),

methodNameToPolicyMap,

defaultPolicy);

} else {

// build a ClientNamenodeProtocolTranslatorPB object and return it;

// note that ClientNamenodeProtocolTranslatorPB holds a ClientNamenodeProtocolPB object

return new ClientNamenodeProtocolTranslatorPB(proxy);

}

Here withRetries states whether a failed call on the proxy object should be retried. The two cases are analysed separately.
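To make the idea of a per-method retry policy concrete before going through the real code, here is a minimal sketch under simplifying assumptions. SimplePolicy, FileService, FlakyFileService, RetryHandler and RetrySketch are hypothetical names, not Hadoop classes. The sketch picks a policy by method name and falls back to a default policy, as the map of method names to policies does in the code above, but it leaves out the sleep between attempts and the per-exception mapping that the real policies provide.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.HashMap;
import java.util.Map;

// Hypothetical, much reduced stand-in for a retry policy.
interface SimplePolicy {
  boolean shouldRetry(Throwable error, int retriesSoFar);
}

interface FileService {
  String create(String path);
  String read(String path);
}

// Fails the first two create() calls and every read() call.
class FlakyFileService implements FileService {
  int createAttempts = 0;
  int readAttempts = 0;

  @Override public String create(String path) {
    if (++createAttempts <= 2) {
      throw new IllegalStateException("already being created");
    }
    return "created:" + path;
  }

  @Override public String read(String path) {
    readAttempts++;
    throw new IllegalStateException("read failed");
  }
}

class RetryHandler implements InvocationHandler {
  private final Object target;
  private final Map<String, SimplePolicy> perMethod;
  private final SimplePolicy defaultPolicy;

  RetryHandler(Object target, Map<String, SimplePolicy> perMethod, SimplePolicy defaultPolicy) {
    this.target = target;
    this.perMethod = perMethod;
    this.defaultPolicy = defaultPolicy;
  }

  @Override
  public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
    // Use the policy registered for this method name, else the default policy.
    SimplePolicy policy = perMethod.get(method.getName());
    if (policy == null) {
      policy = defaultPolicy;
    }
    int retries = 0;
    while (true) {
      try {
        return method.invoke(target, args);
      } catch (InvocationTargetException e) {
        Throwable cause = e.getCause();
        if (!policy.shouldRetry(cause, retries)) {
          throw cause;
        }
        retries++;
      }
    }
  }
}

class RetrySketch {
  static final SimplePolicy TRY_ONCE_THEN_FAIL = (error, retries) -> false;

  static SimplePolicy retryUpTo(final int maxRetries) {
    return (error, retries) -> retries < maxRetries;
  }

  // Only "create" gets a retrying policy; every other method fails on the first error.
  static FileService wrap(FileService target) {
    Map<String, SimplePolicy> perMethod = new HashMap<String, SimplePolicy>();
    perMethod.put("create", retryUpTo(5));
    return (FileService) Proxy.newProxyInstance(
        FileService.class.getClassLoader(),
        new Class<?>[] { FileService.class },
        new RetryHandler(target, perMethod, TRY_ONCE_THEN_FAIL));
  }

  public static void main(String[] args) {
    FlakyFileService service = new FlakyFileService();
    System.out.println(wrap(service).create("/a") + " after " + service.createAttempts + " attempts");
  }
}
```

Keeping the policy lookup inside the handler means the wrapped object needs no knowledge of retries at all, which mirrors how the translator object is wrapped rather than modified.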

Case 1: withRetries is true

The following code is executed:

// 5 is the maximum number of retries, LEASE_SOFTLIMIT_PERIOD is 60*1000 and MILLISECONDS is the

// time unit. These three values are stored in the maxRetries, sleepTime and timeUnit fields of a

// RetryUpToMaximumCountWithFixedSleep object, a class that implements the RetryPolicy interface

RetryPolicy createPolicy = RetryPolicies

.retryUpToMaximumCountWithFixedSleep(5,

HdfsConstants.LEASE_SOFTLIMIT_PERIOD, TimeUnit.MILLISECONDS);

Map<Class<? extends Exception>, RetryPolicy> remoteExceptionToPolicyMap

= new HashMap<Class<? extends Exception>, RetryPolicy>();

// the key is the Class object of AlreadyBeingCreatedException; the value is the RetryPolicy object

remoteExceptionToPolicyMap.put(AlreadyBeingCreatedException.class,

createPolicy);

// defaultPolicy is the retry policy object built from the configuration. retryByRemoteException

// returns a RemoteExceptionDependentRetry object, a class that implements RetryPolicy.

// defaultPolicy is stored in that object's defaultPolicy field. For each entry of

// remoteExceptionToPolicyMap, key.getName() becomes the key and the entry's value the value in

// the object's exceptionNameToPolicyMap field, whose type is

// Map<String, RetryPolicy>

RetryPolicy methodPolicy = RetryPolicies.retryByRemoteException(

defaultPolicy, remoteExceptionToPolicyMap);

Map<String, RetryPolicy> methodNameToPolicyMap = new HashMap<String, RetryPolicy>();

methodNameToPolicyMap.put("create", methodPolicy);

// Create a ClientNamenodeProtocolTranslatorPB object; the ClientNamenodeProtocolPB proxy object

// proxy is stored in its rpcProxy field, which has type ClientNamenodeProtocolPB.

ClientProtocol translatorProxy = new ClientNamenodeProtocolTranslatorPB(proxy);

Finally, the following code is executed:

return (ClientProtocol) RetryProxy.create(

ClientProtocol.class,

new DefaultFailoverProxyProvider<ClientProtocol>(

ClientProtocol.class, translatorProxy),

methodNameToPolicyMap,

defaultPolicy);

We now step into the create function, whose code is as follows:

/**

* Create a proxy for an interface of implementations of that interface using

* the given {@link FailoverProxyProvider} and the a set of retry policies

* specified by method name. If no retry policy is defined for a method then a

* default of {@link RetryPolicies#TRY_ONCE_THEN_FAIL} is used.

*

* @param iface the interface that the retry will implement

* @param proxyProvider provides implementation instances whose methods should be retried

* @param methodNameToPolicyMap a map of method names to retry policies

* @return the retry proxy

*/

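// iface is ClientProtocol.class.

// proxyProvider is a DefaultFailoverProxyProvider object whose proxy field holds the

// ClientNamenodeProtocolTranslatorPB object created above (translatorProxy) and whose iface field

// holds the Class object of ClientProtocol. This function creates a proxy object for the interface

// iface. RetryInvocationHandler ultimately implements the InvocationHandler interface, so every

// interface call made on the proxy object ends up in RetryInvocationHandler's

// Object invoke(Object proxy, Method method, Object[] args) method.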

public static <T> Object create(Class<T> iface,

FailoverProxyProvider<T> proxyProvider,

Map<String,RetryPolicy> methodNameToPolicyMap,

RetryPolicy defaultPolicy) {

return Proxy.newProxyInstance(

proxyProvider.getInterface().getClassLoader(),

new Class<?>[] { iface },

new RetryInvocationHandler<T>(proxyProvider, defaultPolicy,

methodNameToPolicyMap)

);

}

Next we step into the Object invoke(Object proxy, Method method, Object[] args) method of RetryInvocationHandler. Its code is as follows:

@Override

public Object invoke(Object proxy, Method method, Object[] args)

throws Throwable {

// Look up the retry policy object registered for this method name.

RetryPolicy policy = methodNameToPolicyMap.get(method.getName());

// If no retry policy is registered for the method, fall back to the default retry policy object.

if (policy == null) {

policy = defaultPolicy;

}

// The number of times this method invocation has been failed over.

int invocationFailoverCount = 0;

final boolean isRpc = isRpcInvocation(currentProxy.proxy);

final int callId = isRpc? Client.nextCallId(): RpcConstants.INVALID_CALL_ID;

int retries = 0;

while (true) {

// The number of times this invocation handler has ever been failed over,

// before this method invocation attempt. Used to prevent concurrent

// failed method invocations from triggering multiple failover attempts.

long invocationAttemptFailoverCount;

synchronized (proxyProvider) {

invocationAttemptFailoverCount = proxyProviderFailoverCount;

}

if (isRpc) {

Client.setCallIdAndRetryCount(callId, retries);

}

try {

Object ret = invokeMethod(method, args);

hasMadeASuccessfulCall = true;

return ret;

} catch (Exception e) {

boolean isIdempotentOrAtMostOnce = proxyProvider.getInterface()

.getMethod(method.getName(), method.getParameterTypes())

.isAnnotationPresent(Idempotent.class);

if (!isIdempotentOrAtMostOnce) {

isIdempotentOrAtMostOnce = proxyProvider.getInterface()

.getMethod(method.getName(), method.getParameterTypes())

.isAnnotationPresent(AtMostOnce.class);

}

RetryAction action = policy.shouldRetry(e, retries++,

invocationFailoverCount, isIdempotentOrAtMostOnce);

if (action.action == RetryAction.RetryDecision.FAIL) {

if (action.reason != null) {

LOG.warn("Exception while invoking " + currentProxy.proxy.getClass()

+ "." + method.getName() + " over " + currentProxy.proxyInfo

+ ". Not retrying because " + action.reason, e);

}

throw e;

} else { // retry or failover

// avoid logging the failover if this is the first call on this

// proxy object, and we successfully achieve the failover without

// any flip-flopping

boolean worthLogging =

!(invocationFailoverCount == 0 && !hasMadeASuccessfulCall);

worthLogging |= LOG.isDebugEnabled();

if (action.action == RetryAction.RetryDecision.FAILOVER_AND_RETRY &&

worthLogging) {

String msg = "Exception while invoking " + method.getName()

+ " of class " + currentProxy.proxy.getClass().getSimpleName()

+ " over " + currentProxy.proxyInfo;

if (invocationFailoverCount > 0) {

msg += " after " + invocationFailoverCount + " fail over attempts";

}

msg += ". Trying to fail over " + formatSleepMessage(action.delayMillis);

LOG.info(msg, e);

} else {

if(LOG.isDebugEnabled()) {

LOG.debug("Exception while invoking " + method.getName()

+ " of class " + currentProxy.proxy.getClass().getSimpleName()

+ " over " + currentProxy.proxyInfo + ". Retrying "

+ formatSleepMessage(action.delayMillis), e);

}

}

if (action.delayMillis > 0) {

Thread.sleep(action.delayMillis);

}

if (action.action == RetryAction.RetryDecision.FAILOVER_AND_RETRY) {

// Make sure that concurrent failed method invocations only cause a

// single actual fail over.

synchronized (proxyProvider) {

if (invocationAttemptFailoverCount == proxyProviderFailoverCount) {

proxyProvider.performFailover(currentProxy.proxy);

proxyProviderFailoverCount++;

} else {

LOG.warn("A failover has occurred since the start of this method"

+ " invocation attempt.");

}

currentProxy = proxyProvider.getProxy();

}

invocationFailoverCount++;

}

}

}

}

}

Many details have not been expanded here; they may be described further later when time permits.
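To make the per-exception lookup described for retryByRemoteException more concrete, here is a minimal sketch of the idea. It is not Hadoop's code. SketchRetryPolicy, MaxCountPolicy, ExceptionNamePolicy, and ExceptionPolicySketch are hypothetical names invented for illustration, IllegalStateException stands in for AlreadyBeingCreatedException, and the policy interface is reduced to a boolean decision without sleeping or failover. The sketch also assumes the lookup uses the local exception's class name, whereas Hadoop, as far as I understand it, uses the class name carried by a RemoteException.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified policy interface (not Hadoop's RetryPolicy, whose
// shouldRetry takes more arguments and returns a RetryAction).
interface SketchRetryPolicy {
  boolean shouldRetry(Exception e, int retries);
}

// Retries until the given count is reached; the fixed sleep is omitted here.
class MaxCountPolicy implements SketchRetryPolicy {
  private final int maxRetries;

  MaxCountPolicy(int maxRetries) {
    this.maxRetries = maxRetries;
  }

  @Override
  public boolean shouldRetry(Exception e, int retries) {
    return retries < maxRetries;
  }
}

// Delegates to a policy chosen by exception class name, else to the default.
class ExceptionNamePolicy implements SketchRetryPolicy {
  private final SketchRetryPolicy defaultPolicy;
  private final Map<String, SketchRetryPolicy> exceptionNameToPolicyMap = new HashMap<>();

  ExceptionNamePolicy(SketchRetryPolicy defaultPolicy,
      Map<Class<? extends Exception>, SketchRetryPolicy> exceptionToPolicyMap) {
    this.defaultPolicy = defaultPolicy;
    // Store key.getName() as the key, as the walkthrough describes.
    for (Map.Entry<Class<? extends Exception>, SketchRetryPolicy> entry
        : exceptionToPolicyMap.entrySet()) {
      exceptionNameToPolicyMap.put(entry.getKey().getName(), entry.getValue());
    }
  }

  @Override
  public boolean shouldRetry(Exception e, int retries) {
    // Assumption: the local exception's class name is used for the lookup.
    SketchRetryPolicy policy = exceptionNameToPolicyMap.get(e.getClass().getName());
    if (policy == null) {
      policy = defaultPolicy;
    }
    return policy.shouldRetry(e, retries);
  }
}

class ExceptionPolicySketch {
  // IllegalStateException stands in for AlreadyBeingCreatedException.
  static SketchRetryPolicy build() {
    Map<Class<? extends Exception>, SketchRetryPolicy> byClass = new HashMap<>();
    byClass.put(IllegalStateException.class, new MaxCountPolicy(5));
    return new ExceptionNamePolicy(new MaxCountPolicy(0), byClass);
  }

  public static void main(String[] args) {
    SketchRetryPolicy policy = build();
    System.out.println(policy.shouldRetry(new IllegalStateException("busy"), 4));
    System.out.println(policy.shouldRetry(new IllegalStateException("busy"), 5));
    System.out.println(policy.shouldRetry(new RuntimeException("other"), 0));
  }
}
```

In this sketch the registered exception type gets up to five retries, while any other exception falls through to the default policy, which here never retries.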

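The combination of a JDK dynamic proxy with a per-method retry lookup, which is what RetryProxy.create and RetryInvocationHandler.invoke do together, can be sketched in isolation as follows. This is a simplified illustration under stated assumptions, not the Hadoop implementation: DemoProtocol, FlakyDemoProtocol, SimpleRetryHandler, and RetryProxySketch are hypothetical names, the retry policy is reduced to a maximum retry count per method name, and failover, idempotency checks, call ids, logging, and sleeping are all left out.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for ClientProtocol; not a Hadoop type.
interface DemoProtocol {
  String create(String path);
}

// Hypothetical target that fails a fixed number of times, then succeeds.
class FlakyDemoProtocol implements DemoProtocol {
  private int remainingFailures;
  int calls = 0;

  FlakyDemoProtocol(int failures) {
    this.remainingFailures = failures;
  }

  @Override
  public String create(String path) {
    calls++;
    if (remainingFailures-- > 0) {
      throw new IllegalStateException("simulated failure for " + path);
    }
    return "created " + path;
  }
}

// Simplified handler: retry only, driven by a max retry count per method name.
class SimpleRetryHandler implements InvocationHandler {
  private final Object target;
  private final Map<String, Integer> methodNameToMaxRetries;
  private final int defaultMaxRetries;

  SimpleRetryHandler(Object target, Map<String, Integer> methodNameToMaxRetries,
      int defaultMaxRetries) {
    this.target = target;
    this.methodNameToMaxRetries = methodNameToMaxRetries;
    this.defaultMaxRetries = defaultMaxRetries;
  }

  @Override
  public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
    // Pick the limit registered for this method name, else the default.
    Integer maxRetries = methodNameToMaxRetries.get(method.getName());
    if (maxRetries == null) {
      maxRetries = defaultMaxRetries;
    }
    int retries = 0;
    while (true) {
      try {
        return method.invoke(target, args);
      } catch (InvocationTargetException e) {
        // Reflection wraps the target's exception; rethrow the real cause
        // once the retry budget is used up.
        if (retries++ >= maxRetries) {
          throw e.getCause();
        }
      }
    }
  }
}

class RetryProxySketch {
  static DemoProtocol wrap(DemoProtocol target, Map<String, Integer> policies,
      int defaultMaxRetries) {
    return (DemoProtocol) Proxy.newProxyInstance(
        DemoProtocol.class.getClassLoader(),
        new Class<?>[] { DemoProtocol.class },
        new SimpleRetryHandler(target, policies, defaultMaxRetries));
  }

  public static void main(String[] args) {
    Map<String, Integer> policies = new HashMap<>();
    policies.put("create", 5);
    FlakyDemoProtocol flaky = new FlakyDemoProtocol(2);
    DemoProtocol proxy = wrap(flaky, policies, 0);
    System.out.println(proxy.create("/tmp/a"));
    System.out.println("calls made: " + flaky.calls);
  }
}
```

The caller only ever sees the DemoProtocol interface; whether a failed call is retried is decided entirely inside the handler, which mirrors how the returned ClientProtocol proxy hides the retry logic from DFSClient.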
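The corresponding call flow diagram is shown below:

Overview diagram of how the proxy class is produced:

Next, we continue with "hadoop2.6.0源码剖析-客户端(第二部分--DFSClient)下(HA代理)" (Hadoop 2.6.0 source analysis, client, part 2: DFSClient, second half, the HA proxy).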