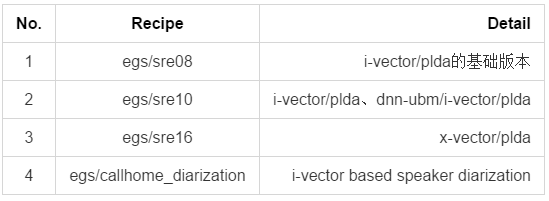

- KALDI工具包中的例子

首先最基础的就是egs/aishell/V1,先跑通它,并理解代码和基础理论知识。

- egs/sre08

REMAD.TXT

系统开发所需的数据(除了所描述的测试数据之外)

在../README.txt)中,由Fisher,过去的NIST SRE和Switchboard组成

蜂窝。 你可以只用Fisher的一部分就可以了。

演讲稿(见注)

Fisher第1部分LDC2004S13 LDC2004T19

Fisher第2部分LDC2005S13 LDC2005T19

SRE 2004测试LDC2006S44

SRE 2005测试LDC2011S04

SWBD Cellular 1 LDC2001S13

SWBD Cellular 2 LDC2004S07

注意:

带有成绩单的分发并不是真正需要的

成绩单本身,但因为那是演讲者的信息

居住(所以我们知道哪些录音来自同一个演讲者)。 这是

需要PLDA估算。 但是,请记住费舍尔不相信

对于像PLDA估计这样的事情非常好。 在较新的食谱,如

../../sre10/v1我们使用过去的SRE数据进行PLDA估算。run.sh

. ./cmd.sh

. ./path.sh

set -e

mfccdir=`pwd`/mfcc

vaddir=`pwd`/mfcc

local/make_fisher.sh /export/corpora3/LDC/{LDC2004S13,LDC2004T19} data/fisher1

#Processed 4948 utterances; 902 had missing wav data. (note: we should figure

#out why so much data goes missing.)

local/make_fisher.sh /export/corpora3/LDC/{LDC2005S13,LDC2005T19} data/fisher2

#Processed 5848 utterances; 1 had missing wav data.

local/make_sre_2005_test.pl /export/corpora5/LDC/LDC2011S04 data

local/make_sre_2004_test.pl \

/export/corpora5/LDC/LDC2006S44/r93_5_1/sp04-05/test data/sre_2004_1

local/make_sre_2004_test.pl \

/export/corpora5/LDC/LDC2006S44/r93_6_1/sp04-06/test data/sre_2004_2

local/make_sre_2008_train.pl /export/corpora5/LDC/LDC2011S05 data

local/make_sre_2008_test.sh /export/corpora5/LDC/LDC2011S08 data

local/make_sre_2006_train.pl /export/corpora5/LDC/LDC2011S09 data

local/make_sre_2005_train.pl /export/corpora5/LDC/LDC2011S01 data

local/make_swbd_cellular1.pl /export/corpora5/LDC/LDC2001S13 \

data/swbd_cellular1_train

local/make_swbd_cellular2.pl /export/corpora5/LDC/LDC2004S07 \

data/swbd_cellular2_train

utils/combine_data.sh data/train data/fisher1 data/fisher2 \

data/swbd_cellular1_train data/swbd_cellular2_train \

data/sre05_train_3conv4w_female data/sre05_train_8conv4w_female \

data/sre06_train_3conv4w_female data/sre06_train_8conv4w_female \

data/sre05_train_3conv4w_male data/sre05_train_8conv4w_male \

data/sre06_train_3conv4w_male data/sre06_train_8conv4w_male \

data/sre_2004_1/ data/sre_2004_2/ data/sre05_test

mfccdir=`pwd`/mfcc

vaddir=`pwd`/mfcc

set -e

steps/make_mfcc.sh --mfcc-config conf/mfcc.conf --nj 40 --cmd "$train_cmd" \

data/train exp/make_mfcc $mfccdir

steps/make_mfcc.sh --mfcc-config conf/mfcc.conf --nj 40 --cmd "$train_cmd" \

data/sre08_train_short2_female exp/make_mfcc $mfccdir

steps/make_mfcc.sh --mfcc-config conf/mfcc.conf --nj 40 --cmd "$train_cmd" \

data/sre08_train_short2_male exp/make_mfcc $mfccdir

steps/make_mfcc.sh --mfcc-config conf/mfcc.conf --nj 40 --cmd "$train_cmd" \

data/sre08_test_short3_female exp/make_mfcc $mfccdir

steps/make_mfcc.sh --mfcc-config conf/mfcc.conf --nj 40 --cmd "$train_cmd" \

data/sre08_test_short3_male exp/make_mfcc $mfccdir

sid/compute_vad_decision.sh --nj 4 --cmd "$train_cmd" \

data/train exp/make_vad $vaddir

sid/compute_vad_decision.sh --nj 4 --cmd "$train_cmd" \

data/sre08_train_short2_female exp/make_vad $vaddir

sid/compute_vad_decision.sh --nj 4 --cmd "$train_cmd" \

data/sre08_train_short2_male exp/make_vad $vaddir

sid/compute_vad_decision.sh --nj 4 --cmd "$train_cmd" \

data/sre08_test_short3_female exp/make_vad $vaddir

sid/compute_vad_decision.sh --nj 4 --cmd "$train_cmd" \

data/sre08_test_short3_male exp/make_vad $vaddir

# Note: to see the proportion of voiced frames you can do,

# grep Prop exp/make_vad/vad_*.1.log

# Get male and female subsets of training data.

grep -w m data/train/spk2gender | awk '{print $1}' > foo;

utils/subset_data_dir.sh --spk-list foo data/train data/train_male

grep -w f data/train/spk2gender | awk '{print $1}' > foo;

utils/subset_data_dir.sh --spk-list foo data/train data/train_female

rm foo

# Get smaller subsets of training data for faster training.

utils/subset_data_dir.sh data/train 4000 data/train_4k

utils/subset_data_dir.sh data/train 8000 data/train_8k

utils/subset_data_dir.sh data/train_male 8000 data/train_male_8k

utils/subset_data_dir.sh data/train_female 8000 data/train_female_8k

# The recipe currently uses delta-window=3 and delta-order=2. However

# the accuracy is almost as good using delta-window=4 and delta-order=1

# and could be faster due to lower dimensional features. Alternative

# delta options (e.g., --delta-window 4 --delta-order 1) can be provided to

# sid/train_diag_ubm.sh. The options will be propagated to the other scripts.

sid/train_diag_ubm.sh --nj 30 --cmd "$train_cmd" data/train_4k 2048 \

exp/diag_ubm_2048

sid/train_full_ubm.sh --nj 30 --cmd "$train_cmd" data/train_8k \

exp/diag_ubm_2048 exp/full_ubm_2048

sid/train_full_ubm.sh --nj 30 --cmd "$train_cmd" data/train_8k \

exp/diag_ubm_2048 exp/full_ubm_2048

# Get male and female versions of the UBM in one pass; make sure not to remove

# any Gaussians due to low counts (so they stay matched). This will be

# more convenient for gender-id.

sid/train_full_ubm.sh --nj 30 --remove-low-count-gaussians false \

--num-iters 1 --cmd "$train_cmd" \

data/train_male_8k exp/full_ubm_2048 exp/full_ubm_2048_male &

sid/train_full_ubm.sh --nj 30 --remove-low-count-gaussians false \

--num-iters 1 --cmd "$train_cmd" \

data/train_female_8k exp/full_ubm_2048 exp/full_ubm_2048_female &

wait

# Train the iVector extractor for male speakers.

sid/train_ivector_extractor.sh --cmd "$train_cmd --mem 35G" \

--num-iters 5 exp/full_ubm_2048_male/final.ubm data/train_male \

exp/extractor_2048_male

# The same for female speakers.

sid/train_ivector_extractor.sh --cmd "$train_cmd --mem 35G" \

--num-iters 5 exp/full_ubm_2048_female/final.ubm data/train_female \

exp/extractor_2048_female

# The script below demonstrates the gender-id script. We don't really use

# it for anything here, because the SRE 2008 data is already split up by

# gender and gender identification is not required for the eval.

# It prints out the error rate based on the info in the spk2gender file;

# see exp/gender_id_fisher/error_rate where it is also printed.

sid/gender_id.sh --cmd "$train_cmd" --nj 150 exp/full_ubm_2048{,_male,_female} \

data/train exp/gender_id_train

# Gender-id error rate is 3.41%

# Extract the iVectors for the training data.

sid/extract_ivectors.sh --cmd "$train_cmd --mem 6G" --nj 50 \

exp/extractor_2048_male data/train_male exp/ivectors_train_male

sid/extract_ivectors.sh --cmd "$train_cmd --mem 6G" --nj 50 \

exp/extractor_2048_female data/train_female exp/ivectors_train_female

# .. and for the SRE08 training and test data. (We focus on the main

# evaluation condition, the only required one in that eval, which is

# the short2-short3 eval.)

sid/extract_ivectors.sh --cmd "$train_cmd --mem 6G" --nj 50 \

exp/extractor_2048_female data/sre08_train_short2_female \

exp/ivectors_sre08_train_short2_female

sid/extract_ivectors.sh --cmd "$train_cmd --mem 6G" --nj 50 \

exp/extractor_2048_male data/sre08_train_short2_male \

exp/ivectors_sre08_train_short2_male

sid/extract_ivectors.sh --cmd "$train_cmd --mem 6G" --nj 50 \

exp/extractor_2048_female data/sre08_test_short3_female \

exp/ivectors_sre08_test_short3_female

sid/extract_ivectors.sh --cmd "$train_cmd --mem 6G" --nj 50 \

exp/extractor_2048_male data/sre08_test_short3_male \

exp/ivectors_sre08_test_short3_male

### Demonstrate simple cosine-distance scoring:

trials=data/sre08_trials/short2-short3-female.trials

# Note: speaker-level i-vectors have already been length-normalized

# by sid/extract_ivectors.sh, but the utterance-level test i-vectors

# have not.

cat $trials | awk '{print $1, $2}' | \

ivector-compute-dot-products - \

scp:exp/ivectors_sre08_train_short2_female/spk_ivector.scp \

'ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_female/ivector.scp ark:- |' \

foo

local/score_sre08.sh $trials foo

# Results for Female:

# Scoring against data/sre08_trials/short2-short3-female.trials

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 12.70 20.09 4.78 19.08 16.37 15.87 10.42 7.10 7.89

trials=data/sre08_trials/short2-short3-male.trials

cat $trials | awk '{print $1, $2}' | \

ivector-compute-dot-products - \

scp:exp/ivectors_sre08_train_short2_male/spk_ivector.scp \

'ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_male/ivector.scp ark:- |' \

foo

local/score_sre08.sh $trials foo

# Results for Male:

# Scoring against data/sre08_trials/short2-short3-male.trials

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 11.10 18.55 5.24 18.03 14.35 13.44 8.47 5.92 4.82

# The following shows a more direct way to get the scores.

# condition=6

# awk '{print $3}' foo | paste - $trials | awk -v c=$condition '{n=4+c; \\

# if ($n == "Y") print $1, $4}' | \

# compute-eer -

# LOG (compute-eer:main():compute-eer.cc:136) Equal error rate is 11.10%,

# at threshold 55.9827

# Note: to see how you can plot the DET curve, look at

# local/det_curve_example.sh

### Demonstrate what happens if we reduce the dimension with LDA

ivector-compute-lda --dim=150 --total-covariance-factor=0.1 \

'ark:ivector-normalize-length scp:exp/ivectors_train_female/ivector.scp ark:- |' \

ark:data/train_female/utt2spk \

exp/ivectors_train_female/transform.mat

trials=data/sre08_trials/short2-short3-female.trials

cat $trials | awk '{print $1, $2}' | \

ivector-compute-dot-products - \

'ark:ivector-transform exp/ivectors_train_female/transform.mat scp:exp/ivectors_sre08_train_short2_female/spk_ivector.scp ark:- | ivector-normalize-length ark:- ark:- |' \

'ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_female/ivector.scp ark:- | ivector-transform exp/ivectors_train_female/transform.mat ark:- ark:- | ivector-normalize-length ark:- ark:- |' \

foo

local/score_sre08.sh $trials foo

# Results for Female:

# Scoring against data/sre08_trials/short2-short3-female.trials

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 7.96 9.82 1.49 9.44 10.51 10.70 8.81 5.83 7.11

ivector-compute-lda --dim=150 --total-covariance-factor=0.1 \

'ark:ivector-normalize-length scp:exp/ivectors_train_male/ivector.scp ark:- |' \

ark:data/train_male/utt2spk \

exp/ivectors_train_male/transform.mat

trials=data/sre08_trials/short2-short3-male.trials

cat $trials | awk '{print $1, $2}' | \

ivector-compute-dot-products - \

'ark:ivector-transform exp/ivectors_train_male/transform.mat scp:exp/ivectors_sre08_train_short2_male/spk_ivector.scp ark:- | ivector-normalize-length ark:- ark:- |' \

'ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_male/ivector.scp ark:- | ivector-transform exp/ivectors_train_male/transform.mat ark:- ark:- | ivector-normalize-length ark:- ark:- |' \

foo

local/score_sre08.sh $trials foo

# Results for Male:

# Scoring against data/sre08_trials/short2-short3-male.trials

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 6.20 8.30 1.21 8.10 8.43 7.03 7.32 5.70 3.51

### Demonstrate PLDA scoring:

## Note: below, the ivector-subtract-global-mean step doesn't appear to affect

## the EER, although it does shift the threshold.

trials=data/sre08_trials/short2-short3-female.trials

ivector-compute-plda ark:data/train_female/spk2utt \

'ark:ivector-normalize-length scp:exp/ivectors_train_female/ivector.scp ark:- |' \

exp/ivectors_train_female/plda 2>exp/ivectors_train_female/log/plda.log

ivector-plda-scoring --simple-length-normalization=true --num-utts=ark:exp/ivectors_sre08_train_short2_female/num_utts.ark \

"ivector-copy-plda --smoothing=0.0 exp/ivectors_train_female/plda - |" \

"ark:ivector-subtract-global-mean scp:exp/ivectors_sre08_train_short2_female/spk_ivector.scp ark:- |" \

"ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_female/ivector.scp ark:- | ivector-subtract-global-mean ark:- ark:- |" \

"cat '$trials' | awk '{print \$1, \$2}' |" foo

local/score_sre08.sh $trials foo

# Result for Female is below:

# Scoring against data/sre08_trials/short2-short3-female.trials

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 6.44 9.76 1.49 9.76 7.66 7.21 6.87 4.06 4.74

trials=data/sre08_trials/short2-short3-male.trials

ivector-compute-plda ark:data/train_male/spk2utt \

'ark:ivector-normalize-length scp:exp/ivectors_train_male/ivector.scp ark:- |' \

exp/ivectors_train_male/plda 2>exp/ivectors_train_male/log/plda.log

ivector-plda-scoring --simple-length-normalization=true --num-utts=ark:exp/ivectors_sre08_train_short2_male/num_utts.ark \

"ivector-copy-plda --smoothing=0.0 exp/ivectors_train_male/plda - |" \

"ark:ivector-subtract-global-mean scp:exp/ivectors_sre08_train_short2_male/spk_ivector.scp ark:- |" \

"ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_male/ivector.scp ark:- | ivector-subtract-global-mean ark:- ark:- |" \

"cat '$trials' | awk '{print \$1, \$2}' |" foo; local/score_sre08.sh $trials foo

# Result for Male is below:

# Scoring against data/sre08_trials/short2-short3-male.trials

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 4.68 7.41 1.21 7.48 5.70 4.69 5.61 3.19 2.19

### Demonstrate PLDA scoring after adapting the out-of-domain PLDA model with in-domain training data:

# first, female.

trials=data/sre08_trials/short2-short3-female.trials

cat exp/ivectors_sre08_train_short2_female/spk_ivector.scp exp/ivectors_sre08_test_short3_female/ivector.scp > female.scp

ivector-plda-scoring --simple-length-normalization=true --num-utts=ark:exp/ivectors_sre08_train_short2_female/num_utts.ark \

"ivector-adapt-plda $adapt_opts exp/ivectors_train_female/plda scp:female.scp -|" \

scp:exp/ivectors_sre08_train_short2_female/spk_ivector.scp \

"ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_female/ivector.scp ark:- |" \

"cat '$trials' | awk '{print \$1, \$2}' |" foo; local/score_sre08.sh $trials foo

# Results:

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 5.45 6.73 1.19 6.79 7.06 6.61 6.32 4.18 4.74

# Baseline (repeated from above):

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 6.44 9.76 1.49 9.76 7.66 7.21 6.87 4.06 4.74

trials=data/sre08_trials/short2-short3-male.trials

ivector-compute-plda ark:data/train_male/spk2utt \

'ark:ivector-normalize-length scp:exp/ivectors_train_male/ivector.scp ark:- |' \

exp/ivectors_train_male/plda 2>exp/ivectors_train_male/log/plda.log

ivector-plda-scoring --simple-length-normalization=true --num-utts=ark:exp/ivectors_sre08_train_short2_male/num_utts.ark \

"ivector-copy-plda --smoothing=0.0 exp/ivectors_train_male/plda - |" \

"ark:ivector-subtract-global-mean scp:exp/ivectors_sre08_train_short2_male/spk_ivector.scp ark:- |" \

"ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_male/ivector.scp ark:- | ivector-subtract-global-mean ark:- ark:- |" \

"cat '$trials' | awk '{print \$1, \$2}' |" foo; local/score_sre08.sh $trials foo

# Result for Male is below:

# Scoring against data/sre08_trials/short2-short3-male.trials

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 4.68 7.41 1.21 7.48 5.70 4.69 5.61 3.19 2.19

### Demonstrate PLDA scoring after adapting the out-of-domain PLDA model with in-domain training data:

# first, female.

trials=data/sre08_trials/short2-short3-female.trials

cat exp/ivectors_sre08_train_short2_female/spk_ivector.scp exp/ivectors_sre08_test_short3_female/ivector.scp > female.scp

ivector-plda-scoring --simple-length-normalization=true --num-utts=ark:exp/ivectors_sre08_train_short2_female/num_utts.ark \

"ivector-adapt-plda $adapt_opts exp/ivectors_train_female/plda scp:female.scp -|" \

scp:exp/ivectors_sre08_train_short2_female/spk_ivector.scp \

"ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_female/ivector.scp ark:- |" \

"cat '$trials' | awk '{print \$1, \$2}' |" foo; local/score_sre08.sh $trials foo

# Results:

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 5.45 6.73 1.19 6.79 7.06 6.61 6.32 4.18 4.74

# Baseline (repeated from above):

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 6.44 9.76 1.49 9.76 7.66 7.21 6.87 4.06 4.74

# next, male.

trials=data/sre08_trials/short2-short3-male.trials

cat exp/ivectors_sre08_train_short2_male/spk_ivector.scp exp/ivectors_sre08_test_short3_male/ivector.scp > male.scp

ivector-plda-scoring --simple-length-normalization=true --num-utts=ark:exp/ivectors_sre08_train_short2_male/num_utts.ark \

"ivector-adapt-plda $adapt_opts exp/ivectors_train_male/plda scp:male.scp -|" \

scp:exp/ivectors_sre08_train_short2_male/spk_ivector.scp \

"ark:ivector-normalize-length scp:exp/ivectors_sre08_test_short3_male/ivector.scp ark:- |" \

"cat '$trials' | awk '{print \$1, \$2}' |" foo; local/score_sre08.sh $trials foo

# Results:

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 4.03 4.71 0.81 4.73 5.01 4.84 5.61 3.87 2.63

# Baseline is as follows, repeated from above. Focus on condition 0 (= all).

# Condition: 0 1 2 3 4 5 6 7 8

# EER: 4.68 7.41 1.21 7.48 5.70 4.69 5.61 3.19 2.19

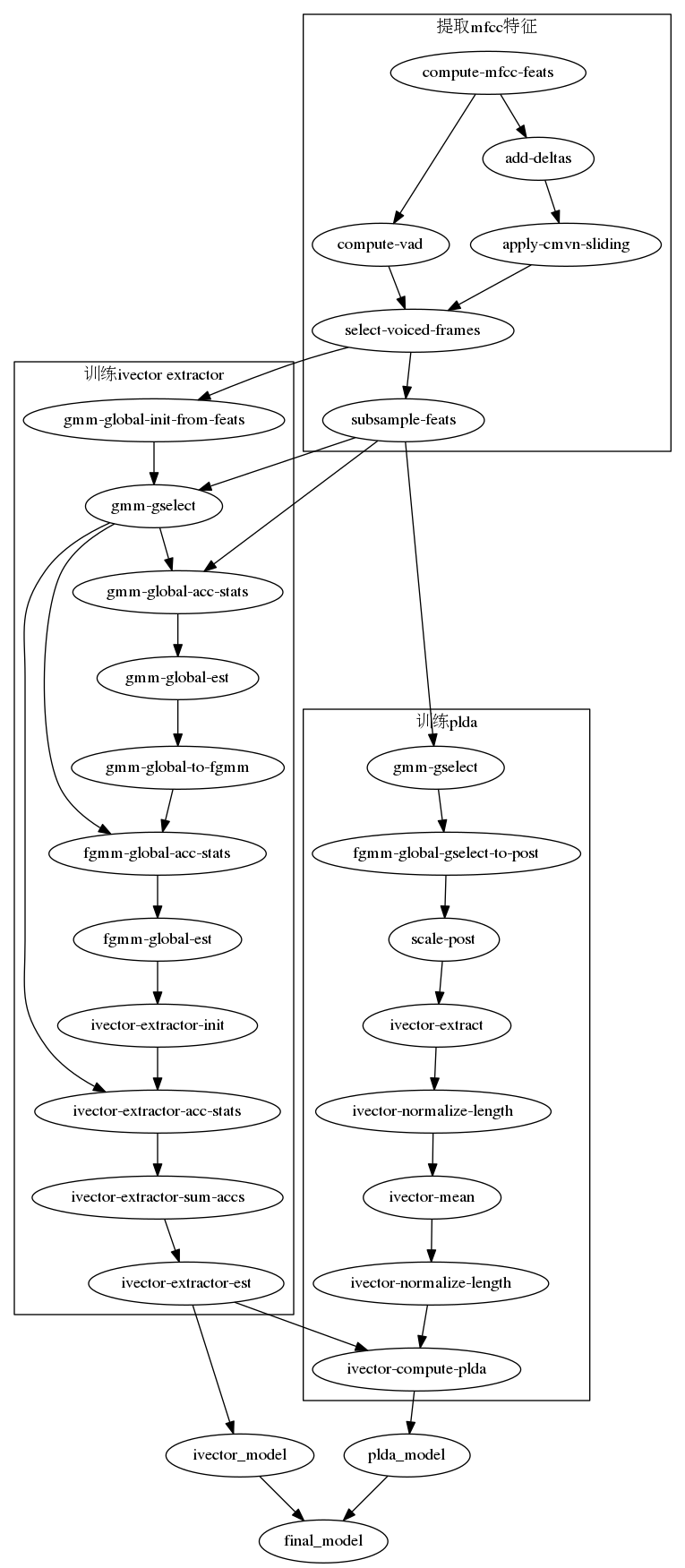

2kaldi中的声纹流程图

2. TensorFlow-based Deep Speaker

实现ResNet网络上的TE2E(Tuple-base end-to-end)Loss function训练方式。安装TensorFlow、Python3和FFMPEG(文件格式转换工具)后,准备好数据,即可一键训练。只可惜验证部分还没做,而且GRU没实现、tensor实现部分也不严谨,可详细阅读代码和论文,并贡献下您的代码。

- 源码地址:https://github.com/philipperemy/deep-speaker

- 论文地址:Deep Speaker: an End-to-End Neural Speaker Embedding System

- 数据集合:http://www.robots.ox.ac.uk/~vgg/data/voxceleb/

3. PyTorch-based Deep Speaker

基于百度论文[1],实现了ResNet + Triplet Loss。不过在 牛津大学的Voxceleb库上,EER比论文[2]所宣称的(7.8%)要高不少,看来实现还是有改进空间。Owner在求助了,大家帮帮忙contribute。

- 源码地址:https://github.com/qqueing/DeepSpeaker-pytorch

- 论文地址:Deep Speaker: an End-to-End Neural Speaker Embedding System

4. TristouNet from pyannote-audio

一个音频处理工具箱,包括Speech change detection, feature extraction, speaker embeddings extraction以及speech activity detection。其中speaker embeddings extraction部分,包括TristouNet的实现。

- 源码地址:https://github.com/pyannote/pyannote-audio

- 论文地址:TristouNet: Triplet Loss for Speaker Turn Embedding

5. CNN-based Speaker verification

Convolutional Neural Networks(卷积神经网络)在声纹识别上的试验,一个不错的尝试,可以与TDNN/x-vector做下对比。

- 源码地址:https://github.com/astorfi/3D-convolutional-speaker-recognition

- 论文地址:Text-Independent Speaker Verification Using 3D Convolutional Neural Networks

- 数据集合:https://biic.wvu.edu/data-sets/multimodal-dataset

声纹识别基础理论论文

这个博客就是把最具有代表性的资料记录下来,前提,我假设你知道啥是MFCC,啥是VAD,啥是CMVN了.

说话人识别学习路径无非就是 GMM-UBM -> JFA -> Ivector-PLDA -> DNN embeddings -> E2E

首先 GMM-UBM, 最经典代表作: Speaker Verification Using Adapted Gaussian Mixture Models

从训练普遍人声纹特征的UBM到经过MAP的目标人GMM-UBM到后面的识别的分数似然比,分数规整都有介绍,老哥Reynold MIT教授,这篇论文可以说是说话人识别开发者必读

(然后,直接跳过JFA吧)JFA太多太繁琐,但假如你是个热爱学习的好孩子,那想必这篇论文你应该很喜欢 Patrick Kenny的: Eigenvoice Modeling With Sparse Training Data

接下来我们来看看Ivector, ivector的理论,ivector 总变化空间矩阵的训练.

首先你需要知道Ivector的理论知识, 所以经典中的经典: Front-End Factor Analysis for Speaker Verification

训练算法推荐: A Straightforward and Efficient Implementation of the Factor Analysis Model for Speaker Verification

但假如你很喜欢数学,Patrick Kenny的这篇结合Eigenvoice应该很适合你: A Small Footprint i-Vector Extractor

到这里,基本上从GMM-UBM 到IVECTOR的理论和训练,你只要读完以上,再加上kaldi的一些小实验,相信聪明的朋友们绝对没问题.

Kaldi参考:train_ivector_extractor.sh和extract_ivector.sh,注意要看他们的底层C++,对着公式来,然后注意里面的符号跟论文的符号是不同的,之前的博客有说过. 你会发现,跟因子分析有关的论文不管是JFA还是Ivector都会有Patrick Kenny这个人物!没有错,这老哥公式狂魔,很猛很变态,对于很多知识点,跟着它公式推导的思路来绝对会没错,但对于像我这种数学渣,我会直接跳过.

记下来我们来看看PLDA的训练和打分

首先, 需要知道PLDA的理论,他从图像识别发展而来的,也跟因子分析有关.参考:Probabilistic Linear Discriminant Analysis for Inferences About Identity

PLDA的参数训练请主要看他的EM的算法,在该论文的APPENDIX里面

接着是PLDA的打分识别,请参考: Analysis of I-vector Length Normalization in Speaker Recognition Systems

将EM训练好的PLDA参数结合着两个IVECTOR进行打分, 这篇论文值得拥有,另外推荐Daniel Garcia-Romero,这老哥的论文多通俗易懂, 重点清晰不含糊, 并且这老哥在speaker diarization的造诣很高,在x-vector也很活跃,十分推荐.

接着来看看深度学习的东西

首先给个直觉,为什么要用深度学习,说话人能用DNN,如何借鉴语音识别在DNN的应用,参考: NOVEL SCHEME FOR SPEAKER RECOGNITION USING A PHONETICALLY-AWARE DEEP NEURAL NETWORK

有了DNN的技术后, 各种老哥们开始用embeddings的方法取代ivector的方法,最开始的是GOOGLE的 d-vector

d-vector: DEEP NEURAL NETWORKS FOR SMALL FOOTPRINT TEXT-DEPENDENT SPEAKER VERIFICATION

d-vector: End-to-End Text-Dependent Speaker Verification

然后众所周知,说话人识别or声纹识别对语音的时长是很敏感的,短时音频的识别性能是决定能不商用的一个很关键的点,所以x-vector应运而生,也是JHU的那帮人,就是Kaldi的团队

x-vector前身 : DEEP NEURAL NETWORK-BASED SPEAKER EMBEDDINGS FOR END-TO-END SPEAKER VERIFICATION

x-vector底座: IME DELAY DEEP NEURAL NETWORK-BASED UNIVERSAL BACKGROUND MODELS FOR SPEAKER RECOGNITION

x-vector正宫: X-VECTORS: ROBUST DNN EMBEDDINGS FOR SPEAKER RECOGNITION

然后然后呢,牛逼的Triplet Loss出来了, 输入是一个三元组,目的就是提升性能,(但我实验的过程经常会不收敛,摊手,本渣也不知道为什么)

Triplet Loss : TRISTOUNET: TRIPLET LOSS FOR SPEAKER TURN EMBEDDING

Triplet Loss : End-to-End Text-Independent Speaker Verification with Triplet Loss on Short Utterances

Deep speaker: Deep Speaker: an End-to-End Neural Speaker Embedding System

最后E2E,但可能我个人才疏学浅,感觉论文上说的E2E其实都是做了一个EMBEDDING出来,然后外接cosine distance,但真正的EMBEDDING是输入注册和测试音频,直接输出分数.

参考文章:

1.https://zhuanlan.zhihu.com/p/35687281

2.https://blog.csdn.net/robingao1994/article/details/80320999

3.https://blog.csdn.net/robingao1994/article/details/82659005