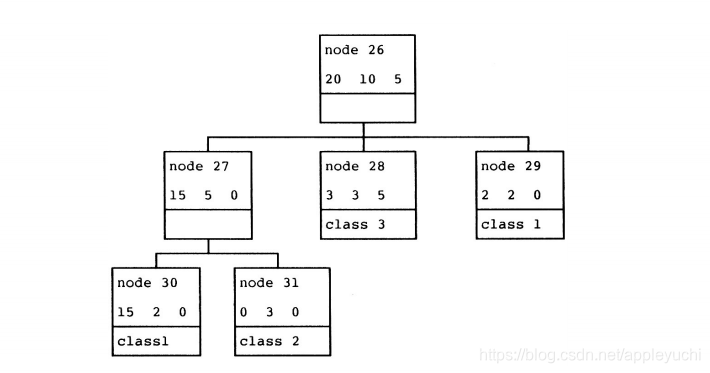

This example is from 《An Empirical Comparison of Pruning Methods for Decision Tree Induction》.

How should we read the nodes and leaves?

For example, node 30:

15 examples are correctly classified as “class1”,

2 examples are misclassified as “class1”.

The remaining nodes and leaves in the figure can be read the same way.

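Criterion ①:

$$e'(T_t) + SE\big(e'(T_t)\big) < e'(t)$$

where

$$e'(t) = e(t) + \tfrac{1}{2}, \qquad e'(T_t) = \sum_{s \in L(T_t)} e(s) + \frac{|L(T_t)|}{2}, \qquad SE\big(e'(T_t)\big) = \sqrt{\frac{e'(T_t)\,\big(n(t) - e'(T_t)\big)}{n(t)}}$$

e(t): errors at node t if it is turned into a leaf; n(t): number of training examples reaching t; L(T_t): set of leaves of the subtree T_t rooted at t; e(s): errors at leaf s.

In short:

Errors when unpruned < Errors after pruning

When ① is satisfied, the current subtree is kept; otherwise, it is pruned to a leaf.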
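A minimal Python sketch of criterion ①, assuming the per-leaf error counts, e(t) and n(t) are already known. The function names and the sample counts are hypothetical, chosen only to show one "keep" and one "prune" outcome; they are not taken from the figure.

```python
import math

def pessimistic_errors_subtree(leaf_errors):
    """e'(T_t): errors summed over the leaves of T_t, plus 1/2 per leaf."""
    return sum(leaf_errors) + 0.5 * len(leaf_errors)

def standard_error(e_sub, n):
    """SE(e'(T_t)) = sqrt(e'(T_t) * (n(t) - e'(T_t)) / n(t)), clipped at 0."""
    return math.sqrt(max(0.0, e_sub * (n - e_sub) / n))

def keep_subtree(leaf_errors, node_errors, n):
    """Criterion 1: keep T_t if e'(T_t) + SE(e'(T_t)) < e'(t); otherwise prune."""
    e_sub = pessimistic_errors_subtree(leaf_errors)
    e_leaf = node_errors + 0.5          # e'(t) = e(t) + 1/2
    return e_sub + standard_error(e_sub, n) < e_leaf

# hypothetical counts, not taken from the figure
print(keep_subtree([0, 1, 2], node_errors=12, n=40))  # True: keep the subtree
print(keep_subtree([2, 3], node_errors=6, n=40))      # False: prune t to a leaf
```

In the first call 4.5 + 2.00 < 12.5, so the subtree stays; in the second 6 + 2.26 is not below 6.5, so the node becomes a leaf.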

The principle behind the above algorithm

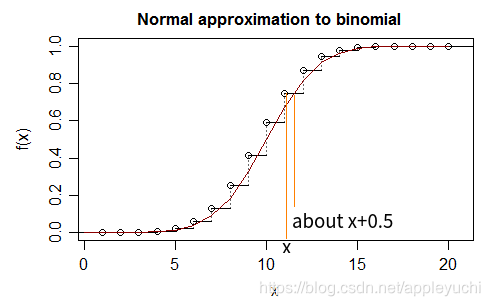

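For large n, a binomial distribution can be approximated by a normal distribution:

$$B(n,p) \rightarrow N\big(np,\ np(1-p)\big)$$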

Picture reference: https://stats.stackexchange.com/questions/213966/why-does-the-continuity-correction-say-the-normal-approximation-to-the-binomia/213995

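Going the other way, when we approximate the binomial distribution with the normal one, we apply a continuity correction:

we use “x + 0.5” instead of x to bring the two curves closer (of course, this is still not exact), and then apply normal-distribution theory at x + 0.5:

$$P(X \le x) \approx \Phi\left(\frac{x + 0.5 - np}{\sqrt{np(1-p)}}\right)$$

Of course, 0.5 is not rigorous; it is just an approximation.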
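To see the effect of the continuity correction, here is a small Python sketch that compares the exact binomial CDF with the normal approximation evaluated at x and at x + 0.5. The values n = 20, p = 0.3, x = 5 are arbitrary illustration choices, not from the paper.

```python
import math

def binom_cdf(x, n, p):
    """Exact P(X <= x) for X ~ B(n, p)."""
    return sum(math.comb(n, k) * p**k * (1 - p)**(n - k) for k in range(x + 1))

def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def normal_approx(x, n, p, correction=0.5):
    """Approximate P(X <= x) with N(np, np(1-p)), evaluated at x + correction."""
    mu = n * p
    sigma = math.sqrt(n * p * (1 - p))
    return normal_cdf((x + correction - mu) / sigma)

n, p, x = 20, 0.3, 5
exact = binom_cdf(x, n, p)
print(f"exact            {exact:.4f}")
print(f"normal at x      {normal_approx(x, n, p, 0.0):.4f}")
print(f"normal at x+0.5  {normal_approx(x, n, p, 0.5):.4f}")
```

With these numbers the corrected value lands noticeably closer to the exact probability than the uncorrected one, though still not on it.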

Why does the standard error appear in the criterion?

Let’s see an example: suppose Y depends on some quantity that fluctuates, so Y fluctuates too (both are random variables, not constants).

Then, how can we estimate the maximum value of Y?

Suppose Y has produced the following 4 values so far:

1, 2, 1, 1 ②

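Then the average is

$$\bar{Y} = \frac{1 + 2 + 1 + 1}{4} = 1.25$$

and the standard deviation is

$$\sigma = \sqrt{\frac{3\,(1 - 1.25)^2 + (2 - 1.25)^2}{4}} = \sqrt{0.1875} \approx 0.43$$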

So:

Y̅ + Standard Deviation = 1.25 + 0.43 = 1.68 ≈ 2.0

Conclusion 1:

Computing Y̅ + Standard Deviation gives a value close to the maximum of the values in ②.
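The arithmetic for ② can be checked with Python's standard `statistics` module. Note that the 0.43 above is the population standard deviation (dividing by 4), which is `statistics.pstdev`; the sample version `statistics.stdev` would divide by 3 and give 0.5 instead.

```python
import statistics

samples = [1, 2, 1, 1]                 # the four observed values of Y in (2)
mean = statistics.mean(samples)        # 1.25
sd = statistics.pstdev(samples)        # population SD: sqrt(0.1875), about 0.433
print(f"mean={mean:.2f}  sd={sd:.2f}  mean+sd={mean + sd:.2f}  max={max(samples)}")
# prints: mean=1.25  sd=0.43  mean+sd=1.68  max=2
```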

------------------------------------------

Let’s come back to the errors we focused on earlier.

Regard Y as the total number of errors of the unpruned tree:

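Assume (such an assumption is of course not rigorous!):

$$\bar{Y} = e'(T_t) = \sum_{s \in L(T_t)} e(s) + \frac{|L(T_t)|}{2}$$

where e(s) is the number of errors at leaf s and L(T_t) is the set of leaves of the subtree T_t rooted at node t.

Standard deviation, with n(t) the number of training examples reaching t:

$$SE\big(e'(T_t)\big) = \sqrt{\frac{e'(T_t)\,\big(n(t) - e'(T_t)\big)}{n(t)}}$$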

Just like Conclusion 1,

Y̅ + Standard Deviation

gives a value close to the maximum among the possible values of “errors of the unpruned tree”.

Note that we treat “errors of the unpruned tree” as a random variable, not a constant; this is what lets us estimate the “maximum possible number of errors”.
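The standard deviation used here has the same form as the binomial standard deviation from B(n,p) → N(np, np(1-p)): writing e' for the corrected error count of the unpruned subtree (leaf errors plus 1/2 per leaf) and n for the number of examples at its root, and estimating p as e'/n, we get sqrt(np(1-p)) = sqrt(e'(n - e')/n). The Python sketch below checks this numerically on hypothetical leaf counts; the function names are illustrative only.

```python
import math

def se_binomial(e_sub, n):
    p = e_sub / n                        # estimated error rate of the subtree
    return math.sqrt(n * p * (1 - p))    # SD of B(n, p), as in N(np, np(1-p))

def se_pep(e_sub, n):
    return math.sqrt(e_sub * (n - e_sub) / n)   # form used in the PEP criterion

# hypothetical subtree: three leaves with 0, 1 and 2 errors, 40 examples at its root
e_sub = sum([0, 1, 2]) + 0.5 * 3         # corrected error count = 4.5
print(se_binomial(e_sub, 40), se_pep(e_sub, 40))
print("pessimistic error count:", e_sub + se_pep(e_sub, 40))
```

The two standard errors agree up to floating-point rounding, which is why the criterion can borrow "Y̅ + Standard Deviation" from the normal approximation.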

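The name “pessimistic” comes from the term Y̅ + Standard Deviation: adding one standard deviation to the expected error count gives a deliberately high estimate,

which is why this term means “pessimistic error count”.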

Note:

Section 2.2.5 of 《An Empirical Comparison of Pruning Methods for Decision Tree Induction》 criticizes PEP:

"The statistical justification of this method is somewhat dubious"☺

So the principle of PEP is not rigorous.

After the principle, here is the computation:

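For the pruned tree, the error count is the node's errors e(t) plus the 1/2 continuity correction:

$$e(t) + \tfrac{1}{2}$$

For the unpruned tree, the error count is the sum of the leaf errors e(s), plus 1/2 for each of its L leaves, plus one standard error SE:

$$\sum_{s} e(s) + \frac{L}{2} + SE$$

Then, with the counts from the figure, the unpruned error count is smaller than the pruned one, so criterion ① is satisfied.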

So this subtree should be kept, not pruned.
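To apply the criterion to a whole tree rather than a single node, a top-down traversal (the order in which PEP is usually described) can be sketched as follows. The `Node` class, its fields and the example tree are hypothetical; each node stores n(t) and the errors e(t) it would make as a leaf.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Node:
    n: int                                          # examples reaching the node, n(t)
    errors: int                                     # errors if the node were a leaf, e(t)
    children: list = field(default_factory=list)    # empty list means leaf

def leaves(node):
    """All leaves of the subtree rooted at node."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]

def pep_prune(node):
    """Top-down PEP: collapse t into a leaf when e'(t) <= e'(T_t) + SE(e'(T_t))."""
    if not node.children:
        return node
    ls = leaves(node)
    e_sub = sum(leaf.errors for leaf in ls) + 0.5 * len(ls)        # e'(T_t)
    se = math.sqrt(max(0.0, e_sub * (node.n - e_sub) / node.n))    # SE(e'(T_t))
    if node.errors + 0.5 <= e_sub + se:
        node.children = []          # prune: the subtree becomes a leaf
    else:
        for child in node.children:
            pep_prune(child)        # subtree kept: check its children next
    return node

# hypothetical tree: the root has subtree A (two leaves) and leaf B
a = Node(n=40, errors=6, children=[Node(20, 1), Node(20, 3)])
root = Node(n=60, errors=25, children=[a, Node(20, 2)])
pep_prune(root)
print(len(leaves(root)))   # 2: A was collapsed into a leaf, the root was kept
```

At the root, 7.5 + 2.56 is well below 25.5, so the root's subtree is kept; at A, 6.5 ≤ 5 + 2.09, so A is pruned.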

Tool for printing overlined text (such as Y̅):

https://fsymbols.com/generators/overline/