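I. Installing and configuring Sqoop

1. Download and extract Sqoop and set the environment variables (not covered here). Note that Sqoop2 is configured very differently from Sqoop1, and most guides online are for Sqoop1.

2. Configuration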

Edit sqoop/server/conf/catalina.properties and change common.loader to:

common.loader=${catalina.base}/lib,${catalina.base}/lib/*.jar,${catalina.home}/lib,${catalina.home}/lib/*.jar,${catalina.home}/../lib/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/common/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/common/lib/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/hdfs/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/hdfs/lib/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/mapreduce/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/mapreduce/lib/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/tools/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/tools/lib/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/yarn/*.jar,/usr/local/src/hadoop-2.6.1/share/hadoop/yarn/lib/*.jar,/usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib/*.jar

Then edit sqoop/server/conf/sqoop.properties and set:

org.apache.sqoop.submission.engine.mapreduce.configuration.directory=/usr/local/src/hadoop-2.6.1/etc/hadoop

In the same file, replace every reference to LOGDIR and BASEDIR with the actual absolute path.
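Step 2 can also be scripted. The sketch below uses two helpers that are not part of Sqoop, and both function names are hypothetical. `build_common_loader` rebuilds the same common.loader value from a Hadoop home and a Sqoop home. `fill_sqoop_properties` edits sqoop.properties in place with GNU sed. It assumes the LOGDIR and BASEDIR references are written as the placeholders `@LOGDIR@` and `@BASEDIR@`, so check your copy of the file before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for step 2 (not part of Sqoop).

# Print the common.loader line for catalina.properties.
# The ${catalina...} entries must stay literal, hence the single quotes.
build_common_loader() {
  local hadoop_home="$1" sqoop_home="$2" d
  local parts=('${catalina.base}/lib' '${catalina.base}/lib/*.jar'
               '${catalina.home}/lib' '${catalina.home}/lib/*.jar'
               '${catalina.home}/../lib/*.jar')
  # Same order as the hand-written value: each module dir, then its lib dir
  for d in common hdfs mapreduce tools yarn; do
    parts+=("$hadoop_home/share/hadoop/$d/*.jar" "$hadoop_home/share/hadoop/$d/lib/*.jar")
  done
  parts+=("$sqoop_home/hadoop_lib/*.jar")
  local IFS=,   # "${parts[*]}" joins elements with the first char of IFS
  echo "common.loader=${parts[*]}"
}

# Edit sqoop.properties in place (GNU sed -i). ASSUMPTION: the LOGDIR and
# BASEDIR references are written as @LOGDIR@ and @BASEDIR@.
fill_sqoop_properties() {
  local props="$1" logdir="$2" basedir="$3" hadoop_conf="$4"
  sed -i \
    -e "s|@LOGDIR@|$logdir|g" \
    -e "s|@BASEDIR@|$basedir|g" \
    -e "s|^org.apache.sqoop.submission.engine.mapreduce.configuration.directory=.*|org.apache.sqoop.submission.engine.mapreduce.configuration.directory=$hadoop_conf|" \
    "$props"
}
```

For example, `build_common_loader /usr/local/src/hadoop-2.6.1 /usr/local/src/sqoop-1.99.4-bin-hadoop200` prints the line to paste into catalina.properties.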

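3. Next, create a hadoop_lib directory in the Sqoop installation directory (/usr/local/src/sqoop-1.99.4-bin-hadoop200, the path used by the last common.loader entry): mkdir hadoop_lib

Copy the Hadoop dependency jars into this directory, together with sqoop/server/bin/*.jar and sqoop/server/lib/*.jar: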

cp /usr/local/src/hadoop-2.6.1/share/hadoop/common/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp /usr/local/src/hadoop-2.6.1/share/hadoop/common/lib/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp /usr/local/src/hadoop-2.6.1/share/hadoop/hdfs/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp /usr/local/src/hadoop-2.6.1/share/hadoop/hdfs/lib/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/hadoop-2.6.1/share/hadoop/mapreduce/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/hadoop-2.6.1/share/hadoop/mapreduce/lib/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/hadoop-2.6.1/share/hadoop/tools/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/hadoop-2.6.1/share/hadoop/tools/lib/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/hadoop-2.6.1/share/hadoop/yarn/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/hadoop-2.6.1/share/hadoop/yarn/lib/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/hadoop-2.6.1/share/hadoop/httpfs/tomcat/lib/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/sqoop-1.99.4-bin-hadoop200/server/bin/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

cp -rf /usr/local/src/sqoop-1.99.4-bin-hadoop200/server/lib/*.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/hadoop_lib

4. Grant permissions

sudo chmod 777 -R /usr/local/src/sqoop-1.99.4-bin-hadoop200
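The copy commands in step 3 repeat one pattern, so they can be collapsed into a loop. This is a minimal sketch that assumes the Hadoop 2.6.1 directory layout used above. `copy_hadoop_jars` is a hypothetical helper name. It skips directories that contain no jars instead of letting cp fail on an unmatched glob.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the same jars as the cp commands in step 3
# into <sqoop_home>/hadoop_lib.
copy_hadoop_jars() {
  local hadoop_home="$1" sqoop_home="$2"
  local dest="$sqoop_home/hadoop_lib"
  local srcs=() d src f
  mkdir -p "$dest"
  for d in common hdfs mapreduce tools yarn; do
    srcs+=("$hadoop_home/share/hadoop/$d" "$hadoop_home/share/hadoop/$d/lib")
  done
  srcs+=("$hadoop_home/share/hadoop/httpfs/tomcat/lib" "$sqoop_home/server/bin" "$sqoop_home/server/lib")
  for src in "${srcs[@]}"; do
    for f in "$src"/*.jar; do
      # An unmatched glob stays literal, so check that the file exists
      if [[ -f "$f" ]]; then
        cp -f "$f" "$dest"/
      fi
    done
  done
}
```

Run it as `copy_hadoop_jars /usr/local/src/hadoop-2.6.1 /usr/local/src/sqoop-1.99.4-bin-hadoop200`, then apply the chmod from step 4.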

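5. Configure the Hadoop proxy user

The Sqoop server has to impersonate users when it accesses HDFS and other resources inside and outside the cluster. Hadoop must therefore explicitly allow this impersonation through its proxyuser mechanism. Add the two properties below to etc/hadoop/core-site.xml in the Hadoop directory.

The sqoop2 part of each property name is the OS user that runs the Sqoop server. For the values, either * or a specific list works: host names for hosts, group names for groups.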

    <property>
      <name>hadoop.proxyuser.sqoop2.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.sqoop2.groups</name>
      <value>*</value>
    </property>
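If the Sqoop server runs as a user other than sqoop2, the property names must change to match. The small sketch below prints the two properties for a given user. `proxyuser_xml` is a hypothetical helper, and both values default to `*` as in the snippet above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the proxyuser properties for the OS user that
# runs the Sqoop server. Usage: proxyuser_xml USER [HOSTS] [GROUPS]
proxyuser_xml() {
  local user="$1" hosts="${2:-*}" groups="${3:-*}"
  cat <<EOF
<property>
  <name>hadoop.proxyuser.${user}.hosts</name>
  <value>${hosts}</value>
</property>
<property>
  <name>hadoop.proxyuser.${user}.groups</name>
  <value>${groups}</value>
</property>
EOF
}
```

Paste the output (for example from `proxyuser_xml root`) inside the `<configuration>` element of core-site.xml. The change takes effect after restarting HDFS and YARN. Alternatively, run `hdfs dfsadmin -refreshSuperUserGroupsConfiguration` and `yarn rmadmin -refreshSuperUserGroupsConfiguration`.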

6. Verify

Run sqoop2-tool verify

7. Start the server

sqoop2-server start
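To confirm that the server actually came up, you can poll it over HTTP. This sketch makes three assumptions: the `/version` REST endpoint described in the Sqoop 1.99 REST API documentation, the default port 12000, and the `sqoop` web application name. `sqoop_version_url` and `wait_for_sqoop` are hypothetical helpers, and `wait_for_sqoop` needs curl.

```shell
#!/usr/bin/env bash
# Hypothetical helpers. ASSUMPTION: the server answers GET /<webapp>/version.
sqoop_version_url() {
  local host="${1:-localhost}" port="${2:-12000}" webapp="${3:-sqoop}"
  echo "http://${host}:${port}/${webapp}/version"
}

# Retry up to 30 times, 2 seconds apart, until the endpoint responds.
wait_for_sqoop() {
  local url i
  url="$(sqoop_version_url "$@")"
  for i in $(seq 1 30); do
    if curl -sf "$url" >/dev/null; then
      echo "Sqoop server is up: $url"
      return 0
    fi
    sleep 2
  done
  echo "Sqoop server did not respond at $url" >&2
  return 1
}
```

For example, `sqoop2-server start && wait_for_sqoop master`.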

II. Download the JDBC driver

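1. Download sqljdbc (the SQL Server JDBC driver) from Microsoft's website

Put the jar file in the lib folder of the Sqoop server (server/lib under the installation directory). The link example in Part III connects to MySQL instead, which needs the MySQL Connector/J jar copied to the same folder.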

cp sqljdbc41.jar /usr/local/src/sqoop-1.99.4-bin-hadoop200/server/lib

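2. Download the SQL Server-Hadoop Connector

Starting with Sqoop2, this connector is no longer needed. The tutorials online that tell you to download it are all for Sqoop1, which makes this an easy trap to fall into.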

III. Usage

See the official documentation: https://sqoop.apache.org/docs/1.99.4/Sqoop5MinutesDemo.html

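1. Start the Sqoop client

sqoop.sh client or sqoop2-shell

set server --host master --port 12000 --webapp sqoop

Replace master with your own server's host name. According to the official documentation, the last option, --webapp, is the name of the web application the Sqoop server is deployed under.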

show version --all
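The client can also run commands from a file instead of interactively. This sketch assumes the batch mode described in the Sqoop 1.99 command-line client documentation: `sqoop.sh client /path/to/script.sqoop`, with lines starting with `#` treated as comments. The hypothetical helper below writes a script containing the connection commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Sqoop client script that connects to the
# server and prints version and connector information.
# Usage: write_connect_script FILE HOST [PORT] [WEBAPP]
write_connect_script() {
  local out="$1" host="$2" port="${3:-12000}" webapp="${4:-sqoop}"
  cat > "$out" <<EOF
# Connect to the Sqoop server
set server --host ${host} --port ${port} --webapp ${webapp}
show version --all
show connector
EOF
}
```

For example, `write_connect_script /tmp/connect.sqoop master && sqoop.sh client /tmp/connect.sqoop`.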

2. Create links

List the connectors: show connector

Create a link: create link -c 2 (connector 1 is the HDFS connector and connector 2 is the JDBC connector)

Enter the following information:

Name: testlink

Link configuration

JDBC Driver Class: com.mysql.jdbc.Driver

JDBC Connection String: jdbc:mysql://localhost/test

Username: root

Password: ******

JDBC Connection Properties:

There are currently 0 values in the map:

entry# protocol=tcp

There are currently 1 values in the map:

protocol = tcp

entry#

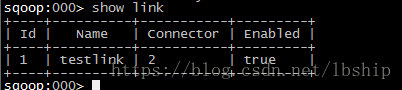

New link was successfully created with validation status OK and persistent

The link was created successfully.

Next, create a link to Hadoop. Its URI is the value of the fs.defaultFS property in Hadoop's core-site.xml. There are now two links:

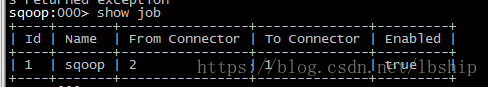
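To find the URI for the HDFS link, read fs.defaultFS from the Hadoop configuration. On a machine with Hadoop on the PATH, `hdfs getconf -confKey fs.defaultFS` prints it directly. The sketch below is a fallback that parses core-site.xml as plain text with awk. It assumes the property's `<name>` line comes before (or on the same line as) its `<value>`, as in the stock files. `get_default_fs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print fs.defaultFS from a core-site.xml using simple
# text matching (not a real XML parser).
get_default_fs() {
  local conf="$1"
  awk '/<name>fs\.defaultFS<\/name>/ { found = 1 }
       found && /<value>/ {
         sub(/.*<value>[ \t]*/, "")      # drop everything up to <value>
         sub(/[ \t]*<\/value>.*/, "")    # drop </value> and the rest
         print
         exit
       }' "$conf"
}
```

For example, `get_default_fs /usr/local/src/hadoop-2.6.1/etc/hadoop/core-site.xml`.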

3. Create a job

create job -f 1 -t 2: -f specifies the FROM link and -t the TO link, and the values are link IDs.

create job -f 1 -t 2

Creating job for links with from id 1 and to id 2

Please fill following values to create new job object

Name: sqoop

From database configuration

Schema name: test

Table name: student

Table SQL statement:

Table column names:

Partition column name:

Null value allowed for the partition column:

Boundary query:

ToJob configuration

Output format:

0 : TEXT_FILE

1 : SEQUENCE_FILE

Choose: 0

Compression format:

0 : NONE

1 : DEFAULT

2 : DEFLATE

3 : GZIP

4 : BZIP2

5 : LZO

6 : LZ4

7 : SNAPPY

8 : CUSTOM

Choose: 0

Custom compression format:

Output directory: /root/projects/sqoop

Throttling resources

Extractors: 2

Loaders: 2

New job was successfully created with validation status OK and persistent id 1

4. Start the job

start job --jid 1

If you get this error:

Exception has occurred during processing command

Exception: org.apache.sqoop.common.SqoopException Message: CLIENT_0001:Server has returned exception

Turn on verbose output to see the detailed error message:

set option --name verbose --value true

The cause of the error is then displayed.

Check the job status: status job --jid 1

Delete the job and a link:

delete job --jid 1

delete link --lid 1
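Cleanup can be scripted with the same batch mode, assuming it works as described in the client documentation. The hypothetical helper below writes a script that deletes a job and then the links it used. It follows the same order as above, deleting the job first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Sqoop client script that deletes one job,
# then the given links. Usage: write_cleanup_script FILE HOST JID LID...
write_cleanup_script() {
  local out="$1" host="$2" jid="$3" lid
  shift 3
  {
    echo "set server --host ${host} --port 12000 --webapp sqoop"
    # Delete the job before the links it references
    echo "delete job --jid ${jid}"
    for lid in "$@"; do
      echo "delete link --lid ${lid}"
    done
  } > "$out"
}
```

For example, `write_cleanup_script /tmp/cleanup.sqoop master 1 1 2 && sqoop.sh client /tmp/cleanup.sqoop` would delete job 1 and then links 1 and 2.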