Introduction

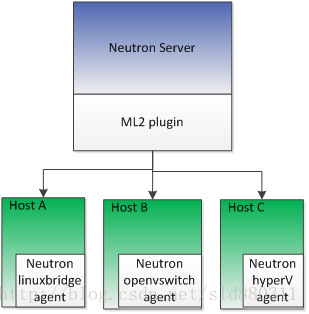

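OpenStack Neutron, as an SDN (Software Defined Network), uses the ML2 module internally to manage Layer 2. ML2 stands for Modular Layer 2. It is a framework that can manage several Layer 2 technologies at the same time. In the OpenStack Neutron project code, ML2 currently supports virtualized Layer 2 technologies such as Open vSwitch, Linux bridge, and SR-IOV. Neutron's various sub-projects add support for even more Layer 2 technologies.

Note that ML2 is distinct from the L2 agents running on each OpenStack node. ML2 is a module in the Neutron server, whereas the L2 agents on each node are the services that actually interact with the virtualized Layer 2 technology. ML2 communicates with the L2 agents over AMQP (Advanced Message Queuing Protocol) to send commands and obtain information.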

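History

ML2 was not part of OpenStack Neutron from the beginning; Neutron only gained ML2 support in the Havana release. At first Neutron supported only one or two Layer 2 technologies, and more were added over time. Before ML2, supporting each new Layer 2 technology meant rewriting the logic for Neutron's L2 resources, such as Network/Subnet/Port, which greatly increased the workload. In addition, before ML2, Neutron could use only one Layer 2 technology at a time. If Open vSwitch was configured, the entire OpenStack deployment had to use neutron-openvswitch-agent as the Layer 2 management service that talks to Open vSwitch.

ML2 solved both problems. The code shared by the pre-ML2 Layer 2 plugins was extracted into the ML2 plugin. As a result, when Neutron needs to support a new Layer 2 technology, only the technology-specific code has to be written, which greatly reduces the effort. Developers do not even need a detailed understanding of Neutron's internals; they only need to implement the corresponding interfaces. Furthermore, ML2 can manage several Layer 2 technologies simultaneously through its mechanism drivers, as shown in the figure below.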

ML2 Architecture

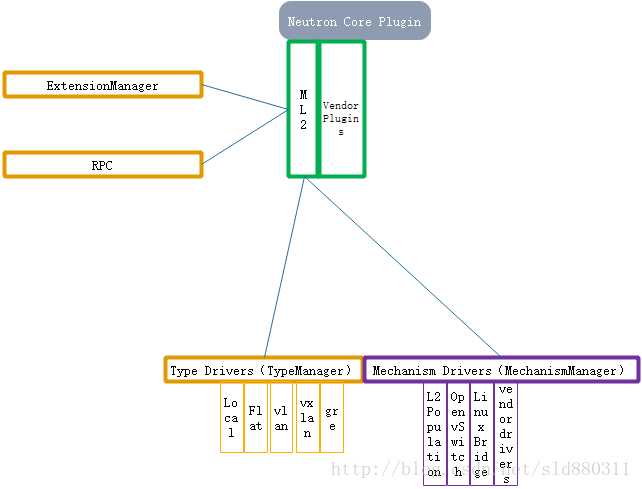

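ML2 abstracts and models Layer 2 networks by introducing type drivers and mechanism drivers. The ML2 plugin was added in the Havana (H) release with the intent of replacing all the existing core plugins, and it uses a more flexible structure.

The core idea of ML2 is that it can load multiple mechanism drivers, so a single OpenStack deployment can use several virtual networking technologies. ML2 decouples the network topology type from the underlying virtual network implementation mechanism, and each side is extended through drivers:

- Network topology types correspond to TypeDrivers, which are managed by the TypeManager.
- Network implementation mechanisms correspond to MechanismDrivers, which are managed by the MechanismManager.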
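The split between the two managers can be illustrated with a minimal, hypothetical sketch in plain Python. The names ToyTypeDriver, ToyMechanismDriver and ToyML2 are invented for illustration and are not Neutron code. Each network type maps to exactly one type driver, which produces the segment. Every enabled mechanism driver then receives that same segment, in the configured order.

```python
from typing import Dict, List


class ToyTypeDriver:
    """Hypothetical type driver: owns one network type and hands out segment IDs."""

    def __init__(self, network_type: str) -> None:
        self.network_type = network_type
        self._next_id = 1

    def allocate_tenant_segment(self) -> dict:
        segment = {"network_type": self.network_type,
                   "physical_network": None,
                   "segmentation_id": self._next_id}
        self._next_id += 1
        return segment


class ToyMechanismDriver:
    """Hypothetical mechanism driver: records the segments it 'applied'."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.applied: List[dict] = []

    def create_network_postcommit(self, segment: dict) -> None:
        self.applied.append(segment)


class ToyML2:
    def __init__(self, type_drivers, mech_drivers) -> None:
        # One type driver per network type (the TypeManager's role) ...
        self.types: Dict[str, ToyTypeDriver] = {
            d.network_type: d for d in type_drivers}
        # ... and an ordered list of mechanism drivers (the MechanismManager's role).
        self.mechs: List[ToyMechanismDriver] = list(mech_drivers)

    def create_network(self, network_type: str) -> dict:
        segment = self.types[network_type].allocate_tenant_segment()
        for mech in self.mechs:  # every enabled mechanism sees the same segment
            mech.create_network_postcommit(segment)
        return segment


ovs, cisco = ToyMechanismDriver("openvswitch"), ToyMechanismDriver("cisco")
ml2 = ToyML2([ToyTypeDriver("vxlan"), ToyTypeDriver("vlan")], [ovs, cisco])
seg = ml2.create_network("vxlan")
print(seg["segmentation_id"], len(ovs.applied), len(cisco.applied))  # 1 1 1
```

Adding a new backend in this model means adding one more mechanism driver to the list. The type drivers are left untouched, which is the decoupling described above.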

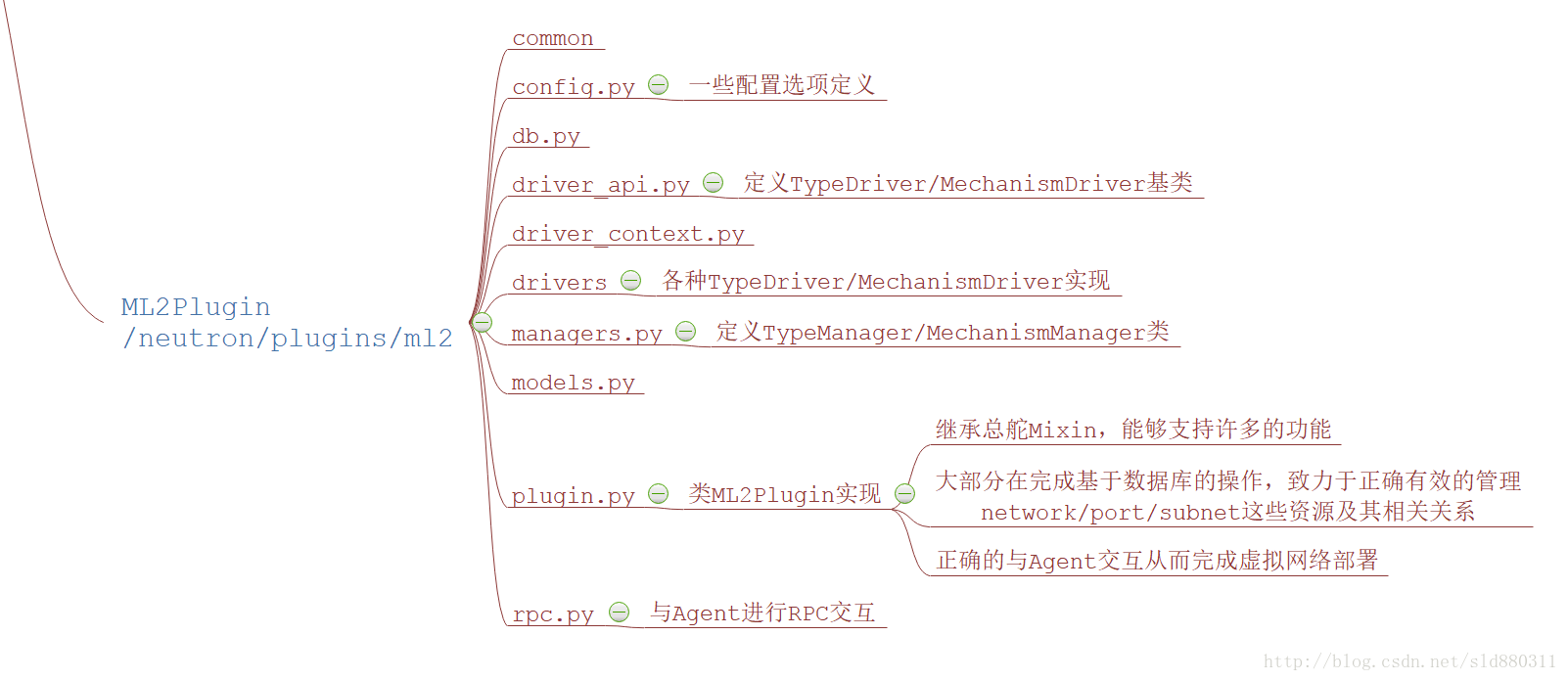

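ML2 Code Structure

ML2 Plugin

This is the entry point for all operations on L2 resources in Neutron, implemented in neutron/plugins/ml2/plugin.py. It is enabled by setting core_plugin in neutron.conf:

core_plugin = ml2

The default core_plugins entry points are already declared in setup.cfg: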

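# The ml2 entry point provides L2 virtual networks and implements the
# network/subnet/port resource operations; these operations are ultimately
# carried out by the plugin calling L2 agents (e.g. the Open vSwitch agent) over RPC.
# The entry point maps to neutron/plugins/ml2/plugin.py, class Ml2Plugin.
neutron.core_plugins =
    ml2 = neutron.plugins.ml2.plugin:Ml2Plugin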
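An entry point value such as `neutron.plugins.ml2.plugin:Ml2Plugin` has the form `module:attribute`. The sketch below shows how such a string resolves to a Python object. It is a simplified stand-in for what entry-point loaders such as stevedore (used by the TypeManager below) do. It skips the lookup of the namespace (`neutron.core_plugins`) in installed package metadata; in a real install that lookup goes through facilities such as `importlib.metadata.entry_points()`. The function name `load_entry_point` is hypothetical, and a stdlib class stands in for Ml2Plugin so the example runs without Neutron installed.

```python
import importlib


def load_entry_point(spec: str):
    """Resolve a 'package.module:Attr' string, the format used in setup.cfg.

    Simplified sketch: it imports the module and walks the attribute path,
    without consulting installed package metadata.
    """
    module_name, _, attr_path = spec.partition(":")
    if not attr_path:
        raise ValueError(f"expected 'module:attr', got {spec!r}")
    obj = importlib.import_module(module_name)
    for part in attr_path.split("."):  # allow nested attributes like 'Outer.Inner'
        obj = getattr(obj, part)
    return obj


# collections:OrderedDict stands in for neutron.plugins.ml2.plugin:Ml2Plugin.
cls = load_entry_point("collections:OrderedDict")
print(cls.__name__)  # OrderedDict
```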

Code

class Ml2Plugin(db_base_plugin_v2.NeutronDbPluginV2,
                dvr_mac_db.DVRDbMixin,
                external_net_db.External_net_db_mixin,
                sg_db_rpc.SecurityGroupServerRpcMixin,
                agentschedulers_db.AZDhcpAgentSchedulerDbMixin,
                addr_pair_db.AllowedAddressPairsMixin,
                vlantransparent_db.Vlantransparent_db_mixin,
                extradhcpopt_db.ExtraDhcpOptMixin,
                address_scope_db.AddressScopeDbMixin,
                service_type_db.SubnetServiceTypeMixin):

    """Implement the Neutron L2 abstractions using modules.

    Ml2Plugin is a Neutron plugin based on separately extensible sets
    of network types and mechanisms for connecting to networks of
    those types. The network types and mechanisms are implemented as
    drivers loaded via Python entry points. Networks can be made up of
    multiple segments (not yet fully implemented).
    """

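The Ml2Plugin class definition shows that it supports many features by inheriting from numerous mixins. Operations on actual devices are performed by the agents, so most of the ML2 plugin's own work is database-based. Its job is to manage network/subnet/port resources and their relationships correctly and efficiently, and to interact correctly with the agents so that virtual networks get deployed.

Besides the three core resources, Ml2Plugin supports many extension resources. Ml2Plugin must provide the operation interfaces for these resources so that the corresponding controller can call them when a user request arrives. Not all of these interfaces are implemented in Ml2Plugin itself; many are implemented by its parent classes.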

# List of supported extensions
_supported_extension_aliases = ["provider", "external-net", "binding",
                                "quotas", "security-group", "agent",
                                "dhcp_agent_scheduler",
                                "multi-provider", "allowed-address-pairs",
                                "extra_dhcp_opt", "subnet_allocation",
                                "net-mtu", "net-mtu-writable",
                                "vlan-transparent",
                                "address-scope",
                                "availability_zone",
                                "network_availability_zone",
                                "default-subnetpools",
                                "subnet-service-types"]

Initialization

def __init__(self):
    # First load drivers, then initialize DB, then initialize drivers
    self.type_manager = managers.TypeManager()
    self.extension_manager = managers.ExtensionManager()
    self.mechanism_manager = managers.MechanismManager()
    super(Ml2Plugin, self).__init__()
    self.type_manager.initialize()
    self.extension_manager.initialize()
    self.mechanism_manager.initialize()
    self._setup_dhcp()
    self._start_rpc_notifiers()
    self.add_agent_status_check_worker(self.agent_health_check)
    self.add_workers(self.mechanism_manager.get_workers())
    self._verify_service_plugins_requirements()
    LOG.info("Modular L2 Plugin initialization complete")

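Segments

A segment can be loosely understood as a description of part of a physical network; for example, it can be one VLAN among the many VLANs of a physical network. ML2 defines a segment using only the following data structure:

{NETWORK_TYPE, PHYSICAL_NETWORK, SEGMENTATION_ID}

If the segment corresponds to a VLAN in the physical network, segmentation_id is that VLAN's VLAN ID. If the segment corresponds to a tunnel in a GRE network, segmentation_id is that tunnel's tunnel ID. This is the simple way ML2 maps segments onto the physical network.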
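The three-field segment can be modelled as a small frozen dataclass. The `Segment` class is illustrative only; Neutron passes segments around as plain dicts. The dict keys used here (`network_type`, `physical_network`, `segmentation_id`) match the string values of ML2's NETWORK_TYPE, PHYSICAL_NETWORK and SEGMENTATION_ID constants.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class Segment:
    """Illustrative model of an ML2 segment: the three fields ML2 uses."""
    network_type: str                # e.g. 'flat', 'vlan', 'gre', 'vxlan'
    physical_network: Optional[str]  # required for flat/vlan, None for tunnels
    segmentation_id: Optional[int]   # VLAN ID, tunnel ID, or None for flat

    def to_dict(self) -> dict:
        # Same shape as the segment dicts passed between ML2 components
        return asdict(self)


vlan_seg = Segment("vlan", "physnet1", 100)   # VLAN 100 on physnet1
gre_seg = Segment("gre", None, 4001)          # GRE tunnel with tunnel ID 4001
flat_seg = Segment("flat", "physnet1", None)  # flat: no segmentation_id
print(vlan_seg.to_dict())
# {'network_type': 'vlan', 'physical_network': 'physnet1', 'segmentation_id': 100}
```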

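TypeManager and MechanismManager

The TypeManager and MechanismManager load the corresponding TypeDrivers and MechanismDrivers and dispatch each concrete operation to the appropriate driver. The managers also handle code that is common to all drivers.

TypeManager

At initialization, the TypeManager loads the TypeDrivers listed in the configuration file. Together with the TypeDrivers it manages, the TypeManager provides the segment operations: storage, validation, allocation, and release. The details are covered in the Type Drivers section.

Initialization

The TypeManager is instantiated during Ml2Plugin initialization. During its own initialization it processes the type_drivers, tenant_network_types and external_network_type options from the configuration file: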

# Initialization in Ml2Plugin:
self.type_manager = managers.TypeManager()
self.type_manager.initialize()

# TypeManager initialization:
class TypeManager(stevedore.named.NamedExtensionManager):
    """Manage network segment types using drivers."""

    def __init__(self):
        # Mapping from type name to DriverManager
        self.drivers = {}
        LOG.info("Configured type driver names: %s",
                 cfg.CONF.ml2.type_drivers)
        super(TypeManager, self).__init__('neutron.ml2.type_drivers',
                                          cfg.CONF.ml2.type_drivers,
                                          invoke_on_load=True)
        LOG.info("Loaded type driver names: %s", self.names())
        # Register the type drivers
        self._register_types()
        # Validate and register tenant_network_types
        self._check_tenant_network_types(cfg.CONF.ml2.tenant_network_types)
        # Validate external_network_type
        self._check_external_network_type(cfg.CONF.ml2.external_network_type)

    def _register_types(self):
        for ext in self:
            network_type = ext.obj.get_type()
            if network_type in self.drivers:
                LOG.error("Type driver '%(new_driver)s' ignored because"
                          " type driver '%(old_driver)s' is already"
                          " registered for type '%(type)s'",
                          {'new_driver': ext.name,
                           'old_driver': self.drivers[network_type].name,
                           'type': network_type})
            else:
                self.drivers[network_type] = ext
        LOG.info("Registered types: %s", self.drivers.keys())

    def _check_tenant_network_types(self, types):
        self.tenant_network_types = []
        for network_type in types:
            # Every type in tenant_network_types must also be listed in type_drivers
            if network_type in self.drivers:
                self.tenant_network_types.append(network_type)
            else:
                LOG.error("No type driver for tenant network_type: %s. "
                          "Service terminated!", network_type)
                raise SystemExit(1)
        LOG.info("Tenant network_types: %s", self.tenant_network_types)

    def _check_external_network_type(self, ext_network_type):
        # If external_network_type is set, it must also be listed in type_drivers
        if ext_network_type and ext_network_type not in self.drivers:
            LOG.error("No type driver for external network_type: %s. "
                      "Service terminated!", ext_network_type)
            raise SystemExit(1)

    def initialize(self):
        for network_type, driver in self.drivers.items():
            LOG.info("Initializing driver for type '%s'", network_type)
            driver.obj.initialize()
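The registration and validation rules in TypeManager can be sketched without stevedore. In this simplified model, drivers are plain `(entry-point name, network type)` pairs, and the function names are hypothetical. The first driver registered for a type wins, and later duplicates are logged and ignored. A tenant or external network type with no registered driver stops the service with `SystemExit(1)`, as in the code above.

```python
import logging
from typing import Dict, Iterable, List, Optional, Tuple

LOG = logging.getLogger("toy_type_manager")


def register_types(loaded: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """loaded: (entry-point name, network type) pairs in load order."""
    drivers: Dict[str, str] = {}
    for ext_name, network_type in loaded:
        if network_type in drivers:
            # First registration wins; later duplicates are only logged.
            LOG.error("Type driver %s ignored: %s already registered for %s",
                      ext_name, drivers[network_type], network_type)
        else:
            drivers[network_type] = ext_name
    return drivers


def check_tenant_network_types(types: List[str],
                               drivers: Dict[str, str]) -> List[str]:
    for network_type in types:
        if network_type not in drivers:
            raise SystemExit(1)  # Neutron logs "Service terminated!" and exits
    return list(types)


def check_external_network_type(ext_type: Optional[str],
                                drivers: Dict[str, str]) -> None:
    if ext_type and ext_type not in drivers:
        raise SystemExit(1)


drivers = register_types([("vlan", "vlan"), ("vxlan", "vxlan"), ("my_vlan", "vlan")])
print(sorted(drivers.items()))  # [('vlan', 'vlan'), ('vxlan', 'vxlan')]
```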

Invocation

The following code shows how a concrete type driver is invoked:

def reserve_provider_segment(self, context, segment):
    network_type = segment.get(ml2_api.NETWORK_TYPE)
    # Pick the concrete type driver according to the segment's network_type
    driver = self.drivers.get(network_type)
    if isinstance(driver.obj, api.TypeDriver):
        # Older TypeDriver interface: takes a DB session
        return driver.obj.reserve_provider_segment(context.session,
                                                   segment)
    else:
        # Newer driver interface: takes the request context
        return driver.obj.reserve_provider_segment(context,
                                                   segment)

MechanismManager

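The MechanismManager is initialized in the same way as the TypeManager.

The MechanismManager dispatches each operation to the concrete MechanismDrivers. An operation that needs mechanism driver handling is carried out by calling the corresponding method of every driver in turn, in the configured order. For example, an operation that configures switching may need to configure both the OVS virtual switch and an external physical switch such as a Cisco switch. In that case both the OVS MechanismDriver and the Cisco MechanismDriver are called.

Code that calls the concrete MechanismDrivers: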

def _call_on_drivers(self, method_name, context,
                     continue_on_failure=False, raise_db_retriable=False):
    """Helper method for calling a method across all mechanism drivers.

    :param method_name: name of the method to call
    :param context: context parameter to pass to each method call
    :param continue_on_failure: whether or not to continue to call
    all mechanism drivers once one has raised an exception
    :param raise_db_retriable: whether or not to treat retriable db
    exception by mechanism drivers to propagate up to upper layer so
    that upper layer can handle it or error in ML2 player
    :raises: neutron.plugins.ml2.common.MechanismDriverError
    if any mechanism driver call fails. or DB retriable error when
    raise_db_retriable=False. See neutron.db.api.is_retriable for
    what db exception is retriable
    """
    errors = []
    for driver in self.ordered_mech_drivers:
        try:
            getattr(driver.obj, method_name)(context)
        except Exception as e:
            if raise_db_retriable and db_api.is_retriable(e):
                with excutils.save_and_reraise_exception():
                    LOG.debug("DB exception raised by Mechanism driver "
                              "'%(name)s' in %(method)s",
                              {'name': driver.name, 'method': method_name},
                              exc_info=e)
            LOG.exception(
                "Mechanism driver '%(name)s' failed in %(method)s",
                {'name': driver.name, 'method': method_name}
            )
            errors.append(e)
            if not continue_on_failure:
                break
    if errors:
        raise ml2_exc.MechanismDriverError(
            method=method_name,
            errors=errors
        )
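The control flow of `_call_on_drivers` can be reduced to a standalone sketch. It keeps the ordered loop, the error collection and the `continue_on_failure` switch, and drops the retriable-DB-error path and the logging. `MechanismDriverError` here is a local stand-in for `ml2_exc.MechanismDriverError`, and `Recorder` is a hypothetical test driver.

```python
from typing import List


class MechanismDriverError(Exception):
    """Local stand-in for ml2_exc.MechanismDriverError."""

    def __init__(self, method: str, errors: List[Exception]) -> None:
        super().__init__(f"{method} failed: {errors!r}")
        self.method = method
        self.errors = errors


def call_on_drivers(drivers: List[object], method_name: str, context: object,
                    continue_on_failure: bool = False) -> None:
    errors: List[Exception] = []
    for driver in drivers:  # configured order
        try:
            getattr(driver, method_name)(context)
        except Exception as e:
            errors.append(e)
            if not continue_on_failure:
                break  # later drivers are skipped
    if errors:
        raise MechanismDriverError(method_name, errors)


class Recorder:
    """Hypothetical driver that records calls and can be told to fail."""

    def __init__(self, name: str, fail: bool = False) -> None:
        self.name, self.fail = name, fail
        self.calls: List[object] = []

    def create_port_postcommit(self, context: object) -> None:
        self.calls.append(context)
        if self.fail:
            raise RuntimeError(f"{self.name} failed")


ovs, cisco = Recorder("ovs", fail=True), Recorder("cisco")
try:
    call_on_drivers([ovs, cisco], "create_port_postcommit", "port-1")
except MechanismDriverError as exc:
    print(exc.method, len(exc.errors))  # create_port_postcommit 1
print(cisco.calls)  # []: cisco was never called
```

With `continue_on_failure=True`, every driver is still called, and all the collected errors are raised together at the end.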

Type Drivers

Each available network type is managed by an ml2 TypeDriver. TypeDrivers maintain any needed type-specific network state, and perform provider network validation and tenant network allocation. The ml2 plugin currently includes drivers for the local, flat, vlan, gre and vxlan network types.

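Physical L2 networks come in several types, and OpenStack Neutron also supports several network types. These network types are implemented by the type drivers in Ml2Plugin. An OpenStack deployment can support one or more network types; using a network type that is not configured makes Neutron report an unsupported network type error. They are configured in ml2_conf.ini, for example: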

[ml2]

tenant_network_types = vxlan

extension_drivers = port_security

type_drivers = local,flat,vlan,gre,vxlan

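mechanism_drivers = openvswitch,linuxbridge

tenant_network_types is also a type driver option. When a network is created without an explicit network type, Neutron assigns one automatically from this option, so it acts as the default network type for Neutron networks. When several values are set, they are tried in order, and the first type that can still provide a segment is used.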
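Reading these options and applying the "first available type" rule can be sketched with the standard `configparser` module. Neutron itself reads the options through oslo.config `ListOpt`s, so `parse_list` only approximates that parsing. `pick_tenant_type` is a hypothetical helper. Its `free_ids` argument stands in for each type driver's allocation table, and the `vxlan,vlan` value is a made-up example.

```python
import configparser

ML2_CONF = """
[ml2]
tenant_network_types = vxlan,vlan
extension_drivers = port_security
type_drivers = local,flat,vlan,gre,vxlan
mechanism_drivers = openvswitch,linuxbridge
"""


def parse_list(value: str) -> list:
    # Comma-separated list with surrounding whitespace ignored (ListOpt-like)
    return [item.strip() for item in value.split(",") if item.strip()]


def pick_tenant_type(tenant_types, free_ids):
    """Return the first tenant network type that still has a free segment.

    free_ids maps network type -> number of unallocated segmentation IDs
    (a hypothetical stand-in for the type drivers' allocation tables).
    """
    for network_type in tenant_types:
        if free_ids.get(network_type, 0) > 0:
            return network_type
    return None  # no tenant network can be allocated


parser = configparser.ConfigParser()
parser.read_string(ML2_CONF)
ml2 = parser["ml2"]
tenant_types = parse_list(ml2["tenant_network_types"])
print(tenant_types)  # ['vxlan', 'vlan']
print(pick_tenant_type(tenant_types, {"vxlan": 0, "vlan": 12}))  # vlan
```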

The default list of type drivers is defined in neutron/plugins/ml2/config.py:

cfg.ListOpt('type_drivers',
            default=['local', 'flat', 'vlan', 'gre', 'vxlan', 'geneve'],
            help=_("List of network type driver entrypoints to be loaded "
                   "from the neutron.ml2.type_drivers namespace.")),

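The main job of a type driver is to manage network segments. It validates, allocates and releases provider segments and tenant segments; a tenant segment is what gets created when no provider information is given on the command line. The ML2 plugin interacts with the configured type drivers through neutron.plugins.ml2.managers.TypeManager. Every network type supported by Neutron has a corresponding ML2 type driver, and the TypeManager calls the appropriate driver according to the type of network the user creates.

Type drivers provide the code that does not depend on the underlying technology, through four interfaces: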

def validate_provider_segment(self, segment):
def reserve_provider_segment(self, session, segment):
def allocate_tenant_segment(self, session):
def release_segment(self, session, segment):

Flat Type Driver

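When a flat network is created, physical_network must be specified (for example `--provider:network_type flat --provider:physical_network physnet1`); in other words, the name of the physical network is mandatory. The physical network must first be allowed in the [ml2_type_flat] section of ml2_conf.ini (flat_networks = physnet1). It must also be mapped on the agent: bridge_mappings = physnet1:br-ex for Open vSwitch, or physical_interface_mappings = physnet1:eth1 for Linux bridge. Flat networks have no segmentation_id. The Flat type driver validates segments against these requirements.

Segment reservation: the segment passed in by the TypeManager is saved in the database.

First, the configuration and the REST request are checked. Normally the names of the physical networks that may carry flat networks are listed in the configuration file. If the wildcard "*" is used instead, any physical network name is accepted.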

def validate_provider_segment(self, segment):
    physical_network = segment.get(api.PHYSICAL_NETWORK)
    # The segment must contain a physical_network
    if not physical_network:
        msg = _("physical_network required for flat provider network")
        raise exc.InvalidInput(error_message=msg)
    # flat_networks comes from ml2_conf.ini: a '*' entry is stored as None
    # (any name allowed), while an empty list disables flat provider networks
    if self.flat_networks is not None and not self.flat_networks:
        msg = _("Flat provider networks are disabled")
        raise exc.InvalidInput(error_message=msg)
    # The segment's physical_network must be one of the configured names
    if self.flat_networks and physical_network not in self.flat_networks:
        msg = (_("physical_network '%s' unknown for flat provider network")
               % physical_network)
        raise exc.InvalidInput(error_message=msg)
    # Every key other than network_type and physical_network
    # (e.g. segmentation_id) must be empty for a flat network
    for key, value in segment.items():
        if value and key not in [api.NETWORK_TYPE,
                                 api.PHYSICAL_NETWORK]:
            msg = _("%s prohibited for flat provider network") % key
            raise exc.InvalidInput(error_message=msg)
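The validation rules can be exercised without Neutron in a standalone re-implementation. `InvalidInput` is a local stand-in for neutron_lib's exception. `parse_flat_networks` is a hypothetical helper that follows the convention used by the flat driver, where `'*'` becomes `None` and an empty list disables flat networks.

```python
from typing import List, Optional


class InvalidInput(ValueError):
    """Local stand-in for neutron_lib's exc.InvalidInput."""


def parse_flat_networks(entries: List[str]) -> Optional[List[str]]:
    # '*' -> None (any name allowed); [] -> disabled; otherwise explicit names
    if "*" in entries:
        return None
    return list(entries)


def validate_flat_segment(segment: dict,
                          flat_networks: Optional[List[str]]) -> None:
    physical_network = segment.get("physical_network")
    if not physical_network:
        raise InvalidInput("physical_network required for flat provider network")
    if flat_networks is not None and not flat_networks:
        raise InvalidInput("Flat provider networks are disabled")
    if flat_networks and physical_network not in flat_networks:
        raise InvalidInput(f"physical_network '{physical_network}' unknown "
                           "for flat provider network")
    for key, value in segment.items():
        if value and key not in ("network_type", "physical_network"):
            raise InvalidInput(f"{key} prohibited for flat provider network")


allowed = parse_flat_networks(["physnet1"])
validate_flat_segment({"network_type": "flat", "physical_network": "physnet1"}, allowed)
try:
    validate_flat_segment({"network_type": "flat", "physical_network": "physnet1",
                           "segmentation_id": 10}, allowed)
except InvalidInput as e:
    print(e)  # segmentation_id prohibited for flat provider network
```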

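Next, the segment is reserved in the database (table ml2_flat_allocations). If an entry for the physical network already exists, the segment is already in use and creating the flat network fails.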

def reserve_provider_segment(self, context, segment):
    physical_network = segment[api.PHYSICAL_NETWORK]
    try:
        LOG.debug("Reserving flat network on physical "
                  "network %s", physical_network)
        alloc = flat_obj.FlatAllocation(
            context,
            physical_network=physical_network)
        alloc.create()
    except obj_base.NeutronDbObjectDuplicateEntry:
        raise n_exc.FlatNetworkInUse(
            physical_network=physical_network)
    segment[api.MTU] = self.get_mtu(alloc.physical_network)
    return segment
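The reservation relies on a uniqueness constraint: one row per physical network in ml2_flat_allocations, with a duplicate insert meaning "in use". The in-memory sketch below models that constraint with a set. `FlatAllocations` is hypothetical, and `FlatNetworkInUse` is a local stand-in. The per-physnet MTU lookup with a 1500 default is an assumed simplification of the real `get_mtu()`.

```python
class FlatNetworkInUse(Exception):
    """Local stand-in for n_exc.FlatNetworkInUse."""


class FlatAllocations:
    """Toy ml2_flat_allocations table: physical_network is unique."""

    def __init__(self, mtu_by_physnet=None, default_mtu=1500):
        self._rows = set()
        self._mtu = dict(mtu_by_physnet or {})
        self._default_mtu = default_mtu  # assumed fallback, not Neutron's logic

    def reserve_provider_segment(self, segment: dict) -> dict:
        physnet = segment["physical_network"]
        if physnet in self._rows:  # the DB would raise a duplicate-entry error
            raise FlatNetworkInUse(physnet)
        self._rows.add(physnet)
        segment["mtu"] = self._mtu.get(physnet, self._default_mtu)
        return segment

    def release_segment(self, segment: dict) -> None:
        self._rows.discard(segment["physical_network"])


table = FlatAllocations({"physnet1": 9000})
seg = table.reserve_provider_segment({"network_type": "flat",
                                      "physical_network": "physnet1"})
print(seg["mtu"])  # 9000
```

A second flat network on physnet1 raises `FlatNetworkInUse` until the first segment is released.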

Tunnel Type Driver

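VXLAN and GRE are both tunnel-type virtual networks. For tunnel networks, ML2 introduces the class neutron.plugins.ml2.drivers.type_tunnel.EndpointTunnelTypeDriver, which defines additional tunnel-specific interfaces for the vxlan and gre drivers to implement.

It also defines interfaces for interacting with the agents:

TunnelAgentRpcApiMixin: tunnel_update(), called from the ML2 plugin to the agent.

TunnelRpcCallbackMixin: tunnel_sync(), called from the agent to the ML2 plugin.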

Vlan Type Driver

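VLAN is managed differently from GRE and VXLAN. A VLAN network must specify physical_network, because a VLAN has to be configured on a particular network interface of the host (e.g. eth0), whereas VXLAN and GRE are not bound to a host network interface.

Each physical network has 4094 usable VLAN IDs in the range [1, 4094], so it can carry at most 4094 segments:

def is_valid_vlan_tag(vlan):
    return p_const.MIN_VLAN_TAG <= vlan <= p_const.MAX_VLAN_TAG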
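The per-physical-network VLAN pool can be sketched as follows. Each physical network owns its own set of IDs within [1, 4094], so VLAN 100 on physnet1 and VLAN 100 on physnet2 are different segments. `VlanPool` is hypothetical and is not Neutron's allocation code, which stores allocations in a database table. The `(low, high)` ranges mimic the `physnet1:100:199` form of the `network_vlan_ranges` option, and the "lowest free ID first" policy is an assumption made for the example.

```python
MIN_VLAN_TAG, MAX_VLAN_TAG = 1, 4094  # same bounds as p_const above


def is_valid_vlan_tag(vlan: int) -> bool:
    return MIN_VLAN_TAG <= vlan <= MAX_VLAN_TAG


class VlanPool:
    """Hypothetical per-physical-network VLAN allocator."""

    def __init__(self, ranges: dict) -> None:
        # ranges: physical_network -> (low, high), e.g. {"physnet1": (100, 199)}
        self._free = {}
        for physnet, (low, high) in ranges.items():
            if not (is_valid_vlan_tag(low) and is_valid_vlan_tag(high)
                    and low <= high):
                raise ValueError(f"invalid VLAN range {low}:{high} for {physnet}")
            self._free[physnet] = set(range(low, high + 1))

    def allocate_tenant_segment(self):
        for physnet in sorted(self._free):
            if self._free[physnet]:
                vlan_id = min(self._free[physnet])  # lowest free ID first
                self._free[physnet].remove(vlan_id)
                return {"network_type": "vlan", "physical_network": physnet,
                        "segmentation_id": vlan_id}
        return None  # every pool is exhausted

    def release_segment(self, segment: dict) -> None:
        self._free[segment["physical_network"]].add(segment["segmentation_id"])


pool = VlanPool({"physnet1": (100, 199)})
print(pool.allocate_tenant_segment())
# {'network_type': 'vlan', 'physical_network': 'physnet1', 'segmentation_id': 100}
```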

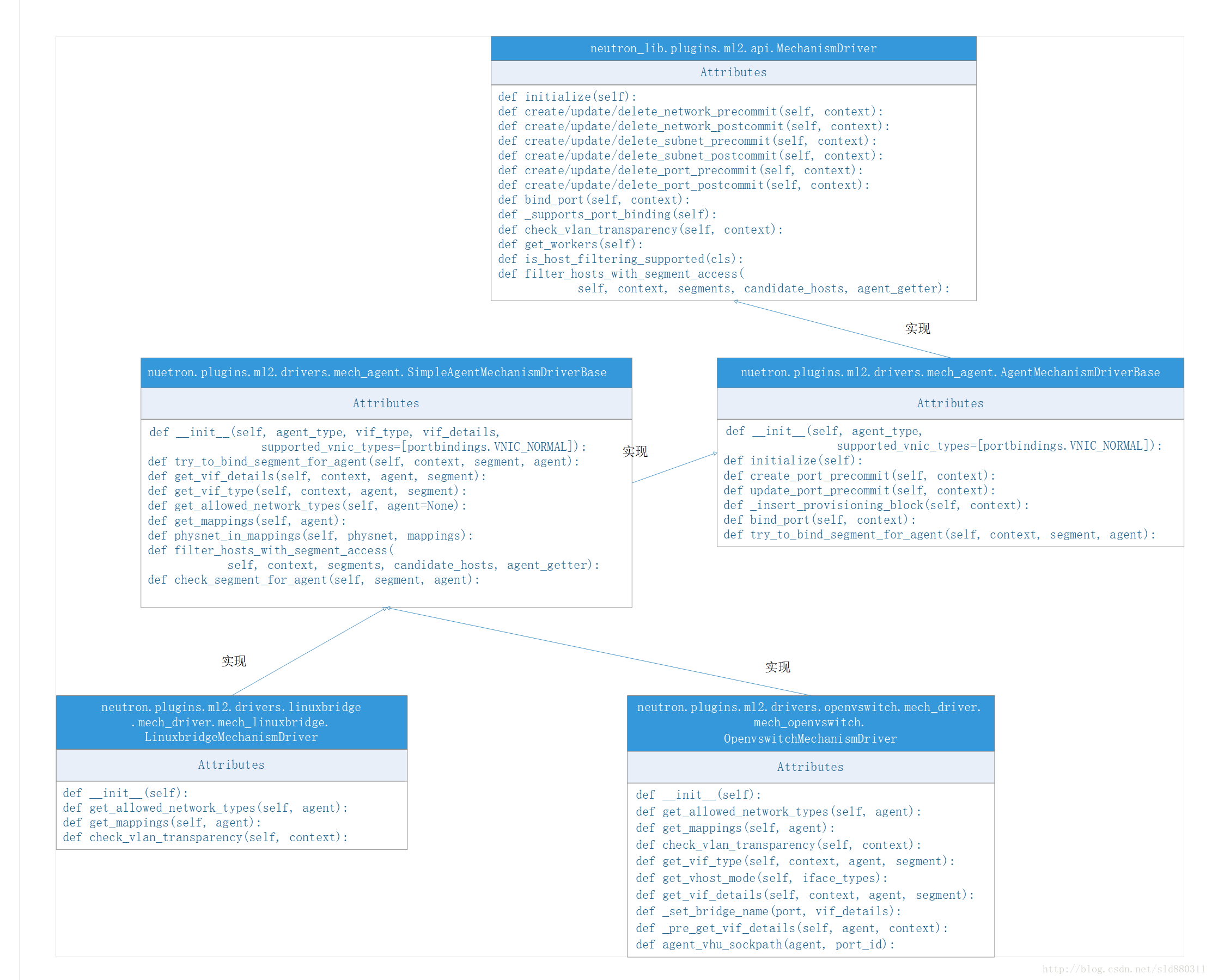

Mechanism drivers

Each networking mechanism is managed by an ml2 MechanismDriver. The MechanismDriver is responsible for taking the information established by the TypeDriver and ensuring that it is properly applied given the specific networking mechanisms that have been enabled.

The MechanismDriver interface currently supports the creation, update, and deletion of network and port resources. For every action that can be taken on a resource, the mechanism driver exposes two methods - ACTION_RESOURCE_precommit, which is called within the database transaction context, and ACTION_RESOURCE_postcommit, called after the database transaction is complete. The precommit method is used by mechanism drivers to validate the action being taken and make any required changes to the mechanism driver’s private database. The precommit method should not block, and therefore cannot communicate with anything outside of Neutron. The postcommit method is responsible for appropriately pushing the change to the resource to the entity responsible for applying that change. For example, the postcommit method would push the change to an external network controller, that would then be responsible for appropriately updating the network resources based on the change.

Support for mechanism drivers is currently a work-in-progress in pre-release Havana versions, and the interface is subject to change before the release of Havana. In a future version, the mechanism driver interface will also be called to establish a port binding, determining the VIF type and network segment to be used.

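This part provides support for the various L2 technologies, such as Open vSwitch and Linux bridge. The increasingly popular OVN also works with OpenStack Neutron as a mechanism driver through ML2. Mechanism drivers are configured in ml2_conf.ini, for example:

mechanism_drivers = openvswitch,linuxbridge

Before Ml2Plugin, Open vSwitch, Linux bridge and others each came with their own plugin and agent. With Ml2Plugin, the ML2 plugin interacts with the configured mechanism drivers through neutron.plugins.ml2.managers.MechanismManager.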

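In other words, a mechanism driver takes the network state maintained by the type driver and makes sure it is correctly realized on the corresponding network devices, physical or virtual. MechanismDriver supports creating, updating and deleting network and port resources.

For agent-based mechanism drivers, the main work is port binding. Interaction with the agents is handled by two functions in the Ml2Plugin class: delete_network and update_port.

delete_network does a single thing: it deletes a network. Most other device-related operations are carried out by notifying the agents through update_port.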

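For every action taken on a resource, a MechanismDriver exposes two methods, for example create_port_precommit and create_port_postcommit:

- {action}_{resource}_precommit is called within the database transaction context.
- {action}_{resource}_postcommit is called after the database transaction has completed.

Mechanism drivers use the precommit method to validate the action being taken and to make any required changes to the driver's private database. The precommit method should not block, and therefore cannot communicate with anything outside Neutron. If it fails, the database transaction is rolled back.

The postcommit method is responsible for pushing the resource change to the entity that applies it. For example, the postcommit method might push the change to an external network controller, which then updates the network resources accordingly. A postcommit failure does not roll back the database transaction.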
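The transactional difference between the two hooks can be demonstrated with the standard `sqlite3` module. The example is a toy, not ML2 code: `create_port`, `ok` and `boom` are hypothetical. Used as a context manager, an `sqlite3` connection commits on success and rolls back if the block raises. A precommit failure therefore undoes the INSERT, while a postcommit failure happens after the commit and leaves the row in place.

```python
import sqlite3


def create_port(conn, port_id, precommit, postcommit):
    """Toy flow: precommit runs inside the DB transaction, postcommit after it."""
    with conn:  # commit on success, rollback if the block raises
        conn.execute("INSERT INTO ports (id) VALUES (?)", (port_id,))
        precommit(port_id)   # an exception here rolls back the INSERT
    postcommit(port_id)      # runs after commit; an exception keeps the row


def ok(port_id):
    pass


def boom(port_id):
    raise RuntimeError("driver failed")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ports (id TEXT PRIMARY KEY)")
for port_id, pre, post in [("p1", boom, ok), ("p2", ok, boom)]:
    try:
        create_port(conn, port_id, precommit=pre, postcommit=post)
    except RuntimeError:
        pass
print([row[0] for row in conn.execute("SELECT id FROM ports")])  # ['p2']
```

Because a postcommit failure cannot be rolled back, ML2's `_after_create_port` (shown later) compensates explicitly. It calls `delete_port` when `create_port_postcommit` raises.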

Categories

Agent-based: Linux bridge, Open vSwitch

Controller-based: OpenDaylight, VMware NSX

Physical switches: Cisco Nexus, Arista, Mellanox

The Linux bridge and Open vSwitch ML2 mechanism drivers configure the virtual switches on each node. The Linux bridge driver supports the local, flat, vlan and vxlan types; the Open vSwitch driver supports gre in addition to these four.

The L2 population driver optimizes and limits broadcast traffic in overlay networks; VXLAN and GRE are both overlay networks.

RPC

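This is how ML2 communicates with the L2 agents, and it is built on AMQP. For example, deleting a network requires notifying the L2 agents via RPC so that they also delete the corresponding flows, virtual ports and so on. ML2's RPC implementation lives in neutron.plugins.ml2.rpc.

neutron.plugins.ml2.rpc.AgentNotifierApi defines the methods that call into the L2 agents: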

# A message-queue producer class (xxxNotifierApi) and its consumer class
# (xxxRpcCallback) define the same interface functions. The producer's method
# RPC-calls the consumer's method of the same name, and the consumer's method
# performs the actual action. For example, if xxxNotifierApi defines
# network_delete(), xxxRpcCallback also defines network_delete():
# xxxNotifierApi.network_delete() invokes xxxRpcCallback.network_delete()
# over RPC, and the latter performs the actual network deletion.
class AgentNotifierApi(dvr_rpc.DVRAgentRpcApiMixin,
                       sg_rpc.SecurityGroupAgentRpcApiMixin,
                       type_tunnel.TunnelAgentRpcApiMixin):
    """Agent side of the openvswitch rpc API.

    API version history:
        1.0 - Initial version.
        1.1 - Added get_active_networks_info, create_dhcp_port,
              update_dhcp_port, and removed get_dhcp_port methods.
        1.4 - Added network_update
    """

    def __init__(self, topic):
        self.topic = topic
        self.topic_network_delete = topics.get_topic_name(topic,
                                                          topics.NETWORK,
                                                          topics.DELETE)
        self.topic_port_update = topics.get_topic_name(topic,
                                                       topics.PORT,
                                                       topics.UPDATE)
        self.topic_port_delete = topics.get_topic_name(topic,
                                                       topics.PORT,
                                                       topics.DELETE)
        self.topic_network_update = topics.get_topic_name(topic,
                                                          topics.NETWORK,
                                                          topics.UPDATE)
        target = oslo_messaging.Target(topic=topic, version='1.0')
        self.client = n_rpc.get_client(target)

    def network_delete(self, context, network_id):
        cctxt = self.client.prepare(topic=self.topic_network_delete,
                                    fanout=True)
        cctxt.cast(context, 'network_delete', network_id=network_id)

    def port_update(self, context, port, network_type, segmentation_id,
                    physical_network):
        cctxt = self.client.prepare(topic=self.topic_port_update,
                                    fanout=True)
        cctxt.cast(context, 'port_update', port=port,
                   network_type=network_type, segmentation_id=segmentation_id,
                   physical_network=physical_network)

    def port_delete(self, context, port_id):
        cctxt = self.client.prepare(topic=self.topic_port_delete,
                                    fanout=True)
        cctxt.cast(context, 'port_delete', port_id=port_id)

    def network_update(self, context, network):
        cctxt = self.client.prepare(topic=self.topic_network_update,
                                    fanout=True, version='1.4')
        cctxt.cast(context, 'network_update', network=network)
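The producer/consumer pairing and the fanout cast can be modelled in-process. This is a sketch under stated assumptions, not oslo.messaging:

- `ToyBus` replaces AMQP, `NotifierApi` and `AgentRpcCallback` are hypothetical, and the RPC context argument is omitted.
- The topic format `<prefix>-<table>-<operation>[.<host>]` is assumed to match what `topics.get_topic_name` produces.
- The prefix `q-agent-notifier` is an assumed value for the agent notifier topic.

A fanout cast delivers the message to every subscriber of the topic and returns nothing to the caller.

```python
from collections import defaultdict
from typing import Dict, List, Optional


def get_topic_name(prefix: str, table: str, operation: str,
                   host: Optional[str] = None) -> str:
    # Assumed format: '<prefix>-<table>-<operation>[.<host>]'
    name = f"{prefix}-{table}-{operation}"
    return f"{name}.{host}" if host else name


class ToyBus:
    """In-process stand-in for AMQP fanout: all subscribers get each cast."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[object]] = defaultdict(list)

    def subscribe(self, topic: str, consumer: object) -> None:
        self._subs[topic].append(consumer)

    def fanout_cast(self, topic: str, method: str, **kwargs) -> None:
        # cast = fire-and-forget: nothing is returned to the caller
        for consumer in self._subs[topic]:
            getattr(consumer, method)(**kwargs)


class NotifierApi:  # producer side (cf. AgentNotifierApi)
    def __init__(self, bus: ToyBus, topic: str) -> None:
        self.bus = bus
        self.topic_network_delete = get_topic_name(topic, "network", "delete")

    def network_delete(self, network_id: str) -> None:
        self.bus.fanout_cast(self.topic_network_delete, "network_delete",
                             network_id=network_id)


class AgentRpcCallback:  # consumer side: same method name, does the real work
    def __init__(self, host: str) -> None:
        self.host = host
        self.deleted: List[str] = []

    def network_delete(self, network_id: str) -> None:
        self.deleted.append(network_id)  # a real agent would remove flows/ports


bus = ToyBus()
agents = [AgentRpcCallback("compute1"), AgentRpcCallback("compute2")]
notifier = NotifierApi(bus, "q-agent-notifier")
for agent in agents:
    bus.subscribe(notifier.topic_network_delete, agent)
notifier.network_delete("net-1")
print(notifier.topic_network_delete)  # q-agent-notifier-network-delete
```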

Extension

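The Extension API extends Neutron in two ways:

- It can add attributes to network/port/subnet, for example new attributes on ports.
- It can add new resources, such as VPNaaS (covered in later chapters).

Extension API definitions are in the neutron/extensions directory. Their base classes and shared code are in neutron/api/extensions.py and neutron_lib/api/extensions.py.

neutron_lib.api.extensions.ExtensionDescriptor is the base class of all Extension APIs. An extension that adds new resources implements get_resources(); one that extends existing resources implements get_extended_resources(). The relevant definitions are: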

def get_resources(self):
    """List of extensions.ResourceExtension extension objects.

    Resources define new nouns, and are accessible through URLs.
    """
    return []

def get_extended_resources(self, version):
    """Retrieve extended resources or attributes for core resources.

    Extended attributes are implemented by a core plugin similarly
    to the attributes defined in the core, and can appear in
    request and response messages. Their names are scoped with the
    extension's prefix. The core API version is passed to this
    function, which must return a
    map[<resource_name>][<attribute_name>][<attribute_property>]
    specifying the extended resource attribute properties required
    by that API version.

    Extension can add resources and their attr definitions too.
    The returned map can be integrated into RESOURCE_ATTRIBUTE_MAP.
    """
    return {}

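Extension drivers

Networking follows the seven-layer OSI model, and the layers are not completely independent; for example, Neutron's DNS service is associated with networks and ports. Extension drivers establish the relationships between Neutron's L2 resources and other resources. When an L2 resource is created, updated or deleted, the corresponding extension driver runs and updates the related resources. Extension drivers also report these associations back to the ML2 plugin, so that, for example, a user viewing a port can see its security group. The ML2 plugin interacts with the configured extension drivers through neutron.plugins.ml2.managers.ExtensionManager.

Extension drivers are configured in ml2_conf.ini, for example:

extension_drivers = port_security

Available extensions

The extension_drivers entry points in neutron/setup.cfg list the available extensions: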

neutron.ml2.extension_drivers =
    test = neutron.tests.unit.plugins.ml2.drivers.ext_test:TestExtensionDriver
    testdb = neutron.tests.unit.plugins.ml2.drivers.ext_test:TestDBExtensionDriver
    port_security = neutron.plugins.ml2.extensions.port_security:PortSecurityExtensionDriver
    qos = neutron.plugins.ml2.extensions.qos:QosExtensionDriver
    dns = neutron.plugins.ml2.extensions.dns_integration:DNSExtensionDriverML2
    data_plane_status = neutron.plugins.ml2.extensions.data_plane_status:DataPlaneStatusExtensionDriver
    dns_domain_ports = neutron.plugins.ml2.extensions.dns_integration:DNSDomainPortsExtensionDriver

The concrete extended attributes are defined in the corresponding files under neutron/extensions. For example, the extended attributes in portsecurity.py are:

RESOURCE_ATTRIBUTE_MAP = {
    network.COLLECTION_NAME: {
        PORTSECURITY: {'allow_post': True, 'allow_put': True,
                       'convert_to': converters.convert_to_boolean,
                       'enforce_policy': True,
                       'default': DEFAULT_PORT_SECURITY,
                       'is_visible': True},
    },
    port.COLLECTION_NAME: {
        PORTSECURITY: {'allow_post': True, 'allow_put': True,
                       'convert_to': converters.convert_to_boolean,
                       'default': constants.ATTR_NOT_SPECIFIED,
                       'enforce_policy': True,
                       'is_visible': True},
    }
}
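Roughly, an attribute map like this becomes part of the REST API in two steps. It is merged into the core resource's attribute map, and then each POST body is filled in using `allow_post`, `convert_to` and `default`. The sketch below covers only those properties. Neutron's real API layer also handles validation, policy enforcement and visibility. Everything in the sketch is a simplified stand-in defined locally: `ATTR_NOT_SPECIFIED` is a sentinel, `convert_to_boolean` accepts fewer inputs than neutron_lib's converter, and the helper names are hypothetical.

```python
ATTR_NOT_SPECIFIED = object()  # local stand-in for constants.ATTR_NOT_SPECIFIED


def convert_to_boolean(value):
    # Simplified converter: bools and a few common strings only
    if isinstance(value, bool):
        return value
    if isinstance(value, str) and value.lower() in ("true", "1"):
        return True
    if isinstance(value, str) and value.lower() in ("false", "0"):
        return False
    raise ValueError(f"'{value}' cannot be converted to boolean")


CORE_ATTRS = {"ports": {"name": {"allow_post": True, "default": ""}}}
PORTSEC_ATTRS = {"ports": {"port_security_enabled": {
    "allow_post": True, "convert_to": convert_to_boolean,
    "default": ATTR_NOT_SPECIFIED}}}


def extend_attribute_map(core, extended):
    # Copy one level deep so the core map itself is not modified
    merged = {resource: dict(attrs) for resource, attrs in core.items()}
    for resource, attrs in extended.items():
        merged.setdefault(resource, {}).update(attrs)
    return merged


def fill_post_defaults(attr_map, resource, body):
    result = {}
    for name, spec in attr_map[resource].items():
        if name in body:
            if not spec.get("allow_post"):
                raise ValueError(f"{name} cannot be set on create")
            convert = spec.get("convert_to")
            result[name] = convert(body[name]) if convert else body[name]
        else:
            result[name] = spec.get("default")
    return result


attr_map = extend_attribute_map(CORE_ATTRS, PORTSEC_ATTRS)
port = fill_post_defaults(attr_map, "ports",
                          {"name": "vm1", "port_security_enabled": "false"})
print(port)  # {'name': 'vm1', 'port_security_enabled': False}
```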

Port binding

By the definition of extended resources, port binding only extends ports without introducing a new resource, so it only needs to implement get_extended_resources():

# neutron.extensions.portbindings.Portbindings
class Portbindings(extensions.APIExtensionDescriptor):
    """Extension class supporting port bindings.

    This class is used by neutron's extension framework to make
    metadata about the port bindings available to external applications.

    With admin rights one will be able to update and read the values.
    """

    # The extended attributes come from the API definition:
    # neutron_lib.api.definitions.portbindings.RESOURCE_ATTRIBUTE_MAP
    api_definition = portbindings

# neutron_lib.api.definitions.portbindings.RESOURCE_ATTRIBUTE_MAP
# These attributes are exposed with the "binding:" prefix, e.g. binding:host_id
RESOURCE_ATTRIBUTE_MAP = {
    COLLECTION_NAME: {
        # "unbound" before the port is bound;
        # "binding_failed" if host_id is set but binding fails;
        # the concrete VIF type after a successful binding
        VIF_TYPE: {'allow_post': False, 'allow_put': False,
                   'default': constants.ATTR_NOT_SPECIFIED,
                   'enforce_policy': True,
                   'is_visible': True},
        # More detailed information and capabilities, e.g. port_filter
        # (security groups or anti-MAC/IP spoofing)
        VIF_DETAILS: {'allow_post': False, 'allow_put': False,
                      'default': constants.ATTR_NOT_SPECIFIED,
                      'enforce_policy': True,
                      'is_visible': True},
        # normal: virtual NIC
        # direct: SR-IOV NIC passed through directly to the VM
        # macvtap: one of Neutron's SR-IOV implementations
        VNIC_TYPE: {'allow_post': True, 'allow_put': True,
                    'default': VNIC_NORMAL,
                    'is_visible': True,
                    'validate': {'type:values': VNIC_TYPES},
                    'enforce_policy': True},
        # ID of the host the port is bound to, set by Nova
        HOST_ID: {'allow_post': True, 'allow_put': True,
                  'default': constants.ATTR_NOT_SPECIFIED,
                  'is_visible': True,
                  'enforce_policy': True},
        # Dictionary of extra binding information supplied by the caller
        # (e.g. PCI details for SR-IOV ports)
        PROFILE: {'allow_post': True, 'allow_put': True,
                  'default': constants.ATTR_NOT_SPECIFIED,
                  'enforce_policy': True,
                  'validate': {'type:dict_or_none': None},
                  'is_visible': True},
    }
}

# Base class neutron_lib.api.extensions.APIExtensionDescriptor, which
# implements get_extended_resources()

class APIExtensionDescriptor(ExtensionDescriptor):
    """Base class that defines the contract for extensions.

    Concrete implementations of API extensions should first provide
    an API definition in neutron_lib.api.definitions. The API
    definition module (object reference) can then be specified as a
    class level attribute on the concrete extension.

    For example::

        from neutron_lib.api.definitions import provider_net
        from neutron_lib.api import extensions


        class Providernet(extensions.APIExtensionDescriptor):
            api_definition = provider_net
            # nothing else needed if default behavior is acceptable

    If extension implementations need to override the default behavior of
    this class they can override the respective method directly.
    """

    def get_extended_resources(self, version):
        """Retrieve the resource attribute map for the API definition."""
        if version == "2.0":
            self._assert_api_definition('RESOURCE_ATTRIBUTE_MAP')
            return self.api_definition.RESOURCE_ATTRIBUTE_MAP
        else:
            return {}

# Port binding constants from neutron_lib.api.definitions.portbindings
# VIF_TYPE: vif_types are required by Nova to determine which vif_driver to
#           use to attach a virtual server to the network

#  - vhost-user: The vhost-user interface type is a standard virtio interface
#                provided by qemu 2.1+. This constant defines the neutron side
#                of the vif binding type to provide a common definition
#                to enable reuse in multiple agents and drivers.
VIF_TYPE_VHOST_USER = 'vhostuser'
VIF_TYPE_UNBOUND = 'unbound'
VIF_TYPE_BINDING_FAILED = 'binding_failed'
VIF_TYPE_DISTRIBUTED = 'distributed'
VIF_TYPE_OVS = 'ovs'
VIF_TYPE_BRIDGE = 'bridge'
VIF_TYPE_OTHER = 'other'
VIF_TYPE_TAP = 'tap'
# vif_type_macvtap: Tells Nova that the macvtap vif_driver should be used to
#                   create a vif. It does not require the VNIC_TYPE_MACVTAP,
#                   which is defined further below. E.g. Macvtap agent uses
#                   vnic_type 'normal'.
VIF_TYPE_MACVTAP = 'macvtap'
# vif_type_agilio_ovs: Tells Nova that the Agilio OVS vif_driver should be
#                      used to create a vif. In addition to the normal OVS
#                      vif types exposed, VNIC_DIRECT and
#                      VNIC_VIRTIO_FORWARDER are supported.
VIF_TYPE_AGILIO_OVS = 'agilio_ovs'
# SR-IOV VIF types
VIF_TYPE_HW_VEB = 'hw_veb'
VIF_TYPE_HOSTDEV_PHY = 'hostdev_physical'

# VNIC_TYPE: It's used to determine which mechanism driver to use to bind a
#            port. It can be specified via the Neutron API. Default is normal,
#            used by OVS and LinuxBridge agent.
VNIC_NORMAL = 'normal'
VNIC_DIRECT = 'direct'
VNIC_MACVTAP = 'macvtap'
VNIC_BAREMETAL = 'baremetal'
VNIC_DIRECT_PHYSICAL = 'direct-physical'
VNIC_VIRTIO_FORWARDER = 'virtio-forwarder'
VNIC_TYPES = [VNIC_NORMAL, VNIC_DIRECT, VNIC_MACVTAP, VNIC_BAREMETAL,
              VNIC_DIRECT_PHYSICAL, VNIC_VIRTIO_FORWARDER]

Port binding flow

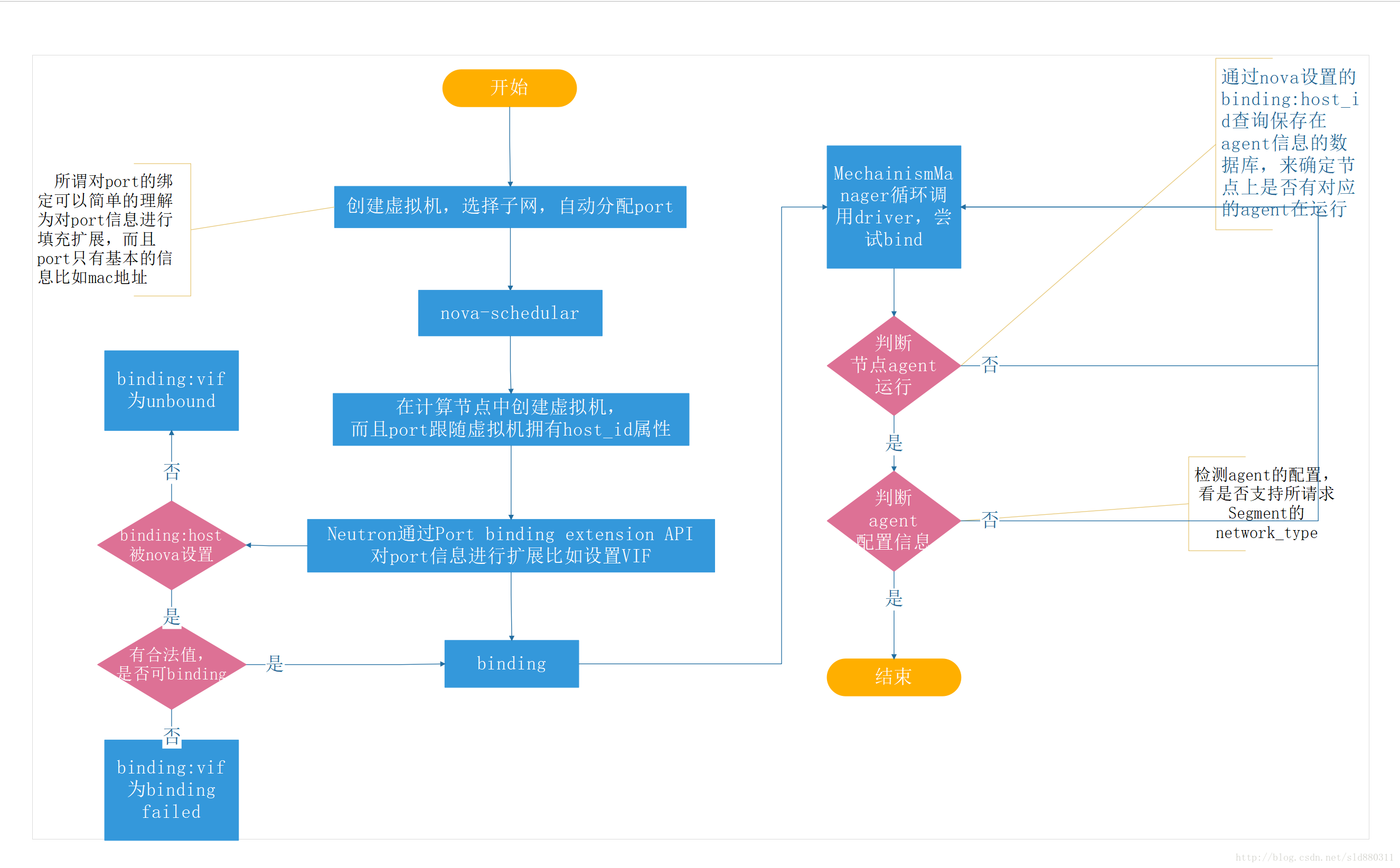

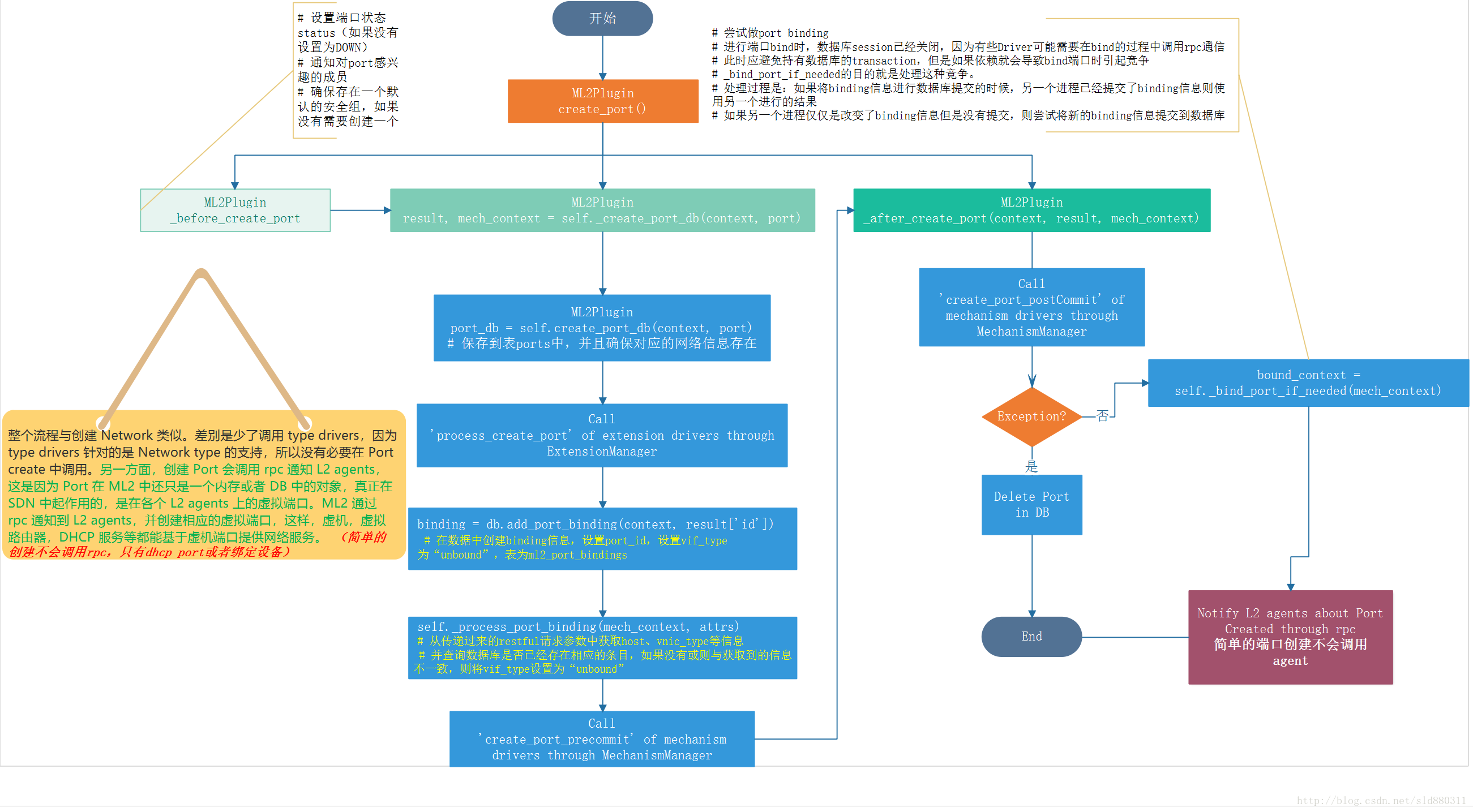

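Binding a port can be loosely understood as filling in and extending the port's information. A newly created port has only a little information, such as its MAC address. When Nova creates a VM and adds network information to it, Nova assigns a port to the VM and uses nova-scheduler to choose a compute node for it. The port effectively follows the new VM to that compute node, so it acquires a host_id attribute indicating which compute node it resides on.

Neutron extends the port's information through the Port Binding extension API, for example by setting the VIF (virtual network interface) type. Nova uses the VIF_TYPE set by Neutron to create the VIF and related bridges through lower-level libraries such as libvirt.

Before Nova sets binding:host_id, binding:vif_type should be "unbound". If binding:host_id has a valid value but the binding cannot be created, binding:vif_type should be "binding_failed".

When a port is bound, the MechanismManager calls each driver in turn to attempt the binding, that is, to set the port binding extension attributes. The ovs and linuxbridge drivers use the binding:host_id set by Nova to query the agent database and check whether a matching agent is running on that node. If one is, they check the agent's configuration to see whether it supports the network_type of the requested segment. As soon as one driver succeeds, the remaining drivers are not called.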
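The binding rule just described can be sketched in plain Python. The drivers are tried in order, and an agent-based driver binds only if a live agent of its type runs on the host and supports one of the segment types. Everything here is hypothetical: `AgentMechDriver` and `bind_port` are not the Neutron classes, and the agent database is a dict. The agent type strings "Open vSwitch agent" and "Linux bridge agent" match Neutron's agent type names, and the supported types follow the list given earlier in this section.

```python
VIF_TYPE_UNBOUND = "unbound"
VIF_TYPE_BINDING_FAILED = "binding_failed"


class AgentMechDriver:
    """Toy agent-based driver: binds only if a suitable agent is on the host."""

    def __init__(self, agent_type, vif_type, supported_types, agents_by_host):
        self.agent_type = agent_type
        self.vif_type = vif_type
        self.supported_types = set(supported_types)
        self.agents_by_host = agents_by_host  # host -> set of live agent types

    def try_bind(self, host, segments):
        if self.agent_type not in self.agents_by_host.get(host, set()):
            return None  # no matching agent running on this host
        for segment in segments:
            if segment["network_type"] in self.supported_types:
                return {"vif_type": self.vif_type, "segment": segment}
        return None  # agent cannot handle any of the segments


def bind_port(host, segments, drivers):
    if not host:
        return {"vif_type": VIF_TYPE_UNBOUND}  # Nova has not set host_id yet
    for driver in drivers:  # configured order; the first success wins
        result = driver.try_bind(host, segments)
        if result is not None:
            return result
    return {"vif_type": VIF_TYPE_BINDING_FAILED}


agents = {"compute1": {"Open vSwitch agent"}, "compute2": {"Linux bridge agent"}}
ovs = AgentMechDriver("Open vSwitch agent", "ovs",
                      ["local", "flat", "vlan", "vxlan", "gre"], agents)
lb = AgentMechDriver("Linux bridge agent", "bridge",
                     ["local", "flat", "vlan", "vxlan"], agents)
gre = [{"network_type": "gre", "physical_network": None, "segmentation_id": 7}]
print(bind_port("compute1", gre, [ovs, lb])["vif_type"])  # ovs
print(bind_port("compute2", gre, [ovs, lb])["vif_type"])  # binding_failed
```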

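When binding happens

The plugin's main concern is choosing when to bind a port:

- When a port is created in create_port(), it is bound immediately if enough information is provided.
- When update_port() changes the port's information, the port must be re-bound.
- When an agent fetches port information from the plugin over RPC, the plugin also tries to bind the port.

The last case covers another process having updated the port without binding it. Without it, the agent could not obtain the latest binding information.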

Code analysis

ML2Plugin

@utils.transaction_guard
@db_api.retry_if_session_inactive()
def create_port(self, context, port):
    # Set the port status (DOWN if not set),
    # notify parties interested in ports,
    # and make sure a default security group exists, creating one if needed
    self._before_create_port(context, port)
    # Database operations and some clean-up of the port binding
    result, mech_context = self._create_port_db(context, port)
    # Follow-up processing after the port has been created
    return self._after_create_port(context, result, mech_context)

def _create_port_db(self, context, port):
    attrs = port[port_def.RESOURCE_NAME]
    with db_api.context_manager.writer.using(context):
        # Get the DHCP-related options
        dhcp_opts = attrs.get(edo_ext.EXTRADHCPOPTS, [])
        # Save to the ports table, making sure the corresponding network exists
        port_db = self.create_port_db(context, port)
        # Build the data to return
        result = self._make_port_dict(port_db, process_extensions=False)
        # Call process_create_port of every extension driver
        self.extension_manager.process_create_port(context, attrs, result)
        self._portsec_ext_port_create_processing(context, result, port)

        # sgids must be got after portsec checked with security group
        sgids = self._get_security_groups_on_port(context, port)
        self._process_port_create_security_group(context, result, sgids)
        network = self.get_network(context, result['network_id'])
        # Create the binding record (table ml2_port_bindings) with this
        # port_id and vif_type "unbound"
        binding = db.add_port_binding(context, result['id'])
        mech_context = driver_context.PortContext(self, context, result,
                                                  network, binding, None)
        # Read host, vnic_type, etc. from the REST request parameters and
        # compare them with the stored binding; if there is no entry or it
        # differs from the request, vif_type is set to "unbound"
        self._process_port_binding(mech_context, attrs)

        result[addr_pair.ADDRESS_PAIRS] = (
            self._process_create_allowed_address_pairs(
                context, result,
                attrs.get(addr_pair.ADDRESS_PAIRS)))
        self._process_port_create_extra_dhcp_opts(context, result,
                                                  dhcp_opts)
        kwargs = {'context': context, 'port': result}
        registry.notify(
            resources.PORT, events.PRECOMMIT_CREATE, self, **kwargs)
        self.mechanism_manager.create_port_precommit(mech_context)
        self._setup_dhcp_agent_provisioning_component(context, result)

    resource_extend.apply_funcs('ports', result, port_db)
    return result, mech_context

def _after_create_port(self, context, result, mech_context):
    # notify any plugin that is interested in port create events
    kwargs = {'context': context, 'port': result}
    registry.notify(resources.PORT, events.AFTER_CREATE, self, **kwargs)
    try:
        self.mechanism_manager.create_port_postcommit(mech_context)
    except ml2_exc.MechanismDriverError:
        with excutils.save_and_reraise_exception():
            LOG.error("mechanism_manager.create_port_postcommit "
                      "failed, deleting port '%s'", result['id'])
            self.delete_port(context, result['id'], l3_port_check=False)
    try:
        # Attempt port binding.
        # The DB session is already closed when the port is bound, because
        # some drivers may need RPC calls during binding and must not hold a
        # DB transaction then; without the transaction, however, binding can race.
        # _bind_port_if_needed handles this race: if another process has
        # already committed binding information when this binding is
        # committed, that process's result is used; if the other process only
        # changed the binding without committing it, this process tries to
        # commit its new binding to the database.
        bound_context = self._bind_port_if_needed(mech_context)
    except ml2_exc.MechanismDriverError:
        with excutils.save_and_reraise_exception():
            LOG.error("_bind_port_if_needed "
                      "failed, deleting port '%s'", result['id'])
            self.delete_port(context, result['id'], l3_port_check=False)
    return bound_context.current

@db_api.retry_db_errors
def _bind_port_if_needed(self, context, allow_notify=False,
                         need_notify=False):
    if not context.network.network_segments:
        LOG.debug("Network %s has no segments, skipping binding",
                  context.network.current['id'])
        return context
    for count in range(1, MAX_BIND_TRIES + 1):
        if count > 1:
            # yield for binding retries so that we give other threads a
            # chance to do their work
            greenthread.sleep(0)

            # multiple attempts shouldn't happen very often so we log each
            # attempt after the 1st.
            LOG.info("Attempt %(count)s to bind port %(port)s",
                     {'count': count, 'port': context.current['id']})

        # Attempt binding
        bind_context, need_notify, try_again = self._attempt_binding(
            context, need_notify)

        if count == MAX_BIND_TRIES or not try_again:
            if self._should_bind_port(context):
                # At this point, we attempted to bind a port and reached
                # its final binding state. Binding either succeeded or
                # exhausted all attempts, thus no need to try again.
                # Now, the port and its binding state should be committed.
                context, need_notify, try_again = (
                    self._commit_port_binding(context, bind_context,
                                              need_notify, try_again))
            else:
                context = bind_context

        if not try_again:
            if allow_notify and need_notify:
                # Notify that the port has been updated
                self._notify_port_updated(context)
            return context

    LOG.error("Failed to commit binding results for %(port)s "
              "after %(max)s tries",
              {'port': context.current['id'], 'max': MAX_BIND_TRIES})
    return context

# Returns True (the port should be bound) when the binding has a host and
# vif_type is "unbound" or "binding_failed"
def _should_bind_port(self, context):
    return (context._binding.host and context._binding.vif_type
            in (portbindings.VIF_TYPE_UNBOUND,
                portbindings.VIF_TYPE_BINDING_FAILED))

    # If the attempt fails, try_again is True and the caller binds again;
    # on success, try_again stays False and need_notify becomes True
    def _attempt_binding(self, context, need_notify):
        try_again = False

        if self._should_bind_port(context):
            bind_context = self._bind_port(context)

            if bind_context.vif_type != portbindings.VIF_TYPE_BINDING_FAILED:
                # Binding succeeded. Suggest notifying of successful binding.
                need_notify = True
            else:
                # Current attempt binding failed, try to bind again.
                try_again = True
            context = bind_context

        return context, need_notify, try_again
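The decision made by `_should_bind_port` and `_attempt_binding` can be written as two pure functions. This is a minimal sketch with some simplifications:

- The binding is reduced to a host string and a vif_type string.
- `bind` is a hypothetical callable standing in for `_bind_port`.
- The result is normalized to a real `bool`. The Neutron expression instead returns the empty host value itself when there is no host.

```python
from typing import Callable, Optional, Tuple

VIF_TYPE_UNBOUND = "unbound"                # portbindings.VIF_TYPE_UNBOUND
VIF_TYPE_BINDING_FAILED = "binding_failed"  # portbindings.VIF_TYPE_BINDING_FAILED


def should_bind_port(host: Optional[str], vif_type: str) -> bool:
    """True when the port has a host and is unbound or previously failed."""
    return bool(host) and vif_type in (VIF_TYPE_UNBOUND,
                                       VIF_TYPE_BINDING_FAILED)


def attempt_binding(host: Optional[str], vif_type: str, need_notify: bool,
                    bind: Callable[[Optional[str]], str]) -> Tuple[str, bool, bool]:
    """Return (vif_type, need_notify, try_again) after at most one attempt."""
    try_again = False
    if should_bind_port(host, vif_type):
        vif_type = bind(host)
        if vif_type != VIF_TYPE_BINDING_FAILED:
            need_notify = True   # success: the port update should be notified
        else:
            try_again = True     # failure: the caller may retry
    return vif_type, need_notify, try_again


print(attempt_binding("compute1", "unbound", False, lambda h: "ovs"))
# ('ovs', True, False)
print(attempt_binding("compute1", "unbound", False, lambda h: "binding_failed"))
# ('binding_failed', False, True)
print(attempt_binding("compute1", "ovs", False, lambda h: "ovs"))
# ('ovs', False, False)
```

The third call shows an already-bound port: `should_bind_port` is false, so no attempt is made and nothing changes.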

    # Bind the port
    def _bind_port(self, orig_context):
        # Construct a new PortContext from the one from the previous
        # transaction.
        port = orig_context.current
        orig_binding = orig_context._binding
        new_binding = models.PortBinding(
            host=orig_binding.host,
            vnic_type=orig_binding.vnic_type,
            profile=orig_binding.profile,
            vif_type=portbindings.VIF_TYPE_UNBOUND,
            vif_details=''
        )
        self._update_port_dict_binding(port, new_binding)
        new_context = driver_context.PortContext(
            self, orig_context._plugin_context, port,
            orig_context.network.current, new_binding, None,
            original_port=orig_context.original)

        # Attempt to bind the port and return the context with the
        # result.
        # The MechanismManager binds the port and stores the binding
        # result in new_context
        self.mechanism_manager.bind_port(new_context)
        return new_context
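`_bind_port` does not reuse the binding loaded in the previous transaction. It copies the binding inputs (host, vnic_type, profile) and resets the outputs (vif_type, vif_details) before handing a new PortContext to the MechanismManager.

A minimal sketch of that copy-and-reset step follows. The frozen dataclass is a hypothetical stand-in for `models.PortBinding`.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PortBinding:
    """Hypothetical stand-in for the ml2 PortBinding model."""
    host: str
    vnic_type: str
    profile: str
    vif_type: str
    vif_details: str


def fresh_binding(orig: PortBinding) -> PortBinding:
    """Copy the binding inputs and reset the outputs, as _bind_port does."""
    return replace(orig, vif_type="unbound", vif_details="")


old = PortBinding(host="compute1", vnic_type="normal", profile="",
                  vif_type="binding_failed", vif_details='{"port_filter": true}')
new = fresh_binding(old)
print(new.host, new.vif_type, repr(new.vif_details), old.vif_type)
# compute1 unbound '' binding_failed
```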

MechanismManager

    # Try the registered mechanism drivers in order until one binds the port.
    def bind_port(self, context):
        """Attempt to bind a port using registered mechanism drivers.

        :param context: PortContext instance describing the port

        Called outside any transaction to attempt to establish a port
        binding.
        """
        binding = context._binding
        LOG.debug("Attempting to bind port %(port)s on host %(host)s "
                  "for vnic_type %(vnic_type)s with profile %(profile)s",
                  {'port': context.current['id'],
                   'host': context.host,
                   'vnic_type': binding.vnic_type,
                   'profile': binding.profile})
        # Reset the binding levels (self._binding_levels = [])
        context._clear_binding_levels()
        # The second argument, 0, starts port binding at level 0
        if not self._bind_port_level(context, 0,
                                     context.network.network_segments):
            binding.vif_type = portbindings.VIF_TYPE_BINDING_FAILED
            LOG.error("Failed to bind port %(port)s on host %(host)s "
                      "for vnic_type %(vnic_type)s using segments "
                      "%(segments)s",
                      {'port': context.current['id'],
                       'host': context.host,
                       'vnic_type': binding.vnic_type,
                       'segments': context.network.network_segments})

    # Recursive: binds from the given level downward until every level is
    # bound, and returns a falsy value if binding fails
    def _bind_port_level(self, context, level, segments_to_bind):
        binding = context._binding
        port_id = context.current['id']
        LOG.debug("Attempting to bind port %(port)s on host %(host)s "
                  "at level %(level)s using segments %(segments)s",
                  {'port': port_id,
                   'host': context.host,
                   'level': level,
                   'segments': segments_to_bind})

        if level == MAX_BINDING_LEVELS:
            LOG.error("Exceeded maximum binding levels attempting to bind "
                      "port %(port)s on host %(host)s",
                      {'port': context.current['id'],
                       'host': context.host})
            return False

        # Try every mechanism driver in the configured order
        for driver in self.ordered_mech_drivers:
            # Prevent binding loops: skip a driver that has already bound
            # one of these segments at a higher level
            if not self._check_driver_to_bind(driver, segments_to_bind,
                                              context._binding_levels):
                continue
            try:
                # _prepare_to_bind() sets:
                #   self._segments_to_bind = segments_to_bind
                #   self._new_bound_segment = None
                #   self._next_segments_to_bind = None
                context._prepare_to_bind(segments_to_bind)
                # Call the driver's bind_port(); for agent-based drivers
                # this is AgentMechanismDriverBase.bind_port()
                driver.obj.bind_port(context)
                # On success the driver sets PortContext._new_bound_segment
                # to the segment it just bound (via context.set_binding() in
                # SimpleAgentMechanismDriverBase.try_to_bind_segment_for_agent())
                segment = context._new_bound_segment
                if segment:
                    # Record this level on the context; the recorded levels
                    # are written to the ml2_port_binding_levels table when
                    # the binding is committed
                    context._push_binding_level(
                        models.PortBindingLevel(port_id=port_id,
                                                host=context.host,
                                                level=level,
                                                driver=driver.name,
                                                segment_id=segment))
                    # If the driver needs another level, it sets
                    # _next_segments_to_bind to the segment(s) it allocated
                    # dynamically, and binding continues at the next level
                    next_segments = context._next_segments_to_bind
                    if next_segments:
                        # Continue binding another level.
                        if self._bind_port_level(context, level + 1,
                                                 next_segments):
                            return True
                        else:
                            LOG.warning("Failed to bind port %(port)s on "
                                        "host %(host)s at level %(lvl)s",
                                        {'port': context.current['id'],
                                         'host': context.host,
                                         'lvl': level + 1})
                            context._pop_binding_level()
                    else:
                        # Binding complete.
                        LOG.debug("Bound port: %(port)s, "
                                  "host: %(host)s, "
                                  "vif_type: %(vif_type)s, "
                                  "vif_details: %(vif_details)s, "
                                  "binding_levels: %(binding_levels)s",
                                  {'port': port_id,
                                   'host': context.host,
                                   'vif_type': binding.vif_type,
                                   'vif_details': binding.vif_details,
                                   'binding_levels': context.binding_levels})
                        return True
            except Exception:
                LOG.exception("Mechanism driver %s failed in "
                              "bind_port",
                              driver.name)
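The recursion in `_bind_port_level` is easier to follow with toy drivers. In this example, a hypothetical top-of-rack driver binds a VXLAN segment and hands a dynamically allocated VLAN segment to the next level. A hypothetical host driver then finishes the binding.

The sketch assumes the following:

- Segments are plain strings.
- Each driver is a `(name, try_bind)` pair whose function returns `None` or `(bound_segment, next_segments)`.
- `MAX_BINDING_LEVELS = 10` is an assumed value.

The per-driver exception handling is omitted.

```python
from typing import Callable, List, Optional, Tuple

MAX_BINDING_LEVELS = 10  # assumed value; check neutron/plugins/ml2/managers.py

BindResult = Optional[Tuple[str, Optional[List[str]]]]
Driver = Tuple[str, Callable[[List[str]], BindResult]]
Level = Tuple[int, str, str]  # (level, driver name, bound segment)


def bind_port_level(drivers: List[Driver], level: int,
                    segments: List[str], levels: List[Level]) -> bool:
    """Bind `segments` at `level`, recursing while drivers ask for more."""
    if level == MAX_BINDING_LEVELS:
        return False
    for name, try_bind in drivers:
        # Loop guard: skip a driver that already bound one of these segments.
        if any(drv == name and seg in segments for _, drv, seg in levels):
            continue
        result = try_bind(segments)
        if result is None:
            continue                                  # driver declined
        bound_segment, next_segments = result
        levels.append((level, name, bound_segment))   # like _push_binding_level
        if not next_segments:
            return True                               # binding complete
        if bind_port_level(drivers, level + 1, next_segments, levels):
            return True
        levels.pop()                                  # like _pop_binding_level
    return False


def tor_driver(segments: List[str]) -> BindResult:
    """Hypothetical ToR driver: binds vxlan-100 and allocates vlan-5 below."""
    if "vxlan-100" in segments:
        return "vxlan-100", ["vlan-5"]
    return None


def host_driver(segments: List[str]) -> BindResult:
    """Hypothetical host driver: binds the first VLAN segment offered."""
    for seg in segments:
        if seg.startswith("vlan-"):
            return seg, None
    return None


levels: List[Level] = []
ok = bind_port_level([("tor", tor_driver), ("host", host_driver)],
                     0, ["vxlan-100"], levels)
print(ok, levels)
# True [(0, 'tor', 'vxlan-100'), (1, 'host', 'vlan-5')]
```

Suppose only `tor_driver` is registered. Level 1 then fails, and the level-0 entry is popped again. This mirrors `_pop_binding_level()` in the code above.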

MechanismDriver

    # Steps:
    # 1. Find this driver's agents on the port's host
    # 2. Only consider agents that are alive
    # 3. Let the concrete driver check each segment in turn; if a segment
    #    can serve the port, record the binding
    # 4. The binding result is returned to ML2Plugin through the PortContext
    def bind_port(self, context):
        LOG.debug("Attempting to bind port %(port)s on "
                  "network %(network)s",
                  {'port': context.current['id'],
                   'network': context.network.current['id']})
        # Read the port's binding:vnic_type (defaults to normal)
        vnic_type = context.current.get(portbindings.VNIC_TYPE,
                                        portbindings.VNIC_NORMAL)
        if vnic_type not in self.supported_vnic_types:
            LOG.debug("Refusing to bind due to unsupported vnic_type: %s",
                      vnic_type)
            return
        # Look up this driver's agents on the host by agent_type
        # (from the agents table)
        agents = context.host_agents(self.agent_type)
        if not agents:
            LOG.debug("Port %(pid)s on network %(network)s not bound, "
                      "no agent of type %(at)s registered on host %(host)s",
                      {'pid': context.current['id'],
                       'at': self.agent_type,
                       'network': context.network.current['id'],
                       'host': context.host})
        for agent in agents:
            LOG.debug("Checking agent: %s", agent)
            if agent['alive']:
                for segment in context.segments_to_bind:
                    # Try to bind this segment on this agent
                    if self.try_to_bind_segment_for_agent(context, segment,
                                                          agent):
                        LOG.debug("Bound using segment: %s", segment)
                        return
            else:
                LOG.warning("Refusing to bind port %(pid)s to dead agent: "
                            "%(agent)s",
                            {'pid': context.current['id'], 'agent': agent})
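The agent loop in `bind_port` reduces to three rules:

1. Refuse unsupported vNIC types.
2. Skip dead agents.
3. Take the first (agent, segment) pair the driver accepts.

The sketch below assumes the following:

- Agents and segments are plain dicts.
- `supported_vnic_types` defaults to `("normal",)`; each real driver declares its own.
- `can_bind` is a hypothetical predicate standing in for `try_to_bind_segment_for_agent`.

```python
from typing import Callable, List, Optional, Sequence, Tuple


def pick_agent_and_segment(
    vnic_type: str,
    agents: List[dict],
    segments: List[dict],
    can_bind: Callable[[dict, dict], bool],
    supported_vnic_types: Sequence[str] = ("normal",),
) -> Optional[Tuple[dict, dict]]:
    """Return the first (agent, segment) pair that can be bound, or None."""
    if vnic_type not in supported_vnic_types:
        return None                      # unsupported vNIC type: refuse
    for agent in agents:
        if not agent["alive"]:
            continue                     # never bind to a dead agent
        for segment in segments:
            if can_bind(segment, agent):
                return agent, segment    # first match wins
    return None


agents = [
    {"id": "agent-1", "host": "compute1", "alive": False},
    {"id": "agent-2", "host": "compute1", "alive": True},
]
segments = [{"id": "seg-1", "network_type": "vlan"}]
match = pick_agent_and_segment("normal", agents, segments,
                               lambda segment, agent: True)
print(match[0]["id"], match[1]["id"])
# agent-2 seg-1
```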

    def try_to_bind_segment_for_agent(self, context, segment, agent):
        # The segment's network type must be supported by the agent; for
        # flat and vlan segments the physical network must also match
        # what the agent has mapped
        if self.check_segment_for_agent(segment, agent):
            context.set_binding(segment[api.ID],
                                self.get_vif_type(context, agent, segment),
                                self.get_vif_details(context, agent, segment))
            return True
        else:
            return False
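For an Open vSwitch style driver, the check behind `try_to_bind_segment_for_agent` compares the segment with what the agent reported about itself. This is a simplified sketch with these assumptions:

- The agent dict carries `configurations.bridge_mappings` (physical network to bridge) and `configurations.tunnel_types`, as the OVS agent reports them.
- Segment keys are `network_type` and `physical_network`.

Other drivers read different mappings.

```python
TYPE_LOCAL = "local"
TYPE_FLAT = "flat"
TYPE_VLAN = "vlan"


def check_segment_for_agent(segment: dict, agent: dict) -> bool:
    """Simplified OVS-style check of one segment against one agent."""
    config = agent.get("configurations", {})
    mappings = config.get("bridge_mappings", {})        # physnet -> bridge
    allowed = set(config.get("tunnel_types", [])) | {TYPE_LOCAL, TYPE_FLAT,
                                                     TYPE_VLAN}
    network_type = segment["network_type"]
    if network_type not in allowed:
        return False                                     # type not supported
    if network_type in (TYPE_FLAT, TYPE_VLAN):
        # Physical networks must also be mapped on this agent's host.
        return segment.get("physical_network") in mappings
    return True


agent = {"alive": True,
         "configurations": {"bridge_mappings": {"public": "br-ex"},
                            "tunnel_types": ["vxlan"]}}
for seg in ({"network_type": "vlan", "physical_network": "public"},
            {"network_type": "flat", "physical_network": "default"},
            {"network_type": "vxlan", "physical_network": None},
            {"network_type": "gre", "physical_network": None}):
    print(seg["network_type"], seg["physical_network"],
          check_segment_for_agent(seg, agent))
# vlan public True
# flat default False
# vxlan None True
# gre None False
```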

How ML2Plugin works

Creating a network

REST request and response JSON reference

Request / Response Example

{
    "network": {
        "name": "sample_network",
        "admin_state_up": true,
        "qos_policy_id": "6a8454ade84346f59e8d40665f878b2e"
    }
}

{
    "network": {
        "admin_state_up": true,
        "availability_zone_hints": [],
        "availability_zones": [
            "nova"
        ],
        "created_at": "2016-03-08T20:19:41",
        "id": "4e8e5957-649f-477b-9e5b-f1f75b21c03c",
        "mtu": 1500,
        "name": "net1",
        "port_security_enabled": true,
        "project_id": "9bacb3c5d39d41a79512987f338cf177",
        "qos_policy_id": "6a8454ade84346f59e8d40665f878b2e",
        "router:external": false,
        "shared": false,
        "status": "ACTIVE",
        "subnets": [],
        "tenant_id": "9bacb3c5d39d41a79512987f338cf177",
        "updated_at": "2016-03-08T20:19:41",
        "vlan_transparent": false,
        "description": ""
    }
}

Request / Response Example (admin user; single segment mapping)

{
    "network": {
        "admin_state_up": true,
        "name": "net1",
        "provider:network_type": "vlan",
        "provider:physical_network": "public",
        "provider:segmentation_id": 2,
        "qos_policy_id": "6a8454ade84346f59e8d40665f878b2e"
    }
}

{
    "network": {
        "status": "ACTIVE",
        "subnets": [],
        "availability_zone_hints": [],
        "name": "net1",
        "provider:physical_network": "public",
        "admin_state_up": true,
        "project_id": "9bacb3c5d39d41a79512987f338cf177",
        "tenant_id": "9bacb3c5d39d41a79512987f338cf177",
        "qos_policy_id": "6a8454ade84346f59e8d40665f878b2e",
        "router:external": false,
        "provider:network_type": "vlan",
        "mtu": 1500,
        "shared": false,
        "id": "4e8e5957-649f-477b-9e5b-f1f75b21c03c",
        "provider:segmentation_id": 2,
        "description": ""
    }
}

Request / Response Example (admin user; multiple segment mappings)

{
    "network": {
        "segments": [
            {
                "provider:segmentation_id": 2,
                "provider:physical_network": "public",
                "provider:network_type": "vlan"
            },
            {
                "provider:physical_network": "default",
                "provider:network_type": "flat"
            }
        ],
        "name": "net1",
        "admin_state_up": true,
        "qos_policy_id": "6a8454ade84346f59e8d40665f878b2e"
    }
}

{
    "network": {
        "status": "ACTIVE",
        "subnets": [],
        "name": "net1",
        "admin_state_up": true,
        "project_id": "9bacb3c5d39d41a79512987f338cf177",
        "tenant_id": "9bacb3c5d39d41a79512987f338cf177",
        "qos_policy_id": "6a8454ade84346f59e8d40665f878b2e",
        "segments": [
            {
                "provider:segmentation_id": 2,
                "provider:physical_network": "public",
                "provider:network_type": "vlan"
            },
            {
                "provider:segmentation_id": null,
                "provider:physical_network": "default",
                "provider:network_type": "flat"
            }
        ],
        "shared": false,
        "id": "4e8e5957-649f-477b-9e5b-f1f75b21c03c",
        "description": ""
    }
}
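The request bodies above can also be built programmatically. This is a minimal sketch using only the Python standard library; it builds but does not send a `POST /v2.0/networks` request.

- The endpoint `http://controller:9696` and the token are placeholders.
- The mutual-exclusion check reflects one rule: the multi-provider extension rejects a body that sets both `segments` and `provider:*` attributes.

```python
import json
import urllib.request


def build_network_request(endpoint: str, token: str, name: str,
                          segments=None, **provider) -> urllib.request.Request:
    """Build (but do not send) a Neutron POST /v2.0/networks request.

    `provider` keys are given without the "provider:" prefix, e.g.
    network_type="vlan", physical_network="public", segmentation_id=2.
    """
    if segments and provider:
        # The multi-provider extension refuses bodies that set both.
        raise ValueError("set either 'segments' or provider:* attributes")
    network = {"name": name, "admin_state_up": True}
    if segments:
        network["segments"] = segments
    network.update({"provider:" + key: value for key, value in provider.items()})
    return urllib.request.Request(
        endpoint.rstrip("/") + "/v2.0/networks",
        data=json.dumps({"network": network}).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
        method="POST",
    )


req = build_network_request("http://controller:9696", "TOKEN", "net1",
                            network_type="vlan", physical_network="public",
                            segmentation_id=2)
print(req.get_method(), req.full_url)
# POST http://controller:9696/v2.0/networks
print(json.loads(req.data)["network"]["provider:segmentation_id"])
# 2
```

With a real endpoint and a valid Keystone token, the request could be sent with `urllib.request.urlopen(req)`. The response body would then resemble the examples above.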

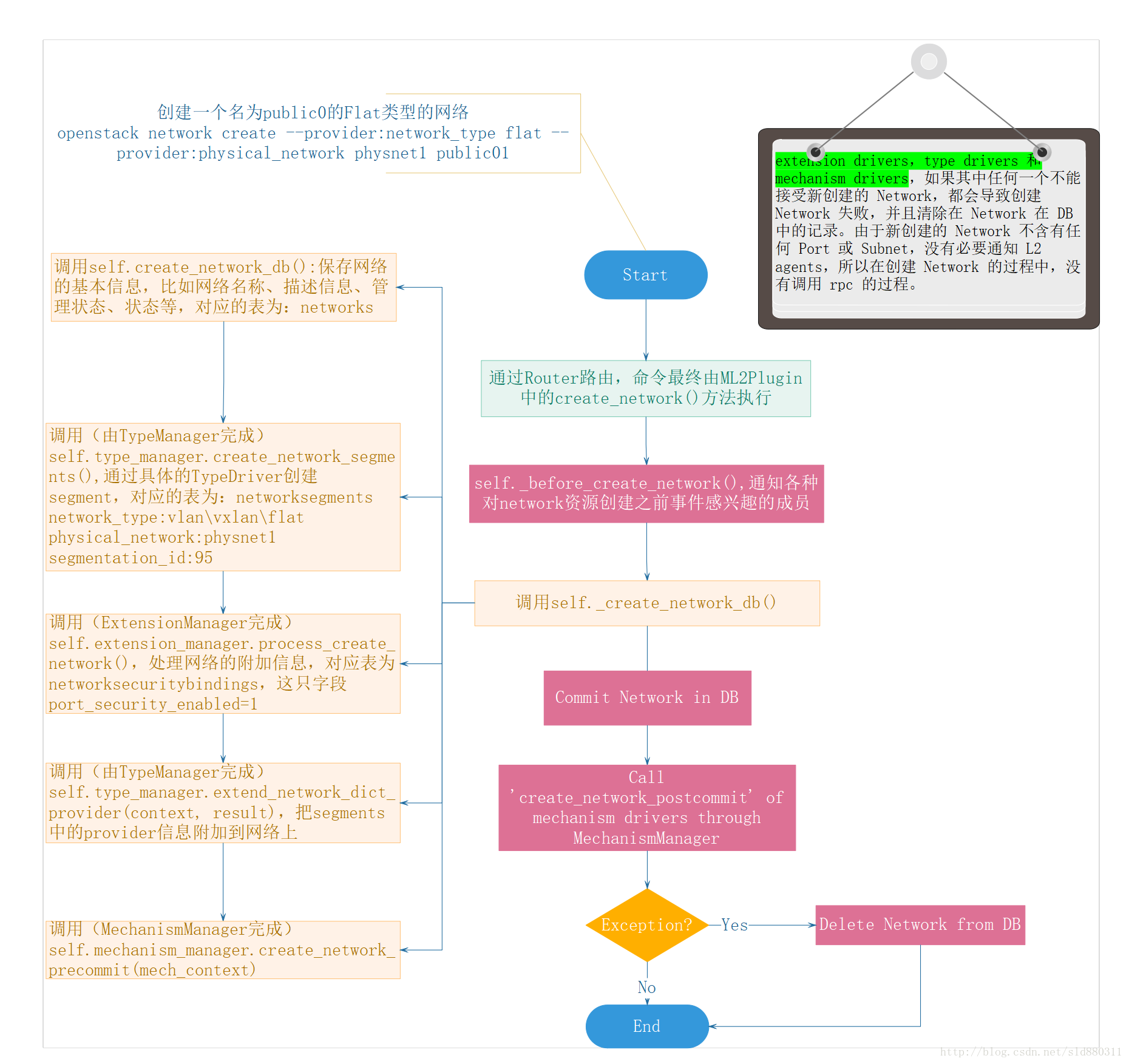

Creation flow

Creating a port

REST API

Request / Response Example

{
    "port": {
        "admin_state_up": true,
        "name": "private-port",
        "network_id": "a87cc70a-3e15-4acf-8205-9b711a3531b7"
    }
}

{
    "port": {
        "admin_state_up": true,
        "allowed_address_pairs": [],
        "created_at": "2016-03-08T20:19:41",
        "data_plane_status": null,
        "description": "",
        "device_id": "",
        "device_owner": "",
        "extra_dhcp_opts": [],
        "fixed_ips": [
            {
                "ip_address": "10.0.0.2",
                "subnet_id": "a0304c3a-4f08-4c43-88af-d796509c97d2"
            }
        ],
        "id": "65c0ee9f-d634-4522-8954-51021b570b0d",
        "mac_address": "fa:16:3e:c9:cb:f0",
        "name": "private-port",
        "network_id": "a87cc70a-3e15-4acf-8205-9b711a3531b7",
        "port_security_enabled": true,
        "project_id": "d6700c0c9ffa4f1cb322cd4a1f3906fa",
        "security_groups": [
            "f0ac4394-7e4a-4409-9701-ba8be283dbc3"
        ],
        "status": "DOWN",
        "tenant_id": "d6700c0c9ffa4f1cb322cd4a1f3906fa",
        "updated_at": "2016-03-08T20:19:41"
    }
}

Request / Response Example (admin user)

{
    "port": {
        "binding:host_id": "4df8d9ff-6f6f-438f-90a1-ef660d4586ad",
        "binding:profile": {
            "local_link_information": [
                {
                    "port_id": "Ethernet3/1",
                    "switch_id": "0a:1b:2c:3d:4e:5f",
                    "switch_info": "switch1"
                }
            ]
        },
        "binding:vnic_type": "baremetal",
        "device_id": "d90a13da-be41-461f-9f99-1dbcf438fdf2",
        "device_owner": "baremetal:none"
    }
}

{
    "port": {
        "admin_state_up": true,
        "allowed_address_pairs": [],
        "binding:host_id": "4df8d9ff-6f6f-438f-90a1-ef660d4586ad",
        "binding:profile": {
            "local_link_information": [
                {
                    "port_id": "Ethernet3/1",
                    "switch_id": "0a:1b:2c:3d:4e:5f",
                    "switch_info": "switch1"
                }
            ]
        },
        "binding:vif_details": {},
        "binding:vif_type": "unbound",
        "binding:vnic_type": "other",
        "data_plane_status": null,
        "description": "",
        "device_id": "d90a13da-be41-461f-9f99-1dbcf438fdf2",
        "device_owner": "baremetal:none",
        "fixed_ips": [
            {
                "ip_address": "10.0.0.2",
                "subnet_id": "a0304c3a-4f08-4c43-88af-d796509c97d2"
            }
        ],
        "id": "65c0ee9f-d634-4522-8954-51021b570b0d",
        "mac_address": "fa:16:3e:c9:cb:f0",
        "name": "private-port",
        "network_id": "a87cc70a-3e15-4acf-8205-9b711a3531b7",
        "project_id": "d6700c0c9ffa4f1cb322cd4a1f3906fa",
        "security_groups": [
            "f0ac4394-7e4a-4409-9701-ba8be283dbc3"
        ],
        "status": "DOWN",
        "tenant_id": "d6700c0c9ffa4f1cb322cd4a1f3906fa"
    }
}
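In the admin response, `binding:host_id` and `binding:vif_type` expose the two binding fields that `_should_bind_port` inspects. The sketch below applies the same test to a port's JSON representation.

- The function name is hypothetical.
- The strings are the values of `portbindings.VIF_TYPE_UNBOUND` and `VIF_TYPE_BINDING_FAILED`.
- For the non-admin response, which carries no `binding:*` fields, the sketch cannot tell and simply returns False.

```python
import json

VIF_TYPE_UNBOUND = "unbound"
VIF_TYPE_BINDING_FAILED = "binding_failed"


def port_needs_binding(port_json: str) -> bool:
    """Apply the _should_bind_port test to a port's API representation."""
    port = json.loads(port_json)["port"]
    host = port.get("binding:host_id")
    vif_type = port.get("binding:vif_type")
    return bool(host) and vif_type in (VIF_TYPE_UNBOUND,
                                       VIF_TYPE_BINDING_FAILED)


admin_view = ('{"port": {"binding:host_id": "4df8d9ff-6f6f-438f-90a1-'
              'ef660d4586ad", "binding:vif_type": "unbound"}}')
plain_view = '{"port": {"name": "private-port", "status": "DOWN"}}'
print(port_needs_binding(admin_view), port_needs_binding(plain_view))
# True False
```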

Creation flow

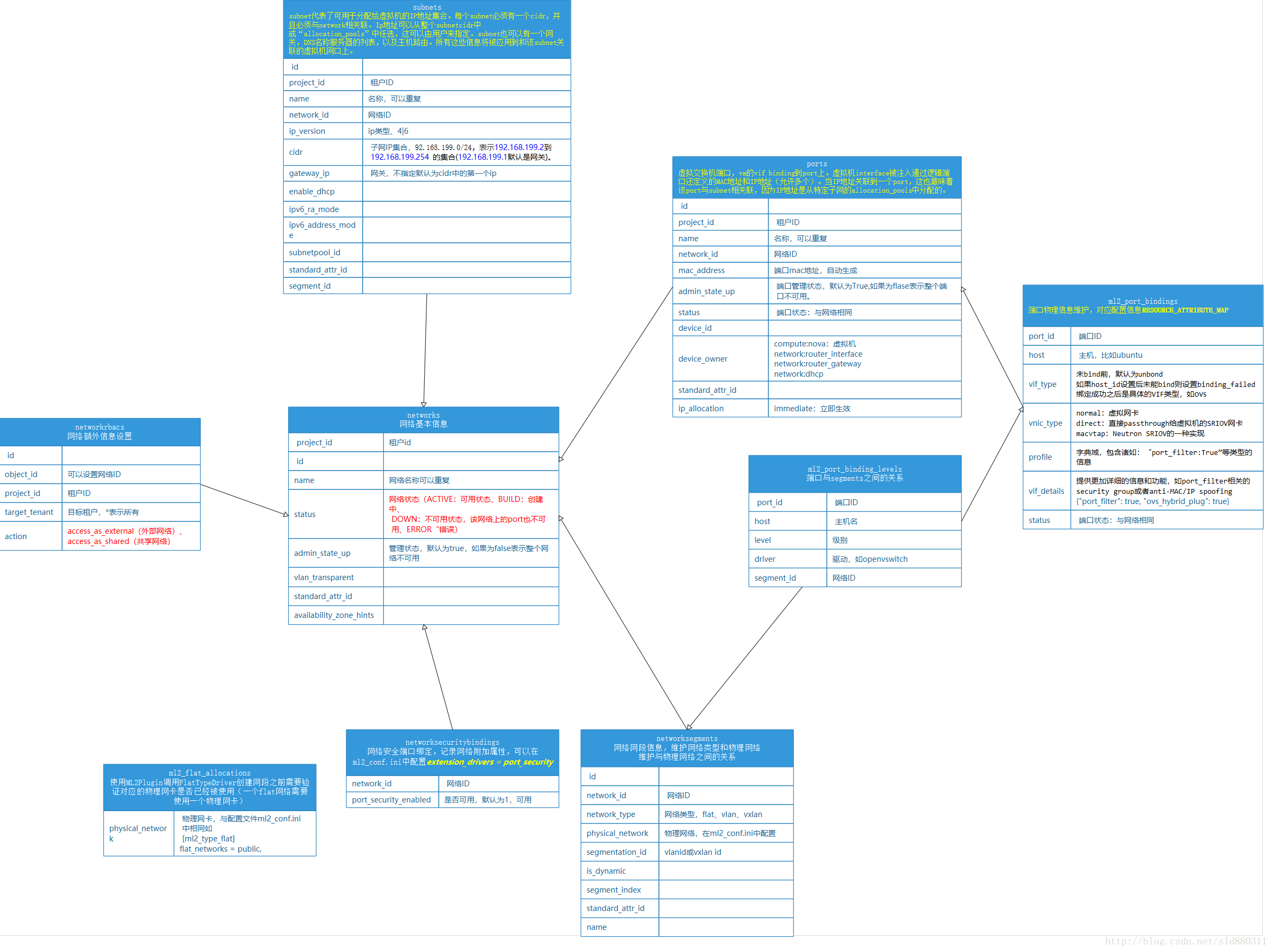

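Related table schemas

Summary

ML2 is a highly extensible framework: users and developers can extend it without modifying existing OpenStack Neutron code.

• To add a Neutron resource related to the L2 resources, there is no need to modify the existing L2 resource code; only an extension driver needs to be added.

• To support a new network type, only a type driver needs to be added.

• To support a new L2 technology, only a mechanism driver needs to be added.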
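ML2 finds these drivers by name through setuptools entry points, just as `setup.cfg` registers `neutron.core_plugins`.

- The ML2 namespaces are `neutron.ml2.type_drivers`, `neutron.ml2.mechanism_drivers` and `neutron.ml2.extension_drivers`.
- The drivers to load are then named in the `[ml2]` section of the ML2 configuration (`type_drivers`, `mechanism_drivers`, `extension_drivers`).

The small sketch below lists what is registered in the current Python environment. It returns empty lists when Neutron is not installed.

```python
from importlib import metadata
from typing import Dict, List

ML2_NAMESPACES = (
    "neutron.ml2.type_drivers",
    "neutron.ml2.mechanism_drivers",
    "neutron.ml2.extension_drivers",
)


def list_ml2_drivers() -> Dict[str, List[str]]:
    """Map each ML2 entry-point group to the driver names installed for it."""
    eps = metadata.entry_points()
    found: Dict[str, List[str]] = {}
    for group in ML2_NAMESPACES:
        if hasattr(eps, "select"):      # Python 3.10+
            selected = eps.select(group=group)
        else:                           # Python 3.8/3.9: dict keyed by group
            selected = eps.get(group, [])
        found[group] = sorted(ep.name for ep in selected)
    return found


for group, names in list_ml2_drivers().items():
    print(group, names)
```

A third-party mechanism driver would register itself under `neutron.ml2.mechanism_drivers` in its own package metadata. It would then be enabled by adding its name to `mechanism_drivers`.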

References

ML2 introduction: the official OpenStack Neutron wiki page on ML2.

REST API: the official OpenStack Networking API reference for request and response JSON.