The following steps were carried out on Windows.

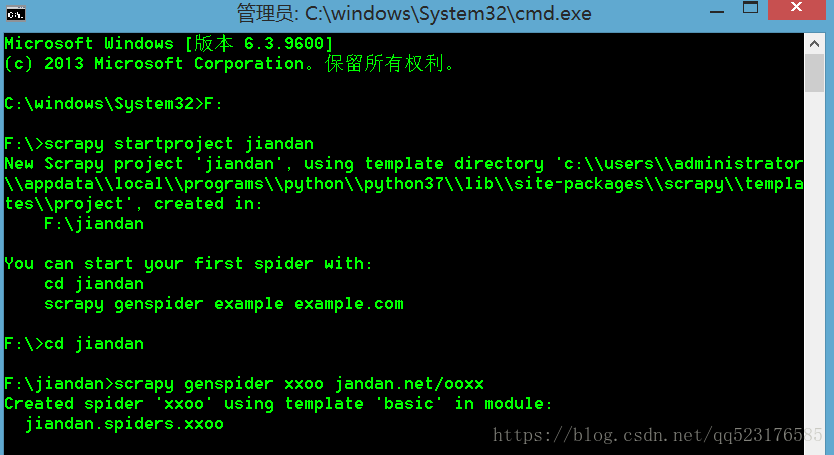

Open a CMD command prompt:

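Create a new project. The code below assumes a project named `jiandan` that contains a spider named `xxoo`. To produce that layout, run `scrapy startproject jiandan`, then run `scrapy genspider xxoo jandan.net` inside the new `jiandan` directory.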

Open the project's `settings.py` file and add the following code:

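```python
IMAGES_STORE = './XXOO'  # store downloaded images in an XXOO folder under the current directory
MAX_PAGE = 40  # total number of listing pages to crawl
```

Open the project's `middlewares.py` file and add the following code: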

```python
import random


class RandomUserAgentMiddleware:
    """Downloader middleware that sets a random User-Agent on every request."""

    def __init__(self):
        # Each entry is ONE User-Agent string written as two adjacent literals.
        # Python joins adjacent string literals only when no comma separates them;
        # a comma after the first half would split it into two incomplete entries.
        self.user_agents = [
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
            "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
            "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "
            "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "
            "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "
            "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "
            "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
            "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "
            "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "
            "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "
            "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        ]

    def process_request(self, request, spider):
        # Pick a random User-Agent for this request; returning None lets Scrapy continue
        request.headers['User-Agent'] = random.choice(self.user_agents)
```

Add the following code to `pipelines.py`:

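```python
from scrapy import Request
from scrapy.exceptions import DropItem
from scrapy.pipelines.images import ImagesPipeline


class JiandanPipeline(ImagesPipeline):
    def file_path(self, request, response=None, info=None, *, item=None):
        # Name each saved file after the last segment of its URL
        # (newer Scrapy versions also pass the item as a keyword argument)
        url = request.url
        file_name = url.split('/')[-1]
        return file_name

    def item_completed(self, results, item, info):
        # results is a list of (success, info) tuples; keep paths of successful downloads
        image_paths = [x['path'] for ok, x in results if ok]
        if not image_paths:
            raise DropItem('Image download failed')
        return item

    def get_media_requests(self, item, info):
        # Schedule a download for the image URL stored on the item
        yield Request(item['url'])
```

Add the following code to `settings.py`: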

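```python
# Enable the random User-Agent downloader middleware
DOWNLOADER_MIDDLEWARES = {
    'jiandan.middlewares.RandomUserAgentMiddleware': 543,
}

# Enable the image download pipeline
ITEM_PIPELINES = {
    'jiandan.pipelines.JiandanPipeline': 300,
}
```

Declare the item field in `items.py`: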

```python
from scrapy import Item, Field


class JiandanItem(Item):
    # URL of the image to download
    url = Field()
```

Add the following code to `xxoo.py`:

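```python
# -*- coding: utf-8 -*-
import base64

import scrapy

from jiandan.items import JiandanItem


class XxooSpider(scrapy.Spider):
    name = 'xxoo'

    def start_requests(self):
        # One request per listing page, from page 1 up to MAX_PAGE in settings.py
        max_page = self.settings.getint('MAX_PAGE')
        for page in range(1, max_page + 1):
            url = 'http://jandan.net/ooxx/page-{}#comments'.format(page)
            yield scrapy.Request(url, self.parse)

    def parse(self, response):
        # Each comment is an <li> with an id; its image URL is base64-encoded in .img-hash
        for li in response.css('li[id]'):
            encodeurl = li.css('.img-hash::text').extract_first()
            if not encodeurl:
                continue  # skip entries that carry no image hash
            # The hash decodes to a protocol-relative URL, so prepend the scheme
            decodeurl = 'http:' + base64.b64decode(encodeurl).decode()
            item = JiandanItem()
            item['url'] = decodeurl
            yield item
```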
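The spider relies on two small pieces of logic. It builds one listing URL per page up to `MAX_PAGE`, and it turns each `.img-hash` string into an image URL. The stdlib-only sketch below isolates both so they can be tried without running Scrapy. It assumes, as the spider does, that a hash decodes to a protocol-relative URL such as `//host/path.jpg`. The helper names `page_urls` and `decode_img_hash` are hypothetical.

```python
import base64
from typing import List, Optional


def page_urls(max_page: int) -> List[str]:
    """Listing-page URLs for pages 1..max_page, in the format the spider uses."""
    return ['http://jandan.net/ooxx/page-{}#comments'.format(page)
            for page in range(1, max_page + 1)]


def decode_img_hash(encoded: Optional[str]) -> Optional[str]:
    """Turn a base64 .img-hash value into an absolute http: URL.

    Returns None when the hash is missing or cannot be decoded, so the
    caller can skip that entry instead of crashing.
    """
    if not encoded:
        return None
    try:
        path = base64.b64decode(encoded).decode()
    except ValueError:  # covers binascii.Error (bad base64) and UnicodeDecodeError
        return None
    return 'http:' + path


if __name__ == '__main__':
    sample = base64.b64encode(b'//example.com/mw600/abc.jpg').decode()
    print(decode_img_hash(sample))  # http://example.com/mw600/abc.jpg
    print(len(page_urls(40)))       # 40
```

Calling `base64.b64decode(None)` raises `TypeError`. Skipping `None` results is therefore one way to keep a single malformed entry from aborting the whole page.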

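In `RandomUserAgentMiddleware`, each User-Agent is written as two adjacent string literals. Python joins adjacent literals into one string only when no comma separates them. A comma turns the halves into two separate, incomplete list entries. The stdlib-only sketch below shows that difference. It also runs the middleware's `process_request` logic against `FakeRequest`, a hypothetical stand-in (not a Scrapy class) that has only a `headers` dict. `RandomUserAgentSketch` is likewise a hypothetical copy of the middleware that takes its list as an argument.

```python
import random

# Halves separated by a comma: two list entries, neither a complete User-Agent
split_list = [
    "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 ",
    "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
]

# Same halves without a comma: Python concatenates them into one entry
joined_list = [
    "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
]


class FakeRequest:
    """Hypothetical stand-in for scrapy.Request; only the headers dict matters here."""

    def __init__(self, url):
        self.url = url
        self.headers = {}


class RandomUserAgentSketch:
    """Same process_request logic as the middleware, with the UA list passed in."""

    def __init__(self, user_agents):
        self.user_agents = user_agents

    def process_request(self, request, spider):
        request.headers['User-Agent'] = random.choice(self.user_agents)
        return None  # None means: keep processing this request normally


if __name__ == '__main__':
    print(len(split_list), len(joined_list))  # 2 1
    req = FakeRequest('http://jandan.net/ooxx/page-1#comments')
    RandomUserAgentSketch(joined_list).process_request(req, spider=None)
    print(req.headers['User-Agent'])
```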
Run the command:

```
scrapy crawl xxoo
```

The images start downloading almost immediately, and the download is very fast.
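After the crawl, the images sit in `./XXOO` under the names chosen by `JiandanPipeline.file_path`, which keeps only the last URL segment. `item_completed` then drops any item whose downloads all failed. The stdlib-only sketch below mirrors that logic outside Scrapy. It assumes `results` is a list of `(ok, info)` tuples whose `info` carries a `'path'` key on success, which is how the pipeline code reads it. Unlike `url.split('/')[-1]`, it also drops any `?query` part from the file name. The helper names and `DropItemSketch` are hypothetical.

```python
import posixpath
from typing import Any, List, Tuple
from urllib.parse import urlsplit


def file_name_from_url(url: str) -> str:
    """Last path segment of the URL, ignoring any ?query or #fragment."""
    return posixpath.basename(urlsplit(url).path)


def successful_paths(results: List[Tuple[bool, Any]]) -> List[str]:
    """Paths of the downloads that succeeded; failed entries are never read."""
    return [info['path'] for ok, info in results if ok]


class DropItemSketch(Exception):
    """Hypothetical stand-in for scrapy.exceptions.DropItem."""


def check_item(item: dict, results: List[Tuple[bool, Any]]) -> dict:
    """Keep the item only if at least one image was stored, like item_completed."""
    if not successful_paths(results):
        raise DropItemSketch('Image download failed')
    return item


if __name__ == '__main__':
    print(file_name_from_url('http://example.com/mw600/abc.jpg?size=large'))  # abc.jpg
```

Files are named only by their last URL segment, so two different images that share a file name overwrite each other in `./XXOO`. The stock `ImagesPipeline.file_path` avoids this by naming files after a SHA1 hash of the full URL.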

The result is shown below: