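The conceptual material below is taken from the official website:

TiDB introduction:

TiDB is an open-source distributed HTAP (Hybrid Transactional and Analytical Processing) database designed by PingCAP. It combines the best features of traditional RDBMS and NoSQL. TiDB is compatible with MySQL, supports unlimited horizontal scaling, and offers strong consistency and high availability. Its goal is to provide a one-stop solution for both OLTP (Online Transactional Processing) and OLAP (Online Analytical Processing) scenarios.

TiDB overall architecture: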

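To understand TiDB's horizontal scaling and high availability in depth, you first need to understand its overall architecture. A TiDB cluster consists of three core components: TiDB Server, PD Server, and TiKV Server. In addition, the TiSpark component addresses users' complex OLAP needs.

TiDB Server

TiDB Server receives SQL requests, handles the SQL-related logic, uses PD to find the TiKV addresses that hold the data needed for the computation, exchanges data with TiKV, and finally returns the result. TiDB Server is stateless: it stores no data itself and is responsible only for computation. It can scale horizontally without limit and can expose a single access address through a load balancer such as LVS, HAProxy, or F5.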
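Because TiDB Server keeps no state, any instance can serve any request, which is what makes a simple load-balancing policy workable. The following is a minimal Python sketch of round-robin selection, not how LVS, HAProxy, or F5 are implemented. The server names `tidb-0` to `tidb-2` are hypothetical; port 4000 is the SQL port published later in this post.

```python
from itertools import cycle


def round_robin(backends):
    """Return a function that hands out backends in rotating order."""
    if not backends:
        raise ValueError("at least one backend is required")
    ring = cycle(list(backends))  # copy so later changes to the input do not matter
    return lambda: next(ring)


# Hypothetical TiDB Server addresses; a real balancer would also health-check them.
tidb_servers = ["tidb-0:4000", "tidb-1:4000", "tidb-2:4000"]
pick = round_robin(tidb_servers)

chosen = [pick() for _ in range(5)]
print(chosen)
```

Adding a fourth stateless server only means adding one more entry to the list; no data has to move.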

PD Server

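Placement Driver (PD for short) is the management module of the whole cluster. It has three main jobs: first, storing the cluster's metadata (which TiKV node a given Key is stored on); second, scheduling and load balancing the TiKV cluster (for example, migrating data and migrating Raft group leaders); third, allocating globally unique, monotonically increasing transaction IDs.

PD is itself a cluster and should be deployed with an odd number of nodes. For production, at least 3 nodes are generally recommended.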
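The odd-number recommendation is easiest to see with a little arithmetic. The sketch below assumes PD needs a strict majority of its nodes to stay available, as majority-based consensus systems do; the function names are illustrative and not part of any PD API.

```python
def quorum(n: int) -> int:
    """Smallest number of nodes that forms a strict majority of n nodes."""
    if n < 1:
        raise ValueError("a cluster needs at least one node")
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """How many nodes can fail while a majority is still alive."""
    return n - quorum(n)


for n in (1, 2, 3, 4, 5):
    print(f"nodes={n} quorum={quorum(n)} tolerated_failures={tolerated_failures(n)}")
```

Under that assumption, 3 nodes tolerate one failure and 4 nodes still tolerate only one, so an even node count adds cost without adding fault tolerance.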

TiKV Server

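TiKV Server stores the data. Seen from outside, TiKV is a distributed, transactional Key-Value storage engine. The basic unit of storage is the Region: each Region stores the data for one Key Range (a left-closed, right-open interval from StartKey to EndKey), and each TiKV node holds multiple Regions. TiKV uses the Raft protocol for replication to keep data consistent and to survive failures. Replicas are managed per Region: the copies of a Region on different nodes form a Raft Group and are replicas of one another. Load balancing of data across TiKV nodes is scheduled by PD, again in units of Regions.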
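To make the left-closed, right-open Key Range concrete, here is a small Python sketch that maps a key to the Region containing it. It is an illustration only, not TiKV's or PD's implementation: the split points are made up, and each Region is assumed to end where the next one starts.

```python
from bisect import bisect_right


def find_region(start_keys, key):
    """Return the index of the Region whose [start, next_start) range holds key.

    start_keys must be sorted and begin with b"" so that every key is covered;
    the last Region is treated as unbounded on the right.
    """
    if not start_keys or start_keys[0] != b"":
        raise ValueError('start_keys must be sorted and begin with b""')
    # bisect_right counts the start keys <= key; the last of those owns the key.
    return bisect_right(start_keys, key) - 1


# Hypothetical split points: Region 0 = ["", "g"), Region 1 = ["g", "n"), and so on.
starts = [b"", b"g", b"n", b"t"]

for k in (b"apple", b"fzzz", b"g", b"zebra"):
    print(k, "-> Region", find_region(starts, k))
```

The key `b"g"` lands in Region 1 rather than Region 0, which is exactly what "left-closed, right-open" means: a Region owns its StartKey but not its EndKey.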

TiSpark

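As the main component in TiDB for complex OLAP needs, TiSpark runs Spark SQL directly on the TiDB storage layer, combines it with the strengths of the distributed TiKV cluster, and integrates with the big-data community ecosystem. With it, TiDB can support both OLTP and OLAP in a single system, sparing users the trouble of synchronizing data.

Core features: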

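1. Horizontal scaling:

Unlimited horizontal scaling is a key feature of TiDB. It covers two aspects: computing capacity and storage capacity. TiDB Server handles SQL requests; as the business grows, you can simply add TiDB Server nodes to raise overall processing capacity and throughput. TiKV stores the data; as the data volume grows, you can deploy more TiKV Server nodes to scale storage. PD schedules data among TiKV nodes in units of Regions and migrates part of the data to the newly added nodes. In the early stage of a business you can therefore deploy only a few service instances (at least 3 TiKV, 3 PD, and 2 TiDB are recommended) and add TiKV or TiDB instances as demand grows.

2. High availability:

High availability is another key feature of TiDB. All three components, TiDB, TiKV, and PD, can tolerate the failure of some instances without affecting the availability of the whole cluster.

The official website requires the latest version of Docker: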

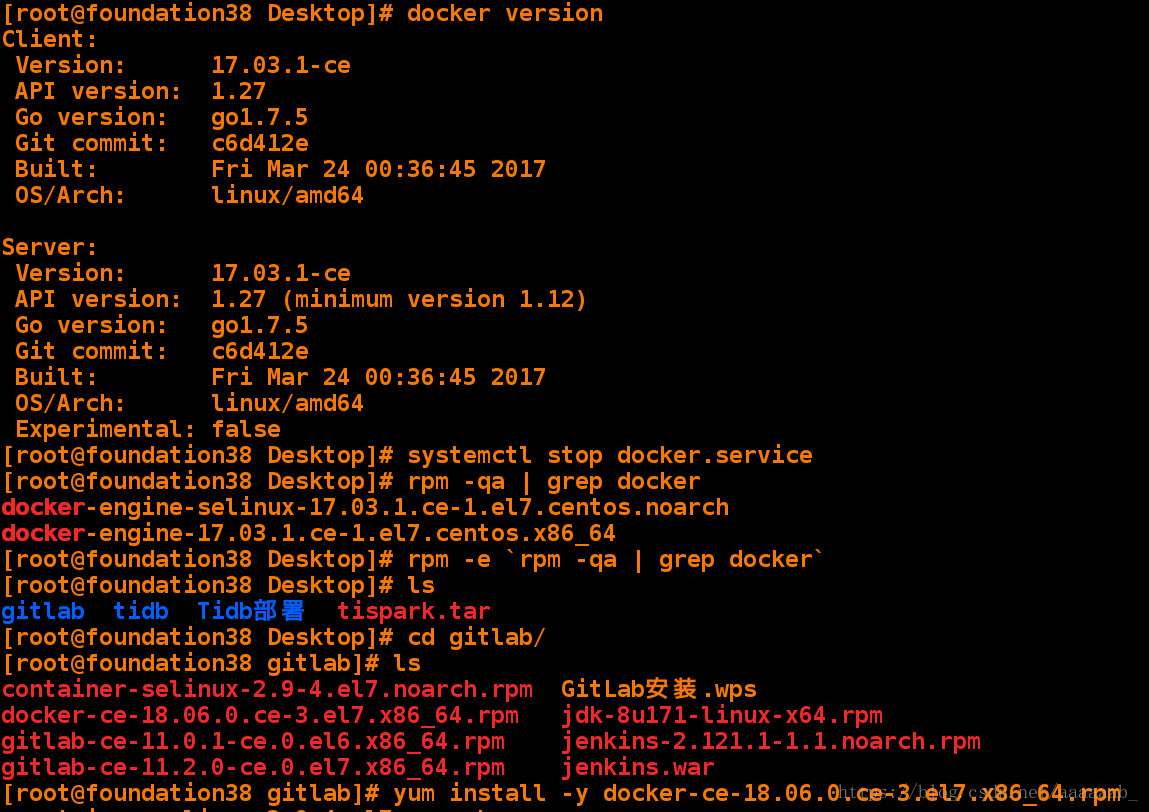

[root@foundation38 Desktop]# docker version    # check the Docker version

Client:

Version: 17.03.1-ce

API version: 1.27

Go version: go1.7.5

Git commit: c6d412e

Built: Fri Mar 24 00:36:45 2017

OS/Arch: linux/amd64

Server:

Version: 17.03.1-ce

API version: 1.27 (minimum version 1.12)

Go version: go1.7.5

Git commit: c6d412e

Built: Fri Mar 24 00:36:45 2017

OS/Arch: linux/amd64

Experimental: false

[root@foundation38 Desktop]# systemctl stop docker.service    # stop the Docker service

[root@foundation38 Desktop]# rpm -qa | grep docker

docker-engine-selinux-17.03.1.ce-1.el7.centos.noarch

docker-engine-17.03.1.ce-1.el7.centos.x86_64

[root@foundation38 Desktop]# rpm -e `rpm -qa | grep docker`    # uninstall the old Docker packages

[root@foundation38 Desktop]# ls

gitlab tidb Tidb部署 tispark.tar

[root@foundation38 Desktop]# cd gitlab/

[root@foundation38 gitlab]# ls

container-selinux-2.9-4.el7.noarch.rpm GitLab安装.wps

docker-ce-18.06.0.ce-3.el7.x86_64.rpm jdk-8u171-linux-x64.rpm

gitlab-ce-11.0.1-ce.0.el6.x86_64.rpm jenkins-2.121.1-1.1.noarch.rpm

gitlab-ce-11.2.0-ce.0.el7.x86_64.rpm jenkins.war

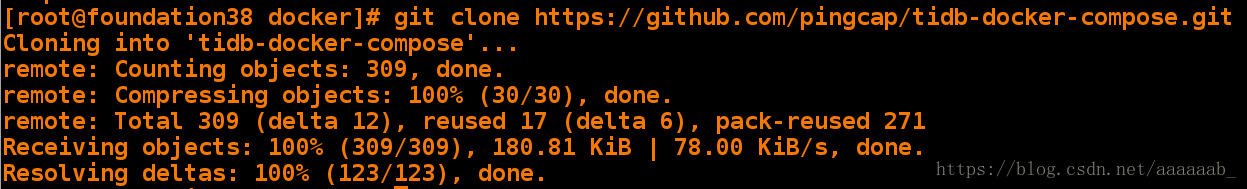

[root@foundation38 gitlab]# yum install -y docker-ce-18.06.0.ce-3.el7.x86_64.rpm    # install the latest Docker version

[root@foundation38 docker]# git clone https://github.com/pingcap/tidb-docker-compose.git

Cloning into 'tidb-docker-compose'...

fatal: unable to access 'https://github.com/pingcap/tidb-docker-compose.git/': Peer reports incompatible or unsupported protocol version.

The clone fails because of a version mismatch; updating nss and curl resolves it.

[root@foundation38 docker]# yum update nss curl

[root@foundation38 docker]# git clone https://github.com/pingcap/tidb-docker-compose.git    # the clone now succeeds

Cloning into 'tidb-docker-compose'...

remote: Counting objects: 309, done.

remote: Compressing objects: 100% (30/30), done.

remote: Total 309 (delta 12), reused 17 (delta 6), pack-reused 271

Receiving objects: 100% (309/309), 180.81 KiB | 78.00 KiB/s, done.

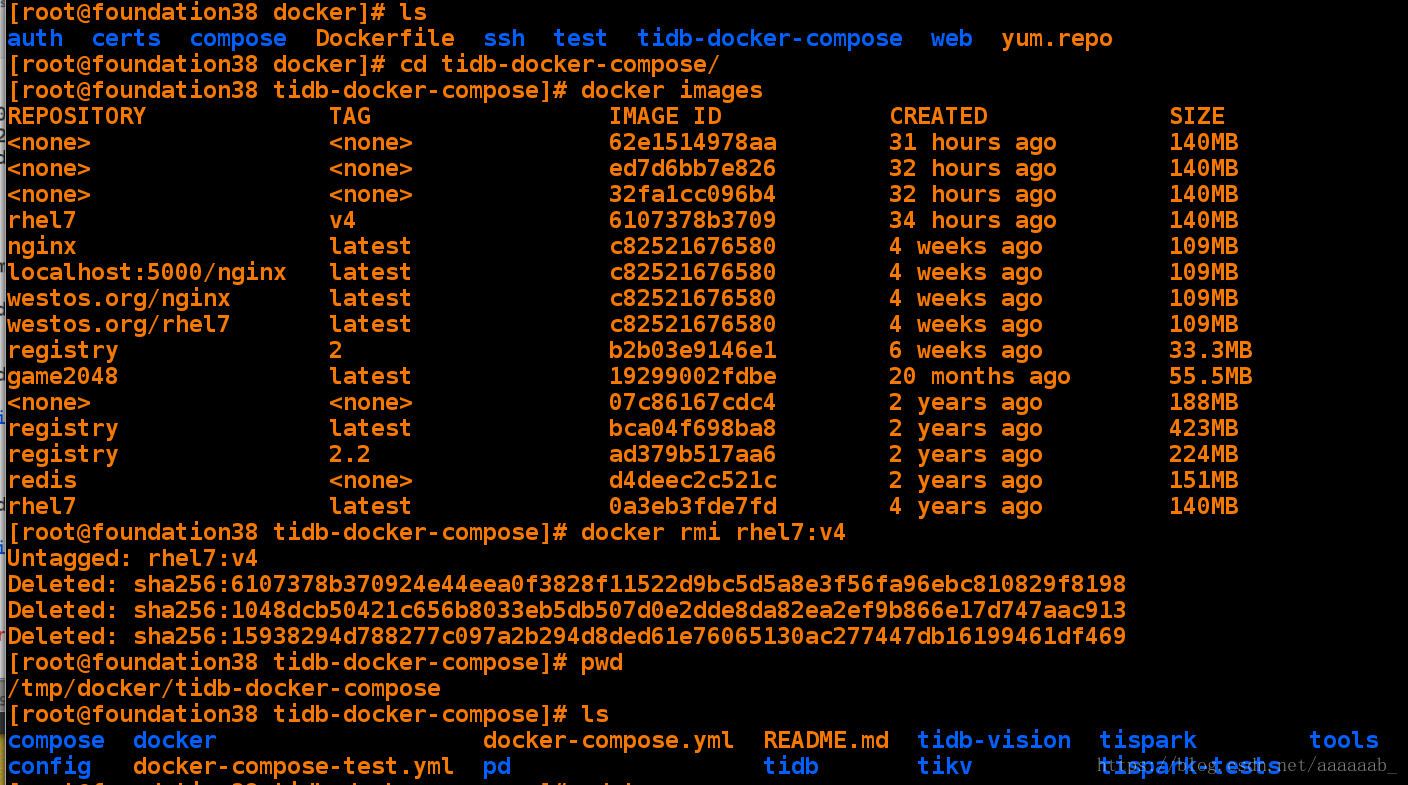

Resolving deltas: 100% (123/123), done.

[root@foundation38 docker]# ls

auth certs compose Dockerfile ssh test tidb-docker-compose web yum.repo

[root@foundation38 docker]# cd tidb-docker-compose/    # enter the directory cloned from the official repository

[root@foundation38 tidb-docker-compose]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

<none> <none> 62e1514978aa 31 hours ago 140MB

<none> <none> ed7d6bb7e826 32 hours ago 140MB

<none> <none> 32fa1cc096b4 32 hours ago 140MB

rhel7 v4 6107378b3709 34 hours ago 140MB

nginx latest c82521676580 4 weeks ago 109MB

localhost:5000/nginx latest c82521676580 4 weeks ago 109MB

westos.org/nginx latest c82521676580 4 weeks ago 109MB

westos.org/rhel7 latest c82521676580 4 weeks ago 109MB

registry 2 b2b03e9146e1 6 weeks ago 33.3MB

game2048 latest 19299002fdbe 20 months ago 55.5MB

<none> <none> 07c86167cdc4 2 years ago 188MB

registry latest bca04f698ba8 2 years ago 423MB

registry 2.2 ad379b517aa6 2 years ago 224MB

redis <none> d4deec2c521c 2 years ago 151MB

rhel7 latest 0a3eb3fde7fd 4 years ago 140MB

[root@foundation38 tidb-docker-compose]# docker rmi rhel7:v4    # remove an image that is no longer needed

Untagged: rhel7:v4

Deleted: sha256:6107378b370924e44eea0f3828f11522d9bc5d5a8e3f56fa96ebc810829f8198

Deleted: sha256:1048dcb50421c656b8033eb5db507d0e2dde8da82ea2ef9b866e17d747aac913

Deleted: sha256:15938294d788277c097a2b294d8ded61e76065130ac277447db16199461df469

[root@foundation38 tidb-docker-compose]# pwd

/tmp/docker/tidb-docker-compose

[root@foundation38 tidb-docker-compose]# ls

compose docker docker-compose.yml README.md tidb-vision tispark tools

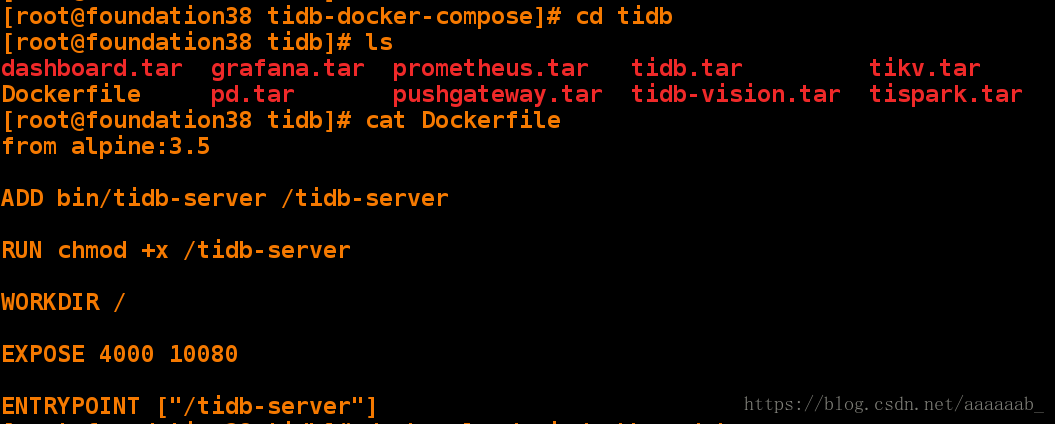

config docker-compose-test.yml pd tidb tikv tispark-tests

[root@foundation38 tidb-docker-compose]# cd tidb

[root@foundation38 tidb]# ls

dashboard.tar grafana.tar prometheus.tar tidb.tar tikv.tar

Dockerfile pd.tar pushgateway.tar tidb-vision.tar tispark.tar

[root@foundation38 tidb]# cat Dockerfile    # the officially provided Dockerfile

from alpine:3.5

ADD bin/tidb-server /tidb-server

RUN chmod +x /tidb-server

WORKDIR /

EXPOSE 4000 10080

ENTRYPOINT ["/tidb-server"]

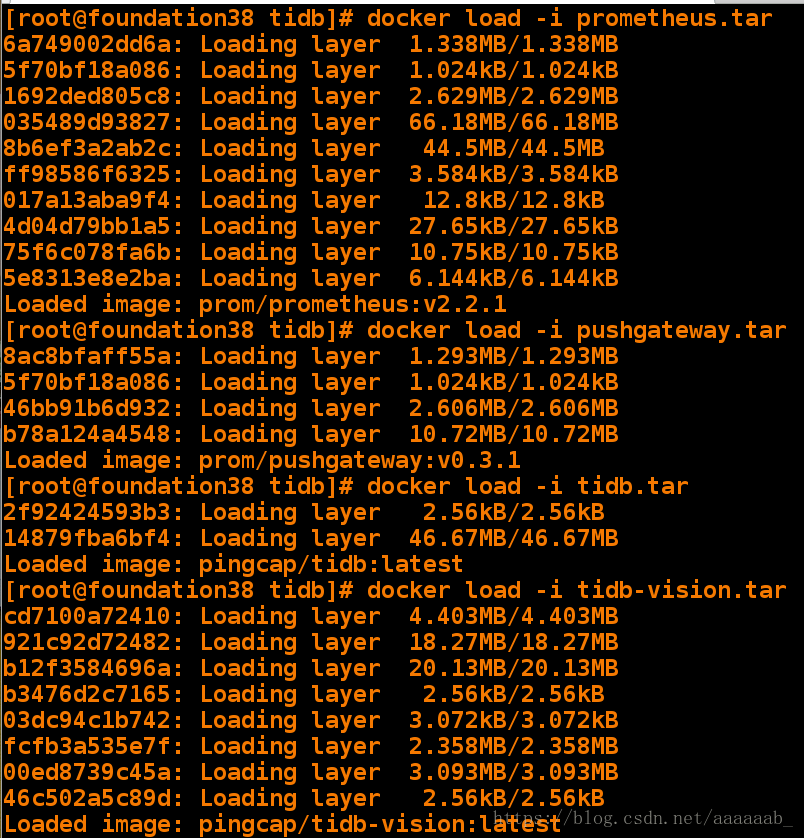

Load the images one by one:

[root@foundation38 tidb]# docker load -i dashboard.tar

[root@foundation38 tidb]# docker load -i grafana.tar

[root@foundation38 tidb]# docker load -i pd.tar

[root@foundation38 tidb]# docker load -i prometheus.tar

[root@foundation38 tidb]# docker load -i pushgateway.tar

[root@foundation38 tidb]# docker load -i tidb.tar

[root@foundation38 tidb]# docker load -i tidb-vision.tar

[root@foundation38 tidb]# docker load -i tikv.tar

[root@foundation38 tidb]# docker load -i tispark.tar
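The nine commands above all follow one pattern, so they can be generated from the directory listing instead of typed by hand. The Python sketch below only builds the argument lists and does not call Docker; the file names are copied from the `ls` output earlier in this post, and `docker_load_commands` is a hypothetical helper name.

```python
def docker_load_commands(filenames):
    """Build one `docker load -i <file>` argument list per .tar archive."""
    archives = sorted(name for name in filenames if name.endswith(".tar"))
    return [["docker", "load", "-i", name] for name in archives]


# File names as shown by `ls` in the tidb directory.
files = [
    "dashboard.tar", "grafana.tar", "prometheus.tar", "tidb.tar", "tikv.tar",
    "Dockerfile", "pd.tar", "pushgateway.tar", "tidb-vision.tar", "tispark.tar",
]

commands = docker_load_commands(files)
for argv in commands:
    print(" ".join(argv))
```

To actually load the images, each list could be passed to `subprocess.run(argv, check=True)` from within that directory.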

Restore the Docker environment by removing the existing containers and volumes:


[root@foundation38 tidb-docker-compose]# docker ps -a

[root@foundation38 tidb-docker-compose]# docker rm -f `docker ps -aq`

[root@foundation38 tidb-docker-compose]# docker volume ls

[root@foundation38 tidb-docker-compose]# docker volume rm `docker volume ls -q`

[root@foundation38 tidb-docker-compose]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@foundation38 tidb-docker-compose]# docker volume ls

DRIVER VOLUME NAME

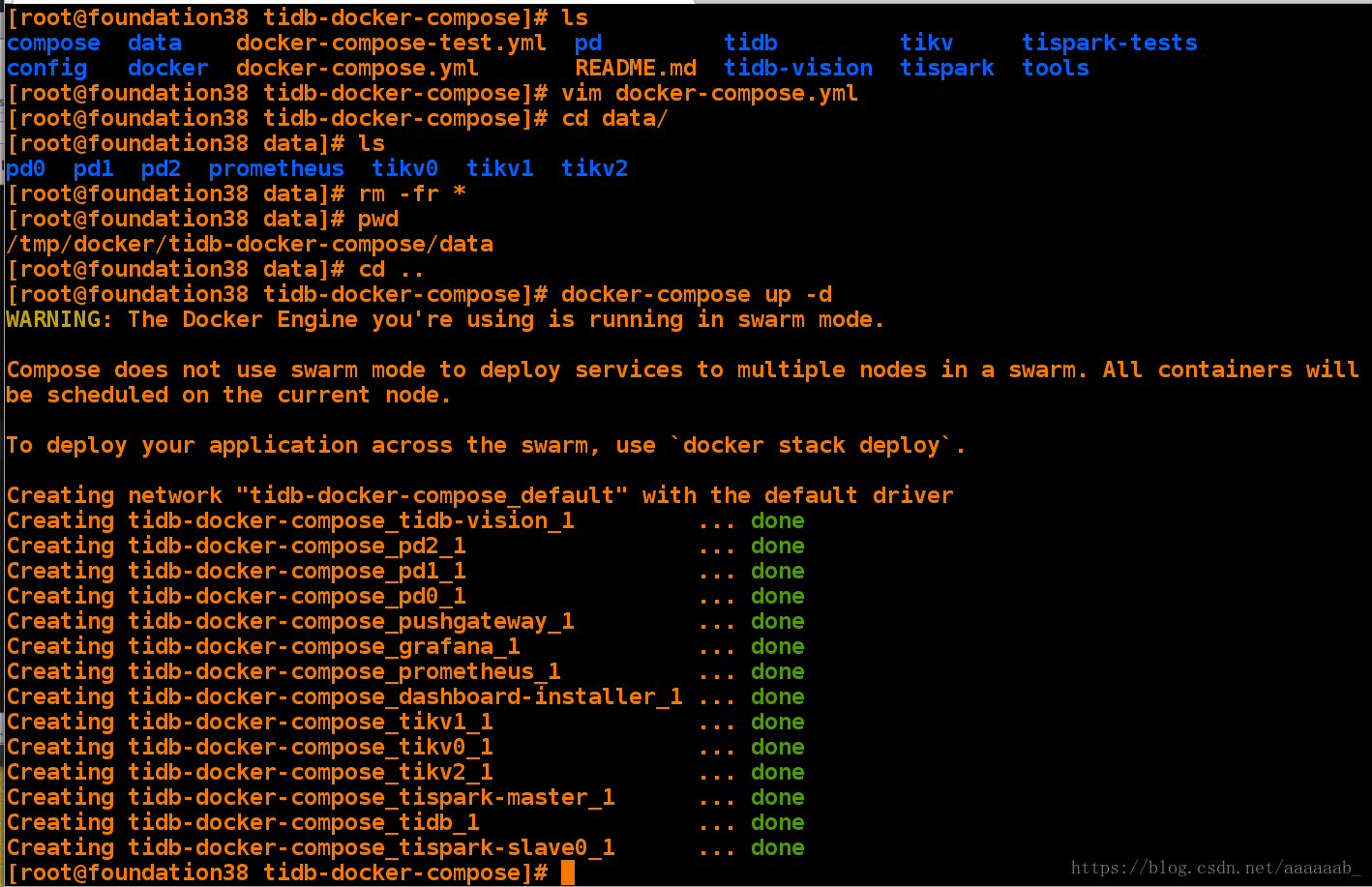

Deploy the TiDB cluster:

[root@foundation38 tidb-docker-compose]# ls

compose data docker-compose-test.yml pd tidb tikv tispark-tests

config docker docker-compose.yml README.md tidb-vision tispark tools

[root@foundation38 tidb-docker-compose]# vim docker-compose.yml

[root@foundation38 tidb-docker-compose]# cd data/

[root@foundation38 data]# ls

pd0 pd1 pd2 prometheus tikv0 tikv1 tikv2

[root@foundation38 data]# rm -fr *

[root@foundation38 data]# pwd

/tmp/docker/tidb-docker-compose/data

[root@foundation38 data]# cd ..

[root@foundation38 tidb-docker-compose]# docker-compose up -d

WARNING: The Docker Engine you're using is running in swarm mode.

Compose does not use swarm mode to deploy services to multiple nodes in a swarm. All containers will be scheduled on the current node.

To deploy your application across the swarm, use `docker stack deploy`.

Creating network "tidb-docker-compose_default" with the default driver

Creating tidb-docker-compose_tidb-vision_1 ... done

Creating tidb-docker-compose_pd2_1 ... done

Creating tidb-docker-compose_pd1_1 ... done

Creating tidb-docker-compose_pd0_1 ... done

Creating tidb-docker-compose_pushgateway_1 ... done

Creating tidb-docker-compose_grafana_1 ... done

Creating tidb-docker-compose_prometheus_1 ... done

Creating tidb-docker-compose_dashboard-installer_1 ... done

Creating tidb-docker-compose_tikv1_1 ... done

Creating tidb-docker-compose_tikv0_1 ... done

Creating tidb-docker-compose_tikv2_1 ... done

Creating tidb-docker-compose_tispark-master_1 ... done

Creating tidb-docker-compose_tidb_1 ... done

Creating tidb-docker-compose_tispark-slave0_1 ... done

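Modified contents of the docker-compose.yml file:

You can write the file yourself; the yml used in this example was downloaded from the internet and then modified.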
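If you do write the file yourself, note that in the listing that follows the three PD services differ only in their name, while the `--initial-cluster` value is identical for all of them. The Python sketch below generates the `command` entries for one PD service from a member list, using only flags that appear in that listing; `pd_command` is a hypothetical helper, not part of any TiDB tooling.

```python
def pd_command(name, members, client_port=2379, peer_port=2380):
    """Build the command-line flags for one PD service in the compose file."""
    if name not in members:
        raise ValueError(f"{name} is not listed in members")
    # Every member gets the same initial-cluster string.
    initial_cluster = ",".join(f"{m}=http://{m}:{peer_port}" for m in members)
    return [
        f"--name={name}",
        f"--client-urls=http://0.0.0.0:{client_port}",
        f"--peer-urls=http://0.0.0.0:{peer_port}",
        f"--advertise-client-urls=http://{name}:{client_port}",
        f"--advertise-peer-urls=http://{name}:{peer_port}",
        f"--initial-cluster={initial_cluster}",
        f"--data-dir=/data/{name}",
        "--config=/pd.toml",
    ]


members = ["pd0", "pd1", "pd2"]
for member in members:
    print(member)
    for flag in pd_command(member, members):
        print("  -", flag)
```

Generating the flags this way avoids copy-and-paste slips such as leaving `pd0` in the advertise URLs of `pd1`.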

[root@foundation38 tidb-docker-compose]# cat docker-compose.yml

version: '2.1'

services:

pd0:

image: pingcap/pd:latest

ports:

- "2379"

volumes:

- ./config/pd.toml:/pd.toml:ro

- ./data:/data

command:

- --name=pd0

- --client-urls=http://0.0.0.0:2379

- --peer-urls=http://0.0.0.0:2380

- --advertise-client-urls=http://pd0:2379

- --advertise-peer-urls=http://pd0:2380

- --initial-cluster=pd0=http://pd0:2380,pd1=http://pd1:2380,pd2=http://pd2:2380

- --data-dir=/data/pd0

- --config=/pd.toml

restart: on-failure

pd1:

image: pingcap/pd:latest

ports:

- "2379"

volumes:

- ./config/pd.toml:/pd.toml:ro

- ./data:/data

command:

- --name=pd1

- --client-urls=http://0.0.0.0:2379

- --peer-urls=http://0.0.0.0:2380

- --advertise-client-urls=http://pd1:2379

- --advertise-peer-urls=http://pd1:2380

- --initial-cluster=pd0=http://pd0:2380,pd1=http://pd1:2380,pd2=http://pd2:2380

- --data-dir=/data/pd1

- --config=/pd.toml

restart: on-failure

pd2:

image: pingcap/pd:latest

ports:

- "2379"

volumes:

- ./config/pd.toml:/pd.toml:ro

- ./data:/data

command:

- --name=pd2

- --client-urls=http://0.0.0.0:2379

- --peer-urls=http://0.0.0.0:2380

- --advertise-client-urls=http://pd2:2379

- --advertise-peer-urls=http://pd2:2380

- --initial-cluster=pd0=http://pd0:2380,pd1=http://pd1:2380,pd2=http://pd2:2380

- --data-dir=/data/pd2

- --config=/pd.toml

restart: on-failure

tikv0:

image: pingcap/tikv:latest

ulimits:

nofile:

soft: 1000000

hard: 1000000

volumes:

- ./config/tikv.toml:/tikv.toml:ro

- ./data:/data

command:

- --addr=0.0.0.0:20160

- --advertise-addr=tikv0:20160

- --data-dir=/data/tikv0

- --pd=pd0:2379,pd1:2379,pd2:2379

- --config=/tikv.toml

depends_on:

- "pd0"

- "pd1"

- "pd2"

restart: on-failure

tikv1:

image: pingcap/tikv:latest

ulimits:

nofile:

soft: 1000000

hard: 1000000

volumes:

- ./config/tikv.toml:/tikv.toml:ro

- ./data:/data

command:

- --addr=0.0.0.0:20160

- --advertise-addr=tikv1:20160

- --data-dir=/data/tikv1

- --pd=pd0:2379,pd1:2379,pd2:2379

- --config=/tikv.toml

depends_on:

- "pd0"

- "pd1"

- "pd2"

restart: on-failure

tikv2:

image: pingcap/tikv:latest

ulimits:

nofile:

soft: 1000000

hard: 1000000

volumes:

- ./config/tikv.toml:/tikv.toml:ro

- ./data:/data

command:

- --addr=0.0.0.0:20160

- --advertise-addr=tikv2:20160

- --data-dir=/data/tikv2

- --pd=pd0:2379,pd1:2379,pd2:2379

- --config=/tikv.toml

depends_on:

- "pd0"

- "pd1"

- "pd2"

restart: on-failure

tidb:

image: pingcap/tidb:latest

ports:

- "4000:4000"

- "10080:10080"

volumes:

- ./config/tidb.toml:/tidb.toml:ro

command:

- --store=tikv

- --path=pd0:2379,pd1:2379,pd2:2379

- --config=/tidb.toml

depends_on:

- "tikv0"

- "tikv1"

- "tikv2"

restart: on-failure

tispark-master:

image: pingcap/tispark:latest

command:

- /opt/spark/sbin/start-master.sh

volumes:

- ./config/spark-defaults.conf:/opt/spark/conf/spark-defaults.conf:ro

environment:

SPARK_MASTER_PORT: 7077

SPARK_MASTER_WEBUI_PORT: 8080

ports:

- "7077:7077"

- "8080:8080"

depends_on:

- "tikv0"

- "tikv1"

- "tikv2"

restart: on-failure

tispark-slave0:

image: pingcap/tispark:latest

command:

- /opt/spark/sbin/start-slave.sh

- spark://tispark-master:7077

volumes:

- ./config/spark-defaults.conf:/opt/spark/conf/spark-defaults.conf:ro

environment:

SPARK_WORKER_WEBUI_PORT: 38081

ports:

- "38081:38081"

depends_on:

- tispark-master

restart: on-failure

tidb-vision:

image: pingcap/tidb-vision:latest

environment:

PD_ENDPOINT: pd0:2379

ports:

- "8010:8010"

# monitors

pushgateway:

image: prom/pushgateway:v0.3.1

command:

- --log.level=error

restart: on-failure

prometheus:

user: root

image: prom/prometheus:v2.2.1

command:

- --log.level=error

- --storage.tsdb.path=/data/prometheus

- --config.file=/etc/prometheus/prometheus.yml

ports:

- "9090:9090"

volumes:

- ./config/prometheus.yml:/etc/prometheus/prometheus.yml:ro

- ./config/pd.rules.yml:/etc/prometheus/pd.rules.yml:ro

- ./config/tikv.rules.yml:/etc/prometheus/tikv.rules.yml:ro

- ./config/tidb.rules.yml:/etc/prometheus/tidb.rules.yml:ro

- ./data:/data

restart: on-failure

grafana:

image: grafana/grafana:4.6.3

environment:

GF_LOG_LEVEL: error

ports:

- "3000:3000"

restart: on-failure

dashboard-installer:

image: pingcap/tidb-dashboard-installer:v2.0.0

command: ["grafana:3000"]

volumes:

- ./config/grafana-datasource.json:/datasource.json:ro

- ./config/pd-dashboard.json:/pd.json:ro

- ./config/tikv-dashboard.json:/tikv.json:ro

- ./config/tidb-dashboard.json:/tidb.json:ro

- ./config/overview-dashboard.json:/overview.json:ro

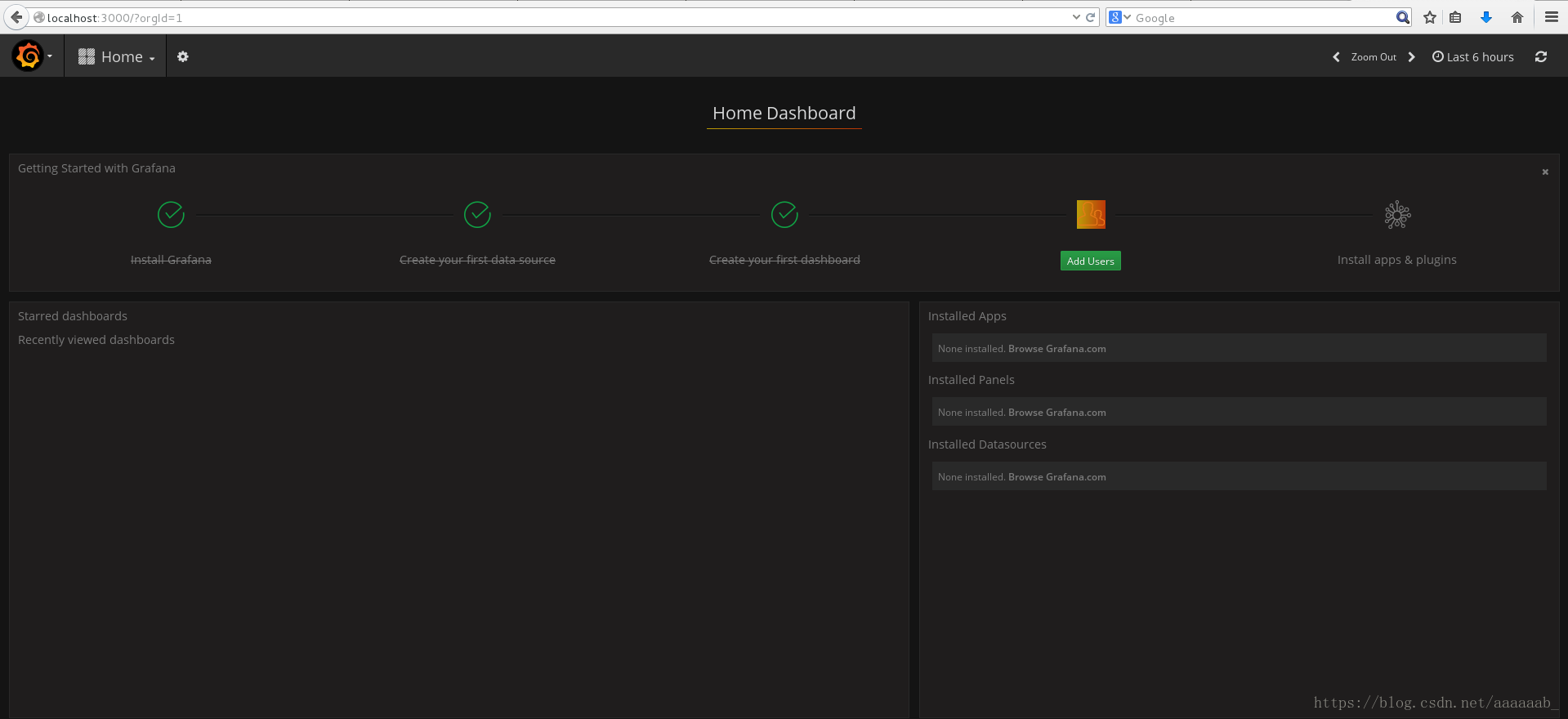

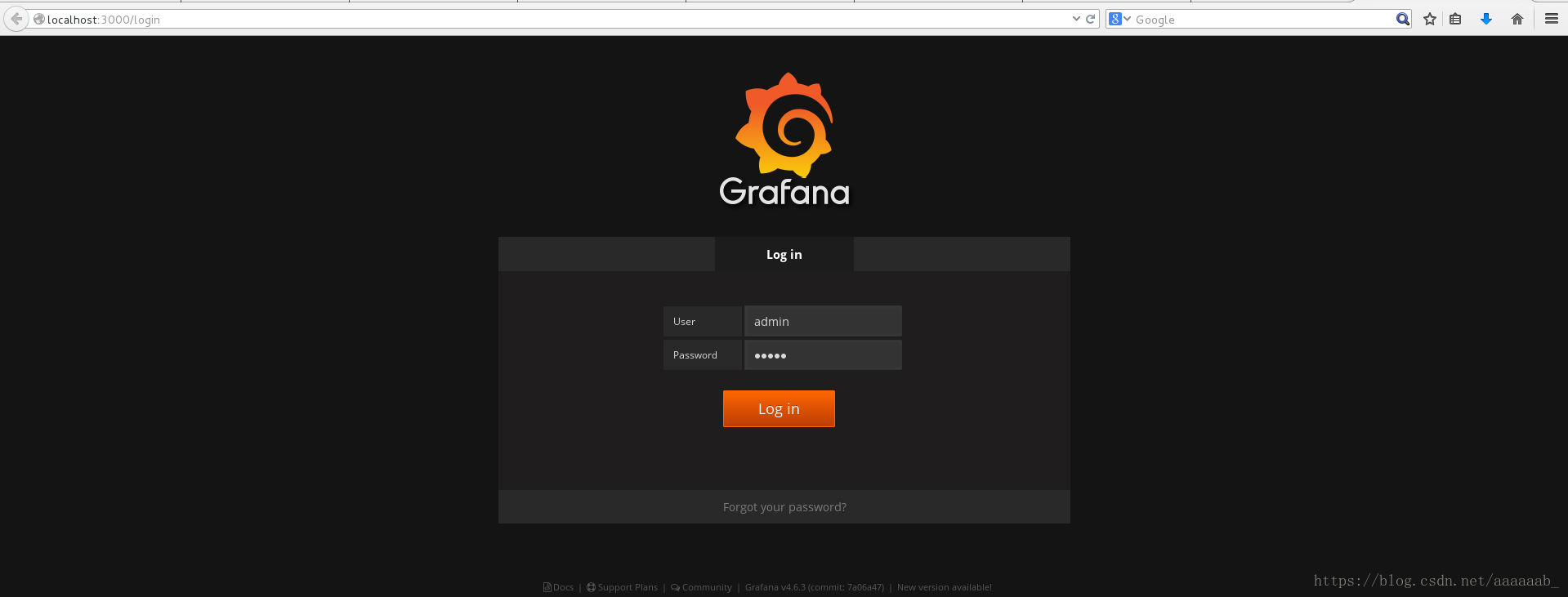

restart: on-failure在网页访问可以访问Grafana:

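Grafana is an open-source dashboard tool that can be used with Graphite, InfluxDB, and OpenTSDB. Recent versions also support other data sources such as Elasticsearch. In essence, it is a feature-rich replacement for Graphite-web that makes it easier to create and edit dashboards. It includes a unique Graphite target parser that simplifies editing metrics and functions.

The default username and password are both admin:

Login succeeded: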

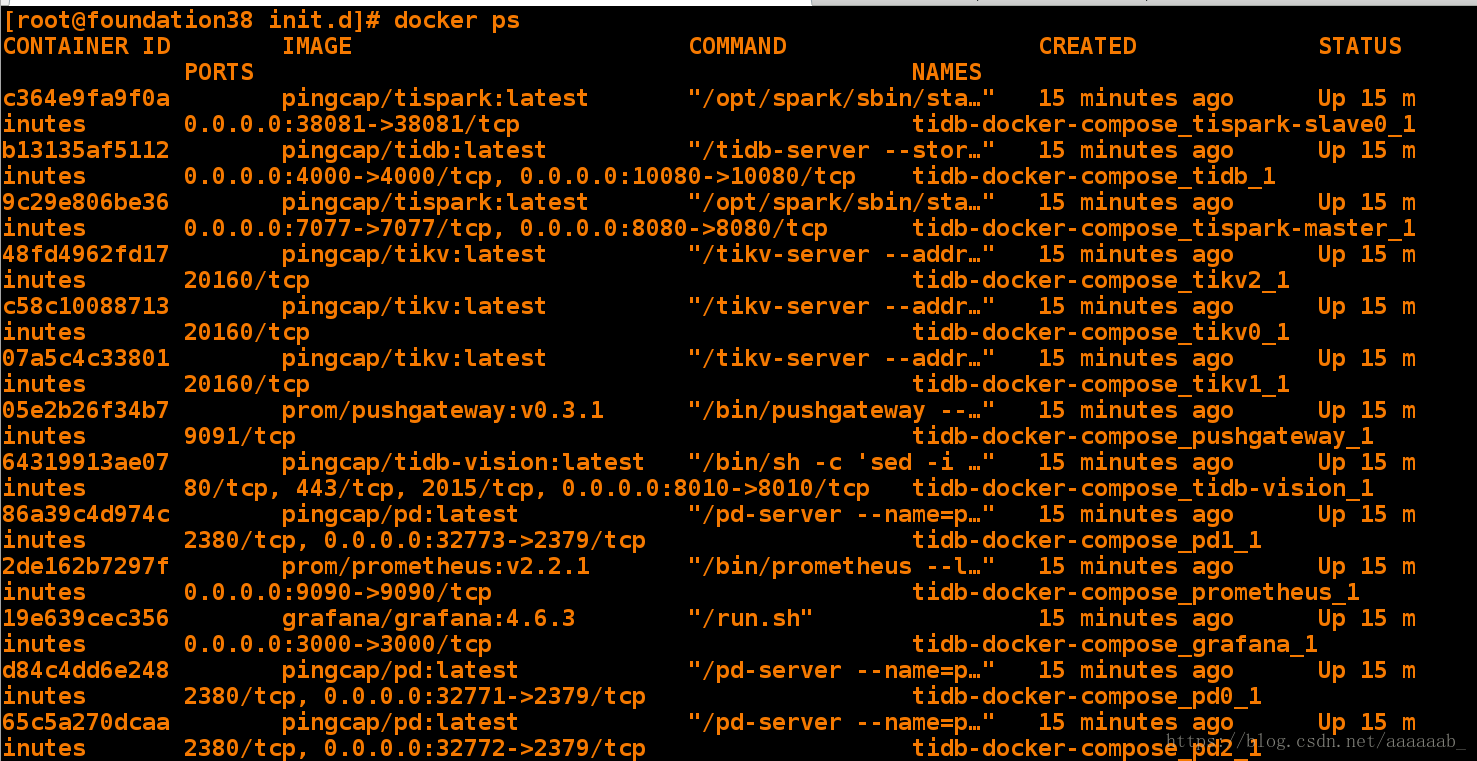

[root@foundation38 init.d]# docker ps    # list the running containers

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c364e9fa9f0a pingcap/tispark:latest "/opt/spark/sbin/sta…" 15 minutes ago Up 15 minutes 0.0.0.0:38081->38081/tcp tidb-docker-compose_tispark-slave0_1

b13135af5112 pingcap/tidb:latest "/tidb-server --stor…" 15 minutes ago Up 15 minutes 0.0.0.0:4000->4000/tcp, 0.0.0.0:10080->10080/tcp tidb-docker-compose_tidb_1

9c29e806be36 pingcap/tispark:latest "/opt/spark/sbin/sta…" 15 minutes ago Up 15 minutes 0.0.0.0:7077->7077/tcp, 0.0.0.0:8080->8080/tcp tidb-docker-compose_tispark-master_1

48fd4962fd17 pingcap/tikv:latest "/tikv-server --addr…" 15 minutes ago Up 15 minutes 20160/tcp tidb-docker-compose_tikv2_1

c58c10088713 pingcap/tikv:latest "/tikv-server --addr…" 15 minutes ago Up 15 minutes 20160/tcp tidb-docker-compose_tikv0_1

07a5c4c33801 pingcap/tikv:latest "/tikv-server --addr…" 15 minutes ago Up 15 minutes 20160/tcp tidb-docker-compose_tikv1_1

05e2b26f34b7 prom/pushgateway:v0.3.1 "/bin/pushgateway --…" 15 minutes ago Up 15 minutes 9091/tcp tidb-docker-compose_pushgateway_1

64319913ae07 pingcap/tidb-vision:latest "/bin/sh -c 'sed -i …" 15 minutes ago Up 15 minutes 80/tcp, 443/tcp, 2015/tcp, 0.0.0.0:8010->8010/tcp tidb-docker-compose_tidb-vision_1

86a39c4d974c pingcap/pd:latest "/pd-server --name=p…" 15 minutes ago Up 15 minutes 2380/tcp, 0.0.0.0:32773->2379/tcp tidb-docker-compose_pd1_1

2de162b7297f prom/prometheus:v2.2.1 "/bin/prometheus --l…" 15 minutes ago Up 15 minutes 0.0.0.0:9090->9090/tcp tidb-docker-compose_prometheus_1

19e639cec356 grafana/grafana:4.6.3 "/run.sh" 15 minutes ago Up 15 minutes 0.0.0.0:3000->3000/tcp tidb-docker-compose_grafana_1

d84c4dd6e248 pingcap/pd:latest "/pd-server --name=p…" 15 minutes ago Up 15 minutes 2380/tcp, 0.0.0.0:32771->2379/tcp tidb-docker-compose_pd0_1

65c5a270dcaa pingcap/pd:latest "/pd-server --name=p…" 15 minutes ago Up 15 minutes 2380/tcp, 0.0.0.0:32772->2379/tcp tidb-docker-compose_pd2_1
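The listing shows which host ports are published, but not whether anything answers on them. A quick way to verify is to attempt a TCP connection to each one. The sketch below uses only Python's standard library; the port numbers are taken from the `docker ps` output above, the host `127.0.0.1` is assumed to be the Docker host, and the service labels are descriptive names chosen here.

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Host ports published by the compose file, as shown in the `docker ps` output.
PUBLISHED = {
    "tidb (MySQL protocol)": 4000,
    "tidb (status port)": 10080,
    "grafana": 3000,
    "prometheus": 9090,
    "tidb-vision": 8010,
    "spark master": 7077,
    "spark master web UI": 8080,
    "spark worker web UI": 38081,
}


def check_all(host="127.0.0.1", ports=PUBLISHED):
    """Map each service label to whether its port accepts connections."""
    return {name: port_open(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    for name, ok in check_all().items():
        print(f"{name}: {'open' if ok else 'closed'}")
```

An open port only shows that something is listening; it does not prove the service behind it is healthy, so logging in as shown in the following steps is still the real test.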

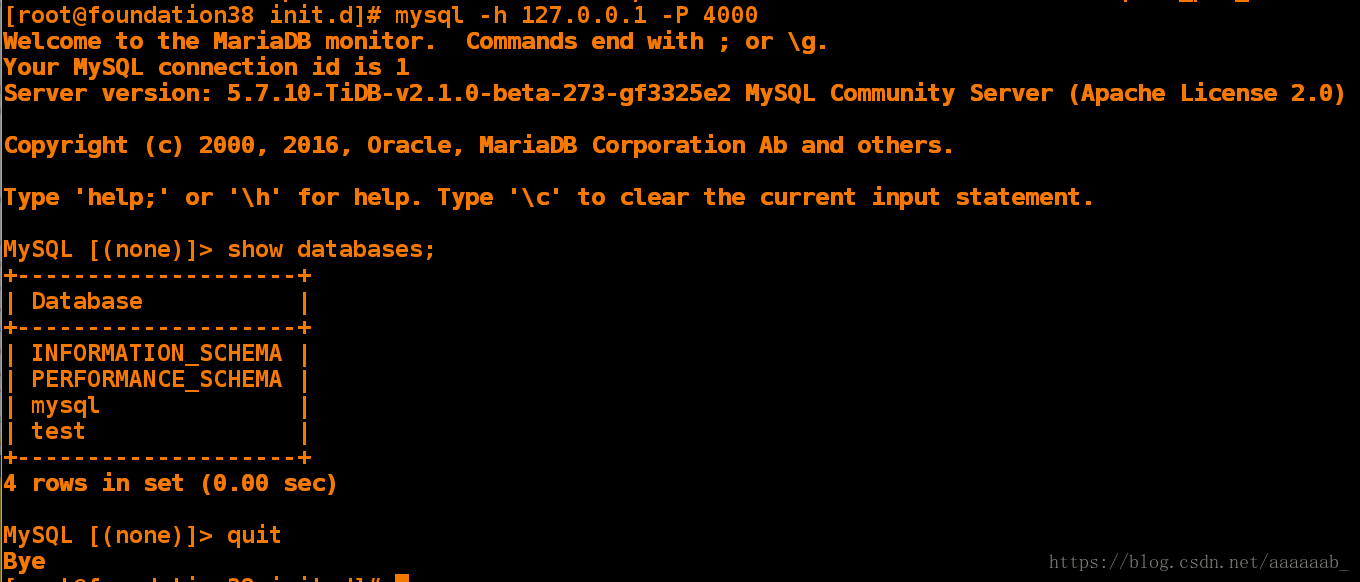

You can now log in to the database:

[root@foundation38 init.d]# mysql -h 127.0.0.1 -P 4000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 1

Server version: 5.7.10-TiDB-v2.1.0-beta-273-gf3325e2 MySQL Community Server (Apache License 2.0)

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| INFORMATION_SCHEMA |

| PERFORMANCE_SCHEMA |

| mysql |

| test |

+--------------------+

4 rows in set (0.00 sec)

MySQL [(none)]> quit

Bye

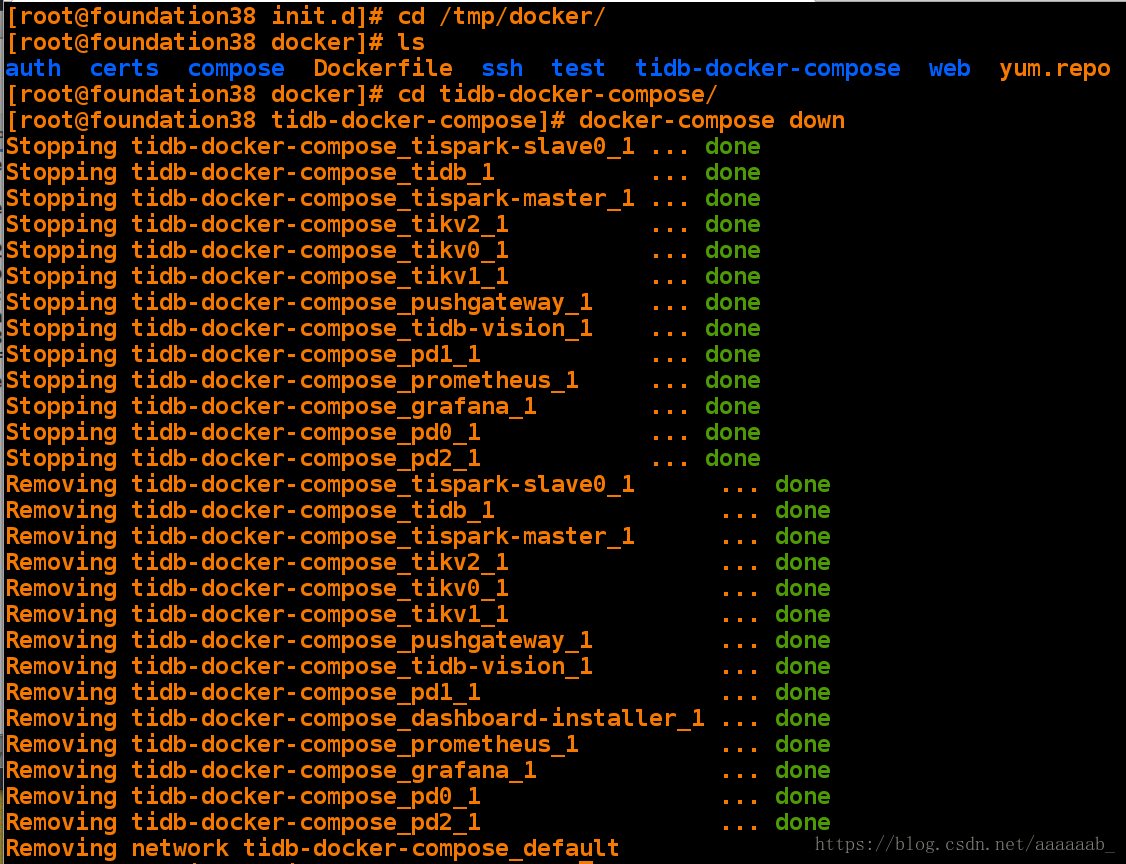

Stop docker-compose and remove the containers and volumes to restore the environment:

[root@foundation38 init.d]# cd /tmp/docker/

[root@foundation38 docker]# ls

auth certs compose Dockerfile ssh test tidb-docker-compose web yum.repo

[root@foundation38 docker]# cd tidb-docker-compose/

[root@foundation38 tidb-docker-compose]# docker-compose down

Stopping tidb-docker-compose_tispark-slave0_1 ... done

Stopping tidb-docker-compose_tidb_1 ... done

Stopping tidb-docker-compose_tispark-master_1 ... done

Stopping tidb-docker-compose_tikv2_1 ... done

Stopping tidb-docker-compose_tikv0_1 ... done

Stopping tidb-docker-compose_tikv1_1 ... done

Stopping tidb-docker-compose_pushgateway_1 ... done

Stopping tidb-docker-compose_tidb-vision_1 ... done

Stopping tidb-docker-compose_pd1_1 ... done

Stopping tidb-docker-compose_prometheus_1 ... done

Stopping tidb-docker-compose_grafana_1 ... done

Stopping tidb-docker-compose_pd0_1 ... done

Stopping tidb-docker-compose_pd2_1 ... done

Removing tidb-docker-compose_tispark-slave0_1 ... done

Removing tidb-docker-compose_tidb_1 ... done

Removing tidb-docker-compose_tispark-master_1 ... done

Removing tidb-docker-compose_tikv2_1 ... done

Removing tidb-docker-compose_tikv0_1 ... done

Removing tidb-docker-compose_tikv1_1 ... done

Removing tidb-docker-compose_pushgateway_1 ... done

Removing tidb-docker-compose_tidb-vision_1 ... done

Removing tidb-docker-compose_pd1_1 ... done

Removing tidb-docker-compose_dashboard-installer_1 ... done

Removing tidb-docker-compose_prometheus_1 ... done

Removing tidb-docker-compose_grafana_1 ... done

Removing tidb-docker-compose_pd0_1 ... done

Removing tidb-docker-compose_pd2_1 ... done

Removing network tidb-docker-compose_default

[root@foundation38 tidb-docker-compose]# docker volume rm `docker volume ls -q`

4743c520a25cf82410ac8ac630d90284d51eec0ba83859ae0371653f6c2855f3

729dcff2993ff0ad8a0a0ce4c2497845ab6891460d377761011e56ed8ff6d633

77f1ee6173fd9c68884192a52042fd40fd550a978f750924a6d13b6eb0f36b07

bd73248b51447b7d112b09bab74a68403c66ad8f75759bb02abef72752e5303d

d8706bea8b14999ddad384e359a5385520481697299e8c1149b56f0a35743a2c

ea07be24a463d4fc62226f7bc1a06e818633f63c1e7deb38f904848c148c4dfa