Terminology and basic concepts

1. Implementation: executable computer code that follows the process defined by the algorithm

2. Running time of a sequential algorithm: the number of primitive operations or “steps” executed on the RAM model

3. The RAM (random access machine) model:

• one memory (infinite)

• one processor

• instructions are executed sequentially. The instructions are simple: assignment, addition, multiplication, comparison, etc.
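As a rough illustration of counting "steps" on the RAM model, here is a linear search that tallies its key comparisons. Counting only comparisons (and ignoring the loop's own increments and tests) is a simplifying convention chosen for this sketch; the function name and the `steps` out-parameter are hypothetical.

```c
/* Linear search on the RAM model, counting one "step" per key comparison.
   Counting only comparisons is a simplifying convention of this sketch. */
int linear_search(const int *a, int n, int key, long *steps) {
    *steps = 0;
    for (int i = 0; i < n; i++) {
        (*steps)++;              /* one primitive comparison: a[i] == key */
        if (a[i] == key)
            return i;            /* found after i + 1 comparisons */
    }
    return -1;                   /* not found: exactly n comparisons */
}
```

Under this convention the worst case (key absent) costs n steps, so the running time grows linearly with the input size.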

4. Message complexity: the total number of messages sent over all the channels until the distributed system reaches a terminal configuration.
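To make the definition concrete, the sketch below counts the messages of a simple flooding broadcast, an algorithm chosen here only for illustration. It assumes an undirected graph stored as a symmetric adjacency matrix with a zero diagonal; `MAXN` and `flooding_messages` are hypothetical names.

```c
#include <stdbool.h>

#define MAXN 16  /* hypothetical upper bound on the number of nodes */

/* Flooding broadcast: the initiator sends to all neighbours; every other
   node, on its FIRST receipt, forwards to all neighbours except the sender
   and ignores later copies. Returns the number of messages sent until no
   message is left in any channel (a terminal configuration). */
long flooding_messages(int n, int adj[MAXN][MAXN], int root) {
    bool informed[MAXN] = {false};
    int qfrom[MAXN * MAXN], qto[MAXN * MAXN];  /* FIFO of in-transit messages */
    int head = 0, tail = 0;
    long sent = 0;

    informed[root] = true;
    for (int v = 0; v < n; v++)
        if (adj[root][v]) {
            qfrom[tail] = root; qto[tail] = v; tail++; sent++;
        }

    while (head < tail) {                 /* deliver messages in FIFO order */
        int from = qfrom[head], to = qto[head];
        head++;
        if (informed[to])
            continue;                     /* duplicate copy: ignored */
        informed[to] = true;
        for (int v = 0; v < n; v++)
            if (adj[to][v] && v != from) {
                qfrom[tail] = to; qto[tail] = v; tail++; sent++;
            }
    }
    return sent;
}
```

Because every node forwards exactly once, the message complexity on a connected graph is 2|E| - (n - 1) regardless of delivery order: 5 messages on a 4-node ring and 9 on the complete graph with 4 nodes.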

5. Parallel computing: many computations are executed simultaneously

6. Distributed computing: distributed use of multiple resources (e.g. memory, CPU, bandwidth) to solve a computational problem

7. Computer Cluster: multiple stand-alone machines running in parallel, connected by a high-speed network

8. Grid computing: a form of distributed computing in which a "super virtual computer" is composed of a cluster of networked, loosely coupled computers; the resources offered by these computers are coordinated to solve large-scale computation problems

9. Cluster computing: a cluster computer is a group of interconnected computers (usually over a high-speed network) that work closely together

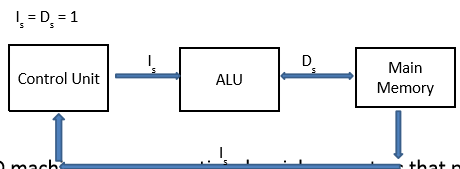

10. Von Neumann model: the classical von Neumann machine has a single CPU and a single main memory (RAM); the CPU has a single control unit, an arithmetic-logic unit (ALU), and a few registers

11. Von Neumann bottleneck: there is a single bus between memory and the CPU, so performance is limited by how fast instructions and data can be transferred between memory and registers

12. The first extension of the von Neumann model: pipelining

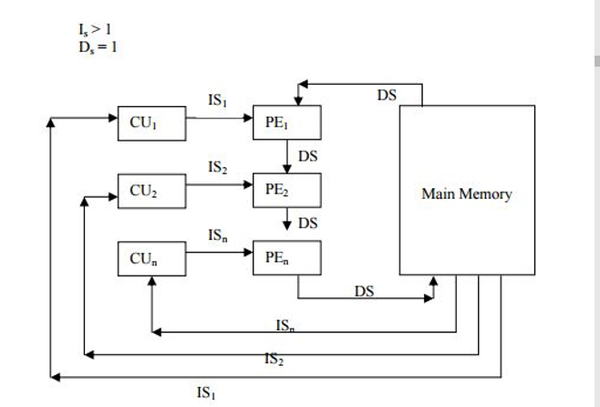

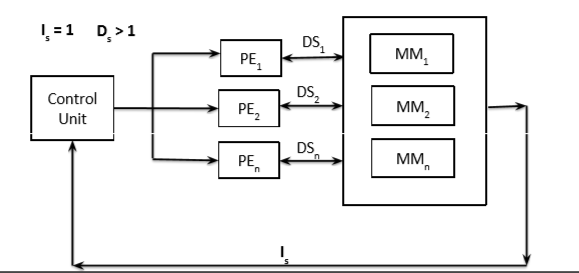

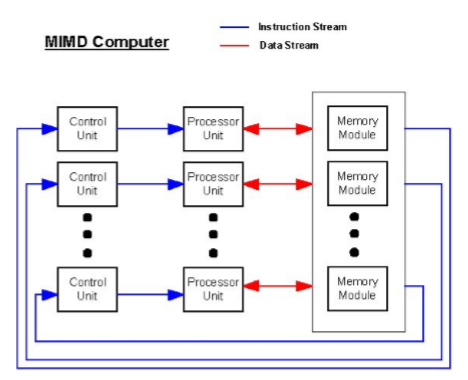
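The benefit of pipelining can be estimated with a small calculation. This sketch assumes an idealized k-stage pipeline where every stage takes one cycle and there are no stalls or hazards; the function names are hypothetical, and n must be at least 1.

```c
/* Idealized pipeline: k stages, one cycle per stage, no stalls (assumed). */
long unpipelined_cycles(long n, long k) {
    return n * k;                 /* each instruction runs all k stages alone */
}

long pipelined_cycles(long n, long k) {
    return k + (n - 1);           /* first result after k cycles, then 1 per cycle */
}

/* Speedup for n >= 1 instructions; approaches k as n grows. */
double pipeline_speedup(long n, long k) {
    return (double)unpipelined_cycles(n, k) / (double)pipelined_cycles(n, k);
}
```

For 100 instructions on a 5-stage pipeline this gives 500 cycles versus 104, a speedup of about 4.8, close to the stage count.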

13. Classification based on the instruction and data streams: Flynn's taxonomy, the best-known classification scheme for parallel computers

The instruction stream (I) and the data stream (D) can be either single (S) or multiple (M)

Four combinations: SISD, SIMD, MISD, MIMD

– SISD: single-instruction-single-data (most important member is a sequential computer)

– SIMD: single-instruction-multiple-data (one of the two most important in Flynn's taxonomy)

– MISD: multiple-instructions-single-data (relatively unused terminology)

– MIMD: multiple-instructions-multiple-data (the other of the two most important)

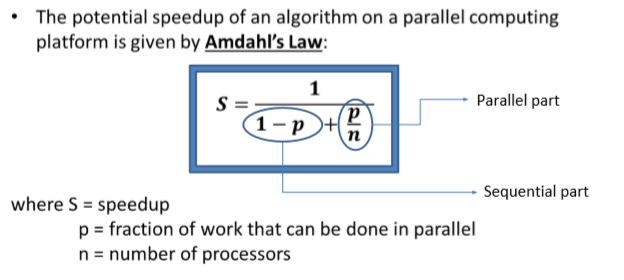

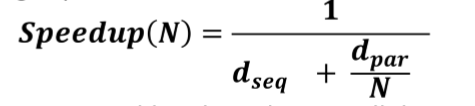

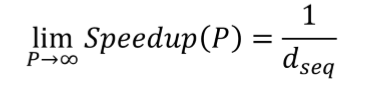

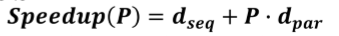

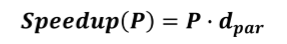

14. Amdahl's law: the problem size n is fixed

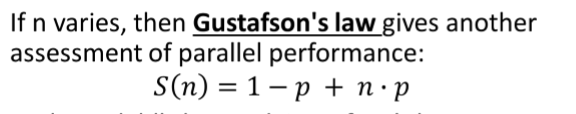
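The formula appears only in the figure above, so this sketch assumes the common form S(p) = 1 / (f + (1 - f) / p), where f is the fraction of the sequential run time that cannot be parallelized and p is the number of processors; both symbol names and the function name are chosen here.

```c
/* Amdahl's law (fixed problem size), in the assumed form
   S(p) = 1 / (f + (1 - f) / p), with f the serial fraction of the
   sequential run time and p the number of processors. */
double amdahl_speedup(double f, int p) {
    return 1.0 / (f + (1.0 - f) / p);
}
```

With f = 0.1 the speedup on 10 processors is about 5.26, and no number of processors can push it past 1/f = 10.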

15. Gustafson's law: the problem size n varies (it grows with the number of processors)
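A sketch of Gustafson's scaled speedup, assuming the common form S(p) = p - s(p - 1), where s is the serial fraction of the run time measured on the p-processor system; the symbol s and the function name are chosen here, not taken from these notes.

```c
/* Gustafson's law (scaled problem size), in the assumed form
   S(p) = p - s * (p - 1), with s the serial fraction of the run time
   observed on the p-processor system. */
double gustafson_speedup(double s, int p) {
    return p - s * (p - 1);
}
```

With s = 0.1 and p = 10 the scaled speedup is 9.1. It grows almost linearly with p because the parallel part of the work grows with the machine, although s here is measured on the parallel run rather than the sequential one as in Amdahl's law.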

16. Dependency Conditions:

• Data Dependency: two or more instructions share the same data.

• Control Dependency: instructions contain one or more control structures (if-then-else, if-then, if-else) that can change the content of data; the problem arises because the order of execution in control structures is not known until run time

• Resource Dependency: one or more resources are accessed by different instructions, and only one instruction can access a resource at a time

17. Data Dependency:

– Flow dependence: if instruction I2 follows I1 and the output of I1 becomes the input of I2, then I2 is said to be flow dependent on I1.

– Anti-dependence: instruction I2 follows I1 and the output of I2 overlaps with the input of I1 on the same data (I2 writes data that I1 reads).

– Output dependence: the outputs of the two instructions I1 and I2 overlap on the same data (both write it); the instructions are said to be output dependent.

– I/O dependence: read and write operations by two instructions are invoked on the same file.
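The three data dependences can be seen in a few lines of straight-line code. This is a hypothetical example: the function name and variables are chosen only to show each dependence between I1 and a later instruction.

```c
/* Hypothetical statements illustrating data dependences relative to I1. */
int dependence_demo(int b, int c) {
    int a, d;
    a = b + c;   /* I1: reads b, c; writes a                          */
    d = a * 2;   /* I2: flow dependent on I1 (reads a, written by I1)  */
    b = d - 1;   /* I3: anti-dependent on I1 (writes b, read by I1)    */
    a = b + 5;   /* I4: output dependent on I1 (both write a)          */
    return a + d;
}
```

For inputs b = 1 and c = 2 the result is 16. Swapping any dependent pair (for example running I3 before I1) would change it, which is why such instructions cannot be executed in parallel or reordered freely. Note that I3 is also flow dependent on I2, since it reads d.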

18. Bernstein's Conditions (two processes Pi and Pj can execute in parallel if all three hold):

1) If process Pi writes to a memory cell Mi, then no process Pj can read the cell Mi.

2) If process Pi reads from a memory cell Mi, then no process Pj can write to the cell Mi.

3) If process Pi writes to a memory cell Mi, then no process Pj can write to the cell Mi.
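The three conditions can be checked mechanically if each process is summarized by the set of cells it reads and the set it writes. This sketch assumes at most 32 cells, each represented by one bit of an unsigned mask; the type and function names are hypothetical.

```c
#include <stdbool.h>

/* Each bit stands for one memory cell (an assumption of this sketch). */
typedef unsigned int cellset;

/* Bernstein's conditions for processes with read sets r1, r2 and write
   sets w1, w2: they may run in parallel only if
   r1 and w2 share no cell, r2 and w1 share no cell, and w1 and w2 share no cell. */
bool bernstein_parallel(cellset r1, cellset w1, cellset r2, cellset w2) {
    return (r1 & w2) == 0 && (r2 & w1) == 0 && (w1 & w2) == 0;
}
```

For example, `z = x + y` and `w = x` may run in parallel (both only read x), while `z = x + y` and `w = z` may not, because the second reads a cell the first writes.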

19. Portions of code that are independent of one another can be parallelized.

20. Barrier: a synchronization method in which each process must stop at the barrier and cannot proceed until all other processes have reached it.

21. Shared-Memory Programming:

int private_x;

shared int sum = 0;

shared int s = 1; /* Binary semaphore guarding sum */

/* Compute private_x */

P(&s); /* Wait until s = 1, then set s = 0 (atomically) and enter the critical section */

sum = sum + private_x; /* Critical section */

V(&s); /* Set s = 1 and exit the critical section */

Barrier(); /* Wait until every process has added its value */

if (I am process 1)

printf("sum = %d\n", sum);

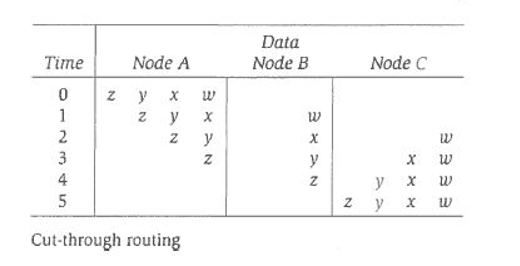

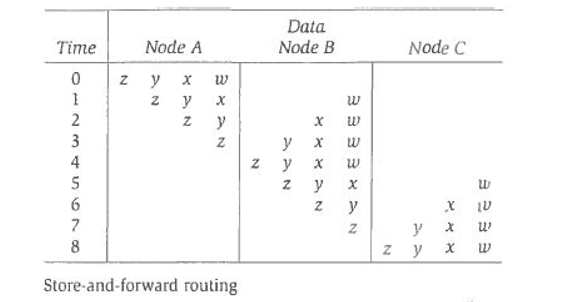

22. Routing: if two nodes are not directly connected, the data must be routed through intermediate nodes

– Store-and-forward routing: the packets of a message are collected one by one at each intermediate node; when all the pieces have been received, the message is transmitted, packet by packet, to the next node

– Cut-through routing: the pieces of a message are sent one by one, and an intermediate node forwards each piece without waiting for the rest of the packets
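The difference between the two schemes can be quantified with a simple cost model, assumed here and not given in these notes (it is the one used, for example, in Grama et al., Introduction to Parallel Computing). An m-word message crosses l links, with startup time ts, per-hop time th, and per-word transfer time tw. The parameter and function names are chosen for this sketch.

```c
/* Assumed cost model: ts = startup time, th = per-hop time,
   tw = per-word transfer time, m = message length in words, l = links. */

double store_and_forward_time(double ts, double th, double tw, double m, double l) {
    /* each intermediate node receives the whole message before forwarding,
       so the full transfer cost m * tw is paid on every link */
    return ts + (m * tw + th) * l;
}

double cut_through_time(double ts, double th, double tw, double m, double l) {
    /* pieces are forwarded immediately, so m * tw is paid only once */
    return ts + l * th + m * tw;
}
```

With ts = 10, th = 1, tw = 2, m = 100, and l = 4, store-and-forward costs 814 time units and cut-through 214: the message length term no longer multiplies the number of hops.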