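I remember running into a lot of pitfalls when I first dealt with this, so I am posting my code here for others to refer to. I won't go over how to create the Spring Boot project itself.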

I. Project code

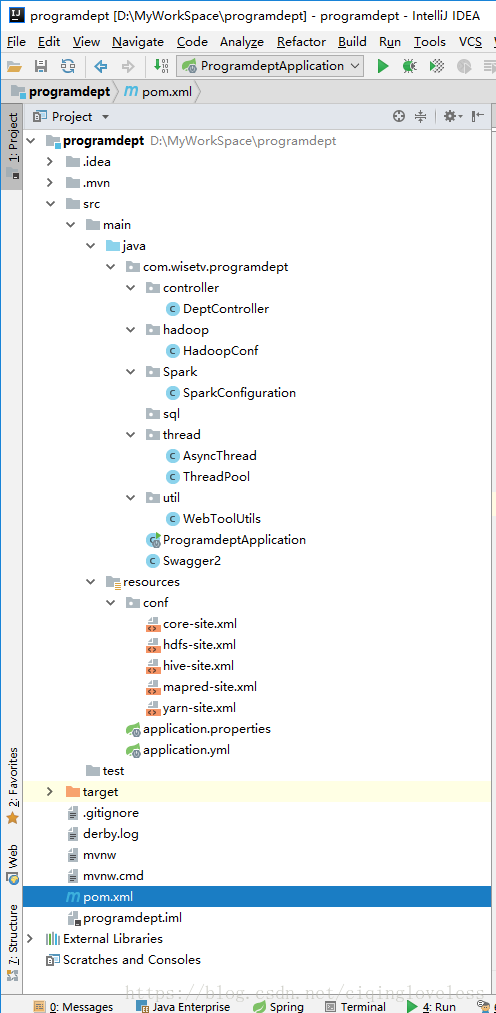

1 Project structure

2 pom.xml

Some of the dependencies could be trimmed; I have not tidied the file up.

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.wisetv</groupId>

<artifactId>programdept</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging>

<name>sparkroute</name>

<description>Demo project for Spring Boot</description>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.0.0.RELEASE</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<java.version>1.8</java.version>

<hadoop.version>2.9.0</hadoop.version>

<springboot.version>1.5.9.RELEASE</springboot.version>

<org.apache.hive.version>1.2.1</org.apache.hive.version>

<swagger.version>2.7.0</swagger.version>

<ojdbc6.version>11.2.0.1.0</ojdbc6.version>

<scala.version>2.11.12</scala.version>

<spark.version>2.3.0</spark.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

<exclusions>

<exclusion>

<groupId>org.apache.tomcat</groupId>

<artifactId>tomcat-jdbc</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>com.google.code.gson</groupId>

<artifactId>gson</artifactId>

<version>2.8.2</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>${org.apache.hive.version}</version>

<exclusions>

<exclusion>

<groupId>org.eclipse.jetty.aggregate</groupId>

<artifactId>jetty-all</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.hive</groupId>

<artifactId>hive-shims</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.parquet</groupId>

<artifactId>parquet-hadoop-bundle</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- hadoop -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.1.8</version>

</dependency>

<dependency>

<groupId>com.oracle</groupId>

<artifactId>ojdbc6</artifactId>

<version>${ojdbc6.version}</version>

</dependency>

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-swagger2</artifactId>

<version>${swagger.version}</version>

<exclusions>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-core</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-beans</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-context</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-context-support</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-aop</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-tx</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-orm</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-jdbc</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-web</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-webmvc</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-oxm</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-swagger-ui</artifactId>

<version>${swagger.version}</version>

</dependency>

<!-- scala -->

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

<!-- spark -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-graphx_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-hive_2.11</artifactId>

<version>${spark.version}</version>

<exclusions>

<exclusion>

<groupId>com.twitter</groupId>

<artifactId>parquet-hadoop-bundle</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mesos_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-yarn_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>24.0-jre</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.46</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>6.0.6</version>

</dependency>

<dependency>

<groupId>com.hadoop.compression.lzo</groupId>

<artifactId>LzoCodec</artifactId>

<version>0.4.20</version>

<scope>system</scope>

<systemPath>${basedir}/src/main/resources/lib/hadoop-lzo-0.4.20-SNAPSHOT.jar</systemPath>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>

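3 Hadoop configuration files

The Hadoop configuration files go under the resources folder: create a conf folder there and copy the cluster's Hadoop configuration files into it. One thing to watch out for: the parameters that refer to compression codec classes must be commented out, otherwise the project fails with errors.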

<!--<property>

<name>io.compression.codecs</name> <value>org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.DefaultCodec,com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec,org.apache.hadoop.io.compress.BZip2Codec</value>

</property>-->

<!--<property>

<name>io.compression.codec.lzo.class</name>

<value>com.hadoop.compression.lzo.LzoCodec</value>

</property>-->
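The codec properties above are commented out because the classes they name must be loadable by the application. As a rough sketch (not part of the original project), a small check like the following can show which class names in such a comma-separated list are not visible on the classpath. The class name CodecCheck and the method missingClasses are hypothetical names chosen for illustration.

```java
import java.util.ArrayList;
import java.util.List;

class CodecCheck {

    /** Returns the class names from a comma-separated list that cannot be found on the classpath. */
    static List<String> missingClasses(String commaSeparated) {
        List<String> missing = new ArrayList<>();
        for (String raw : commaSeparated.split(",")) {
            String name = raw.trim();
            if (name.isEmpty()) {
                continue;
            }
            try {
                // initialize=false: only check visibility, do not run static initializers
                Class.forName(name, false, CodecCheck.class.getClassLoader());
            } catch (ClassNotFoundException | LinkageError e) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Example input only; pass the value of io.compression.codecs from your own core-site.xml.
        String codecs = "java.lang.String,com.hadoop.compression.lzo.LzoCodec";
        System.out.println("Not on classpath: " + missingClasses(codecs));
    }
}
```

If the LZO classes show up as missing, leaving the properties commented out, as done above, is the simpler route.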

3.1 core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/app/hadoop/tmp/hadoop</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://sparkdis1:8020</value>

</property>

<property>

<name>dfs.client.failover.max.attempts</name>

<value>15</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<!--<property>

<name>io.compression.codecs</name> <value>org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.DefaultCodec,com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec,org.apache.hadoop.io.compress.BZip2Codec</value>

</property>-->

<!--<property>

<name>io.compression.codec.lzo.class</name>

<value>com.hadoop.compression.lzo.LzoCodec</value>

</property>-->

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

</configuration>

3.2 hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.rpc-address</name>

<value>sparkdis1:8020</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>sparkdis1:50070</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/hadoop/dfs/name,file:/app/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.namenode.edits.dir</name>

<value>file:/hadoop/dfs/name,file:/app/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/app/hadoop/dfs/data</value>

</property>

<property>

<name>dfs.datanode.handler.count</name>

<value>100</value>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>1024</value>

</property>

<property>

<name>dfs.datanode.max.xcievers</name>

<value>8096</value>

</property>

<property>

<name>dfs.client.block.write.replace-datanode-on-failure.enable</name>

<value>false</value>

</property>

<property>

<name>dfs.client.block.write.replace-datanode-on-failure.policy</name>

<value>NEVER</value>

</property>

</configuration>

3.3 hive-site.xml

This file is too large, so I won't post it here; just remember to copy your own hive-site.xml into the conf folder.

3.4 mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>sparkdis1:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>sparkdis1:19888</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>50</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx4096M</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx8192M</value>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>4096</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>8192</value>

</property>

<property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

<property>

<name>mapred.child.env</name>

<value>JAVA_LIBRARY_PATH=/app/hadoop-2.9.0/lib/native</value>

</property>

<!--<property>

<name>mapreduce.map.output.compress.codec</name>

<value>com.hadoop.compression.lzo.LzoCodec</value>

</property>-->

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>512</value>

</property>

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>100</value>

</property>

<property>

<name>mapred.reduce.tasks</name>

<value>4</value>

</property>

<property>

<name>mapred.map.tasks</name>

<value>20</value>

</property>

<property>

<name>mapred.child.java.opts</name>

<value>-Xmx4096m</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.memory.limit.percent</name>

<value>0.1</value>

</property>

<property>

<name>mapred.job.shuffle.input.buffer.percent</name>

<value>0.6</value>

</property>

</configuration>

3.5 yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>sparkdis1:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>sparkdis1:8030</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>sparkdis1:8033</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>sparkdis1:8031</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>sparkdis1:8088</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>15360</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>122880</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/app/hadoop/hadoop_data/local</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/app/hadoop/hadoop_data/logs</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>60</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>10</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>6</value>

</property>

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle,spark_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.spark_shuffle.class</name>

<value>org.apache.spark.network.yarn.YarnShuffleService</value>

</property>

</configuration>

4 Custom port

application.yml

server:

port: 9706

max-http-header-size: 100000

spring:

application:

name: service-programdept

servlet:

multipart:

max-file-size: 50000Mb

max-request-size: 50000Mb

enabled: true

5 Project code

5.1 Swagger2

I added Swagger2 to the project for testing. It is optional; include it or not as you prefer.

ProgramdeptApplication.java

package com.wisetv.programdept;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.EnableAutoConfiguration;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.context.annotation.Bean;

import org.springframework.web.cors.CorsConfiguration;

import org.springframework.web.cors.UrlBasedCorsConfigurationSource;

import org.springframework.web.filter.CorsFilter;

import springfox.documentation.swagger2.annotations.EnableSwagger2;

@SpringBootApplication

@EnableSwagger2

@EnableAutoConfiguration

public class ProgramdeptApplication {

public static void main(String[] args) {

SpringApplication.run(ProgramdeptApplication.class, args);

}

@Bean

public CorsFilter corsFilter() {

final UrlBasedCorsConfigurationSource source = new UrlBasedCorsConfigurationSource();

final CorsConfiguration config = new CorsConfiguration();

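config.setAllowCredentials(true); // allow cookies on cross-origin requests

config.addAllowedOrigin("*"); // origins allowed to send requests to this server; * allows all. In Spring MVC, * is automatically converted to the Origin of the current request header

config.addAllowedHeader("*"); // allowed request headers; * allows all

config.setMaxAge(18000L); // cache time of the preflight request in seconds; identical cross-origin requests are not preflighted again within this period

config.addAllowedMethod("OPTIONS"); // allowed request methods; * would allow all

config.addAllowedMethod("HEAD");

config.addAllowedMethod("GET"); // allow GET requests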

config.addAllowedMethod("PUT");

config.addAllowedMethod("POST");

config.addAllowedMethod("DELETE");

config.addAllowedMethod("PATCH");

source.registerCorsConfiguration("/**", config);

return new CorsFilter(source);

}

}

Swagger2.java

package com.wisetv.programdept;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import springfox.documentation.builders.ApiInfoBuilder;

import springfox.documentation.builders.PathSelectors;

import springfox.documentation.builders.RequestHandlerSelectors;

import springfox.documentation.service.ApiInfo;

import springfox.documentation.spi.DocumentationType;

import springfox.documentation.spring.web.plugins.Docket;

@Configuration

public class Swagger2 {

@Bean

public Docket createRestApi() {

return new Docket(DocumentationType.SWAGGER_2)

.apiInfo(apiInfo())

.select()

.apis(RequestHandlerSelectors.basePackage("com.wisetv.programdept")) // must match the package that contains the controllers

.paths(PathSelectors.any())

.build();

}

private ApiInfo apiInfo() {

return new ApiInfoBuilder()

.title("Spark API documentation")

.description("Simple and elegant RESTful style")

// .termsOfServiceUrl("http://blog.csdn.net/saytime")

.version("1.0")

.build();

}

}

5.2 SparkConfiguration.java

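These are the Spark startup parameters. There are many pitfalls here; unless you are sure of what you are doing, try not to comment out any of my parameters.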

package com.wisetv.programdept.Spark;

import com.wisetv.programdept.util.WebToolUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.sql.SparkSession;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.context.annotation.Bean;

import org.springframework.stereotype.Service;

import java.net.SocketException;

import java.net.UnknownHostException;

import java.util.Iterator;

import java.util.Map;

@Service

public class SparkConfiguration {

@Bean

public SparkConf getSparkConf() {

SparkConf sparkConf = new SparkConf().setAppName("programdept")

.setMaster("yarn-client")

.set("spark.executor.uri", "hdfs://sparkdis1:8020/spark/binary/spark-sql.tar.gz")

.set("spark.testing.memory", "2147480000")

.set("spark.sql.hive.verifyPartitionPath", "true")

.set("spark.yarn.executor.memoryOverhead", "2048m")

.set("spark.dynamicAllocation.enabled", "true")

.set("spark.shuffle.service.enabled", "true")

.set("spark.dynamicAllocation.executorIdleTimeout", "60")

.set("spark.dynamicAllocation.cachedExecutorIdleTimeout", "18000")

.set("spark.dynamicAllocation.initialExecutors", "3")

.set("spark.dynamicAllocation.maxExecutors", "10")

.set("spark.dynamicAllocation.minExecutors", "3")

.set("spark.dynamicAllocation.schedulerBacklogTimeout", "10")

.set("spark.eventLog.enabled", "true")

.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

.set("spark.hadoop.yarn.resourcemanager.hostname", "sparkdis1")

.set("spark.hadoop.yarn.resourcemanager.address", "sparkdis1:8032")

.set("spark.hadoop.fs.defaultFS", "hdfs://sparkdis1:8020")

.set("spark.yarn.access.namenodes", "hdfs://sparkdis1:8020")

.set("spark.history.fs.logDirectory", "hdfs://sparkdis1:8020/spark/historyserverforSpark")

.set("spark.cores.max","10")

.set("spark.mesos.coarse","true")

.set("spark.executor.cores","2")

.set("spark.executor.memory","4g")

.set("spark.eventLog.dir", "hdfs://sparkdis1:8020/spark/eventLog")

.set("spark.sql.parquet.cacheMetadata","false")

.set("spark.sql.hive.verifyPartitionPath", "true")

// Uncomment the line below after packaging. setJars is required: build a jar without bundled dependencies so that Spark can ship it to YARN

// .setJars(new String[]{"/app/springserver/addjars/sparkroute.jar","/app/springserver/addjars/fastjson-1.2.46.jar"})

.set("spark.yarn.stagingDir", "hdfs://sparkdis1/user/hadoop/");

// .set("spark.sql.hive.metastore.version","2.1.0");

String hostname = null;

try {

hostname = WebToolUtils.getLocalIP();

} catch (SocketException e) {

e.printStackTrace();

} catch (UnknownHostException e) {

e.printStackTrace();

}

sparkConf.set("spark.driver.host", hostname);

return sparkConf;

}

@Bean

public SparkSession getSparkSession(@Autowired SparkConf sparkConf, @Autowired Configuration conf) {

SparkSession.Builder config = SparkSession.builder();

Iterator<Map.Entry<String, String>> iterator = conf.iterator();

while (iterator.hasNext()) {

Map.Entry<String, String> next = iterator.next();

config.config(next.getKey(), next.getValue());

}

SparkSession sparkSession = config.config(sparkConf).enableHiveSupport().getOrCreate();

return sparkSession;

}

@Bean

public JavaSparkContext getSparkContext(@Autowired SparkSession sparkSession){

JavaSparkContext javaSparkContext = new JavaSparkContext(sparkSession.sparkContext());

return javaSparkContext;

}

}
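The dynamic-allocation settings in the class above depend on each other: in Spark 2.x, spark.dynamicAllocation.enabled requires spark.shuffle.service.enabled (and the spark_shuffle auxiliary service configured in yarn-site.xml), and minExecutors must not exceed maxExecutors. The following is only an illustrative sketch of checking such a set of values before building the SparkConf. It works on a plain Map so it runs without Spark on the classpath; the class name DynamicAllocationCheck and the method problems are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class DynamicAllocationCheck {

    /** Returns human-readable problems found in the dynamic-allocation settings; empty if none. */
    static List<String> problems(Map<String, String> conf) {
        List<String> problems = new ArrayList<>();
        boolean dynamic = Boolean.parseBoolean(
                conf.getOrDefault("spark.dynamicAllocation.enabled", "false"));
        if (!dynamic) {
            return problems; // nothing to check when dynamic allocation is off
        }
        boolean shuffleService = Boolean.parseBoolean(
                conf.getOrDefault("spark.shuffle.service.enabled", "false"));
        if (!shuffleService) {
            problems.add("spark.shuffle.service.enabled must be true when dynamic allocation is enabled");
        }
        // Spark's documented defaults: minExecutors = 0, maxExecutors = unbounded
        int min = Integer.parseInt(conf.getOrDefault("spark.dynamicAllocation.minExecutors", "0"));
        int max = Integer.parseInt(conf.getOrDefault(
                "spark.dynamicAllocation.maxExecutors", String.valueOf(Integer.MAX_VALUE)));
        if (min > max) {
            problems.add("minExecutors (" + min + ") is greater than maxExecutors (" + max + ")");
        }
        return problems;
    }

    public static void main(String[] args) {
        // Same values as in SparkConfiguration above
        Map<String, String> conf = new HashMap<>();
        conf.put("spark.dynamicAllocation.enabled", "true");
        conf.put("spark.shuffle.service.enabled", "true");
        conf.put("spark.dynamicAllocation.minExecutors", "3");
        conf.put("spark.dynamicAllocation.maxExecutors", "10");
        System.out.println(problems(conf));
    }
}
```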

5.3 HadoopConf.java

This class loads the Hadoop configuration files.

package com.wisetv.programdept.hadoop;

import org.apache.hadoop.conf.Configuration;

import org.springframework.context.annotation.Bean;

@org.springframework.context.annotation.Configuration

public class HadoopConf {

@Bean

public Configuration getHadoopConf() {

Configuration conf = new Configuration();

conf.addResource("conf/core-site.xml");

conf.addResource("conf/hdfs-site.xml");

conf.addResource("conf/hive-site.xml");

conf.addResource("conf/yarn-site.xml");

conf.addResource("conf/mapred-site.xml");

return conf;

}

}
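HadoopConf looks the five XML files up by name on the classpath. As far as I know, Hadoop's Configuration skips a named resource quietly when it cannot be found, so a misplaced conf folder may go unnoticed until Spark connects to the wrong cluster address. A minimal sketch of a startup check, using only the JDK, could look like this; ConfResourceCheck and missing are hypothetical names, and the assumption is that the files sit under conf/ on the classpath as described above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ConfResourceCheck {

    /** Returns the resource names that cannot be found on the classpath. */
    static List<String> missing(List<String> names) {
        ClassLoader cl = Thread.currentThread().getContextClassLoader();
        if (cl == null) {
            cl = ConfResourceCheck.class.getClassLoader();
        }
        List<String> missing = new ArrayList<>();
        for (String name : names) {
            if (cl.getResource(name) == null) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        List<String> expected = Arrays.asList(
                "conf/core-site.xml",
                "conf/hdfs-site.xml",
                "conf/hive-site.xml",
                "conf/yarn-site.xml",
                "conf/mapred-site.xml");
        System.out.println("Missing configuration files: " + missing(expected));
    }
}
```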

5.4 WebToolUtils.java

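Older Spark versions picked up the local machine's IP address, while newer versions use the machine's hostname. There are two ways to deal with this: configure your machine's hostname on all the Spark servers, or do what my code does and set the parameter explicitly with sparkConf.set("spark.driver.host", hostname). The class below is a utility for getting the IP address. It may misbehave when the machine has several IP addresses; in that case you can hard-code the parameter above or work out your own solution.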
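For the multi-IP case mentioned above, one possible approach (a sketch, not what the original project does) is to let an explicit override win and otherwise prefer an address from a known subnet. The environment variable SPARK_DRIVER_HOST, the prefix 192.168., and the names DriverHostResolver, choose and localIpv4Addresses are all assumptions made for this illustration.

```java
import java.net.Inet4Address;
import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.SocketException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Enumeration;
import java.util.List;

class DriverHostResolver {

    /** Override wins; otherwise the first candidate with the preferred prefix; otherwise the first candidate; null if none. */
    static String choose(String override, List<String> candidates, String preferredPrefix) {
        if (override != null && !override.trim().isEmpty()) {
            return override.trim();
        }
        if (preferredPrefix != null) {
            for (String ip : candidates) {
                if (ip.startsWith(preferredPrefix)) {
                    return ip;
                }
            }
        }
        return candidates.isEmpty() ? null : candidates.get(0);
    }

    /** Collects the non-loopback IPv4 addresses of all interfaces that are up. */
    static List<String> localIpv4Addresses() throws SocketException {
        List<String> result = new ArrayList<>();
        Enumeration<NetworkInterface> interfaces = NetworkInterface.getNetworkInterfaces();
        if (interfaces == null) {
            return result;
        }
        for (NetworkInterface nif : Collections.list(interfaces)) {
            if (!nif.isUp() || nif.isLoopback()) {
                continue;
            }
            for (InetAddress addr : Collections.list(nif.getInetAddresses())) {
                if (addr instanceof Inet4Address && !addr.isLoopbackAddress()) {
                    result.add(addr.getHostAddress());
                }
            }
        }
        return result;
    }

    public static void main(String[] args) throws SocketException {
        // SPARK_DRIVER_HOST and the subnet prefix are hypothetical; adjust to your environment.
        String host = choose(System.getenv("SPARK_DRIVER_HOST"), localIpv4Addresses(), "192.168.");
        System.out.println("spark.driver.host candidate: " + host);
    }
}
```

Because choose can return null when no address is found, the caller should check the result before passing it to sparkConf.set.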

package com.wisetv.programdept.util;

import org.apache.http.HttpEntity;

import org.apache.http.NameValuePair;

import org.apache.http.client.entity.UrlEncodedFormEntity;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpPost;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.message.BasicNameValuePair;

import org.apache.http.util.EntityUtils;

import javax.servlet.http.HttpServletRequest;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.io.OutputStream;

import java.net.*;

import java.util.ArrayList;

import java.util.Enumeration;

import java.util.List;

/**

* Common utility class

*

* @author 席红蕾

* @date 2016-09-27

* @version 1.0

*/

public class WebToolUtils {

/**

* Gets the local IP address

*

* @throws SocketException

*/

public static String getLocalIP() throws UnknownHostException, SocketException {

if (isWindowsOS()) {

return InetAddress.getLocalHost().getHostAddress();

} else {

return getLinuxLocalIp();

}

}

/**

* Checks whether the operating system is Windows

*

* @return

*/

public static boolean isWindowsOS() {

boolean isWindowsOS = false;

String osName = System.getProperty("os.name");

if (osName.toLowerCase().indexOf("windows") > -1) {

isWindowsOS = true;

}

return isWindowsOS;

}

/**

* Gets the local host name

*/

public static String getLocalHostName() throws UnknownHostException {

return InetAddress.getLocalHost().getHostName();

}

/**

* Gets the IP address on Linux

*

* @return the IP address

* @throws SocketException

*/

private static String getLinuxLocalIp() throws SocketException {

String ip = "";

try {

for (Enumeration<NetworkInterface> en = NetworkInterface.getNetworkInterfaces(); en.hasMoreElements();) {

NetworkInterface intf = en.nextElement();

String name = intf.getName();

if (!name.contains("docker") && !name.contains("lo")) {

for (Enumeration<InetAddress> enumIpAddr = intf.getInetAddresses(); enumIpAddr.hasMoreElements();) {

InetAddress inetAddress = enumIpAddr.nextElement();

if (!inetAddress.isLoopbackAddress()) {

String ipaddress = inetAddress.getHostAddress().toString();

if (!ipaddress.contains("::") && !ipaddress.contains("0:0:") && !ipaddress.contains("fe80")) {

ip = ipaddress;

}

}

}

}

}

} catch (SocketException ex) {

System.out.println("Failed to get the IP address");

ip = "127.0.0.1";

ex.printStackTrace();

}

return ip;

}

/**

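* Gets the user's real IP address. request.getRemoteAddr() is not used directly because the user may be behind a proxy that hides the real IP address.

*

* After passing through several reverse proxies, X-Forwarded-For holds not one value but a chain of IPs. Which one is the real client IP?

* The answer is the first valid IP in X-Forwarded-For that is not "unknown".

*

* For example: X-Forwarded-For: 192.168.1.110, 192.168.1.120, 192.168.1.130,

* 192.168.1.100

*

* The user's real IP is: 192.168.1.110

*

* Note: this method returns the header value as received and does not split the chain itself.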

*

* @param request

* @return

*/

public static String getIpAddress(HttpServletRequest request) {

String ip = request.getHeader("x-forwarded-for");

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("Proxy-Client-IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("WL-Proxy-Client-IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("HTTP_CLIENT_IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("HTTP_X_FORWARDED_FOR");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getRemoteAddr();

}

return ip;

}

/**

* Sends a GET request to the given URL

*

* @param url

* the URL to send the request to

* @param param

* request parameters, in the form name1=value1&name2=value2

* @return the response of the remote resource the URL points to

*/

// public static String sendGet(String url, String param) {

// String result = "";

// BufferedReader in = null;

// try {

// String urlNameString = url + "?" + param;

// URL realUrl = new URL(urlNameString);

// // open the connection to the URL

// URLConnection connection = realUrl.openConnection();

// // set the common request properties

// connection.setRequestProperty("accept", "*/*");

// connection.setRequestProperty("connection", "Keep-Alive");

// connection.setRequestProperty("user-agent", "Mozilla/4.0 (compatible;

// MSIE 6.0; Windows NT 5.1;SV1)");

// // establish the actual connection

// connection.connect();

// // get all response header fields

// Map<String, List<String>> map = connection.getHeaderFields();

// // iterate over all response header fields

// for (String key : map.keySet()) {

// System.out.println(key + "--->" + map.get(key));

// }

// // create a BufferedReader to read the response of the URL

// in = new BufferedReader(new

// InputStreamReader(connection.getInputStream()));

// String line;

// while ((line = in.readLine()) != null) {

// result += line;

// }

// } catch (Exception e) {

// System.out.println("Exception while sending GET request! " + e);

// e.printStackTrace();

// }

// // use a finally block to close the input stream

// finally {

// try {

// if (in != null) {

// in.close();

// }

// } catch (Exception e2) {

// e2.printStackTrace();

// }

// }

// return result;

// }

/**

* Sends a POST request to the given URL

*

* @param url

* the URL to send the request to

* @param param

* request parameters, in the form name1=value1&name2=value2

* @return the response of the remote resource

*/

public static void sendPost(String pathUrl, String name, String pwd, String phone, String content) {

// PrintWriter out = null;

// BufferedReader in = null;

// String result = "";

// try {

// URL realUrl = new URL(url);

// // open the connection to the URL

// URLConnection conn = realUrl.openConnection();

// // set the common request properties

// conn.setRequestProperty("accept", "*/*");

// conn.setRequestProperty("connection", "Keep-Alive");

// conn.setRequestProperty("user-agent",

// "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1;SV1)");

// // the following two lines are required for a POST request

// conn.setDoOutput(true);

// conn.setDoInput(true);

// // get the output stream of the URLConnection object

// out = new PrintWriter(conn.getOutputStream());

// // send the request parameters

// out.print(param);

// // flush the output stream buffer

// out.flush();

// // create a BufferedReader to read the response of the URL

// in = new BufferedReader(

// new InputStreamReader(conn.getInputStream()));

// String line;

// while ((line = in.readLine()) != null) {

// result += line;

// }

// } catch (Exception e) {

// System.out.println("Exception while sending POST request! " + e);

// e.printStackTrace();

// }

// // use a finally block to close the output and input streams

// finally{

// try{

// if(out!=null){

// out.close();

// }

// if(in!=null){

// in.close();

// }

// }

// catch(IOException ex){

// ex.printStackTrace();

// }

// }

// return result;

try {

// open the connection

URL url = new URL(pathUrl);

HttpURLConnection httpConn = (HttpURLConnection) url.openConnection();

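// set the connection properties

httpConn.setDoOutput(true);// use the URL connection for output

httpConn.setDoInput(true);// use the URL connection for input

httpConn.setUseCaches(false);// ignore caches

httpConn.setRequestMethod("POST");// set the request method

String requestString = "data the client sends to the server as a stream...";

// set the request properties

// get the request bytes; the encoding of the request stream must match the encoding the server uses to read it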

byte[] requestStringBytes = requestString.getBytes("utf-8");

httpConn.setRequestProperty("Content-length", "" + requestStringBytes.length);

httpConn.setRequestProperty("Content-Type", " application/x-www-form-urlencoded");

httpConn.setRequestProperty("Connection", "Keep-Alive");// keep the connection alive

httpConn.setRequestProperty("Accept", "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8");

httpConn.setRequestProperty("Accept-Encoding", "gzip, deflate");

httpConn.setRequestProperty("Accept-Language", "zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3");

httpConn.setRequestProperty("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:49.0) Gecko/20100101 Firefox/49.0");

httpConn.setRequestProperty("Upgrade-Insecure-Requests", "1");

httpConn.setRequestProperty("account", name);

httpConn.setRequestProperty("passwd", pwd);

httpConn.setRequestProperty("phone", phone);

httpConn.setRequestProperty("content", content);

// open the output stream and write the data

OutputStream outputStream = httpConn.getOutputStream();

outputStream.write(requestStringBytes);

outputStream.close();

// get the response status

int responseCode = httpConn.getResponseCode();

if (HttpURLConnection.HTTP_OK == responseCode) {// request succeeded

// process the data when the response is OK

StringBuffer sb = new StringBuffer();

String readLine;

BufferedReader responseReader;

// read the response stream; the encoding must match the one the server writes

responseReader = new BufferedReader(new InputStreamReader(httpConn.getInputStream(), "utf-8"));

while ((readLine = responseReader.readLine()) != null) {

sb.append(readLine).append("\n");

}

responseReader.close();

}

} catch (Exception ex) {

ex.printStackTrace();

}

}

/**

* Executes an HTTP POST request and returns the HTML of the response

*

* @param url

* the URL to request

* @param params

* the query parameters of the request, may be null

* @return the HTML of the response

*/

public static void doPost(String url, String name, String pwd, String phone, String content) {

// create the default httpClient instance

CloseableHttpClient httpclient = HttpClients.createDefault();

// create the HttpPost

HttpPost httppost = new HttpPost(url);

// create the parameter list

List<NameValuePair> formparams = new ArrayList<NameValuePair>();

formparams.add(new BasicNameValuePair("account", name));

formparams.add(new BasicNameValuePair("passwd", pwd));

formparams.add(new BasicNameValuePair("phone", phone));

formparams.add(new BasicNameValuePair("content", content));

UrlEncodedFormEntity uefEntity;

try {

uefEntity = new UrlEncodedFormEntity(formparams, "UTF-8");

httppost.setEntity(uefEntity);

System.out.println("executing request " + httppost.getURI());

CloseableHttpResponse response = httpclient.execute(httppost);

try {

HttpEntity entity = response.getEntity();

if (entity != null) {

System.out.println("--------------------------------------");

System.out.println("Response content: " + EntityUtils.toString(entity, "UTF-8"));

System.out.println("--------------------------------------");

}

} finally {

response.close();

}

} catch (Exception e) {

e.printStackTrace();

} finally {

// close the connection and release resources

try {

httpclient.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

5.5 DeptController.java

The controller class.

package com.wisetv.programdept.controller;

import com.google.gson.Gson;

import io.swagger.annotations.Api;

import io.swagger.annotations.ApiImplicitParam;

import io.swagger.annotations.ApiImplicitParams;

import io.swagger.annotations.ApiOperation;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.SparkSession;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestMethod;

import org.springframework.web.bind.annotation.ResponseBody;

import org.springframework.web.bind.annotation.RestController;

import java.util.List;

import java.util.stream.Collectors;

@RestController

@ResponseBody

@Api(value = "API controller")

public class DeptController {

@Autowired

SparkSession sparkSession;

Gson gson = new Gson();

@ApiOperation(value = "Test endpoint", notes = "Test endpoint")

@RequestMapping(value = {"/test"}, method = {RequestMethod.GET, RequestMethod.POST})

public String test(){

Dataset<Row> sql = sparkSession.sql("select data from mobile.noreplace limit 1");

List<Object> collect = sql.collectAsList().stream().map(x -> x.getString(0)).collect(Collectors.toList());

return gson.toJson(collect);

}

}

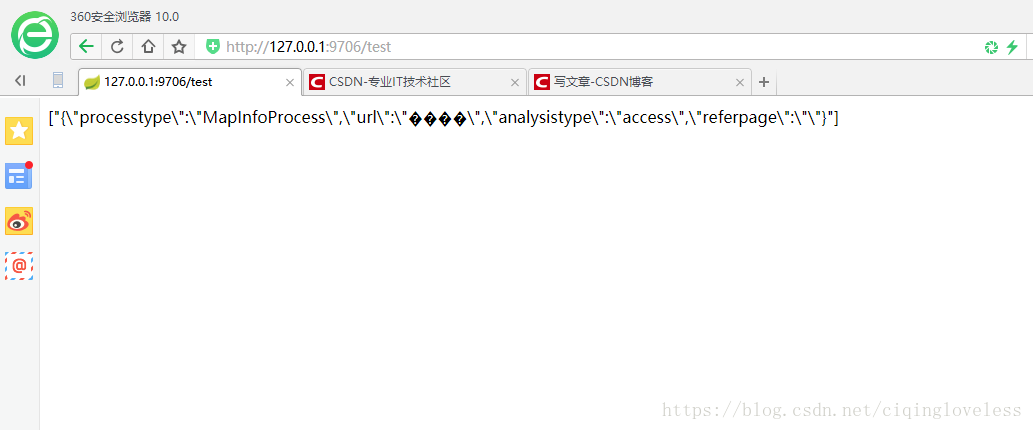

II. Testing

Start the project in IDEA and open the page shown below.

III. Deployment

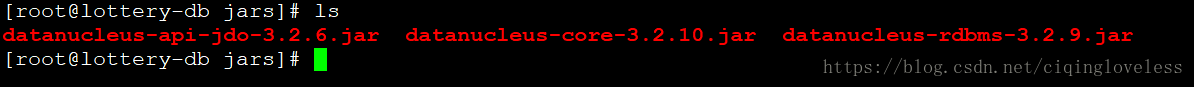

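Tuning the parameters above involves plenty of pitfalls, but deploying the jar involves even more. Here is the java command I use for deployment.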

#!/bin/sh

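nohup java -cp sparkroute-0.0.1-SNAPSHOT.jar:jars/* org.springframework.boot.loader.JarLauncher > sparkroute.log &

The :jars/* part is the important bit: the Hive dependencies include several OSGi bundles, so depending on your Hive version the following jars have to be added,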