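Developers who are comfortable with Java often find, when building Spark applications, that Spark's documentation for its Java API is incomplete or that a given API has no Java counterpart at all. In those cases, being able to write the Spark parts directly in Scala inside a Java project, while still using Java for the project's other requirements, makes Spark development somewhat easier. This post explores how to set up a development environment that combines Java, Scala, Spark, and Maven.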

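1. Download the Scala SDK

Download the SDK directly from http://www.scala-lang.org/download/. The latest stable release at the time of writing is 2.11.7; after downloading, simply unpack it. (The pom in step 3 pins Scala 2.10.5 to match the Spark _2.10 artifacts, so the Maven build compiles with that version.)

(Later, when you create a source file with the .scala extension in IntelliJ IDEA, the IDE detects it and prompts you to set up a Scala SDK. Follow the prompt and point the SDK directory at the unpacked directory.)

You can also configure the Scala SDK manually: in IDEA, File => Project Structure... => Libraries => +...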

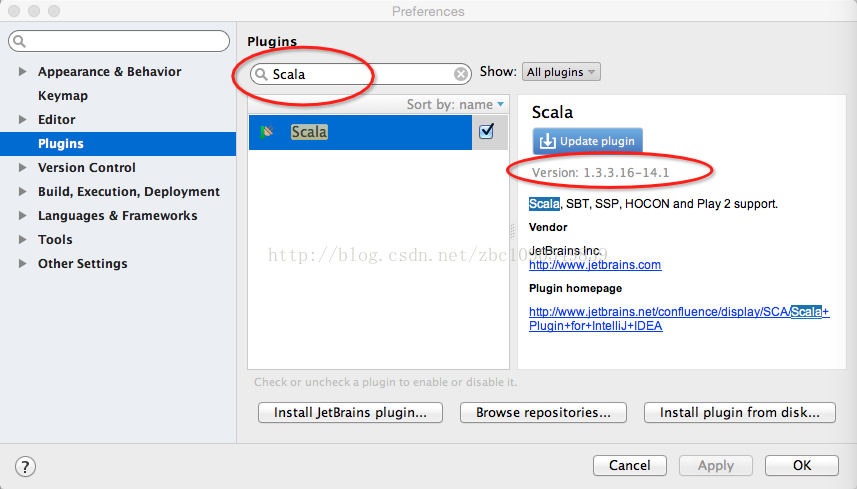

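2. Install the Scala plugin for IntelliJ IDEA

As shown in the figure above, search for Scala under Plugins and install it. If you have no internet access, or the connection is too slow, you can download the plugin's zip package manually from http://plugins.jetbrains.com/plugin/?idea&id=1347. When downloading manually, pay close attention to the version: it must match the version of your local IntelliJ IDEA, otherwise the plugin cannot be installed. Once the download finishes, click "Install plugin from disk..." in the dialog shown above and select the plugin zip.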

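3. Integrating with Maven

To package the project with Maven, configure scala-maven-plugin in the pom file. Because this is a Spark project and the jar has to be built as an executable jar, the pom also needs maven-assembly-plugin and maven-shade-plugin, with mainClass set. The pom below was arrived at through experimentation and is known to work; to reuse it, you only need to add or remove dependencies and point mainClass at your own entry class.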

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>my-project-groupid</groupId>

<artifactId>sparkTest</artifactId>

<packaging>jar</packaging>

<version>1.0-SNAPSHOT</version>

<name>sparkTest</name>

<url>http://maven.apache.org</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<hbase.version>0.98.3</hbase.version>

<!--<spark.version>1.3.1</spark.version>-->

<spark.version>1.6.0</spark.version>

<jdk.version>1.7</jdk.version>

<scala.version>2.10.5</scala.version>

<!--<scala.maven.version>2.11.1</scala.maven.version>-->

</properties>

<repositories>

<repository>

<id>repo1.maven.org</id>

<url>https://repo1.maven.org/maven2</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>repository.jboss.org</id>

<url>http://repository.jboss.org/nexus/content/groups/public/</url>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>cloudhopper</id>

<name>Repository for Cloudhopper</name>

<url>http://maven.cloudhopper.com/repos/third-party/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>mvnr</id>

<name>Repository maven</name>

<url>http://mvnrepository.com/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>scala</id>

<name>Scala Tools</name>

<url>https://mvnrepository.com/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>scala</id>

<name>Scala Tools</name>

<url>https://mvnrepository.com/</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

</pluginRepositories>

<dependencies>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-compiler</artifactId>

<version>${scala.version}</version>

<scope>compile</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/javax.mail/javax.mail-api -->

<dependency>

<groupId>javax.mail</groupId>

<artifactId>javax.mail-api</artifactId>

<version>1.4.7</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-core_2.10 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.10</artifactId>

<version>${spark.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-sql_2.10 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.10</artifactId>

<version>${spark.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-streaming_2.10 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.10</artifactId>

<version>${spark.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-mllib_2.10 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mllib_2.10</artifactId>

<version>${spark.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-hive_2.10 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-hive_2.10</artifactId>

<version>${spark.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-graphx_2.10 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-graphx_2.10</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.30</version>

</dependency>

<!--<dependency>-->

<!--<groupId>org.spark-project.akka</groupId>-->

<!--<artifactId>akka-actor_2.10</artifactId>-->

<!--<version>2.3.4-spark</version>-->

<!--</dependency>-->

<!--<dependency>-->

<!--<groupId>org.spark-project.akka</groupId>-->

<!--<artifactId>akka-remote_2.10</artifactId>-->

<!--<version>2.3.4-spark</version>-->

<!--</dependency>-->

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>14.0.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.3</version>

</dependency>

<dependency>

<groupId>p6spy</groupId>

<artifactId>p6spy</artifactId>

<version>1.3</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-math3</artifactId>

<version>3.3</version>

</dependency>

<dependency>

<groupId>org.jdom</groupId>

<artifactId>jdom</artifactId>

<version>2.0.2</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>2.6.0</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hbase/hbase-client -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>0.98.6-hadoop2</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hbase/hbase -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase</artifactId>

<version>0.98.6-hadoop2</version>

<type>pom</type>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hbase/hbase-common -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-common</artifactId>

<version>0.98.6-hadoop2</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hbase/hbase-server -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>0.98.6-hadoop2</version>

</dependency>

<dependency>

<groupId>org.testng</groupId>

<artifactId>testng</artifactId>

<version>6.8.8</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.jaxrs</groupId>

<artifactId>jackson-jaxrs-json-provider</artifactId>

<version>2.4.4</version>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

<version>2.4.4</version>

</dependency>

<dependency>

<groupId>net.sf.json-lib</groupId>

<artifactId>json-lib</artifactId>

<version>2.4</version>

<classifier>jdk15</classifier>

</dependency>

</dependencies>

<build>

<plugins>

<!-- The packaged project must be submitted to Spark with spark-submit; do not run the jar with java -jar -->

<plugin>

<artifactId>maven-assembly-plugin</artifactId>

<configuration>

<appendAssemblyId>false</appendAssemblyId>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

<archive>

<manifest>

<mainClass>test.HelloWorld</mainClass>

</manifest>

</archive>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>assembly</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.1</version>

<configuration>

<source>${jdk.version}</source>

<target>${jdk.version}</target>

<encoding>${project.build.sourceEncoding}</encoding>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.1</version>

<configuration>

<createDependencyReducedPom>false</createDependencyReducedPom>

</configuration>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<shadedArtifactAttached>true</shadedArtifactAttached>

<shadedClassifierName>allinone</shadedClassifierName>

<artifactSet>

<includes>

<include>*:*</include>

</includes>

</artifactSet>

<filters>

<filter>

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<transformers>

<transformer

implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">

<resource>reference.conf</resource>

</transformer>

<transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">

<mainClass>test.HelloWorld</mainClass>

</transformer>

</transformers>

</configuration>

</execution>

</executions>

</plugin>

<!-- Build circular dependencies between Java and Scala -->

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>3.2.0</version>

<executions>

<execution>

<id>compile-scala</id>

<phase>compile</phase>

<goals>

<goal>add-source</goal>

<goal>compile</goal>

</goals>

</execution>

<execution>

<id>test-compile-scala</id>

<phase>test-compile</phase>

<goals>

<goal>add-source</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

<configuration>

<scalaVersion>${scala.version}</scalaVersion>

</configuration>

</plugin>

</plugins>

</build>

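</project>

The project's directory layout largely follows Maven's default conventions. The only difference is an additional scala directory under src, alongside the java one, which mainly serves to keep the Java and Scala sources organized separately.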
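As a rough sketch of that layout, the directories could be created as follows. This assumes the scala directory sits at src/main/scala (the default source directory that scala-maven-plugin's add-source goal registers) and uses the test package from the example classes below; adjust both to your own project.

```shell
# Assumed layout: Java and Scala sources side by side under src/main.
# "test" is the package used by the example classes in this post.
mkdir -p src/main/java/test src/main/scala/test

# Matching test source roots (optional; only needed if you write tests).
mkdir -p src/test/java src/test/scala

# List the directories that were just created.
find src -type d | sort
```

Keeping the two languages in separate source roots is only a convention; it makes it obvious which compiler owns which files.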

Create the HelloWorld class, HelloWorld.java, under the java directory:

package test;

/**
 * Created by L on 2017/1/5.
 */
public class HelloWorld {
    public static void main(String[] args) {
        System.out.println("test");
        // Methods of the Scala object Hello can be called from Java like static methods.
        Hello.sayHello("scala");
        Hello.runSpark();
    }
}

Create the Hello object, in hello.scala, under the scala directory:

package test

import org.apache.spark.graphx.{Edge, Graph, VertexId}
import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

/**
 * Created by L on 2017/1/5.
 */
object Hello {
  def sayHello(x: String): Unit = {
    println("hello," + x)
  }

  // def main(args: Array[String]) {
  def runSpark(): Unit = {
    // local[*] runs Spark inside this JVM, which is convenient when launching from the IDE
    val sparkConf = new SparkConf().setAppName("SparkKMeans").setMaster("local[*]")
    val sc = new SparkContext(sparkConf)

    // Create an RDD for the vertices
    val users: RDD[(VertexId, (String, String))] =
      sc.parallelize(Array((3L, ("rxin", "student")), (7L, ("jgonzal", "postdoc")),
        (5L, ("franklin", "prof")), (2L, ("istoica", "prof")),
        (4L, ("peter", "student"))))

    // Create an RDD for edges
    val relationships: RDD[Edge[String]] =
      sc.parallelize(Array(Edge(3L, 7L, "collab"), Edge(5L, 3L, "advisor"),
        Edge(2L, 5L, "colleague"), Edge(5L, 7L, "pi"),
        Edge(4L, 0L, "student"), Edge(5L, 0L, "colleague")))

    // Define a default user in case there are relationships with a missing user
    val defaultUser = ("John Doe", "Missing")

    // Build the initial Graph
    val graph = Graph(users, relationships, defaultUser)

    // Notice that there is a user 0 (for which we have no information) connected to users
    // 4 (peter) and 5 (franklin).
    graph.triplets.map(
      triplet => triplet.srcAttr._1 + " is the " + triplet.attr + " of " + triplet.dstAttr._1
    ).collect.foreach(println(_))

    // Remove missing vertices as well as the edges connected to them
    val validGraph = graph.subgraph(vpred = (id, attr) => attr._2 != "Missing")

    // The valid subgraph will disconnect users 4 and 5 by removing user 0
    validGraph.vertices.collect.foreach(println(_))
    validGraph.triplets.map(
      triplet => triplet.srcAttr._1 + " is the " + triplet.attr + " of " + triplet.dstAttr._1
    ).collect.foreach(println(_))

    sc.stop()
  }

}

In this way, the Scala code calls the Spark APIs to run the Spark application, and the Java code in turn calls the Scala code.

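4. Compiling and packaging the Scala project with Maven

In a mixed Java/Scala project like this one, the Scala code has to be compiled before the Java code, because the Java class HelloWorld calls the Scala object Hello. The following Maven command compiles and packages the project in that order: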

mvn clean scala:compile assembly:assembly

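5. Running the Spark project's jar

During development we may run the application in local mode inside IDEA and then package it with the command above. Once packaged, the Spark project must be placed on the Spark cluster and submitted with spark-submit.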
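A minimal sketch of such a submission is shown below. Every value in it is an assumption to replace with your own: the jar name is derived from the artifactId and version in the pom above, the entry class is the HelloWorld example in the test package, and the master URL is a made-up placeholder. Note also that a master set in code through setMaster("local[*]") takes precedence over the --master flag, so it should be removed from the code before submitting to a real cluster.

```shell
# All values are placeholders for illustration; replace them with your own.
APP_JAR="target/sparkTest-1.0-SNAPSHOT.jar"   # assumed name of the jar produced by the build
MAIN_CLASS="test.HelloWorld"                  # entry class from the example above
MASTER_URL="spark://master-host:7077"         # hypothetical standalone master URL

# Assemble the spark-submit command line from the values above.
SUBMIT_CMD="spark-submit --class $MAIN_CLASS --master $MASTER_URL $APP_JAR"

# Print the command for review, then run it only where spark-submit is installed.
echo "$SUBMIT_CMD"
if command -v spark-submit >/dev/null 2>&1; then
  $SUBMIT_CMD
fi
```

Printing the command first makes it easy to check the class name and jar path before anything is actually sent to the cluster.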