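A while back our department planned to start parallel development, which meant isolating the development and test environments. We decided to use Kubernetes to manage the versions of our Docker containers, so I set up a Kubernetes cluster. The process was as follows: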

This walkthrough uses the Aliyun (Alibaba Cloud) registry accelerator; search online for how to configure it.

System settings (Ubuntu 14.04):

Disable swap:

sudo swapoff -a

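Disable the firewall (only needed if firewalld is installed; a stock Ubuntu system normally does not ship it):

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux (only needed if SELinux is in use; Ubuntu does not enable it by default):

setenforce 0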

First, install Docker on every node:

apt-get update && apt-get install docker.io

(If apt-get update fails with an error like Problem executing scripts APT::Update::Post-Invoke-Success 'if /usr/bin/test -w /var/, fix it as follows:

sudo pkill -KILL appstreamcli

wget -P /tmp https://launchpad.net/ubuntu/+archive/primary/+files/appstream_0.9.4-1ubuntu1_amd64.deb https://launchpad.net/ubuntu/+archive/primary/+files/libappstream3_0.9.4-1ubuntu1_amd64.deb

sudo dpkg -i /tmp/appstream_0.9.4-1ubuntu1_amd64.deb /tmp/libappstream3_0.9.4-1ubuntu1_amd64.deb)

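Next, install the three components kubelet, kubeadm, and kubectl on all nodes:

kubelet runs on every node in the cluster and is responsible for starting pods and containers.

kubeadm is used to initialize the cluster.

kubectl is the Kubernetes command-line tool. With kubectl you can deploy and manage applications, inspect resources, and create, delete, and update components.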

Edit the APT sources:

sudo vi /etc/apt/sources.list

Add the package source for kubeadm and the other Kubernetes components:

deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main
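Editing /etc/apt/sources.list by hand works. If you would rather add the entry from a script, something like the sketch below may do. It assumes a separate file under /etc/apt/sources.list.d/ is acceptable; the helper name add_apt_source and the file name kubernetes.list are my own choices for illustration and are not part of the original steps.

```shell
#!/usr/bin/env bash
# add_apt_source is a hypothetical helper: it appends an APT source line to
# a list file only if that exact line is not already present.
add_apt_source() {
  local line=$1 file=$2
  touch "$file"
  # -x matches the whole line, -F treats the pattern as a fixed string.
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Example (run as root); the target file name is an assumption:
# add_apt_source "deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main" \
#   /etc/apt/sources.list.d/kubernetes.list
```

Because the helper checks for the line first, running it twice leaves a single entry in the file.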

Update the package index:

apt-get update

Force-install the kubeadm, kubectl, and kubelet packages (the latest version had no mirrored images available, so install version 1.10.0 instead):

apt-get install kubelet=1.10.0-00

apt-get install kubeadm=1.10.0-00

apt-get install kubectl=1.10.0-00

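Pull the images that Kubernetes needs (to avoid the downloads being blocked during initialization, I placed the required images in an Aliyun image registry). Create a script for this: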

touch docker.sh

chmod 755 docker.sh

Edit the script and add the following:

# Pull the images

url=registry.cn-hangzhou.aliyuncs.com

# Core components

docker pull $url/sach-k8s/etcd-amd64:3.1.12

docker pull $url/sach-k8s/kube-apiserver-amd64:v1.10.0

docker pull $url/sach-k8s/kube-scheduler-amd64:v1.10.0

docker pull $url/sach-k8s/kube-controller-manager-amd64:v1.10.0

# Networking

docker pull $url/sach-k8s/flannel:v0.10.0-amd64

docker pull $url/sach-k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker pull $url/sach-k8s/k8s-dns-sidecar-amd64:1.14.8

docker pull $url/sach-k8s/k8s-dns-kube-dns-amd64:1.14.8

docker pull $url/sach-k8s/pause-amd64:3.1

docker pull $url/sach-k8s/kube-proxy-amd64:v1.10.0

#dashboard

docker pull $url/sach-k8s/kubernetes-dashboard-amd64:v1.8.3

#heapster

docker pull $url/sach-k8s/heapster-influxdb-amd64:v1.3.3

docker pull $url/sach-k8s/heapster-grafana-amd64:v4.4.3

docker pull $url/sach-k8s/heapster-amd64:v1.4.2

#ingress

docker pull $url/sach-k8s/nginx-ingress-controller:0.15.0

docker pull $url/sach-k8s/defaultbackend:1.4

# Retag the images with the names Kubernetes expects

docker tag $url/sach-k8s/etcd-amd64:3.1.12 k8s.gcr.io/etcd-amd64:3.1.12

docker tag $url/sach-k8s/kube-apiserver-amd64:v1.10.0 k8s.gcr.io/kube-apiserver-amd64:v1.10.0

docker tag $url/sach-k8s/kube-scheduler-amd64:v1.10.0 k8s.gcr.io/kube-scheduler-amd64:v1.10.0

docker tag $url/sach-k8s/kube-controller-manager-amd64:v1.10.0 k8s.gcr.io/kube-controller-manager-amd64:v1.10.0

docker tag $url/sach-k8s/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64

docker tag $url/sach-k8s/pause-amd64:3.1 k8s.gcr.io/pause-amd64:3.1

docker tag $url/sach-k8s/kube-proxy-amd64:v1.10.0 k8s.gcr.io/kube-proxy-amd64:v1.10.0

docker tag $url/sach-k8s/k8s-dns-kube-dns-amd64:1.14.8 k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.8

docker tag $url/sach-k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.8 k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker tag $url/sach-k8s/k8s-dns-sidecar-amd64:1.14.8 k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.8

docker tag $url/sach-k8s/kubernetes-dashboard-amd64:v1.8.3 k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3

docker tag $url/sach-k8s/heapster-influxdb-amd64:v1.3.3 k8s.gcr.io/heapster-influxdb-amd64:v1.3.3

docker tag $url/sach-k8s/heapster-grafana-amd64:v4.4.3 k8s.gcr.io/heapster-grafana-amd64:v4.4.3

docker tag $url/sach-k8s/heapster-amd64:v1.4.2 k8s.gcr.io/heapster-amd64:v1.4.2

docker tag $url/sach-k8s/defaultbackend:1.4 gcr.io/google_containers/defaultbackend:1.4

docker tag $url/sach-k8s/nginx-ingress-controller:0.15.0 quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.15.0

# Remove the now-redundant mirror tags

# Core components

docker rmi $url/sach-k8s/etcd-amd64:3.1.12

docker rmi $url/sach-k8s/kube-apiserver-amd64:v1.10.0

docker rmi $url/sach-k8s/kube-scheduler-amd64:v1.10.0

docker rmi $url/sach-k8s/kube-controller-manager-amd64:v1.10.0

# Networking

docker rmi $url/sach-k8s/flannel:v0.10.0-amd64

docker rmi $url/sach-k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker rmi $url/sach-k8s/k8s-dns-sidecar-amd64:1.14.8

docker rmi $url/sach-k8s/k8s-dns-kube-dns-amd64:1.14.8

docker rmi $url/sach-k8s/pause-amd64:3.1

docker rmi $url/sach-k8s/kube-proxy-amd64:v1.10.0

#dashboard

docker rmi $url/sach-k8s/kubernetes-dashboard-amd64:v1.8.3

#heapster

docker rmi $url/sach-k8s/heapster-influxdb-amd64:v1.3.3

docker rmi $url/sach-k8s/heapster-grafana-amd64:v4.4.3

docker rmi $url/sach-k8s/heapster-amd64:v1.4.2

#ingress

docker rmi $url/sach-k8s/nginx-ingress-controller:0.15.0

docker rmi $url/sach-k8s/defaultbackend:1.4
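The script above repeats the same pull, tag, and rmi steps for every image. As a shorter alternative, the pattern can be sketched with a small loop. This is only a sketch: mirror_image and mirror_all are hypothetical helper names, and the DOCKER variable is my own addition so the commands can be previewed with DOCKER=echo before anything is actually pulled.

```shell
#!/usr/bin/env bash
# Sketch only: mirror_image and mirror_all are hypothetical helpers,
# not docker subcommands.
mirror=registry.cn-hangzhou.aliyuncs.com/sach-k8s
DOCKER=${DOCKER:-docker}   # set DOCKER=echo for a dry run

# Pull one image from the mirror, retag it under the registry prefix that
# Kubernetes expects, then drop the mirror tag.
mirror_image() {
  local name=$1 target=$2
  $DOCKER pull "$mirror/$name" &&
  $DOCKER tag "$mirror/$name" "$target/$name" &&
  $DOCKER rmi "$mirror/$name"
}

# Retag every listed image under the same target prefix.
mirror_all() {
  local target=$1 name
  shift
  for name in "$@"; do
    mirror_image "$name" "$target" || return 1
  done
}

# Example calls covering part of the list above:
# mirror_all k8s.gcr.io etcd-amd64:3.1.12 kube-apiserver-amd64:v1.10.0 pause-amd64:3.1
# mirror_all quay.io/coreos flannel:v0.10.0-amd64
```

Images that end up under different prefixes (k8s.gcr.io, quay.io/coreos, and so on) need one mirror_all call per prefix.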

Run the script:

./docker.sh

Once every node has pulled all the images, initialize the cluster on the master node:

kubeadm init --kubernetes-version=v1.10.0 --apiserver-advertise-address 192.168.254.128 --pod-network-cidr=10.244.0.0/16

Configure kubectl:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

If you are the root user:

export KUBECONFIG=/etc/kubernetes/admin.conf

If you are not the root user (this line enables kubectl bash completion in new shells):

echo "source <(kubectl completion bash)" >> ~/.bashrc

Set up the pod network (this article uses flannel):

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.10.0/Documentation/kube-flannel.yml

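Check the node status:

kubectl get nodes

(The master node may show NotReady at first. This is usually because the network add-on pods are not running yet. By default the master is also tainted so that ordinary pods are not scheduled on it.)

Allow the master node to schedule pods: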

kubectl taint nodes --all node-role.kubernetes.io/master-

View the kubelet logs:

journalctl -xeu kubelet, or journalctl -xeu kubelet > a to save the log to a file named a

Check the pod status:

kubectl get pods --all-namespaces

Join the worker nodes to the cluster:

kubeadm join --token q500wd.kcjrb2zwvhwqt7su 192.168.254.128:6443 --discovery-token-ca-cert-hash sha256:29e091cca420e505d0c5e091e68f6b5c4ba3f2a54fdcd693c681307c8a041a8b

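(The token and the certificate hash are printed on the master node's console after the cluster is initialized; look for token and discovery-token-ca-cert-hash in that output.)

List the cluster nodes: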

kubectl get nodes
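When there are several nodes, it can help to list only the ones that are not ready yet. The sketch below filters the output of kubectl get nodes; not_ready_nodes is a hypothetical helper name, and it assumes the node name and STATUS are the first two columns, as in the default table output.

```shell
#!/usr/bin/env bash
# not_ready_nodes is a hypothetical helper. It reads the output of
# `kubectl get nodes --no-headers` on stdin and prints the names of nodes
# whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Example:
# kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports Ready.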

If a worker node fails to join, the token may have expired. Check the logs:

journalctl -xeu kubelet

Check whether the token has expired:

kubeadm token list

If it has expired, generate a new token and recompute the CA certificate hash:

kubeadm token create

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

0fd95a9bc67a7bf0ef42da968a0d55d92e52898ec37c971bd77ee501d845b538
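The new token and the hash then have to be combined into a join command by hand. A minimal sketch of that assembly follows; join_command is a hypothetical helper name, and the output format simply mirrors the kubeadm join line used earlier in this post.

```shell
#!/usr/bin/env bash
# join_command is a hypothetical helper: it prints the kubeadm join line
# for a given token, CA certificate hash, and API server endpoint.
join_command() {
  local token=$1 hash=$2 endpoint=$3
  printf 'kubeadm join --token %s %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$token" "$endpoint" "$hash"
}

# Example, run on the master (endpoint taken from the init step above):
# token=$(kubeadm token create)
# hash=$(openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt \
#   | openssl rsa -pubin -outform der 2>/dev/null \
#   | openssl dgst -sha256 -hex | sed 's/^.* //')
# join_command "$token" "$hash" 192.168.254.128:6443
```

The printed line can be copied to the worker node and run there.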

Delete the previous certificate (on the worker node that failed to join, not on the master):

rm -rf /etc/kubernetes/pki/ca.crt

Reset the worker node's state:

kubeadm reset

After these steps, join the cluster again.

(If commands run on a worker node fail, for example:

kubectl get nodes

with the error The connection to the server localhost:8080 was refused - did you specify the right host or port?, it is because no kubeconfig has been applied. Fix it as follows:

sudo cp /etc/kubernetes/kubelet.conf $HOME/

sudo chown $(id -u):$(id -g) $HOME/kubelet.conf

export KUBECONFIG=$HOME/kubelet.conf)

Deploy an application:

Create the pod:

kubectl run nginx --replicas=1 --labels="run=load-balancer-example" --image=nginx --port=80

(replicas sets the number of replicas)

Query the deployment details:

kubectl get deployments nginx

kubectl describe deployments nginx

kubectl get replicasets

kubectl describe replicasets

Create the service:

kubectl expose deployment nginx --type=NodePort --name=example-service

Inspect the service:

kubectl describe services example-service

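(If the service cannot be reached from outside, the cause is often simply that the Service's selector does not match the pods. This is easy to verify by looking at the service's endpoints: if the endpoints are empty, the selector value is wrong. Just change it to the labels of the corresponding pods. See the blog post https://blog.csdn.net/bluishglc/article/details/52440312)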
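The endpoints check can be scripted roughly as follows. This is a sketch under assumptions: check_endpoints is a hypothetical helper name, and the KUBECTL variable is my own addition so the function can be tried with a stand-in command when no cluster is at hand.

```shell
#!/usr/bin/env bash
# check_endpoints is a hypothetical helper. KUBECTL can be overridden,
# which is only there to make the function easy to try without a cluster.
KUBECTL=${KUBECTL:-kubectl}

check_endpoints() {
  local svc=$1 ips
  # Collect the pod IPs currently backing the service (empty if none).
  ips=$($KUBECTL get endpoints "$svc" -o 'jsonpath={.subsets[*].addresses[*].ip}')
  if [ -z "$ips" ]; then
    echo "no endpoints for $svc: the selector probably matches no running pod"
    return 1
  fi
  echo "endpoints for $svc: $ips"
}

# Example:
# check_endpoints example-service
# kubectl get pods -l run=load-balancer-example   # pods carrying the label
```

If the function reports no endpoints, compare the selector shown by kubectl describe services example-service with the labels on the pods.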

PS:

If the cluster is unreachable after a server reboot, for example kubectl get pods returns an error, disable swap again and restart kubelet:

sudo swapoff -a

systemctl daemon-reload

systemctl restart kubelet

Check the kubelet status:

systemctl status kubelet

kubelet logs:

journalctl -xefu kubelet

Check the swap status:

cat /proc/swaps
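Swap comes back after a reboot because swapoff -a only lasts until the next boot. One common way to keep it off is to comment out the swap entries in /etc/fstab. The sketch below does that; disable_swap_entries is a hypothetical helper name, and it assumes the swap entries are ordinary fstab lines with swap as a whitespace-separated field.

```shell
#!/usr/bin/env bash
# disable_swap_entries is a hypothetical helper: it comments out every
# uncommented line in an fstab-style file that has "swap" as a
# whitespace-delimited field, keeping a backup copy with a .bak suffix.
disable_swap_entries() {
  local fstab=$1
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Example (run as root; the backup is written to /etc/fstab.bak):
# disable_swap_entries /etc/fstab
```

Lines that are already commented out are left alone, so the helper can be run more than once.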

Install the Dashboard:

Create the configuration file:

touch kubernetes-dashboard.yaml

Add the following configuration:

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Configuration to deploy release version of the Dashboard UI compatible with
# Kubernetes 1.8.
#
# Example usage: kubectl create -f <this_file>

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---

# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---

# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

Install:

kubectl apply -f kubernetes-dashboard.yaml

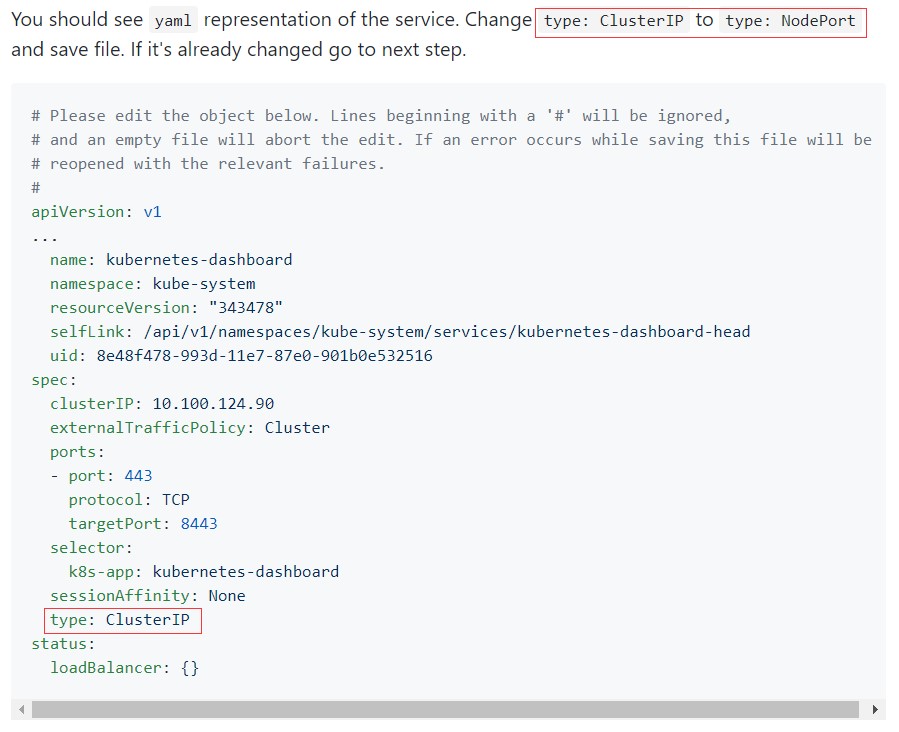

Change the service type so that the Dashboard can be reached from outside the cluster:

kubectl -n kube-system edit service kubernetes-dashboard

Modify it as shown in the figure:

Check the service after the change:

kubectl get services kubernetes-dashboard -n kube-system
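The same change can probably be made without opening an editor. The sketch below assumes the edit in question is switching spec.type to NodePort, which fits the nodeIp:port access described in this post but is my reading of it. expose_dashboard and dashboard_node_port are hypothetical helper names, and the KUBECTL variable is my own addition for dry runs.

```shell
#!/usr/bin/env bash
# expose_dashboard and dashboard_node_port are hypothetical helpers.
# Assumption: the intended edit is setting spec.type to NodePort.
KUBECTL=${KUBECTL:-kubectl}   # set KUBECTL=echo to preview the commands

expose_dashboard() {
  # Change the service type without opening an editor.
  $KUBECTL patch service kubernetes-dashboard -n kube-system \
    -p '{"spec":{"type":"NodePort"}}'
}

dashboard_node_port() {
  # Print the node port allocated for the service's first port.
  $KUBECTL get service kubernetes-dashboard -n kube-system \
    -o 'jsonpath={.spec.ports[0].nodePort}'
}

# Example:
# expose_dashboard
# dashboard_node_port
```

The port printed by dashboard_node_port is the one to use together with a node IP.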

The Dashboard can then be reached over HTTPS at nodeIp:[exposed port]. Once the page opens, choose token login.

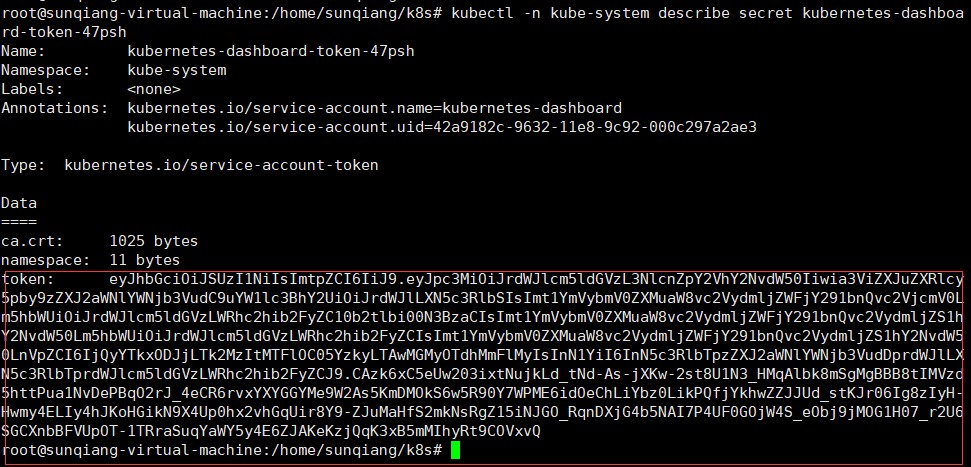

How to get the token:

kubectl -n kube-system get secret

(Find the secret whose name has the form kubernetes-dashboard-token-XXXXX; here the suffix is 47psh.)

kubectl -n kube-system describe secret kubernetes-dashboard-token-47psh
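Since the suffix differs on every cluster, the secret name can be looked up instead of copied by hand. A minimal sketch, assuming the default table output of kubectl get secret with the name in the first column; dashboard_token_secret is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# dashboard_token_secret is a hypothetical helper. It reads the output of
# `kubectl -n kube-system get secret` on stdin and prints the name of the
# first secret that starts with kubernetes-dashboard-token-.
dashboard_token_secret() {
  awk '$1 ~ /^kubernetes-dashboard-token-/ { print $1; exit }'
}

# Example:
# name=$(kubectl -n kube-system get secret | dashboard_token_secret)
# kubectl -n kube-system describe secret "$name"
```

The token itself still appears in the describe output, as in the manual steps.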

Paste the token into the page to enter the dashboard.

That completes the installation; you can now manage the Kubernetes cluster from the dashboard.