1. Environment preparation

JDK 1.8 + Scala 2.11.x + Python 2.7
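The prerequisite above can be checked before going further. The following is a minimal sketch, assuming GNU `sort -V` is available; `version_ge` and `java_version` are hypothetical helper names introduced here, not part of any Cloudera tooling. Only the JDK is checked, and the same comparison could be reused for Scala and Python.

```shell
#!/usr/bin/env bash
# version_ge A B: succeeds when version A >= version B (relies on GNU sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# java_version: prints the quoted version string from `java -version`,
# which writes its banner to stderr.
java_version() {
  java -version 2>&1 | awk -F '"' '/version/ {print $2; exit}'
}

if command -v java >/dev/null 2>&1; then
  if version_ge "$(java_version)" "1.8.0"; then
    echo "JDK OK"
  else
    echo "JDK older than 1.8"
  fi
else
  echo "java not found on PATH"
fi
```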

2. Create the directory

mkdir -p /opt/cloudera/csd

Set the owner of the directory

chown cloudera-scm:cloudera-scm /opt/cloudera/csd

Download the CSD (run this from /opt/cloudera/csd so the jar lands in that directory)

wget http://archive.cloudera.com/spark2/csd/SPARK2_ON_YARN-2.1.0.cloudera2.jar

Set the group and owner of the jar

chgrp cloudera-scm SPARK2_ON_YARN-2.1.0.cloudera2.jar

chown cloudera-scm SPARK2_ON_YARN-2.1.0.cloudera2.jar
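The commands in this section can be collected into one script. This is only a sketch: the `run` and `install_csd` names and the `DRY_RUN` switch are assumptions added for illustration, and it downloads straight into the CSD directory with `wget -O` rather than relying on the current directory. By default it only prints the commands; set `DRY_RUN=0` and run as root to execute them.

```shell
#!/usr/bin/env bash
CSD_DIR=/opt/cloudera/csd
CSD_JAR=SPARK2_ON_YARN-2.1.0.cloudera2.jar
CSD_URL=http://archive.cloudera.com/spark2/csd/$CSD_JAR

# run CMD...: prints the command when DRY_RUN=1 (the default), otherwise executes it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# install_csd: create the directory, fetch the jar into it, then hand
# both the directory and the jar to the cloudera-scm user and group.
install_csd() {
  run mkdir -p "$CSD_DIR" &&
  run wget -O "$CSD_DIR/$CSD_JAR" "$CSD_URL" &&
  run chown -R cloudera-scm:cloudera-scm "$CSD_DIR"
}

install_csd
```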

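3. Add the parcel

Notes:

(1) 2.1.0.cloudera2 and 2.1.0.cloudera1 have different requirements

(see the table at https://www.cloudera.com/documentation/spark2/latest/topics/spark2_requirements.html)

(2) The CSD jar version must match the parcel version used here (2.1.0.cloudera2 or 2.1.0.cloudera1)

Add this URL to the Remote Parcel Repository URLs in the Cloudera Manager parcel settings: http://archive.cloudera.com/spark2/parcels/2.1.0.cloudera2/

Wait for the download to finish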
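Note (2) above, that the CSD jar and the parcel must carry the same version, can be checked mechanically from the two names. A minimal sketch using only shell parameter expansion; `csd_version` and `parcel_version` are hypothetical helpers that assume the file and URL naming shown in this guide.

```shell
#!/usr/bin/env bash
# csd_version: SPARK2_ON_YARN-2.1.0.cloudera2.jar -> 2.1.0.cloudera2
csd_version() {
  local name=${1##*/}            # drop any leading directory
  name=${name#SPARK2_ON_YARN-}   # drop the fixed prefix
  echo "${name%.jar}"            # drop the extension
}

# parcel_version: .../spark2/parcels/2.1.0.cloudera2/ -> 2.1.0.cloudera2
parcel_version() {
  local url=${1%/}               # drop a trailing slash, if present
  echo "${url##*/}"              # keep the last path component
}

jar=SPARK2_ON_YARN-2.1.0.cloudera2.jar
url=http://archive.cloudera.com/spark2/parcels/2.1.0.cloudera2/
if [ "$(csd_version "$jar")" = "$(parcel_version "$url")" ]; then
  echo "versions match: $(csd_version "$jar")"
else
  echo "version mismatch" >&2
fi
```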

4. Activate Spark2

5. Restart the cluster and cloudera-scm-server

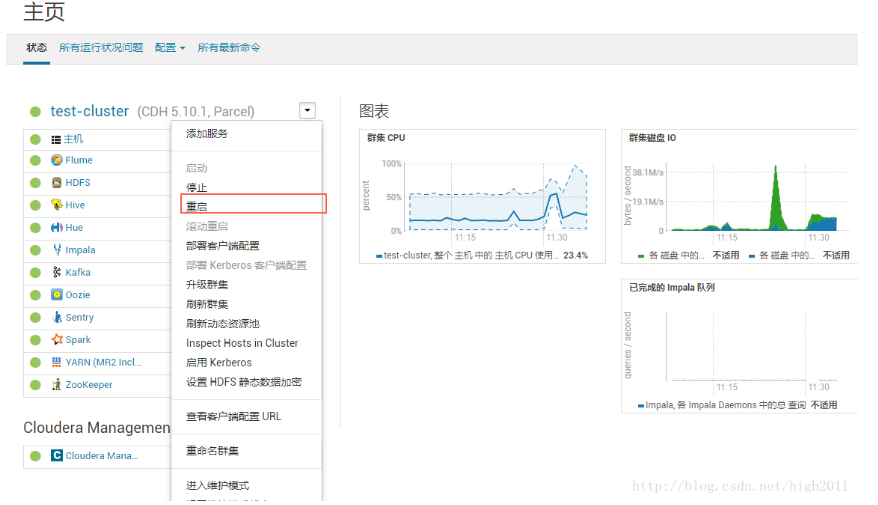

(1) Restart the CDH cluster first

(2) Then restart cloudera-scm-server, and follow the server and agent logs to confirm it comes back up

/opt/cloudera-manager/cm-5.15.1/etc/init.d/cloudera-scm-server restart

tail -f /opt/cloudera-manager/cm-5.15.1/log/cloudera-scm-server/cloudera-scm-server.log

tail -f /opt/cloudera-manager/cm-5.15.1/log/cloudera-scm-agent/cloudera-scm-agent.log
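Instead of watching the logs by hand, the restart can be followed by a small polling loop. This is a sketch only: `wait_for` is a hypothetical helper, and the commented usage line assumes the Cloudera Manager web UI listens on its default port 7180 on the local host, which may differ in your setup.

```shell
#!/usr/bin/env bash
# wait_for TRIES DELAY CMD...: reruns CMD until it succeeds,
# giving up after TRIES attempts with DELAY seconds between them.
wait_for() {
  local tries=$1 delay=$2 i
  shift 2
  for ((i = 1; i <= tries; i++)); do
    "$@" && return 0
    if [ "$i" -lt "$tries" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Example (assumed host and port): poll the web UI for up to 5 minutes.
# wait_for 60 5 curl -sf -o /dev/null http://localhost:7180/ && echo "server is up"
```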

6. Add the Spark2 service

(1) Click "Add Service"

(2) Select Spark2

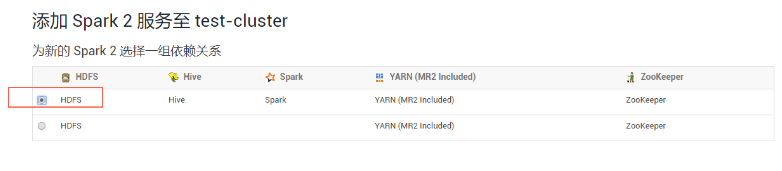

(3) Select the dependency set that includes the most services

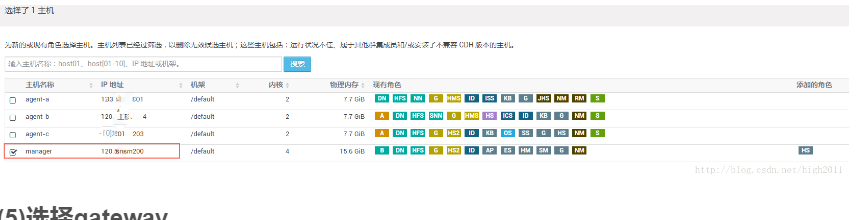

(4) Select the host for the Spark2 History Server

(5) Select the hosts for the Gateway role

(6) Wait for the setup to complete successfully

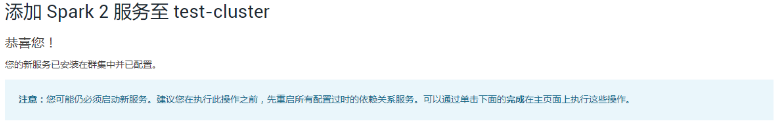

(7) The screen after a successful installation

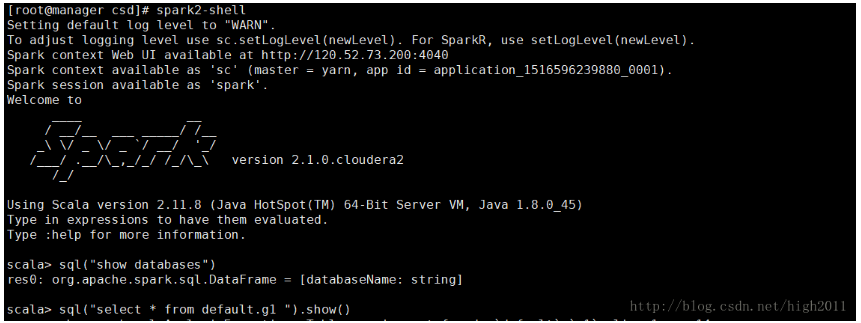

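7. Test Spark2

(1) Enter the following at the command line (CDS 2 installs its shell as spark2-shell; plain spark-shell launches Spark 1.x when that is also installed)

spark2-shell --conf spark.executor.memory=2g --conf spark.executor.cores=2

(2) Reference examples

https://spark.apache.org/docs/2.1.0/quick-start.html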