Copyright notice: https://blog.csdn.net/qq_25233621/article/details/81271553

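For this exercise I picked a free online video site.

The crawler collects what is on the home page: each title and its playback link. It then crawls a second-level page and extracts the episode links of the TV series or anime listed there.

The site is www.maomitt9.com (sorry about that, smirk face).

When crawling the second-level page, I could not join the site address and the file path into one URL.

The error is:

TypeError: must be str, not tuple

The same concatenation works in the first-level crawl, though. If anyone knows why, I would appreciate some pointers.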
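A probable explanation, sketched under assumptions: `re.findall` returns a list of strings when the pattern has one capture group, but a list of tuples when it has two or more. The first-level patterns each have one group, while the episode pattern in `getHtml2` has two (the `href` and the link text), so every match there is a tuple. The HTML snippet and the path in it are made up for illustration and are not taken from the real site.

```python
import re

# Hypothetical snippet shaped like one episode link on a second-level page.
html = ('<a class="a1" style="line-height:26px" '
        'href="/dm/rbdm/12948/1.html" target= "iFrame1">01</a>')

# One capture group: findall returns a list of plain strings.
one_group = re.findall(r'href="(.*?)"', html)

# Two capture groups: findall returns a list of (href, text) tuples.
two_groups = re.findall(r'href="(.*?)" target= "iFrame1">(.*?)</a>', html)

print(one_group)
print(two_groups)

try:
    # str + tuple is not allowed, which is what the traceback complains about.
    "http://www.maomitt9.com" + two_groups[0]
except TypeError as exc:
    print("TypeError:", exc)
```

The exact wording of the `TypeError` message depends on the Python version, but the cause is the same in each case: the right-hand operand is a tuple, not a string.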

The code is as follows:

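    # encoding: utf-8
    import re
    import time

    import requests


    def getHtml1(url):
        """Print [title, URL] for every item on the home page."""
        Bs = {'user-agent': 'Firefox/18.1.0.2145'}
        r = requests.get(url, headers=Bs, timeout=60)
        r.encoding = r.apparent_encoding
        # Each pattern has one capture group, so findall returns plain strings.
        pattern = "<li class=\"item\"><a class=\"js-tongjic\" href=\"(.*?)\">"
        href = re.findall(pattern, r.text, re.S)
        pattern = "<p class=\"title g-clear\"> <span class=\"s1\">(.*?)</span> "
        names = re.findall(pattern, r.text, re.S)
        infolist = []
        # zip stops at the shorter list, so a missing title cannot raise IndexError.
        for tittle, link in zip(names, href):
            URL = "http://www.maomitt9.com" + link
            infolist.append([tittle, URL])
        for info in infolist:
            print(info)


    def getHtml2(url):
        """Print the description and the episode links of a second-level page."""
        Bs = {'user-agent': 'Firefox/18.1.0.2145'}
        r = requests.get(url, headers=Bs, timeout=60)
        r.encoding = r.apparent_encoding
        pattern = "<meta name=\"description\" content=\"(.*?)\" />"
        name = re.findall(pattern, r.text, re.S)
        print(name)
        # Two capture groups here, so findall returns (href, link text) tuples.
        pattern = "<a class=\".*?\" style=\"line-height:26px\" href=\"(.*?)\" target= \"iFrame1\">(.*?)</a>"
        href = re.findall(pattern, r.text, re.S)
        print(len(href))
        print("Remove the parentheses after .com to get a working URL")
        for item in href:
            # "www.maomitt9.com" + item raises "TypeError: must be str, not tuple"
            # because item is a tuple, so the two parts are printed separately.
            print("www.maomitt9.com", end="")
            print(item)


    def start():
        """Show a cosmetic text progress bar before crawling."""
        scale = 50
        print("Starting")
        start = time.perf_counter()
        # Loop up to scale so the bar never grows past its intended width.
        for i in range(scale + 1):
            a = '|' * i
            b = '.' * (scale - i)  # filler for the part not yet done
            c = (i / scale) * 100
            dur = time.perf_counter() - start
            print("\r{:^3.0f}%[{}->{}]{:.2f}s".format(c, a, b, dur), end="")
            time.sleep(0.01)
        print("\nFinished")


    if __name__ == '__main__':
        url = "http://www.maomitt9.com"
        start()
        getHtml1(url)
        while True:
            try:
                # url = "http://www.maomitt9.com/dm/rbdm/12948.html"
                url2 = input("Enter the second-level page to crawl (only sections with episode lists are supported): ")
                getHtml2(url2)
                print("Please wait!")
                time.sleep(5)
            except Exception as e:
                # Catching Exception instead of a bare except lets Ctrl+C stop the loop.
                print("Failed!", e)
                print("Please wait!")
                time.sleep(5)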
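One way to get complete episode URLs out of the second-level page is to unpack each `(href, label)` tuple before joining it with the site address. The following is only a sketch under assumptions: `EPISODE_PATTERN`, `episode_links` and the sample HTML are hypothetical names and data introduced here, and `urllib.parse.urljoin` from the standard library stands in for the plain string concatenation used in the script.

```python
import re
from urllib.parse import urljoin

# Same episode pattern as in getHtml2: two groups, (href, link text).
EPISODE_PATTERN = re.compile(
    r'<a class=".*?" style="line-height:26px" href="(.*?)" target= "iFrame1">(.*?)</a>',
    re.S,
)


def episode_links(html, base_url="http://www.maomitt9.com"):
    """Return (label, absolute_url) pairs for each episode anchor in html."""
    # Unpack each match so only the href string is joined with the base URL.
    return [(label, urljoin(base_url, link))
            for link, label in EPISODE_PATTERN.findall(html)]


# Hypothetical markup; the real page may differ.
sample = (
    '<a class="a1" style="line-height:26px" href="/dm/rbdm/12948/1.html" target= "iFrame1">01</a>'
    '<a class="a1" style="line-height:26px" href="/dm/rbdm/12948/2.html" target= "iFrame1">02</a>'
)

for label, url in episode_links(sample):
    print(label, url)
```

In `getHtml2`, the same idea would mean passing `r.text` to such a helper instead of printing the raw tuples, so the output no longer needs its parentheses removed by hand.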

Feedback and comments are welcome.

If this post raises any copyright concerns, please contact me promptly and I will take it down.

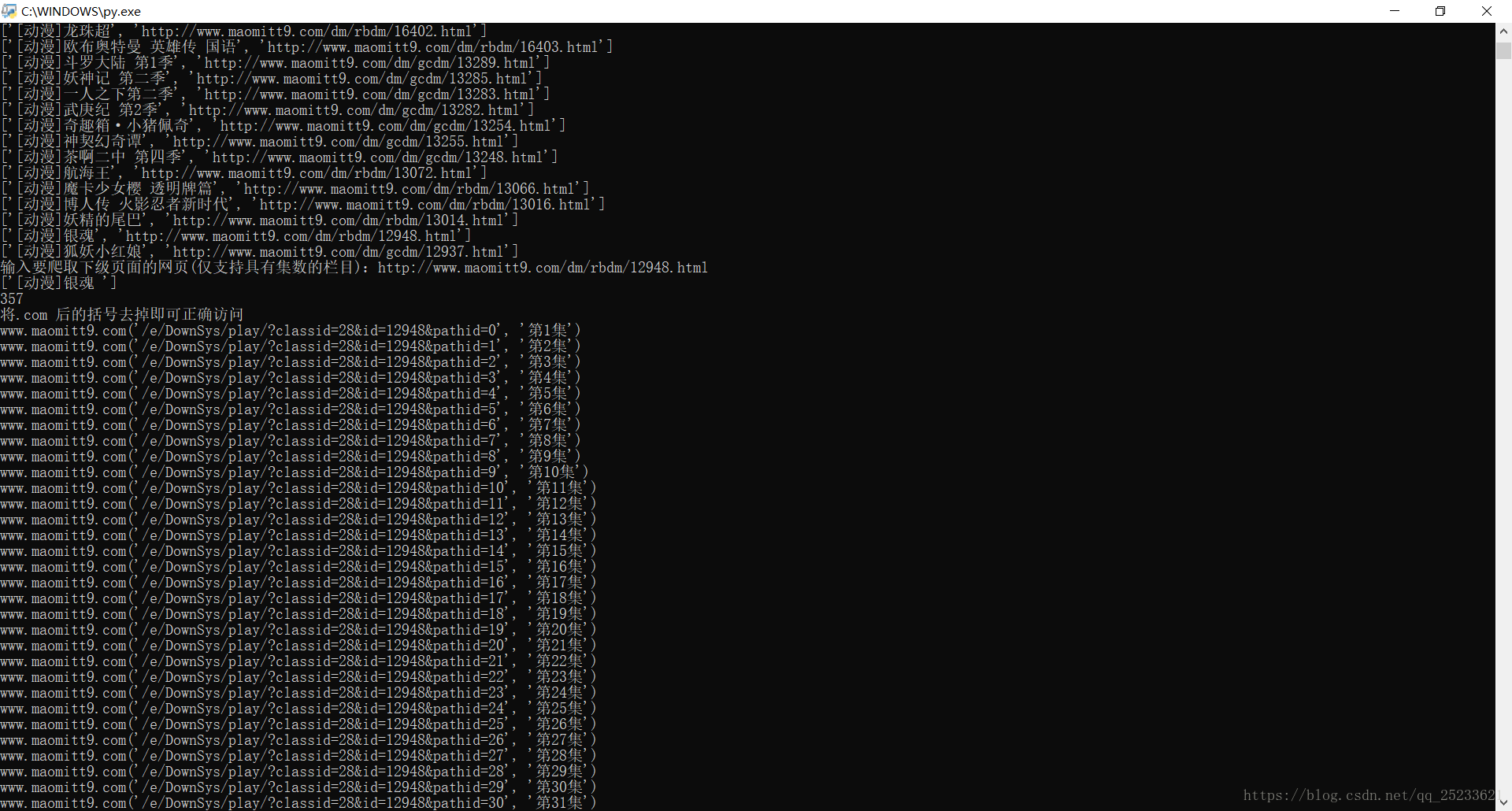

The result looks like this

Result of crawling a second-level page: