Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/u010472607/article/details/82319295

Runtime environment:

- Anaconda 5.2
- Python 3.6.5
- TensorFlow 1.10

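This post defines a LeNet network by subclassing `Model`. This differs from the usual approach of building the network directly with `Sequential` or with the functional `Model` API. Note that the `LeNet` class below still connects its layers with the functional API inside `__init__`, and then passes the resulting input and output tensors to `Model.__init__`. The code is as follows: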

```python
# -*- coding: utf-8 -*-
"""
Standard LeNet with tf.keras
"""
import tensorflow as tf
from tensorflow.keras.models import Model, Sequential
from tensorflow.keras.layers import Input, Conv2D, MaxPooling2D, Activation, Flatten, Dense
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.datasets import mnist
from tensorflow.keras.utils import to_categorical
import numpy as np
import matplotlib.pyplot as plt

# tf.enable_eager_execution()

input_shape = 28 * 28   # not used below
classes = 10            # not used below (NB_CLASSES is used instead)
NB_EPOCH = 20
BATCH_SIZE = 200
VERBOSE = 1
OPTIMIZER = Adam()      # defined but not used: compile() below uses RMSProp
VALIDATION_SPLIT = 0.2
IMG_ROWS, IMG_COLS = 28, 28
NB_CLASSES = 10
INPUT_SHAPE = (IMG_ROWS, IMG_COLS, 1)  # note the TF data format: NHWC (channels last)

# Load the data, convert it to float32 and normalize to [0, 1]
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train = x_train.astype("float32") / 255.
x_test = x_test.astype(np.float32) / 255.

# Append a channel axis (equivalent to np.expand_dims(x, -1))
x_train = x_train[:, :, :, None]
x_test = x_test[:, :, :, None]
print(x_train.shape, "train shape")
print(x_test.shape, "test shape")

# One-hot encode the labels
y_train = to_categorical(y_train, NB_CLASSES)
y_test = to_categorical(y_test, NB_CLASSES)
```
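The "equivalent forms" comment refers to several interchangeable ways of appending the channel axis. The small NumPy check below runs on a random stand-in batch, not real MNIST data. It shows that these forms agree, shows how the NHWC layout relates to NCHW, and shows that `np.eye(n)[labels]` gives the same one-hot row layout as `to_categorical`. The helper name `one_hot` is hypothetical.

```python
import numpy as np

def one_hot(labels, num_classes):
    # Row k of the identity matrix is the one-hot vector for class k.
    return np.eye(num_classes, dtype=np.float32)[labels]

rng = np.random.RandomState(0)
# Random stand-in for a batch of 3 grayscale 28x28 uint8 images.
x = rng.randint(0, 256, size=(3, 28, 28)).astype(np.uint8)
x = x.astype(np.float32) / 255.

a = x[:, :, :, None]            # slicing with None (np.newaxis), as in the script
b = np.expand_dims(x, axis=-1)  # explicit expand_dims
c = x.reshape(-1, 28, 28, 1)    # reshape
print(a.shape, np.array_equal(a, b), np.array_equal(a, c))

# NHWC (TensorFlow's default) vs NCHW: same data, channel axis moved to position 1.
nchw = np.transpose(a, (0, 3, 1, 2))
print(nchw.shape)

print(one_hot(np.array([3, 0]), 4))
```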

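```python
# Build the network

"""
Model subclassing

Create the layers in the __init__ method and set them as attributes of the
instance; compute the forward pass in the call method.

Model subclassing is particularly useful when eager execution is enabled,
because the forward pass can be written imperatively.

Key point:
Although Model subclassing offers flexibility, it comes at the cost of greater
complexity and more opportunities for user error.
"""

class LeNet(Model):
    def __init__(self, input_shape=(28, 28, 1), num_classes=10):
        # super(LeNet, self).__init__(name="LeNet")

        # Define the layers that will be used
        # Input layer
        img_input = Input(shape=input_shape)
        # Conv => ReLU => Pool
        x = Conv2D(filters=20, kernel_size=5, padding="same", activation="relu", name='block1_conv1')(img_input)
        x = MaxPooling2D(pool_size=(2, 2), strides=(2, 2), name='block1_pool')(x)
        # Conv => ReLU => Pool
        x = Conv2D(filters=50, kernel_size=5, padding="same", activation="relu", name='block1_conv2')(x)
        x = MaxPooling2D(pool_size=(2, 2), strides=(2, 2), name='block1_poo2')(x)
        # Flatten to one dimension
        x = Flatten(name='flatten')(x)
        # Fully connected layer
        x = Dense(units=500, activation="relu", name="f1")(x)
        # Softmax classifier
        x = Dense(units=num_classes, activation="softmax", name="prediction")(x)

        # Call Model's Model(inputs, outputs, name="***") constructor, which turns
        # this instance into a graph (functional) network built from the tensors above
        super(LeNet, self).__init__(img_input, x, name="LeNet")
        # Set plain attributes only after the base class has been initialized
        self.num_classes = num_classes

    def call(self, inputs, **kwargs):
        # Forward pass. self.output is a symbolic tensor, not a callable, so
        # `return self.output(inputs)` fails; delegate to the graph network's
        # own call, which runs the layers defined in __init__.
        return super(LeNet, self).call(inputs, **kwargs)
```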
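The docstring above describes subclassing in the strict sense: layers are created in `__init__` and the forward pass is written in `call`. The `LeNet` class instead builds a functional graph and hands it to `Model.__init__`. For contrast, the sketch below is a fully subclassed LeNet with the same layer configuration. The class name `LeNetSubclassed` is hypothetical. The sketch assumes TF 2.x with eager execution; under TF 1.10 you would first enable eager mode (the commented-out `tf.enable_eager_execution()` line near the top of the script).

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers


class LeNetSubclassed(tf.keras.Model):
    """Hypothetical fully subclassed LeNet: layers in __init__, forward pass in call."""

    def __init__(self, num_classes=10):
        super(LeNetSubclassed, self).__init__(name="LeNetSubclassed")
        self.num_classes = num_classes
        # Layers are created once and stored as attributes so Keras tracks their weights.
        self.conv1 = layers.Conv2D(20, 5, padding="same", activation="relu")
        self.pool1 = layers.MaxPooling2D(pool_size=2, strides=2)
        self.conv2 = layers.Conv2D(50, 5, padding="same", activation="relu")
        self.pool2 = layers.MaxPooling2D(pool_size=2, strides=2)
        self.flat = layers.Flatten()
        self.fc1 = layers.Dense(500, activation="relu")
        self.classifier = layers.Dense(num_classes, activation="softmax")

    def call(self, inputs):
        # Plain Python control flow: easy to step through in eager mode.
        x = self.pool1(self.conv1(inputs))
        x = self.pool2(self.conv2(x))
        x = self.flat(x)
        x = self.fc1(x)
        return self.classifier(x)


model = LeNetSubclassed(num_classes=10)
# A subclassed model has no weights until it is called on data of a known shape.
probs = model(np.zeros((2, 28, 28, 1), dtype="float32"))
print(list(probs.shape), model.count_params())
```

Because it has the same layers, this model should report the same 1,256,080 parameters as the summary in the output further down. The trade-off is that `model.summary()` only works after the model has been built by a first call, because there is no symbolic input to trace.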

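```python
# model = LeNet(num_classes=10)
# print(model.output_shape)
# model.summary()

# Create the model and configure the optimizer
model = LeNet(INPUT_SHAPE, NB_CLASSES)
model.summary()

# tf.train.RMSPropOptimizer is the TF 1.x optimizer; in TF 2.x use
# tf.keras.optimizers.RMSprop(learning_rate=0.001) instead
model.compile(loss="categorical_crossentropy",
              optimizer=tf.train.RMSPropOptimizer(learning_rate=0.001),
              metrics=["accuracy"])

history = model.fit(x=x_train, y=y_train, batch_size=BATCH_SIZE, epochs=NB_EPOCH,
                    verbose=VERBOSE, validation_split=VALIDATION_SPLIT)

# evaluate() returns [loss, accuracy] because metrics=["accuracy"]
score = model.evaluate(x=x_test, y=y_test, verbose=VERBOSE)
print("test loss:", score[0])
print("test acc:", score[1])

# List the keys recorded in the training history
print(history.history.keys())
```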
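`categorical_crossentropy` averages -sum_k y_k * log(p_k) over the samples in a batch. The NumPy sketch below implements that formula. It is a simplified stand-in, not Keras's own code; the row renormalization and `eps` clipping imitate how Keras guards against log(0). It helps explain why the first loss values in the training log sit just below 2.30 (2.2920 on the first batch): an untrained network that spreads its probability evenly over 10 classes scores ln 10 ≈ 2.3026. The "confident" predictions are made-up values for illustration.

```python
import math
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Renormalize each row, clip away exact 0/1, then average -sum(y * log p) over samples.
    y_pred = y_pred / y_pred.sum(axis=1, keepdims=True)
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

y_true = np.eye(10)[[3, 7, 1]]                  # three one-hot labels
uniform = np.full((3, 10), 0.1)                 # untrained net: 1/10 for every class
confident = np.where(y_true == 1, 0.91, 0.01)   # hypothetical: 0.91 on the right class

print(categorical_crossentropy(y_true, uniform), math.log(10))
print(categorical_crossentropy(y_true, confident), -math.log(0.91))
```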

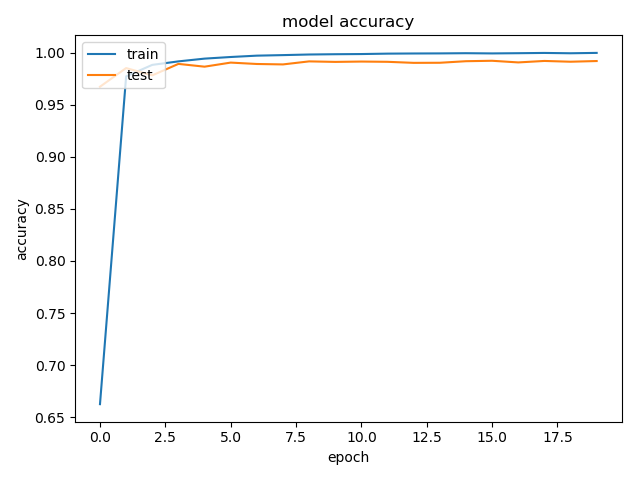

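```python
# Plot the accuracy history ('acc'/'val_acc' in TF 1.x; 'accuracy'/'val_accuracy' in TF 2.x)
plt.plot(history.history['acc'])
plt.plot(history.history['val_acc'])
plt.title("model accuracy")
plt.ylabel("accuracy")
plt.xlabel("epoch")
# The second curve is measured on the validation split, not on the test set
plt.legend(["train", "validation"], loc="upper left")
plt.show()
```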
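The `val_acc` curve is computed on the samples held out by `validation_split=0.2`. The Keras `fit` documentation says these are the last 20% of the arrays passed to `fit`, taken before any shuffling. That is where "Train on 48000 samples, validate on 12000 samples" comes from. The NumPy sketch below imitates that slicing; the helper name `split_like_keras` is hypothetical. It also computes the number of batches per epoch at `BATCH_SIZE = 200`.

```python
import math
import numpy as np

def split_like_keras(x, y, validation_split):
    # Hold out the final fraction of the samples, without shuffling first.
    split_at = int(len(x) * (1.0 - validation_split))
    return (x[:split_at], y[:split_at]), (x[split_at:], y[split_at:])

x = np.arange(60000)          # stand-ins for the 60000 MNIST training samples
y = np.arange(60000) % 10
(x_tr, y_tr), (x_val, y_val) = split_like_keras(x, y, 0.2)
steps_per_epoch = math.ceil(len(x_tr) / 200)
print(len(x_tr), len(x_val), x_val[0], steps_per_epoch)
```

Because the split is positional, data sorted by class would give a skewed validation set. MNIST's training set is not sorted that way.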

# 汇总损失函数历史数据

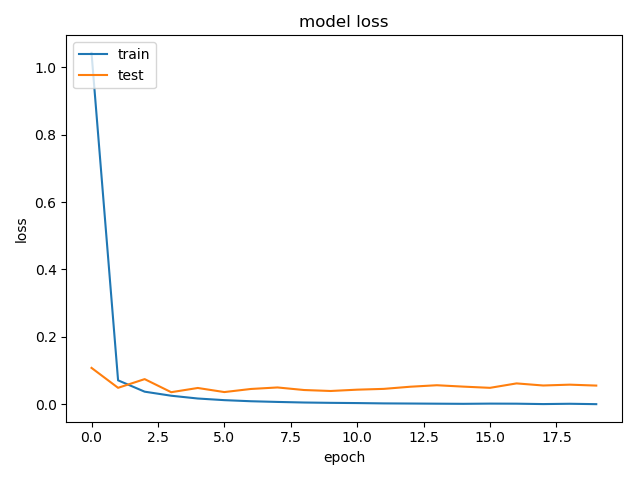

plt.plot(history.history["loss"])

plt.plot(history.history["val_loss"])

plt.title("model loss")

plt.ylabel("loss")

plt.xlabel("epoch")

plt.legend(["train", "test"], loc="upper left")

plt.show()

Partial output:

```
(60000, 28, 28, 1) train shape
(10000, 28, 28, 1) test shape
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 28, 28, 1)         0
_________________________________________________________________
block1_conv1 (Conv2D)        (None, 28, 28, 20)        520
_________________________________________________________________
block1_pool (MaxPooling2D)   (None, 14, 14, 20)        0
_________________________________________________________________
block1_conv2 (Conv2D)        (None, 14, 14, 50)        25050
_________________________________________________________________
block1_poo2 (MaxPooling2D)   (None, 7, 7, 50)          0
_________________________________________________________________
flatten (Flatten)            (None, 2450)              0
_________________________________________________________________
f1 (Dense)                   (None, 500)               1225500
_________________________________________________________________
prediction (Dense)           (None, 10)                5010
=================================================================
Total params: 1,256,080
Trainable params: 1,256,080
Non-trainable params: 0
_________________________________________________________________
```
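The Param # column can be checked by hand. A Conv2D layer has kernel × kernel × in_channels weights per filter plus one bias per filter. A Dense layer has in_units × units weights plus one bias per unit. Because the convolutions use `padding="same"`, only the 2×2, stride-2 pooling layers change the spatial size (28 → 14 → 7). A short plain-Python check:

```python
def conv2d_params(kernel, in_channels, filters):
    # kernel x kernel x in_channels weights per filter, plus one bias per filter
    return kernel * kernel * in_channels * filters + filters

def dense_params(in_units, units):
    # weight matrix plus one bias per output unit
    return in_units * units + units

def pool_out(size, pool=2, stride=2):
    # output size of 'valid' pooling, the MaxPooling2D default
    return (size - pool) // stride + 1

side = 28                           # 'same' convolutions keep 28x28
p_conv1 = conv2d_params(5, 1, 20)
side = pool_out(side)               # 14
p_conv2 = conv2d_params(5, 20, 50)
side = pool_out(side)               # 7
flat = side * side * 50             # Flatten output length
p_f1 = dense_params(flat, 500)
p_pred = dense_params(500, 10)
total = p_conv1 + p_conv2 + p_f1 + p_pred
print(p_conv1, p_conv2, flat, p_f1, p_pred, "{:,}".format(total))
```

The fully connected layer `f1` holds about 97.6% of all parameters (1,225,500 of 1,256,080), which is typical for LeNet-style networks.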

```
Train on 48000 samples, validate on 12000 samples
Epoch 1/20
  200/48000 [..............................] - ETA: 17:09 - loss: 2.2920 - acc: 0.1050
 2000/48000 [>.............................] - ETA: 1:40 - loss: 2.2958 - acc: 0.0990
 3800/48000 [=>............................] - ETA: 51s - loss: 2.2971 - acc: 0.1016
 5800/48000 [==>...........................] - ETA: 32s - loss: 2.2942 - acc: 0.1102
 7800/48000 [===>..........................] - ETA: 23s - loss: 2.2914 - acc: 0.1181
 9800/48000 [=====>........................] - ETA: 17s - loss: 2.2877 - acc: 0.1272
12000/48000 [======>.......................] - ETA: 13s - loss: 2.2808 - acc: 0.1493
14200/48000 [=======>......................] - ETA: 11s - loss: 2.2671 - acc: 0.2039
16400/48000 [=========>....................] - ETA: 9s - loss: 2.2313 - acc: 0.2579
18600/48000 [==========>...................] - ETA: 7s - loss: 2.1376 - acc: 0.3003
20800/48000 [============>.................] - ETA: 6s - loss: 2.0313 - acc: 0.3377
23000/48000 [=============>................] - ETA: 5s - loss: 1.8994 - acc: 0.3809
25200/48000 [==============>...............] - ETA: 4s - loss: 1.7820 - acc: 0.4192
27400/48000 [================>.............] - ETA: 3s - loss: 1.6768 - acc: 0.4542
29600/48000 [=================>............] - ETA: 3s - loss: 1.5772 - acc: 0.4874
31800/48000 [==================>...........] - ETA: 2s - loss: 1.4859 - acc: 0.5175
34000/48000 [====================>.........] - ETA: 2s - loss: 1.4071 - acc: 0.5435
36200/48000 [=====================>........] - ETA: 1s - loss: 1.3334 - acc: 0.5677
38000/48000 [======================>.......] - ETA: 1s - loss: 1.2792 - acc: 0.5852
40000/48000 [========================>.....] - ETA: 1s - loss: 1.2237 - acc: 0.6035
42000/48000 [=========================>....] - ETA: 0s - loss: 1.1731 - acc: 0.6200
44200/48000 [==========================>...] - ETA: 0s - loss: 1.1221 - acc: 0.6365
46400/48000 [============================>.] - ETA: 0s - loss: 1.0745 - acc: 0.6520
48000/48000 [==============================] - 6s 118us/step - loss: 1.0419 - acc: 0.6625 - val_loss: 0.1084 - val_acc: 0.9673
Epoch 2/20
...
Epoch 19/20
...
Epoch 20/20
  200/48000 [..............................] - ETA: 1s - loss: 2.3955e-06 - acc: 1.0000
 2400/48000 [>.............................] - ETA: 1s - loss: 1.6718e-04 - acc: 1.0000
 4600/48000 [=>............................] - ETA: 1s - loss: 8.8650e-05 - acc: 1.0000
 6800/48000 [===>..........................] - ETA: 0s - loss: 8.8033e-05 - acc: 1.0000
 9000/48000 [====>.........................] - ETA: 0s - loss: 0.0014 - acc: 0.9999
11200/48000 [======>.......................] - ETA: 0s - loss: 0.0011 - acc: 0.9999
13400/48000 [=======>......................] - ETA: 0s - loss: 9.5830e-04 - acc: 0.9999
15600/48000 [========>.....................] - ETA: 0s - loss: 9.3345e-04 - acc: 0.9999
17800/48000 [==========>...................] - ETA: 0s - loss: 8.3938e-04 - acc: 0.9999
20200/48000 [===========>..................] - ETA: 0s - loss: 7.6238e-04 - acc: 0.9999
22400/48000 [=============>................] - ETA: 0s - loss: 7.5516e-04 - acc: 0.9999
24400/48000 [==============>...............] - ETA: 0s - loss: 7.1492e-04 - acc: 0.9999
26600/48000 [===============>..............] - ETA: 0s - loss: 8.5314e-04 - acc: 0.9998
28800/48000 [=================>............] - ETA: 0s - loss: 8.3093e-04 - acc: 0.9998
31000/48000 [==================>...........] - ETA: 0s - loss: 7.8067e-04 - acc: 0.9998
33200/48000 [===================>..........] - ETA: 0s - loss: 7.3853e-04 - acc: 0.9998
35400/48000 [=====================>........] - ETA: 0s - loss: 8.4584e-04 - acc: 0.9998
37600/48000 [======================>.......] - ETA: 0s - loss: 9.0971e-04 - acc: 0.9997
39800/48000 [=======================>......] - ETA: 0s - loss: 9.6476e-04 - acc: 0.9997
42000/48000 [=========================>....] - ETA: 0s - loss: 9.3635e-04 - acc: 0.9997
44200/48000 [==========================>...] - ETA: 0s - loss: 8.9145e-04 - acc: 0.9997
46400/48000 [============================>.] - ETA: 0s - loss: 8.5120e-04 - acc: 0.9997
48000/48000 [==============================] - 1s 26us/step - loss: 8.4154e-04 - acc: 0.9997 - val_loss: 0.0559 - val_acc: 0.9919
   32/10000 [..............................] - ETA: 1s
 1152/10000 [==>...........................] - ETA: 0s
 2368/10000 [======>.......................] - ETA: 0s
 3552/10000 [=========>....................] - ETA: 0s
 4864/10000 [=============>................] - ETA: 0s
 6176/10000 [=================>............] - ETA: 0s
 7488/10000 [=====================>........] - ETA: 0s
 8800/10000 [=========================>....] - ETA: 0s
10000/10000 [==============================] - 0s 41us/step
test loss: 0.04930802768102574
test acc: 0.9923
dict_keys(['val_loss', 'val_acc', 'loss', 'acc'])
```

Loss curve

Accuracy curve