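Recently I have been building a data collection platform that uses OpenResty to push data into Kafka. Whether it is the Kafka broker list or any other piece of configuration, I want it to be updated dynamically, without reloading OpenResty. This matters most for API authentication: the configuration is small, and fetching it from Redis or MySQL on every request seems unnecessary, while keeping it in a Lua table is much faster. As for the Kafka brokers, the producer does notice newly added brokers (http://blog.csdn.net/liuzhenfeng/article/details/50688842), but things get awkward if the default broker written in the configuration becomes unavailable: when the service restarts, you either have to edit the configuration file or rediscover an available broker through service registration.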

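So in the end this comes down to service registration and discovery, which can be done with Consul, ZooKeeper, or etcd. Below is my implementation of dynamic configuration updates with OpenResty + Consul.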

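The principle is simple: OpenResty uses long polling plus a version number to pick up changes in Consul's KV store promptly. Consul provides a `wait` parameter and a modify index (the version number). If the KV data has not changed, Consul holds the request for 5 minutes (the default wait); if anything changes within those 5 minutes, it returns the result immediately. By comparing indexes we can tell whether the request timed out or the KV data was really modified. The principle is the same as in my previous post on nginx upsync (http://blog.csdn.net/yueguanghaidao/article/details/52801043).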
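The index comparison described above can be sketched in plain Lua. The function name `classify_index` and the returned labels are hypothetical and exist only for illustration. The reset-to-zero branch follows the advice in Consul's blocking query documentation for an index that goes backwards; it is not part of the original code.

```lua
-- Hypothetical helper: decide what a blocking-query response means by
-- comparing the index we sent with the index that came back.
-- Returns a label and the index to use for the next request.
local function classify_index(prev_index, new_index)
    if new_index < prev_index then
        -- The index went backwards (for example after a data restore):
        -- start over from 0 so the next query returns immediately.
        return "reset", 0
    elseif new_index == prev_index then
        -- Nothing changed: the wait simply timed out.
        return "timeout", prev_index
    end
    -- The index advanced: the watched data really changed.
    return "changed", new_index
end

-- Example: the index moved from 34610 to 34615, so the data changed.
local state, next_index = classify_index(34610, 34615)
```

Only the "changed" case needs to trigger a callback; the other two cases just decide which index to send next.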

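Consul's node and service endpoints also support blocking queries. Services are the better choice, since they come with health checks. The blocking API works the same way as for KV: just add an `index` parameter.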

    curl "172.20.20.10:8500/v1/catalog/nodes?index=4"
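As a small illustration, a blocking URL like the one above could be assembled as follows. The helper name `blocking_url` is hypothetical. `index` and `wait` are Consul's blocking-query parameters, and the `30s` value is only an example, not something the original setup uses.

```lua
-- Hypothetical helper: append blocking-query parameters to a Consul URL.
-- `wait` is optional, e.g. "30s" or "5m"; without it Consul uses its default.
local function blocking_url(base_url, index, wait)
    -- Use "&" if the URL already carries a query string, "?" otherwise
    local sep = base_url:find("?", 1, true) and "&" or "?"
    local url = base_url .. sep .. "index=" .. tostring(index)
    if wait then
        url = url .. "&wait=" .. wait
    end
    return url
end

local nodes_url = blocking_url("http://172.20.20.10:8500/v1/catalog/nodes", 4, "30s")
```

A shorter wait means more frequent requests, but each request holds the connection for less time.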

The code is as follows:

    local json = require "cjson.safe"
    local http = require "resty.http"

    -- Consul watcher
    --
    -- Set a key:
    --   curl -X PUT http://172.20.20.10:8500/v1/kv/broker/kafka/172.20.20.11:8080
    --
    -- List all keys under a prefix:
    --   curl http://172.20.20.10:8500/v1/kv/broker/kafka/?recurse
    --   [{"LockIndex":0,"Key":"broker/kafka/172.20.20.11:8080","Flags":0,"Value":null,"CreateIndex":34610,"ModifyIndex":34610}]
    --
    -- Blocking query: returns once the index exceeds 34610 (with several keys,
    -- use the largest ModifyIndex). With no update, Consul blocks for 5 minutes:
    --   curl "http://172.20.20.10:8500/v1/kv/broker/kafka/?recurse&index=34610"

    local cache = {}
    setmetatable(cache, { __mode = "kv" })

    -- Consul's default wait is 5 minutes, so time out slightly later than that
    local DEFAULT_TIMEOUT = 6 * 60 * 1000
    local RETRY_DELAY = 1 -- seconds to pause after a failed request

    local _M = {}
    local mt = { __index = _M }

    function _M.new(self, watch_url, callback)
        local watch = cache[watch_url]
        if watch ~= nil then
            return watch
        end

        watch = setmetatable({
            recurse_url = watch_url .. "?recurse",
            modify_index = 0,
            running = false,
            stopped = false, -- not named "stop", which would shadow the stop() method
            callback = callback,
        }, mt)

        cache[watch_url] = watch
        return watch
    end

    function _M.start(self)
        if self.running then
            ngx.log(ngx.ERR, "watch already started, url: ", self.recurse_url)
            return
        end

        local is_exiting = ngx.worker.exiting

        local watch_index = function(premature)
            if premature then -- the worker is already shutting down
                return
            end

            repeat
                local prev_index = self.modify_index
                local wait_url = self.recurse_url .. "&index=" .. prev_index
                ngx.log(ngx.ERR, "wait: ", wait_url)

                local result = self:request(wait_url)
                if result then
                    self:get_modify_index(result)
                    if self.modify_index > prev_index then -- ModifyIndex changed
                        ngx.log(ngx.ERR, "watch, url: ", self.recurse_url, " index change")
                        -- pcall keeps the loop alive if the callback raises an error
                        local ok, cb_err = pcall(self.callback, self, result)
                        if not ok then
                            ngx.log(ngx.ERR, "watch callback error: ", cb_err)
                        end
                    end
                else
                    -- do not spin when Consul is unreachable or returns no data
                    ngx.sleep(RETRY_DELAY)
                end
            until self.stopped or is_exiting()

            ngx.log(ngx.ERR, "watch exit, url: ", self.recurse_url)
        end

        local ok, err = ngx.timer.at(1, watch_index)
        if not ok then
            ngx.log(ngx.ERR, "failed to create watch timer: ", err)
            return
        end

        self.running = true
    end

    function _M.stop(self)
        self.stopped = true
        ngx.log(ngx.ERR, "watch stop, url: ", self.recurse_url)
    end

    -- Record the largest ModifyIndex seen in the returned entries
    function _M.get_modify_index(self, result)
        local max_index = self.modify_index
        for _, v in ipairs(result) do
            local index = v["ModifyIndex"]
            if index and index > max_index then
                max_index = index
            end
        end
        self.modify_index = max_index
    end

    function _M.request(self, url)
        -- Create the client here, inside the timer: a cosocket must not be
        -- shared between the handler that created it and a timer callback
        local httpc, err = http.new()
        if not httpc then
            return nil, err
        end
        httpc:set_timeout(DEFAULT_TIMEOUT)

        local res, req_err = httpc:request_uri(url)
        if not res then
            ngx.log(ngx.ERR, "watch request error, url: ", url, " error: ", req_err)
            return nil, req_err
        end

        -- cjson.safe returns nil instead of raising on an empty or invalid body
        local data = json.decode(res.body)
        if type(data) ~= "table" then
            return nil, "unexpected response, status: " .. res.status
        end
        return data
    end

    return _M

Using it is simple:

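    local watch = require "comm.watch"

    local broker_watch = watch:new(
        "http://172.20.20.10:8500/v1/kv/broker/kafka",
        -- called as callback(watcher, entries) whenever the index changes
        function(watcher, entries)
            ngx.log(ngx.ERR, "callback key: ", entries[1]["Key"])
        end
    )

    broker_watch:start()

For different kinds of changes, just handle them in the watch's callback function.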
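For the Kafka broker case, a callback might turn the returned KV entries into a list of host/port tables. The sketch below is only an illustration: the helper name `brokers_from_entries` and the output shape are assumptions, and it relies on the key layout used in this post (`broker/kafka/<host>:<port>`).

```lua
-- Hypothetical helper: turn Consul KV entries such as
-- { Key = "broker/kafka/172.20.20.11:8080" } into { host = ..., port = ... }.
local function brokers_from_entries(entries, prefix)
    local brokers = {}
    for _, entry in ipairs(entries) do
        local key = entry.Key
        -- Only consider keys that start with the watched prefix
        if type(key) == "string" and key:sub(1, #prefix) == prefix then
            -- The rest of the key must look like "<host>:<port>"
            local host, port = key:sub(#prefix + 1):match("^([^:/]+):(%d+)$")
            if host then
                brokers[#brokers + 1] = { host = host, port = tonumber(port) }
            end
        end
    end
    return brokers
end

local brokers = brokers_from_entries({
    { Key = "broker/kafka/172.20.20.11:8080", ModifyIndex = 34610 },
    { Key = "broker/kafka/" }, -- the bare prefix key is skipped
}, "broker/kafka/")
```

Inside the watch callback, the resulting list could replace a module-level broker table, so later requests pick up the new brokers without a reload.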

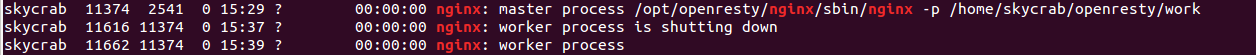

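One thing to note here: since the handler is essentially an infinite loop, it has to check whether the current nginx worker is exiting. Without the ngx.worker.exiting() check in the until condition, a reload leaves the old worker unable to exit, stuck in the "is shutting down" state, which is the state old workers are in while they exit during an nginx reload.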

Why does this happen? The OpenResty documentation describes the implementation as follows:

According to the current implementation, each "running timer" will take one (fake) connection record from the global connection record list configured by the standard worker_connections directive in nginx.conf. So ensure that the worker_connections directive is set to a large enough value that takes into account both the real connections and fake connections required by timer callbacks (as limited by the lua_max_running_timers directive).

In other words, each running timer is equivalent to a fake request and occupies one slot of worker_connections, and during a reload an old nginx worker exits only after all of its requests have finished. The documentation also says that if you use many timers, you need to raise the worker_connections setting in nginx.conf.

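OpenResty's performance deserves a mention here: with the producer in async mode, a single process easily exceeds 10,000 QPS. This also depends on your configuration parameters and message size. I recommend async mode over sync mode for the producer, since the performance difference is about 10x.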