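I won't say much about installing TensorFlow here; just follow the official website. Everyone hits their own snags along the way, so search for fixes as you need them.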

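Next, follow the official tutorial to get started: https://tensorflow.google.cn/get_started/get_started. Note that this URL ends in .cn; the .com version of the site needs a VPN to reach from mainland China.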

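Run through the tutorials first. I worked through four of them, up to TensorFlow Mechanics 101. For anything unclear, look it up online or in books. As references I bought Zhou Zhihua's 《机器学习》 (Machine Learning) and 《面向机器智能的TensorFlow实践》 (the Chinese edition of TensorFlow for Machine Intelligence). One covers theory and the other practice, and both are easy to read. Together they give a rough picture of terms such as regression, classification, feedforward neural networks, sigmoid, and softmax, and of how these ideas developed.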

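The official site opens with the computational graph. It then moves on to fitting the parameters of a linear regression, that is, solving for W and b in y = Wx + b. Below are my notes from hands-on practice and from the books I consulted.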
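The sketch below makes "fit W and b" concrete without depending on any TensorFlow version. It runs plain gradient descent on a sum-of-squares loss for y = Wx + b, using pure Python. Several choices are my own assumptions and not the tutorial's code: the function name `fit_line`, the starting values, the learning rate 0.01, the 1000 steps, and the toy data. The data is chosen so that the exact answer is W = -1, b = 1.

```python
def fit_line(xs, ys, lr=0.01, steps=1000):
    """Fit y = W*x + b by gradient descent on the sum of squared errors."""
    w, b = 0.3, -0.3  # arbitrary starting values (assumption)
    for _ in range(steps):
        # residual of the current model on every training point
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # gradients of sum((W*x + b - y)^2) with respect to W and b
        grad_w = sum(2 * e * x for e, x in zip(errors, xs))
        grad_b = sum(2 * e for e in errors)
        w -= lr * grad_w
        b -= lr * grad_b
    loss = sum((w * x + b - y) ** 2 for x, y in zip(xs, ys))
    return w, b, loss


# toy data lying exactly on y = -x + 1 (assumption, for illustration)
x_train = [1.0, 2.0, 3.0, 4.0]
y_train = [0.0, -1.0, -2.0, -3.0]
W, b, loss = fit_line(x_train, y_train)
print("W: %.4f b: %.4f loss: %.6g" % (W, b, loss))
```

In TensorFlow the gradients are derived automatically from the graph, so in practice you only write the loss. The manual gradient lines above are exactly the part the framework takes off your hands.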

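To start the environment you run source ~/tensorflow/bin/activate. An alias makes this quicker: add one line to your .bashrc file, alias tensorflow='source /home/yake/tensorflow/bin/activate', adjusting the path to your own home directory.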

To leave the environment, run the command deactivate.
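If you are not sure whether activate worked, you can check from inside Python by comparing interpreter prefixes. The sketch below relies only on standard `sys` attributes. It does not come from the tutorial, and `in_virtualenv` is a hypothetical helper name.

```python
import sys


def in_virtualenv():
    """Return True if the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix
    if hasattr(sys, "real_prefix"):
        return True
    # venv and newer virtualenv make sys.prefix differ from sys.base_prefix
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("inside a virtual environment:", in_virtualenv())
```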

Check the TensorFlow version:

```python
import tensorflow as tf

print(tf.__version__)
```
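The code in these notes uses the TensorFlow 1.x graph API (`tf.Session`, `tf.placeholder`). In TensorFlow 2.x those names are only available under `tf.compat.v1`, so the printed version tells you which form to use. The plain-Python sketch below shows that check. `major_version` and `session_hint` are hypothetical helpers, and the version strings are only examples.

```python
def major_version(version):
    """Return the major version from a version string such as '1.4.0'."""
    return int(version.split(".")[0])


def session_hint(version):
    """Hypothetical helper: where the graph-mode Session class lives."""
    return "tf.Session" if major_version(version) == 1 else "tf.compat.v1.Session"


# With TensorFlow installed you would pass tf.__version__ instead.
for v in ["1.4.0", "2.15.0"]:
    print(v, "->", session_hint(v))
```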

The computational graph diagram on the tutorial page looks nice. How do you save and view one like it?

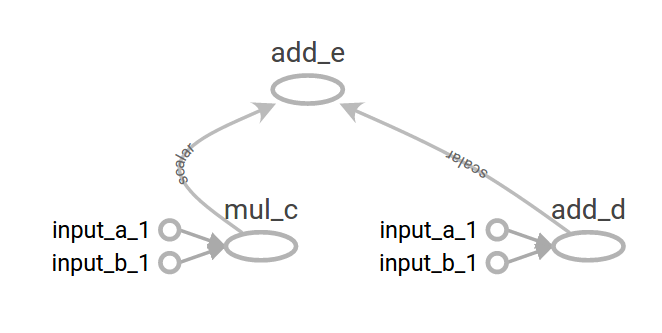

1. Saving the computational graph

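```python
import tensorflow as tf  # TensorFlow 1.x API

# Build the graph: two named constant inputs feeding a multiply and two adds
a = tf.constant(5, name="input_a")
b = tf.constant(3, name="input_b")
c = tf.multiply(a, b, name="mul_c")
d = tf.add(a, b, name="add_d")
e = tf.add(c, d, name="add_e")

# Run the graph; output is 5*3 + (5+3) = 23
sess = tf.Session()
output = sess.run(e)

# Write the graph definition to ./2tfbasic_save so TensorBoard can display it
writer = tf.summary.FileWriter('./2tfbasic_save', sess.graph)
writer.close()
sess.close()
```

To view the saved graph:

1. Activate the TensorFlow environment with source ~/tensorflow/bin/activate, or with the tensorflow alias.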

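2. From the directory that contains 2tfbasic_save, run tensorboard --logdir="2tfbasic_save", then open the address it prints in a browser (port 6006 by default).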
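`FileWriter` saves the graph's structure, meaning the named ops and the edges between them, and TensorBoard draws that structure. The pure-Python sketch below imitates the idea for the a/b/c/d/e graph above. Nodes are declared first and are evaluated only when `run` asks for them, much as nothing is computed until `sess.run(e)`. This is a toy model of deferred execution, not how TensorFlow actually stores graphs. `graph`, `run` and `edges` are hypothetical names.

```python
import operator

# Each node: (op, inputs). "const" nodes hold their value directly.
graph = {
    "input_a": ("const", 5),
    "input_b": ("const", 3),
    "mul_c": (operator.mul, ["input_a", "input_b"]),
    "add_d": (operator.add, ["input_a", "input_b"]),
    "add_e": (operator.add, ["mul_c", "add_d"]),
}


def run(name, cache=None):
    """Evaluate node `name` by recursively evaluating its inputs (memoized)."""
    cache = {} if cache is None else cache
    if name not in cache:
        op, args = graph[name]
        if op == "const":
            cache[name] = args
        else:
            cache[name] = op(*(run(arg, cache) for arg in args))
    return cache[name]


def edges():
    """List (source, target) pairs: the information a graph viewer draws."""
    return [(src, dst) for dst, (op, args) in graph.items()
            if op != "const" for src in args]


print(run("add_e"))  # 5*3 + (5+3) = 23
print(edges())
```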

2. Basic tensor operations

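At first I ran into a pile of terms: Constant, Variable, Placeholder. Fortunately some blog posts and books explain them reasonably well. Below I paste my Jupyter notebook directly; I exported the .ipynb to Python source and inserted it into this post.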
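The three terms play different roles in TensorFlow 1.x:

- A Constant's value is fixed when the graph is built.
- A Variable holds state that persists across `sess.run` calls and can be updated, for example by training.
- A Placeholder has no value until one is supplied through `feed_dict` at run time.

The toy sketch below models those roles in plain Python. `ToySession`, `model` and `CONST_SCALE` are hypothetical names, and the code is only an analogy, not how TensorFlow is implemented.

```python
CONST_SCALE = 2.0  # plays the role of a Constant: fixed when the model is defined


class ToySession:
    """Hypothetical stand-in for a session, to contrast the three kinds of values."""

    def __init__(self):
        self.variables = {}  # plays the role of Variables: state kept between runs

    def run(self, fn, feed_dict=None):
        # Placeholder values arrive only now, through feed_dict
        return fn(self.variables, feed_dict or {})


def model(variables, feed):
    if "x" not in feed:  # a placeholder that was never fed has no value
        raise ValueError("placeholder 'x' must be fed")
    return CONST_SCALE * variables["w"] * feed["x"]


sess = ToySession()
sess.variables["w"] = 1.0           # like running the variable initializer
print(sess.run(model, {"x": 3.0}))  # 6.0
sess.variables["w"] += 0.5          # like an assign op updating the Variable
print(sess.run(model, {"x": 3.0}))  # 9.0
```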

```python
# coding: utf-8

# In[1]:

# https://www.tensorflow.org/programmers_guide/tensors
# P59 shape, value, dtype, cast
# numpy array
import tensorflow as tf
import numpy as np

a = tf.constant(4.0)
print(a)  # prints the Tensor object (name, shape, dtype), not its value
print(" a \n{}".format(a))

# InteractiveSession becomes the default session, so .eval() needs no argument
sess = tf.InteractiveSession()
print("a value:\n{}\n".format(a.eval()))

a = tf.zeros(2)
print("a value before expand:\n{}".format(a.eval()))
print(a.shape)  # shape (2,)
a = tf.expand_dims(a, 1)  # insert a new axis at position 1
print("a value after expand:\n{}".format(a.eval()))
print(a.shape)  # shape (2, 1)

# caution with the double (): the whole shape is passed as one tuple, (2, 2)
a = tf.zeros((2, 2))
print("\na zeros 2x2 \n{}".format(a))
print("a value:\n{}".format(a.eval()))
sess.close()
```
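The cell above does three things:

- `tf.zeros(2)` creates a tensor of shape (2,).
- `tf.expand_dims(a, 1)` turns it into shape (2, 1).
- `tf.zeros((2, 2))` needs the double parentheses because the shape is a single argument; the second positional argument of `tf.zeros` is the dtype, not another dimension.

The pure-Python sketch below shows the same shape bookkeeping on nested lists. `zeros`, `shape_of` and `expand_dims` are my own hypothetical helpers that mimic, not reproduce, the TensorFlow functions.

```python
def zeros(shape):
    """Nested list of 0.0 values; `shape` is one tuple, e.g. zeros((2, 2))."""
    if not shape:
        return 0.0
    return [zeros(shape[1:]) for _ in range(shape[0])]


def shape_of(x):
    """Shape of a rectangular nested list, read off its first elements."""
    dims = []
    while isinstance(x, list):
        dims.append(len(x))
        x = x[0] if x else None
    return tuple(dims)


def expand_dims(x, axis):
    """Insert a new length-1 axis at position `axis` (non-negative axis only)."""
    if axis == 0:
        return [x]
    return [expand_dims(item, axis - 1) for item in x]


a = zeros((2,))         # shape (2,), like tf.zeros(2)
a2 = expand_dims(a, 1)  # shape (2, 1), like tf.expand_dims(a, 1)
print(shape_of(a), shape_of(a2), a2)
print(zeros((2, 2)))
```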

```python
# In[2]:

b = tf.constant([[1, 2, 3],
                 [4, 5, 6]])
print(b)
print("b \n{}".format(b))
print(b.shape)
print("\nb shape: \n{}".format(b.get_shape()))
print(b.get_shape())  # same static shape as b.shape
print(b.dtype)        # int32, inferred from the Python ints
b = tf.cast(b, tf.float32)
print(b.dtype)        # float32 after the cast

# np.array
c = tf.constant(np.array([
    [[1, 2, 3],
     [4, 5, 6]],
    [[1, 1, 1],
     [2, 2, 2]]
]))
print("\nc numpy array shape: {}".format(c.get_shape()))

sess = tf.InteractiveSession()
print("c numpy array value:\n{}".format(c.eval()))

d = tf.constant([
    [[1, 2, 3],
     [4, 5, 6]],
    [[7, 8, 9],
     [10, 11, 12]]
], tf.int32, name='matrix')
print("\nd tf array shape: {}".format(d.shape))
print("d tf array value:\n{}".format(d.eval()))
sess.close()
```
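The cell above builds rank-2 and rank-3 constants from nested lists. The reported shape is just the length at each nesting level, (2, 3) for b and (2, 2, 3) for c and d. That only makes sense when the nesting is rectangular. The pure-Python sketch below infers a shape, rejects ragged input, and applies an element-wise cast. `infer_shape` and `cast` are hypothetical helpers that mirror the idea, not TensorFlow's implementation.

```python
def infer_shape(x):
    """Infer the shape of a nested list and check that it is rectangular."""
    if not isinstance(x, list):
        return ()  # a scalar has an empty shape
    inner = [infer_shape(item) for item in x]
    if any(s != inner[0] for s in inner):
        raise ValueError("ragged nested list: %r" % (inner,))
    return (len(x),) + (inner[0] if inner else ())


def cast(x, to_type):
    """Apply a type conversion element-wise, like tf.cast(b, tf.float32)."""
    if isinstance(x, list):
        return [cast(item, to_type) for item in x]
    return to_type(x)


b = [[1, 2, 3], [4, 5, 6]]
c = [[[1, 2, 3], [4, 5, 6]], [[1, 1, 1], [2, 2, 2]]]
print(infer_shape(b), infer_shape(c))  # (2, 3) (2, 2, 3)
print(cast(b, float))
```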

```python
# In[3]:

import tensorflow as tf
import numpy as np

a = np.array([2, 3], dtype=np.int32)
b = tf.constant(5.0, tf.float32)
c = tf.constant([5.0, 6.0], tf.float32)
d = tf.constant([[5.0, 6.0, 7.0], [7.0, 8.0, 9.0]], tf.float32, name='matrix')
e = tf.placeholder(tf.int32, name='yake_input')

# tf.shape also accepts a numpy.array and returns its shape as a tensor
shape = tf.shape(a, name='a_shape')

sess = tf.Session()
# out = sess.run(e)  # would fail: the placeholder e must be fed via feed_dict
out = sess.run(shape)
sess.close()

print("shape of a: ", out)
print("a value: ", a)
# printing a Tensor shows its name, shape and dtype, not its value
print("b shape: ", b)
print("c shape: ", c)
print("d shape: ", d)
print("e shape: ", e)
```

About printing:
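The snippets below mix %-formatting, `str.format`, and a Python 2 `print` statement. Note that with %-formatting the values must form a proper tuple, so `(step loss)` without a comma is a syntax error. The self-contained Python 3 sketch below compares the three styles. The values are made up, not real training output.

```python
step, W, b, loss = 200, [-0.99], [0.97], 0.0012  # made-up values for illustration

# %-formatting: the values go in a tuple, separated by commas
line1 = "step: %s W: %s b: %s loss: %s" % (step, W, b, loss)
# str.format, as used in the notebook cells above
line2 = "step: {} loss: {}".format(step, loss)

print(line1)
print(line2)
# Python 3 print() separates multiple arguments with single spaces
print("Step:", step, "Loss:", loss)
```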

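```python
# Assumes sess, W, b, cost, train, x, y, x_train and y_train come from the
# linear-regression training script (not shown here).
for step in range(1001):
    curr_W, curr_b, curr_loss, _ = sess.run([W, b, cost, train], {x: x_train, y: y_train})
    if step % 200 == 0:
        print("step: %s W: %s b: %s loss: %s" % (step, curr_W, curr_b, curr_loss))

# Other ways to print
print("b value: {}".format(b.eval(session=sess)))  # eval needs a session
print("step: %s loss: %s" % (step, curr_loss))     # the tuple needs a comma
print("Step: ", step, "Loss: ", curr_loss)         # Python 3 form of the Python 2 print statement
```

About open courses: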

- https://www.leiphone.com/news/201701/0milWCyQO4ZbBvuW.html?from=timeline&viewType=weixin

- https://zhuanlan.zhihu.com/p/30344193