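Saving training progress (the model) in Keras after every batch, or at a set batch interval, so that an interruption during a long training run does not force you to retrain from scratch.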

Method

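Train with `model.train_on_batch(X_train, Y_train)`. Each call runs a single gradient update on one batch, so you write the training loop yourself and can call `model.save()` after every batch (or every N batches).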
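The complete example saves a file after every single step. If you only want a checkpoint every N batches, the loop needs a small condition. Below is a minimal sketch, assuming the model exposes Keras-style `train_on_batch(x, y)` and `save(path)` methods (any compiled Keras model does). The names `train_with_checkpoints`, `save_every`, `ckpt_dir` and `start_step` are hypothetical helpers of my own and are not part of Keras.

```python
import os


def train_with_checkpoints(model, x, y, num_steps, save_every=10,
                           ckpt_dir="checkpoints", start_step=0):
    """Run one train_on_batch call per step and save every `save_every` steps.

    `model` only needs Keras-style train_on_batch(x, y) and save(path)
    methods, e.g. a compiled keras Sequential model. Training covers steps
    start_step .. num_steps - 1. Returns the list of checkpoint paths written.
    """
    os.makedirs(ckpt_dir, exist_ok=True)
    saved = []
    for step in range(start_step, num_steps):
        cost = model.train_on_batch(x, y)
        # Save every `save_every` steps and always after the last step, so an
        # interruption loses at most `save_every - 1` completed steps.
        if (step + 1) % save_every == 0 or step == num_steps - 1:
            # Same naming scheme as the complete example: my_model<step>.h5
            path = os.path.join(ckpt_dir, "my_model" + str(step) + ".h5")
            model.save(path)
            saved.append(path)
            print(f"step {step}: loss={cost}, saved {path}")
    return saved
```

With the data and model from the complete example, `train_with_checkpoints(model, X_train, Y_train, num_steps=301, save_every=50)` would write `checkpoints/my_model49.h5`, `my_model99.h5`, and so on up to `my_model299.h5`, then `my_model300.h5` after the final step. That is 7 files instead of 301. A larger `save_every` costs less disk and I/O, but you lose more work if training is interrupted.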

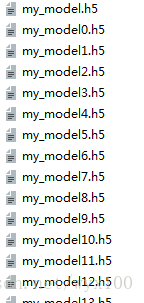

Figure: the saved model files

Complete example (a simple one that illustrates the idea)

```python
import numpy as np

np.random.seed(1337)  # for reproducibility

from keras.models import Sequential, load_model
from keras.layers import Dense

# create some data: y = 0.5 * x + 2 plus Gaussian noise
X = np.linspace(-1, 1, 200).reshape(-1, 1)  # shape (200, 1): one feature per sample
np.random.shuffle(X)  # randomize the data (shuffles rows)
Y = 0.5 * X + 2 + np.random.normal(0, 0.05, (200, 1))
X_train, Y_train = X[:160], Y[:160]  # first 160 data points
X_test, Y_test = X[160:], Y[160:]    # last 40 data points

model = Sequential()
model.add(Dense(units=1, input_dim=1))  # Keras 2+ uses `units`; `output_dim` was the Keras 1 name
model.compile(loss='mse', optimizer='sgd')

for step in range(301):
    # one gradient update, treating the whole training set as a single batch
    cost = model.train_on_batch(X_train, Y_train)
    # save a checkpoint after every batch: my_model0.h5 ... my_model300.h5
    strModel = 'my_model' + str(step) + '.h5'
    model.save(strModel)  # HDF5 file; requires h5py (pip3 install h5py)

# save
print('test before save: ', model.predict(X_test[0:2]))
model.save('my_model.h5')  # HDF5 file; requires h5py (pip3 install h5py)
del model  # deletes the existing model

# load
model = load_model('my_model.h5')
print('test after load: ', model.predict(X_test[0:2]))
```
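Saving checkpoints only helps if the next run picks up where the last one stopped. Below is a minimal sketch of the resume side. It assumes checkpoints follow the example's `'my_model' + str(step) + '.h5'` naming. `find_latest_checkpoint`, `resume_or_build`, `build_fn` and `load_fn` are hypothetical helpers and are not part of Keras. Step numbers are parsed as integers because a plain string sort would place `my_model10.h5` before `my_model9.h5`. The final `my_model.h5`, which has no step number, is ignored.

```python
import os
import re


def find_latest_checkpoint(ckpt_dir=".", prefix="my_model", ext=".h5"):
    """Return (path, step) for the highest-numbered <prefix><step><ext> file.

    Returns (None, -1) if the directory is missing or holds no such file.
    """
    pattern = re.compile(re.escape(prefix) + r"(\d+)" + re.escape(ext))
    best_path, best_step = None, -1
    if not os.path.isdir(ckpt_dir):
        return best_path, best_step
    for name in os.listdir(ckpt_dir):
        match = pattern.fullmatch(name)
        # compare step numbers as integers, not strings
        if match and int(match.group(1)) > best_step:
            best_step = int(match.group(1))
            best_path = os.path.join(ckpt_dir, name)
    return best_path, best_step


def resume_or_build(build_fn, load_fn, ckpt_dir=".", prefix="my_model", ext=".h5"):
    """Load the newest checkpoint if one exists, otherwise build a fresh model.

    Returns (model, start_step); training should continue from start_step.
    """
    path, step = find_latest_checkpoint(ckpt_dir, prefix, ext)
    if path is None:
        return build_fn(), 0
    return load_fn(path), step + 1
```

In the complete example, you could replace the three lines that create and compile the `Sequential` model with `model, start_step = resume_or_build(build_fn, load_model)`. Here `build_fn` is a small function of your own that wraps those three lines. Then loop with `for step in range(start_step, 301):`. By default, `model.save()` also stores the optimizer state, so the loaded model should continue training rather than restart its optimizer.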