Preface:

Setup: Windows 10 + TensorFlow 1.6.0 + Python 3.6.4 (laptop, no GPU)

Source code: https://github.com/tensorflow/models/tree/master/research/deeplab

Pretrained weights: https://github.com/tensorflow/models/blob/master/research/deeplab/g3doc/model_zoo.md

1. Run model_test.py

This checks the installation. If everything is set up correctly, it prints:

Ran 5 tests in 10.758s

2. Run build_voc2012_data.py to generate the .tfrecord data

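In the VOC2012 dataset, the JPEGImages folder holds the original images as [n*m*3] .jpg files, and the SegmentationClass folder holds the labels as [n*m*3] .png images. These labels must first be converted to single-channel [n*m*1] images.

For the conversion procedure, see: https://blog.csdn.net/weixin_41713230/article/details/81076292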
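The linked post describes the actual procedure. As a rough illustration of the idea only, here is a minimal pure-Python sketch. It assumes the label is available as rows of (R, G, B) tuples colored with the standard PASCAL VOC palette. The function names `voc_colormap` and `rgb_label_to_index` are made up for this example, and in practice you would read and write the .png files with an image library instead of nested lists.

```python
def voc_colormap(num_colors=256):
    """Build the standard PASCAL VOC palette: class index -> (R, G, B)."""
    palette = []
    for index in range(num_colors):
        r = g = b = 0
        c = index
        # Spread the bits of the index over the high bits of R, G and B.
        for shift in range(7, -1, -1):
            r |= (c & 1) << shift
            g |= ((c >> 1) & 1) << shift
            b |= ((c >> 2) & 1) << shift
            c >>= 3
        palette.append((r, g, b))
    return palette


def rgb_label_to_index(rgb_rows):
    """Convert an [n*m*3] color label into an [n*m] map of class indices."""
    color_to_index = {color: i for i, color in enumerate(voc_colormap())}
    return [[color_to_index[tuple(pixel)] for pixel in row] for row in rgb_rows]


# Hypothetical 2x2 label: background, aeroplane, person, and the "void" border.
label = [[(0, 0, 0), (128, 0, 0)],
         [(192, 128, 128), (224, 224, 192)]]
print(rgb_label_to_index(label))  # [[0, 1], [15, 255]]
```

Raw color values such as 128 or 224 lie far outside the 21 PASCAL VOC class indices, which may be why unconverted labels cause the errors described in the later steps.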

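python build_voc2012_data.py \

--image_folder="./VOC2012/JPEGImages" \ # path to the images

--semantic_segmentation_folder="./VOC2012/SegmentationClass" \ # path to the labels (single-channel)

--list_folder='./VOC2012/ImageSets/Segmentation' \ # path to the train.txt and val.txt files

--image_format="jpg" \ # image format

--output_dir='./tfrecord' # where the generated tfrecord data will be saved

The # annotations in the commands of this post are explanatory only. Remove them before running, because a shell does not continue a line when anything follows the backslash.

After a successful run, it prints the following: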

>> Converting image 366/1464 shard 0 # 1464 is the number of image names in train.txt

>> Converting image 732/1464 shard 1

>> Converting image 1098/1464 shard 2

>> Converting image 1464/1464 shard 3

>> Converting image 22/85 shard 0 # 85 is the number of image names in val.txt here (the original file has 1449)

>> Converting image 44/85 shard 1

>> Converting image 66/85 shard 2

>> Converting image 85/85 shard 3

3. Run train.py to train the model

python train.py \

--logtostderr \

--train_split="train" \ # dataset split used for training

--model_variant="xception_65" \

--atrous_rates=6 \

--atrous_rates=12 \

--atrous_rates=18 \

--output_stride=16 \

--decoder_output_stride=4 \

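--train_crop_size=256 \ # crop height; reduce it if you run out of memory

--train_crop_size=256 \ # crop width (the flag is given once per dimension)

--train_batch_size=2 \ # set to 2 because of limited memory

--training_number_of_steps=100 \ # try training for 100 steps

--fine_tune_batch_norm=False \ # set to True when the batch size is larger than 12

--tf_initial_checkpoint='./weights/deeplabv3_pascal_train_aug/model.ckpt' \ # pretrained weights to load (download link at the top of the post)

--train_logdir='./checkpoint' \ # where intermediate training results are saved

--dataset_dir='./datasets/tfrecord' # path to the tfrecord data generated in step 2

If the labels were not converted to single-channel images in step 2, the loss may blow up, or training may fail with the error: Loss is inf or nan.

A successful run prints the following: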

INFO:tensorflow:global step 1: loss = 0.3148 (15.549 sec/step)

INFO:tensorflow:global step 2: loss = 0.1740 (8.497 sec/step)

INFO:tensorflow:global step 3: loss = 0.5984 (6.847 sec/step)

INFO:tensorflow:global step 4: loss = 0.1599 (6.683 sec/step)

INFO:tensorflow:global step 5: loss = 0.3978 (6.617 sec/step)

INFO:tensorflow:global step 6: loss = 0.4886 (5.881 sec/step)

………………

INFO:tensorflow:global step 97: loss = 0.7356 (6.090 sec/step)

INFO:tensorflow:global step 98: loss = 0.5818 (6.163 sec/step)

INFO:tensorflow:global_step/sec: 0.163526

INFO:tensorflow:Recording summary at step 98.

INFO:tensorflow:global step 99: loss = 0.2523 (8.893 sec/step)

INFO:tensorflow:global step 100: loss = 0.2783 (6.421 sec/step)

INFO:tensorflow:Stopping Training.

INFO:tensorflow:Finished training! Saving model to disk.
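With a batch size of 2 the per-step loss is noisy, so a single line says little about progress. Below is a small sketch for summarizing such a log, assuming it was captured as text in the format shown above. The helper name `summarize_log` is made up for this example and is not part of DeepLab.

```python
import re

# Matches lines such as "global step 99: loss = 0.2523 (8.893 sec/step)".
STEP_LINE = re.compile(
    r"global step (\d+): loss = ([0-9.]+) \(([0-9.]+) sec/step\)")


def summarize_log(lines):
    """Return (steps seen, mean loss, mean sec/step) for matching log lines."""
    losses, durations = [], []
    for line in lines:
        match = STEP_LINE.search(line)
        if match:
            losses.append(float(match.group(2)))
            durations.append(float(match.group(3)))
    if not losses:
        return 0, None, None
    return (len(losses),
            sum(losses) / len(losses),
            sum(durations) / len(durations))


sample = [
    "INFO:tensorflow:global step 99: loss = 0.2523 (8.893 sec/step)",
    "INFO:tensorflow:global_step/sec: 0.163526",
    "INFO:tensorflow:global step 100: loss = 0.2783 (6.421 sec/step)",
]
count, mean_loss, mean_sec = summarize_log(sample)
print(count, round(mean_loss, 4), round(mean_sec, 3))
```

The `global_step/sec: 0.163526` line is skipped because it does not match the per-step pattern. That rate corresponds to roughly 6.1 seconds per step, in line with the per-step timings in the log.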

4. Run eval.py; the output is the mIOU value
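For reference, mIOU (mean intersection over union) takes, for each class, the number of correctly labeled pixels divided by the union of predicted and ground-truth pixels, then averages over the classes. Here is a minimal pure-Python sketch of that definition on a made-up confusion matrix. It illustrates the metric only and is not the code that eval.py runs.

```python
def mean_iou(confusion):
    """confusion[t][p] = number of pixels with true class t predicted as p."""
    ious = []
    for c in range(len(confusion)):
        true_positive = confusion[c][c]
        actual = sum(confusion[c])                    # row sum: ground truth
        predicted = sum(row[c] for row in confusion)  # column sum: predictions
        union = actual + predicted - true_positive
        if union > 0:  # ignore classes absent from both label and prediction
            ious.append(true_positive / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical 2-class example (background, object), counts in pixels.
confusion = [[50, 10],
             [5, 35]]
print(round(mean_iou(confusion), 4))  # 0.7346
```

In this example the per-class values are 50/65 and 35/50, and their mean is about 0.7346. The evaluation command itself: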

python eval.py \

--logtostderr \

--eval_split="val" \ # validation split

--model_variant="xception_65" \

--atrous_rates=6 \

--atrous_rates=12 \

--atrous_rates=18 \

--output_stride=16 \

--decoder_output_stride=4 \

--eval_crop_size=513 \

--eval_crop_size=513 \

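--checkpoint_dir='./checkpoint' \ # path to the model checkpoint

--eval_logdir='./validation_output' \ # output path for the results; create this folder manually

--dataset_dir='./datasets/tfrecord' # path to the validation tfrecord files

If the labels were not converted to single-channel images in step 2, this step fails with the error: ['predictions' out of bound].

A successful run prints the following: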

INFO:tensorflow:Restoring parameters from ./checkpoint\model.ckpt-100

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Starting evaluation at 2018-07-17-07:42:06

INFO:tensorflow:Evaluation [8/85]

INFO:tensorflow:Evaluation [16/85]

INFO:tensorflow:Evaluation [24/85]

INFO:tensorflow:Evaluation [32/85]

INFO:tensorflow:Evaluation [40/85]

INFO:tensorflow:Evaluation [48/85]

INFO:tensorflow:Evaluation [56/85]

INFO:tensorflow:Evaluation [64/85]

INFO:tensorflow:Evaluation [72/85]

INFO:tensorflow:Evaluation [80/85]

INFO:tensorflow:Evaluation [85/85]

INFO:tensorflow:Finished evaluation at 2018-07-17-07:48:46

miou_1.0[0.721848249]

5. Run vis.py to view the results

python vis.py \

--logtostderr \

--vis_split="val" \ # 85 images in total

--model_variant="xception_65" \

--atrous_rates=6 \

--atrous_rates=12 \

--atrous_rates=18 \

--output_stride=16 \

--decoder_output_stride=4 \

--vis_crop_size=513 \

--vis_crop_size=513 \

--checkpoint_dir='./checkpoint' \ # path to the model checkpoint

--vis_logdir='./vis_output' \ # where the predictions are saved; create it yourself

--dataset_dir='./datasets/tfrecord' # path to the generated tfrecord dataset

A successful run prints the following:

INFO:tensorflow:Visualizing batch 1 / 85

INFO:tensorflow:Visualizing batch 2 / 85

INFO:tensorflow:Visualizing batch 3 / 85

INFO:tensorflow:Visualizing batch 4 / 85

INFO:tensorflow:Visualizing batch 5 / 85

INFO:tensorflow:Visualizing batch 6 / 85

INFO:tensorflow:Visualizing batch 7 / 85

INFO:tensorflow:Visualizing batch 8 / 85

INFO:tensorflow:Visualizing batch 9 / 85

INFO:tensorflow:Visualizing batch 10 / 85

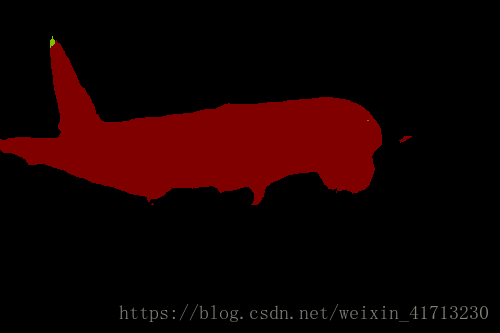

……………………最后,在输出文件夹('./vis_output')中查看模型的预测结果,如下: