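Using Ceph as backend storage:

Ceph provides three types of storage:

1. Object storage

2. File storage

3. Block storage

Architecture:

Metadata server (MDS, written as MD in these notes)

Cluster monitor (MON)

Object storage daemon (OSD)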

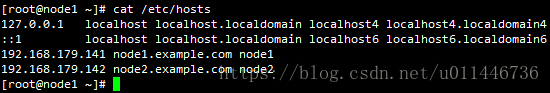

For the actual deployment, two hosts were created, node1 (RHEL 7.1) and node2 (RHEL 7.1). node1 acts as MDS/MON/OSD1 and node2 as OSD2.

Update the hosts file:

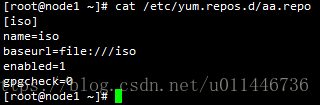
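The screenshot shows the resulting file. As a rough sketch, entries of this kind could be added idempotently with a small helper. The function name `add_host_entry` and the addresses in the usage comment are hypothetical placeholders, not the values used in this deployment.

```shell
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME already
# appears as a hostname on a non-comment line.
add_host_entry() {
    local ip="$1"
    local name="$2"
    local file="${3:-/etc/hosts}"
    # awk exits 0 only if some non-comment line lists NAME after the address.
    if awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit (found ? 0 : 1) }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s\t%s\n' "$ip" "$name" >> "$file"
}

# Usage (placeholder addresses; run as root on every node):
#   add_host_entry 192.0.2.11 node1
#   add_host_entry 192.0.2.12 node2
```

Running it twice with the same name leaves the file unchanged, which makes it safe to repeat on each node.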

Configure the yum repositories:

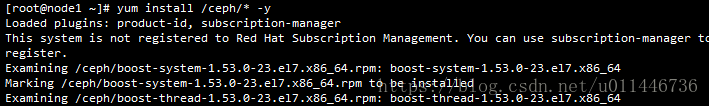

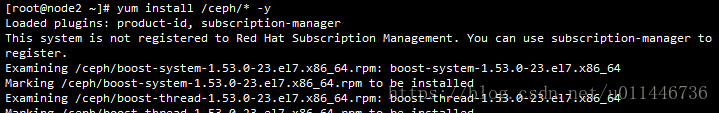

Upload the Ceph packages:

Install and deploy Ceph:

# yum install /ceph/* -y

创建步骤:

所有的操作都是在node1上配置,其中node1作为MD/MON/OSD1,node2作为OSD2

1.随意创建一个目录

mkdir xx ; cd xx

2.创建一个ceph集群

ceph-deploy new node1

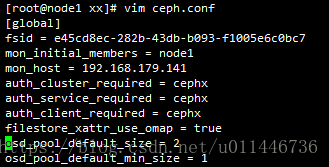

3.修改ceph配置的配置ceph.conf

osd_pool_default_size = 2

osd_pool_default_min_size = 1

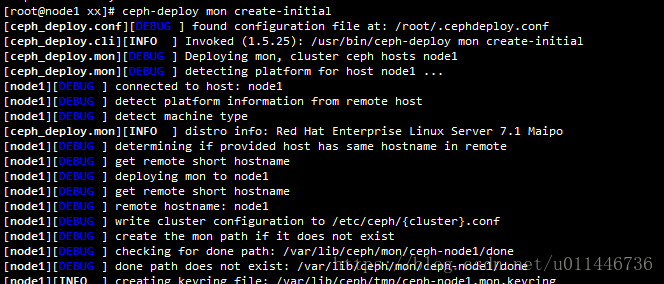

4.创建mon

ceph-deploy mon create-initial

如果配置文件修改了,想重新初始化的话:

ceph-deploy --overwrite-conf mon create-initial

5.准备OSD

ceph-deploy osd prepare node1:/yy node2:/xx

ceph-deploy osd activate node1:/yy node2:/xx

6.创建MD

ceph-deploy mds create node1

7.把密钥拷贝到所有的节点上去

ceph-deploy admin node1 node2
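The seven steps can be strung together in one function. The following is only a sketch: the names `run`, `deploy_ceph` and `DRY_RUN` are made up for illustration, and step 3 assumes that the ceph.conf generated by `ceph-deploy new` ends with its `[global]` section, so appended keys land there. With `DRY_RUN=1` the commands are printed instead of executed.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# deploy_ceph WORKDIR MON_HOST OSD_SPEC...   (OSD_SPEC is host:/directory)
# The body is a subshell, so the caller's working directory is untouched
# and "exit" only leaves the function.
deploy_ceph() (
    workdir="$1"; mon="$2"; shift 2

    run mkdir -p "$workdir" || exit 1                       # step 1
    [ "${DRY_RUN:-0}" = "1" ] || cd "$workdir" || exit 1
    run ceph-deploy new "$mon" || exit 1                    # step 2

    # Step 3: two replicas per object, keep serving I/O with one left.
    # Assumes [global] is the last section of the generated ceph.conf.
    if [ "${DRY_RUN:-0}" != "1" ]; then
        printf '%s\n' 'osd_pool_default_size = 2' \
                      'osd_pool_default_min_size = 1' >> ceph.conf
    fi

    run ceph-deploy mon create-initial || exit 1            # step 4
    run ceph-deploy osd prepare "$@" || exit 1              # step 5
    run ceph-deploy osd activate "$@" || exit 1
    run ceph-deploy mds create "$mon" || exit 1             # step 6

    # Step 7: derive the host list from the OSD specs (host:/dir -> host).
    hosts=""
    for spec in "$@"; do
        hosts="$hosts ${spec%%:*}"
    done
    # Word splitting of $hosts is intended here.
    run ceph-deploy admin $hosts
)

# Usage:
#   DRY_RUN=1; deploy_ceph xx node1 node1:/path1 node2:/path1
```

Reviewing the dry-run output first is cheap insurance, since `ceph-deploy new` and `mon create-initial` are awkward to undo.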

Detailed walkthrough:

Steps 1 to 3 are run exactly as listed above. The output from step 4 onward follows.

4. Create the monitor

ceph-deploy mon create-initial

If the configuration file has been changed and the monitor needs to be re-initialized:

ceph-deploy --overwrite-conf mon create-initial

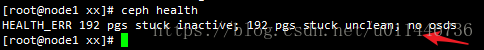

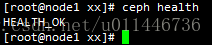

Check the cluster:

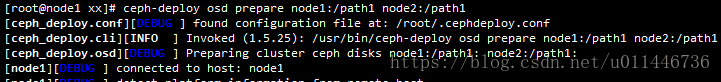

5. Prepare and activate the OSDs

ceph-deploy osd prepare node1:/path1 node2:/path1

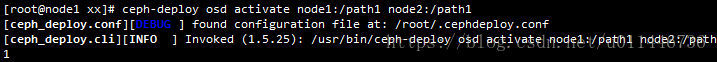

ceph-deploy osd activate node1:/path1 node2:/path1

[root@node1 xx]# ceph-deploy osd prepare node1:/path1 node2:/path1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.25): /usr/bin/ceph-deploy osd prepare node1:/path1 node2:/path1

[ceph_deploy.osd][DEBUG ] Preparing cluster ceph disks node1:/path1: node2:/path1:

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: Red Hat Enterprise Linux Server 7.1 Maipo

[ceph_deploy.osd][DEBUG ] Deploying osd to node1

[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node1][INFO ] Running command: udevadm trigger --subsystem-match=block --action=add

[ceph_deploy.osd][DEBUG ] Preparing host node1 disk /path1 journal None activate False

[node1][INFO ] Running command: ceph-disk -v prepare --fs-type xfs --cluster ceph -- /path1

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mkfs_options_xfs

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mkfs_options_xfs

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mount_options_xfs

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mount_options_xfs

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=osd_journal_size

[node1][WARNIN] DEBUG:ceph-disk:Preparing osd data dir /path1

[node1][INFO ] checking OSD status...

[node1][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host node1 is now ready for osd use.

[node2][DEBUG ] connected to host: node2

[node2][DEBUG ] detect platform information from remote host

[node2][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: Red Hat Enterprise Linux Server 7.1 Maipo

[ceph_deploy.osd][DEBUG ] Deploying osd to node2

[node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node2][WARNIN] osd keyring does not exist yet, creating one

[node2][DEBUG ] create a keyring file

[node2][INFO ] Running command: udevadm trigger --subsystem-match=block --action=add

[ceph_deploy.osd][DEBUG ] Preparing host node2 disk /path1 journal None activate False

[node2][INFO ] Running command: ceph-disk -v prepare --fs-type xfs --cluster ceph -- /path1

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mkfs_options_xfs

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mkfs_options_xfs

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mount_options_xfs

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mount_options_xfs

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=osd_journal_size

[node2][WARNIN] DEBUG:ceph-disk:Preparing osd data dir /path1

[node2][INFO ] checking OSD status...

[node2][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host node2 is now ready for osd use.

[root@node1 xx]# ceph-deploy osd activate node1:/path1 node2:/path1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.25): /usr/bin/ceph-deploy osd activate node1:/path1 node2:/path1

[ceph_deploy.osd][DEBUG ] Activating cluster ceph disks node1:/path1: node2:/path1:

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: Red Hat Enterprise Linux Server 7.1 Maipo

[ceph_deploy.osd][DEBUG ] activating host node1 disk /path1

[ceph_deploy.osd][DEBUG ] will use init type: sysvinit

[node1][INFO ] Running command: ceph-disk -v activate --mark-init sysvinit --mount /path1

[node1][WARNIN] DEBUG:ceph-disk:Cluster uuid is e45cd8ec-282b-43db-b093-f1005e6c0bc7

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[node1][WARNIN] DEBUG:ceph-disk:Cluster name is ceph

[node1][WARNIN] DEBUG:ceph-disk:OSD uuid is 297a37aa-8775-4aec-b898-606a61f18ce6

[node1][WARNIN] DEBUG:ceph-disk:Allocating OSD id...

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring osd create --concise 297a37aa-8775-4aec-b898-606a61f18ce6

[node1][WARNIN] DEBUG:ceph-disk:OSD id is 0

[node1][WARNIN] DEBUG:ceph-disk:Initializing OSD...

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /path1/activate.monmap

[node1][WARNIN] got monmap epoch 1

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster ceph --mkfs --mkkey -i 0 --monmap /path1/activate.monmap --osd-data /path1 --osd-journal /path1/journal --osd-uuid 297a37aa-8775-4aec-b898-606a61f18ce6 --keyring /path1/keyring

[node1][WARNIN] 2018-01-27 19:47:48.351622 7ff4fceaa7c0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[node1][WARNIN] 2018-01-27 19:47:48.376509 7ff4fceaa7c0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[node1][WARNIN] 2018-01-27 19:47:48.377265 7ff4fceaa7c0 -1 filestore(/path1) could not find 23c2fcde/osd_superblock/0//-1 in index: (2) No such file or directory

[node1][WARNIN] 2018-01-27 19:47:48.385546 7ff4fceaa7c0 -1 created object store /path1 journal /path1/journal for osd.0 fsid e45cd8ec-282b-43db-b093-f1005e6c0bc7

[node1][WARNIN] 2018-01-27 19:47:48.385611 7ff4fceaa7c0 -1 auth: error reading file: /path1/keyring: can't open /path1/keyring: (2) No such file or directory

[node1][WARNIN] 2018-01-27 19:47:48.385736 7ff4fceaa7c0 -1 created new key in keyring /path1/keyring

[node1][WARNIN] DEBUG:ceph-disk:Marking with init system sysvinit

[node1][WARNIN] DEBUG:ceph-disk:Authorizing OSD key...

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring auth add osd.0 -i /path1/keyring osd allow * mon allow profile osd

[node1][WARNIN] added key for osd.0

[node1][WARNIN] DEBUG:ceph-disk:ceph osd.0 data dir is ready at /path1

[node1][WARNIN] DEBUG:ceph-disk:Creating symlink /var/lib/ceph/osd/ceph-0 -> /path1

[node1][WARNIN] DEBUG:ceph-disk:Starting ceph osd.0...

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/sbin/service ceph --cluster ceph start osd.0

[node1][DEBUG ] === osd.0 ===

[node1][WARNIN] create-or-move updating item name 'osd.0' weight 0.04 at location {host=node1,root=default} to crush map

[node1][DEBUG ] Starting Ceph osd.0 on node1...

[node1][WARNIN] Running as unit run-12498.service.

[node1][INFO ] checking OSD status...

[node1][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[node1][INFO ] Running command: systemctl enable ceph

[node1][WARNIN] ceph.service is not a native service, redirecting to /sbin/chkconfig.

[node1][WARNIN] Executing /sbin/chkconfig ceph on

[node1][WARNIN] The unit files have no [Install] section. They are not meant to be enabled

[node1][WARNIN] using systemctl.

[node1][WARNIN] Possible reasons for having this kind of units are:

[node1][WARNIN] 1) A unit may be statically enabled by being symlinked from another unit's

[node1][WARNIN] .wants/ or .requires/ directory.

[node1][WARNIN] 2) A unit's purpose may be to act as a helper for some other unit which has

[node1][WARNIN] a requirement dependency on it.

[node1][WARNIN] 3) A unit may be started when needed via activation (socket, path, timer,

[node1][WARNIN] D-Bus, udev, scripted systemctl call, ...).

[node2][DEBUG ] connected to host: node2

[node2][DEBUG ] detect platform information from remote host

[node2][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: Red Hat Enterprise Linux Server 7.1 Maipo

[ceph_deploy.osd][DEBUG ] activating host node2 disk /path1

[ceph_deploy.osd][DEBUG ] will use init type: sysvinit

[node2][INFO ] Running command: ceph-disk -v activate --mark-init sysvinit --mount /path1

[node2][WARNIN] DEBUG:ceph-disk:Cluster uuid is e45cd8ec-282b-43db-b093-f1005e6c0bc7

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[node2][WARNIN] DEBUG:ceph-disk:Cluster name is ceph

[node2][WARNIN] DEBUG:ceph-disk:OSD uuid is 9245a83a-3058-45df-a8d2-57702442e230

[node2][WARNIN] DEBUG:ceph-disk:Allocating OSD id...

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring osd create --concise 9245a83a-3058-45df-a8d2-57702442e230

[node2][WARNIN] DEBUG:ceph-disk:OSD id is 1

[node2][WARNIN] DEBUG:ceph-disk:Initializing OSD...

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /path1/activate.monmap

[node2][WARNIN] got monmap epoch 1

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster ceph --mkfs --mkkey -i 1 --monmap /path1/activate.monmap --osd-data /path1 --osd-journal /path1/journal --osd-uuid 9245a83a-3058-45df-a8d2-57702442e230 --keyring /path1/keyring

[node2][WARNIN] 2018-01-27 19:47:56.804140 7ff81a30a7c0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[node2][WARNIN] 2018-01-27 19:47:56.818808 7ff81a30a7c0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[node2][WARNIN] 2018-01-27 19:47:56.819433 7ff81a30a7c0 -1 filestore(/path1) could not find 23c2fcde/osd_superblock/0//-1 in index: (2) No such file or directory

[node2][WARNIN] 2018-01-27 19:47:56.826569 7ff81a30a7c0 -1 created object store /path1 journal /path1/journal for osd.1 fsid e45cd8ec-282b-43db-b093-f1005e6c0bc7

[node2][WARNIN] 2018-01-27 19:47:56.826624 7ff81a30a7c0 -1 auth: error reading file: /path1/keyring: can't open /path1/keyring: (2) No such file or directory

[node2][WARNIN] 2018-01-27 19:47:56.826753 7ff81a30a7c0 -1 created new key in keyring /path1/keyring

[node2][WARNIN] DEBUG:ceph-disk:Marking with init system sysvinit

[node2][WARNIN] DEBUG:ceph-disk:Authorizing OSD key...

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring auth add osd.1 -i /path1/keyring osd allow * mon allow profile osd

[node2][WARNIN] added key for osd.1

[node2][WARNIN] DEBUG:ceph-disk:ceph osd.1 data dir is ready at /path1

[node2][WARNIN] DEBUG:ceph-disk:Creating symlink /var/lib/ceph/osd/ceph-1 -> /path1

[node2][WARNIN] DEBUG:ceph-disk:Starting ceph osd.1...

[node2][WARNIN] INFO:ceph-disk:Running command: /usr/sbin/service ceph --cluster ceph start osd.1

[node2][DEBUG ] === osd.1 ===

[node2][WARNIN] create-or-move updating item name 'osd.1' weight 0.04 at location {host=node2,root=default} to crush map

[node2][DEBUG ] Starting Ceph osd.1 on node2...

[node2][WARNIN] Running as unit run-11964.service.

[node2][INFO ] checking OSD status...

[node2][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[node2][INFO ] Running command: systemctl enable ceph

[node2][WARNIN] ceph.service is not a native service, redirecting to /sbin/chkconfig.

[node2][WARNIN] Executing /sbin/chkconfig ceph on

[node2][WARNIN] The unit files have no [Install] section. They are not meant to be enabled

[node2][WARNIN] using systemctl.

[node2][WARNIN] Possible reasons for having this kind of units are:

[node2][WARNIN] 1) A unit may be statically enabled by being symlinked from another unit's

[node2][WARNIN] .wants/ or .requires/ directory.

[node2][WARNIN] 2) A unit's purpose may be to act as a helper for some other unit which has

[node2][WARNIN] a requirement dependency on it.

[node2][WARNIN] 3) A unit may be started when needed via activation (socket, path, timer,

[node2][WARNIN] D-Bus, udev, scripted systemctl call, ...).

6. Create the MDS

ceph-deploy mds create node1

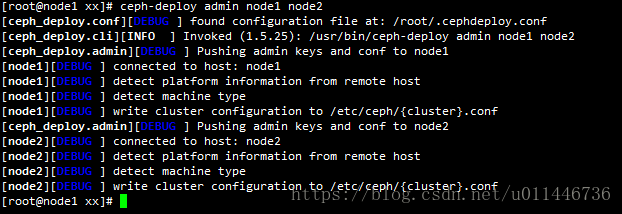

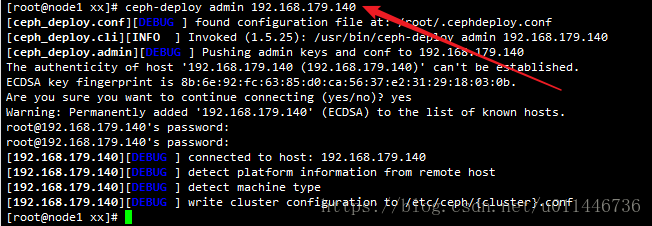

7. Copy the configuration and admin keyring to all nodes

ceph-deploy admin node1 node2
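Once the admin keyring is on a node, the cluster state can be queried from there with `ceph health`. A minimal sketch of a polling helper follows. The function name `wait_for_health` and its defaults are assumptions for illustration. A two-OSD cluster with `osd_pool_default_size = 2` would be expected to settle at `HEALTH_OK`, but this depends on the environment.

```shell
#!/usr/bin/env bash
# wait_for_health [TRIES] [DELAY_SECONDS]
# Polls `ceph health` until it reports HEALTH_OK or the tries run out.
wait_for_health() {
    local tries="${1:-30}"
    local delay="${2:-10}"
    local i=0
    local status=""
    while [ "$i" -lt "$tries" ]; do
        status=$(ceph health 2>/dev/null)
        case "$status" in
            HEALTH_OK*) echo "$status"; return 0 ;;
        esac
        i=$((i + 1))
        # Sleep only if another attempt follows.
        [ "$i" -lt "$tries" ] && sleep "$delay"
    done
    echo "cluster not healthy: ${status:-no response from ceph}" >&2
    return 1
}

# Usage:
#   wait_for_health 30 10 && ceph -s
```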

*********************************************************

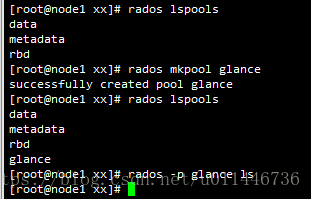

Create a pool to serve as the Glance backend store

#################################

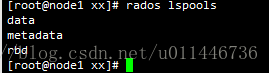

rados lspools

rados mkpool glance

rados rmpool glance glance --yes-i-really-really-mean-it

rados df --- show space usage per pool

rados -p glance ls --- list the objects in the pool

#########################

# rados lspools

创建pool存储数据

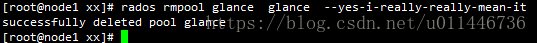

如何删除:

rados rmpool glance glance --yes-i-really-really-mean-it

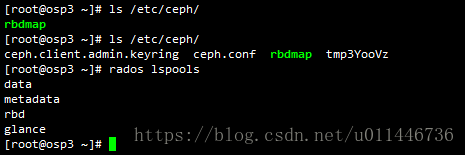
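The pool commands above can be wrapped so that creating the pool is safe to repeat. This sketch uses `ceph osd pool create` rather than `rados mkpool`, because it lets the placement-group count be set explicitly. The function name `ensure_pool` and the default of 64 placement groups are assumptions, not values taken from this deployment.

```shell
#!/usr/bin/env bash
# ensure_pool NAME [PG_NUM]
# Creates the pool only if `rados lspools` does not already list it.
ensure_pool() {
    local name="$1"
    local pg_num="${2:-64}"        # assumed default, size it for the cluster
    # -x matches the whole line, -F treats the name as a literal string.
    if rados lspools | grep -qxF -- "$name"; then
        echo "pool '$name' already exists"
        return 0
    fi
    ceph osd pool create "$name" "$pg_num"
}

# Usage:
#   ensure_pool glance
#   rados df
```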

Next, configure osp3 as a Ceph client.

Copy the configuration files created earlier to osp3:

# ceph-deploy admin 192.168.179.140

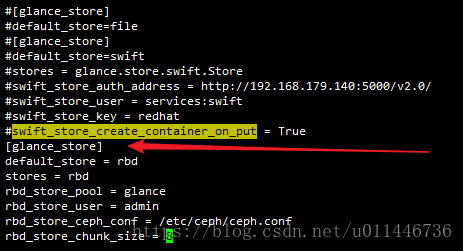

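Configuring Ceph as the Glance backend store:

Edit the Glance configuration file (typically /etc/glance/glance-api.conf; the settings below are copied from the official Ceph documentation):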

[glance_store]

default_store = rbd

stores = rbd

rbd_store_pool = glance

rbd_store_user = admin

rbd_store_ceph_conf = /etc/ceph/ceph.conf

rbd_store_chunk_size = 8
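After editing, it helps to confirm that the keys really sit under `[glance_store]` and not in another section. A small sketch that prints the active lines of one INI section is shown here. The helper name `ini_section` is hypothetical, and the path in the usage comment is the usual location of the Glance API configuration, which may differ on other installs.

```shell
#!/usr/bin/env bash
# ini_section FILE SECTION
# Prints the non-comment, non-blank lines that belong to [SECTION].
ini_section() {
    awk -v want="[$2]" '
        # A section header: remember whether it is the one we want.
        /^[ \t]*\[/ {
            line = $0
            gsub(/[ \t]/, "", line)
            in_sec = (line == want)
            next
        }
        # Inside the wanted section: skip comments (# or ;) and blank lines.
        in_sec && $0 !~ /^[ \t]*(#|;|$)/ { print }
    ' "$1"
}

# Usage:
#   ini_section /etc/glance/glance-api.conf glance_store
```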

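When connecting as the admin user, the key is as follows:

Change the permissions on the keyring file. The glance user only gets the "other" (o) permissions on it, so grant access with setfacl: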

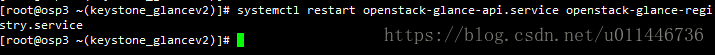
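The exact command is in the screenshot. A plausible equivalent, assuming the keyring is the `/etc/ceph/ceph.client.admin.keyring` file that `ceph-deploy admin` pushes, is sketched here. The function name `grant_keyring_read` is hypothetical.

```shell
#!/usr/bin/env bash
# grant_keyring_read USER [KEYRING]
# Adds a read-only ACL entry for USER and prints the resulting ACL.
grant_keyring_read() {
    local user="$1"
    local keyring="${2:-/etc/ceph/ceph.client.admin.keyring}"  # assumed path
    setfacl -m "u:${user}:r" "$keyring" || return 1
    getfacl -p "$keyring"
}

# Usage (as root on osp3):
#   grant_keyring_read glance
```

An ACL entry leaves the file's owner and mode alone, so other users still cannot read the admin key.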

Restart the services:

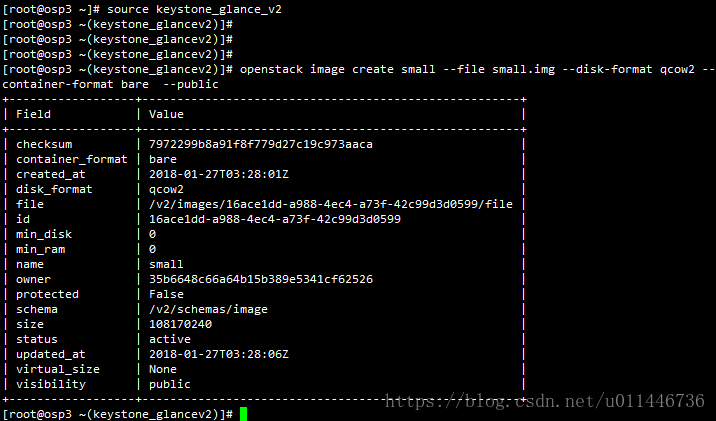

Create an image and check it:

# openstack image create small --file small.img --disk-format qcow2 --container-format bare --public

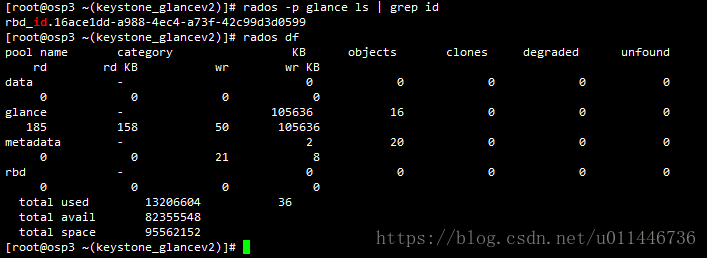

# rados -p glance ls | grep id

# rados df
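In the `grep` command, `id` stands for the ID of the image that was just created. The check can be scripted roughly as follows. The function name `image_objects` is hypothetical, and the usage comment assumes the image is still named `small`.

```shell
#!/usr/bin/env bash
# image_objects POOL IMAGE_ID
# Lists the RADOS objects whose names contain IMAGE_ID.
# Returns non-zero if the pool holds no such object.
image_objects() {
    local pool="$1"
    local image_id="$2"
    rados -p "$pool" ls | grep -F -- "$image_id"
}

# Usage:
#   id=$(openstack image show small -f value -c id)
#   image_objects glance "$id" && rados df
```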

Delete this image:

Restore the default settings:

Then restart the services: