The face recognition SDK used here is ArcSoft's face recognition SDK, which is free to use.

ArcSoft's official site: http://www.arcsoft.com.cn/ai/arcface.html

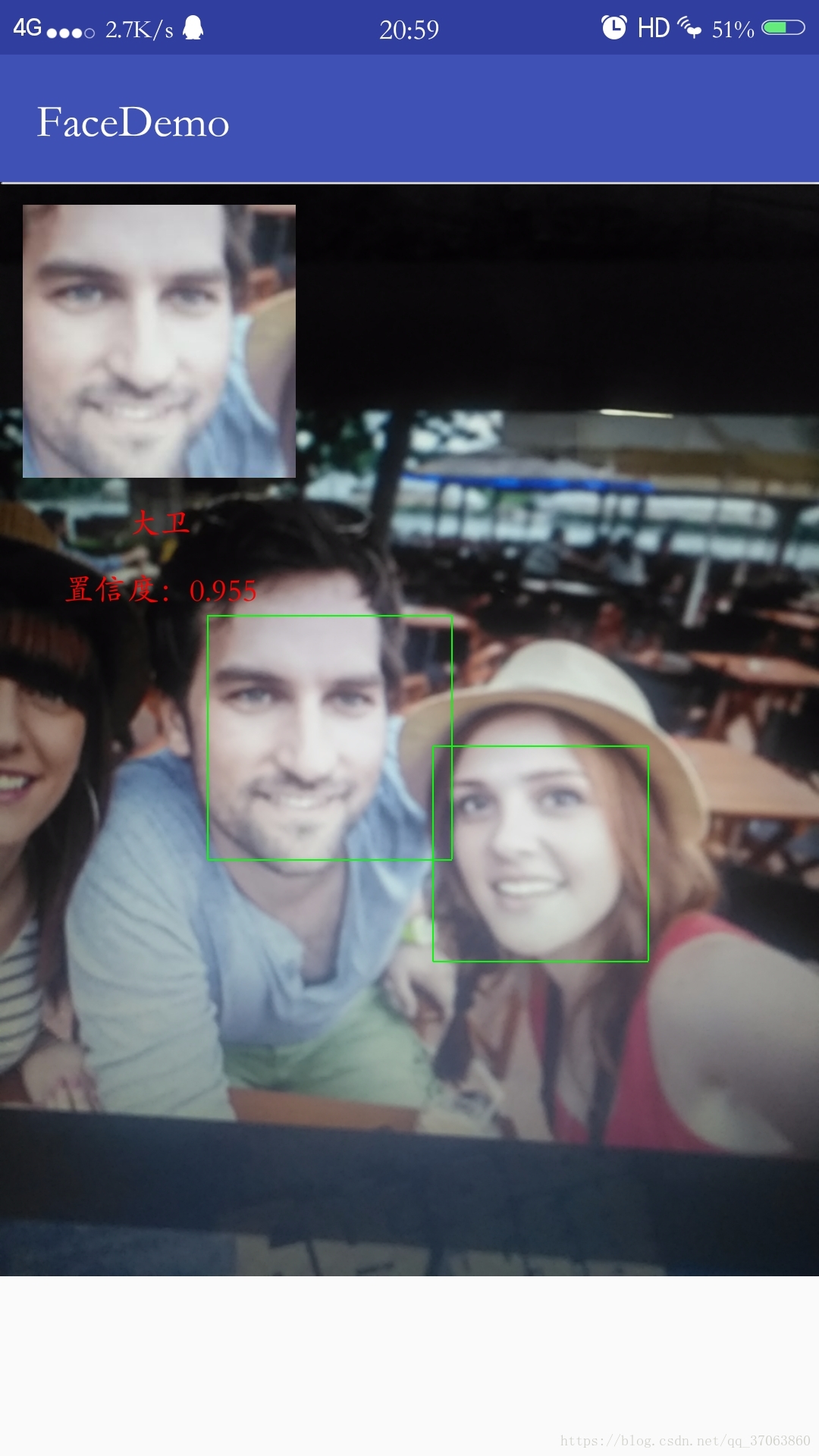

What faceDemo looks like:

These are notes on a few problems I ran into while building the project.

1. Launching the system camera

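Here I obtain the Uri with FileProvider.getUriForFile() rather than Uri.fromFile(). Android 7.0 changed its file permission model to better protect private files: an app targeting API 24 or higher may no longer expose a file:// URI outside the app, and doing so throws android.os.FileUriExposedException.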

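My phone runs Android 7.1, so I use the code below. On versions below Android 7.0 you can call Uri.fromFile() directly; to stay compatible with both, check the system version at runtime and call the appropriate method.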

// Check whether external storage (the SD card) is mounted

String state = Environment.getExternalStorageState();

if(state.equals(Environment.MEDIA_MOUNTED)){

photoPath = Environment.getExternalStorageDirectory() + "/face.png";

File imageDir = new File(photoPath);

if(!imageDir.exists()){

// Create a new, empty file at that path

try {

imageDir.createNewFile();

} catch (IOException e) {

e.printStackTrace();

}

}

Intent intent = new Intent(MediaStore.ACTION_IMAGE_CAPTURE);

//Uri uri = Uri.fromFile(imageDir);

Uri uri = FileProvider.getUriForFile(MainActivity.this, "com.demo.cjh.facedemo.fileprovider", imageDir);

intent.putExtra(MediaStore.EXTRA_OUTPUT,uri);

startActivityForResult(intent,11);

}else {

Toast.makeText(MainActivity.this,"SD card not mounted",Toast.LENGTH_SHORT).show();

}
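
The version check mentioned earlier can be sketched roughly as follows. This is a plain-Java illustration, not code from the project: `CaptureUriPolicy`, `needsFileProvider`, and `uriFactoryFor` are hypothetical names, and in a real app the `sdkInt` argument would be `Build.VERSION.SDK_INT`, with the branch calling `FileProvider.getUriForFile()` or `Uri.fromFile()` directly.

```java
// Sketch only: decides which Uri factory the camera code should use.
final class CaptureUriPolicy {
    // API level of Android 7.0 (Build.VERSION_CODES.N).
    static final int ANDROID_N = 24;

    // Hypothetical helper: true when a content:// URI from FileProvider is required.
    static boolean needsFileProvider(int sdkInt) {
        return sdkInt >= ANDROID_N;
    }

    // Hypothetical helper: names the call the camera code should make.
    static String uriFactoryFor(int sdkInt) {
        return needsFileProvider(sdkInt)
                ? "FileProvider.getUriForFile"
                : "Uri.fromFile";
    }
}
```

In the Activity, the same condition would read `Build.VERSION.SDK_INT >= Build.VERSION_CODES.N`.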

Setting up FileProvider.getUriForFile()

Step 1: register the provider in the manifest

<!-- android:authorities must match the authority string passed to FileProvider.getUriForFile() in code -->

<provider

android:name="android.support.v4.content.FileProvider"

android:authorities="com.demo.cjh.facedemo.fileprovider"

android:exported="false"

android:grantUriPermissions="true">

<meta-data

android:name="android.support.FILE_PROVIDER_PATHS"

android:resource="@xml/provider_paths" />

</provider>

Step 2: create res/xml/provider_paths.xml

<?xml version="1.0" encoding="utf-8"?>

<paths>

<external-path

name="external_files"

path="." />

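</paths>

Note: the authority string used in your code must match the android:authorities value in the manifest; otherwise FileProvider.getUriForFile() fails with a null-pointer error.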
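
To make the path configuration more concrete, here is a simplified model of how a single `<external-path name="external_files" path="." />` entry maps a file to a content:// URI. It is a sketch under assumptions, not FileProvider's actual code: `ProviderPathSketch` and `toContentUri` are hypothetical names, the storage root `/storage/emulated/0` is only a typical value, and the real class also percent-encodes path segments and handles several roots.

```java
// Simplified model of FileProvider's file-to-URI mapping for one configured root.
final class ProviderPathSketch {
    // authority: the android:authorities value from the manifest
    // name:      the name attribute of the <external-path> entry
    // root:      the directory that entry points at (assumed external storage root)
    static String toContentUri(String authority, String name, String root, String filePath) {
        String normalizedRoot = root.endsWith("/") ? root : root + "/";
        if (!filePath.startsWith(normalizedRoot)) {
            // The real FileProvider also rejects files outside every configured root.
            throw new IllegalArgumentException("File is outside the configured root: " + filePath);
        }
        String relative = filePath.substring(normalizedRoot.length());
        return "content://" + authority + "/" + name + "/" + relative;
    }
}
```

The file path itself never appears in the URI; the camera app only sees the authority, the `name` alias, and the path relative to the configured root.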

2. Receiving the camera photo in the Activity callback

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

// requestCode: identifies which request this result belongs to; compare it with the second argument passed to startActivityForResult()

// resultCode: Activity.RESULT_OK means the operation succeeded, Activity.RESULT_CANCELED means the user cancelled

}

3. Using the ArcSoft SDK

// Convert the bitmap to NV21, the image format the ArcSoft SDK expects

byte[] data = new byte[bp.getWidth() * bp.getHeight() * 3 / 2];

ImageConverter convert = new ImageConverter();

convert.initial(bp.getWidth(), bp.getHeight(), ImageConverter.CP_PAF_NV21);

if (convert.convert(bp, data)) {

Log.d(TAG, "convert ok!");

}

convert.destroy();

// Face detection: the result includes the bounding rectangle of each face

AFD_FSDKEngine FD_engine = new AFD_FSDKEngine();

AFD_FSDKVersion FD_version = new AFD_FSDKVersion();

// Holds the list of detected faces

List<AFD_FSDKFace> FD_result = new ArrayList<AFD_FSDKFace>();

// Initialize the face detection (FD) engine first

AFD_FSDKError FD_error = FD_engine.AFD_FSDK_InitialFaceEngine(FaceDB.appid,FaceDB.fd_key,AFD_FSDKEngine.AFD_OPF_0_HIGHER_EXT, 16, 5);

if(FD_error.getCode() != AFD_FSDKError.MOK ){

Toast.makeText(Register.this, "FD initialization failed, error code: " + FD_error.getCode(), Toast.LENGTH_SHORT).show();

}

// The input data is in NV21 format; detected faces are stored in FD_result

FD_error = FD_engine.AFD_FSDK_StillImageFaceDetection(data,bp.getWidth(), bp.getHeight(), AFD_FSDKEngine.CP_PAF_NV21, FD_result);

// Draw a box around each detected face

Bitmap bitmap = Bitmap.createBitmap(bp.getWidth(), bp.getHeight(), bp.getConfig());

Canvas canvas = new Canvas(bitmap);

canvas.drawBitmap(bp, 0, 0, null);

Paint mPaint = new Paint();

for (AFD_FSDKFace face : FD_result) {

mPaint.setColor(Color.RED);

mPaint.setStrokeWidth(10.0f);

mPaint.setStyle(Paint.Style.STROKE);

canvas.drawRect(face.getRect(), mPaint);

}

// canvas.restore();

bp = bitmap;

imageView.setImageBitmap(bp);

if(!FD_result.isEmpty()) {

// Extract the face feature

AFR_FSDKVersion FR_version1 = new AFR_FSDKVersion();

AFR_FSDKEngine FR_engine1 = new AFR_FSDKEngine();

// Holds the extracted face feature

AFR_FSDKFace FR_result1 = new AFR_FSDKFace();

// Initialize the face recognition (FR) engine

AFR_FSDKError FR_error1 = FR_engine1.AFR_FSDK_InitialEngine(FaceDB.appid, FaceDB.fr_key);

if(FR_error1.getCode() != AFR_FSDKError.MOK ){

Toast.makeText(Register.this, "FR initialization failed, error code: " + FR_error1.getCode(), Toast.LENGTH_SHORT).show();

}

// Extract the feature of the first detected face

FR_error1 = FR_engine1.AFR_FSDK_ExtractFRFeature(data,bp.getWidth(),bp.getHeight(), AFR_FSDKEngine.CP_PAF_NV21, new Rect(FD_result.get(0).getRect()), FD_result.get(0).getDegree(), FR_result1);

if(FR_error1.getCode() != AFR_FSDKError.MOK){

Toast.makeText(Register.this, "Could not extract a face feature; please try another picture", Toast.LENGTH_SHORT).show();

}else{

mAFR_FSDKFace = FR_result1.clone(); // keep a copy of the feature

// Crop the face region

int width = FD_result.get(0).getRect().width();

int height = FD_result.get(0).getRect().height();

Bitmap face_bitmap = Bitmap.createBitmap(width, height, Bitmap.Config.RGB_565);

Canvas face_canvas = new Canvas(face_bitmap);

face_canvas.drawBitmap(bp, FD_result.get(0).getRect(), new Rect(0, 0, width, height), null);

// Show the cropped face

imageView2.setImageBitmap(face_bitmap);

// Add the face feature to the face database

// App.mFaceDB.addFace("name",mAFR_FSDKFace);

}

// Release the FR engine

FR_error1 = FR_engine1.AFR_FSDK_UninitialEngine();

}else{

Toast.makeText(Register.this, "No face detected", Toast.LENGTH_SHORT).show();

}

FD_error = FD_engine.AFD_FSDK_UninitialFaceEngine();
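
The buffer size `width * height * 3 / 2` used for the NV21 conversion above follows from the format's layout: a full-resolution Y (luma) plane, then one V,U byte pair for every 2x2 block of pixels. The sketch below illustrates this in plain Java. It is not ArcSoft's `ImageConverter`; `Nv21Sketch` and its methods are hypothetical names, the color math is the common integer BT.601 approximation, and it assumes even width and height.

```java
// Illustrative ARGB -> NV21 conversion showing why the buffer is w*h*3/2 bytes.
final class Nv21Sketch {
    // Y plane: w*h bytes. Interleaved V,U plane: 2 bytes per 2x2 block = w*h/2 bytes.
    static int bufferSize(int width, int height) {
        return width * height * 3 / 2;
    }

    // argb: row-major pixels (0xAARRGGBB); width and height are assumed to be even.
    static byte[] argbToNv21(int[] argb, int width, int height) {
        byte[] out = new byte[bufferSize(width, height)];
        int uvIndex = width * height; // chroma starts right after the Y plane
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int p = argb[y * width + x];
                int r = (p >> 16) & 0xFF;
                int g = (p >> 8) & 0xFF;
                int b = p & 0xFF;
                int luma = ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16;
                out[y * width + x] = (byte) clamp(luma);
                // One V,U pair per 2x2 block, sampled at the block's top-left pixel.
                if ((y & 1) == 0 && (x & 1) == 0) {
                    int v = ((112 * r - 94 * g - 18 * b + 128) >> 8) + 128;
                    int u = ((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128;
                    out[uvIndex++] = (byte) clamp(v);
                    out[uvIndex++] = (byte) clamp(u);
                }
            }
        }
        return out;
    }

    private static int clamp(int value) {
        return Math.max(0, Math.min(255, value));
    }
}
```

For the 1280x960 preview size used later in this post, `bufferSize` gives 1,843,200 bytes per frame.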

Face detection layout

<?xml version="1.0" encoding="utf-8"?>

<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context="com.demo.cjh.facedemo.Detecter">

<com.guo.android_extend.widget.CameraSurfaceView

android:id="@+id/surfaceView"

android:layout_width="1dp"

android:layout_height="1dp"/>

<com.guo.android_extend.widget.CameraGLSurfaceView

android:id="@+id/glsurfaceView"

android:layout_width="144dp"

android:layout_height="176dp"

android:layout_marginTop="80dp"

android:layout_centerHorizontal="true"/>

<TextView

android:id="@+id/text1"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="faceDemo"

android:layout_below="@id/glsurfaceView"

android:layout_centerHorizontal="true"

android:paddingTop="10dp"/>

</RelativeLayout>

Face detection code
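
The listing that follows passes camera frames to a recognition thread through a single field (`mImageNV21`): `onPreview` stores a copy of a frame only while the field is null, and the recognition loop sets it back to null once it has finished with the frame. A minimal sketch of that single-slot hand-off is shown here. It is my own variation, not the author's code: `FrameSlot` is a hypothetical class, it uses `AtomicReference` where the original uses a plain field, and it frees the slot when the frame is taken rather than after processing.

```java
import java.util.concurrent.atomic.AtomicReference;

// Single-slot hand-off between a producer (camera callback) and a consumer (recognition thread).
final class FrameSlot {
    private final AtomicReference<byte[]> slot = new AtomicReference<>();

    // Producer side: keep a copy only if the slot is empty, so frames that
    // arrive while recognition is still busy are simply dropped.
    boolean offer(byte[] frame) {
        if (slot.get() != null) {
            return false;
        }
        return slot.compareAndSet(null, frame.clone());
    }

    // Consumer side: take the pending frame (or null if none) and free the slot.
    byte[] take() {
        return slot.getAndSet(null);
    }
}
```

Dropping frames this way keeps the preview callback fast, since feature extraction is far slower than the camera's frame rate.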

public class Detecter extends AppCompatActivity implements View.OnTouchListener, CameraSurfaceView.OnCameraListener, Camera.AutoFocusCallback {

private final String TAG = this.getClass().getSimpleName();

private CameraSurfaceView mSurfaceView;

private CameraGLSurfaceView mGLSurfaceView;

private Camera mCamera;

private TextView textView1 ;

int mCameraID;

int mCameraRotate;

boolean mCameraMirror;

private int mWidth, mHeight, mFormat;

List<AFT_FSDKFace> result = new ArrayList<>();

AFT_FSDKVersion version = new AFT_FSDKVersion();

AFT_FSDKEngine engine = new AFT_FSDKEngine();

byte[] mImageNV21 = null;

FRAbsLoop mFRAbsLoop = null;

AFT_FSDKFace mAFT_FSDKFace = null;

boolean isOK = false;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

mCameraID = getIntent().getIntExtra("Camera", 0) == 0 ? Camera.CameraInfo.CAMERA_FACING_BACK : Camera.CameraInfo.CAMERA_FACING_FRONT;

mCameraRotate = getIntent().getIntExtra("Camera", 0) == 0 ? 90 : 270;

mCameraMirror = getIntent().getIntExtra("Camera", 0) == 0 ? false : true;

mWidth = 1280;

mHeight = 960;

mFormat = ImageFormat.NV21;

setContentView(R.layout.activity_detecter);

mGLSurfaceView = (CameraGLSurfaceView) findViewById(R.id.glsurfaceView);

mGLSurfaceView.setOnTouchListener(this);

mSurfaceView = (CameraSurfaceView) findViewById(R.id.surfaceView);

mSurfaceView.setOnCameraListener(this);

mSurfaceView.setupGLSurafceView(mGLSurfaceView, true, mCameraMirror, mCameraRotate);

mSurfaceView.debug_print_fps(true, false);

textView1 = (TextView) findViewById(R.id.text1);

// Initialize the face tracking (FT) engine

AFT_FSDKError err = engine.AFT_FSDK_InitialFaceEngine(FaceDB.appid, FaceDB.ft_key, AFT_FSDKEngine.AFT_OPF_0_HIGHER_EXT, 16, 5);

Log.d(TAG, "AFT_FSDK_InitialFaceEngine =" + err.getCode());

err = engine.AFT_FSDK_GetVersion(version);

Log.d(TAG, "AFT_FSDK_GetVersion:" + version.toString() + "," + err.getCode());

mFRAbsLoop = new FRAbsLoop(); // recognition thread

mFRAbsLoop.start();

}

class FRAbsLoop extends AbsLoop {

AFR_FSDKVersion version = new AFR_FSDKVersion();

AFR_FSDKEngine engine = new AFR_FSDKEngine();

AFR_FSDKFace result = new AFR_FSDKFace();

List<FaceDB.FaceRegist> mResgist = App.mFaceDB.mRegister;

List<ASAE_FSDKFace> face1 = new ArrayList<>();

List<ASGE_FSDKFace> face2 = new ArrayList<>();

@Override

public void setup() {

AFR_FSDKError error = engine.AFR_FSDK_InitialEngine(FaceDB.appid, FaceDB.fr_key);

Log.d(TAG, "AFR_FSDK_InitialEngine = " + error.getCode());

error = engine.AFR_FSDK_GetVersion(version);

Log.d(TAG, "FR=" + version.toString() + "," + error.getCode()); //(210, 178 - 478, 446), degree = 1 780, 2208 - 1942, 3370

}

@Override

public void loop() {

if(isOK){

try {

Thread.sleep(3000);

} catch (InterruptedException e) {

e.printStackTrace();

}

mFRAbsLoop.shutdown();

Detecter.this.finish();

}

// Run face recognition on the captured frame

if (mImageNV21 != null) {

long time = System.currentTimeMillis();

AFR_FSDKError error = engine.AFR_FSDK_ExtractFRFeature(mImageNV21, mWidth, mHeight, AFR_FSDKEngine.CP_PAF_NV21, mAFT_FSDKFace.getRect(), mAFT_FSDKFace.getDegree(), result);

Log.d(TAG, "AFR_FSDK_ExtractFRFeature cost :" + (System.currentTimeMillis() - time) + "ms");

Log.d(TAG, "Face=" + result.getFeatureData()[0] + "," + result.getFeatureData()[1] + "," + result.getFeatureData()[2] + "," + error.getCode());

AFR_FSDKMatching score = new AFR_FSDKMatching();

float max = 0.0f;

String name = null;

for (FaceDB.FaceRegist fr : mResgist) {

for (AFR_FSDKFace face : fr.mFaceList) {

error = engine.AFR_FSDK_FacePairMatching(result, face, score);

Log.d(TAG, "Score:" + score.getScore() + ", AFR_FSDK_FacePairMatching=" + error.getCode());

if (max < score.getScore()) {

max = score.getScore();

name = fr.mName;

}

}

}

//age & gender

face1.clear();

face2.clear();

//crop

byte[] data = mImageNV21;

YuvImage yuv = new YuvImage(data, ImageFormat.NV21, mWidth, mHeight, null);

ExtByteArrayOutputStream ops = new ExtByteArrayOutputStream();

yuv.compressToJpeg(mAFT_FSDKFace.getRect(), 80, ops);

final Bitmap bmp = BitmapFactory.decodeByteArray(ops.getByteArray(), 0, ops.getByteArray().length);

try {

ops.close();

} catch (IOException e) {

e.printStackTrace();

}

if (max > 0.6f) {// a similarity score above 0.6 counts as a successful match

//fr success.

final float max_score = max;

Log.d(TAG, "fit Score:" + max + ", NAME:" + name);

final String mNameShow = name;

runOnUiThread(new Runnable() {

@Override

public void run() {

textView1.setText("Recognition succeeded!");

textView1.setTextColor(Color.BLACK);

isOK = true;

}

});

} else {

final String mNameShow = "Unrecognized";

Log.d(TAG, "Recognition result: " + name);

runOnUiThread(new Runnable() {

@Override

public void run() {

textView1.setText("Recognition failed!");

textView1.setTextColor(Color.RED);

}

});

}

mImageNV21 = null;

}

}

@Override

public void over() {

AFR_FSDKError error = engine.AFR_FSDK_UninitialEngine();

Log.d(TAG, "AFR_FSDK_UninitialEngine : " + error.getCode());

}

}

@Override

protected void onDestroy() {

// TODO Auto-generated method stub

super.onDestroy();

// Shut down the recognition thread and release the FT engine

mFRAbsLoop.shutdown();

AFT_FSDKError err = engine.AFT_FSDK_UninitialFaceEngine();

Log.d(TAG, "AFT_FSDK_UninitialFaceEngine =" + err.getCode());

}

@Override

public boolean onTouch(View v, MotionEvent event) {

CameraHelper.touchFocus(mCamera, event, v, this); // tap to focus

return false;

}

@Override

public Camera setupCamera() {

// Configure the camera before it starts: NV21 preview format and the preview width and height

mCamera = Camera.open(mCameraID);

try {

Camera.Parameters parameters = mCamera.getParameters();

parameters.setPreviewSize(mWidth, mHeight);

parameters.setPreviewFormat(mFormat);

for( Camera.Size size : parameters.getSupportedPreviewSizes()) {

Log.d(TAG, "SIZE:" + size.width + "x" + size.height);

}

for( Integer format : parameters.getSupportedPreviewFormats()) {

Log.d(TAG, "FORMAT:" + format);

}

List<int[]> fps = parameters.getSupportedPreviewFpsRange();

for(int[] count : fps) {

Log.d(TAG, "T:");

for (int data : count) {

Log.d(TAG, "V=" + data);

}

}

//parameters.setPreviewFpsRange(15000, 30000);

//parameters.setExposureCompensation(parameters.getMaxExposureCompensation());

//parameters.setWhiteBalance(Camera.Parameters.WHITE_BALANCE_AUTO);

//parameters.setAntibanding(Camera.Parameters.ANTIBANDING_AUTO);

//parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_AUTO);

//parameters.setSceneMode(Camera.Parameters.SCENE_MODE_AUTO);

//parameters.setColorEffect(Camera.Parameters.EFFECT_NONE);

mCamera.setParameters(parameters);

} catch (Exception e) {

e.printStackTrace();

}

if (mCamera != null) {

mWidth = mCamera.getParameters().getPreviewSize().width;

mHeight = mCamera.getParameters().getPreviewSize().height;

}

return mCamera;

}

@Override

public void setupChanged(int format, int width, int height) {

}

@Override

public boolean startPreviewLater() {

return false;

}

@Override

public Object onPreview(byte[] data, int width, int height, int format, long timestamp) {

// Receive a preview frame and detect the face rectangles in it

AFT_FSDKError err = engine.AFT_FSDK_FaceFeatureDetect(data, width, height, AFT_FSDKEngine.CP_PAF_NV21, result);

Log.d(TAG, "AFT_FSDK_FaceFeatureDetect =" + err.getCode());

Log.d(TAG, "Face=" + result.size());

for (AFT_FSDKFace face : result) {

Log.d(TAG, "Face:" + face.toString());

}

if (mImageNV21 == null) {

if (!result.isEmpty()) {

mAFT_FSDKFace = result.get(0).clone();

mImageNV21 = data.clone();

} else {

//mHandler.postDelayed(hide, 3000);

}

}

//copy rects

Rect[] rects = new Rect[result.size()];

for (int i = 0; i < result.size(); i++) {

rects[i] = new Rect(result.get(i).getRect());

}

//clear result.

result.clear();

//return the rects for render.

return rects;

}

@Override

public void onBeforeRender(CameraFrameData data) {

}

@Override

public void onAfterRender(CameraFrameData data) {

// Draw the face rectangles

mGLSurfaceView.getGLES2Render().draw_rect((Rect[])data.getParams(), Color.GREEN, 2);

}

@Override

public void onAutoFocus(boolean success, Camera camera) {

}

}
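
The matching step inside `loop()` boils down to: score the extracted feature against every registered face, remember the best score and its name, and accept the result only if the best score exceeds 0.6. The plain-Java sketch below isolates that logic. `BestMatchSketch` and its methods are hypothetical names, and the cosine similarity is only a stand-in, since the real score comes from `AFR_FSDK_FacePairMatching` and ArcSoft's feature format is opaque.

```java
import java.util.Map;

// Best-score search with an acceptance threshold, mirroring the loop in FRAbsLoop.
final class BestMatchSketch {
    // Stand-in similarity (cosine); assumes both vectors have the same length.
    static float similarity(float[] a, float[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0f;
        }
        return (float) (dot / Math.sqrt(normA * normB));
    }

    // Returns the registered name with the highest score above the threshold, or null.
    static String bestMatch(float[] probe, Map<String, float[]> registered, float threshold) {
        float max = 0.0f;
        String name = null;
        for (Map.Entry<String, float[]> entry : registered.entrySet()) {
            float score = similarity(probe, entry.getValue());
            if (score > max) {
                max = score;
                name = entry.getKey();
            }
        }
        return max > threshold ? name : null;
    }
}
```

Returning null below the threshold keeps the "best candidate" from being mistaken for a confirmed identity, which matters when the face database is small.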