1. Introduction:

Paper: "Toward reliable signals decoding for electroencephalogram: A benchmark study to EEGNeX", Biomedical Signal Processing and Control, IF: 5.1, JCR: Q1

Authors: Xia Chen a,*, Xiangbin Teng b, Han Chen c, Yafeng Pan c, Philipp Geyer a

Affiliations: a Department of Sustainable Building Systems, Leibniz University Hannover, Germany; b Department of Psychology, The Chinese University of Hong Kong, Hong Kong Special Administrative Region, China; c Department of Psychology and Behavioral Sciences, Zhejiang University, China

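This is a strong paper, jointly published by Leibniz University Hannover, The Chinese University of Hong Kong and Zhejiang University. Its main contribution is an improved version of the 2018 EEGNet model. The authors tried many modifications. One was adding a CBAM attention module to the original EEGNet, which they report brought no improvement. They identify four modifications that worked well. Together, these make EEGNeX an advanced, lightweight deep-learning model built specifically for EEG signals that rivals EEGNet. The rest of this post walks through the parts of EEGNeX that differ from EEGNet: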

2. Four improvements:

EEGNeX uses EEGNet as its backbone. Like EEGNet, apart from the input and output layers it has three blocks: block1, block2 and block3. The four improvements are:

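1. Strengthening the extraction of spatial representations from the EEG input (block1)

2. Replacing the depthwise separable convolution with two dilated convolutions (block3)

3. Using an inverted bottleneck structure (block1, 2, 3)

4. Enlarging the receptive field through padding in the convolution layers and fewer activation layers, to expand the network (block3)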

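EEGNet and EEGNeX architecture diagrams:

EEGNet architecture diagram

EEGNet, column by column:

Column 1: input layer.

Column 2: a standard Conv2d layer that extracts frequency information (block1).

Column 3: a depthwise convolution layer that extracts spatial information (block2).

Column 4: a depthwise separable convolution (a depthwise convolution followed by a pointwise convolution) that learns the temporal features of each feature map (block3).

Column 5: output layer.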
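The following sketch traces tensor shapes through the EEGNet_8_2 function from Section 4.1 to make the EEGNet block layout concrete. That function uses:

- channels_first tensors;
- 'same' padding on the temporal and separable convolutions;
- a 'valid' (C, 1) depthwise kernel;
- average pooling by 4 and then by 8, with 'same' padding.

The electrode count C=22 and length T=256 are hypothetical example values. The helper `trace_eegnet_8_2` is only an illustration and is not part of the original code.

```python
import math

def trace_eegnet_8_2(n_channels, n_samples, f1=8, depth=2, f2=16):
    """Return (layer, shape) pairs for an EEGNet-8,2-style stack (batch dim omitted).

    Assumes channels_first input of shape (1, n_channels, n_samples), as in EEGNet_8_2.
    """
    shapes = []
    # block1: temporal Conv2D (1, 64) with padding='same' keeps both spatial dims
    shapes.append(("temporal conv", (f1, n_channels, n_samples)))
    # block2: depthwise Conv2D (n_channels, 1), 'valid' -> electrode axis collapses to 1
    shapes.append(("depthwise conv", (f1 * depth, 1, n_samples)))
    # AvgPool2D (1, 4) with padding='same' -> ceil division of the time axis
    t = math.ceil(n_samples / 4)
    shapes.append(("avg pool /4", (f1 * depth, 1, t)))
    # block3: SeparableConv2D (1, 16) with padding='same' keeps the time axis
    shapes.append(("separable conv", (f2, 1, t)))
    # AvgPool2D (1, 8) with padding='same'
    t = math.ceil(t / 8)
    shapes.append(("avg pool /8", (f2, 1, t)))
    shapes.append(("flatten", (f2 * t,)))
    return shapes

for name, shape in trace_eegnet_8_2(22, 256):
    print(f"{name:15s} {shape}")
```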

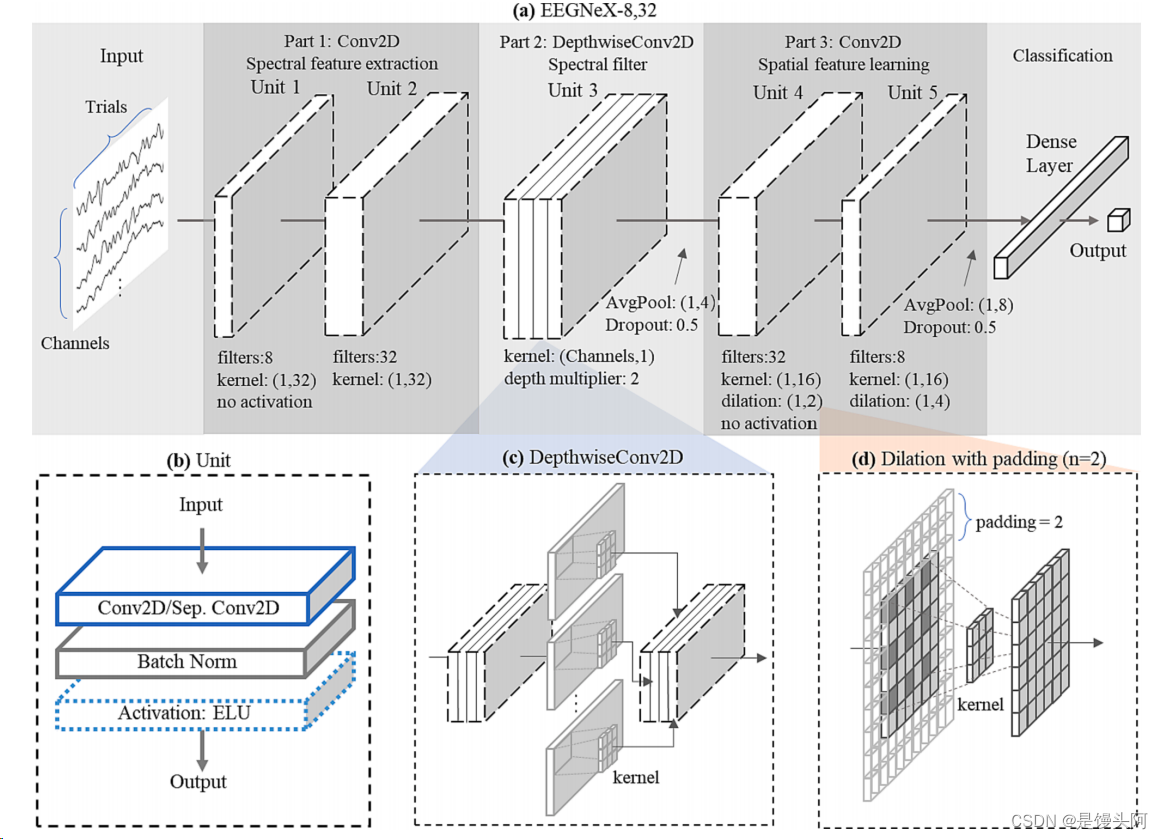

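EEGNeX architecture diagram

EEGNeX, panel by panel:

(a) Overall structure of EEGNeX-8,32. EEGNeX consists of five units; 8 is the number of temporal filters and 32 is the kernel size.

(b) Unit structure: a convolution layer, a BatchNormalization layer and an ELU activation layer (block1).

(c) How the depthwise convolution works. In EEGNeX it acts as a frequency-spatial learner/filter (block2).

(d) The dilation mechanism: the kernel scans the convolution layer with gaps between its taps, which enlarges the kernel's receptive field (block3).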
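Panel (c) can be illustrated with a few lines of NumPy. The sketch assumes block1 has produced F feature maps of shape (C, T). It applies D spatial filters of shape (C, 1) to each map separately, which is a depthwise convolution with depth_multiplier=D. Every output map is therefore a weighted sum over electrodes, and the electrode axis collapses to 1.

The sizes and random weights are hypothetical. In this sketch, output maps are ordered as f*D + d.

```python
import numpy as np

def depthwise_spatial(x, w):
    """Depthwise convolution with a (C, 1) kernel.

    x: (F, C, T) feature maps from the temporal convolution.
    w: (F, D, C) spatial weights, D filters per input map (depth multiplier).
    Returns an array of shape (F * D, 1, T).
    """
    F, C, T = x.shape
    D = w.shape[1]
    # out[f, d, t] = sum_c w[f, d, c] * x[f, c, t]; different maps f are never mixed
    out = np.einsum("fct,fdc->fdt", x, w)
    return out.reshape(F * D, 1, T)

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 22, 256))   # F=8 maps, C=22 electrodes, T=256 samples
w = rng.standard_normal((8, 2, 22))     # depth multiplier D=2
y = depthwise_spatial(x, w)
print(y.shape)  # (16, 1, 256)
```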

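2.1 Strengthening the extraction of spatial representations from the EEG input

In block1, EEGNeX adds one more standard Conv2d layer compared with EEGNet:

The purpose of block1 is to extract shallow spectral information from the EEG input (kernel_size=(1, sample_rate/2)). EEGNet uses a single Conv2d layer here (8 filters in EEGNet-8,2). Prior work [1] suggests that stacks of plain convolutions should not be too deep. To extract more spectral information, EEGNeX therefore duplicates the Conv2d only once; two layers already capture enough spectral information. In the EEGNeX_8_32 code in Section 4.1, the two layers use 8 and 32 filters, which are the first two stages of the inverted bottleneck described in 2.3.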

[1] R.T. Schirrmeister, J.T. Springenberg, L.D.J. Fiederer, M. Glasstetter, K. Eggensperger, M. Tangermann, et al., Deep learning with convolutional neural networks for EEG decoding and visualization, Hum. Brain Mapp. 38 (11) (2017) 5391–5420.
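The kernel length used in block1 can be checked with simple arithmetic. This sketch assumes a 128 Hz sampling rate, for which sample_rate/2 gives the kernel length 64 used in the code. At that length, one kernel spans 0.5 s, which holds one full cycle of any oscillation at 2 Hz or above.

The sketch also counts the weights of the two bias-free block1 convolutions as configured in EEGNeX_8_32 (1→8 and 8→32 filters). The helper names are hypothetical.

```python
def temporal_kernel_stats(sample_rate):
    """Kernel length, time span (s) and lowest full-cycle frequency (Hz) of a sample_rate/2 kernel."""
    kernel_len = sample_rate // 2
    span_s = kernel_len / sample_rate      # seconds covered by one kernel
    lowest_full_cycle_hz = 1.0 / span_s    # slowest oscillation that completes one cycle in the kernel
    return kernel_len, span_s, lowest_full_cycle_hz

def conv2d_weights(in_ch, out_ch, kh, kw, bias=False):
    """Trainable weights of a standard Conv2D layer."""
    return in_ch * out_ch * kh * kw + (out_ch if bias else 0)

k, span, f_low = temporal_kernel_stats(128)
print(k, span, f_low)                # 64 0.5 2.0
print(conv2d_weights(1, 8, 1, k))    # first block1 conv: 512
print(conv2d_weights(8, 32, 1, k))   # second block1 conv: 16384
```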

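2.2 Replacing the depthwise separable convolution with two dilated convolutions

Why the depthwise separable convolution was removed:

A depthwise separable layer consists of a depthwise convolution followed by a pointwise convolution; in essence, it factorizes one convolution kernel into two smaller ones [1]. In block2, the depthwise convolution with kernel (channels, 1) has already collapsed the electrode (channel) dimension to 1. A depthwise separable layer is therefore redundant at this point.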
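The claim that a depthwise separable layer factorizes one kernel into two smaller ones can be checked numerically. This is a 1-D illustration, not the Keras implementation, and the shapes are hypothetical.

- A depthwise step applies one length-k filter to each input channel, and a 1×1 pointwise step then mixes the channels.
- This gives exactly the same output as a standard convolution whose kernel is W[o, i, j] = pw[o, i] · dw[i, j].
- The separable form stores Cin·k + Cout·Cin weights, while the full kernel stores Cout·Cin·k.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def separable_conv1d(x, dw, pw):
    """Depthwise (per-channel) conv, then pointwise (1x1) mixing; 'valid' padding.

    x: (Cin, T), dw: (Cin, k), pw: (Cout, Cin) -> (Cout, T - k + 1)
    """
    win = sliding_window_view(x, dw.shape[1], axis=1)   # (Cin, T-k+1, k)
    depthwise = np.einsum("itj,ij->it", win, dw)         # each channel filtered on its own
    return pw @ depthwise                                # mix channels at every time step

def standard_conv1d(x, W):
    """Standard conv with a full kernel W of shape (Cout, Cin, k); 'valid' padding."""
    win = sliding_window_view(x, W.shape[2], axis=1)
    return np.einsum("itj,oij->ot", win, W)

rng = np.random.default_rng(1)
c_in, c_out, k, T = 16, 16, 16, 64
x = rng.standard_normal((c_in, T))
dw = rng.standard_normal((c_in, k))
pw = rng.standard_normal((c_out, c_in))

W = pw[:, :, None] * dw[None, :, :]   # factorized kernel: W[o, i, j] = pw[o, i] * dw[i, j]
print(np.allclose(separable_conv1d(x, dw, pw), standard_conv1d(x, W)))  # True
print(c_in * k + c_out * c_in, "vs", c_out * c_in * k)                  # 512 vs 4096
```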

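Why dilated convolutions were added:

1. To strengthen temporal feature learning on each extracted feature map

2. To enlarge the network's parameter space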
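The sketch below puts a number on "enlarging the parameter space" by counting convolution weights in block3, as configured in the Section 4.1 code. All these layers use use_bias=False, and BatchNormalization parameters are ignored.

- EEGNet_8_2 uses one SeparableConv2D with 16 input maps, 16 filters and a (1, 16) kernel.
- EEGNeX_8_32 uses two dilated Conv2D layers, 64→32 and 32→8, both with (1, 16) kernels.

Dilation spreads the taps apart but does not change the weight count. The function names are illustrative.

```python
def conv2d_weight_count(in_ch, out_ch, kh, kw):
    """Bias-free standard Conv2D weights (the dilation rate does not change this count)."""
    return in_ch * out_ch * kh * kw

def separable_weight_count(in_ch, out_ch, kh, kw, depth_multiplier=1):
    """Bias-free SeparableConv2D weights: depthwise part + pointwise part."""
    return in_ch * depth_multiplier * kh * kw + in_ch * depth_multiplier * out_ch

eegnet_block3 = separable_weight_count(16, 16, 1, 16)
eegnex_block3 = conv2d_weight_count(64, 32, 1, 16) + conv2d_weight_count(32, 8, 1, 16)
print(eegnet_block3, eegnex_block3)   # 512 36864
```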

Dilated convolution is explained in more detail under the fourth improvement (2.4).

[1] A.G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861 2017.

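2.3 Inverted bottleneck structure in EEGNeX

Following the design recommendations of modern CNN architectures [1, 2, 3], EEGNeX uses an inverted bottleneck with an expansion ratio of 4. From the first layer to the last, the numbers of output filters are 8-32-64-32-8.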
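The 8-32-64-32-8 sequence can be read directly off the EEGNeX_8_32 function in Section 4.1:

- two temporal convolutions with 8 and 32 filters;
- a depthwise convolution with depth_multiplier=2 (32 × 2 = 64 maps);
- two dilated convolutions with 32 and 8 filters.

The sketch below derives the sequence from those layer settings and checks the ×4 expansion at the first step (8 → 32). It is a hypothetical helper, not the authors' code.

```python
# Layer settings copied from EEGNeX_8_32: (name, kind, filters or depth multiplier)
EEGNEX_8_32_LAYERS = [
    ("conv (1, 64)", "filters", 8),
    ("conv (1, 64)", "filters", 32),
    ("depthwise (C, 1)", "multiplier", 2),
    ("dilated conv d=2", "filters", 32),
    ("dilated conv d=4", "filters", 8),
]

def channel_trace(layers, in_channels=1):
    """Return the number of output feature maps after each layer."""
    trace, ch = [], in_channels
    for _, kind, value in layers:
        # a depthwise layer multiplies the number of maps; a standard conv sets it to `filters`
        ch = ch * value if kind == "multiplier" else value
        trace.append(ch)
    return trace

widths = channel_trace(EEGNEX_8_32_LAYERS)
print("-".join(map(str, widths)))   # 8-32-64-32-8
print(widths[1] // widths[0])       # expansion ratio of the first step: 4
```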

[1] M. Tan, Q. Le. Efficientnet: Rethinking model scaling for convolutional neural networks. In: International conference on machine learning; 2019, p. 6105–6114.

[2] Z. Liu, H. Mao, C.-Y. Wu, C. Feichtenhofer, T. Darrell, S. Xie. A ConvNet for the 2020s. arXiv preprint arXiv:2201.03545 2022.

[3] A.G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861 2017.

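2.4 Enlarging the receptive field through padding in the convolution layers and fewer activation layers, to expand the network

Expanding the network with fewer activation functions:

In block3 of EEGNeX, the two Conv2d layers are given dilation_rate=(1, 2) and (1, 4). This turns the standard 2-D convolutions into dilated 2-D convolutions that capture global temporal features. The activation function between the two convolution blocks is also removed [1]. A dilated convolution has a larger receptive field than a standard convolution with the same number of weights.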

For example, for a 3×3 kernel:

dilation=1 (a standard convolution): receptive field 3×3 = 9

dilation=2: receptive field 5×5 = 25

dilation=4: receptive field 9×9 = 81
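The numbers above follow from the effective kernel size of a dilated convolution. For a kernel of k taps with dilation d, k_eff = d·(k − 1) + 1, and the 2-D areas are k_eff squared.

EEGNeX dilates only the time axis, using dilation_rate=(1, 2) and (1, 4) with (1, 16) kernels, so the same formula gives the temporal spans. For stacked stride-1 layers, the receptive field grows by k_eff − 1 per layer.

The helpers below are a sketch. The EEGNeX spans are counted in time steps of the pooled feature map that block3 receives.

```python
def effective_kernel(k, d):
    """Span (in input samples) covered by k taps spaced d samples apart."""
    return d * (k - 1) + 1

def stacked_receptive_field(layers):
    """Receptive field of stride-1 layers given as (kernel, dilation) pairs."""
    rf = 1
    for k, d in layers:
        rf += effective_kernel(k, d) - 1
    return rf

# 3x3 kernels from the text: 3, 5, 9 per side -> areas 9, 25, 81
for d in (1, 2, 4):
    side = effective_kernel(3, d)
    print(d, side, side * side)

# EEGNeX block3: (1, 16) kernels with temporal dilation 2, then 4
print(effective_kernel(16, 2), effective_kernel(16, 4))   # 31 61
print(stacked_receptive_field([(16, 2), (16, 4)]))        # 91
```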

Figure: standard convolution (3×3 = 9) vs. dilated convolution with dilation=2 (5×5 = 25).

[1] Z. Liu, H. Mao, C.-Y. Wu, C. Feichtenhofer, T. Darrell, S. Xie. A ConvNet for the 2020s. arXiv preprint arXiv:2201.03545 2022.
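The "kernel with gaps" from panel (d) can also be written out directly. The NumPy sketch below uses 1-D signals, 'valid' padding, and a hypothetical signal and weights.

It computes a dilated convolution by reading every d-th sample. It then checks that the result equals an ordinary correlation with a kernel that has d − 1 zeros inserted between its taps. This is why dilation enlarges the receptive field without adding weights.

```python
import numpy as np

def dilated_conv1d(x, w, d):
    """'valid' dilated cross-correlation: y[t] = sum_j w[j] * x[t + j*d]."""
    span = d * (len(w) - 1) + 1
    return np.array([x[t:t + span:d] @ w for t in range(len(x) - span + 1)])

def zero_stuffed(w, d):
    """Kernel with d - 1 zeros inserted between consecutive taps."""
    out = np.zeros(d * (len(w) - 1) + 1)
    out[::d] = w
    return out

rng = np.random.default_rng(2)
x = rng.standard_normal(64)
w = rng.standard_normal(3)

y = dilated_conv1d(x, w, d=4)
print(len(y))                                                             # 56
print(np.allclose(y, np.correlate(x, zero_stuffed(w, 4), mode="valid")))  # True
```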

3. Model structure:

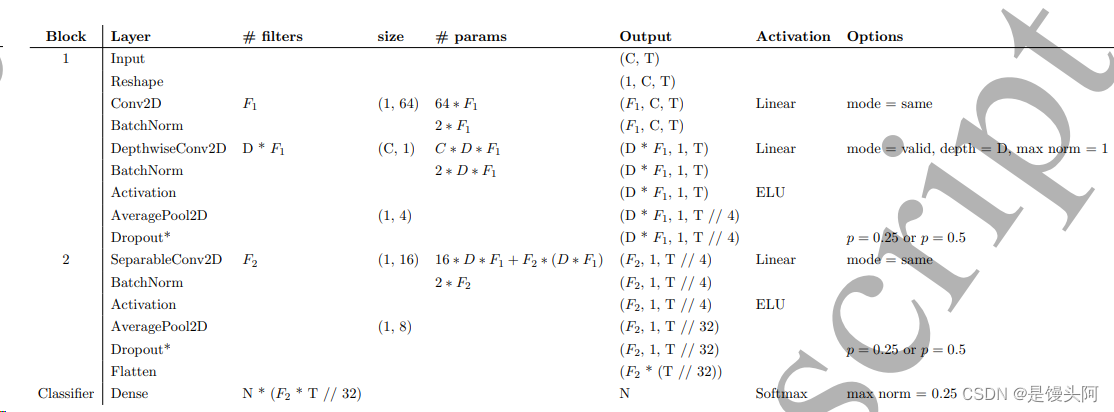

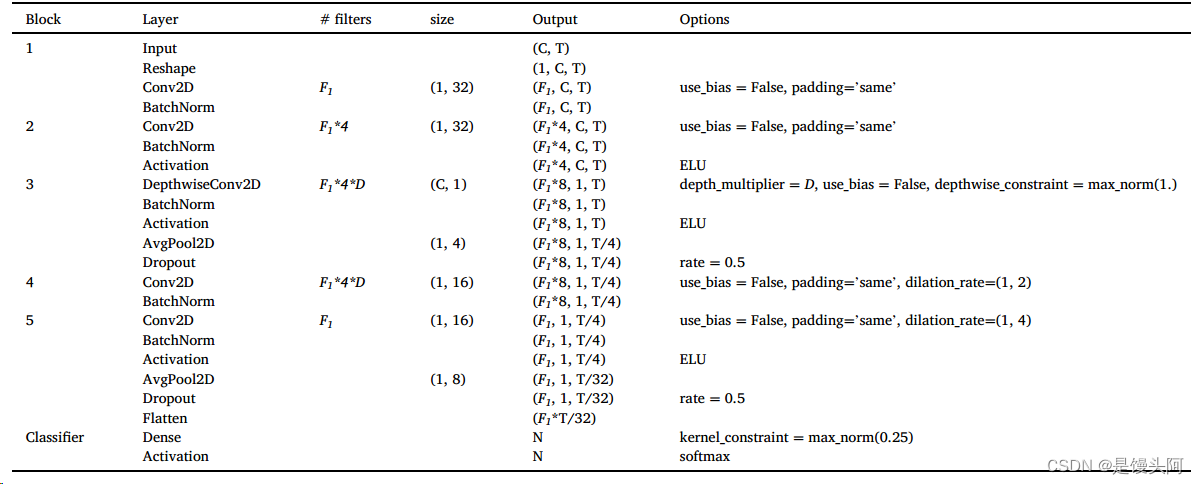

Detailed structure diagrams of EEGNet and EEGNeX:

EEGNet structure diagram

EEGNeX structure diagram

4. Model code:

4.1 EEGNeX source code: Keras implementation

```python
# BenchmarkModels.py: benchmark model definitions, imported by the training script
# with `from BenchmarkModels import *`.
# Everything comes from tf.keras: mixing the standalone `keras` package with the private
# `tensorflow.python.keras` modules can fail when layers from both end up in one model.
import csv
from datetime import datetime

from tensorflow import Tensor
from tensorflow.keras.models import Model, Sequential
from tensorflow.keras.layers import (
    Input, GRU, ConvLSTM2D, TimeDistributed, AvgPool2D, AvgPool1D, SeparableConv2D,
    DepthwiseConv2D, AveragePooling1D, Add, AveragePooling2D, Dense, Flatten, Dropout,
    LSTM, concatenate, Conv1D, MaxPooling1D, Conv2D, MaxPooling2D, BatchNormalization,
    Activation, LayerNormalization, Reshape,
)
# import tensorflow_addons as tfa
from tensorflow.keras.constraints import max_norm
from tensorflow.keras.regularizers import l2
from tensorflow.keras.utils import plot_model  # requires pydot and graphviz
from tensorflow.keras.callbacks import ReduceLROnPlateau, ModelCheckpoint

# TODO: rename the saved model-plot images

##### Basic models

def Single_LSTM(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(LSTM(100, input_shape=(n_features, n_timesteps), return_sequences=True, kernel_regularizer=l2(0.0001)))
    model.add(LSTM(100, return_sequences=True, kernel_regularizer=l2(0.0001)))
    model.add(LSTM(100, kernel_regularizer=l2(0.0001)))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def Single_GRU(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(GRU(100, input_shape=(n_features, n_timesteps), return_sequences=True, kernel_regularizer=l2(0.0001)))
    model.add(GRU(100, return_sequences=True, kernel_regularizer=l2(0.0001)))
    model.add(GRU(100, kernel_regularizer=l2(0.0001)))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='Single_GRU.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def OneD_CNN(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(Conv1D(filters=64, kernel_size=3, input_shape=(n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv1D(filters=64, kernel_size=3))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv1D(filters=64, kernel_size=3))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='OneD_CNN.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def OneD_CNN_Dilated(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(Conv1D(filters=64, kernel_size=3, input_shape=(n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv1D(filters=64, kernel_size=3, dilation_rate=2))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv1D(filters=64, kernel_size=3))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='OneD_CNN_Dilated.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def OneD_CNN_Causal(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(Conv1D(filters=64, kernel_size=3, padding='causal', input_shape=(n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv1D(filters=64, kernel_size=3, padding='causal'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv1D(filters=64, kernel_size=3, padding='causal'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='OneD_CNN_Causal.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def OneD_CNN_CausalDilated(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(Conv1D(filters=64, kernel_size=3, padding='causal', input_shape=(n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv1D(filters=64, kernel_size=3, padding='causal', dilation_rate=2))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv1D(filters=64, kernel_size=3, padding='causal'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='OneD_CNN_CausalDilated.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def TwoD_CNN(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(Conv2D(filters=64, kernel_size=(1, 3), input_shape=(1, n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=64, kernel_size=(1, 3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=64, kernel_size=(1, 3)))
    model.add(Dropout(0.5))
    model.add(AvgPool2D(pool_size=(2, 1), padding='same'))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='TwoD_CNN.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def TwoD_CNN_Dilated(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(Conv2D(filters=64, kernel_size=(1, 3), input_shape=(1, n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=64, kernel_size=(1, 3), dilation_rate=2))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=64, kernel_size=(1, 3)))
    model.add(Dropout(0.5))
    model.add(AvgPool2D(pool_size=(2, 1), padding='same'))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='TwoD_CNN_Dilated.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def TwoD_CNN_Separable(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(SeparableConv2D(filters=64, kernel_size=(1, 3), input_shape=(1, n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(SeparableConv2D(filters=64, kernel_size=(1, 3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(SeparableConv2D(filters=64, kernel_size=(1, 3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(AvgPool2D(pool_size=(2, 1), padding='same'))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model (own file name, so TwoD_CNN.png is not overwritten)
    plot_model(model, show_shapes=True, to_file='TwoD_CNN_Separable.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def TwoD_CNN_Depthwise(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(DepthwiseConv2D(kernel_size=(1, 3), input_shape=(1, n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(SeparableConv2D(filters=64, kernel_size=(1, 3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(SeparableConv2D(filters=64, kernel_size=(1, 3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(AvgPool2D(pool_size=(2, 1), padding='same'))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model (own file name, so TwoD_CNN.png is not overwritten)
    plot_model(model, show_shapes=True, to_file='TwoD_CNN_Depthwise.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
```

```python
##### Combined models

def CNN_LSTM(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3), input_shape=(1, n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(TimeDistributed(Dropout(0.5)))
    model.add(TimeDistributed(MaxPooling1D(pool_size=2)))
    model.add(TimeDistributed(Flatten()))
    model.add(LSTM(480, kernel_regularizer=l2(0.0001)))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='CNN_LSTM.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def CNN_GRU(n_timesteps, n_features, n_outputs):
    # define model
    model = Sequential()
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3), input_shape=(1, n_features, n_timesteps)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(TimeDistributed(Conv1D(filters=64, kernel_size=3)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(TimeDistributed(Dropout(0.5)))
    model.add(TimeDistributed(MaxPooling1D(pool_size=2)))
    model.add(TimeDistributed(Flatten()))
    model.add(GRU(480, kernel_regularizer=l2(0.0001)))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='CNN_GRU.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def Single_ConvLSTM2D(n_timesteps, n_features, n_outputs):
    # kernel regularization works well here
    # define model
    model = Sequential()
    model.add(ConvLSTM2D(filters=64, kernel_size=(1, 3), input_shape=(1, 1, n_features, n_timesteps), kernel_regularizer=l2(0.0001)))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(100, activation='elu'))
    model.add(Dense(n_outputs, activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='Single_ConvLSTM2D.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
```

```python
##### Advanced models

def EEGNet_8_2(n_timesteps, n_features, n_outputs):
    # define model: original EEGNet-8,2
    model = Sequential()
    model.add(Input(shape=(1, n_features, n_timesteps)))
    # could be split into three kernel sizes
    model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias=False, depthwise_constraint=max_norm(1.), data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(SeparableConv2D(filters=16, kernel_size=(1, 16), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(AvgPool2D(pool_size=(1, 8), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))
    model.add(Activation(activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='EEGNet.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

def EEGNet_4_2(n_timesteps, n_features, n_outputs):
    # define model: EEGNet-4,2 (original EEGNet with 4 temporal filters)
    model = Sequential()
    model.add(Input(shape=(1, n_features, n_timesteps)))
    # could be split into three kernel sizes
    model.add(Conv2D(filters=4, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias=False, depthwise_constraint=max_norm(1.), data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(SeparableConv2D(filters=8, kernel_size=(1, 16), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(AvgPool2D(pool_size=(1, 8), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))
    model.add(Activation(activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='EEGNet_4_2.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

# add conv more for feature extraction
def test1(n_timesteps, n_features, n_outputs):
    # define model (based on the original EEGNet-8,2)
    model = Sequential()
    model.add(Input(shape=(1, n_features, n_timesteps)))
    # could be split into three kernel sizes
    model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias=False, depthwise_constraint=max_norm(1.), data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(SeparableConv2D(filters=16, kernel_size=(1, 16), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(AvgPool2D(pool_size=(1, 8), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))
    model.add(Activation(activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='test1.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

# # wider output
# def test2(n_timesteps, n_features, n_outputs):
#     # define model (based on the original)
#     model = Sequential()
#     model.add(Input(shape=(1, n_features, n_timesteps)))
#     # could be split into three kernel sizes
#     model.add(Conv2D(filters=8, kernel_size=(1, 32), use_bias=False, padding='same', data_format="channels_first"))
#     model.add(BatchNormalization())
#     model.add(Activation(activation='elu'))
#     model.add(Conv2D(filters=8, kernel_size=(1, 32), use_bias=False, padding='same', data_format="channels_first"))
#     model.add(BatchNormalization())
#     model.add(Activation(activation='elu'))
#     model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias=False, depthwise_constraint=max_norm(1.), data_format="channels_first"))
#     model.add(BatchNormalization())
#     model.add(Activation(activation='elu'))
#     model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))
#     model.add(Dropout(0.5))
#     model.add(SeparableConv2D(filters=16, kernel_size=(1, 16), use_bias=False, padding='same', data_format="channels_first"))
#     model.add(BatchNormalization())
#     model.add(Activation(activation='elu'))
#     model.add(AvgPool2D(pool_size=(1, 8), padding='same', data_format="channels_first"))
#     model.add(Dropout(0.5))
#     model.add(Flatten())
#     model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))
#     model.add(Activation(activation='softmax'))
#     # save a plot of the model
#     plot_model(model, show_shapes=True, to_file='EEGNet.png')
#     model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
#     return model

# no SeparableConv2D and pooling, 2 Conv
def test2(n_timesteps, n_features, n_outputs):
    # define model (based on the original)
    model = Sequential()
    model.add(Input(shape=(1, n_features, n_timesteps)))
    # could be split into three kernel sizes
    model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias=False, depthwise_constraint=max_norm(1.), data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    # model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(Conv2D(filters=16, kernel_size=(1, 16), use_bias=False, padding='same', dilation_rate=(1, 2), data_format='channels_first'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=8, kernel_size=(1, 16), use_bias=False, padding='same', dilation_rate=(1, 4), data_format='channels_first'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))
    model.add(Activation(activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='test2.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

# wider output
def test3(n_timesteps, n_features, n_outputs):
    # define model (based on the original)
    model = Sequential()
    model.add(Input(shape=(1, n_features, n_timesteps)))
    # could be split into three kernel sizes
    model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias=False, depthwise_constraint=max_norm(1.), data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    # model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(Conv2D(filters=32, kernel_size=(1, 16), use_bias=False, padding='same', dilation_rate=(1, 2), data_format='channels_first'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=8, kernel_size=(1, 16), use_bias=False, padding='same', dilation_rate=(1, 4), data_format='channels_first'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))
    model.add(Activation(activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='test3.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

# wider output
def test4(n_timesteps, n_features, n_outputs):
    # define model (based on the original)
    model = Sequential()
    model.add(Input(shape=(1, n_features, n_timesteps)))
    # could be split into three kernel sizes
    model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=32, kernel_size=(1, 64), use_bias=False, padding='same', data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias=False, depthwise_constraint=max_norm(1.), data_format="channels_first"))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    # model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))
    model.add(Conv2D(filters=32, kernel_size=(1, 16), use_bias=False, padding='same', dilation_rate=(1, 2), data_format='channels_first'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Conv2D(filters=8, kernel_size=(1, 16), use_bias=False, padding='same', dilation_rate=(1, 4), data_format='channels_first'))
    model.add(BatchNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))
    model.add(Activation(activation='softmax'))
    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='test4.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
```

# add pool

def EEGNeX_8_32(n_timesteps, n_features, n_outputs):

# define model 原版

model = Sequential()

model.add(Input(shape=(1, n_features, n_timesteps)))

# 可以分为三个kernelsize

model.add(Conv2D(filters=8, kernel_size=(1, 64), use_bias = False, padding='same', data_format="channels_first"))

model.add(BatchNormalization())

model.add(Activation(activation='elu'))

model.add(Conv2D(filters=32, kernel_size=(1, 64), use_bias = False, padding='same', data_format="channels_first"))

model.add(BatchNormalization())

model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias = False, depthwise_constraint=max_norm(1.), data_format="channels_first"))

model.add(BatchNormalization())

model.add(Activation(activation='elu'))

model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))

model.add(Dropout(0.5))

model.add(Conv2D(filters=32, kernel_size=(1, 16), use_bias = False, padding='same', dilation_rate=(1, 2), data_format='channels_first'))

model.add(BatchNormalization())

# model.add(Activation(activation='elu'))

model.add(Conv2D(filters=8, kernel_size=(1, 16), use_bias = False, padding='same', dilation_rate=(1, 4), data_format='channels_first'))

model.add(BatchNormalization())

model.add(Activation(activation='elu'))

model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))

model.add(Dropout(0.5))

model.add(Flatten())

model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))

model.add(Activation(activation='softmax'))

# save a plot of the model

plot_model(model, show_shapes=True, to_file='EEGNeX_8_32.png')

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

return model

def test7(n_timesteps, n_features, n_outputs):

# define model 原版

model = Sequential()

model.add(Input(shape=(1, n_features, n_timesteps)))

# 可以分为三个kernelsize

model.add(Conv2D(filters=8, kernel_size=(1, 32), use_bias = False, padding='same', data_format="channels_first"))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

model.add(Conv2D(filters=32, kernel_size=(1, 32), use_bias = False, padding='same', data_format="channels_first"))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias = False, depthwise_constraint=max_norm(1.), data_format="channels_first"))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))

model.add(Dropout(0.5))

model.add(Conv2D(filters=32, kernel_size=(1, 16), use_bias = False, padding='same', dilation_rate=(1, 2), data_format='channels_first'))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

model.add(Conv2D(filters=8, kernel_size=(1, 16), use_bias = False, padding='same', dilation_rate=(1, 4), data_format='channels_first'))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

model.add(Dropout(0.5))

model.add(Flatten())

model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))

model.add(Activation(activation='softmax'))

# save a plot of the model

plot_model(model, show_shapes=True, to_file='EEGNeX_8_32.png')

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

return model

def test8(n_timesteps, n_features, n_outputs):

# define model 原版

model = Sequential()

model.add(Input(shape=(1, n_features, n_timesteps)))

# 可以分为三个kernelsize

model.add(Conv2D(filters=8, kernel_size=(1, 32), use_bias = False, padding='same', data_format="channels_first"))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias = False, depthwise_constraint=max_norm(1.), data_format="channels_first"))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

# model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))

model.add(Dropout(0.5))

model.add(SeparableConv2D(filters=16, kernel_size=(1, 16), use_bias = False, padding='same', data_format="channels_first"))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

# model.add(AvgPool2D(pool_size=(1, 8), padding='same', data_format="channels_first"))

# model.add(Dropout(0.5))

model.add(Conv2D(filters=8, kernel_size=(1, 16), use_bias = False, padding='same', dilation_rate=(1, 4), data_format='channels_first'))

model.add(LayerNormalization())

model.add(Activation(activation='elu'))

model.add(Dropout(0.5))

model.add(Flatten())

model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))

model.add(Activation(activation='softmax'))

# save a plot of the model

plot_model(model, show_shapes=True, to_file='EEGNeX_8_32.png')

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

return model

def test9(n_timesteps, n_features, n_outputs):
    # define model (original version)
    model = Sequential()
    model.add(Input(shape=(1, n_features, n_timesteps)))

    # block1: temporal convolution (could be split into three different kernel sizes)
    model.add(Conv2D(filters=8, kernel_size=(1, 32), use_bias=False, padding='same', data_format="channels_first"))
    model.add(LayerNormalization())
    model.add(Activation(activation='elu'))

    # block2: depthwise convolution across all electrodes (spatial filtering)
    model.add(DepthwiseConv2D(kernel_size=(n_features, 1), depth_multiplier=2, use_bias=False, depthwise_constraint=max_norm(1.), data_format="channels_first"))
    model.add(LayerNormalization())
    model.add(Activation(activation='elu'))
    # model.add(AvgPool2D(pool_size=(1, 4), padding='same', data_format="channels_first"))
    model.add(Dropout(0.5))

    # separable convolution removed in this variant:
    # model.add(SeparableConv2D(filters=8, kernel_size=(1, 16), use_bias=False, padding='same', data_format="channels_first"))
    # model.add(BatchNormalization())
    # model.add(Activation(activation='elu'))
    # # model.add(AvgPool2D(pool_size=(1, 8), padding='same', data_format="channels_first"))
    # model.add(Dropout(0.5))

    # block3: dilated temporal convolution (dilation 4 along the time axis)
    model.add(Conv2D(filters=8, kernel_size=(1, 16), use_bias=False, padding='same', dilation_rate=(1, 4), data_format='channels_first'))
    model.add(LayerNormalization())
    model.add(Activation(activation='elu'))
    model.add(Dropout(0.5))

    # classifier
    model.add(Flatten())
    model.add(Dense(n_outputs, kernel_constraint=max_norm(0.25)))
    model.add(Activation(activation='softmax'))

    # save a plot of the model
    plot_model(model, show_shapes=True, to_file='EEGNeX_8_32.png')
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model

4.2 EEGNeX model training and testing
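Before training, a few lines of arithmetic show what the dilated Conv2D in test9 contributes. The helpers below are hypothetical and not part of the original code. They assume every temporal layer has stride 1 and padding='same' (true for test9, whose pooling layers are commented out) and use the standard rule that a kernel of size k with dilation d spans (k - 1) * d + 1 samples. The DepthwiseConv2D kernel is (n_features, 1), so it adds nothing along the time axis.

```python
def effective_kernel(kernel_size, dilation_rate=1):
    """Number of input samples spanned by one (possibly dilated) kernel."""
    return (kernel_size - 1) * dilation_rate + 1


def temporal_receptive_field(layers):
    """Receptive field (in samples) of a stack of stride-1 temporal convolutions.

    `layers` is a list of (kernel_size, dilation_rate) pairs along the time axis.
    Each layer widens the field by (effective kernel - 1) samples.
    """
    rf = 1
    for kernel_size, dilation_rate in layers:
        rf += effective_kernel(kernel_size, dilation_rate) - 1
    return rf


# Temporal layers of test9: Conv2D (1, 32), then Conv2D (1, 16) with dilation_rate=(1, 4)
test9_layers = [(32, 1), (16, 4)]
# The same stack with the dilation removed, for comparison
undilated_layers = [(32, 1), (16, 1)]

if __name__ == '__main__':
    fs = 128  # hypothetical sampling rate in Hz, only used to convert samples to seconds
    for name, layers in [('test9', test9_layers), ('no dilation', undilated_layers)]:
        rf = temporal_receptive_field(layers)
        print('{}: {} samples ({:.3f} s at {} Hz)'.format(name, rf, rf / fs, fs))
```

Under these assumptions the dilated (1, 16) kernel spans 61 samples, so the temporal receptive field grows from 47 to 92 samples while the layer keeps the same 16 weights per kernel.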

import csv
import gc
import os
from datetime import datetime
from time import time

import numpy as np
from numpy import mean, std, dstack
import pandas as pd
from pandas import read_csv
from matplotlib import pyplot
import tensorflow
import torch
from sklearn.model_selection import train_test_split
from sklearn.metrics import classification_report, confusion_matrix
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten, Dropout, LSTM
from tensorflow.keras.callbacks import ReduceLROnPlateau, ModelCheckpoint
from tensorflow.keras.utils import to_categorical

# Self-built functions (model definitions such as EEGNet_8_2 and EEGNeX_8_32)
from BenchmarkModels import *

'''
This notebook loads a dataset stored as YourDataName.npy files and runs the benchmark models:
- x_{}.npy (input, shape: #samples, channels, lengths)
- y_{}.npy (labels, integer class indices)
'''

DATA_LIST = ['YourDataName']
channel_last = False

# load a dataset group, such as train or test
def load_dataset_group(data):
    print('Loading data', data)
    # load input data and labels
    X = np.load('x_{}.npy'.format(data))
    y = np.load('y_{}.npy'.format(data))
    return X, y

# load the dataset, returns train, validation and test X and y elements
def load_dataset(prefix='', channel_last=False):
    # X shape: (samples, channels, lengths)
    # DATA is the global loop variable set by `for DATA in DATA_LIST` in the main loop
    X, y = load_dataset_group(DATA)
    # one-hot encode y
    y = to_categorical(y)
    # TRAIN 0.75, VALIDATION 0.125, TEST 0.125:
    # first hold out 25% of the data, then split that hold-out set in half
    trainX, testX, trainy, testy = train_test_split(X, y, test_size=0.25)
    valX, testX, valy, testy = train_test_split(testX, testy, test_size=0.5)
    print(trainX.shape, trainy.shape, valX.shape, valy.shape, testX.shape, testy.shape)
    return trainX, trainy, valX, valy, testX, testy

# summarize scores (mean and standard deviation over the repeated runs)
def summarize_results(scores):
    print(scores)
    m, s = mean(scores), std(scores)
    print('Accuracy: %.3f%% (+/-%.3f)' % (m, s))
    return m, s

# Model training/evaluation
def model_evaluation(trainX, trainy, valX, valy, testX, testy, Model_name, Data_name, channel_last=False, plot_model=False):
    # Callbacks
    callback_es = tensorflow.keras.callbacks.EarlyStopping(monitor='val_loss', patience=20)
    callback_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.5, patience=5)
    checkpointDir = './model_weights/'
    os.makedirs(checkpointDir, exist_ok=True)
    checkpointPath = os.path.join(checkpointDir, "{}_best_{}_weights.h5".format(Data_name, Model_name))
    # note: checkpointer is not passed to model.fit below, so no weights are saved
    checkpointer = ModelCheckpoint(filepath=checkpointPath, verbose=1, save_best_only=True)

    # Params
    verbose, epochs, batch_size = 0, 200, 128
    n_timesteps, n_features, n_outputs = trainX.shape[2], trainX.shape[1], trainy.shape[1]
    # (samples, channels, lengths) -> (samples, 1, channels, lengths) for channels_first Conv2D
    trainX = trainX.reshape((trainX.shape[0], 1, n_features, n_timesteps))
    valX = valX.reshape((valX.shape[0], 1, n_features, n_timesteps))
    testX = testX.reshape((testX.shape[0], 1, n_features, n_timesteps))

    ### Model structure
    if Model_name == 'Single_LSTM':
        model = Single_LSTM(n_timesteps, n_features, n_outputs)
    elif Model_name == 'Single_GRU':
        model = Single_GRU(n_timesteps, n_features, n_outputs)
    elif Model_name == 'OneD_CNN':
        model = OneD_CNN(n_timesteps, n_features, n_outputs)
    elif Model_name == 'OneD_CNN_Dilated':
        model = OneD_CNN_Dilated(n_timesteps, n_features, n_outputs)
    elif Model_name == 'OneD_CNN_Causal':
        model = OneD_CNN_Causal(n_timesteps, n_features, n_outputs)
    elif Model_name == 'OneD_CNN_CausalDilated':
        model = OneD_CNN_CausalDilated(n_timesteps, n_features, n_outputs)
    elif Model_name == 'TwoD_CNN':
        model = TwoD_CNN(n_timesteps, n_features, n_outputs)
    elif Model_name == 'TwoD_CNN_Separable':
        model = TwoD_CNN_Separable(n_timesteps, n_features, n_outputs)
    elif Model_name == 'TwoD_CNN_Dilated':
        model = TwoD_CNN_Dilated(n_timesteps, n_features, n_outputs)
    elif Model_name == 'TwoD_CNN_Depthwise':
        model = TwoD_CNN_Depthwise(n_timesteps, n_features, n_outputs)
    ###
    elif Model_name == 'Single_ConvLSTM2D':
        # reshape into subsequences (samples, time steps, rows, cols, channels)
        trainX = trainX.reshape((trainX.shape[0], 1, 1, n_features, n_timesteps))
        valX = valX.reshape((valX.shape[0], 1, 1, n_features, n_timesteps))
        testX = testX.reshape((testX.shape[0], 1, 1, n_features, n_timesteps))
        model = Single_ConvLSTM2D(n_timesteps, n_features, n_outputs)
    elif Model_name == 'CNN_LSTM':
        model = CNN_LSTM(n_timesteps, n_features, n_outputs)
    elif Model_name == 'CNN_GRU':
        model = CNN_GRU(n_timesteps, n_features, n_outputs)
    ###
    elif Model_name == 'EEGNet_8_2':
        model = EEGNet_8_2(n_timesteps, n_features, n_outputs)
    elif Model_name == 'test1':
        model = test1(n_timesteps, n_features, n_outputs)
    elif Model_name == 'test2':
        model = test2(n_timesteps, n_features, n_outputs)
    elif Model_name == 'test3':
        model = test3(n_timesteps, n_features, n_outputs)
    elif Model_name == 'test4':
        model = test4(n_timesteps, n_features, n_outputs)
    elif Model_name == 'EEGNeX_8_32':
        model = EEGNeX_8_32(n_timesteps, n_features, n_outputs)
    else:
        raise ValueError('Unknown model name: {}'.format(Model_name))

    if plot_model:
        print('The current running model is:', Model_name)
        # summary() prints the table itself and returns None
        model.summary()

    # Fit network with early stopping and learning-rate reduction
    model.fit(trainX, trainy, epochs=epochs, batch_size=batch_size, verbose=verbose,
              validation_data=(valX, valy), callbacks=[callback_es, callback_lr])

    # Load optimal weights (only works if checkpointer is added to the callbacks above)
    # model.load_weights(checkpointPath)

    # Evaluate model
    _, accuracy = model.evaluate(testX, testy, batch_size=batch_size, verbose=0)
    pred_i = model.predict(testX, batch_size=batch_size)
    return accuracy, pred_i

for DATA in DATA_LIST:
    # Log: append a timestamp row to the result file
    path = 'ResultLog_{}.csv'.format(DATA)
    now = datetime.now()
    with open(path, 'a', newline='') as f:
        csv_write = csv.writer(f)
        # Write a row of date dd/mm/YY H:M:S
        dt_string = now.strftime("%d/%m/%Y %H:%M:%S")
        csv_write.writerow([dt_string])

    Model_list = [
        'Single_LSTM', 'Single_GRU',
        'OneD_CNN', 'OneD_CNN_Dilated',
        'OneD_CNN_Causal', 'OneD_CNN_CausalDilated',
        'TwoD_CNN', 'TwoD_CNN_Dilated',
        'TwoD_CNN_Separable', 'TwoD_CNN_Depthwise',
        'CNN_LSTM', 'CNN_GRU',
        'Single_ConvLSTM2D',
        'EEGNet_8_2',
        'EEGNeX_8_32',
    ]

    # Clear cache (torch.cuda.empty_cache() only releases PyTorch's CUDA cache)
    gc.collect()
    torch.cuda.empty_cache()
    print('=' * 20)

    # Load data
    trainX, trainy, valX, valy, testX, testy = load_dataset(channel_last=channel_last)

    for Model_name in Model_list:
        scores = list()
        pred = list()
        true = list()
        run_time = list()

        # Repeat the experiment 5 times; print the model summary on the first run only
        for r in range(5):
            if r == 0:
                score, pred_i = model_evaluation(trainX, trainy, valX, valy, testX, testy, Model_name, DATA, plot_model=True)
            else:
                score, pred_i = model_evaluation(trainX, trainy, valX, valy, testX, testy, Model_name, DATA)
            score = score * 100.0
            print('>#%d: %.3f' % (r + 1, score))
            scores.append(score)
            pred.append(pred_i)
            true.append(testy)

        # Summarize results (the classification report uses the predictions of the last run)
        mean_, std_ = summarize_results(scores)
        print("Classification Report:\n", classification_report(np.argmax(testy, axis=1), np.argmax(pred_i, axis=1), digits=3))

        with open(path, 'a', newline='') as f:
            csv_write = csv.writer(f)
            # Write data: model name, mean accuracy, standard deviation
            csv_head = [Model_name, mean_, std_]
            csv_write.writerow(csv_head)
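To smoke-test the script before plugging in real recordings, one option is to write random data in the format the docstring describes. The sketch below is a hypothetical helper: the function names, channel count, length, class count and seed are placeholders, not values from the paper or the original code. Labels are cycled through every class and then shuffled, so that to_categorical produces exactly n_classes columns (as long as n_samples >= n_classes).

```python
import os

import numpy as np


def make_dummy_dataset(name, n_samples=200, n_channels=22, n_timesteps=256,
                       n_classes=4, out_dir='.', seed=0):
    """Write random x_{name}.npy / y_{name}.npy files for a smoke test.

    x: float32 array of shape (samples, channels, lengths)
    y: integer class labels of shape (samples,), values in [0, n_classes)
    All sizes are placeholders, not values from a real EEG dataset.
    """
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n_samples, n_channels, n_timesteps)).astype(np.float32)
    # cycle through the classes, then shuffle, so no class is missing
    y = rng.permutation(np.arange(n_samples) % n_classes)
    np.save(os.path.join(out_dir, 'x_{}.npy'.format(name)), X)
    np.save(os.path.join(out_dir, 'y_{}.npy'.format(name)), y)
    return X, y


def to_conv2d_input(X):
    """(samples, channels, lengths) -> (samples, 1, channels, lengths), as in model_evaluation."""
    n_samples, n_channels, n_timesteps = X.shape
    return X.reshape((n_samples, 1, n_channels, n_timesteps))


if __name__ == '__main__':
    X, y = make_dummy_dataset('YourDataName')
    print(X.shape, y.shape, to_conv2d_input(X).shape)
```

With the default 200 samples, the two train_test_split calls in load_dataset yield 150 training, 25 validation and 25 test trials. Random data should score near chance level, so this only checks that the pipeline runs end to end.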