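It has been more than a year since ChatGPT was released, and companies and research institutions in China and abroad have released a wide range of language models. The multimodal field has moved even faster: many strong multimodal large language models appeared before GPT-4V was released. Now that GPT-4V is out, Alibaba's mPLUG multimodal team has released mPLUG-Owl2, its latest work built on the idea of modality collaboration, which it pursues through a modality-adaptive feature space. The paper, code, and demo for mPLUG-Owl2 are all open source.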

- Paper: https://arxiv.org/abs/2311.04257
- Code: https://github.com/X-PLUG/mPLUG-Owl/stargazers
- Demo: https://modelscope.cn/studios/damo/mPLUG-Owl2/summary

We take this opportunity to try out mPLUG-Owl2 and see whether the latest multimodal large models have caught up with GPT-4V.

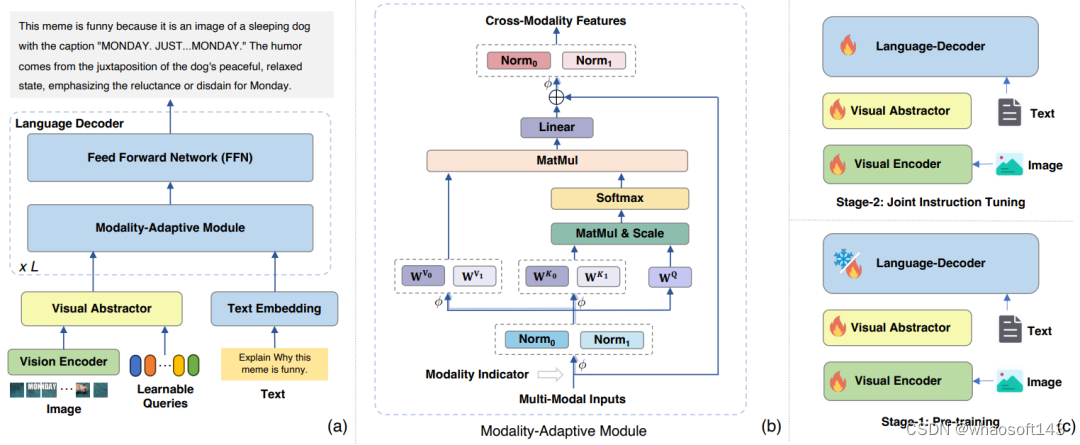

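First, a brief look at the techniques behind mPLUG-Owl2. Conventional multimodal large language models usually rely on feature-space mapping: visual features are aligned to the feature space of the large language model in order to unlock the language model's multimodal understanding. This approach has two problems. First, a tug-of-war between modalities reduces multimodal performance, because the visual modality often carries signals that language cannot represent perfectly, and forcing the alignment interferes with convergence. Second, these models often need extra fine-tuning of the language model to reach their best multimodal understanding, which significantly degrades the original model's text-only performance.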

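mPLUG-Owl2's solution is to introduce a Modality-Adaptive Module (MAM) into the large language model. By adding a small number of extra parameters to the attention computation, the model learns a separate visual space on top of the original language feature space. This leaves the language model's original feature space undisturbed while preserving the semantics and details that are unique to visual features, which is a rather clever design. The authors also improve the training strategy. In the pre-training stage, besides the vision model and the alignment model, the visual parameters of the MAM are trained as well. In the SFT stage, all parameters are trained in order to make full use of the model's capacity.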

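GPT-4V's architecture has not been disclosed, but its pricing rule suggests that it may handle different kinds of input images by splitting them into tiles and spending a fixed number of query tokens on each tile.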
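The pricing rule behind this guess can be written down in a few lines. The sketch below follows the rule as we understand it from OpenAI's public pricing description at the time: a low-detail image costs a flat 85 tokens, while a high-detail image is scaled to fit within 2048 x 2048, scaled again so its shortest side is 768 pixels, and then charged 170 tokens per 512-pixel tile plus 85 base tokens. The function name `gpt4v_image_tokens` is made up for this example, the choice to only ever scale down is an assumption, and the constants may have changed since.

```python
import math


def gpt4v_image_tokens(width, height, detail="high"):
    """Estimate the token cost of one image under the assumed pricing rule."""
    if detail == "low":
        return 85  # flat cost, no tiling

    # Step 1: fit the image inside a 2048 x 2048 square (downscale only,
    # which is an assumption of this sketch).
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale

    # Step 2: shrink so that the shortest side is at most 768 pixels.
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale

    # Step 3: count the 512-pixel tiles needed to cover the image.
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return 85 + 170 * tiles


print(gpt4v_image_tokens(1024, 1024))  # 765
print(gpt4v_image_tokens(2048, 4096))  # 1105
```

The fixed per-tile cost is what points to a fixed number of query tokens per tile: under this rule the token count depends only on how many tiles the resized image needs, not on what the image contains.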

Below we try some fairly difficult multimodal scenarios to see how the two models respond.

Sign understanding

Question: If i want to go to the toilet, where should i go?

GPT-4V: In the image provided, you can see an airport terminal with signs hanging from the ceiling indicating directions. Look at the sign with the text "24-26 ➡️," where there is a restroom symbol along with an arrow pointing to the right. To find the restroom/toilet, you should follow the direction of that arrow, which suggests it is located towards the gates numbered 24-26.

Qwen-VL: you can go to the direction of 24-26 according to the signboard.

mPLUG-Owl2: If you want to go to the toilet, you should follow the directional signs and head towards the restroom area. In the image, there are multiple people walking around, and some of them are carrying luggage. The presence of restrooms is indicated by the signs, so you should look for the appropriate sign to guide you to the restroom.

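Both GPT-4V and mPLUG-Owl2 noticed the signboards in the image. GPT-4V hallucinated visually: it confused the restroom sign with the sign for gates 24-26 and gave the wrong direction. mPLUG-Owl2 told the user that the signs indicate the direction and that they should follow them to find the restroom. Both models still have room to improve on fine-grained visual understanding, but mPLUG-Owl2 hallucinated less here.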

Meme understanding

Question: Explain why this meme is funny

GPT-4V: The meme is considered funny because it uses humor to express a common sentiment about Mondays — a day that, for many, signifies the beginning of the workweek and the end of the weekend, often associated with tiredness and reluctance to start the working or school routine again. The image features a dog lying flat on the ground, looking exhausted or deflated. The text reads, "MONDAY. JUST... MONDAY." This suggests that the feeling of being overwhelmed or exhausted is so commonly understood that the day of the week (Monday) is enough to explain the dog's posture and apparent lack of energy. In other words, the dog embodies how people often feel on Mondays, and the humor lies in the exaggerated, relatable portrayal of this feeling.

mPLUG-Owl2: The meme is funny because it humorously plays on the common phrase "Just Monday" which is often used to express the feeling of dread or discontent about the start of a new work week. In this case, the dog is sleeping on the floor, seemingly unaware of the day of the week, and the caption "Monday. Just Monday" is placed over the image, adding a humorous twist to the phrase. The image of the dog sleeping on the floor also adds a lighthearted and playful touch to the meme.

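Both models picked up on the meaning behind the text in the image and on the dog's exhausted look. GPT-4V, however, gave a deeper reading of the dog's state and of what the meme is trying to express, and described the connection between the two more clearly. mPLUG-Owl2 explained the text and the dog separately and lacked some depth.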

Multi-image understanding

Question: Which photo is better?

GPT-4V: "better" can be subjective and depends on the criteria you're using to evaluate them. Both photos have their strengths and could appeal to different preferences or uses. The photo on the left has a warmer color tone and more light, which gives it a vibrant and inviting feel. The exposure allows for more detail in the indoor scene, and the colors of the building exteriors in the background are more visible. The photo on the right has a cooler and darker tone, with an emphasis on silhouette due to the backlighting. This creates a more dramatic and moody atmosphere. The focus here is more on the shapes and outlines of the chairs and the plant, with less emphasis on color. If you prefer clarity and warmth, you might consider the photo on the left to be better. If you prefer contrast and silhouette aesthetics, the photo on the right might be more appealing. It all comes down to personal preference or the specific context in which the photo will be used.

mPLUG-Owl2: The left photo is better, as it has more details and is more visually appealing. It shows a chair sitting in front of a window, with a clear view of the cityscape outside. The right photo, however, has a blurry and out-of-focus appearance, making it less attractive and less informative.

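Here we asked the models to compare two similar photos. GPT-4V, inheriting the value alignment typical of the GPT series, chose to stay even-handed: it listed the strengths of each photo and did not commit to a choice of its own. mPLUG-Owl2 was more direct, picking the first photo and giving its reasons. This suggests that excessive value alignment may actually harm a model's original creativity.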

As these examples show, mPLUG-Owl2 gives very solid responses and holds its own against GPT-4V. The mPLUG-Owl2 paper offers further analysis of its performance.

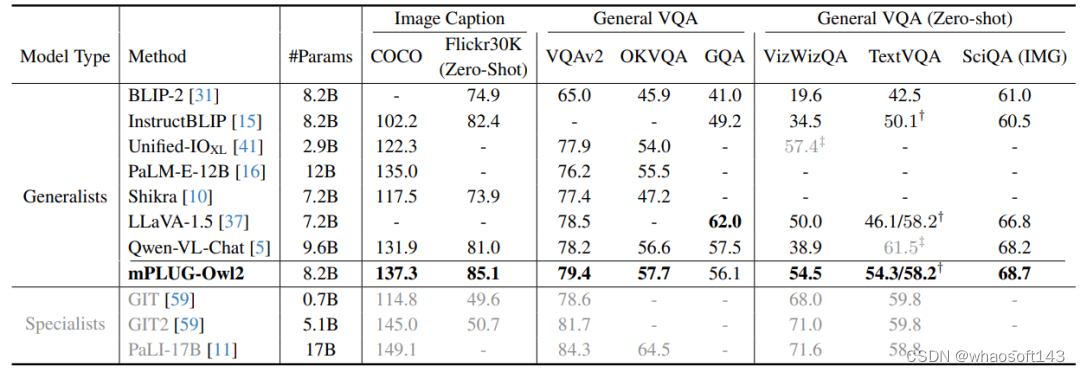

On traditional benchmarks, mPLUG-Owl2 achieves the best performance among models of comparable size.

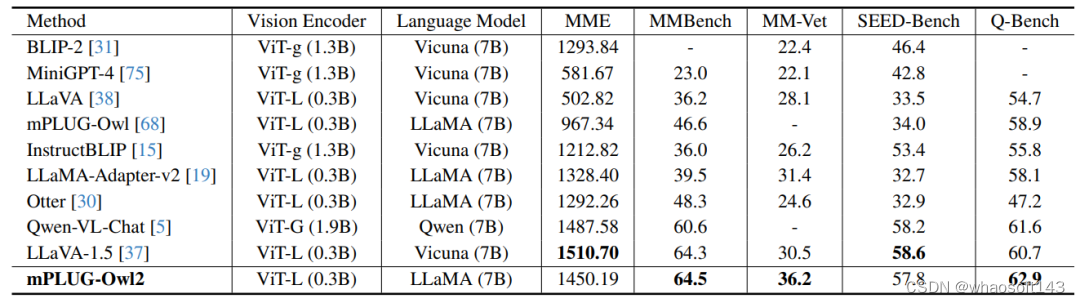

It also outperforms other models on benchmarks designed for multimodal large language models.

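On text-only benchmarks, mPLUG-Owl2 benefits from the MAM, which avoids the tug-of-war between modalities and achieves better modality collaboration. According to the paper, its performance is also significantly better than that of mainstream text-only large language models. The ablation study on the MAM further supports this.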

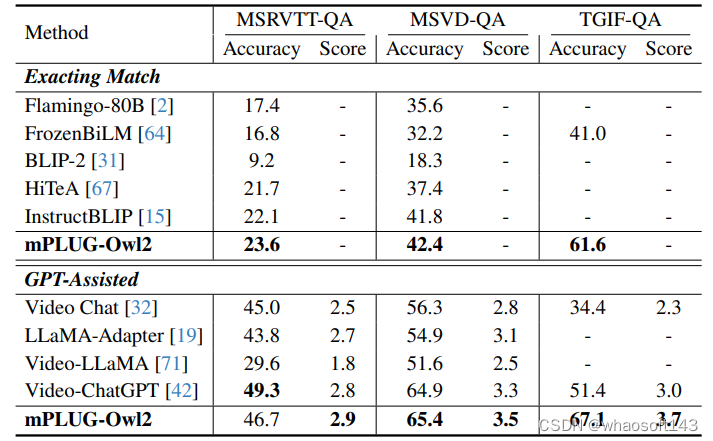

mPLUG-Owl2 is also capable of video understanding, and even surpasses models that rely on GPT for assistance.

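Through detailed visualization experiments, the mPLUG-Owl2 authors find that a multimodal large language model attends more to textual content in its shallow layers, and searches the visual content more in its deep layers in order to answer the text question. Without the MAM, the model already pays considerable attention to the visual input before it has accurately understood the semantics of the text, which hurts its understanding of multimodal content. With the MAM, attention to text in the shallow layers rises markedly, while the later layers focus on the key visual information. This indicates that the MAM handles the modality tug-of-war well and lets the information from each modality play its own role during inference.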
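A layer-by-layer comparison of this kind can be expressed as the share of attention mass that lands on visual tokens in each layer. The sketch below is only an illustration of that measurement: the function name `visual_attention_share`, the array layout, and the toy numbers are all made up for this example and are not taken from the paper.

```python
import numpy as np


def visual_attention_share(attention, is_visual):
    """Per-layer fraction of attention that goes to visual tokens.

    attention: array of shape (layers, queries, keys), each row summing to 1.
    is_visual: boolean array of shape (keys,), True for visual tokens.
    """
    attention = np.asarray(attention, dtype=float)
    mask = np.asarray(is_visual, dtype=bool)
    # Sum the attention over visual keys, then average over query positions.
    return attention[:, :, mask].sum(axis=-1).mean(axis=-1)


# Toy data: 2 layers, 1 query, 4 keys (the first two keys are visual tokens).
toy_attention = [
    [[0.1, 0.1, 0.4, 0.4]],  # shallow layer: mostly text
    [[0.4, 0.4, 0.1, 0.1]],  # deep layer: mostly visual
]
shares = visual_attention_share(toy_attention, [True, True, False, False])
print(shares)
```

With real attention maps, a curve that starts low and rises with depth would match the pattern described above for the model with the MAM.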

For more details on mPLUG-Owl2, see the open-source paper and code:

- Paper: https://arxiv.org/abs/2311.04257
- Code: https://github.com/X-PLUG/mPLUG-Owl/stargazers

The model can also be tried out directly on ModelScope:

- Demo: https://modelscope.cn/studios/damo/mPLUG-Owl2/summary