TiDB installation and deployment

1. Download & upload the installation package

Link: https://pan.baidu.com/s/12pBCP55nBSGUKEaZ0bDafg?pwd=t9ul

Extraction code: t9ul


# Extract the package

tar -zxvf tidb-community-server-v6.5.1-linux-amd64.tar.gz && cd tidb-community-server-v6.5.1-linux-amd64

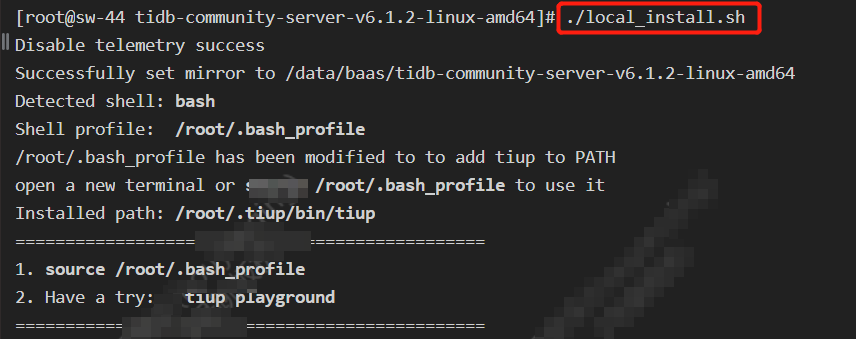
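A large download from a network drive can arrive truncated. The sketch below adds a check for that before extracting. It assumes the archive unpacks into a directory named like the file minus `.tar.gz`, which matches the `cd` in the command above. `extract_pkg` is a hypothetical helper, not part of TiUP.

```shell
# Sketch: check that the archive is a readable gzip tarball before unpacking it.
# Assumption: the archive unpacks into a directory named like the file minus ".tar.gz".
# extract_pkg is a hypothetical helper name, not part of TiUP.
extract_pkg() {
  local pkg="$1"
  # Listing the contents fails on a truncated or corrupted download
  if ! tar -tzf "$pkg" >/dev/null 2>&1; then
    echo "corrupt or incomplete archive: $pkg" >&2
    return 1
  fi
  tar -zxf "$pkg"
  # Print the expected directory name so the caller can cd into it
  echo "${pkg%.tar.gz}"
}

# Usage:
# dir=$(extract_pkg tidb-community-server-v6.5.1-linux-amd64.tar.gz) && cd "$dir"
```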

2. Install

./local_install.sh

# As prompted by the installer output, run source so that the new environment variables take effect

source /root/.bash_profile

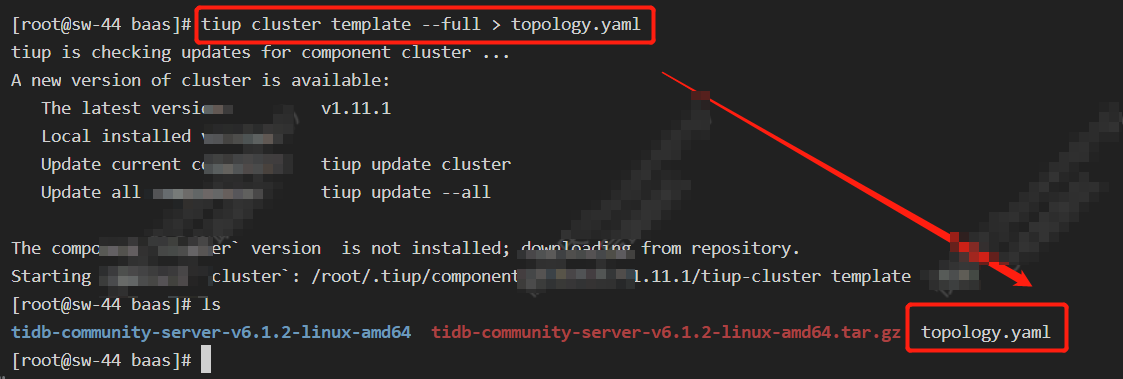
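The `source` step only affects the current shell. A quick way to confirm that `tiup` is now on PATH is sketched below. `require_cmd` is a hypothetical helper name.

```shell
# Sketch: confirm that a command is reachable through PATH after sourcing the profile.
# require_cmd is a hypothetical helper name.
require_cmd() {
  local found
  # command -v prints the resolved path and fails if the command is unknown
  if found=$(command -v "$1"); then
    echo "found: $found"
  else
    echo "missing: $1 (was the profile sourced in this shell?)" >&2
    return 1
  fi
}

# Usage:
# require_cmd tiup
```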

3. Generate & edit topology.yaml

Switch to the directory that contains tidb-community-server-v6.5.1-linux-amd64.tar.gz (the same level as the tarball):

tiup cluster template --full > topology.yaml

In general you only need to fill in the correct IP addresses; the directories can be adjusted to your own needs.

# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  # # The user who runs the tidb cluster.
  user: "tidb"
  # # group is used to specify the group name the user belongs to, if it's not the same as user.
  # group: "tidb"
  # # SSH port of servers in the managed cluster.
  # Configure the SSH port (it must match the port sshd listens on)
  ssh_port: 22222
  # # Storage directory for cluster deployment files, startup scripts, and configuration files.
  deploy_dir: "/data/tidb/tidb-deploy"
  # # TiDB Cluster data storage directory
  data_dir: "/data/tidb/tidb-data"
  # # Supported values: "amd64", "arm64" (default: "amd64")
  arch: "amd64"
  # # Resource Control is used to limit the resource of an instance.
  # # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html
  # # Supports using instance-level `resource_control` to override global `resource_control`.
  # resource_control:
    # # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#MemoryLimit=bytes
    # memory_limit: "2G"
    # # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#CPUQuota=
    # # The percentage specifies how much CPU time the unit shall get at maximum, relative to the total CPU time available on one CPU. Use values > 100% for allotting CPU time on more than one CPU.
    # # Example: CPUQuota=200% ensures that the executed processes will never get more than two CPU time.
    # cpu_quota: "200%"
    # # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#IOReadBandwidthMax=device%20bytes
    # io_read_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"
    # io_write_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"

# # Monitored variables are applied to all the machines.
monitored:
  # # The communication port for reporting system information of each node in the TiDB cluster.
  node_exporter_port: 9100
  # # Blackbox_exporter communication port, used for TiDB cluster port monitoring.
  blackbox_exporter_port: 9115
  # # Storage directory for deployment files, startup scripts, and configuration files of monitoring components.
  deploy_dir: "/data/tidb/tidb-deploy/monitored-9100"
  # # Data storage directory of monitoring components.
  data_dir: "/data/tidb/tidb-data/monitored-9100"
  # # Log storage directory of the monitoring component.
  log_dir: "/data/tidb/tidb-deploy/monitored-9100/log"

# # Server configs are used to specify the runtime configuration of TiDB components.
# # All configuration items can be found in TiDB docs:
# # - TiDB: https://pingcap.com/docs/stable/reference/configuration/tidb-server/configuration-file/
# # - TiKV: https://pingcap.com/docs/stable/reference/configuration/tikv-server/configuration-file/
# # - PD: https://pingcap.com/docs/stable/reference/configuration/pd-server/configuration-file/
# # - TiFlash: https://docs.pingcap.com/tidb/stable/tiflash-configuration
# #
# # All configuration items use points to represent the hierarchy, e.g:
# #   readpool.storage.use-unified-pool
# #           ^       ^
# # - example: https://github.com/pingcap/tiup/blob/master/embed/examples/cluster/topology.example.yaml
# # You can overwrite this configuration via the instance-level `config` field.
# server_configs:
  # tidb:
  # tikv:
  # pd:
  # tiflash:
  # tiflash-learner:
  # kvcdc:

# # Server configs are used to specify the configuration of PD Servers.
pd_servers:
  # # The ip address of the PD Server.
  - host: xx.xx.xx.xx
    # # SSH port of the server.
    # ssh_port: 22
    # # PD Server name
    # name: "pd-1"
    # # communication port for TiDB Servers to connect.
    # client_port: 2379
    # # communication port among PD Server nodes.
    # peer_port: 2380
    # # PD Server deployment file, startup script, configuration file storage directory.
    deploy_dir: "/data/tidb/tidb-deploy/pd-2379"
    # # PD Server data storage directory.
    data_dir: "/data/tidb/tidb-data/pd-2379"
    # # PD Server log file storage directory.
    log_dir: "/data/tidb/tidb-deploy/pd-2379/log"
    # # numa node bindings.
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.pd` values.
    # config:
    #   schedule.max-merge-region-size: 20
    #   schedule.max-merge-region-keys: 200000

# # Server configs are used to specify the configuration of TiDB Servers.
tidb_servers:
  # # The ip address of the TiDB Server.
  - host: xx.xx.xx.xx
    # # SSH port of the server.
    # ssh_port: 22
    # # Access the TiDB cluster port.
    port: 4000
    # # TiDB Server status information reporting port.
    status_port: 10080
    # # TiDB Server deployment file, startup script, configuration file storage directory.
    deploy_dir: "/data/tidb/tidb-deploy/tidb-4000"
    # # TiDB Server log file storage directory.
    log_dir: "/data/tidb/tidb-deploy/tidb-4000/log"
    # numa_node: "0" # suggest numa node bindings.

# # Server configs are used to specify the configuration of TiKV Servers.
tikv_servers:
  # # The ip address of the TiKV Server.
  - host: xx.xx.xx.xx
    # # SSH port of the server.
    # ssh_port: 22
    # # TiKV Server communication port.
    port: 20160
    # # Communication port for reporting TiKV Server status.
    status_port: 20180
    # # TiKV Server deployment file, startup script, configuration file storage directory.
    deploy_dir: "/data/tidb/tidb-deploy-server/tikv-20160"
    # # TiKV Server data storage directory.
    data_dir: "/data/tidb/tidb-data-server/tikv-20160"
    # # TiKV Server log file storage directory.
    log_dir: "/data/tidb/tidb-deploy-server/tikv-20160/log"
    # numa_node: "0"
    # # The following configs are used to overwrite the `server_configs.tikv` values.
    # config:
    #   log.level: warn

# # Server configs are used to specify the configuration of Alertmanager Servers.
alertmanager_servers:
  # # The ip address of the Alertmanager Server.
  - host: xx.xx.xx.xx
    # # SSH port of the server.
    # ssh_port: 22
    # Alertmanager web service listen host.
    # listen_host: 0.0.0.0
    # # Alertmanager web service port.
    # web_port: 9093
    # # Alertmanager communication port.
    # cluster_port: 9094
    # # Alertmanager deployment file, startup script, configuration file storage directory.
    deploy_dir: "/data/tidb/tidb-deploy/alertmanager-9093"
    # # Alertmanager data storage directory.
    data_dir: "/data/tidb/tidb-data/alertmanager-9093"
    # # Alertmanager log file storage directory.
    log_dir: "/data/tidb/tidb-deploy/alertmanager-9093/log"
    # # Alertmanager config file storage directory.
    config_file: "/data/tidb/tidb-deploy/alertmanager-9093/bin/alertmanager/alertmanager.yml"
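If every component goes on one machine (a typical single-host test setup), the `xx.xx.xx.xx` placeholders in the file above can all be filled in at once. The sketch below does that and then fails if any placeholder is left. It assumes GNU `sed` (for `-i`). `set_host_ip` and the IP in the usage line are hypothetical.

```shell
# Sketch: replace every xx.xx.xx.xx placeholder in a topology file with one IP address.
# Assumes GNU sed (-i edits in place) and a single-host deployment.
# set_host_ip is a hypothetical helper name.
set_host_ip() {
  local file="$1" ip="$2"
  # Dots are escaped so only the literal placeholder is matched
  sed -i "s/xx\.xx\.xx\.xx/${ip}/g" "$file"
  # Fail if any placeholder survived
  if grep -q 'xx\.xx\.xx\.xx' "$file"; then
    echo "placeholders remain in $file" >&2
    return 1
  fi
}

# Usage (hypothetical IP):
# set_host_ip ./topology.yaml 192.168.1.10
```

For a multi-host cluster, each `host:` entry still has to be edited by hand.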

4. Grant permissions

chmod -R 777 /etc/sysctl.conf

chmod -R 777 /etc/selinux/config

chmod -R 777 /etc/sysctl.d/

source ~/.bash_profile

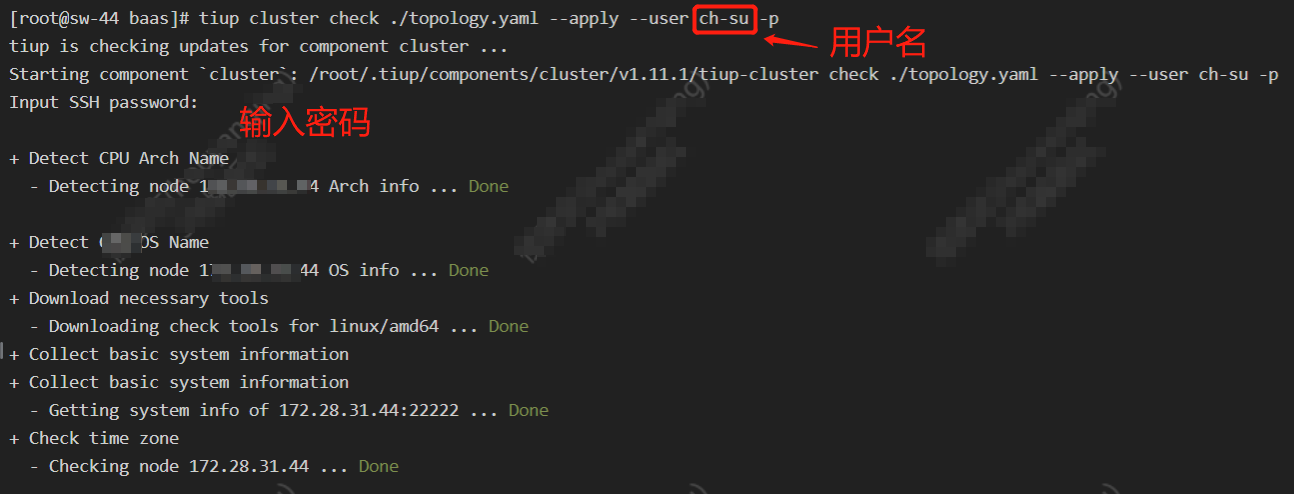
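`chmod -R 777` replaces the original modes of these system files, and nothing in the steps above records what they were. One way to keep them is sketched below: save the modes before running the chmod commands above, then restore them once deployment is done. It assumes GNU `find` and GNU `stat -c`, as on most Linux distributions. `save_modes`, `restore_modes` and the backup path are hypothetical.

```shell
# Sketch: record permission bits so they can be restored after deployment.
# Assumes GNU find and GNU stat (-c). save_modes/restore_modes are hypothetical names.
save_modes() {
  local out="$1"
  shift
  # Recurse into directories; skip symlinks, because chmod on a symlink
  # would change its target instead of the link itself.
  find "$@" ! -type l -exec stat -c '%a %n' {} + > "$out"
}

restore_modes() {
  local mode file
  # Each line is "<octal mode> <path>"; read -r keeps paths containing spaces intact
  while read -r mode file; do
    chmod "$mode" "$file"
  done < "$1"
}

# Usage (as root, before the chmod commands in step 4):
# save_modes /root/tidb-perm-backup.txt /etc/sysctl.conf /etc/selinux/config /etc/sysctl.d
# ...chmod, check and deploy...
# restore_modes /root/tidb-perm-backup.txt
```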

5. Check topology.yaml

tiup cluster check ./topology.yaml --apply --user username -p

# Then enter the password

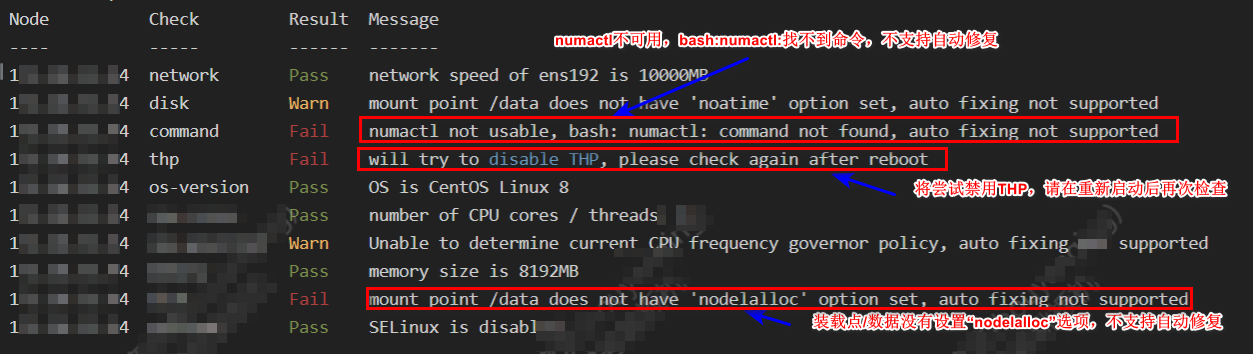

This is only a personal test environment, so the errors above can be ignored. In a production environment, do not take them lightly!

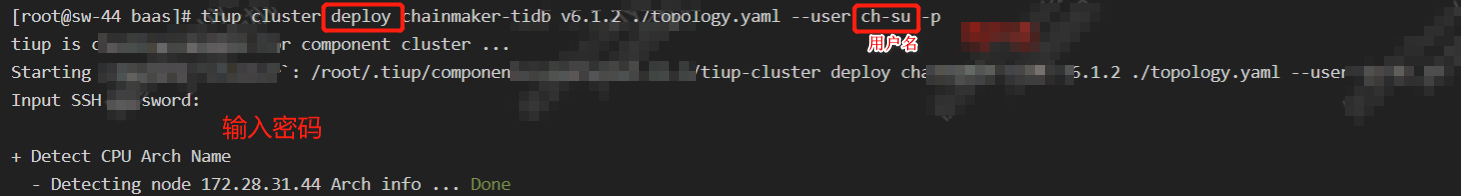
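In production the Fail and Warn items above are the ones to review. If the check report is saved to a file (for example with `tee`), they can be pulled out as sketched below. The sketch assumes the report is a table with columns `Node  Check  Result  Message` and the result (Pass/Warn/Fail) in the third column; that layout is an assumption to verify against your own output. `check_problems` and `has_failures` are hypothetical names.

```shell
# Sketch: filter a saved `tiup cluster check` report.
# Assumption: column 3 holds the result (Pass / Warn / Fail).
# check_problems and has_failures are hypothetical helper names.
check_problems() {
  # Print only rows whose result is Fail or Warn
  awk '$3 == "Fail" || $3 == "Warn" { print }' "$1"
}

has_failures() {
  # Exit status 0 if at least one Fail row exists, 1 otherwise
  awk '$3 == "Fail" { found = 1 } END { exit !found }' "$1"
}

# Usage:
# tiup cluster check ./topology.yaml --user username -p | tee check.log
# check_problems check.log
```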

6. Deploy

# Cluster name: chainmaker-tidb

# Version: v6.1.2

# Topology file: topology.yaml

tiup cluster deploy chainmaker-tidb v6.1.2 ./topology.yaml --user 'username' -p

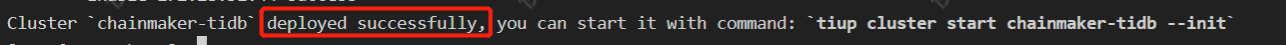

Seeing "successfully" in the output means the deployment succeeded; then complete the initialization as prompted:

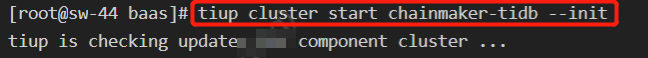

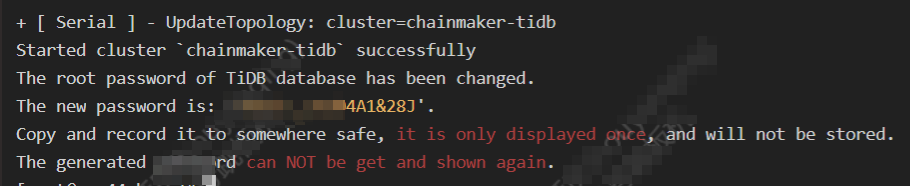
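The cluster name and version are repeated in steps 6 to 8. A sketch that keeps them in one place follows. The offline package from step 1 is v6.5.1 while the deploy command uses v6.1.2, so before deploying it may be worth running `tiup list tidb` to confirm the version you pass is available in the mirror TiUP is using. `SSH_USER` is a placeholder, and the three function names are hypothetical.

```shell
# Sketch: keep the step 6-8 values in variables so deploy, start and display reuse them.
# CLUSTER_NAME and TIDB_VERSION mirror this article; SSH_USER is a placeholder to change.
CLUSTER_NAME="chainmaker-tidb"
TIDB_VERSION="v6.1.2"
SSH_USER="username"   # placeholder: replace with the real SSH user

deploy_cluster() {
  # -p makes tiup prompt for the SSH password of $SSH_USER
  tiup cluster deploy "$CLUSTER_NAME" "$TIDB_VERSION" ./topology.yaml --user "$SSH_USER" -p
}

start_cluster_init() {
  # --init starts the cluster and sets a generated root password (step 7)
  tiup cluster start "$CLUSTER_NAME" --init
}

show_cluster() {
  tiup cluster display "$CLUSTER_NAME"
}
```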

7. Initialize

tiup cluster start chainmaker-tidb --init

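The message roughly says:

The root password of the TiDB database has been changed.

The new password is: '********'.

Copy and record it somewhere safe; it is displayed only once and will not be stored.

The generated password cannot be retrieved or shown again.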

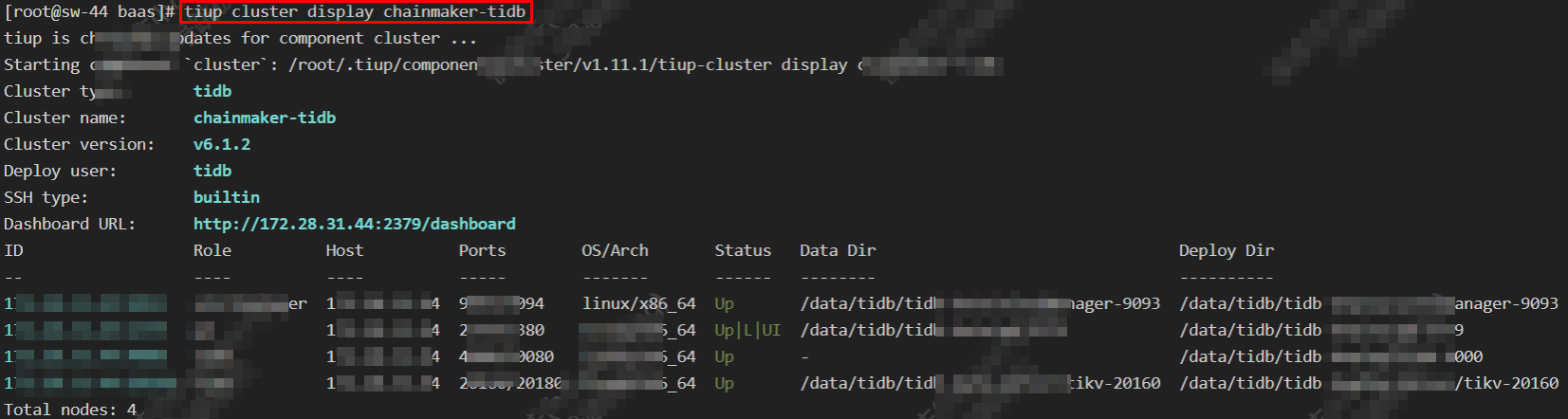
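Because the password is shown only once, one option is to capture the start output in a file only you can read and pull the password out of it. Delete the file once the password is stored safely. The sketch assumes the password line has the form `The new password is: '...'.`, as in the message above, possibly wrapped in ANSI colour codes. It also assumes GNU `sed` for `\x1b`. `extract_root_password` is a hypothetical name.

```shell
# Sketch: extract the generated root password from saved `tiup cluster start --init` output.
# Assumes the line "The new password is: '<password>'." and GNU sed.
# extract_root_password is a hypothetical helper name.
extract_root_password() {
  # Drop ANSI colour codes first, then print the text between the quotes
  sed 's/\x1b\[[0-9;]*m//g' "$1" |
    sed -n "s/.*The new password is: '\(.*\)'.*/\1/p"
}

# Usage:
# (umask 077; tiup cluster start chainmaker-tidb --init | tee init.log)
# extract_root_password init.log
# rm init.log   # after the password is stored somewhere safe
```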

8. Check the status: a node whose status is Up has started

tiup cluster display chainmaker-tidb
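With many instances it is easy to miss a node that is not Up. The sketch below reads a saved `tiup cluster display` report and lists any instance whose status does not start with `Up`. PD can show values such as `Up|L|UI`, which still count as up. It assumes the column order `ID  Role  Host  Ports  OS/Arch  Status  Data Dir  Deploy Dir`, with instance rows whose ID is `host:port`. Check this against your own output. `not_up` and `all_up` are hypothetical names.

```shell
# Sketch: report instances that are not Up in a saved `tiup cluster display` output.
# Assumption: instance rows start with "host:port" and column 6 is Status.
# not_up and all_up are hypothetical helper names.
not_up() {
  awk '$1 ~ /^[^ ]+:[0-9]+$/ && $6 !~ /^Up/ { print $1, $2, $6 }' "$1"
}

all_up() {
  # Succeeds only if not_up prints nothing
  [ -z "$(not_up "$1")" ]
}

# Usage:
# tiup cluster display chainmaker-tidb > display.log
# all_up display.log || not_up display.log
```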