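Preface

I have spent quite a while working out how to deploy OCR models on RKNN, and I finally got them running on the NPU of an rv1126. The process was not particularly difficult, but it did take time. Because I had discussed some OCR deployment problems in the RKNN chat group, people who saw those messages often added me on QQ to ask related questions. So I am using a recent weekend to write down my whole deployment process, both to share it and to make it easier for me to review later.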

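I. Preparation

Hardware:

- A host PC running Ubuntu

- An rv1126 board

- A double-ended USB cable (for adb debugging between the PC and the board)

Software:

- The rknn-toolkit environment set up on the PC

- The rv1126 board flashed with a system image

- The rv1126 SDK package

Official documentation: the rv1126 development guide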

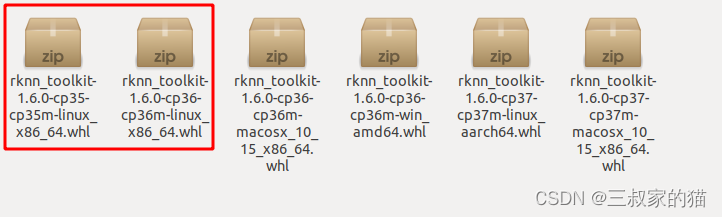

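First, set up the rknn-toolkit environment on Ubuntu, which is fairly straightforward. I use rknn-toolkit 1.6.0, which ships in the /external/rknn-toolkit folder of the rv1126 SDK. You can also download it from the official hub, but the whl packages there are all the latest version. Check the package name: the wheel only supports Linux with Python 3.6 or Python 3.5, so it cannot be used on Windows.

Create a conda virtual environment and run the following installation commands: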

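    # Create a virtual environment named rknn with Python 3.6, then activate it
    conda create -n rknn python=3.6
    conda activate rknn

    # Install any other packages you need (omitted here)
    pip install ...

    # Install RKNN-Toolkit
    pip install rknn_toolkit-1.6.0-cp36-cp36m-linux_x86_64.whl

    # Check that the installation succeeded by importing the rknn library
    (rknn) rk@rk:~/rknn-toolkit-v1.6.0/package$ python
    >>> from rknn.api import RKNN
    >>>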

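For the rv1126 SDK package and for flashing the board, see my earlier article, "SDK环境准备和系统烧录" (SDK environment setup and system flashing). For other boards you will have to work it out yourself.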

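II. Model conversion and quantization

I prepared three ONNX models here, all based on PytorchOCR. The first is a lightweight DBNet text detection model, obtained by converting PaddleOCR weights to PytorchOCR and then to ONNX. The second is a CRNN recognition model, likewise converted from PaddleOCR via PytorchOCR. The third is a recognition model I trained myself. As I mentioned before, RKNN does not yet support converting the LSTM operator. I did manage to convert a TorchScript (torch.jit) .pt file to RKNN, but the converted model produced wrong outputs and could not be quantized, so I designed a recognition network specifically for the deployment platform. For the design process, see my previous article, "使用RKNN部署CRNN模型踩坑优化历程" (pitfalls and optimizations when deploying a CRNN model with RKNN).

The version I am releasing here is a fairly small one. Its general-purpose recognition accuracy is not as good as PaddleOCR's CRNN, mainly because my synthetic training data is not as good as PaddleOCR's. In certain specific scenarios, however, it works better than PaddleOCR, because the training data was generated for those scenarios, and since many common fonts were included, general recognition is not too bad either. If it meets the requirements of your scenario, feel free to use it. Most importantly, it is very fast after quantization: with pre-compilation enabled, the quantized model is only 1.5 MB and inference takes just a few milliseconds. What more could you ask for?

The weight files are available from the Baidu Cloud link at the end of this article.

References: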

https://github.com/PaddlePaddle/Paddle2ONNX

https://github.com/PaddlePaddle/PaddleOCR

https://github.com/WenmuZhou/PytorchOCR

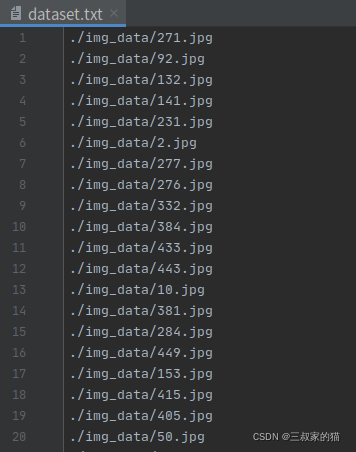

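RKNN quantization is performed during model conversion, so you need to prepare one batch of images for quantizing the detection model and another batch for quantizing the recognition model. I prepared a few hundred images for the detection model and about two thousand for the recognition model. For each model, write the image paths into a txt file, which is loaded during quantization.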
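The path list can be generated with a few lines of Python. The following is a minimal sketch, assuming one image path per line in the txt file; the helper name `write_dataset_list` and the suffix set are hypothetical choices for illustration and are not part of rknn-toolkit.

```python
from pathlib import Path

# Hypothetical set of image suffixes to include; adjust to your own data.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def write_dataset_list(image_dir, list_path):
    """Write the path of every image in image_dir to list_path, one per line.

    Paths are sorted so that the list is reproducible between runs.
    Returns the number of paths written.
    """
    paths = sorted(
        p for p in Path(image_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    text = "".join("{}\n".format(p) for p in paths)
    Path(list_path).write_text(text, encoding="utf-8")
    return len(paths)
```

For example, `write_dataset_list("quant_images/rec", "dataset_448.txt")` would produce a list for the recognition model, where `quant_images/rec` is a placeholder directory name.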

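RKNN offers two ways to convert a model: with Python code, or with a GUI. For the GUI, once the rknn environment is installed, run python3 -m rknn.bin.visualization in a terminal and the following window appears: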

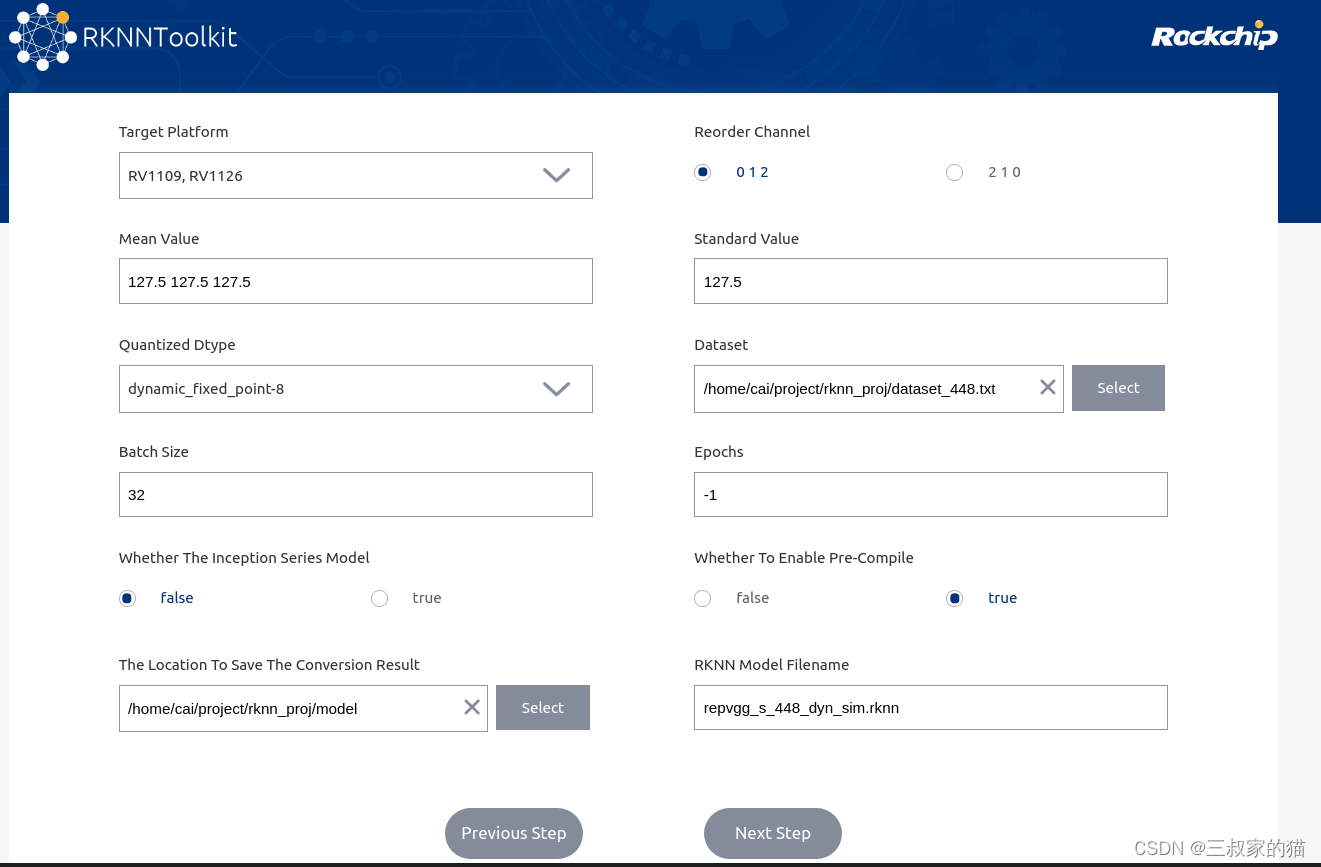

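Choose the entry that matches the format of your original model. Here the original model is ONNX, so choose onnx. The options on the next screen are easy to understand once translated (the UI of rknn toolkit 1.7.1 appears to be in Chinese). The one to watch is the pre-compile option (Whether To Enable Pre-Compile): pre-compiling an RKNN model reduces model initialization time, but the model can then no longer be used for inference or performance evaluation in the simulator.

The second way is to convert with Python code. The code is as follows; modify it as needed: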

    import os
    from rknn.api import RKNN
    import numpy as np
    import onnxruntime as ort

    onnx_model = 'model/repvgg_s.onnx'  # path to the ONNX model
    save_rknn_dir = 'model/repvgg_s.rknn'  # path where the RKNN model is saved


    def norm(img):
        mean = 0.5
        std = 0.5
        img_data = (img.astype(np.float32) / 255 - mean) / std
        return img_data


    if __name__ == '__main__':
        # Create RKNN object
        rknn = RKNN(verbose=True)

        # Create a numpy array and run it through both ONNX and RKNN to compare the
        # outputs after conversion. The detection model input is 1,3,640,640 and the
        # recognition model input is 1,3,32,448.
        image = np.random.randn(1, 3, 32, 448).astype(np.float32)
        onnx_net = ort.InferenceSession(onnx_model)  # ONNX inference
        # Models exported by paddle2onnx use "x" as the default input name
        onnx_infer = onnx_net.run(None, {'input': norm(image)})

        # pre-process config
        print('--> Config model')
        # The input must be RGB. The mean and normalization values have to be
        # converted to the 0-255 pixel range.
        rknn.config(mean_values=[[127.5, 127.5, 127.5]], std_values=[[127.5, 127.5, 127.5]],
                    reorder_channel='2 1 0', target_platform=['rv1126'], batch_size=4,
                    quantized_dtype='asymmetric_quantized-u8')
        # rknn.config(mean_values=[[0.0, 0.0, 0.0]], std_values=[[255, 255, 255]], reorder_channel='2 1 0', target_platform=['rv1126'], batch_size=1)  # input must be RGB
        print('done')

        # model_name = onnx_model[onnx_model.rfind('/') + 1:]
        # Load ONNX model
        print('--> Loading model %s' % onnx_model)
        ret = rknn.load_onnx(model=onnx_model)
        if ret != 0:
            print('Load %s failed!' % onnx_model)
            exit(ret)
        print('done')

        # Build model
        print('--> Building model')
        # rknn.build(do_quantization=False)
        # do_quantization: whether to quantize the model.
        # dataset: the calibration dataset used for quantization.
        # pre_compile: pre-compilation switch. Pre-compiling an RKNN model reduces
        # initialization time, but the model can no longer be run or profiled in
        # the simulator.
        ret = rknn.build(do_quantization=True, dataset='dataset_448.txt', pre_compile=False)
        if ret != 0:
            print('Build net failed!')
            exit(ret)
        print('done')

        # Export RKNN model
        print('--> Export RKNN model')
        ret = rknn.export_rknn(save_rknn_dir)
        if ret != 0:
            print('Export rknn failed!')
            exit(ret)

        # The two arguments are the board model and the device_id. With the USB
        # cable connected, the device_id is shown by `adb devices`.
        ret = rknn.init_runtime(target='rv1126', device_id="a0c4f1cae341b3df")
        if ret != 0:
            print('init runtime failed.')
            exit(ret)
        print('done')

        # Inference
        print('--> Running model')
        outputs = rknn.inference(inputs=[image])

        # perf
        print('--> Begin evaluate model performance')
        perf_results = rknn.eval_perf(inputs=[image])  # performance evaluation
        print('done')

        print()
        print("->> Forward-pass comparison!")
        print("--------------rknn outputs--------------------")
        print(outputs[0])
        print()
        print("--------------onnx outputs--------------------")
        print(onnx_infer[0])
        print()

        std = np.std(outputs[0] - onnx_infer[0])
        print(std)  # a large value means the converted model is not faithful to the original

        rknn.release()
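The script normalizes the ONNX input with `(x / 255 - 0.5) / 0.5`, while `rknn.config` is given a mean and std of 127.5. The short check below is a sketch showing that the two forms agree on every 8-bit pixel value. It assumes the toolkit applies `(pixel - mean) / std` to raw 0-255 pixel values, which is worth confirming against the documentation of your toolkit version.

```python
import numpy as np

# All possible 8-bit pixel values.
pixels = np.arange(256, dtype=np.float32)

# Normalization used on the ONNX side in the script: scale to [0, 1] first.
onnx_style = (pixels / 255.0 - 0.5) / 0.5

# Assumed RKNN-side preprocessing: (pixel - mean) / std on raw pixel values.
rknn_style = (pixels - 127.5) / 127.5

# Largest absolute difference between the two forms over all pixel values.
max_diff = float(np.abs(onnx_style - rknn_style).max())
print("max difference:", max_diff)
```

Algebraically, `(x / 255 - 0.5) / 0.5 = (x - 127.5) / 127.5`, so any remaining difference is floating-point rounding.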

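Converting and quantizing the det_new.onnx and repvgg_s.onnx that I provide should work without problems. rec_mbv3.onnx fails to convert; try it yourself if you are interested. Note that I did enable pre-compilation when converting the detection model, but the results were very poor, so the detection results below come from a model converted without pre-compilation, and model loading takes somewhat longer.

The quantization process is shown below; conversion takes longer with quantization enabled:

Test results after successful quantization. At the end, the script computes the standard deviation of the difference between the ONNX output and the RKNN output: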

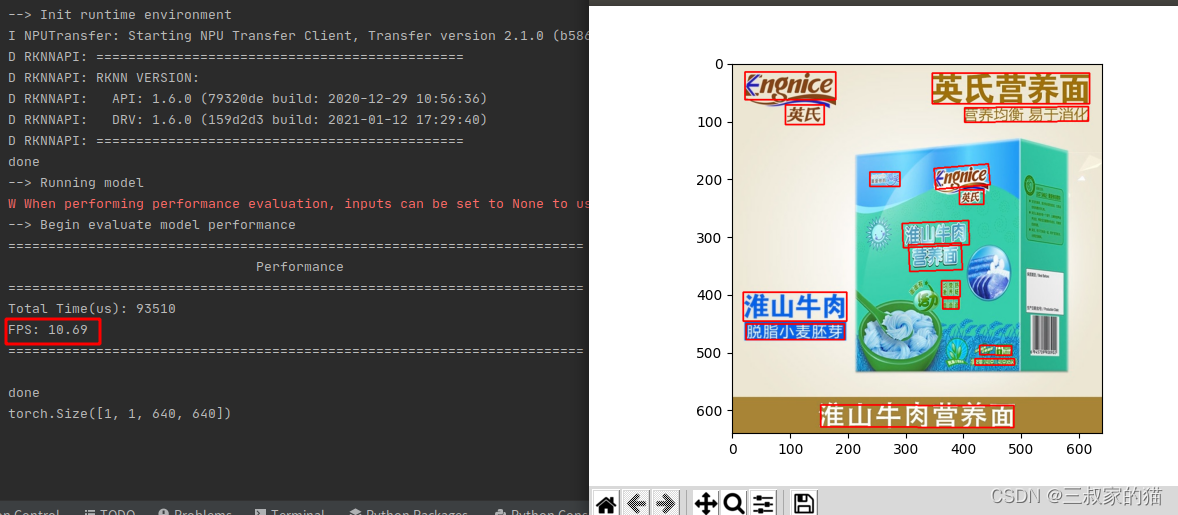
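The standard deviation of the difference is a quick sanity check, but it is blind to a constant offset between the two outputs. The following sketch adds two complementary numbers, the maximum absolute difference and the cosine similarity. The function name `compare_outputs` is hypothetical and only meant as an illustration; it is not part of rknn-toolkit.

```python
import numpy as np


def compare_outputs(reference, candidate):
    """Compare two model outputs that have the same number of elements.

    Returns the std of the difference, the largest absolute difference,
    and the cosine similarity of the flattened outputs.
    """
    ref = np.asarray(reference, dtype=np.float64).ravel()
    cand = np.asarray(candidate, dtype=np.float64).ravel()
    if ref.shape != cand.shape:
        raise ValueError("outputs must have the same number of elements")

    diff = cand - ref
    denom = np.linalg.norm(ref) * np.linalg.norm(cand)
    # Cosine similarity is undefined if either output is all zeros.
    cosine = float(np.dot(ref, cand) / denom) if denom > 0 else float("nan")
    return {
        "diff_std": float(diff.std()),
        "max_abs_diff": float(np.abs(diff).max()),
        "cosine": cosine,
    }
```

In the conversion script this could be called as `compare_outputs(onnx_infer[0], outputs[0])`. A cosine similarity close to 1 together with a small maximum difference suggests the quantized model tracks the original closely.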

Detection results after quantization; the model takes a fixed 640 x 640 input:

RKNN test code for the detection model:

    import numpy as np
    import cv2
    from rknn.api import RKNN
    import torch
    # from label_convert import CTCLabelConverter
    import pyclipper
    from shapely.geometry import Polygon
    from matplotlib import pyplot as plt

    class DBPostProcess():
        def __init__(self, thresh=0.3, box_thresh=0.7, max_candidates=1000, unclip_ratio=2):
            self.min_size = 3
            self.thresh = thresh
            self.box_thresh = box_thresh
            self.max_candidates = max_candidates
            self.unclip_ratio = unclip_ratio

        def __call__(self, pred, h_w_list, is_output_polygon=False):
            '''
            batch: (image, polygons, ignore_tags)
            h_w_list: array of [h, w] pairs
            pred:
                binary: text region segmentation map, with shape (N, 1, H, W)
            '''
            pred = pred[:, 0, :, :]
            segmentation = self.binarize(pred)
            boxes_batch = []
            scores_batch = []
            for batch_index in range(pred.shape[0]):
                height, width = h_w_list[batch_index]
                boxes, scores = self.post_p(pred[batch_index], segmentation[batch_index], width, height,
                                            is_output_polygon=is_output_polygon)
                boxes_batch.append(boxes)
                scores_batch.append(scores)
            return boxes_batch, scores_batch

        def binarize(self, pred):
            return pred > self.thresh

        def post_p(self, pred, bitmap, dest_width, dest_height, is_output_polygon=False):
            '''
            _bitmap: single map with shape (H, W),
                whose values are binarized as {0, 1}
            '''
            height, width = pred.shape
            boxes = []
            new_scores = []
            # bitmap = bitmap.cpu().numpy()
            # OpenCV 3 returns (image, contours, hierarchy) and OpenCV 4 returns
            # (contours, hierarchy); index -2 is the contour list in both cases.
            contours = cv2.findContours((bitmap * 255).astype(np.uint8), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)[-2]
            for contour in contours[:self.max_candidates]:
                epsilon = 0.005 * cv2.arcLength(contour, True)
                approx = cv2.approxPolyDP(contour, epsilon, True)
                points = approx.reshape((-1, 2))
                if points.shape[0] < 4:
                    continue
                score = self.box_score_fast(pred, contour.squeeze(1))
                if self.box_thresh > score:
                    continue
                if points.shape[0] > 2:
                    box = self.unclip(points, unclip_ratio=self.unclip_ratio)
                    if len(box) > 1:
                        continue
                else:
                    continue
                four_point_box, sside = self.get_mini_boxes(box.reshape((-1, 1, 2)))
                if sside < self.min_size + 2:
                    continue
                if not isinstance(dest_width, int):
                    dest_width = dest_width.item()
                    dest_height = dest_height.item()
                if not is_output_polygon:
                    box = np.array(four_point_box)
                else:
                    box = box.reshape(-1, 2)
                # Rescale the box from the prediction map to the destination size
                box[:, 0] = np.clip(np.round(box[:, 0] / width * dest_width), 0, dest_width)
                box[:, 1] = np.clip(np.round(box[:, 1] / height * dest_height), 0, dest_height)
                boxes.append(box)
                new_scores.append(score)
            return boxes, new_scores

        def unclip(self, box, unclip_ratio=1.5):
            # Expand the shrunk text region by an offset proportional to area / perimeter
            poly = Polygon(box)
            distance = poly.area * unclip_ratio / poly.length
            offset = pyclipper.PyclipperOffset()
            offset.AddPath(box, pyclipper.JT_ROUND, pyclipper.ET_CLOSEDPOLYGON)
            expanded = np.array(offset.Execute(distance))
            return expanded

        def get_mini_boxes(self, contour):
            bounding_box = cv2.minAreaRect(contour)
            points = sorted(list(cv2.boxPoints(bounding_box)), key=lambda x: x[0])
            index_1, index_2, index_3, index_4 = 0, 1, 2, 3
            if points[1][1] > points[0][1]:
                index_1 = 0
                index_4 = 1
            else:
                index_1 = 1
                index_4 = 0
            if points[3][1] > points[2][1]:
                index_2 = 2
                index_3 = 3
            else:
                index_2 = 3
                index_3 = 2
            box = [points[index_1], points[index_2], points[index_3], points[index_4]]
            return box, min(bounding_box[1])

        def box_score_fast(self, bitmap, _box):
            # Mean probability inside the polygon, computed on its bounding rectangle.
            # bitmap = bitmap.detach().cpu().numpy()
            h, w = bitmap.shape[:2]
            box = _box.copy()
            # The built-in int replaces the np.int alias, which newer NumPy releases removed
            xmin = np.clip(np.floor(box[:, 0].min()).astype(int), 0, w - 1)
            xmax = np.clip(np.ceil(box[:, 0].max()).astype(int), 0, w - 1)
            ymin = np.clip(np.floor(box[:, 1].min()).astype(int), 0, h - 1)
            ymax = np.clip(np.ceil(box[:, 1].max()).astype(int), 0, h - 1)
            mask = np.zeros((ymax - ymin + 1, xmax - xmin + 1), dtype=np.uint8)
            box[:, 0] = box[:, 0] - xmin
            box[:, 1] = box[:, 1] - ymin
            cv2.fillPoly(mask, box.reshape(1, -1, 2).astype(np.int32), 1)
            return cv2.mean(bitmap[ymin:ymax + 1, xmin:xmax + 1], mask)[0]

    def narrow_224_32(image, expected_size=(224, 32)):
        # Resize while keeping the aspect ratio, then pad the right and bottom edges
        ih, iw = image.shape[0:2]
        ew, eh = expected_size
        # scale = eh / ih
        scale = min((eh / ih), (ew / iw))
        # scale = eh / max(iw,ih)
        nh = int(ih * scale)
        nw = int(iw * scale)
        image = cv2.resize(image, (nw, nh), interpolation=cv2.INTER_CUBIC)
        top = 0
        bottom = eh - nh
        left = 0
        right = ew - nw
        new_img = cv2.copyMakeBorder(image, top, bottom, left, right, cv2.BORDER_CONSTANT, value=(114, 114, 114))
        return image, new_img


    def draw_bbox(img_path, result, color=(0, 0, 255), thickness=2):
        # Accepts either an image path or an already loaded image
        if isinstance(img_path, str):
            img_path = cv2.imread(img_path)
            # img_path = cv2.cvtColor(img_path, cv2.COLOR_BGR2RGB)
        img_path = img_path.copy()
        for point in result:
            point = point.astype(int)
            cv2.polylines(img_path, [point], True, color, thickness)
        return img_path

    if __name__ == '__main__':
        post_process = DBPostProcess()
        is_output_polygon = False

        # Create RKNN object
        rknn = RKNN()
        ret = rknn.load_rknn('./model/det_new.rknn')

        # Set inputs
        img = cv2.imread('./idcard/2.jpg')
        origin_img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        img0, image = narrow_224_32(img, expected_size=(640, 640))

        # init runtime environment
        print('--> Init runtime environment')
        ret = rknn.init_runtime(target='rv1126', device_id="a0c4f1cae341b3df")
        if ret != 0:
            print('Init runtime environment failed')
            exit(ret)
        print('done')

        # Inference
        print('--> Running model')
        outputs = rknn.inference(inputs=[image])

        # perf
        print('--> Begin evaluate model performance')
        perf_results = rknn.eval_perf(inputs=[image])
        print('done')

        feat_2 = torch.from_numpy(outputs[0])
        print(feat_2.size())

        box_list, score_list = post_process(outputs[0], [image.shape[:2]], is_output_polygon=is_output_polygon)
        box_list, score_list = box_list[0], score_list[0]
        if len(box_list) > 0:
            # Drop boxes whose coordinates are all zero
            idx = [x.sum() > 0 for x in box_list]
            box_list = [box_list[i] for i, v in enumerate(idx) if v]
            score_list = [score_list[i] for i, v in enumerate(idx) if v]
        else:
            box_list, score_list = [], []

        img = draw_bbox(image, box_list)
        # Crop away the padding added by narrow_224_32
        img = img[0:img0.shape[0], 0:img0.shape[1]]
        cv2.imshow("img", img)
        cv2.waitKey()

        rknn.release()

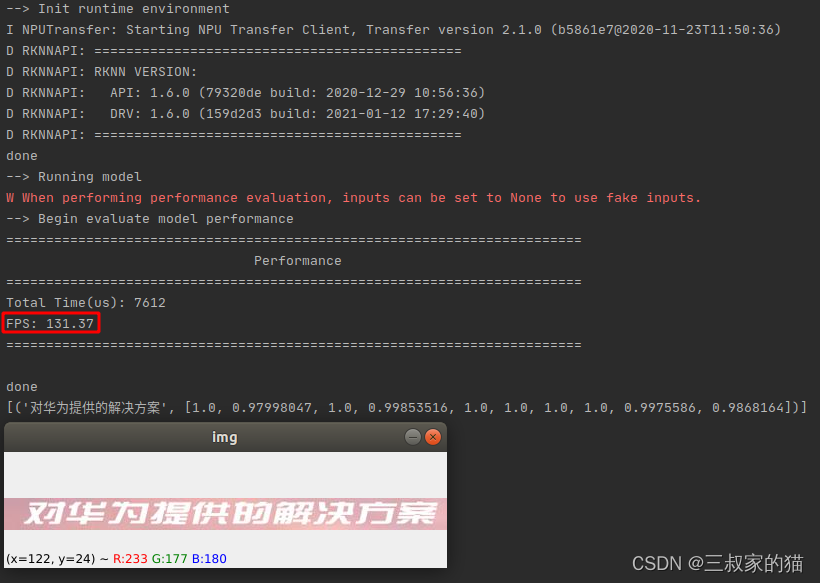
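The `unclip` step in the post-processing code expands each detected region by an offset of `area * unclip_ratio / perimeter`. The sketch below computes that offset with NumPy only, as a worked illustration of the formula. The helper name `unclip_distance` is hypothetical; the test code itself uses shapely and pyclipper for this step.

```python
import numpy as np


def unclip_distance(points, unclip_ratio=2.0):
    """Return the expansion offset area * unclip_ratio / perimeter of a polygon.

    points is a sequence of (x, y) vertices in order around the polygon.
    """
    pts = np.asarray(points, dtype=np.float64)
    nxt = np.roll(pts, -1, axis=0)  # the next vertex for every vertex

    # Shoelace formula for the polygon area.
    area = 0.5 * abs(np.sum(pts[:, 0] * nxt[:, 1] - nxt[:, 0] * pts[:, 1]))
    # Sum of the edge lengths.
    perimeter = float(np.sum(np.linalg.norm(nxt - pts, axis=1)))
    if perimeter == 0:
        raise ValueError("degenerate polygon")
    return area * unclip_ratio / perimeter
```

For a 100 x 20 text box the area is 2000 and the perimeter is 240, so with `unclip_ratio=2` the region grows by 2000 * 2 / 240, roughly 16.7 pixels on each side. Long, thin regions therefore expand by about their height, which is why the ratio has a large effect on how tightly boxes fit the text.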

Recognition results after quantization; the model takes a fixed 32 x 448 input:
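Because the input size is fixed, every text crop has to be resized and padded to 32 x 448. The recognition test code scales by height only, so a very wide crop would end up wider than 448 pixels and the right padding would come out negative. The sketch below computes a resize and padding plan that always fits the canvas. The function name `fit_to_canvas` is hypothetical, and limiting the scale by both height and width is my suggestion rather than what the test code does.

```python
def fit_to_canvas(ih, iw, eh=32, ew=448):
    """Plan an aspect-preserving resize of an ih x iw image onto an eh x ew canvas.

    Returns (new_h, new_w, pad_bottom, pad_right). Both paddings are >= 0.
    """
    if ih <= 0 or iw <= 0:
        raise ValueError("image size must be positive")

    # Use the smaller of the two scale factors so neither side overflows.
    scale = min(eh / ih, ew / iw)
    new_h = max(1, min(eh, int(round(ih * scale))))
    new_w = max(1, min(ew, int(round(iw * scale))))
    return new_h, new_w, eh - new_h, ew - new_w
```

The returned values can be passed to `cv2.resize` and `cv2.copyMakeBorder` in the same way as in the test code. The trade-off is that very wide crops are squeezed vertically, which may hurt recognition; splitting such crops before recognition is another option.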

RKNN test code for the recognition model:

    import numpy as np
    import cv2
    from rknn.api import RKNN
    import torch
    # from label_convert import CTCLabelConverter

    class CTCLabelConverter(object):
        """ Convert between text-label and text-index """

        def __init__(self, character):
            # character (str): path to the file listing the possible characters.
            dict_character = []
            with open(character, "rb") as fin:
                lines = fin.readlines()
                for line in lines:
                    line = line.decode('utf-8').strip("\n").strip("\r\n")
                    dict_character += list(line)
            # dict_character = list(character)
            self.dict = {}
            for i, char in enumerate(dict_character):
                # NOTE: 0 is reserved for 'blank' token required by CTCLoss
                self.dict[char] = i + 1
            # TODO replace ' ' with special symbol
            self.character = ['[blank]'] + dict_character + [' ']  # dummy '[blank]' token for CTCLoss (index 0)

        def encode(self, text, batch_max_length=None):
            """convert text-label into text-index.
            input:
                text: text labels of each image. [batch_size]

            output:
                text: concatenated text index for CTCLoss.
                        [sum(text_lengths)] = [text_index_0 + text_index_1 + ... + text_index_(n - 1)]
                length: length of each text. [batch_size]
            """
            length = [len(s) for s in text]
            # text = ''.join(text)
            # text = [self.dict[char] for char in text]
            d = []
            batch_max_length = max(length)
            for s in text:
                t = [self.dict[char] for char in s]
                t.extend([0] * (batch_max_length - len(s)))
                d.append(t)
            return (torch.tensor(d, dtype=torch.long), torch.tensor(length, dtype=torch.long))

        def decode(self, preds, raw=False):
            """ convert text-index into text-label. """
            preds_idx = preds.argmax(axis=2)
            preds_prob = preds.max(axis=2)
            result_list = []
            for word, prob in zip(preds_idx, preds_prob):
                if raw:
                    result_list.append((''.join([self.character[int(i)] for i in word]), prob))
                else:
                    result = []
                    conf = []
                    for i, index in enumerate(word):
                        # Skip blanks and repeats of the previous time step
                        if word[i] != 0 and (not (i > 0 and word[i - 1] == word[i])):
                            result.append(self.character[int(index)])
                            conf.append(prob[i])
                    result_list.append((''.join(result), conf))
            return result_list

    def narrow_224_32(image, expected_size=(224, 32)):
        # Scale to the target height, then pad the right and bottom edges
        ih, iw = image.shape[0:2]
        ew, eh = expected_size
        scale = eh / ih
        # scale = eh / max(iw,ih)
        nh = int(ih * scale)
        nw = int(iw * scale)
        image = cv2.resize(image, (nw, nh), interpolation=cv2.INTER_CUBIC)
        top = 0
        bottom = eh - nh - top
        left = 0
        right = ew - nw - left
        new_img = cv2.copyMakeBorder(image, top, bottom, left, right, cv2.BORDER_CONSTANT, value=(114, 114, 114))
        return new_img

    if __name__ == '__main__':
        dict_path = r"./dict/dict_text.txt"
        converter = CTCLabelConverter(dict_path)

        # Create RKNN object
        rknn = RKNN()
        ret = rknn.load_rknn('./model/repvgg_s.rknn')

        # Set inputs
        img = cv2.imread('crnn_img/33925.jpg')
        img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        origin_img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)
        image = narrow_224_32(img, expected_size=(448, 32))

        # init runtime environment
        print('--> Init runtime environment')
        ret = rknn.init_runtime(target='rv1126', device_id="a0c4f1cae341b3df")
        if ret != 0:
            print('Init runtime environment failed')
            exit(ret)
        print('done')

        # Inference
        print('--> Running model')
        outputs = rknn.inference(inputs=[image])

        # perf
        print('--> Begin evaluate model performance')
        perf_results = rknn.eval_perf(inputs=[image])
        print('done')

        feat_2 = torch.from_numpy(outputs[0])
        # print(feat_2.size())
        txt = converter.decode(feat_2.detach().cpu().numpy())
        print(txt)

        cv2.imshow("img", img)
        cv2.waitKey()

        rknn.release()
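The `decode` method in the test code is a greedy CTC decoder: it takes the most likely class at every time step, collapses consecutive repeats, and drops the blank token at index 0. The standalone sketch below shows the same rule on a single sequence. The function name `ctc_greedy_decode` and the three-entry character set in the usage note are made up for illustration.

```python
import numpy as np


def ctc_greedy_decode(logits, charset, blank=0):
    """Greedy CTC decoding of one sequence.

    logits has shape (time_steps, num_classes); charset[i] is the character
    for class i, with the blank token at index `blank`.
    """
    indices = np.asarray(logits).argmax(axis=1)
    chars = []
    for t, idx in enumerate(indices):
        is_repeat = t > 0 and idx == indices[t - 1]
        # Keep a class only if it is not blank and not a repeat of the previous step.
        if idx != blank and not is_repeat:
            chars.append(charset[int(idx)])
    return "".join(chars)
```

With `charset = ["[blank]", "a", "b"]` and per-step best classes `1, 1, 0, 1, 2, 2`, the result is `"aab"`: the first two steps collapse into one `a`, the blank separates it from the second `a`, and the two final steps collapse into one `b`. This is why CTC models need the blank token to output doubled letters.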

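At this point the models have been converted successfully. This post is already too long, so it continues in the next one: "【工程部署】手把手教你在RKNN上部署OCR服务(下)" (a hands-on guide to deploying an OCR service on RKNN, part 2). The next post mainly fills in the code implementation; it is a bit long and I have not finished writing it yet.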

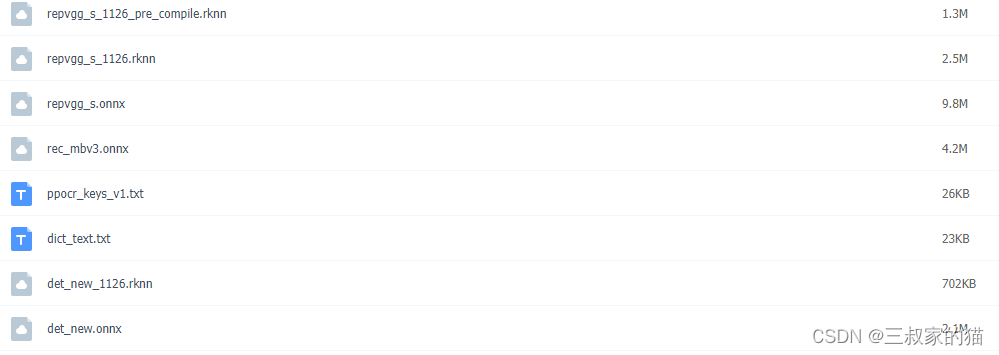

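Baidu Cloud link: https://pan.baidu.com/s/1jSirZT2LBOWQxohCEORp5g password: vrjk. The files provided are:

The first RKNN model was converted with pre-compilation enabled, so it is smaller and initializes faster when loaded. The detection model loses too much accuracy when converted with pre-compilation, so I do not provide a pre-compiled version; you can try it yourself. rec_mbv3.onnx fails to convert, so there is no corresponding rknn file. ppocr_keys_v1.txt is the keys file for the ppocr recognition model rec_mbv3.onnx, and dict_text.txt is the keys file for my own repvgg_s.onnx.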