Nested execution of interrupt and exception handlers

https://blog.csdn.net/denglin12315/article/details/121703669

I. History

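In earlier Linux kernel versions, interrupts came in two kinds:

1. Fast interrupts, requested with the IRQF_DISABLED flag; no new interrupt was allowed in while the IRQ handler ran.

2. Slow interrupts, requested without the IRQF_DISABLED flag; other interrupts were allowed to nest while the IRQ handler ran.

In old kernels, a handler that did not want to be interrupted by other interrupts was registered with code like this:

request_irq(FLOPPY_IRQ, floppy_interrupt, IRQF_DISABLED, "floppy", NULL)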

II. Today

IRQF_DISABLED was deprecated in 2010 by the following commit:

https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=e58aa3d2d0cc

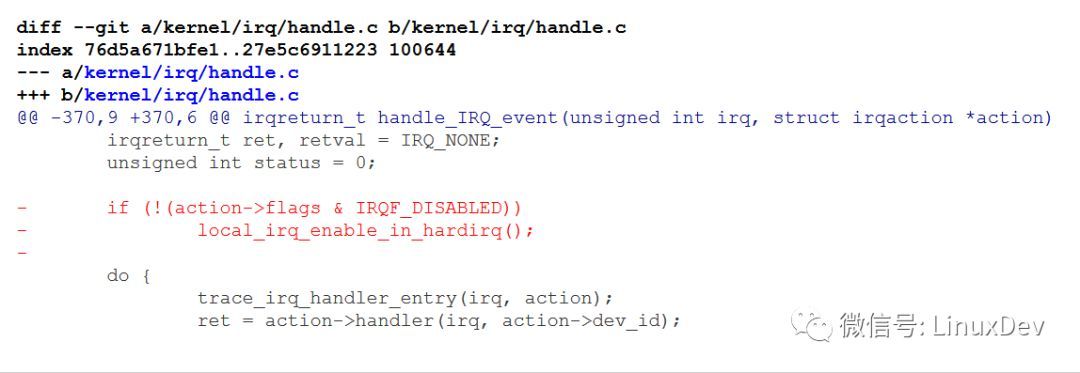

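Its commit log explains clearly the risks that nested interrupts can introduce, such as stack overflow. From this commit on, Linux effectively no longer supports nested interrupts, the fast/slow distinction is gone, and the IRQF_DISABLED flag is obsolete. Inside an IRQ handler, whether or not the interrupt was requested with IRQF_DISABLED, the kernel does not re-enable the CPU's response to interrupts: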

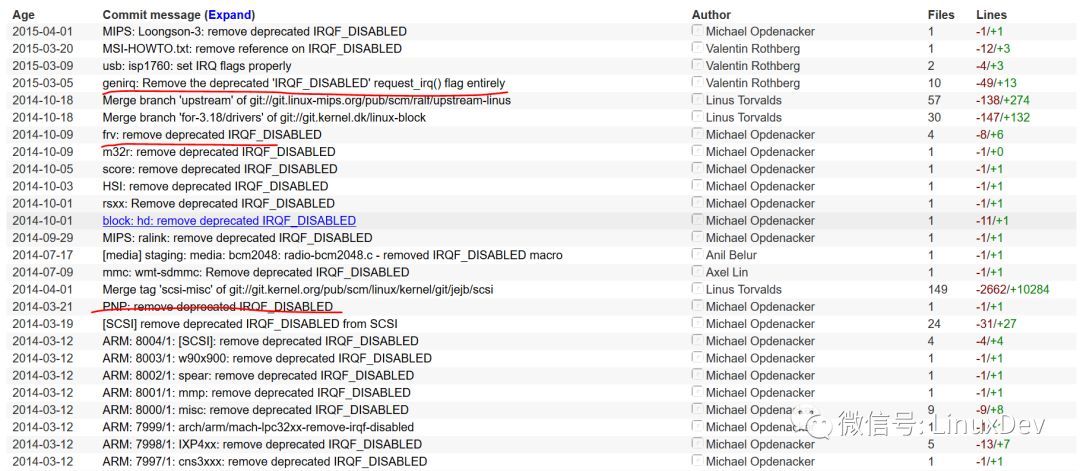

The deprecated IRQF_DISABLED flag no longer has any meaning in the kernel. Later the flag itself was deleted from the kernel source and became history for good.

III. Hardware

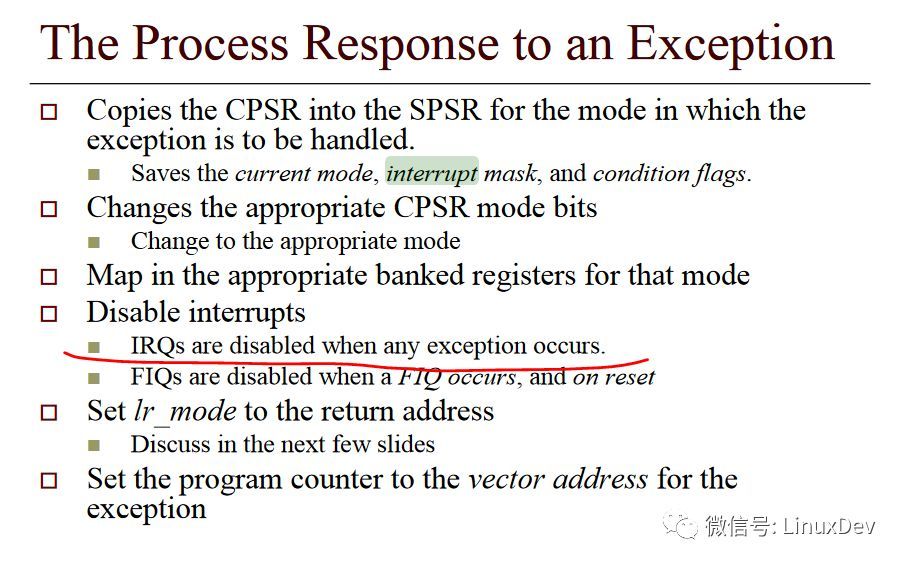

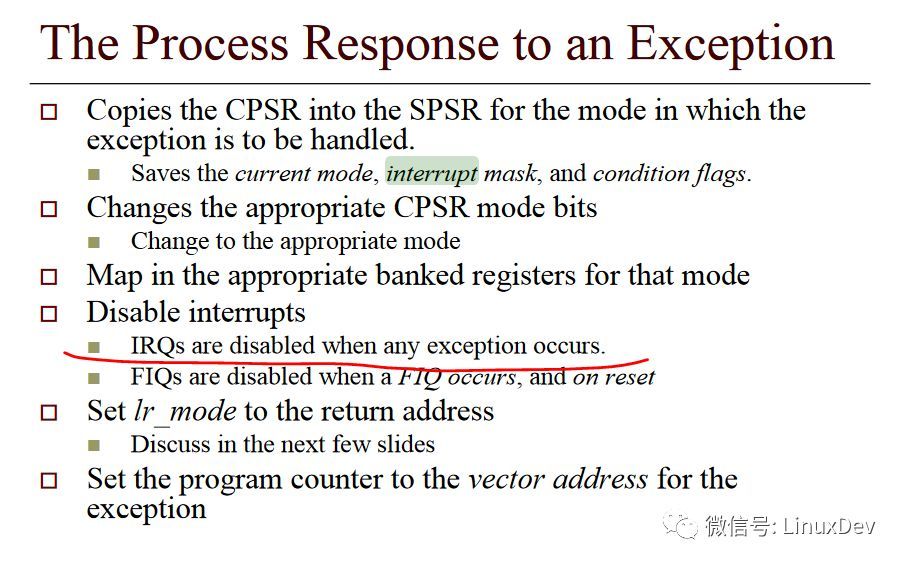

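When an interrupt occurs, the hardware generally masks the CPU's response to further interrupts automatically, and software does not re-enable interrupts until the IRQ handler has finished. On ARM, for example, the hardware masks interrupts automatically whenever an exception is taken.

That is, when an ARM processor receives an interrupt it enters IRQ mode, and the hardware sets the IRQ bit in the CPSR register so that IRQs are masked.

The Linux kernel re-enables the CPSR's response to IRQs at the following two points:

1. On returning from the IRQ handler into the softirq bottom half

2. On returning from the IRQ handler to a thread context

Point 1 shows that interrupts can be taken while a softirq is running.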

Exception handling

Interrupt handling

The flow is similar to exception handling.

    el1_irq
      -> el1_interrupt_handler handle_arch_irq    // handle_arch_irq = gic_handle_irq
        -> irq_handler \handler
          -> gic_handle_irq
            -> handle_domain_irq
              -> irq_enter
                -> preempt_count_add(HARDIRQ_OFFSET)
              -> generic_handle_irq_desc
                -> desc->handle_irq    // handle_edge_irq, handle_level_irq, ...
                  -> handle_irq_event
                    -> handle_irq_event_percpu
                      -> __handle_irq_event_percpu
                        -> res = action->handler(irq, action->dev_id)
              -> irq_exit
      -> arm64_preempt_schedule_irq    // kernel preemption
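The irq_enter / irq_exit pair in this chain brackets the handler by adding HARDIRQ_OFFSET to the preempt counter and removing it again, which is how code can tell that it is running in hard-interrupt context. Below is a minimal user-space sketch of that bookkeeping. It assumes the usual bit layout (8 preempt bits, 8 softirq bits, 4 hardirq bits), and every `model_*` name is a hypothetical stand-in, not a kernel API.

```c
#include <assert.h>

/* Assumed preempt_count layout: bits 0-7 preempt, 8-15 softirq, 16-19 hardirq. */
#define PREEMPT_BITS   8
#define SOFTIRQ_BITS   8
#define HARDIRQ_BITS   4
#define HARDIRQ_SHIFT  (PREEMPT_BITS + SOFTIRQ_BITS)
#define HARDIRQ_OFFSET (1U << HARDIRQ_SHIFT)
#define HARDIRQ_MASK   (((1U << HARDIRQ_BITS) - 1) << HARDIRQ_SHIFT)

unsigned int model_preempt_count;   /* stand-in for the real counter */
int handler_saw_hardirq;            /* what the demo handler observed */

int model_in_hardirq(void)
{
    return (model_preempt_count & HARDIRQ_MASK) != 0;
}

void model_irq_enter(void)
{
    model_preempt_count += HARDIRQ_OFFSET;
}

void model_irq_exit(void)
{
    assert(model_in_hardirq());     /* enter and exit must be balanced */
    model_preempt_count -= HARDIRQ_OFFSET;
}

/* Hypothetical device handler: records the context it ran in. */
void model_handler(void)
{
    handler_saw_hardirq = model_in_hardirq();
}

/* Same shape as the enter / dispatch / exit steps in the chain above. */
void model_handle_domain_irq(void (*handler)(void))
{
    model_irq_enter();
    handler();
    model_irq_exit();
}
```

Calling `model_handle_domain_irq(model_handler)` leaves `handler_saw_hardirq` set to 1, while `model_in_hardirq()` is 0 again once the call returns.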

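IRQ data structures

I could not find an arm64 counterpart of hw_interrupt_type; in current kernels that role is filled by struct irq_chip.

Saving register values for the interrupt handler

Registers are saved and restored by kernel_entry and kernel_exit.

kernel_entry and kernel_exit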

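As noted above, on ARM the hardware masks interrupts automatically whenever an exception is taken: on receiving an interrupt the processor enters IRQ mode and the hardware sets the IRQ bit in the CPSR register so that IRQs are masked.

The instruction to focus on for the return path is ERET. On arm64, executing ERET restores PSTATE from SPSR_ELn and PC from ELR_ELn.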

https://blog.csdn.net/weixin_42135087/article/details/107227624

Introduction to the ARMv8-A process state, PSTATE

https://blog.csdn.net/longwang155069/article/details/105204547
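The entry and ERET behaviour described above can be sketched as a small state machine. This is a heavily reduced user-space model, not the architecture: it tracks only the IRQ mask bit (bit 7 in the PSTATE/SPSR layout), whereas real hardware also sets the other mask bits and changes more state on entry. `struct model_cpu` and the `model_*` functions are hypothetical names introduced for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define PSTATE_I_BIT (1u << 7)   /* IRQ mask bit */

/* Only the registers the text mentions. */
struct model_cpu {
    uint64_t pc;
    uint64_t pstate;
    uint64_t elr;    /* ELR_ELn: return address */
    uint64_t spsr;   /* SPSR_ELn: saved PSTATE */
};

/* What the hardware does when an IRQ is taken. */
void model_take_irq(struct model_cpu *cpu, uint64_t vector)
{
    cpu->spsr = cpu->pstate;        /* save the interrupted PSTATE */
    cpu->elr = cpu->pc;             /* save the return address */
    cpu->pstate |= PSTATE_I_BIT;    /* mask further IRQs */
    cpu->pc = vector;               /* continue at the vector entry */
}

/* What ERET does: PSTATE <- SPSR_ELn, PC <- ELR_ELn. */
void model_eret(struct model_cpu *cpu)
{
    cpu->pstate = cpu->spsr;
    cpu->pc = cpu->elr;
}

int model_irqs_masked(const struct model_cpu *cpu)
{
    return (cpu->pstate & PSTATE_I_BIT) != 0;
}
```

Starting from `pc = 0x1000` with IRQs unmasked, `model_take_irq` leaves IRQs masked with `elr == 0x1000`, and `model_eret` then restores both the unmasked state and the original PC. In this model no explicit "enable interrupts" step is needed on the return path: restoring the saved PSTATE is what unmasks IRQs.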

do_IRQ

__do_IRQ

These two sections correspond to the following part of the flow:

    -> desc->handle_irq    // handle_edge_irq, handle_level_irq, ...
      -> handle_irq_event
        -> handle_irq_event_percpu
          -> __handle_irq_event_percpu
            -> res = action->handler(irq, action->dev_id)

    void handle_edge_irq(struct irq_desc *desc)
    {
        raw_spin_lock(&desc->lock);

        desc->istate &= ~(IRQS_REPLAY | IRQS_WAITING);

        if (!irq_may_run(desc)) {
            desc->istate |= IRQS_PENDING;
            mask_ack_irq(desc);
            goto out_unlock;
        }

        /*
         * If its disabled or no action available then mask it and get
         * out of here.
         */
        if (irqd_irq_disabled(&desc->irq_data) || !desc->action) {
            desc->istate |= IRQS_PENDING;
            mask_ack_irq(desc);
            goto out_unlock;
        }

        kstat_incr_irqs_this_cpu(desc);

        /* Start handling the irq */
        desc->irq_data.chip->irq_ack(&desc->irq_data);

        do {
            if (unlikely(!desc->action)) {
                mask_irq(desc);
                goto out_unlock;
            }

            /*
             * When another irq arrived while we were handling
             * one, we could have masked the irq.
             * Renable it, if it was not disabled in meantime.
             */
            if (unlikely(desc->istate & IRQS_PENDING)) {
                if (!irqd_irq_disabled(&desc->irq_data) &&
                    irqd_irq_masked(&desc->irq_data))
                    unmask_irq(desc);
            }

            handle_irq_event(desc);

        } while ((desc->istate & IRQS_PENDING) &&
                 !irqd_irq_disabled(&desc->irq_data));

    out_unlock:
        raw_spin_unlock(&desc->lock);
    }
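The do/while loop in handle_edge_irq exists so that an edge arriving while the handler is still running is not lost: the late arrival only sets IRQS_PENDING and masks the line, and the loop then runs the handler again. The following is a single-threaded user-space sketch of that idea. The second CPU is simulated by re-entering the flow handler from inside the event handler; the struct, the flag value and all `model_*` names are hypothetical, and locking, ack and the disabled check are left out.

```c
#include <assert.h>
#include <stdbool.h>

#define IRQS_PENDING 0x1u   /* illustrative value; only the name follows the kernel */

struct model_desc {
    unsigned int istate;
    bool in_progress;      /* stands in for IRQD_IRQ_INPROGRESS */
    bool masked;
    int handled;           /* how many times the action ran */
    int edges_to_inject;   /* extra edges that arrive while the handler runs */
};

void model_handle_edge(struct model_desc *d);

/* Stand-in for handle_irq_event(): clear PENDING, run the action. */
void model_handle_irq_event(struct model_desc *d)
{
    d->istate &= ~IRQS_PENDING;
    d->in_progress = true;
    d->handled++;
    /* Simulate another CPU taking a new edge while we are in the handler. */
    if (d->edges_to_inject > 0) {
        d->edges_to_inject--;
        model_handle_edge(d);
    }
    d->in_progress = false;
}

void model_handle_edge(struct model_desc *d)
{
    if (d->in_progress) {            /* the !irq_may_run() case */
        d->istate |= IRQS_PENDING;   /* remember the edge */
        d->masked = true;            /* and mask the line */
        return;
    }
    do {
        if ((d->istate & IRQS_PENDING) && d->masked)
            d->masked = false;       /* unmask before replaying */
        model_handle_irq_event(d);
    } while (d->istate & IRQS_PENDING);
}
```

With `edges_to_inject = 2`, one call to `model_handle_edge` runs the action three times (the original edge plus two replays) and leaves the line unmasked with IRQS_PENDING clear.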

    irqreturn_t handle_irq_event(struct irq_desc *desc)
    {
        irqreturn_t ret;

        desc->istate &= ~IRQS_PENDING;
        irqd_set(&desc->irq_data, IRQD_IRQ_INPROGRESS);
        raw_spin_unlock(&desc->lock);

        ret = handle_irq_event_percpu(desc);

        raw_spin_lock(&desc->lock);
        irqd_clear(&desc->irq_data, IRQD_IRQ_INPROGRESS);
        return ret;
    }

    irqreturn_t handle_irq_event_percpu(struct irq_desc *desc)
    {
        irqreturn_t retval;
        unsigned int flags = 0;

        retval = __handle_irq_event_percpu(desc, &flags);

        add_interrupt_randomness(desc->irq_data.irq, flags);

        if (!noirqdebug)
            note_interrupt(desc, retval);
        return retval;
    }

    irqreturn_t __handle_irq_event_percpu(struct irq_desc *desc, unsigned int *flags)
    {
        irqreturn_t retval = IRQ_NONE;
        unsigned int irq = desc->irq_data.irq;
        struct irqaction *action;

        record_irq_time(desc);

        for_each_action_of_desc(desc, action) {
            irqreturn_t res;

            /*
             * If this IRQ would be threaded under force_irqthreads, mark it so.
             */
            if (irq_settings_can_thread(desc) &&
                !(action->flags & (IRQF_NO_THREAD | IRQF_PERCPU | IRQF_ONESHOT)))
                lockdep_hardirq_threaded();

            trace_irq_handler_entry(irq, action);
            res = action->handler(irq, action->dev_id);
            trace_irq_handler_exit(irq, action, res);

            if (WARN_ONCE(!irqs_disabled(),"irq %u handler %pS enabled interrupts\n",
                          irq, action->handler))
                local_irq_disable();

            switch (res) {
            case IRQ_WAKE_THREAD:
                /*
                 * Catch drivers which return WAKE_THREAD but
                 * did not set up a thread function
                 */
                if (unlikely(!action->thread_fn)) {
                    warn_no_thread(irq, action);
                    break;
                }

                __irq_wake_thread(desc, action);

                fallthrough;    /* to add to randomness */
            case IRQ_HANDLED:
                *flags |= action->flags;
                break;

            default:
                break;
            }

            retval |= res;
        }

        return retval;
    }
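The core of __handle_irq_event_percpu is the walk over every action registered on the line, OR-ing the return values together; this is what makes shared interrupt lines work. Here is a minimal user-space sketch of just that loop, with tracing, the threaded-handler wake-up and the "handler enabled interrupts" check left out. The enum values match the kernel's IRQ_NONE / IRQ_HANDLED / IRQ_WAKE_THREAD; the struct, the two device handlers and the `model_*` names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

enum model_irqreturn {
    MODEL_IRQ_NONE        = 0,   /* interrupt was not from this device */
    MODEL_IRQ_HANDLED     = 1,   /* interrupt was handled by this device */
    MODEL_IRQ_WAKE_THREAD = 2    /* handler asks to wake its thread */
};

struct model_action {
    enum model_irqreturn (*handler)(int irq, void *dev_id);
    void *dev_id;
    struct model_action *next;   /* next action sharing the same line */
};

/* Call every action on the line and OR the results together. */
unsigned int model_handle_irq_event_percpu(int irq, struct model_action *head)
{
    unsigned int retval = MODEL_IRQ_NONE;

    for (struct model_action *a = head; a != NULL; a = a->next)
        retval |= a->handler(irq, a->dev_id);
    return retval;
}

/* Device A shares the line but did not raise this interrupt. */
enum model_irqreturn dev_a_handler(int irq, void *dev_id)
{
    (void)irq;
    (void)dev_id;
    return MODEL_IRQ_NONE;
}

/* Device B raised it: count the event through dev_id and claim it. */
enum model_irqreturn dev_b_handler(int irq, void *dev_id)
{
    (void)irq;
    (*(int *)dev_id)++;
    return MODEL_IRQ_HANDLED;
}
```

Chaining A then B on one line and dispatching once yields `MODEL_IRQ_HANDLED` overall, even though the first handler returned `MODEL_IRQ_NONE`. Each handler is responsible for checking its own device and declining interrupts that are not its own.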

Recovering lost interrupts

kernel/irq/chip.c

    irq_startup
      -> irq_enable
      -> check_irq_resend

    int check_irq_resend(struct irq_desc *desc, bool inject)
    {
        int err = 0;

        /*
         * We do not resend level type interrupts. Level type interrupts
         * are resent by hardware when they are still active. Clear the
         * pending bit so suspend/resume does not get confused.
         */
        if (irq_settings_is_level(desc)) {
            desc->istate &= ~IRQS_PENDING;
            return -EINVAL;
        }

        if (desc->istate & IRQS_REPLAY)
            return -EBUSY;

        if (!(desc->istate & IRQS_PENDING) && !inject)
            return 0;

        desc->istate &= ~IRQS_PENDING;

        if (!try_retrigger(desc))
            err = irq_sw_resend(desc);

        /* If the retrigger was successfull, mark it with the REPLAY bit */
        if (!err)
            desc->istate |= IRQS_REPLAY;
        return err;
    }
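To see which outcome each branch of check_irq_resend produces, the decision logic can be exercised in user space. The sketch below is a simplified model under stated assumptions: the flag values are illustrative, hardware retrigger is reduced to a boolean parameter, and when hardware retrigger is not possible the model assumes software resend is unavailable and reports -EINVAL. `struct resend_desc` and `model_check_resend` are hypothetical names.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>

#define M_PENDING 0x1u   /* illustrative bit values, not the kernel's */
#define M_REPLAY  0x2u

struct resend_desc {
    unsigned int istate;
    bool is_level;       /* level-triggered line? */
};

int model_check_resend(struct resend_desc *d, bool inject, bool hw_can_retrigger)
{
    /* Level interrupts are re-raised by hardware while still active. */
    if (d->is_level) {
        d->istate &= ~M_PENDING;
        return -EINVAL;
    }
    /* A replay is already in flight. */
    if (d->istate & M_REPLAY)
        return -EBUSY;
    /* Nothing was missed and nobody asked for an injection. */
    if (!(d->istate & M_PENDING) && !inject)
        return 0;

    d->istate &= ~M_PENDING;
    /* Assumption of this model: no software resend fallback. */
    if (!hw_can_retrigger)
        return -EINVAL;

    d->istate |= M_REPLAY;   /* mark the successful retrigger */
    return 0;
}
```

For a pending edge interrupt the first call returns 0 and leaves only the replay bit set; a second call before the replay has been handled returns -EBUSY. For a level interrupt the pending bit is cleared and -EINVAL is returned.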

Softirqs and tasklets

ksoftirqd

The code in this section corresponds to smpboot_thread_fn below.

    static struct smp_hotplug_thread softirq_threads = {
        .store              = &ksoftirqd,
        .thread_should_run  = ksoftirqd_should_run,
        .thread_fn          = run_ksoftirqd,
        .thread_comm        = "ksoftirqd/%u",
    };

    static __init int spawn_ksoftirqd(void)
    {
        cpuhp_setup_state_nocalls(CPUHP_SOFTIRQ_DEAD, "softirq:dead", NULL,
                                  takeover_tasklets);
        BUG_ON(smpboot_register_percpu_thread(&softirq_threads));

        return 0;
    }

    static int smpboot_thread_fn(void *data)
    {
        struct smpboot_thread_data *td = data;
        struct smp_hotplug_thread *ht = td->ht;

        while (1) {
            set_current_state(TASK_INTERRUPTIBLE);
            preempt_disable();
            ...
            if (!ht->thread_should_run(td->cpu)) {
                preempt_enable_no_resched();
                schedule();
            } else {
                __set_current_state(TASK_RUNNING);
                preempt_enable();
                ht->thread_fn(td->cpu);
            }
        }
    }

    static void run_ksoftirqd(unsigned int cpu)
    {
        local_irq_disable();
        if (local_softirq_pending()) {
            /*
             * We can safely run softirq on inline stack, as we are not deep
             * in the task stack here.
             */
            __do_softirq();
            local_irq_enable();
            cond_resched();
            return;
        }
        local_irq_enable();
    }
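The softirq path above can be modelled in user space to show two things at once: pending softirqs are tracked as a bitmask, and the handlers themselves run with interrupts enabled even though run_ksoftirqd checks the mask with interrupts disabled. This is a rough sketch, not the kernel's code: interrupt state is a plain variable, the slot number is chosen arbitrarily, and all `model_*` names are hypothetical.

```c
#include <assert.h>

#define MODEL_NR_SOFTIRQS 10

unsigned int model_pending;                   /* one bit per softirq */
void (*model_vec[MODEL_NR_SOFTIRQS])(void);   /* registered handlers */
int model_irqs_enabled = 1;                   /* stand-in for the CPU IRQ mask */
int runs;                                     /* demo: how often the handler ran */
int ran_with_irqs_on;                         /* demo: IRQ state seen by the handler */

void model_raise_softirq(unsigned int nr)
{
    assert(nr < MODEL_NR_SOFTIRQS);
    model_pending |= 1u << nr;
}

/* Snapshot and clear the mask, then run handlers with interrupts on. */
void model_do_softirq(void)
{
    unsigned int pending = model_pending;

    model_pending = 0;
    model_irqs_enabled = 1;
    for (unsigned int nr = 0; pending; nr++, pending >>= 1)
        if ((pending & 1) && model_vec[nr])
            model_vec[nr]();
    model_irqs_enabled = 0;
}

/* Same shape as run_ksoftirqd(): check under disabled IRQs, then process. */
void model_run_ksoftirqd(void)
{
    model_irqs_enabled = 0;
    if (model_pending)
        model_do_softirq();
    model_irqs_enabled = 1;
}

/* Hypothetical softirq handler that records what it observed. */
void model_rx_action(void)
{
    runs++;
    ran_with_irqs_on = model_irqs_enabled;
}
```

After registering `model_rx_action` in slot 3, raising softirq 3 and calling `model_run_ksoftirqd()`, the handler has run once, it saw interrupts enabled, and the pending mask is empty again.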