
Container Cloud Platform Setup

1. Node Planning

| IP | Hostname | Role |
|---|---|---|
| 192.168.100.10 | Master | Kubernetes cluster |

2. Basic Environment Configuration

Download the installation ISO to the /root directory, mount it, and copy its contents to /opt:

[root@localhost ~]# mount -o loop chinaskills_cloud_paas_v2.0.2.iso /mnt/

mount: /dev/loop0 is write-protected, mounting read-only

[root@localhost ~]# cp -r /mnt/* /opt/

[root@localhost ~]# umount /mnt/

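1.1 Install kubeeasy

kubeeasy is a dedicated deployment tool for Kubernetes clusters that greatly simplifies the installation process. Its features include:

- Fully automated installation
- Cluster identification via DNS
- Self-healing: everything runs in auto-scaling groups
- Support for multiple operating systems (e.g. Debian, Ubuntu 16.04, CentOS 7, RHEL)
- High availability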

Install the kubeeasy tool on the master node:

[root@localhost ~]# mv /opt/kubeeasy /usr/bin/

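1.2 Install dependency packages

This step installs tools such as docker-ce, git, unzip, vim, and wget.

Run the following command on the master node to install the dependencies: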

[root@localhost ~]# kubeeasy install depend --host 192.168.100.10 --user root --password Abc@1234 --offline-file /opt/dependencies/base-rpms.tar.gz

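Parameters:

- --host: for a single-node setup, just the host's IP; for multiple nodes, separate the IPs with commas
- --password: login password of the hosts; all nodes must use the same password
- --offline-file: path to the offline installation package

Use tail -f /var/log/kubeinstall.log to follow the installation progress or troubleshoot errors.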
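For multi-node installs, --host takes a single comma-separated string. Below is a minimal bash sketch that builds it from a list of IPs. The helper name join_hosts and the second node IP in the usage comment are hypothetical; the kubeeasy flags are the ones shown above.

```bash
# Hypothetical helper: join node IPs into the comma-separated string
# accepted by kubeeasy's --host option.
join_hosts() {
  local IFS=,            # "$*" joins all arguments with the first character of IFS
  printf '%s\n' "$*"
}

# Usage (192.168.100.11 is a hypothetical second node):
#   kubeeasy install depend --host "$(join_hosts 192.168.100.10 192.168.100.11)" \
#     --user root --password Abc@1234 --offline-file /opt/dependencies/base-rpms.tar.gz
```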

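1.3 Configure passwordless SSH

(Not required for a single-node setup.)

When installing a multi-node Kubernetes cluster, passwordless login must be configured between the nodes so that files can be transferred and the nodes can communicate.

On the master node, run the following command to check connectivity between the cluster nodes (10.24.2.10 and 10.24.2.11 are the IPs of an example two-node cluster):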

[root@localhost ~]# kubeeasy check ssh \
--host 10.24.2.10,10.24.2.11 \
--user root \
--password Abc@1234

On the master node, run the following command to configure passwordless login between all cluster nodes:

[root@localhost ~]# kubeeasy create ssh-keygen \
--master 10.24.2.10 \
--worker 10.24.2.11 \
--user root --password Abc@1234

--master takes the IP of the master node; --worker takes the IPs of all worker nodes.
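Once the keys are distributed, you can confirm that login no longer prompts for a password. This is a minimal sketch, assuming the root user and the example IPs above. check_passwordless_ssh is a hypothetical helper built on standard OpenSSH options: BatchMode makes ssh fail instead of asking for a password.

```bash
# Hypothetical helper: verify key-based SSH login to each node as root.
# BatchMode=yes makes ssh fail instead of prompting for a password.
# Returns the number of hosts that failed (0 means all passed).
check_passwordless_ssh() {
  local failed=0 host
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=$((failed + 1))
    fi
  done
  return "$failed"
}

# Usage: check_passwordless_ssh 10.24.2.10 10.24.2.11
```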

Install the Kubernetes cluster

Run the following command on the master node to deploy the Kubernetes cluster:

[root@localhost ~]# kubeeasy install kubernetes --master 192.168.100.10 --user root --password Abc@1234 --version 1.22.1 --offline-file /opt/kubernetes.tar.gz

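Selected parameters:

- --master: IP of the master node
- --worker: IPs of the worker (Node) nodes; separate multiple IPs with commas
- --version: Kubernetes version; only 1.22.1 is supported here

Use tail -f /var/log/kubeinstall.log to follow the installation progress or troubleshoot errors.

After deployment, check the cluster status: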

[root@k8s-master-node1 ~]# kubectl cluster-info

Kubernetes control plane is running at https://apiserver.cluster.local:6443

CoreDNS is running at https://apiserver.cluster.local:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Check node resource usage:

[root@k8s-master-node1 ~]# kubectl top nodes --use-protocol-buffers

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master-node1 390m 3% 1967Mi 25%
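On a multi-node cluster, confirm that every node is Ready before going further. The hypothetical helper below is a minimal sketch. It filters the output of kubectl get nodes --no-headers and prints any node whose STATUS is not exactly Ready. A cordoned node shows Ready,SchedulingDisabled, so it is listed too.

```bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage: kubectl get nodes --no-headers | not_ready_nodes
```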

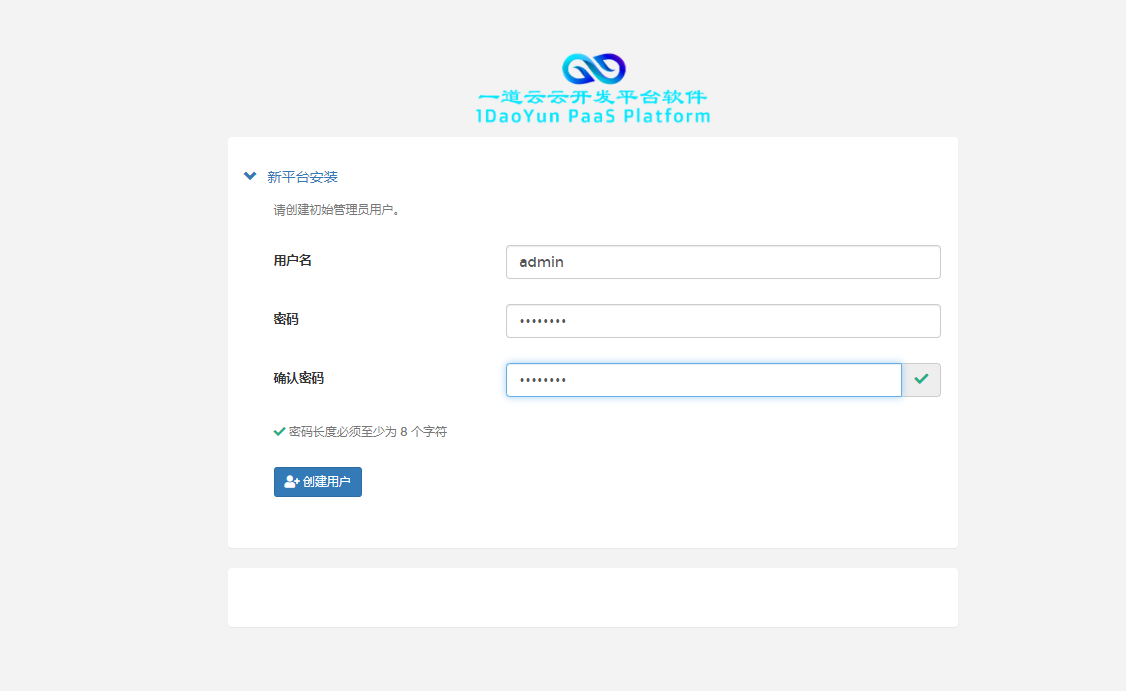

Log in to the 一道云 (Yidaoyun) cloud development platform:

http://master_ip:30080

Basic Exercise

Using the nginx image, create a Pod named exam in the default namespace and set its environment variable exam to 2022.

[root@k8s-master-node1 ~]# vi exam.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: exam
  namespace: default
spec:
  containers:
  - image: nginx:latest
    name: nginx
    imagePullPolicy: IfNotPresent
    env:
    - name: "exam"
      value: "2022"
```

[root@k8s-master-node1 ~]# kubectl apply -f exam.yaml

pod/exam created

[root@k8s-master-node1 ~]# kubectl get -f exam.yaml

NAME READY STATUS RESTARTS AGE

exam 1/1 Running 0 4m9s
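To confirm that the variable actually reached the container, read it back with kubectl exec. This is a sketch with a hypothetical helper name (check_pod_env). It assumes the image provides printenv, which the Debian-based nginx image does.

```bash
# Hypothetical helper: read an environment variable inside a running Pod
# with `kubectl exec ... printenv` and compare it with the expected value.
# Arguments: <pod> <variable> <expected-value> [namespace, default "default"]
check_pod_env() {
  local pod=$1 var=$2 expected=$3 ns=${4:-default} actual
  actual=$(kubectl -n "$ns" exec "$pod" -- printenv "$var") || return 1
  if [ "$actual" = "$expected" ]; then
    echo "OK: $var=$actual"
  else
    echo "MISMATCH: $var=$actual (expected $expected)"
    return 1
  fi
}

# Usage: check_pod_env exam exam 2022
```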

Install and Deploy Istio

The Istio version installed here is 1.12.0.

Run the following command on the master node to install the Istio service mesh:

[root@k8s-master-node1 ~]# kubeeasy add --istio istio

Check the Pods:

[root@k8s-master-node1 ~]# kubectl -n istio-system get pods

NAME READY STATUS RESTARTS AGE

grafana-6ccd56f4b6-8q8kg 1/1 Running 0 59s

istio-egressgateway-7f4864f59c-jbsbw 1/1 Running 0 74s

istio-ingressgateway-55d9fb9f-8sbd7 1/1 Running 0 74s

istiod-555d47cb65-hcl69 1/1 Running 0 78s

jaeger-5d44bc5c5d-b9cq7 1/1 Running 0 58s

kiali-9f9596d69-ssn57 1/1 Running 0 58s

prometheus-64fd8ccd65-xdrzz 2/2 Running 0 58s

Check the Istio version:

[root@k8s-master-node1 ~]# istioctl version

client version: 1.12.0

control plane version: 1.12.0

data plane version: 1.12.0 (2 proxies)
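The pods may need a minute or two to become ready after kubeeasy returns. The following small sketch blocks until they are, using the standard kubectl wait command. The wrapper name and the 300s default timeout are assumptions.

```bash
# Hypothetical wrapper around the standard `kubectl wait` command: block until
# every Pod in the namespace has condition Ready, or fail after the timeout.
# Pods of completed Jobs never become Ready, so use it only where none exist.
wait_ns_ready() {
  local ns=$1 timeout=${2:-300s}
  kubectl -n "$ns" wait --for=condition=Ready pod --all --timeout="$timeout"
}

# Usage: wait_ns_ready istio-system && istioctl version
```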

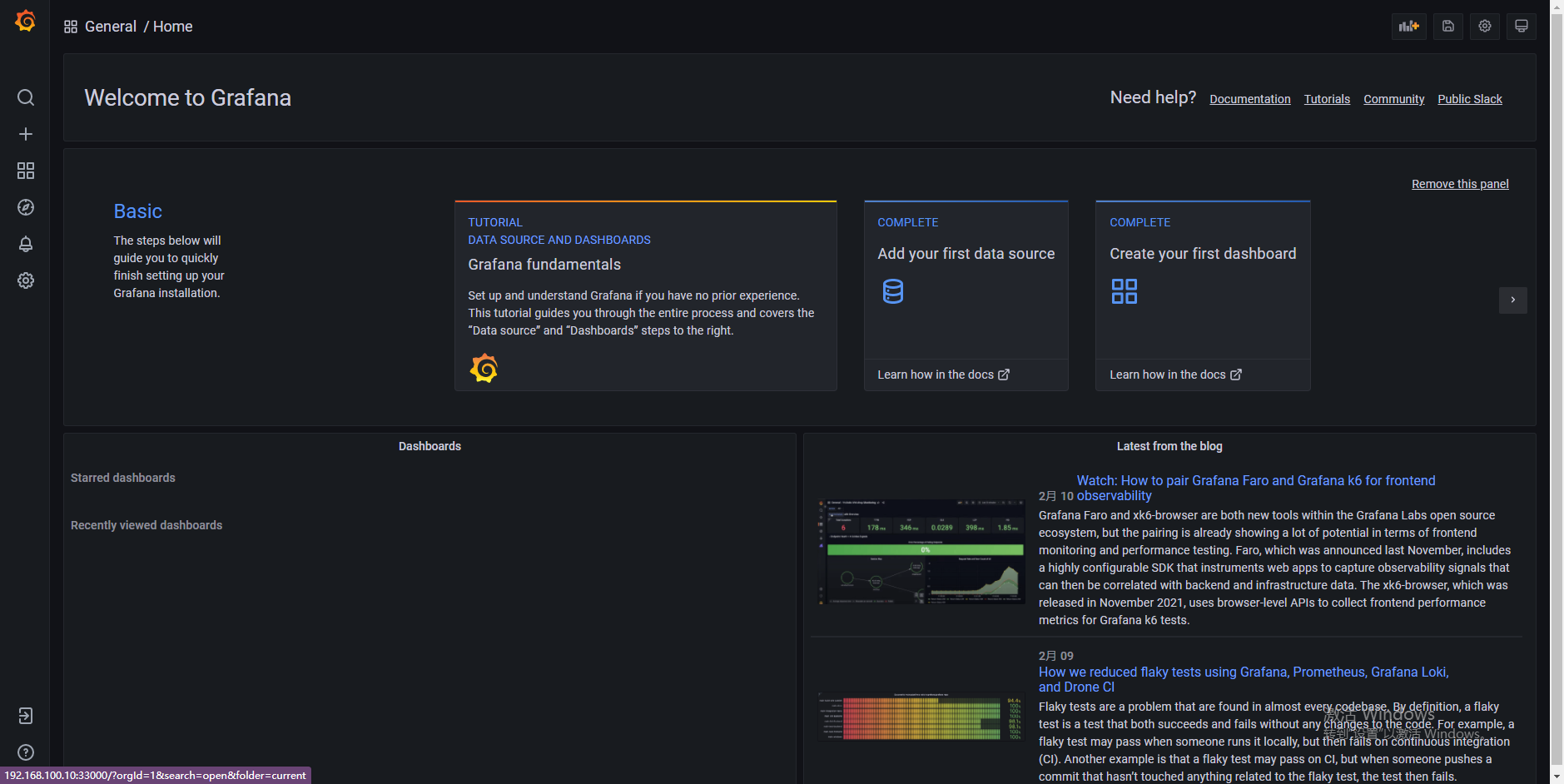

Accessing the Istio dashboards

Grafana:

http://master_ip:33000

Kiali:

http://master_ip:20001

Other available dashboards:

http://master_ip:30090

http://master_ip:30686
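A quick way to see which dashboards answer is to request each port with curl. This sketch uses the ports listed above. Ports 30090 and 30686 are left unnamed because the guide does not say which services they expose. The helper name is hypothetical.

```bash
# Hypothetical helper: print each dashboard URL used in this guide together with
# the HTTP status code it returns (000 means no answer within 5 seconds).
# 33000 = Grafana, 20001 = Kiali; 30090 and 30686 are listed without names
# because the guide does not say which services they expose.
istio_dashboards() {
  local ip=$1 port code
  for port in 33000 20001 30090 30686; do
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$ip:$port/" || true)
    echo "http://$ip:$port $code"
  done
}

# Usage: istio_dashboards 192.168.100.10
```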

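3.3 Basic istioctl usage

istioctl is used to create, list, modify, and delete configuration resources in an Istio system.

The available routing and traffic-management configuration types are: virtualservice, gateway, destinationrule, serviceentry, httpapispecbinding, quotaspec, quotaspecbinding, servicerole, servicerolebinding, and policy. Several of these (such as quotaspec, servicerole, and policy) come from older Istio releases and no longer exist in Istio 1.12.

List the names of the Istio configuration profiles that istioctl can access: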

[root@k8s-master-node1 ~]# istioctl profile list

Istio configuration profiles:

default

demo

empty

external

minimal

openshift

preview

remote

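#default: enables components according to the default settings of the IstioOperator API; recommended for production

#demo: deploys additional components to showcase Istio features

#minimal: similar to default, but deploys only the control plane

#remote: used to configure a multi-cluster mesh with a shared control plane

#empty: deploys nothing; typically used as a base configuration when building a custom profile

#preview: includes preview features for exploring new functionality; stability, security, and performance are not guaranteed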
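Profiles are selected at installation time with istioctl install --set profile=<name>. The environment in this guide was installed by kubeeasy, so the following is only an illustration: a hypothetical wrapper that rejects names missing from the profile list above before it calls istioctl. Running it against the existing mesh would reconfigure the installed control plane.

```bash
# Hypothetical wrapper: reject names that are not in the `istioctl profile list`
# output shown above (Istio 1.12.0), then install the chosen profile.
# WARNING: running this reconfigures the Istio control plane in the cluster.
istio_install_profile() {
  case "$1" in
    default|demo|empty|external|minimal|openshift|preview|remote) ;;
    *) echo "unknown profile: $1" >&2; return 2 ;;
  esac
  istioctl install --set profile="$1" -y
}

# Usage: istio_install_profile demo
```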

Show the configuration of a profile:

[root@k8s-master-node1 ~]# istioctl profile dump demo

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  components:
    base:
      enabled: true
    cni:
      enabled: false
    egressGateways:
    - enabled: true
      k8s:
        resources:
          requests:
            cpu: 10m
            memory: 40Mi
      name: istio-egressgateway
    ingressGateways:
    - enabled: true
      k8s:
        resources:
          requests:
            cpu: 10m
            memory: 40Mi
        service:
          ports:
          - name: status-port
            port: 15021
            targetPort: 15021
          - name: http2
            port: 80
            targetPort: 8080
          - name: https
            port: 443
            targetPort: 8443
          - name: tcp
            port: 31400
            targetPort: 31400
          - name: tls
            port: 15443
            targetPort: 15443
      name: istio-ingressgateway
    istiodRemote:
      enabled: false
    pilot:
      enabled: true
      k8s:
        env:
        - name: PILOT_TRACE_SAMPLING
          value: "100"
        resources:
          requests:
            cpu: 10m
            memory: 100Mi
  hub: docker.io/istio
  meshConfig:
    accessLogFile: /dev/stdout
    defaultConfig:
      proxyMetadata: {}
    enablePrometheusMerge: true
  profile: demo
  tag: 1.12.0
  values:
    base:
      enableCRDTemplates: false
      validationURL: ""
    defaultRevision: ""
    gateways:
      istio-egressgateway:
        autoscaleEnabled: false
        env: {}
        name: istio-egressgateway
        secretVolumes:
        - mountPath: /etc/istio/egressgateway-certs
          name: egressgateway-certs
          secretName: istio-egressgateway-certs
        - mountPath: /etc/istio/egressgateway-ca-certs
          name: egressgateway-ca-certs
          secretName: istio-egressgateway-ca-certs
        type: ClusterIP
      istio-ingressgateway:
        autoscaleEnabled: false
        env: {}
        name: istio-ingressgateway
        secretVolumes:
        - mountPath: /etc/istio/ingressgateway-certs
          name: ingressgateway-certs
          secretName: istio-ingressgateway-certs
        - mountPath: /etc/istio/ingressgateway-ca-certs
          name: ingressgateway-ca-certs
          secretName: istio-ingressgateway-ca-certs
        type: LoadBalancer
    global:
      configValidation: true
      defaultNodeSelector: {}
      defaultPodDisruptionBudget:
        enabled: true
      defaultResources:
        requests:
          cpu: 10m
      imagePullPolicy: ""
      imagePullSecrets: []
      istioNamespace: istio-system
      istiod:
        enableAnalysis: false
      jwtPolicy: third-party-jwt
      logAsJson: false
      logging:
        level: default:info
      meshNetworks: {}
      mountMtlsCerts: false
      multiCluster:
        clusterName: ""
        enabled: false
      network: ""
      omitSidecarInjectorConfigMap: false
      oneNamespace: false
      operatorManageWebhooks: false
      pilotCertProvider: istiod
      priorityClassName: ""
      proxy:
        autoInject: enabled
        clusterDomain: cluster.local
        componentLogLevel: misc:error
        enableCoreDump: false
        excludeIPRanges: ""
        excludeInboundPorts: ""
        excludeOutboundPorts: ""
        image: proxyv2
        includeIPRanges: '*'
        logLevel: warning
        privileged: false
        readinessFailureThreshold: 30
        readinessInitialDelaySeconds: 1
        readinessPeriodSeconds: 2
        resources:
          limits:
            cpu: 2000m
            memory: 1024Mi
          requests:
            cpu: 10m
            memory: 40Mi
        statusPort: 15020
        tracer: zipkin
      proxy_init:
        image: proxyv2
        resources:
          limits:
            cpu: 2000m
            memory: 1024Mi
          requests:
            cpu: 10m
            memory: 10Mi
      sds:
        token:
          aud: istio-ca
      sts:
        servicePort: 0
      tracer:
        datadog: {}
        lightstep: {}
        stackdriver: {}
        zipkin: {}
      useMCP: false
    istiodRemote:
      injectionURL: ""
    pilot:
      autoscaleEnabled: false
      autoscaleMax: 5
      autoscaleMin: 1
      configMap: true
      cpu:
        targetAverageUtilization: 80
      enableProtocolSniffingForInbound: true
      enableProtocolSniffingForOutbound: true
      env: {}
      image: pilot
      keepaliveMaxServerConnectionAge: 30m
      nodeSelector: {}
      podLabels: {}
      replicaCount: 1
      traceSampling: 1
    telemetry:
      enabled: true
      v2:
        enabled: true
        metadataExchange:
          wasmEnabled: false
        prometheus:
          enabled: true
          wasmEnabled: false
        stackdriver:
          configOverride: {}
          enabled: false
          logging: false
          monitoring: false
          topology: false
```

Show the differences between two profiles:

[root@k8s-master-node1 ~]# istioctl profile diff default demo

The difference between profiles:

```diff
 apiVersion: install.istio.io/v1alpha1
 kind: IstioOperator
 metadata:
   creationTimestamp: null
   namespace: istio-system
 spec:
   components:
     base:
       enabled: true
     cni:
       enabled: false
     egressGateways:
-    - enabled: false
+    - enabled: true
+      k8s:
+        resources:
+          requests:
+            cpu: 10m
+            memory: 40Mi
       name: istio-egressgateway
     ingressGateways:
     - enabled: true
+      k8s:
+        resources:
+          requests:
+            cpu: 10m
+            memory: 40Mi
+        service:
+          ports:
+          - name: status-port
+            port: 15021
+            targetPort: 15021
+          - name: http2
+            port: 80
+            targetPort: 8080
+          - name: https
+            port: 443
+            targetPort: 8443
+          - name: tcp
+            port: 31400
+            targetPort: 31400
+          - name: tls
+            port: 15443
+            targetPort: 15443
       name: istio-ingressgateway
     istiodRemote:
       enabled: false
     pilot:
       enabled: true
+      k8s:
+        env:
+        - name: PILOT_TRACE_SAMPLING
+          value: "100"
+        resources:
+          requests:
+            cpu: 10m
+            memory: 100Mi
   hub: docker.io/istio
   meshConfig:
+    accessLogFile: /dev/stdout
     defaultConfig:
       proxyMetadata: {}
     enablePrometheusMerge: true
   profile: default
   tag: 1.12.0
   values:
     base:
       enableCRDTemplates: false
       validationURL: ""
     defaultRevision: ""
     gateways:
       istio-egressgateway:
-        autoscaleEnabled: true
+        autoscaleEnabled: false
         env: {}
         name: istio-egressgateway
         secretVolumes:
         - mountPath: /etc/istio/egressgateway-certs
           name: egressgateway-certs
           secretName: istio-egressgateway-certs
         - mountPath: /etc/istio/egressgateway-ca-certs
           name: egressgateway-ca-certs
           secretName: istio-egressgateway-ca-certs
         type: ClusterIP
       istio-ingressgateway:
-        autoscaleEnabled: true
+        autoscaleEnabled: false
         env: {}
         name: istio-ingressgateway
         secretVolumes:
         - mountPath: /etc/istio/ingressgateway-certs
           name: ingressgateway-certs
           secretName: istio-ingressgateway-certs
         - mountPath: /etc/istio/ingressgateway-ca-certs
           name: ingressgateway-ca-certs
           secretName: istio-ingressgateway-ca-certs
         type: LoadBalancer
     global:
       configValidation: true
       defaultNodeSelector: {}
       defaultPodDisruptionBudget:
         enabled: true
       defaultResources:
         requests:
           cpu: 10m
       imagePullPolicy: ""
       imagePullSecrets: []
       istioNamespace: istio-system
       istiod:
         enableAnalysis: false
       jwtPolicy: third-party-jwt
       logAsJson: false
       logging:
         level: default:info
       meshNetworks: {}
       mountMtlsCerts: false
       multiCluster:
         clusterName: ""
         enabled: false
       network: ""
       omitSidecarInjectorConfigMap: false
       oneNamespace: false
       operatorManageWebhooks: false
       pilotCertProvider: istiod
       priorityClassName: ""
       proxy:
         autoInject: enabled
         clusterDomain: cluster.local
         componentLogLevel: misc:error
         enableCoreDump: false
         excludeIPRanges: ""
         excludeInboundPorts: ""
         excludeOutboundPorts: ""
         image: proxyv2
         includeIPRanges: '*'
         logLevel: warning
         privileged: false
         readinessFailureThreshold: 30
         readinessInitialDelaySeconds: 1
         readinessPeriodSeconds: 2
         resources:
           limits:
             cpu: 2000m
             memory: 1024Mi
           requests:
-            cpu: 100m
-            memory: 128Mi
+            cpu: 10m
+            memory: 40Mi
         statusPort: 15020
         tracer: zipkin
       proxy_init:
         image: proxyv2
         resources:
           limits:
             cpu: 2000m
             memory: 1024Mi
           requests:
             cpu: 10m
             memory: 10Mi
       sds:
         token:
           aud: istio-ca
       sts:
         servicePort: 0
       tracer:
         datadog: {}
         lightstep: {}
         stackdriver: {}
         zipkin: {}
       useMCP: false
     istiodRemote:
       injectionURL: ""
     pilot:
-      autoscaleEnabled: true
+      autoscaleEnabled: false
       autoscaleMax: 5
       autoscaleMin: 1
       configMap: true
       cpu:
         targetAverageUtilization: 80
       deploymentLabels: null
       enableProtocolSniffingForInbound: true
       enableProtocolSniffingForOutbound: true
       env: {}
       image: pilot
       keepaliveMaxServerConnectionAge: 30m
       nodeSelector: {}
       podLabels: {}
       replicaCount: 1
       traceSampling: 1
     telemetry:
       enabled: true
       v2:
         enabled: true
         metadataExchange:
           wasmEnabled: false
         prometheus:
           enabled: true
           wasmEnabled: false
         stackdriver:
           configOverride: {}
           enabled: false
           logging: false
           monitoring: false
           topology: false
```

Use proxy-status (short form: ps) to get an overview of the service mesh:

[root@k8s-master-node1 ~]# istioctl proxy-status

NAME CDS LDS EDS RDS ISTIOD VERSION

istio-egressgateway-7f4864f59c-ps7cs.istio-system SYNCED SYNCED SYNCED NOT SENT istiod-555d47cb65-z9m58 1.12.0

istio-ingressgateway-55d9fb9f-bwzsl.istio-system SYNCED SYNCED SYNCED NOT SENT istiod-555d47cb65-z9m58 1.12.0

[root@k8s-master-node1 ~]# istioctl ps

NAME CDS LDS EDS RDS ISTIOD VERSION

istio-egressgateway-7f4864f59c-ps7cs.istio-system SYNCED SYNCED SYNCED NOT SENT istiod-555d47cb65-z9m58 1.12.0

istio-ingressgateway-55d9fb9f-bwzsl.istio-system SYNCED SYNCED SYNCED NOT SENT istiod-555d47cb65-z9m58 1.12.0

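If a proxy is missing from this list, it is not currently connected to a Pilot (istiod) instance and therefore cannot receive any configuration.

If a proxy is marked STALE, there is either a networking problem or Pilot needs to be scaled out.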
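With many proxies, scanning the table by eye is error-prone. The sketch below uses a hypothetical helper, stale_proxies. It reads proxy-status output on stdin and prints the name of each proxy that has a STALE column. It skips the header line and assumes the column layout shown above.

```bash
# Hypothetical helper: read `istioctl proxy-status` output on stdin, skip the
# header line, and print the NAME of every proxy with a STALE column.
stale_proxies() {
  awk 'NR > 1 && /STALE/ { print $1 }'
}

# Usage: istioctl proxy-status | stale_proxies
```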

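Istio lets you retrieve a proxy's configuration with the proxy-config (short form: pc) command.

Retrieve the cluster configuration of the Envoy instance in a specific Pod:

# istioctl proxy-config cluster <pod-name> [flags]

The available proxy-config subcommands are:

all #Retrieve all configuration of the Envoy in the specified pod

bootstrap #Retrieve the bootstrap configuration of the Envoy in the specified pod

cluster #Retrieve the cluster configuration of the Envoy in the specified pod

endpoint #Retrieve the endpoint configuration of the Envoy in the specified pod

listener #Retrieve the listener configuration of the Envoy in the specified pod

log #(Experimental) Retrieve the log level of the Envoy in the specified pod

rootca #Compare the root CA values of two given pods

route #Retrieve the route configuration of the Envoy in the specified pod

secret #Retrieve the secret configuration of the Envoy in the specified pod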
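When debugging a single Pod, it helps to capture several views at once. This is a minimal sketch, assuming the -o json output flag of istioctl proxy-config. The helper name and the output directory layout are hypothetical.

```bash
# Hypothetical helper: save the cluster, listener, route and endpoint views of
# one Pod's Envoy configuration as JSON files for offline inspection.
# Arguments: <pod-name> <namespace> [output-dir, default ./envoy-<pod-name>]
dump_envoy_config() {
  local pod=$1 ns=$2 dir=${3:-./envoy-$1} view
  mkdir -p "$dir" || return 1
  for view in cluster listener route endpoint; do
    istioctl proxy-config "$view" "$pod" -n "$ns" -o json > "$dir/$view.json" || return 1
  done
  echo "$dir"
}

# Usage: dump_envoy_config istio-ingressgateway-55d9fb9f-bwzsl istio-system
```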

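Basic Exercise

After installing the Istio service mesh on the Kubernetes cluster, create a namespace named exam and enable automatic sidecar injection for it (injection can also be configured through the configuration file instead).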

[root@k8s-master-node1 ~]# kubectl create namespace exam

namespace/exam created

[root@k8s-master-node1 ~]# kubectl label namespace exam istio-injection=enabled

namespace/exam labeled

On the master node, verify the result with kubectl -n istio-system get all and kubectl get ns exam --show-labels:

[root@k8s-master-node1 ~]# kubectl -n istio-system get all

NAME READY STATUS RESTARTS AGE

pod/grafana-6ccd56f4b6-dz9gx 1/1 Running 0 28m

pod/istio-egressgateway-7f4864f59c-ps7cs 1/1 Running 0 29m

pod/istio-ingressgateway-55d9fb9f-bwzsl 1/1 Running 0 29m

pod/istiod-555d47cb65-z9m58 1/1 Running 0 29m

pod/jaeger-5d44bc5c5d-5vmw8 1/1 Running 0 28m

pod/kiali-9f9596d69-qkb6f 1/1 Running 0 28m

pod/prometheus-64fd8ccd65-s92vb 2/2 Running 0 28m

.....

[root@k8s-master-node1 ~]# kubectl get ns exam --show-labels

NAME STATUS AGE LABELS

exam Active 7m52s istio-injection=enabled,kubernetes.io/metadata.name=exam
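The label only affects Pods created after it is set. One way to confirm that injection works is to start a throwaway Pod in exam and check that its container list includes istio-proxy. The helper name and the test Pod name web are hypothetical.

```bash
# Hypothetical helper: report whether a Pod received the Envoy sidecar,
# i.e. whether its container list contains "istio-proxy".
# Returns 0 if injected, 1 if not, 2 if the Pod could not be read.
has_sidecar() {
  local ns=$1 pod=$2 names
  names=$(kubectl -n "$ns" get pod "$pod" -o jsonpath='{.spec.containers[*].name}') || return 2
  case " $names " in
    *" istio-proxy "*) echo "$pod: sidecar injected"; return 0 ;;
    *) echo "$pod: no sidecar ($names)"; return 1 ;;
  esac
}

# Usage (the test Pod "web" is hypothetical):
#   kubectl -n exam run web --image=nginx
#   has_sidecar exam web
```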

Install and Deploy KubeVirt

Install KubeVirt on the master node:

[root@k8s-master-node1 ~]# kubeeasy add --virt kubevirt

Check the Pods:

[root@k8s-master-node1 ~]# kubectl -n kubevirt get pod

NAME READY STATUS RESTARTS AGE

virt-api-86f9d6d4f-2mntr 1/1 Running 0 89s

virt-api-86f9d6d4f-vf8vc 1/1 Running 0 89s

virt-controller-54b79f5db-4xp4t 1/1 Running 0 64s

virt-controller-54b79f5db-kq4wj 1/1 Running 0 64s

virt-handler-gxtv6 1/1 Running 0 64s

virt-operator-6fbd74566c-nnrdn 1/1 Running 0 119s

virt-operator-6fbd74566c-wblgx 1/1 Running 0 119s

Basic usage

Create a VMI:

[root@k8s-master-node1 ~]# kubectl create -f vmi.yaml

List VMIs:

[root@k8s-master-node1 ~]# kubectl get vmis

Delete a VMI:

[root@k8s-master-node1 ~]# kubectl delete vmis <vmi-name>
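The original does not show the contents of vmi.yaml. The following sketch generates a minimal VirtualMachineInstance. It assumes three things:

- the kubevirt.io/v1 API (older KubeVirt releases use kubevirt.io/v1alpha3)
- access to the public quay.io/kubevirt/cirros-container-disk-demo image, which is not guaranteed in an offline environment
- a hypothetical default name, testvmi

```bash
# Hypothetical generator for vmi.yaml: a small VirtualMachineInstance that boots
# the CirrOS demo container disk published by the KubeVirt project.
# Arguments: [name, default testvmi] [memory, default 128Mi] (both illustrative)
gen_vmi_manifest() {
  local name=${1:-testvmi} memory=${2:-128Mi}
  cat <<EOF
apiVersion: kubevirt.io/v1
kind: VirtualMachineInstance
metadata:
  name: $name
spec:
  domain:
    devices:
      disks:
      - name: containerdisk
        disk:
          bus: virtio
    resources:
      requests:
        memory: $memory
  volumes:
  - name: containerdisk
    containerDisk:
      image: quay.io/kubevirt/cirros-container-disk-demo
EOF
}

# Usage: gen_vmi_manifest testvmi > vmi.yaml && kubectl create -f vmi.yaml
```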

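virtctl tool

virtctl is the kubectl-like command-line tool that ships with KubeVirt. It manages virtual machines directly and can start, stop, and restart them. The start, stop, and restart commands act on VirtualMachine (VM) objects. A bare VMI created from vmi.yaml is removed with kubectl delete instead.

# Start a virtual machine

virtctl start <vm-name>

# Stop a virtual machine

virtctl stop <vm-name>

# Restart a virtual machine

virtctl restart <vm-name>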

Basic Exercise

After installing the KubeVirt virtualization environment on the Kubernetes cluster, verify it on the master node with kubectl -n kubevirt get deployment:

[root@k8s-master-node1 ~]# kubectl -n kubevirt get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

virt-api 2/2 2 2 14m

virt-controller 2/2 2 2 14m

virt-operator 2/2 2 2 15m

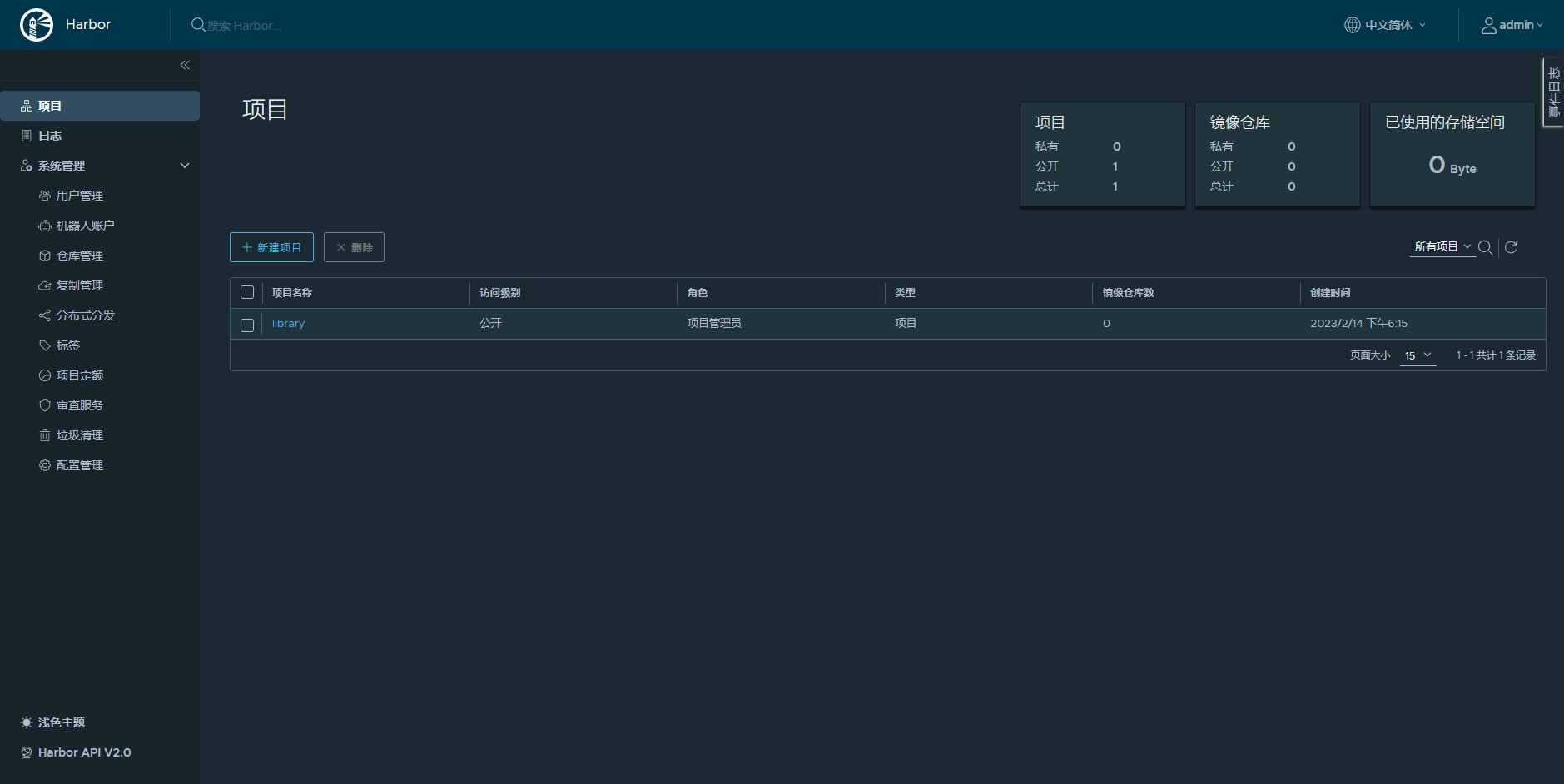

Install and Deploy the Harbor Registry

Run the following command to install the Harbor registry:

[root@k8s-master-node1 ~]# kubeeasy add --registry harbor

After deployment, check the status of Harbor:

[root@k8s-master-node1 ~]# systemctl status harbor

● harbor.service - Harbor

Loaded: loaded (/usr/lib/systemd/system/harbor.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2023-02-14 18:16:05 CST; 10s ago

Docs: http://github.com/vmware/harbor

Main PID: 35753 (docker-compose)

Tasks: 14

Memory: 8.2M

CGroup: /system.slice/harbor.service

└─35753 /usr/local/bin/docker-compose -f /opt/harbor/docker-compose.yml up

Feb 14 18:16:05 k8s-master-node1 docker-compose[35753]: Container redis Running

Feb 14 18:16:05 k8s-master-node1 docker-compose[35753]: Container harbor-portal Running

Feb 14 18:16:05 k8s-master-node1 docker-compose[35753]: Container registry Running

Feb 14 18:16:05 k8s-master-node1 docker-compose[35753]: Container harbor-core Running

Feb 14 18:16:05 k8s-master-node1 docker-compose[35753]: Container nginx Running

Feb 14 18:16:05 k8s-master-node1 docker-compose[35753]: Container harbor-jobservice Running

Feb 14 18:16:05 k8s-master-node1 docker-compose[35753]: Attaching to harbor-core, harbor-db, harbor-jobservice, harbor-log, harbor-portal, nginx, redis, regist...gistryctl

Feb 14 18:16:06 k8s-master-node1 docker-compose[35753]: registry | 172.18.0.8 - - [14/Feb/2023:10:16:06 +0000] "GET / HTTP/1.1" 200 0 "" "Go-http-client/1.1"

Feb 14 18:16:06 k8s-master-node1 docker-compose[35753]: registryctl | 172.18.0.8 - - [14/Feb/2023:10:16:06 +0000] "GET /api/health HTTP/1.1" 200 9

Feb 14 18:16:06 k8s-master-node1 docker-compose[35753]: harbor-portal | 172.18.0.8 - - [14/Feb/2023:10:16:06 +0000] "GET / HTTP/1.1" 200 532 "-" "Go-http-client/1.1"

Hint: Some lines were ellipsized, use -l to show in full.

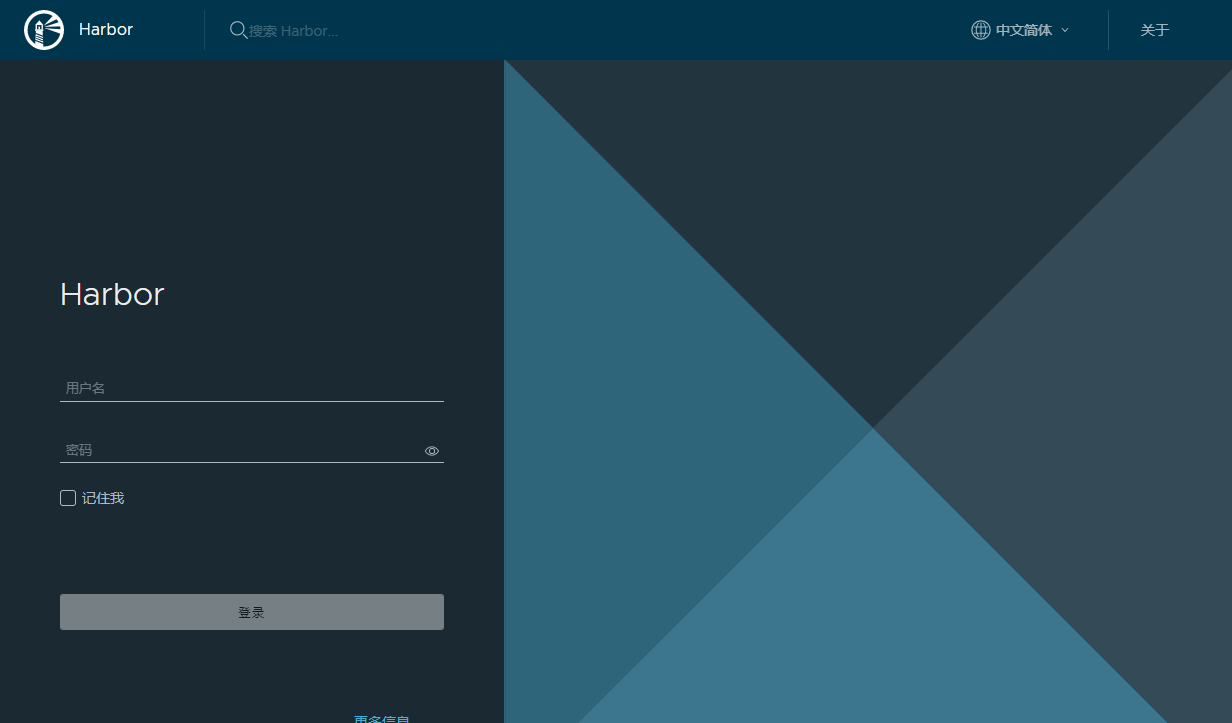

Access Harbor from a browser at http://master_ip.

Log in to Harbor with the administrator account (admin/Harbor12345).
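To push images, log in with docker login master_ip and tag images into a Harbor project. The sketch below makes two assumptions:

- images go to Harbor's default public project, library
- the Docker daemon already accepts this plain-HTTP registry, for example through insecure-registries in /etc/docker/daemon.json (kubeeasy may or may not configure this)

push_to_harbor is a hypothetical helper.

```bash
# Hypothetical helper: retag a local image into Harbor's default public
# project "library" and push it. Assumes `docker login <registry>` succeeded
# and that the Docker daemon trusts the plain-HTTP registry.
# Arguments: <registry-host> <local-image>
push_to_harbor() {
  local registry=$1 image=$2 target
  target="$registry/library/${image##*/}"   # drop any existing registry/namespace prefix
  docker tag "$image" "$target" && docker push "$target" && echo "$target"
}

# Usage: push_to_harbor 192.168.100.10 nginx:latest
```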

Common helm commands

Check the version:

[root@k8s-master-node1 ~]# helm version

version.BuildInfo{Version:"v3.7.1", GitCommit:"1d11fcb5d3f3bf00dbe6fe31b8412839a96b3dc4", GitTreeState:"clean", GoVersion:"go1.16.9"}

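List the installed releases:

[root@k8s-master-node1 ~]# helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

Search for charts (Helm 3 requires the repo or hub subcommand):

# helm search repo <keyword>

Show the status of a release:

# helm status RELEASE_NAME

Create a chart:

# helm create helm_charts

Delete a release (in Helm 3, helm delete is an alias of helm uninstall):

# helm delete RELEASE_NAME

Package a chart:

# cd helm_charts && helm package ./

Render the chart's templates into Kubernetes YAML locally, without installing anything: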

# helm template helm_charts-xxx.tgz
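Before installing a chart, the commands above can be chained into one check. This minimal sketch uses a hypothetical helper name. It lints the chart directory, renders it locally with a release name into a temporary file, and then packages it. Nothing is installed on the cluster.

```bash
# Hypothetical helper: lint a chart, render it locally (no cluster changes)
# into a temporary file, then package it into a .tgz archive.
# Arguments: <chart-dir> [release-name, default "web"]
helm_check_and_package() {
  local chart=$1 release=${2:-web} rendered
  rendered=$(mktemp) || return 1
  helm lint "$chart" || return 1
  helm template "$release" "$chart" > "$rendered" || return 1
  echo "rendered manifests: $rendered"
  helm package "$chart"
}

# Usage: helm_check_and_package mychart web
```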

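Basic Exercise

Deploy the Harbor image registry and the Helm package manager on the master node.

Using the nginx image, create a custom chart whose Deployment is named nginx with 1 replica. Then deploy the chart to the default namespace with the release name web.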

[root@k8s-master-node1 ~]# helm create mychart

Creating mych

[root@k8s-master-node1 ~]# rm -rf mychart/templates/*

[root@k8s-master-node1 ~]# kubectl create deployment nginx --image=nginx --dry-run=client -o yaml > mychart/templates/deployment.yaml

[root@k8s-master-node1 ~]# vi mychart/templates/deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
status: {}
```

[root@k8s-master-node1 ~]# helm install web mychart

NAME: web

LAST DEPLOYED: Tue Feb 14 18:43:57 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

Verify on the master node with helm status web:

[root@k8s-master-node1 ~]# helm status web

NAME: web

LAST DEPLOYED: Tue Feb 14 18:43:57 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

Reset the Cluster

If the cluster deployment fails or the cluster becomes faulty and must be redeployed, reset it first:

[root@k8s-master-node1 ~]# kubeeasy reset