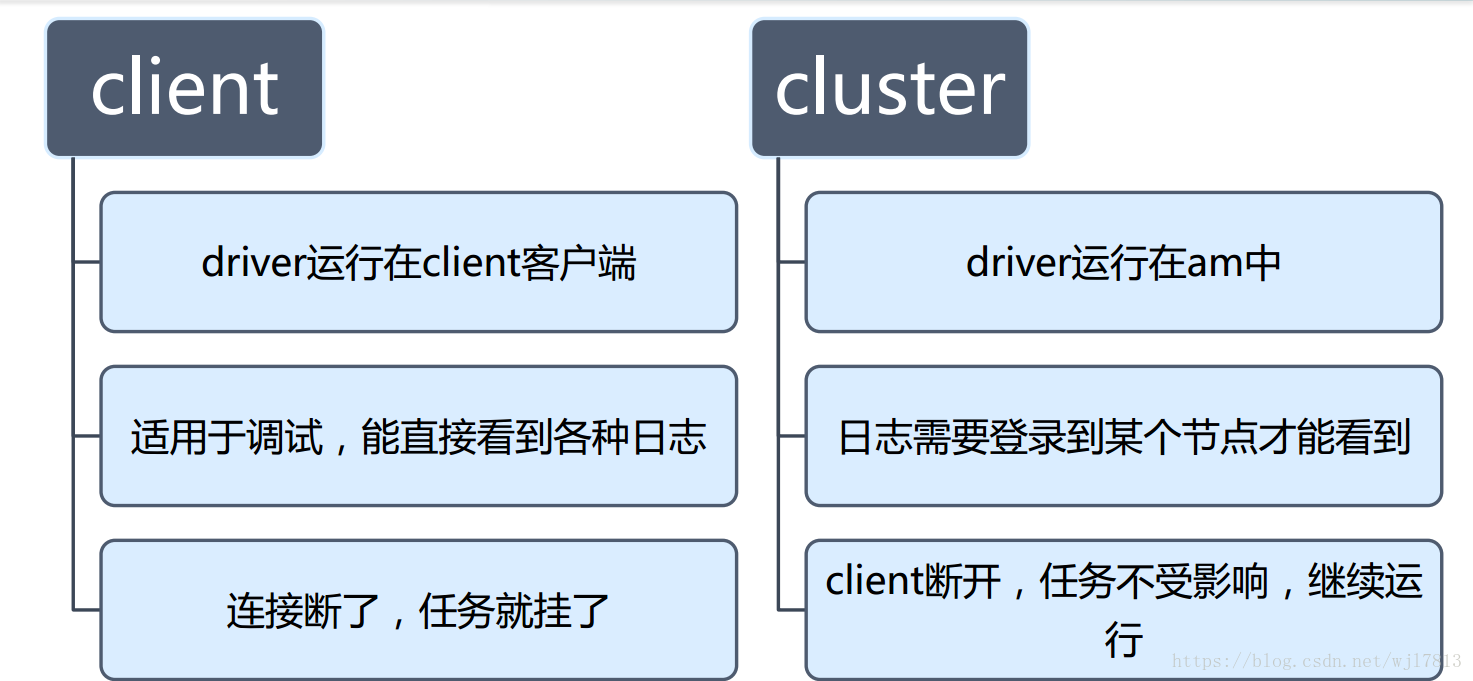

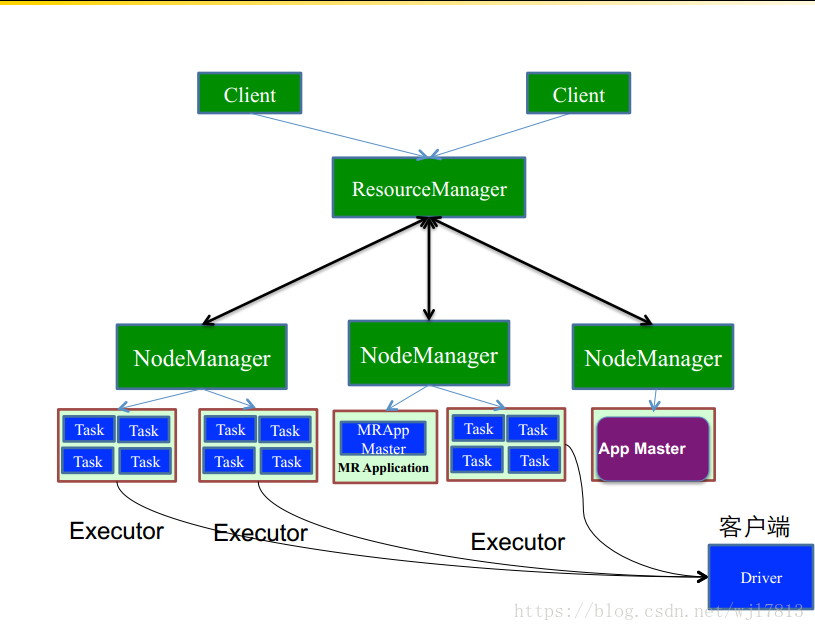

YARN-Cluster和YARN-Client的区别

(2)而Driver会和Executors进行通信,这也导致了Yarn_cluster在提交App之后可以关闭Client,而Yarn-Client不可以;

(3)最后再来说应用场景,Yarn-Cluster适合生产环境,Yarn-Client适合交互和调试。

============== yarn-client 模式 ==================

如果是yarn-client 模式的话 ,把 客户端关掉的话 ,是不能提交任务的

idea 创建new project 并编写 scala 程序

package com.wjl7813

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

/**

* Created by joy on 2017/7/29.

*/

object SparkWordCount {

def main(args: Array[String]): Unit = {

val path = "hdfs://192.168.137.251:8020/data/emp.txt"

val savepath = "hdfs://192.168.137.251:8020/data/sparkwordcount/${System.currentTimeMillis()}"

// 1. 创建sparkconf 上下文

val conf = new SparkConf()

.setMaster("local[2]")

.setAppName("wordcount")

val sc = new SparkContext(conf)

// 2. 数据读取---->> 形成RDD

// alt +enter

val rdd:RDD[String] = sc.textFile(path)

// 3 数据处理--->> RDD API 调用

val wordCountRDD = rdd

.flatMap(line => line.split(" "))

.map(word => (word,1))

.reduceByKey(_ + _)

// 4 结果保存--->>> RDD 数据输出

wordCountRDD.foreachPartition(iter => iter.foreach(println))

wordCountRDD.saveAsTextFile(savepath)

}

}

[hadoop@node1 ~]$ spark-shell

--master yarn \

--jars /home/hadoop/mysql-connector-java-5.1.40-bin.jar

--num-executors 3 \

--executor-memory 512m \

--driver-memory 512m \

--executor-cores 1

[hadoop@node1 ~]$ spark-shell \

--master yarn \

--jars $HIVE_HOME/lib/mysql-connector-java-5.1.40-bin.jar \

--num-executors 3 \

--executor-memory 512m \

--driver-memory 512m \

--executor-cores 1

spark-submit \

--master yarn-client \

--class com.wjl7813.SparkWordCount /home/hadoop/sparkapp.jar \

--num-executors 3 \

--executor-memory 512m \

--driver-memory 512m \

--executor-cores 1

如果是yarn-cluster 模式的话, client 关闭 是可以提交任务的

yarn-cluster 不支持spark-shell /spark-sql

======================= yarn cluster =

spark-shell --master yarn-cluster \

--jars \

--num-executors 3 \

--executor-memory 512m \

--driver-memory 512m \

--executor-cores 1

上面的spark-shell 不能用交互式 ,交互式模式 只用 yarn-client 模式下 ,spark-submit 不存在这种情况

spark-submit \

--class org.apache.spark.examples.Spark.Pi \

--master yarn-cluster \

--executor-memory 1G \

/home/hadoop/app/spark-1.6.1-bin-2.6.0-cdh5.7.0/lib/spark-examples-1.6.1-hadoop2.6.0-cdh5.7.0.jar \

3

spark-submit --class com.wjl7813.SparkWordCount /home/hadoop/sparkapp.jar

spark-submit --master yarn-client --class com.wjl7813.SparkWordCount /home/hadoop/sparkapp.jar

spark-submit --master yarn-cluster --class com.wjl7813.SparkWordCount /home/hadoop/sparkapp.jar