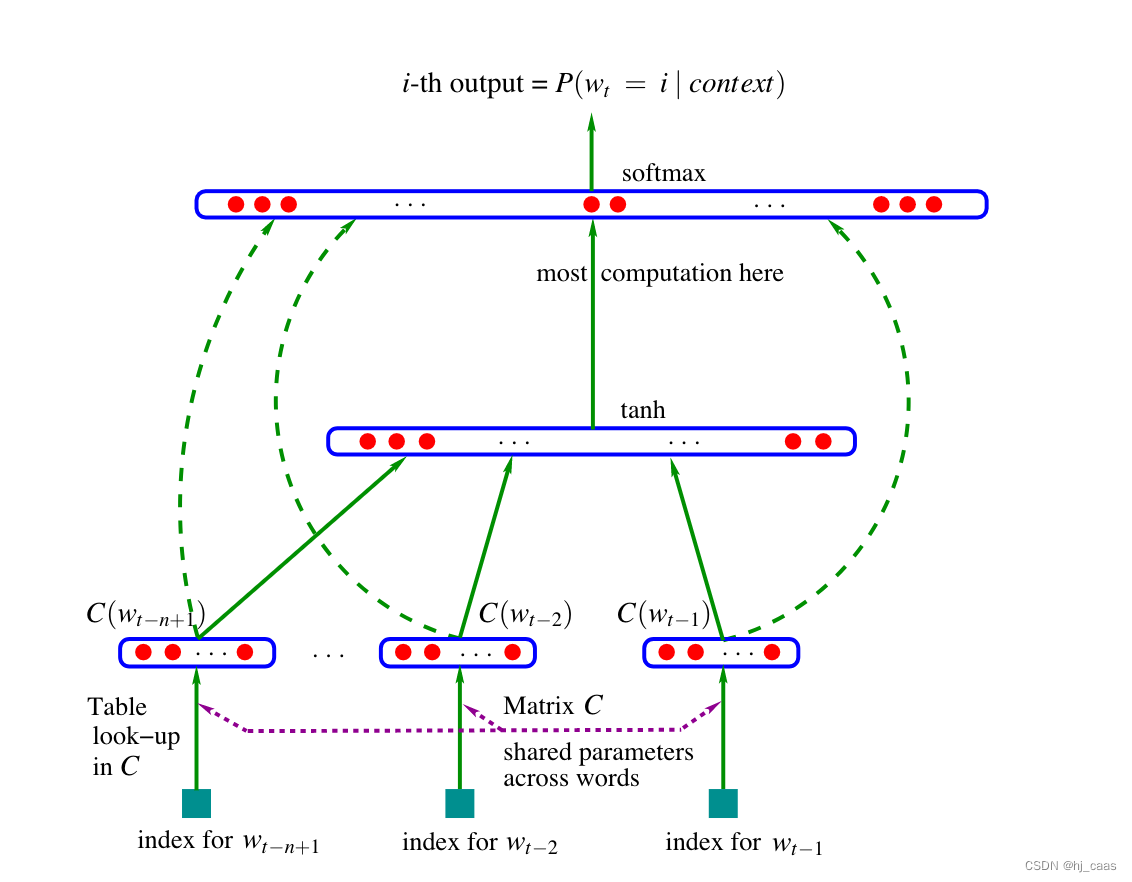

《A Neural Probabilistic Language Model》,这篇论文第一次用神经网络解决语言模型的问题,比传统的语言模型使用n-gram建模更远的关系,且考虑词与词之间的相似性。

论文中采用多层感知器(MLP)构造了语言模型,如下图所示,模型共分为三层,即映射层、隐藏层、输出层,利用前n个单词得到下一个单词。具体的代码如下所示。

import copy

import torch

import torch.nn as nn

import torch.optim as optim

#根据论文公式构建模型

class NNLM(nn.Module):

def __init__(self, dict_size, edim, input_dim, hidden_dim):

super(NNLM, self).__init__()

self.input_dim = input_dim

self.C = nn.Embedding(dict_size, edim)

self.H = nn.Linear(input_dim, hidden_dim, bias=False)

self.d = nn.Parameter(torch.ones(hidden_dim))

self.U = nn.Linear(hidden_dim, dict_size, bias=False)

self.W = nn.Linear(input_dim, dict_size, bias=False)

self.b = nn.Parameter(torch.ones(dict_size))

def forward(self, X):

X = self.C(X) #映射层 (batch_size,n_gram,edim) n_gram是指词的个数

X = X.view(-1, self.input_dim) #(batch_size,input_dim)

tanh = torch.tanh(self.d + self.H(X)) #隐藏层(batch_size,hidden_dim)

output = self.b + self.W(X) + self.U(tanh) #输出层(batch_size,dict_size)

return output

#将文本集合转化成input,target的形式

def make_batch(sentences,n_gram,word_dict):

input_batch = []

target_batch = []

for sentence in sentences:

words = sentence.split() #分词

if n_gram+1 > len(words):

raise Exception('n_gram大于文本长度')

for i in range(len(words)-n_gram):

input = [word_dict[n] for n in words[i:i+n_gram]]

target = word_dict[words[i+n_gram]]

input_batch.append(input)

target_batch.append(target)

return input_batch, target_batch

if __name__=='__main__':

sentences = ["i like dog", "i love coffee", "i hate milk"]

word_list = " ".join(sentences).split()

word_list = list(set(word_list))

word_dict = {

w: i for i, w in enumerate(word_list)}

number_dict = {

i: w for i, w in enumerate(word_list)}

dict_size = len(word_dict) # 字典的大小

edim = 3 #维度大小

n_gram = 2 #设置窗口大小

input_dim = edim*n_gram

hidden_dim = 2 #隐向量大小

model = NNLM(dict_size, edim, input_dim, hidden_dim) #加载模型

criterion = nn.CrossEntropyLoss() #损失

optimizer = optim.Adam(model.parameters(), lr=0.001) #优化器

input_batch, target_batch = make_batch(sentences, n_gram, word_dict)

input_text = copy.deepcopy(input_batch)

input_batch = torch.LongTensor(input_batch) #类型转换

target_batch = torch.LongTensor(target_batch)

# 模型训练

for epoch in range(1,10001):

optimizer.zero_grad() #梯度清零

output = model(input_batch)

loss = criterion(output, target_batch)

if epoch % 1000 == 0:

print('Epoch:', '%04d' % (epoch), 'cost =', '{:.6f}'.format(loss))

loss.backward() #反向传播,计算当前梯度

optimizer.step() #根据梯度更新网络参数

# 模型预测

predict = model(input_batch).data.max(1, keepdim=True)[1]

print("真实标签:",[number_dict[n.item()] for n in target_batch],'->','预测标签:',[number_dict[n.item()] for n in predict.squeeze()])

NNLM模型使用低维稠密词向量对上文进行表示,解决了词袋模型带来的数据稀疏、语义鸿沟等问题。NNLM可以根据上下文语境预测下一个目标词,优于传统的n-gram的模型。