AI: Image Recognition Basics (Image Processing Basics), Part 2

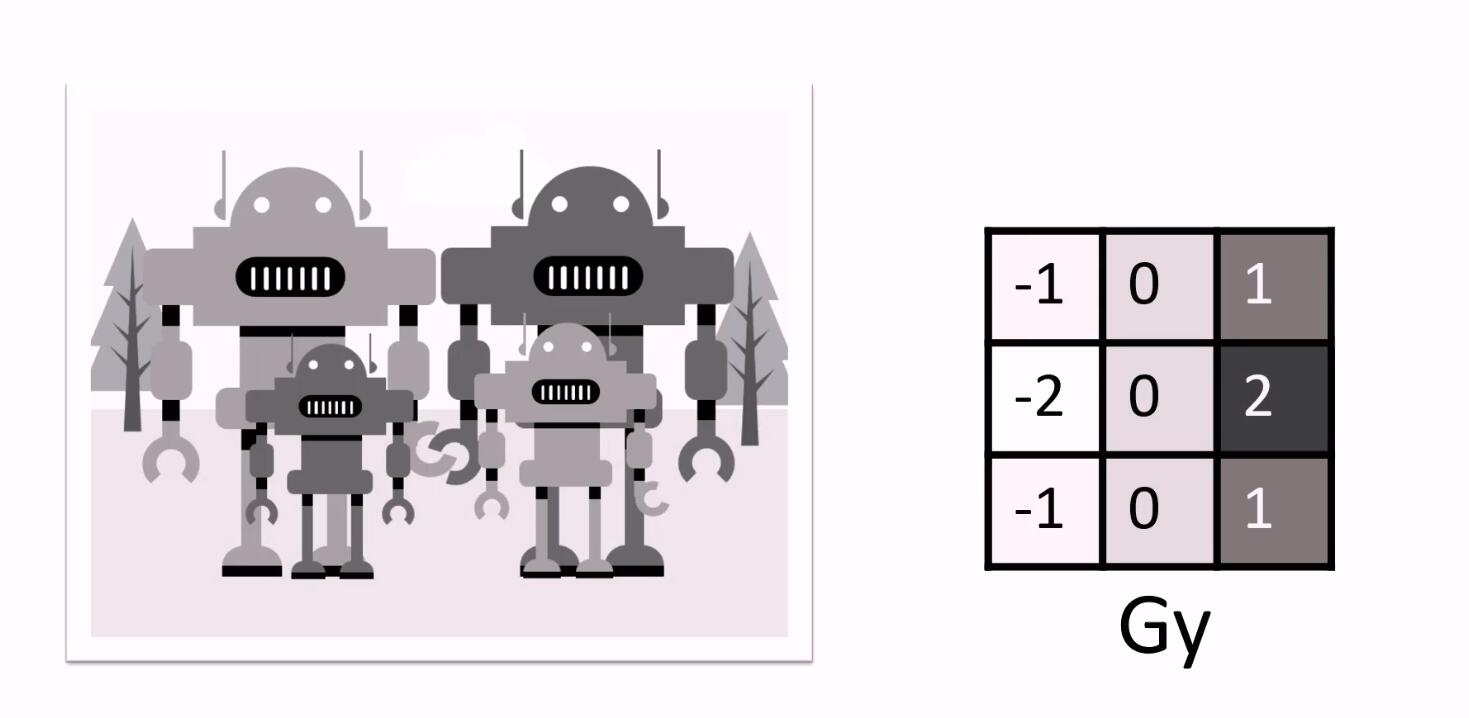

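4. Edge Detection

What is an edge?

Edges outline the target object and carry rich information such as direction and shape. An edge reflects a discontinuity in a local image feature (an abrupt change in gray level, color, or texture) and marks where one region ends and another begins. For a computer, an edge is the set of pixels whose neighboring pixels show a change in gray level. It appears mainly as a discontinuity in local image features, that is, a place where the signal changes abruptly.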
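
As a rough illustration of "pixels whose neighbors show a change in gray level", the sketch below scans one row of a made-up grayscale image and flags positions where the gray level jumps. The pixel values and the `THRESHOLD` of 50 are assumptions chosen only for this example.

```python
import numpy as np

# One row of a hypothetical grayscale image: a dark region, then a bright one.
row = np.array([10, 10, 12, 11, 200, 201, 199, 200], dtype=np.int32)

# Absolute difference between each pixel and its left neighbour.
diff = np.abs(np.diff(row))

# Assumed threshold: a jump larger than this counts as an edge.
THRESHOLD = 50

# diff[i] compares row[i] and row[i + 1], so add 1 to get the pixel after the jump.
edge_positions = np.flatnonzero(diff > THRESHOLD) + 1

print(edge_positions)
```

Here the only large jump is between index 3 (value 11) and index 4 (value 200), so index 4 is reported as the edge position. The small fluctuations inside each region stay below the threshold.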

For example:

Edge Detection?

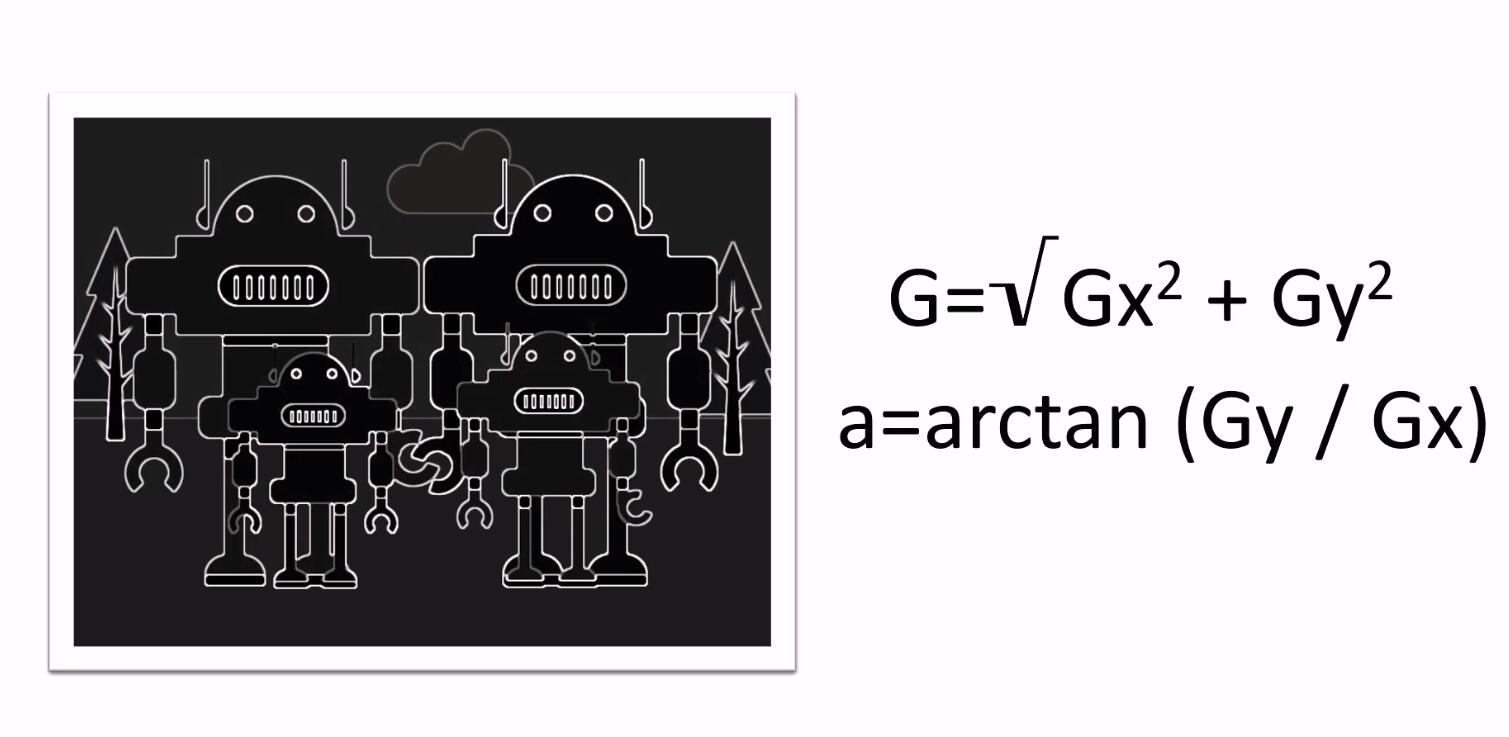

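Human vision relies on edges to tell objects apart, and visual cells are especially sensitive to object edges: we first see the outline of an object and only then decide what it is. Edge detection extracts the most meaningful part of an image, its edge information, and lays the foundation for further image analysis, processing, and recognition.

Comparison before and after edge detection: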

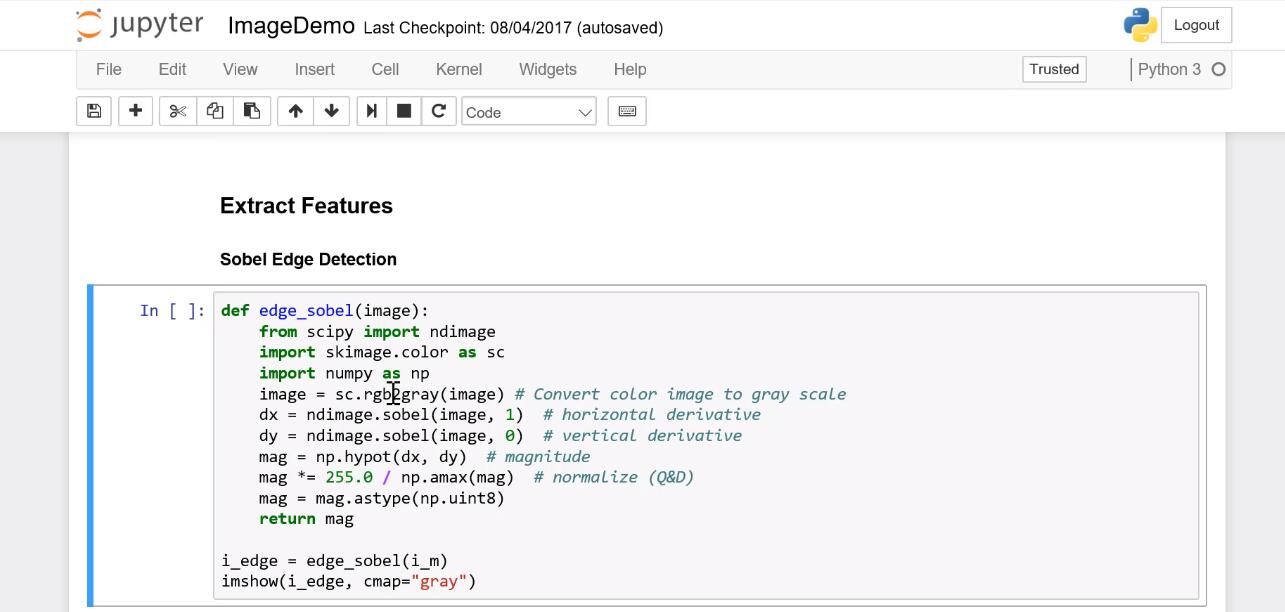

Python is convenient to use in Azure AI, so this part continues with how to do edge detection in Python.

The Azure platform uses Sobel-based edge detection.

Reference code:
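
To make concrete what a Sobel filter computes, here is a minimal NumPy-only sketch that applies the standard 3x3 Sobel kernels to a toy image. The helper `filter3x3` and the toy image are assumptions made for illustration; the sketch skips border padding and is not a substitute for `cv2.Sobel`.

```python
import numpy as np

# Standard 3x3 Sobel kernels for the horizontal (x) and vertical (y) derivatives.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T


def filter3x3(gray, kernel):
    """Correlate a 2-D image with a 3x3 kernel (valid region only, no padding)."""
    h, w = gray.shape
    out = np.zeros((h - 2, w - 2), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * gray[dy:dy + h - 2, dx:dx + w - 2]
    return out


# Toy image: left half dark (0), right half bright (100), i.e. one vertical edge.
gray = np.zeros((5, 6), dtype=np.float64)
gray[:, 3:] = 100.0

gx = filter3x3(gray, SOBEL_X)   # responds to the vertical edge
gy = filter3x3(gray, SOBEL_Y)   # no horizontal edge, so it stays zero
magnitude = np.hypot(gx, gy)    # gradient magnitude

print(gx[0])
print(magnitude.max())
```

Each row of `gx` is `[0, 400, 400, 0]` and `gy` is zero everywhere. The response of 400 is larger than 255, which is one reason to compute gradients in floating point before converting the result to an 8-bit image.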

    import cv2
    import numpy

    image = cv2.imread('1.jpg')
    cv2.imshow("Original", image)

    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    cv2.imshow("Gray", gray)

    # Compute the x and y gradients in floating point so that
    # negative (bright-to-dark) transitions are not lost
    sobelx = cv2.Sobel(gray, cv2.CV_64F, 1, 0)
    sobely = cv2.Sobel(gray, cv2.CV_64F, 0, 1)

    # Take the absolute value and saturate to 8 bits
    # (a plain uint8 cast can wrap around for values above 255)
    sobelx = cv2.convertScaleAbs(sobelx)
    sobely = cv2.convertScaleAbs(sobely)
    sobelcombine = cv2.bitwise_or(sobelx, sobely)

    # Show the gray image and the three edge maps side by side
    combined = numpy.hstack([gray, sobelx, sobely, sobelcombine])
    cv2.imshow("Edge detection by Sobel", combined)
    cv2.imwrite("1_edge_by_sobel.jpg", combined)

    # Close the windows when Esc is pressed
    if cv2.waitKey(0) == 27:
        cv2.destroyAllWindows()

Laplacian-based edge detection:

    import cv2
    import numpy

    image = cv2.imread('1.jpg')
    cv2.imshow("Original", image)

    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    cv2.imshow("Gray", gray)

    # Use a floating-point data type when computing the Laplacian;
    # with an 8-bit type the negative responses are lost and edges are missed
    lap = cv2.Laplacian(gray, cv2.CV_64F)
    lap = cv2.convertScaleAbs(lap)

    # Show the gray image and the edge map side by side
    combined = numpy.hstack([gray, lap])
    cv2.imshow("Edge detection by Laplacian", combined)
    cv2.imwrite("1_edge_by_laplacian.jpg", combined)

    # Close the windows when Esc is pressed
    if cv2.waitKey(0) == 27:
        cv2.destroyAllWindows()

Canny-based edge detection:

    import cv2
    import numpy

    image = cv2.imread('1.jpg')
    cv2.imshow("Original", image)

    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    cv2.imshow("Gray", gray)

    # 30 and 150 are the two thresholds: gradients above 150 are edges,
    # gradients below 30 are not edges, and values in between are kept
    # only if they connect to a pixel above 150
    canny = cv2.Canny(gray, 30, 150)  # already an 8-bit image

    # Show the gray image and the edge map side by side
    combined = numpy.hstack([gray, canny])
    cv2.imshow("Edge detection by Canny", combined)
    cv2.imwrite("1_edge_by_canny.jpg", combined)

    # Close the windows when Esc is pressed
    if cv2.waitKey(0) == 27:
        cv2.destroyAllWindows()
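
The two Canny thresholds can be illustrated with a small sketch of the hysteresis step alone. This is a simplified NumPy illustration, not OpenCV's implementation: it assumes the gradient magnitude is already available and leaves out the smoothing, gradient, and non-maximum suppression stages. The function name `hysteresis` and the sample values are assumptions.

```python
import numpy as np


def hysteresis(magnitude, low, high):
    """Keep strong pixels, plus weak pixels that are 8-connected to a kept pixel."""
    strong = magnitude > high
    weak = (magnitude >= low) & ~strong
    edges = strong.copy()
    h, w = edges.shape
    while True:
        # Grow the current edge set by one pixel in all 8 directions.
        padded = np.pad(edges, 1, mode="constant", constant_values=False)
        grown = np.zeros_like(edges)
        for dy in range(3):
            for dx in range(3):
                grown |= padded[dy:dy + h, dx:dx + w]
        # Accept only weak pixels that touch the grown region.
        new_edges = edges | (grown & weak)
        if np.array_equal(new_edges, edges):
            return edges
        edges = new_edges


# One row of made-up gradient magnitudes, with thresholds 30 and 150.
magnitude = np.array([[200, 100, 100, 10, 100]])
result = hysteresis(magnitude, 30, 150)
print(result)
```

With these values the first pixel is a strong edge, the next two weak pixels are kept because they connect to it, the fourth is below the low threshold, and the last weak pixel is dropped because it is isolated from any strong pixel.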

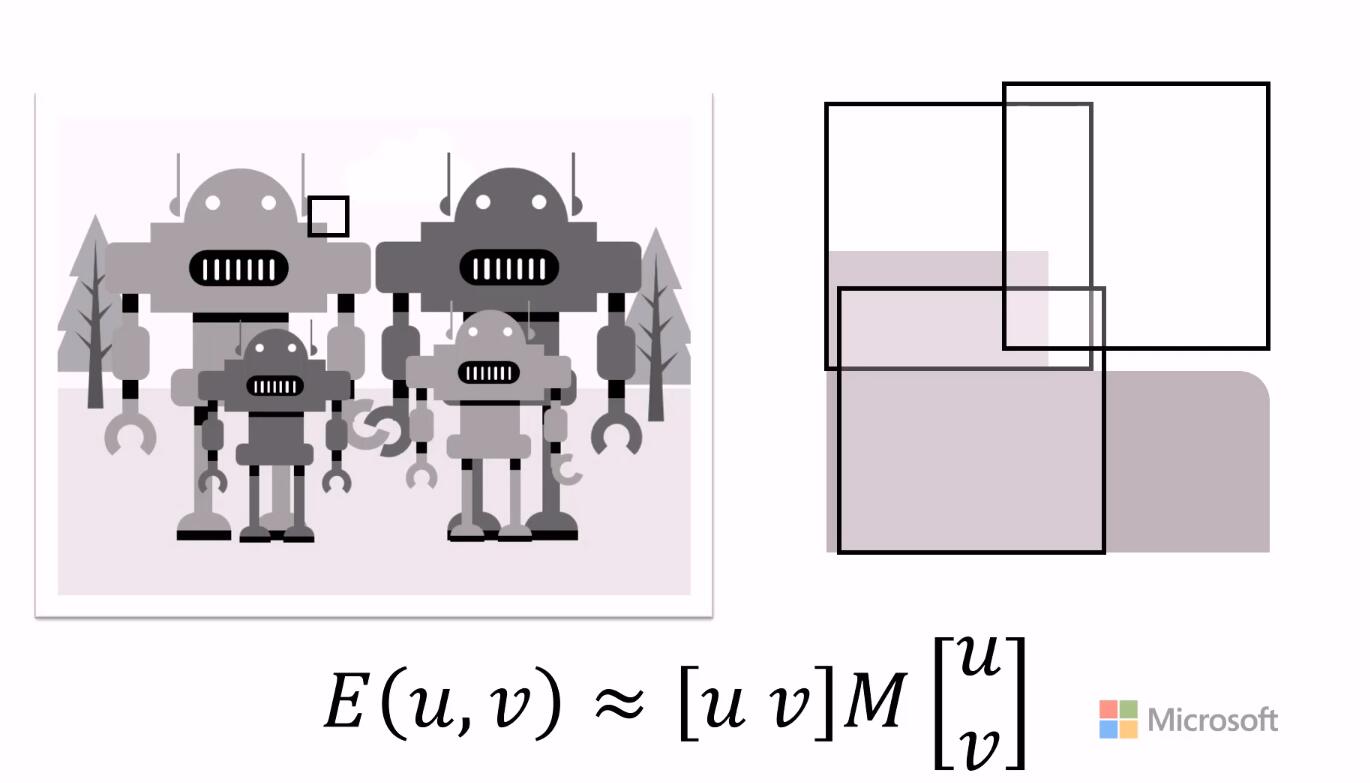

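5. Corner Detection

Introduction:

Corner detection algorithms fall into three categories: those based on grayscale images, on binary images, and on contour curves. Grayscale-based corner detection can be further divided into gradient-based, template-based, and combined template-gradient methods. Template-based methods mainly consider the gray-level (brightness) variation among the pixels in a neighborhood, and define a corner as a point whose brightness contrast with its neighbors is sufficiently large. Common template-based corner detectors include the Kitchen-Rosenfeld, Harris, KLT, and SUSAN algorithms. Compared with the others, SUSAN is simple, localizes corners accurately, and is robust to noise.

Two examples: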
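
The template idea of comparing a pixel's brightness with its neighbors can be sketched in the spirit of SUSAN. The code below is a simplified illustration under stated assumptions: it uses a square 5x5 window instead of SUSAN's circular mask, a hard brightness threshold `t`, and no non-maximum suppression. The function name `susan_response` and all parameter values are hypothetical.

```python
import numpy as np


def susan_response(gray, radius=2, t=25.0):
    """Simplified SUSAN-style corner response (square window, hard threshold)."""
    gray = gray.astype(np.float64)
    h, w = gray.shape
    n_max = (2 * radius + 1) ** 2
    g = n_max / 2.0  # geometric threshold: fewer similar pixels than this suggests a corner
    response = np.zeros((h, w))
    # Only interior pixels are evaluated so that the window always fits.
    for r in range(radius, h - radius):
        for c in range(radius, w - radius):
            window = gray[r - radius:r + radius + 1, c - radius:c + radius + 1]
            # Number of window pixels whose brightness is close to the centre pixel.
            n = np.count_nonzero(np.abs(window - gray[r, c]) <= t)
            if n < g:
                response[r, c] = g - n
    return response


# Toy image: a bright quadrant whose corner sits at row 4, column 4.
image = np.zeros((9, 9))
image[4:, 4:] = 100.0

response = susan_response(image)
best = np.unravel_index(np.argmax(response), response.shape)
print(best)
```

On this toy image the response is largest at the corner pixel (row 4, column 4), where only 9 of the 25 window pixels share the centre's brightness. Pixels along the straight edges see 15 or more similar pixels and get no response.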

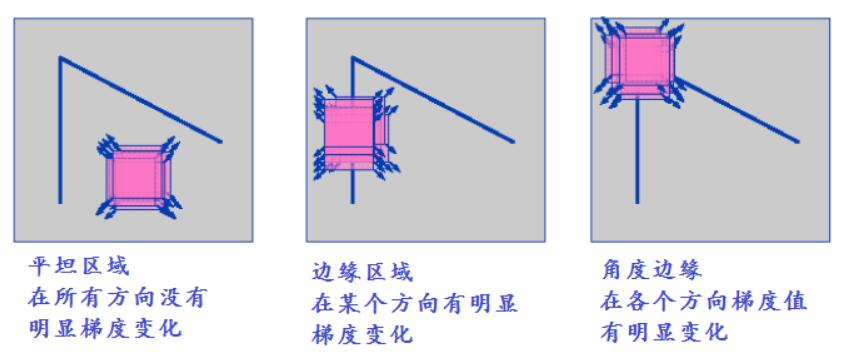

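Corners are among the most distinctive and important features of an image. In terms of the first derivative, the image at a corner changes strongly in every direction, whereas in an edge region it changes markedly in only one direction. An intuitive illustration: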

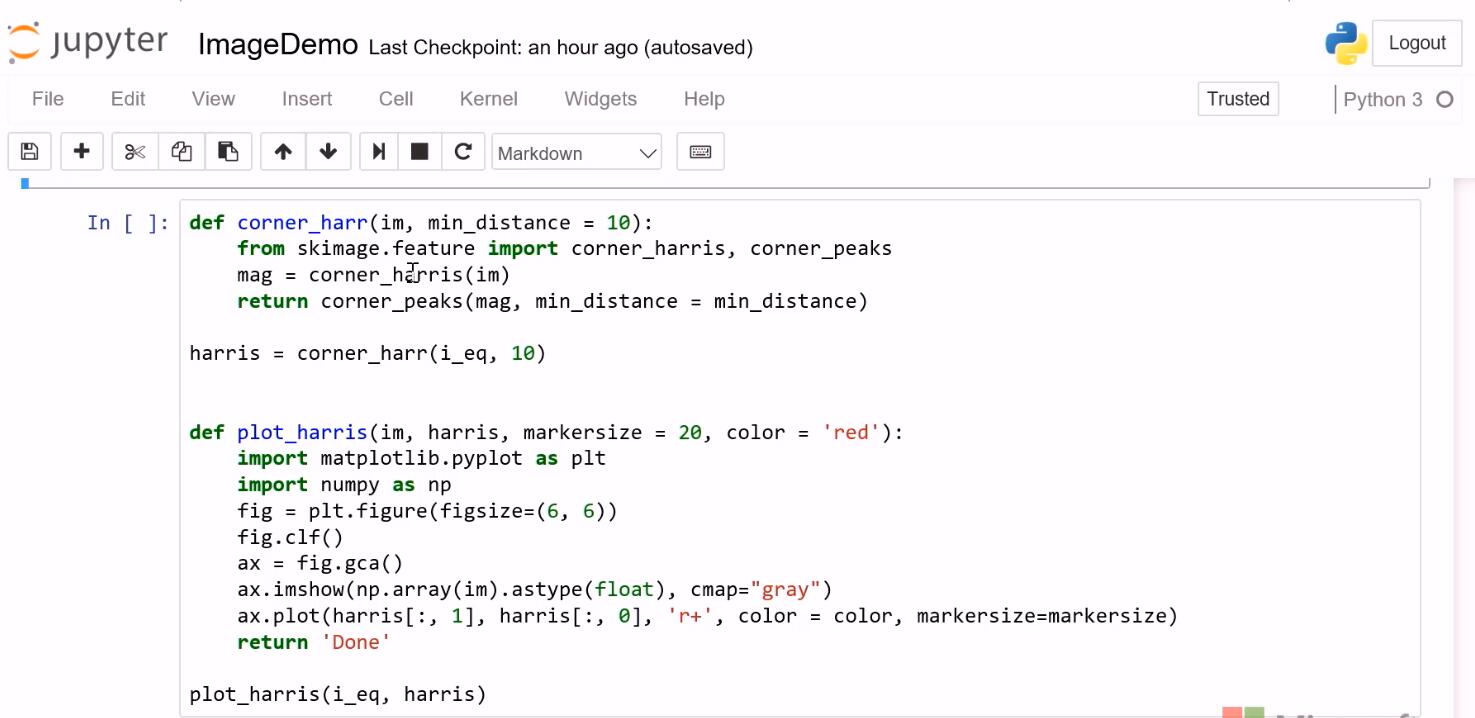
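
The difference between flat regions, edges, and corners can also be checked numerically. The sketch below sums the gradient products over a small patch and looks at the two eigenvalues of the resulting 2x2 matrix: both near zero means flat, one large means edge, both large means corner. The patches and the helper name `structure_tensor_eigenvalues` are assumptions for illustration.

```python
import numpy as np


def structure_tensor_eigenvalues(patch):
    """Eigenvalues (ascending) of the summed gradient products over a patch."""
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    gy, gx = np.gradient(patch.astype(np.float64))
    m = np.array([[np.sum(gx * gx), np.sum(gx * gy)],
                  [np.sum(gx * gy), np.sum(gy * gy)]])
    return np.linalg.eigvalsh(m)


flat = np.full((7, 7), 50.0)        # constant brightness

edge = np.zeros((7, 7))
edge[:, 4:] = 100.0                 # one vertical edge

corner = np.zeros((7, 7))
corner[3:, 3:] = 100.0              # bright quadrant, i.e. one corner

for name, patch in [("flat", flat), ("edge", edge), ("corner", corner)]:
    print(name, structure_tensor_eigenvalues(patch))
```

For these patches the eigenvalues are approximately (0, 0) for the flat patch, (0, 35000) for the edge, and (17500, 22500) for the corner, which matches the intuition that only a corner varies strongly in two independent directions.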

Part of the code is shown below (a Java implementation of the Harris corner detector):

    package com.gloomyfish.image.harris.corner;

    import java.awt.image.BufferedImage;
    import java.util.ArrayList;
    import java.util.List;

    import com.gloomyfish.filter.study.GrayFilter;

    public class HarrisCornerDetector extends GrayFilter {
        private GaussianDerivativeFilter filter;
        private List<HarrisMatrix> harrisMatrixList;
        private double lambda = 0.04; // scope : 0.04 ~ 0.06

        // the window size is hard-coded so that it matches the
        // first-order derivative Gaussian window size
        private double sigma = 1; // always
        private double window_radius = 1; // always

        public HarrisCornerDetector() {
            filter = new GaussianDerivativeFilter();
            harrisMatrixList = new ArrayList<HarrisMatrix>();
        }

        @Override
        public BufferedImage filter(BufferedImage src, BufferedImage dest) {
            int width = src.getWidth();
            int height = src.getHeight();
            initSettings(height, width);
            if (dest == null)
                dest = createCompatibleDestImage(src, null);

            BufferedImage grayImage = super.filter(src, null);
            int[] inPixels = new int[width * height];

            // first step - Gaussian first-order derivatives (3 x 3): X gradient, then Y gradient
            filter.setDirectionType(GaussianDerivativeFilter.X_DIRECTION);
            BufferedImage xImage = filter.filter(grayImage, null);
            getRGB(xImage, 0, 0, width, height, inPixels);
            extractPixelData(inPixels, GaussianDerivativeFilter.X_DIRECTION, height, width);

            filter.setDirectionType(GaussianDerivativeFilter.Y_DIRECTION);
            BufferedImage yImage = filter.filter(grayImage, null);
            getRGB(yImage, 0, 0, width, height, inPixels);
            extractPixelData(inPixels, GaussianDerivativeFilter.Y_DIRECTION, height, width);

            // second step - calculate Ix^2, Iy^2 and Ix*Iy
            for (HarrisMatrix hm : harrisMatrixList) {
                double Ix = hm.getXGradient();
                double Iy = hm.getYGradient();
                hm.setIxIy(Ix * Iy);
                hm.setXGradient(Ix * Ix);
                hm.setYGradient(Iy * Iy);
            }

            // key step: Gaussian-weighted sums of the gradient products over a window
            // centered on each pixel (SumIx2, SumIy2 and SumIxIy), i.e. a Gaussian blur
            calculateGaussianBlur(width, height);

            // evaluate the Harris matrix and compute the corner response
            // R = Det(H) - lambda * (Trace(H))^2
            harrisResponse(width, height);

            // based on R, compute non-max suppression
            nonMaxValueSuppression(width, height);

            // match result to original image and highlight the key points
            int[] outPixels = matchToImage(width, height, src);

            // return result image
            setRGB(dest, 0, 0, width, height, outPixels);
            return dest;
        }

        private int[] matchToImage(int width, int height, BufferedImage src) {
            int[] inPixels = new int[width * height];
            int[] outPixels = new int[width * height];
            getRGB(src, 0, 0, width, height, inPixels);
            int index = 0;
            for (int row = 0; row < height; row++) {
                int ta = 0, tr = 0, tg = 0, tb = 0;
                for (int col = 0; col < width; col++) {
                    index = row * width + col;
                    ta = (inPixels[index] >> 24) & 0xff;
                    tr = (inPixels[index] >> 16) & 0xff;
                    tg = (inPixels[index] >> 8) & 0xff;
                    tb = inPixels[index] & 0xff;
                    HarrisMatrix hm = harrisMatrixList.get(index);
                    if (hm.getMax() > 0) {
                        // mark corner key points in green
                        tr = 0;
                        tg = 255;
                        tb = 0;
                    }
                    outPixels[index] = (ta << 24) | (tr << 16) | (tg << 8) | tb;
                }
            }
            return outPixels;
        }

        /***
         * we still use the 3*3 window to complete the non-max response value suppression
         */
        private void nonMaxValueSuppression(int width, int height) {
            int index = 0;
            int radius = (int) window_radius;
            for (int row = 0; row < height; row++) {
                for (int col = 0; col < width; col++) {
                    index = row * width + col;
                    HarrisMatrix hm = harrisMatrixList.get(index);
                    double maxR = hm.getR();
                    boolean isMaxR = true;
                    for (int subrow = -radius; subrow <= radius; subrow++) {
                        for (int subcol = -radius; subcol <= radius; subcol++) {
                            // clamp to the nearest border pixel (the original reset
                            // out-of-range indices to 0, which is wrong at the bottom/right)
                            int nrow = Math.min(Math.max(row + subrow, 0), height - 1);
                            int ncol = Math.min(Math.max(col + subcol, 0), width - 1);
                            int index2 = nrow * width + ncol;
                            HarrisMatrix hmr = harrisMatrixList.get(index2);
                            if (hmr.getR() > maxR) {
                                isMaxR = false;
                            }
                        }
                    }
                    if (isMaxR) {
                        hm.setMax(maxR);
                    }
                }
            }
        }

        /***
         * Compute the corner response R from the Harris matrix. The formula is given
         * below and can be derived by hand; for how to compute matrix eigenvalues, see:
         * http://www.sosmath.com/matrix/eigen1/eigen1.html
         *
         * A = Sxx;
         * B = Syy;
         * C = Sxy*Sxy;
         * lambda = 0.04;
         * R = (A*B - C) - lambda*(A+B)^2;
         *
         * @param width
         * @param height
         */
        private void harrisResponse(int width, int height) {
            int index = 0;
            for (int row = 0; row < height; row++) {
                for (int col = 0; col < width; col++) {
                    index = row * width + col;
                    HarrisMatrix hm = harrisMatrixList.get(index);
                    double c = hm.getIxIy() * hm.getIxIy();
                    double ab = hm.getXGradient() * hm.getYGradient();
                    double aplusb = hm.getXGradient() + hm.getYGradient();
                    double response = (ab - c) - lambda * Math.pow(aplusb, 2);
                    hm.setR(response);
                }
            }
        }

        private void calculateGaussianBlur(int width, int height) {
            int index = 0;
            int radius = (int) window_radius;
            double[][] gw = get2DKernalData(radius, sigma);
            double sumxx = 0, sumyy = 0, sumxy = 0;
            for (int row = 0; row < height; row++) {
                for (int col = 0; col < width; col++) {
                    for (int subrow = -radius; subrow <= radius; subrow++) {
                        for (int subcol = -radius; subcol <= radius; subcol++) {
                            // clamp to the nearest border pixel
                            int nrow = Math.min(Math.max(row + subrow, 0), height - 1);
                            int ncol = Math.min(Math.max(col + subcol, 0), width - 1);
                            int index2 = nrow * width + ncol;
                            HarrisMatrix whm = harrisMatrixList.get(index2);
                            sumxx += (gw[subrow + radius][subcol + radius] * whm.getXGradient());
                            sumyy += (gw[subrow + radius][subcol + radius] * whm.getYGradient());
                            sumxy += (gw[subrow + radius][subcol + radius] * whm.getIxIy());
                        }
                    }
                    index = row * width + col;
                    HarrisMatrix hm = harrisMatrixList.get(index);
                    hm.setXGradient(sumxx);
                    hm.setYGradient(sumyy);
                    hm.setIxIy(sumxy);

                    // clean up for next loop
                    sumxx = 0;
                    sumyy = 0;
                    sumxy = 0;
                }
            }
        }

        public double[][] get2DKernalData(int n, double sigma) {
            int size = 2 * n + 1;
            double sigma22 = 2 * sigma * sigma;
            double sigma22PI = Math.PI * sigma22;
            double[][] kernalData = new double[size][size];
            int row = 0;
            for (int i = -n; i <= n; i++) {
                int column = 0;
                for (int j = -n; j <= n; j++) {
                    double xDistance = i * i;
                    double yDistance = j * j;
                    kernalData[row][column] = Math.exp(-(xDistance + yDistance) / sigma22) / sigma22PI;
                    column++;
                }
                row++;
            }

            // for(int i=0; i<size; i++) {
            //     for(int j=0; j<size; j++) {
            //         System.out.print("\t" + kernalData[i][j]);
            //     }
            //     System.out.println();
            //     System.out.println("\t ---------------------------");
            // }
            return kernalData;
        }

        private void extractPixelData(int[] pixels, int type, int height, int width) {
            int index = 0;
            for (int row = 0; row < height; row++) {
                for (int col = 0; col < width; col++) {
                    index = row * width + col;
                    // the gradient image is gray, so the red channel carries the value
                    int tr = (pixels[index] >> 16) & 0xff;
                    HarrisMatrix matrix = harrisMatrixList.get(index);
                    if (type == GaussianDerivativeFilter.X_DIRECTION) {
                        matrix.setXGradient(tr);
                    }
                    if (type == GaussianDerivativeFilter.Y_DIRECTION) {
                        matrix.setYGradient(tr);
                    }
                }
            }
        }

        private void initSettings(int height, int width) {
            // start from an empty list so that repeated filter() calls do not accumulate entries
            harrisMatrixList.clear();
            int index = 0;
            for (int row = 0; row < height; row++) {
                for (int col = 0; col < width; col++) {
                    index = row * width + col;
                    HarrisMatrix matrix = new HarrisMatrix();
                    harrisMatrixList.add(index, matrix);
                }
            }
        }
    }
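
For readers following the Python examples, the same pipeline (gradients, Gaussian-weighted sums of the gradient products, response R = Det - lambda * Trace^2, 3x3 non-maximum suppression) can be sketched with NumPy. This is a rough approximation under stated assumptions, not a port of the Java class: it uses `np.gradient` central differences instead of a Gaussian derivative filter, replicates border pixels, and the names `weighted_sum3x3` and `harris_corners` are made up for this example.

```python
import numpy as np


def weighted_sum3x3(a, weights):
    """Weighted 3x3 neighbourhood sum, replicating pixels at the border."""
    p = np.pad(a, 1, mode="edge")
    h, w = a.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += weights[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out


def harris_corners(gray, k=0.04, sigma=1.0):
    """Return (row, col) positions with a positive, locally maximal Harris response."""
    gray = gray.astype(np.float64)
    iy, ix = np.gradient(gray)  # derivative along rows first, then columns

    # 3x3 Gaussian window, same formula as get2DKernalData with n = 1.
    ax = np.arange(-1, 2)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2 * sigma ** 2))
    g /= 2 * np.pi * sigma ** 2

    sxx = weighted_sum3x3(ix * ix, g)
    syy = weighted_sum3x3(iy * iy, g)
    sxy = weighted_sum3x3(ix * iy, g)

    # Corner response R = Det - k * Trace^2.
    r = (sxx * syy - sxy ** 2) - k * (sxx + syy) ** 2

    # 3x3 non-maximum suppression: keep pixels equal to their neighbourhood maximum.
    p = np.pad(r, 1, mode="constant", constant_values=-np.inf)
    h, w = r.shape
    local_max = np.full((h, w), -np.inf)
    for dy in range(3):
        for dx in range(3):
            local_max = np.maximum(local_max, p[dy:dy + h, dx:dx + w])
    return np.argwhere((r > 0) & (r == local_max))


# Toy image: a bright quadrant whose corner sits at row 4, column 4.
image = np.zeros((9, 9))
image[4:, 4:] = 100.0
corners = harris_corners(image)
print(corners)
```

On this toy image the only detected corner is at row 4, column 4. Pixels along the two straight edges get a negative response because only one of the two summed gradient products is non-zero there.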