Problem:

While training a model, I hit the error RuntimeError: Trying to backward through the graph a second time, but the buffers have already been freed. Specify retain_graph=True when calling backward the first time

Cause:

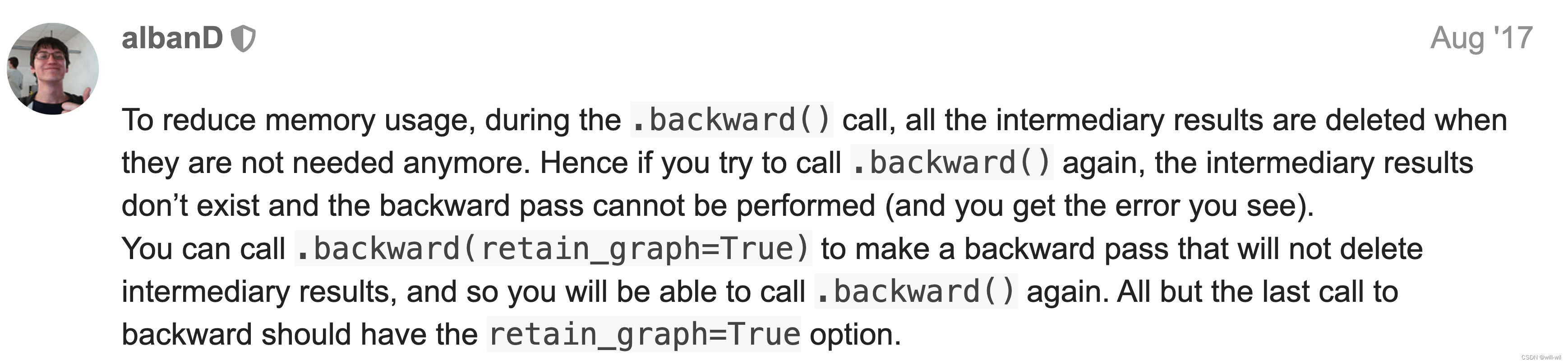

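To reduce GPU memory usage, PyTorch frees the intermediate results of the graph (the tensors saved for the backward pass) as soon as backward() is called. If a second backward() then has to go through the same part of the graph, those intermediate results no longer exist, and this error is raised. One fix is to pass retain_graph=True to backward() so that the intermediate results are kept.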
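A minimal sketch of the retain_graph=True workaround, using the same loop as the example further down (the names `a`, `b`, `d`, `res` follow that example; the random seed is only added for reproducibility). Keeping the graph means its saved tensors stay in memory, so this trades memory for convenience. Gradients also accumulate in `a.grad` across iterations, since nothing resets them here.

```python
import torch

torch.manual_seed(0)
a = torch.rand(3, 3, requires_grad=True)

# Computed once, outside the loop: every iteration's graph shares this node
b = a * a

for i in range(10):
    d = b * b
    res = d.sum()
    # Keep the saved tensors of the whole graph (including the shared b = a * a part)
    # so the next iteration can backpropagate through it again
    res.backward(retain_graph=True)

# d(sum(a**4))/da = 4 * a**3, accumulated over 10 backward calls
print(torch.allclose(a.grad, 10 * 4 * a.detach() ** 3))
```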

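Wait, that seems counterintuitive. Shouldn't every forward pass run through the model again and build a fresh graph? Conveniently, someone in the comments asked exactly this question.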

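The key phrase is "perform some computation just before the loop". A variable computed outside the loop is part of the graph, and its saved intermediate values are freed by the first backward(). Each forward pass inside the loop does build new graph nodes, but the computation outside the loop is not redone, so the second backward() reaches that already-freed part of the graph and raises the error.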

```python
import torch

a = torch.rand(3, 3, requires_grad=True)

# This will be shared by all iterations and will make the second backward fail!
b = a * a

for i in range(10):
    d = b * b
    res = d.sum()
    # The first backward here will work, but the second will not!
    res.backward()
```

### Error when running

RuntimeError: Trying to backward through the graph a second time (or directly access saved tensors after they have already been freed). Saved intermediate values of the graph are freed when you call .backward() or autograd.grad(). Specify retain_graph=True if you need to backward through the graph a second time or if you need to access saved tensors after calling backward.

### Rewriting it as follows fixes the problem

```python
import torch

# a = torch.rand(3, 3, requires_grad=True)
# This was shared by all iterations and made the second backward fail!
# b = a * a

for i in range(10):
    # Create a and b inside the loop so each iteration builds its own complete graph
    a = torch.rand(3, 3, requires_grad=True)
    b = a * a
    d = b * b
    res = d.sum()
    # Every backward now works, because no graph nodes are shared between iterations
    res.backward()
```
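In real training, `a` would play the role of a model parameter and should not be re-created every iteration. A sketch under that assumption: keep `a` outside the loop and move only the intermediate computation `b = a * a` inside it, so each iteration builds (and frees) its own graph while `a` stays the same leaf tensor. Gradients accumulate in `a.grad` here because nothing resets them; a real training loop would typically zero them each step (for example with `optimizer.zero_grad()`).

```python
import torch

torch.manual_seed(0)
a = torch.rand(3, 3, requires_grad=True)  # leaf tensor (e.g. a parameter), created once

for i in range(10):
    b = a * a          # recomputed every iteration, so it belongs to this iteration's graph
    d = b * b
    res = d.sum()
    res.backward()     # frees this iteration's buffers; the next iteration builds new ones

# d(sum(a**4))/da = 4 * a**3, accumulated over 10 backward calls
print(torch.allclose(a.grad, 10 * 4 * a.detach() ** 3))
```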

Another solution

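If part of the model is not trained (for example in MoCo), you can try applying torch.no_grad() to the function that creates those variables. Operations run under torch.no_grad() are not recorded by autograd, so there is no shared graph for the first backward() to free, and the error goes away.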
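A minimal sketch of the torch.no_grad() idea, assuming the non-trained part is a function of a trainable tensor that is computed once before the loop. `make_frozen_target` is a hypothetical helper for illustration, not MoCo's actual code. Because it runs under `@torch.no_grad()`, its output has `requires_grad=False` and acts as a constant, so only the per-iteration part of the graph is recorded.

```python
import torch

torch.manual_seed(0)
a = torch.rand(3, 3, requires_grad=True)

@torch.no_grad()
def make_frozen_target(x):
    # Hypothetical non-trained computation: autograd records nothing in here
    return x * x

b = make_frozen_target(a)   # b.requires_grad is False; no graph connects it to a
assert not b.requires_grad

for i in range(10):
    d = b * a               # only this per-iteration part is recorded
    res = d.sum()
    res.backward()          # no retain_graph needed

# Gradient of sum(b * a) w.r.t. a is b, accumulated over 10 iterations
print(torch.allclose(a.grad, 10 * b))
```

Note that this changes what gets differentiated: no gradient flows back through `b`, so it is only appropriate when that part of the computation really should not be trained.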