1. GCANet

Gated Context Aggregation Network for Image Dehazing and Deraining

The paper's two most important contributions:

Smooth dilated convolution: replaces the original dilated convolution and eliminates gridding artifacts.

Gated fusion sub-network: fuses features from different levels, which benefits both low-level and high-level tasks.

Smooth dilated convolution

    # SS convolution: separate and shared convolution
    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class ShareSepConv(nn.Module):
        def __init__(self, kernel_size):
            super(ShareSepConv, self).__init__()
            assert kernel_size % 2 == 1, 'kernel size should be odd'
            self.padding = (kernel_size - 1)//2
            # A single kernel shared by all channels, initialized to the identity (center tap = 1)
            weight_tensor = torch.zeros(1, 1, kernel_size, kernel_size)
            weight_tensor[0, 0, (kernel_size-1)//2, (kernel_size-1)//2] = 1
            self.weight = nn.Parameter(weight_tensor)
            self.kernel_size = kernel_size

        def forward(self, x):
            inc = x.size(1)
            # Expand the shared kernel to every channel and apply it depthwise (groups=inc)
            expand_weight = self.weight.expand(inc, 1, self.kernel_size, self.kernel_size).contiguous()
            return F.conv2d(x, expand_weight,
                            None, 1, self.padding, 1, inc)

    class SmoothDilatedResidualBlock(nn.Module):
        def __init__(self, channel_num, dilation=1, group=1):
            super(SmoothDilatedResidualBlock, self).__init__()
            # Each dilated convolution is preceded by a shared separable convolution of size 2*dilation-1
            self.pre_conv1 = ShareSepConv(dilation*2-1)
            self.conv1 = nn.Conv2d(channel_num, channel_num, 3, 1, padding=dilation, dilation=dilation, groups=group, bias=False)
            self.norm1 = nn.InstanceNorm2d(channel_num, affine=True)
            self.pre_conv2 = ShareSepConv(dilation*2-1)
            self.conv2 = nn.Conv2d(channel_num, channel_num, 3, 1, padding=dilation, dilation=dilation, groups=group, bias=False)
            self.norm2 = nn.InstanceNorm2d(channel_num, affine=True)

        def forward(self, x):
            y = F.relu(self.norm1(self.conv1(self.pre_conv1(x))))
            y = self.norm2(self.conv2(self.pre_conv2(y)))
            # Residual connection
            return F.relu(x+y)
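To see why the pre-convolution helps, the following dependency-free 1D sketch compares a plain dilated convolution with the same convolution preceded by a shared smoothing kernel of size `2 * dilation - 1`, mirroring the structure of `SmoothDilatedResidualBlock`. The helper `conv1d` and the smoothing weights `[0.25, 0.5, 0.25]` are illustrative assumptions, not values from the GCANet code, which initializes the shared kernel to the identity and learns it during training.

```python
def conv1d(signal, kernel, dilation=1):
    """Same-size 1D cross-correlation with zero padding (hypothetical helper)."""
    center = (len(kernel) - 1) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + (k - center) * dilation
            if 0 <= j < len(signal):
                acc += w * signal[j]
        out.append(acc)
    return out


# A single impulse makes the footprint of each operator visible.
impulse = [0.0] * 9
impulse[4] = 1.0
dilation = 2

# Plain dilated convolution: the impulse only reaches outputs of the same
# parity (indices 2, 4, 6), leaving holes in between. This is the gridding effect.
plain = conv1d(impulse, [1.0, 1.0, 1.0], dilation)

# Smoothed variant: a kernel of size 2 * dilation - 1 mixes neighbouring
# samples first, so the holes are filled.
smooth_kernel = [0.25, 0.5, 0.25]  # assumed weights, for illustration only
smoothed = conv1d(conv1d(impulse, smooth_kernel), [1.0, 1.0, 1.0], dilation)

# With the identity initialization used in ShareSepConv, the pre-convolution
# leaves the input unchanged.
identity = conv1d(impulse, [0.0, 1.0, 0.0])
```

Here `plain` has zeros at the odd indices next to the impulse, while `smoothed` is nonzero at every index from 1 to 7.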

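Gated fusion sub-network (excerpt from the network's forward pass):

    # Predict the gate maps from the concatenated features of the three levels
    gates = self.gate(torch.cat((y1, y2, y3), dim=1))
    # Weight each level by its gate; indexing with [k] keeps the channel dimension for broadcasting
    gated_y = y1 * gates[:, [0], :, :] + y2 * gates[:, [1], :, :] + y3 * gates[:, [2], :, :]
    # Decode the fused features
    y = F.relu(self.norm4(self.deconv3(gated_y)))
    y = F.relu(self.norm5(self.deconv2(y)))
    if self.only_residual:
        y = self.deconv1(y)
    else:
        y = F.relu(self.deconv1(y))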
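The gating arithmetic can be sketched without PyTorch as follows. This is a minimal illustration, assuming the gate maps are already given (in the excerpt they come from `self.gate`); the function name `gated_fusion` and the nested-list layout are hypothetical. It shows that one gate value per level and pixel is shared by all channels of that level, which is what the broadcasting in the excerpt achieves.

```python
def gated_fusion(levels, gates):
    """Fuse feature maps using one gate map per level.

    levels: list of K feature maps, each a list of C channels with N pixels.
    gates:  list of K gate maps with N pixels, shared by all channels of a level.
    """
    num_channels = len(levels[0])
    num_pixels = len(levels[0][0])
    fused = [[0.0] * num_pixels for _ in range(num_channels)]
    for feat, gate in zip(levels, gates):
        for c in range(num_channels):
            for n in range(num_pixels):
                fused[c][n] += gate[n] * feat[c][n]
    return fused


# Three levels, two channels, two pixels (toy values).
y1 = [[1.0, 1.0], [2.0, 2.0]]
y2 = [[10.0, 10.0], [20.0, 20.0]]
y3 = [[100.0, 100.0], [200.0, 200.0]]
# Pixel 0 selects level 1, pixel 1 selects level 2, level 3 is half-weighted everywhere.
gates = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
fused = gated_fusion([y1, y2, y3], gates)
```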

2. MSBDN

Multi-Scale Boosted Dehazing Network with Dense Feature Fusion

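**DFF:** The dense feature fusion module simultaneously remedies the spatial information missing from high-resolution features and exploits non-adjacent features.

Contribution: built on U-Net, the network uses feature information across multiple scales.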

    # DFF feature module (encoder side)
    class Encoder_MDCBlock1(torch.nn.Module):
        def __init__(self, num_filter, num_ft, kernel_size=4, stride=2, padding=1, bias=True, activation='prelu', norm=None, mode='iter1'):
            super(Encoder_MDCBlock1, self).__init__()
            self.mode = mode
            self.num_ft = num_ft - 1
            self.up_convs = nn.ModuleList()
            self.down_convs = nn.ModuleList()
            # One up/down pair per scale step between the current level and the earlier levels
            for i in range(self.num_ft):
                self.up_convs.append(
                    DeconvBlock(num_filter//(2**i), num_filter//(2**(i+1)), kernel_size, stride, padding, bias, activation, norm=None)
                )
                self.down_convs.append(
                    ConvBlock(num_filter//(2**(i+1)), num_filter//(2**i), kernel_size, stride, padding, bias, activation, norm=None)
                )

            # ... excerpt from forward(): the 'iter2' branch
            if self.mode == 'iter2':
                ft_fusion = ft_l
                for i in range(len(ft_h_list)):
                    ft = ft_fusion
                    # Upsample the current feature to the resolution of the i-th earlier feature
                    for j in range(self.num_ft - i):
                        ft = self.up_convs[j](ft)
                    # Difference against the earlier, higher-resolution feature
                    ft = ft - ft_h_list[i]
                    # Project the difference back down to the current resolution
                    for j in range(self.num_ft - i):
                        # print(j)
                        ft = self.down_convs[self.num_ft - i - j - 1](ft)
                    # Add the back-projected difference to the fused feature
                    ft_fusion = ft_fusion + ft
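The control flow of the `'iter2'` branch can be sketched on 1D lists as below. This is only an illustration under stated assumptions: `upsample` (sample repetition) and `downsample` (pair averaging) are hypothetical stand-ins for the learned `DeconvBlock` and `ConvBlock` layers, a single operator is reused at every scale step, and the earlier features are assumed to be ordered from highest resolution to lowest, as the loop bounds in the excerpt suggest.

```python
def upsample(x):
    """Double the resolution by repeating each sample (stand-in for DeconvBlock)."""
    return [v for v in x for _ in range(2)]


def downsample(x):
    """Halve the resolution by averaging pairs (stand-in for ConvBlock)."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def dense_feature_fusion(ft_l, ft_h_list):
    """Correct the low-resolution feature ft_l using earlier high-resolution features."""
    ft_fusion = list(ft_l)
    steps = len(ft_h_list)
    for i, ft_h in enumerate(ft_h_list):
        ft = ft_fusion
        # Bring the current feature up to the resolution of the i-th earlier feature.
        for _ in range(steps - i):
            ft = upsample(ft)
        # Difference between the upsampled feature and the earlier one.
        ft = [a - b for a, b in zip(ft, ft_h)]
        # Project the difference back to the current resolution.
        for _ in range(steps - i):
            ft = downsample(ft)
        # Accumulate the back-projected difference.
        ft_fusion = [a + b for a, b in zip(ft_fusion, ft)]
    return ft_fusion


# Earlier features consistent with ft_l produce no correction.
consistent = dense_feature_fusion(
    [1.0, 2.0],
    [[1.0, 1.0, 1.0, 1.0, 2.0, 2.0, 2.0, 2.0], [1.0, 1.0, 2.0, 2.0]],
)
# A mismatch in the earlier feature changes the fused result.
corrected = dense_feature_fusion([1.0, 2.0], [[3.0, 1.0, 2.0, 2.0]])
```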

    # DFF decoder module
    class Decoder_MDCBlock1(torch.nn.Module):
        def __init__(self, num_filter, num_ft, kernel_size=4, stride=2, padding=1, bias=True, activation='prelu', norm=None, mode='iter1'):
            super(Decoder_MDCBlock1, self).__init__()
            self.mode = mode
            self.num_ft = num_ft - 1
            self.down_convs = nn.ModuleList()
            self.up_convs = nn.ModuleList()
            # Mirror of the encoder: channels grow when going down and shrink when going up
            for i in range(self.num_ft):
                self.down_convs.append(
                    ConvBlock(num_filter*(2**i), num_filter*(2**(i+1)), kernel_size, stride, padding, bias, activation, norm=None)
                )
                self.up_convs.append(
                    DeconvBlock(num_filter*(2**(i+1)), num_filter*(2**i), kernel_size, stride, padding, bias, activation, norm=None)
                )

            # ... excerpt from forward(): the 'iter2' branch
            if self.mode == 'iter2':
                ft_fusion = ft_h
                for i in range(len(ft_l_list)):
                    ft = ft_fusion
                    # Downsample the current feature to the resolution of the i-th earlier feature
                    for j in range(self.num_ft - i):
                        ft = self.down_convs[j](ft)
                    # Difference against the earlier, lower-resolution feature
                    ft = ft - ft_l_list[i]
                    # Project the difference back up to the current resolution
                    for j in range(self.num_ft - i):
                        ft = self.up_convs[self.num_ft - i - j - 1](ft)
                    # Add the back-projected difference to the fused feature
                    ft_fusion = ft_fusion + ft

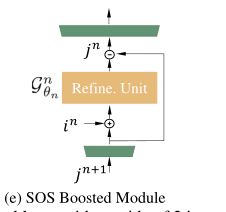

    # SOS (Strengthen-Operate-Subtract) boosting
    # In forward():
    res8x = self.dense_4(res8x) + res8x - res16x

    # In __init__():
    self.dense_4 = nn.Sequential(
        ResidualBlock(128),
        ResidualBlock(128),
        ResidualBlock(128)
    )
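The SOS line above can be read as "strengthen, operate, subtract". The sketch below illustrates that reading on plain lists. It assumes, which the excerpt does not show, that `res8x` on the right-hand side already holds the sum of the skip feature and the upsampled `res16x`; the names `sos_boost`, `skip`, `coarse_up`, and `refine` are hypothetical, and `refine` stands in for `self.dense_4(x) + x`.

```python
def sos_boost(skip, coarse_up, refine):
    """One SOS-style step: refine(skip + coarse_up) - coarse_up."""
    # Strengthen: add the upsampled coarse estimate to the skip feature.
    strengthened = [s + c for s, c in zip(skip, coarse_up)]
    # Operate: apply the refinement unit.
    refined = refine(strengthened)
    # Subtract: remove the coarse estimate again.
    return [r - c for r, c in zip(refined, coarse_up)]


skip = [1.0, 2.0]
coarse_up = [0.5, 0.5]

# With an identity refinement the step returns the skip feature unchanged.
unchanged = sos_boost(skip, coarse_up, lambda x: list(x))
# A toy refinement that doubles its input.
boosted = sos_boost(skip, coarse_up, lambda x: [2.0 * v for v in x])
```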

3. 4kDehazing

Ultra-High-Definition Image Dehazing via Multi-Guided Bilateral Learning

    def forward(self, x):
        # Two low-resolution copies of the input
        x_u = F.interpolate(x, (320, 320), mode='bicubic', align_corners=True)
        x_r = F.interpolate(x, (256, 256), mode='bicubic', align_corners=True)
        # Bilateral grid of affine coefficients: 12 coefficients per cell of a 16x16x16 grid
        coeff = self.downsample(self.u_net(x_r)).reshape(-1, 12, 16, 16, 16)

        # One guidance map per color channel of the full-resolution input
        guidance_r = self.guide_r(x[:, 0:1, :, :])
        guidance_g = self.guide_g(x[:, 1:2, :, :])
        guidance_b = self.guide_b(x[:, 2:3, :, :])

        # Slice the grid with each guidance map to get per-pixel coefficients
        slice_coeffs_r = self.slice(coeff, guidance_r)
        slice_coeffs_g = self.slice(coeff, guidance_g)
        slice_coeffs_b = self.slice(coeff, guidance_b)

        # Features from the small U-Net, resized back to the input resolution
        x_u = self.u_net_mini(x_u)
        x_u = F.interpolate(x_u, (x.shape[2], x.shape[3]), mode='bicubic', align_corners=True)

        # Apply the sliced coefficients to each branch
        output_r = self.apply_coeffs(slice_coeffs_r, self.p(self.r_point(x_u)))
        output_g = self.apply_coeffs(slice_coeffs_g, self.p(self.g_point(x_u)))
        output_b = self.apply_coeffs(slice_coeffs_b, self.p(self.b_point(x_u)))

        # Fuse the three branches and form the final output
        output = torch.cat((output_r, output_g, output_b), dim=1)
        output = self.fusion(output)
        output = self.p(self.x_r_fusion(output) * x - output + 1)
        return output

    # Process the three channels separately
    class ApplyCoeffs(nn.Module):
        def __init__(self):
            super(ApplyCoeffs, self).__init__()
            self.degree = 3

        def forward(self, coeff, full_res_input):
            # coeff holds a per-pixel 3x4 affine transform: three weights plus one offset per output channel
            R = torch.sum(full_res_input * coeff[:, 0:3, :, :], dim=1, keepdim=True) + coeff[:, 3:4, :, :]
            G = torch.sum(full_res_input * coeff[:, 4:7, :, :], dim=1, keepdim=True) + coeff[:, 7:8, :, :]
            B = torch.sum(full_res_input * coeff[:, 8:11, :, :], dim=1, keepdim=True) + coeff[:, 11:12, :, :]
            result = torch.cat([R, G, B], dim=1)
            return result
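For a single pixel, `ApplyCoeffs.forward` amounts to a 3x4 affine transform of the color vector. The sketch below spells this out in plain Python; the function name `apply_affine` and the toy coefficients are hypothetical, and the coefficient layout (three weights followed by one offset per output channel) is read off the slicing indices in the code above.

```python
def apply_affine(coeff, rgb):
    """Apply a per-pixel 3x4 affine transform.

    coeff: 12 numbers laid out as [w_r0, w_r1, w_r2, b_r, w_g0, ..., b_b].
    rgb:   the 3 input channel values of one pixel.
    """
    out = []
    for row in range(3):
        weights = coeff[4 * row: 4 * row + 3]
        offset = coeff[4 * row + 3]
        out.append(sum(w * v for w, v in zip(weights, rgb)) + offset)
    return out


pixel = [0.25, 0.5, 0.75]

# Identity weights and zero offsets leave the pixel unchanged.
identity = [1.0, 0.0, 0.0, 0.0,
            0.0, 1.0, 0.0, 0.0,
            0.0, 0.0, 1.0, 0.0]
same = apply_affine(identity, pixel)

# Toy coefficients: gain and offset on R, pass-through G, channel mixing on B.
toy = [2.0, 0.0, 0.0, 0.125,
       0.0, 1.0, 0.0, 0.0,
       0.5, 0.5, 0.0, -0.25]
transformed = apply_affine(toy, pixel)
```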

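Supplement:

Bilateral filtering is slow, so it is accelerated with the bilateral grid.

First, to be clear: the bilateral grid is essentially a data structure.

Taking a single-channel grayscale image as an example, the bilateral grid combines the image's 2D spatial information with its 1D intensity information, so it can be viewed as a 3D array.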

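A simple example: suppose you are holding a brush used for filtering/smoothing. When you click the brush at position $(x, y)$ on the image $E$, a point appears at position $(x, y, E(x, y))$ in the 3D bilateral grid, and this point corresponds to the one you clicked on the 2D image $E$. As the brush moves, all three dimensions of the 3D bilateral space are Gaussian-smoothed. In flat regions, where the intensity changes little, smoothing along the 2D plane is equivalent to Gaussian filtering the image. In edge regions, where the intensity changes sharply, the limited range of the brush's Gaussian falloff ensures that values on the other side of the edge are not affected, so the edge is preserved.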

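This operation of clicking a point on the image and projecting it into the 3D bilateral space is called splat.

The brush has now passed over the 3D bilateral space once, so how is the filtered image reconstructed? By interpolating in the bilateral space, an operation called slice.

To summarize: bilateral filtering can be understood as filtering in the spatial domain with weights that also take color-domain (range) information into account. Computing it directly from the bilateral weight formula is often frustratingly slow, which motivated simulating bilateral filtering on a bilateral grid: working in 3D both accounts for color-domain information and speeds up the computation.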
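As a point of reference, a direct bilateral filter on a 1D signal can be sketched as follows. This is a minimal illustration, not code from any of the papers above; the function name and the parameters `sigma_s`, `sigma_r`, and `radius` are assumptions. Every output sample requires a full pass over its neighbourhood with two exponentials per neighbour, which is the cost the bilateral grid is meant to avoid.

```python
import math


def bilateral_filter_1d(signal, sigma_s, sigma_r, radius):
    """Brute-force bilateral filter: weights combine spatial and intensity distance."""
    out = []
    for i, center in enumerate(signal):
        num = 0.0
        den = 0.0
        for j in range(max(0, i - radius), min(len(signal), i + radius + 1)):
            # Spatial weight: decays with distance between positions.
            w_space = math.exp(-((i - j) ** 2) / (2 * sigma_s ** 2))
            # Range weight: decays with difference between intensities.
            w_range = math.exp(-((signal[j] - center) ** 2) / (2 * sigma_r ** 2))
            num += w_space * w_range * signal[j]
            den += w_space * w_range
        out.append(num / den)
    return out


# A step edge: the range weight keeps the two sides from mixing.
step = [0.0] * 5 + [1.0] * 5
filtered_step = bilateral_filter_1d(step, sigma_s=1.0, sigma_r=0.1, radius=2)

# A constant signal is left unchanged up to rounding.
flat = bilateral_filter_1d([0.5] * 6, sigma_s=1.0, sigma_r=0.1, radius=2)
```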

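The fast bilateral filtering process can now be summarized as splat/blur/slice: sample the image and project the samples onto a 3D grid, filter in 3D, then interpolate the value at each $(x, y)$ to reconstruct the filtered image.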
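The three steps can be sketched for a 1D signal, where the grid has one spatial axis and one intensity axis. This is a simplified illustration under stated assumptions: values lie in [0, 1], splatting uses the nearest cell, the blur is a fixed `[1, 2, 1] / 4` kernel instead of a Gaussian, and the names `bilateral_grid_filter`, `blur`, `interp`, `s_space`, and `s_range` are hypothetical. A sample count is stored alongside the intensities so that the sliced result can be normalized.

```python
def blur(grid):
    """Blur a 2D grid with a separable [1, 2, 1] / 4 kernel (zero padding)."""
    nx, nz = len(grid), len(grid[0])

    def at(g, i, j):
        return g[i][j] if 0 <= i < nx and 0 <= j < nz else 0.0

    along_x = [[(at(grid, i - 1, j) + 2 * at(grid, i, j) + at(grid, i + 1, j)) / 4
                for j in range(nz)] for i in range(nx)]
    return [[(at(along_x, i, j - 1) + 2 * at(along_x, i, j) + at(along_x, i, j + 1)) / 4
             for j in range(nz)] for i in range(nx)]


def interp(grid, fx, fz):
    """Bilinearly interpolate the grid at the fractional position (fx, fz)."""
    x0, z0 = int(fx), int(fz)
    tx, tz = fx - x0, fz - z0
    x1 = min(x0 + 1, len(grid) - 1)
    z1 = min(z0 + 1, len(grid[0]) - 1)
    return ((1 - tx) * (1 - tz) * grid[x0][z0] + tx * (1 - tz) * grid[x1][z0]
            + (1 - tx) * tz * grid[x0][z1] + tx * tz * grid[x1][z1])


def bilateral_grid_filter(signal, s_space=2, s_range=0.25):
    """Approximate bilateral filtering of a 1D signal with values in [0, 1]."""
    nx = (len(signal) - 1) // s_space + 2        # cells along the spatial axis
    nz = int(1.0 / s_range) + 2                  # cells along the intensity axis
    value = [[0.0] * nz for _ in range(nx)]      # accumulated intensities
    weight = [[0.0] * nz for _ in range(nx)]     # accumulated sample counts

    # Splat: drop every sample into its nearest grid cell.
    for x, v in enumerate(signal):
        gx, gz = round(x / s_space), round(v / s_range)
        value[gx][gz] += v
        weight[gx][gz] += 1.0

    # Blur: smooth the grid along both the spatial and the intensity axis.
    value, weight = blur(value), blur(weight)

    # Slice: read the grid back at each sample's own (position, intensity).
    out = []
    for x, v in enumerate(signal):
        num = interp(value, x / s_space, v / s_range)
        den = interp(weight, x / s_space, v / s_range)
        out.append(num / den)
    return out


# A noisy step edge: each side is smoothed, the edge itself is kept.
noisy_step = [0.0, 0.1, 0.0, 0.1, 0.0, 0.1, 1.0, 0.9, 1.0, 0.9, 1.0, 0.9]
result = bilateral_grid_filter(noisy_step)
```

Because the two sides of the edge land in intensity bins that are far apart, the blur never mixes them, which is the grid-based counterpart of the range weight in the direct filter.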