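Goal: deploy a Kubernetes cluster with kubeadm

Environment: CentOS 7

Steps: basic environment configuration -> pre-installation setup for Kubernetes (package repository, images and related configuration) -> deploy the master with kubeadm -> enable the flannel-based Pod network -> join the nodes with kubeadm -> install and use the dashboard -> install and use heapster monitoring -> access test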

1. Basic environment configuration

Perform the basic environment configuration on the master and on every node.

(1) Network settings

Enable IPv4 forwarding:

vim /etc/sysctl.d/k8s.conf: add the line net.ipv4.ip_forward = 1

sysctl -p /etc/sysctl.d/k8s.conf

Bridge netfilter settings on CentOS 7, so that bridged traffic is passed to iptables:

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo 1 > /proc/sys/net/bridge/bridge-nf-call-ip6tables
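Values written with echo into /proc last only until the next reboot. The following is a minimal sketch of making all three settings persistent. It assumes that the bridge-nf-call sysctls are provided by the br_netfilter module, which on CentOS 7 often has to be loaded explicitly. The function names write_k8s_sysctl and apply_k8s_sysctl are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the kernel settings this guide needs into a
# sysctl drop-in file (default /etc/sysctl.d/k8s.conf).
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
}

# Hypothetical helper (run as root): load br_netfilter now and at every boot
# (via systemd's modules-load.d), then write and apply the settings.
apply_k8s_sysctl() {
  modprobe br_netfilter
  echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
  write_k8s_sysctl /etc/sysctl.d/k8s.conf
  sysctl -p /etc/sysctl.d/k8s.conf
}
```

To use it, source the file as root and call apply_k8s_sysctl. This replaces both the manual edit of k8s.conf and the two echo commands.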

(2) Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

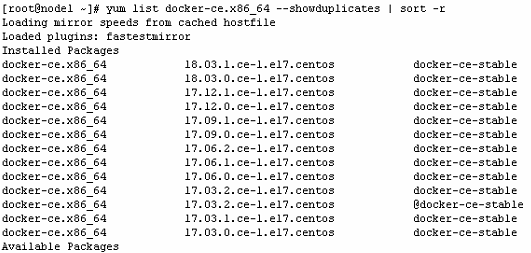

List the available Docker versions:

yum list docker-ce.x86_64 --showduplicates | sort -r

This article uses Kubernetes 1.10, so it installs Docker 17.03.2, a version validated for that release.

yum makecache fast

yum install -y --setopt=obsoletes=0 docker-ce-17.03.2.ce-1.el7.centos

Start Docker:

systemctl enable docker

systemctl start docker

Since Docker 1.13, the daemon sets the default policy of the iptables FORWARD chain to DROP, so set it back to ACCEPT:

iptables -P FORWARD ACCEPT
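A policy set with iptables -P does not survive a reboot. Depending on the Docker version, the daemon may also reset it to DROP when it restarts. One common workaround, sketched below under that assumption, is a systemd drop-in for docker.service that re-runs the command after Docker has started. The drop-in file name forward-accept.conf and both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create a docker.service drop-in whose ExecStartPost
# resets the FORWARD policy to ACCEPT each time the daemon has started.
write_docker_forward_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  cat > "$dir/forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}

# Hypothetical helper (run as root): install the drop-in and restart Docker.
enable_forward_accept() {
  write_docker_forward_dropin
  systemctl daemon-reload
  systemctl restart docker
}
```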

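2. Pre-installation setup for Kubernetes (repository, images and related configuration)

Configure the package repository, using the Aliyun mirror in China: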

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

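Pull the Docker images. By default kubeadm pulls from Google's registry (k8s.gcr.io), which is generally unreachable from within China. Instead, pull mirrored copies (the cnych/* repositories on Docker Hub) and retag them with the names kubeadm expects.

(1) Master node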

vim k8s.sh

docker pull cnych/kube-apiserver-amd64:v1.10.0
docker pull cnych/kube-scheduler-amd64:v1.10.0
docker pull cnych/kube-controller-manager-amd64:v1.10.0
docker pull cnych/kube-proxy-amd64:v1.10.0
docker pull cnych/k8s-dns-kube-dns-amd64:1.14.8
docker pull cnych/k8s-dns-dnsmasq-nanny-amd64:1.14.8
docker pull cnych/k8s-dns-sidecar-amd64:1.14.8
docker pull cnych/etcd-amd64:3.1.12
docker pull cnych/flannel:v0.10.0-amd64
docker pull cnych/pause-amd64:3.1
docker tag cnych/kube-apiserver-amd64:v1.10.0 k8s.gcr.io/kube-apiserver-amd64:v1.10.0
docker tag cnych/kube-scheduler-amd64:v1.10.0 k8s.gcr.io/kube-scheduler-amd64:v1.10.0
docker tag cnych/kube-controller-manager-amd64:v1.10.0 k8s.gcr.io/kube-controller-manager-amd64:v1.10.0
docker tag cnych/kube-proxy-amd64:v1.10.0 k8s.gcr.io/kube-proxy-amd64:v1.10.0
docker tag cnych/k8s-dns-kube-dns-amd64:1.14.8 k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.8
docker tag cnych/k8s-dns-dnsmasq-nanny-amd64:1.14.8 k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.8
docker tag cnych/k8s-dns-sidecar-amd64:1.14.8 k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.8
docker tag cnych/etcd-amd64:3.1.12 k8s.gcr.io/etcd-amd64:3.1.12
docker tag cnych/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64
docker tag cnych/pause-amd64:3.1 k8s.gcr.io/pause-amd64:3.1

Run chmod +x k8s.sh, then execute ./k8s.sh.
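k8s.sh repeats one pull-and-tag pair per image. The sketch below expresses the same procedure as a bash loop. It assumes, as holds for every image in this guide, that each mirror name is the upstream name under the cnych/ prefix, and that flannel is the only image retagged under quay.io/coreos rather than k8s.gcr.io. The names MIRROR, upstream_name, mirror_images, MASTER_IMAGES and NODE_IMAGES are hypothetical.

```bash
#!/usr/bin/env bash
MIRROR=cnych   # Docker Hub namespace holding the mirrored images

# Print the upstream name kubeadm and the manifests expect for an image.
upstream_name() {
  case "$1" in
    flannel:*) echo "quay.io/coreos/$1" ;;
    *)         echo "k8s.gcr.io/$1" ;;
  esac
}

# Pull each image from the mirror and retag it with its upstream name;
# stop at the first failure so a partially tagged set is easy to spot.
mirror_images() {
  local img
  for img in "$@"; do
    docker pull "$MIRROR/$img" || return 1
    docker tag "$MIRROR/$img" "$(upstream_name "$img")" || return 1
  done
}

MASTER_IMAGES=(
  kube-apiserver-amd64:v1.10.0
  kube-scheduler-amd64:v1.10.0
  kube-controller-manager-amd64:v1.10.0
  kube-proxy-amd64:v1.10.0
  k8s-dns-kube-dns-amd64:1.14.8
  k8s-dns-dnsmasq-nanny-amd64:1.14.8
  k8s-dns-sidecar-amd64:1.14.8
  etcd-amd64:3.1.12
  flannel:v0.10.0-amd64
  pause-amd64:3.1
)

NODE_IMAGES=(
  kube-proxy-amd64:v1.10.0
  flannel:v0.10.0-amd64
  pause-amd64:3.1
  kubernetes-dashboard-amd64:v1.8.3
  heapster-influxdb-amd64:v1.3.3
  heapster-grafana-amd64:v4.4.3
  heapster-amd64:v1.4.2
  k8s-dns-kube-dns-amd64:1.14.8
  k8s-dns-dnsmasq-nanny-amd64:1.14.8
  k8s-dns-sidecar-amd64:1.14.8
)
```

To use it, source the file and run mirror_images "${MASTER_IMAGES[@]}" on the master, or mirror_images "${NODE_IMAGES[@]}" on a node.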

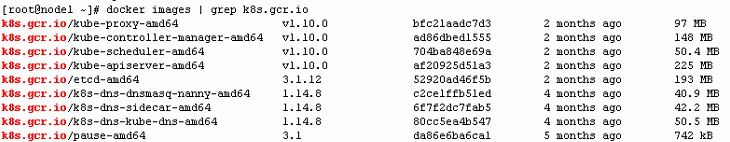

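Check the local images with docker images:

Install the packages. Pin them to the same version as the images pulled above, and enable kubelet so that it starts at boot:

yum install -y kubelet-1.10.0 kubeadm-1.10.0 kubectl-1.10.0

systemctl enable kubelet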

Configure kubelet. First, check which cgroup driver Docker uses:

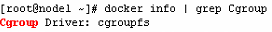

docker info | grep Cgroup

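Edit the kubelet systemd drop-in installed by the kubeadm package: vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

Change the cgroup driver to cgroupfs so that it matches Docker's: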

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"

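Swap: kubelet refuses to start while swap is enabled. The following line lets it run anyway. It does not turn swap off; to do that, run swapoff -a and comment out the swap entry in /etc/fstab.

Environment="KUBELET_EXTRA_ARGS=--fail-swap-on=false"

After saving the file, reload systemd: systemctl daemon-reload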
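The drop-in edits above can also be scripted. The sketch below makes three assumptions, so check your copy of 10-kubeadm.conf first:

- The file shipped with the 1.10 packages contains a KUBELET_CGROUP_ARGS line with --cgroup-driver=systemd.
- Its ExecStart line already references $KUBELET_EXTRA_ARGS.
- GNU sed is available.

All function names are hypothetical.

```bash
#!/usr/bin/env bash
DROPIN=/etc/systemd/system/kubelet.service.d/10-kubeadm.conf

# Extract the value of "Cgroup Driver:" from `docker info` output on stdin.
cgroup_driver_from_info() {
  awk -F': *' '/Cgroup Driver/ {print $2; exit}'
}

# Set --cgroup-driver to the given value and, unless the file already
# defines KUBELET_EXTRA_ARGS, add it with --fail-swap-on=false.
patch_kubelet_dropin() {
  local driver="$1" file="${2:-$DROPIN}"
  sed -i "s/--cgroup-driver=[a-z]*/--cgroup-driver=${driver}/" "$file"
  grep -q 'KUBELET_EXTRA_ARGS=' "$file" ||
    sed -i '/KUBELET_CGROUP_ARGS=/a Environment="KUBELET_EXTRA_ARGS=--fail-swap-on=false"' "$file"
}

# Full run (as root): read Docker's driver, patch the drop-in, reload systemd.
configure_kubelet() {
  local driver
  driver=$(docker info 2>/dev/null | cgroup_driver_from_info)
  [ -n "$driver" ] || { echo "could not read Docker's cgroup driver" >&2; return 1; }
  patch_kubelet_dropin "$driver" "$DROPIN"
  systemctl daemon-reload
}
```

Reading the driver from docker info, rather than hard-coding cgroupfs, keeps kubelet matched to Docker even if Docker was configured differently.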

(2) Nodes

vim k8s-node.sh

docker pull cnych/kube-proxy-amd64:v1.10.0
docker pull cnych/flannel:v0.10.0-amd64
docker pull cnych/pause-amd64:3.1
docker pull cnych/kubernetes-dashboard-amd64:v1.8.3
docker pull cnych/heapster-influxdb-amd64:v1.3.3
docker pull cnych/heapster-grafana-amd64:v4.4.3
docker pull cnych/heapster-amd64:v1.4.2
docker pull cnych/k8s-dns-kube-dns-amd64:1.14.8
docker pull cnych/k8s-dns-dnsmasq-nanny-amd64:1.14.8
docker pull cnych/k8s-dns-sidecar-amd64:1.14.8
docker tag cnych/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64
docker tag cnych/pause-amd64:3.1 k8s.gcr.io/pause-amd64:3.1
docker tag cnych/kube-proxy-amd64:v1.10.0 k8s.gcr.io/kube-proxy-amd64:v1.10.0
docker tag cnych/kubernetes-dashboard-amd64:v1.8.3 k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3
docker tag cnych/heapster-influxdb-amd64:v1.3.3 k8s.gcr.io/heapster-influxdb-amd64:v1.3.3
docker tag cnych/heapster-grafana-amd64:v4.4.3 k8s.gcr.io/heapster-grafana-amd64:v4.4.3
docker tag cnych/heapster-amd64:v1.4.2 k8s.gcr.io/heapster-amd64:v1.4.2
docker tag cnych/k8s-dns-kube-dns-amd64:1.14.8 k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.8
docker tag cnych/k8s-dns-dnsmasq-nanny-amd64:1.14.8 k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.8
docker tag cnych/k8s-dns-sidecar-amd64:1.14.8 k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.8

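This script also pulls the dashboard and heapster images.

The rest of the procedure (running the script, installing the packages and configuring kubelet) is the same as on the master node.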

3. Deploy the master with kubeadm

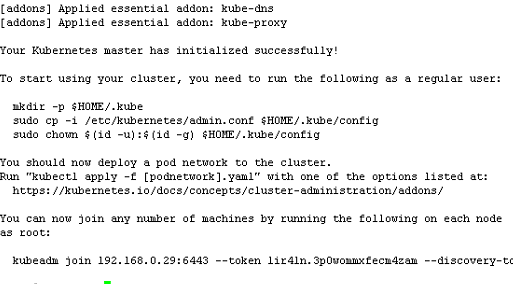

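Run the initialization command on the master node:

kubeadm init --kubernetes-version=v1.10.0 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.0.29

--kubernetes-version must match the tags of the images pulled earlier (v1.10.0). Otherwise the control-plane images are pulled from k8s.gcr.io, which this guide is avoiding. 10.244.0.0/16 is the Pod network that flannel's manifest uses by default, and 192.168.0.29 is the master's IP address.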

Then run mkdir -p $HOME/.kube and the other commands given at the end of the kubeadm init output.
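For reference, the commands that kubeadm init prints for this step copy the admin kubeconfig into the current user's home directory so that kubectl can reach the cluster. Below they are wrapped in a hypothetical function, setup_kubeconfig, whose source and target paths can be overridden. The defaults are the standard kubeadm locations. sudo is dropped because this guide runs as root.

```bash
#!/usr/bin/env bash
# Copy the cluster-admin kubeconfig written by kubeadm init to ~/.kube/config
# and make it owned by the current user.
setup_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}" dir="${2:-$HOME/.kube}"
  mkdir -p "$dir"
  cp -i "$src" "$dir/config"
  chown "$(id -u):$(id -g)" "$dir/config"
}
```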

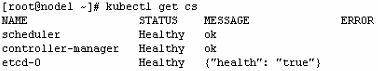

Check the status of the cluster components with kubectl get cs:

4. Enable the flannel-based Pod network

Download the manifest: wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
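Because the manifest is fetched from the master branch, it can drift from the v0.10.0-amd64 image pulled earlier. The hypothetical check below is meant to run before applying the manifest. It prints the distinct images the file references and fails unless the locally pulled flannel image is among them.

```bash
#!/usr/bin/env bash
# List the images referenced by a manifest and succeed only if the expected
# (locally pulled) flannel image is one of them.
check_flannel_image() {
  local manifest="$1" expected="${2:-quay.io/coreos/flannel:v0.10.0-amd64}"
  grep -o 'image: *[^[:space:]]*' "$manifest" | awk '{print $2}' | sort -u
  grep -qF "$expected" "$manifest"
}
```

To use it, run check_flannel_image kube-flannel.yml. If it fails, either fetch a manifest that matches v0.10.0 or pull the image tag the manifest names.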

Start flannel: kubectl apply -f kube-flannel.yml

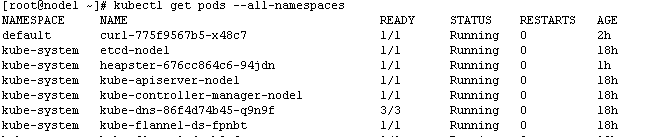

Check the status with kubectl get pods --all-namespaces:
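Pods can take a minute or two to reach Running after flannel is applied. Instead of re-running the command by hand, you can poll it with the small sketch below. It assumes the default column order of kubectl get pods --all-namespaces (NAMESPACE, NAME, READY, STATUS, ...). It would also flag pods in a Completed state, of which this setup has none. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Read `kubectl get pods --all-namespaces --no-headers` output on stdin and
# print "namespace/name status" for every pod whose STATUS is not Running.
pods_not_running() {
  awk '$4 != "Running" {print $1 "/" $2, $4}'
}

# Poll until every pod is Running: up to N attempts (default 30), 10 s apart.
wait_for_pods() {
  local tries="${1:-30}" out pending
  while [ "$tries" -gt 0 ]; do
    out=$(kubectl get pods --all-namespaces --no-headers 2>/dev/null)
    pending=$(printf '%s\n' "$out" | pods_not_running)
    if [ -n "$out" ] && [ -z "$pending" ]; then
      return 0
    fi
    tries=$((tries - 1))
    [ "$tries" -gt 0 ] && sleep 10
  done
  echo "still not running: ${pending:-no pods listed}" >&2
  return 1
}
```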

5. Join the nodes with kubeadm

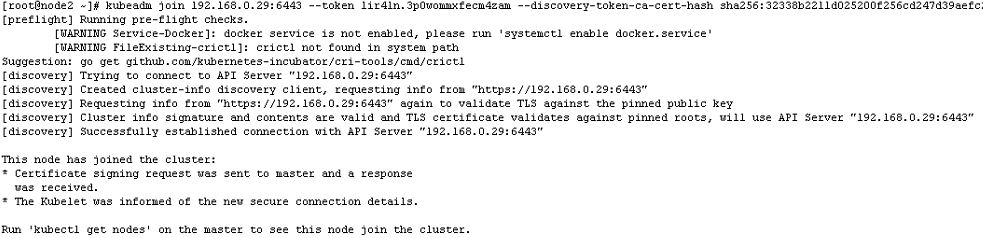

On each node, run the join command printed at the end of kubeadm init (the token and hash are masked here):

kubeadm join 192.168.0.29:6443 --token xxxx --discovery-token-ca-cert-hash xxxx
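If the join command has been lost, you can recreate both values on the master:

- **Hash:** recompute it from the cluster CA certificate with the openssl pipeline given in the kubeadm documentation. It is wrapped below in a hypothetical function, ca_cert_hash.
- **Token:** kubeadm token list shows existing tokens, and kubeadm token create makes a new one. Tokens expire after 24 hours by default.

```bash
#!/usr/bin/env bash
# Print the value for --discovery-token-ca-cert-hash: "sha256:" followed by
# the SHA-256 digest of the CA certificate's DER-encoded public key.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}" hex
  hex=$(openssl x509 -pubkey -in "$ca" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:$hex"
}
```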

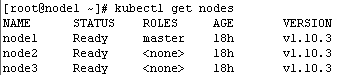

On the master node, run:

kubectl get nodes
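A newly joined node shows NotReady until its flannel pod is running. The hypothetical one-shot check below succeeds only when every node's STATUS column reads exactly Ready. It assumes the default kubectl get nodes columns (NAME, STATUS, ...), so a status such as Ready,SchedulingDisabled counts as not ready.

```bash
#!/usr/bin/env bash
# Read `kubectl get nodes --no-headers` output on stdin and print every node
# whose STATUS column is not exactly "Ready".
nodes_not_ready() {
  awk '$2 != "Ready" {print $1, $2}'
}

# Succeed only if kubectl lists at least one node and all of them are Ready.
all_nodes_ready() {
  local out
  out=$(kubectl get nodes --no-headers) || return 1
  [ -n "$out" ] && [ -z "$(printf '%s\n' "$out" | nodes_not_ready)" ]
}
```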

6. Installing and using the dashboard

Install it on the master node: kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

Create a configuration file for an admin account, kubernetes-dashboard-admin.rbac.yaml:

---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system

Apply it: kubectl create -f kubernetes-dashboard-admin.rbac.yaml

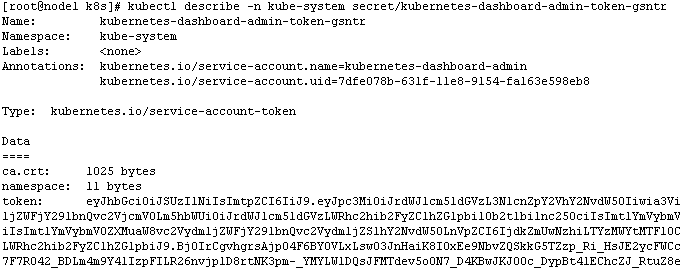

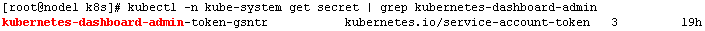

Look up the token of kubernetes-dashboard-admin:

kubectl -n kube-system get secret | grep kubernetes-dashboard-admin

kubectl describe -n kube-system secret/kubernetes-dashboard-admin-token-gsntr

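The token field in the output is the token used to log in to the dashboard. The suffix of the secret name (gsntr here) is random, so use the name returned by the previous command.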
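Because the secret name carries a random suffix, the two commands above can be combined into one, as in the sketch below. The function names are hypothetical. The token is read with kubectl's jsonpath output and decoded with base64 -d, which assumes GNU coreutils as shipped with CentOS.

```bash
#!/usr/bin/env bash
# Pick the kubernetes-dashboard-admin token secret from
# `kubectl -n kube-system get secret` output on stdin.
dashboard_secret_name() {
  awk '$1 ~ /^kubernetes-dashboard-admin-token-/ {print $1; exit}'
}

# Print the decoded login token of the dashboard admin account.
dashboard_token() {
  local name
  name=$(kubectl -n kube-system get secret | dashboard_secret_name)
  [ -n "$name" ] || { echo "dashboard admin secret not found" >&2; return 1; }
  kubectl -n kube-system get secret "$name" -o jsonpath='{.data.token}' | base64 -d
  echo
}
```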

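Switch the existing dashboard Service to NodePort access by changing its type to NodePort. The listing below already shows type: NodePort and the nodePort that Kubernetes assigned.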

kubectl -n kube-system edit service kubernetes-dashboard

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: 2018-05-29T09:09:30Z
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
  resourceVersion: "2647"
  selfLink: /api/v1/namespaces/kube-system/services/kubernetes-dashboard
  uid: fe971993-631f-11e8-9154-fa163e598eb8
spec:
  clusterIP: 10.101.128.69
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 30269
    port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}
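The same change can be made without an interactive editor by using kubectl patch with a strategic-merge patch that sets only spec.type. This is handy in scripts. The wrapper name below is hypothetical.

```bash
#!/usr/bin/env bash
# Switch the dashboard Service to NodePort; Kubernetes assigns the port.
expose_dashboard_nodeport() {
  kubectl -n kube-system patch service kubernetes-dashboard \
    -p '{"spec":{"type":"NodePort"}}'
}
```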

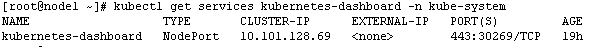

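Check the assigned NodePort (30269 in this example) with kubectl -n kube-system get service kubernetes-dashboard: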
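The port lookup can be combined with a node address to print the dashboard URL directly. The sketch below uses kubectl jsonpath queries and takes the InternalIP of the first node listed. Any node works because kube-proxy opens the NodePort on every node. dashboard_url is a hypothetical name.

```bash
#!/usr/bin/env bash
# Print https://<first node's InternalIP>:<dashboard nodePort>.
dashboard_url() {
  local port ip
  port=$(kubectl -n kube-system get service kubernetes-dashboard \
           -o jsonpath='{.spec.ports[0].nodePort}')
  ip=$(kubectl get nodes \
         -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')
  [ -n "$port" ] && [ -n "$ip" ] || return 1
  echo "https://${ip}:${port}"
}
```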

7. Installing and using heapster monitoring

Download the heapster manifests on the master node:

mkdir -p ~/k8s/heapster

cd ~/k8s/heapster

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/grafana.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/heapster.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/influxdb.yaml

Edit heapster.yaml and change the heapster image version to v1.4.2, the version pulled below:
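One way to make that edit with GNU sed is sketched below. It assumes the manifest's image line has the form k8s.gcr.io/heapster-amd64:v&lt;version&gt;, so run grep image heapster.yaml first to confirm. set_heapster_version is a hypothetical name.

```bash
#!/usr/bin/env bash
# Rewrite the heapster image tag in the given manifest, then confirm that
# the new tag is present (non-zero exit status if it is not).
set_heapster_version() {
  local file="${1:-heapster.yaml}" version="${2:-v1.4.2}"
  sed -i -E "s#(heapster-amd64:)v[0-9][0-9.]*#\1${version}#" "$file"
  grep -q "heapster-amd64:${version}" "$file"
}
```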

vim k8s-heapster.sh

docker pull cnych/heapster-influxdb-amd64:v1.3.3
docker pull cnych/heapster-grafana-amd64:v4.4.3
docker pull cnych/heapster-amd64:v1.4.2
docker tag cnych/heapster-influxdb-amd64:v1.3.3 k8s.gcr.io/heapster-influxdb-amd64:v1.3.3
docker tag cnych/heapster-grafana-amd64:v4.4.3 k8s.gcr.io/heapster-grafana-amd64:v4.4.3
docker tag cnych/heapster-amd64:v1.4.2 k8s.gcr.io/heapster-amd64:v1.4.2

Run k8s-heapster.sh to pull the images.

Enable heapster:

cd ~/k8s/heapster/

kubectl create -f ./

8. Access test

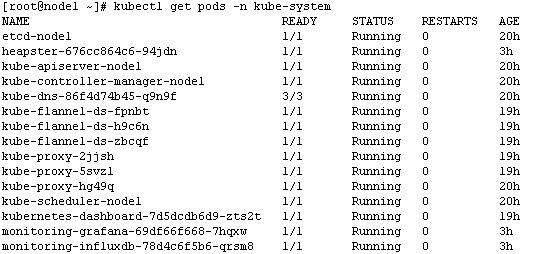

Check that all pods in kube-system are running:

kubectl get pods -n kube-system

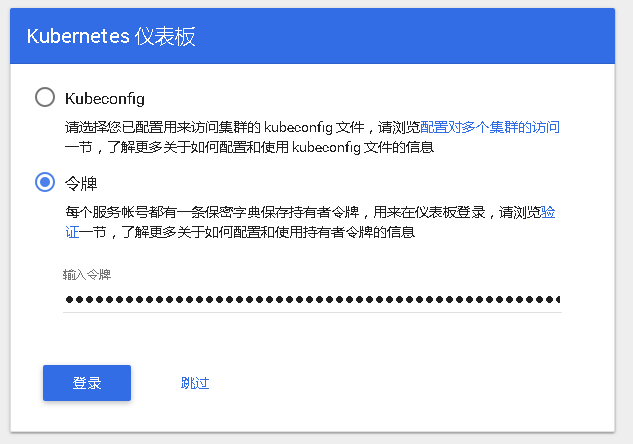

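Open the dashboard at https://NodeIP:30269. Replace NodeIP with the IP of any node; 30269 is the NodePort assigned above.

Choose Token and log in: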

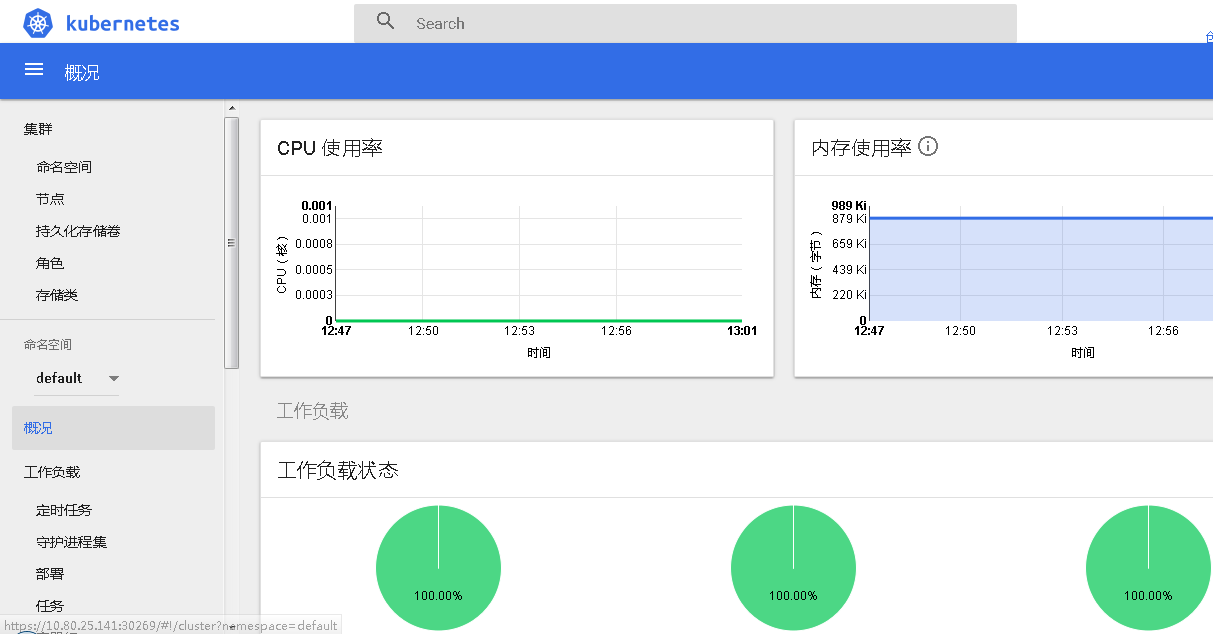

Main page: