4.4 Install Docker

Install docker-ce on the master and node hosts:

[root@k8s-master01 ~]# cat install_docker.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2021-12-07

#FileName: install_docker.sh

#URL: raymond.blog.csdn.net

#Description: install_docker for centos 7/8 & ubuntu 18.04/20.04 Rocky 8

#Copyright (C): 2021 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

DOCKER_VERSION=20.10.17

URL='mirrors.cloud.tencent.com'

HARBOR_DOMAIN=harbor.raymonds.cc

os(){

OS_ID=`sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' /etc/os-release`

}

ubuntu_install_docker(){

dpkg -s docker-ce &>/dev/null && ${COLOR}"Docker is already installed, exiting"${END} && exit

${COLOR}"Installing Docker dependencies"${END}

apt update &> /dev/null

apt -y install apt-transport-https ca-certificates curl software-properties-common &> /dev/null

curl -fsSL https://${URL}/docker-ce/linux/ubuntu/gpg | sudo apt-key add - &> /dev/null

add-apt-repository "deb [arch=amd64] https://${URL}/docker-ce/linux/ubuntu $(lsb_release -cs) stable" &> /dev/null

apt update &> /dev/null

${COLOR}"Available Docker versions"${END}

apt-cache madison docker-ce

${COLOR}"Installing Docker-"${DOCKER_VERSION}" in 10 seconds......"${END}

${COLOR}"To install a different Docker version, press Ctrl+C to exit, change the version and run again"${END}

sleep 10

${COLOR}"Installing Docker"${END}

apt -y install docker-ce=5:${DOCKER_VERSION}~3-0~ubuntu-$(lsb_release -cs) docker-ce-cli=5:${DOCKER_VERSION}~3-0~ubuntu-$(lsb_release -cs) &> /dev/null || {

${COLOR}"apt repository failed, check the apt configuration"${END};exit; }

}

centos_install_docker(){

rpm -q docker-ce &> /dev/null && ${COLOR}"Docker is already installed, exiting"${END} && exit

${COLOR}"Installing Docker dependencies"${END}

yum -y install yum-utils &> /dev/null

yum-config-manager --add-repo https://${URL}/docker-ce/linux/centos/docker-ce.repo &> /dev/null

sed -i 's+download.docker.com+'''${URL}'''/docker-ce+' /etc/yum.repos.d/docker-ce.repo

yum clean all &> /dev/null

yum makecache &> /dev/null

${COLOR}"Available Docker versions"${END}

yum list docker-ce.x86_64 --showduplicates

${COLOR}"Installing Docker-"${DOCKER_VERSION}" in 10 seconds......"${END}

${COLOR}"To install a different Docker version, press Ctrl+C to exit, change the version and run again"${END}

sleep 10

${COLOR}"Installing Docker"${END}

yum -y install docker-ce-${DOCKER_VERSION} docker-ce-cli-${DOCKER_VERSION} &> /dev/null || {

${COLOR}"yum repository failed, check the yum configuration"${END};exit; }

}

mirror_accelerator(){

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<-EOF

{

"registry-mirrors": [

"https://registry.docker-cn.com",

"http://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn"

],

"insecure-registries": ["${HARBOR_DOMAIN}"],

"exec-opts": ["native.cgroupdriver=systemd"],

"max-concurrent-downloads": 10,

"max-concurrent-uploads": 5,

"log-opts": {

"max-size": "300m",

"max-file": "2"

},

"live-restore": true

}

EOF

systemctl daemon-reload

systemctl enable --now docker

systemctl is-active docker &> /dev/null && ${COLOR}"Docker service started"${END} || {

${COLOR}"Docker failed to start"${END};exit; }

docker version && ${COLOR}"Docker installed successfully"${END} || ${COLOR}"Docker installation failed"${END}

}

set_alias(){

echo 'alias rmi="docker images -qa|xargs docker rmi -f"' >> ~/.bashrc

echo 'alias rmc="docker ps -qa|xargs docker rm -f"' >> ~/.bashrc

}

set_swap_limit(){

if [ "${OS_ID}" == "Ubuntu" ];then

${COLOR}'Enabling swap accounting to clear the Docker "WARNING: No swap limit support" warning'${END}

sed -ri '/^GRUB_CMDLINE_LINUX=/s@"$@ swapaccount=1"@' /etc/default/grub

update-grub &> /dev/null

${COLOR}"The machine will reboot automatically in 10 seconds"${END}

sleep 10

reboot

fi

}

main(){

os

if [ "${OS_ID}" == "CentOS" ] || [ "${OS_ID}" == "Rocky" ];then

centos_install_docker

else

ubuntu_install_docker

fi

mirror_accelerator

set_alias

set_swap_limit

}

main

[root@k8s-master01 ~]# bash install_docker.sh

[root@k8s-master02 ~]# bash install_docker.sh

[root@k8s-master03 ~]# bash install_docker.sh

[root@k8s-node01 ~]# bash install_docker.sh

[root@k8s-node02 ~]# bash install_docker.sh

[root@k8s-node03 ~]# bash install_docker.sh
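The os() function in the script decides which installer runs. A minimal sketch of that detection step, run against a sample file instead of the live /etc/os-release, may make the sed expression easier to follow. The helper names detect_os_id and pick_installer are assumptions for illustration and are not part of install_docker.sh. Unlike the script, which takes the Ubuntu path for any name other than CentOS or Rocky, the sketch reports unknown systems as unsupported.

```shell
#!/bin/bash
# Sketch: the OS-detection step from install_docker.sh, made testable.
# detect_os_id and pick_installer are hypothetical helper names;
# the sed expression is the one the script uses.
detect_os_id() {
    # $1: path to an os-release file (defaults to /etc/os-release)
    local file="${1:-/etc/os-release}"
    sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' "$file"
}

# Map the detected name to the install function the script would call.
pick_installer() {
    case "$1" in
        CentOS|Rocky) echo centos_install_docker ;;
        Ubuntu)       echo ubuntu_install_docker ;;
        *)            echo unsupported ;;
    esac
}

# Demo against a sample file rather than the live system.
sample=$(mktemp)
printf 'NAME="Rocky Linux"\nVERSION="8.6 (Green Obsidian)"\n' > "$sample"
pick_installer "$(detect_os_id "$sample")"
rm -f "$sample"
```

Only the first alphabetic word of NAME is kept, which is why "CentOS Linux" and "Rocky Linux" compare equal to the short names used in main().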

4.5 Install kubeadm and related components

On CentOS, configure the Kubernetes package repository and install the Kubernetes components:

[root@k8s-master01 ~]# cat > /etc/yum.repos.d/kubernetes.repo <<-EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@k8s-master01 ~]# yum list kubeadm.x86_64 --showduplicates | sort -r|grep 1.25

kubeadm.x86_64 1.25.0-0 kubernetes

[root@k8s-master01 ~]# yum -y install kubeadm-1.25.0 kubelet-1.25.0 kubectl-1.25.0

Ubuntu:

root@k8s-master01:~# apt update

root@k8s-master01:~# apt install -y apt-transport-https

root@k8s-master01:~# curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

OK

root@k8s-master01:~# echo "deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main" >> /etc/apt/sources.list.d/kubernetes.list

root@k8s-master01:~# apt update

root@k8s-master01:~# apt-cache madison kubeadm | grep 1.25

kubeadm | 1.25.0-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

root@k8s-master01:~# apt -y install kubelet=1.25.0-00 kubeadm=1.25.0-00 kubectl=1.25.0-00

Enable kubelet to start at boot:

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

On master02 and master03, install with a script:

[root@k8s-master02 ~]# cat install_kubeadm_for_master.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: install_kubeadm_for_master.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

KUBEADM_MIRRORS=mirrors.aliyun.com

KUBEADM_VERSION=1.25.0

HARBOR_DOMAIN=harbor.raymonds.cc

os(){

OS_ID=`sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' /etc/os-release`

}

install_ubuntu_kubeadm(){

${COLOR}"Installing Kubeadm dependencies"${END}

apt update &> /dev/null && apt install -y apt-transport-https &> /dev/null

curl -fsSL https://${KUBEADM_MIRRORS}/kubernetes/apt/doc/apt-key.gpg | apt-key add - &> /dev/null

echo "deb https://"${KUBEADM_MIRRORS}"/kubernetes/apt kubernetes-xenial main" >> /etc/apt/sources.list.d/kubernetes.list

apt update &> /dev/null

${COLOR}"Available Kubeadm versions"${END}

apt-cache madison kubeadm

${COLOR}"Installing Kubeadm-"${KUBEADM_VERSION}" in 10 seconds......"${END}

${COLOR}"To install a different Kubeadm version, press Ctrl+C to exit, change the version and run again"${END}

sleep 10

${COLOR}"Installing Kubeadm"${END}

apt -y install kubelet=${KUBEADM_VERSION}-00 kubeadm=${KUBEADM_VERSION}-00 kubectl=${KUBEADM_VERSION}-00 &> /dev/null

${COLOR}"Kubeadm installation finished"${END}

}

install_centos_kubeadm(){

cat > /etc/yum.repos.d/kubernetes.repo <<-EOF

[kubernetes]

name=Kubernetes

baseurl=https://${KUBEADM_MIRRORS}/kubernetes/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://${KUBEADM_MIRRORS}/kubernetes/yum/doc/yum-key.gpg https://${KUBEADM_MIRRORS}/kubernetes/yum/doc/rpm-package-key.gpg

EOF

${COLOR}"Available Kubeadm versions"${END}

yum list kubeadm.x86_64 --showduplicates | sort -r

${COLOR}"Installing Kubeadm-"${KUBEADM_VERSION}" in 10 seconds......"${END}

${COLOR}"To install a different Kubeadm version, press Ctrl+C to exit, change the version and run again"${END}

sleep 10

${COLOR}"Installing Kubeadm"${END}

yum -y install kubelet-${KUBEADM_VERSION} kubeadm-${KUBEADM_VERSION} kubectl-${KUBEADM_VERSION} &> /dev/null

${COLOR}"Kubeadm installation finished"${END}

}

start_service(){

systemctl daemon-reload

systemctl enable --now kubelet

systemctl is-active kubelet &> /dev/null && ${COLOR}"Kubelet service started"${END} || {

${COLOR}"Kubelet failed to start"${END};exit; }

kubelet --version && ${COLOR}"Kubelet installed successfully"${END} || ${COLOR}"Kubelet installation failed"${END}

}

main(){

os

if [ "${OS_ID}" == "CentOS" ] || [ "${OS_ID}" == "Rocky" ];then

install_centos_kubeadm

else

install_ubuntu_kubeadm

fi

start_service

}

main

[root@k8s-master02 ~]# bash install_kubeadm_for_master.sh

[root@k8s-master03 ~]# bash install_kubeadm_for_master.sh

Install kubeadm on the nodes:

[root@k8s-node01 ~]# cat install_kubeadm_for_node.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: install_kubeadm_for_node.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

KUBEADM_MIRRORS=mirrors.aliyun.com

KUBEADM_VERSION=1.25.0

HARBOR_DOMAIN=harbor.raymonds.cc

os(){

OS_ID=`sed -rn '/^NAME=/s@.*="([[:alpha:]]+).*"$@\1@p' /etc/os-release`

}

install_ubuntu_kubeadm(){

${COLOR}"Installing Kubeadm dependencies"${END}

apt update &> /dev/null && apt install -y apt-transport-https &> /dev/null

curl -fsSL https://${KUBEADM_MIRRORS}/kubernetes/apt/doc/apt-key.gpg | apt-key add - &> /dev/null

echo "deb https://"${KUBEADM_MIRRORS}"/kubernetes/apt kubernetes-xenial main" >> /etc/apt/sources.list.d/kubernetes.list

apt update &> /dev/null

${COLOR}"Available Kubeadm versions"${END}

apt-cache madison kubeadm

${COLOR}"Installing Kubeadm-"${KUBEADM_VERSION}" in 10 seconds......"${END}

${COLOR}"To install a different Kubeadm version, press Ctrl+C to exit, change the version and run again"${END}

sleep 10

${COLOR}"Installing Kubeadm"${END}

apt -y install kubelet=${KUBEADM_VERSION}-00 kubeadm=${KUBEADM_VERSION}-00 &> /dev/null

${COLOR}"Kubeadm installation finished"${END}

}

install_centos_kubeadm(){

cat > /etc/yum.repos.d/kubernetes.repo <<-EOF

[kubernetes]

name=Kubernetes

baseurl=https://${KUBEADM_MIRRORS}/kubernetes/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://${KUBEADM_MIRRORS}/kubernetes/yum/doc/yum-key.gpg https://${KUBEADM_MIRRORS}/kubernetes/yum/doc/rpm-package-key.gpg

EOF

${COLOR}"Available Kubeadm versions"${END}

yum list kubeadm.x86_64 --showduplicates | sort -r

${COLOR}"Installing Kubeadm-"${KUBEADM_VERSION}" in 10 seconds......"${END}

${COLOR}"To install a different Kubeadm version, press Ctrl+C to exit, change the version and run again"${END}

sleep 10

${COLOR}"Installing Kubeadm"${END}

yum -y install kubelet-${KUBEADM_VERSION} kubeadm-${KUBEADM_VERSION} &> /dev/null

${COLOR}"Kubeadm installation finished"${END}

}

start_service(){

systemctl daemon-reload

systemctl enable --now kubelet

systemctl is-active kubelet &> /dev/null && ${COLOR}"Kubelet service started"${END} || {

${COLOR}"Kubelet failed to start"${END};exit; }

kubelet --version && ${COLOR}"Kubelet installed successfully"${END} || ${COLOR}"Kubelet installation failed"${END}

}

main(){

os

if [ "${OS_ID}" == "CentOS" ] || [ "${OS_ID}" == "Rocky" ];then

install_centos_kubeadm

else

install_ubuntu_kubeadm

fi

start_service

}

main

[root@k8s-node01 ~]# bash install_kubeadm_for_node.sh

[root@k8s-node02 ~]# bash install_kubeadm_for_node.sh

[root@k8s-node03 ~]# bash install_kubeadm_for_node.sh
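The master and node scripts differ in two small ways: yum and apt pin versions with different syntax, and the node script leaves out kubectl. A rough sketch of that logic follows; pkg_spec and k8s_packages are hypothetical helper names that do not appear in the scripts above, and the "-00" suffix is simply the apt revision used in this section.

```shell
#!/bin/bash
# Sketch: build the version-pinned package arguments used in section 4.5.
# pkg_spec and k8s_packages are hypothetical helper names.
pkg_spec() {
    # $1: OS_ID as detected by os(), $2: package name, $3: version such as 1.25.0
    case "$1" in
        Ubuntu) echo "$2=$3-00" ;;   # apt pins with name=version-00
        *)      echo "$2-$3" ;;      # yum pins with name-version
    esac
}

k8s_packages() {
    # $1: OS_ID, $2: role (master or node), $3: version
    local pkgs="kubelet kubeadm" p out=""
    [ "$2" = "master" ] && pkgs="$pkgs kubectl"   # the node script omits kubectl
    for p in $pkgs; do
        out="$out $(pkg_spec "$1" "$p" "$3")"
    done
    echo "${out# }"
}

k8s_packages Ubuntu master 1.25.0
k8s_packages CentOS node 1.25.0
```

The printed lists could be passed to `apt -y install` or `yum -y install` respectively.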

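4.6 Install cri-dockerd

Kubernetes removed dockershim in v1.24, and Docker Engine does not implement the CRI specification natively, so the two can no longer be integrated directly. To bridge the gap, Mirantis and Docker jointly created the cri-dockerd project, a shim that gives Docker Engine a CRI-compliant interface so that Kubernetes can control Docker through CRI.

Project page: https://github.com/Mirantis/cri-dockerd

The cri-dockerd project publishes prebuilt binary packages; download the one that matches your operating system and platform to install it. The examples here use 64-bit Ubuntu 20.04 and cri-dockerd v0.2.5, the latest release at the time of writing.

4.6.1 Install cri-dockerd from a package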

#CentOS

[root@k8s-master01 ~]# wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.5/cri-dockerd-0.2.5-3.el8.x86_64.rpm

[root@k8s-master01 ~]# rpm -ivh cri-dockerd-0.2.5-3.el8.x86_64.rpm

[root@k8s-master01 ~]# for i in {102..103};do scp cri-dockerd-0.2.5-3.el8.x86_64.rpm 172.31.3.$i: ; ssh 172.31.3.$i "rpm -ivh cri-dockerd-0.2.5-3.el8.x86_64.rpm";done

[root@k8s-master01 ~]# for i in {108..110};do scp cri-dockerd-0.2.5-3.el8.x86_64.rpm 172.31.3.$i: ; ssh 172.31.3.$i "rpm -ivh cri-dockerd-0.2.5-3.el8.x86_64.rpm";done

#Ubuntu

[root@k8s-master01 ~]# curl -LO https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.5/cri-dockerd_0.2.5.3-0.ubuntu-focal_amd64.deb

[root@k8s-master01 ~]# dpkg -i cri-dockerd_0.2.5.3-0.ubuntu-focal_amd64.deb

[root@k8s-master01 ~]# for i in {102..103};do scp cri-dockerd_0.2.5.3-0.ubuntu-focal_amd64.deb 172.31.3.$i: ; ssh 172.31.3.$i "dpkg -i cri-dockerd_0.2.5.3-0.ubuntu-focal_amd64.deb";done

[root@k8s-master01 ~]# for i in {108..110};do scp cri-dockerd_0.2.5.3-0.ubuntu-focal_amd64.deb 172.31.3.$i: ; ssh 172.31.3.$i "dpkg -i cri-dockerd_0.2.5.3-0.ubuntu-focal_amd64.deb";done

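Configure cri-dockerd

From mainland China, cri-dockerd cannot pull the required images from k8s.gcr.io, so Pods fail to start. cri-dockerd therefore has to be pointed at a registry that is reachable from inside China: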

[root@k8s-master01 ~]# sed -ri '/ExecStart.*/s@(ExecStart.*)@\1 --pod-infra-container-image harbor.raymonds.cc/google_containers/pause:3.8@g' /lib/systemd/system/cri-docker.service

[root@k8s-master01 ~]# sed -nr '/ExecStart.*/p' /lib/systemd/system/cri-docker.service

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image harbor.raymonds.cc/google_containers/pause:3.8

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now cri-docker

[root@k8s-master01 ~]# for i in {102..103};do scp /lib/systemd/system/cri-docker.service 172.31.3.$i:/lib/systemd/system/cri-docker.service; ssh 172.31.3.$i "systemctl daemon-reload && systemctl enable --now cri-docker.service";done

[root@k8s-master01 ~]# for i in {108..110};do scp /lib/systemd/system/cri-docker.service 172.31.3.$i:/lib/systemd/system/cri-docker.service; ssh 172.31.3.$i "systemctl daemon-reload && systemctl enable --now cri-docker.service";done

Without this setting, the kubelet log shows errors like the following:

Aug 21 01:35:17 ubuntu2004 kubelet[6791]: E0821 01:35:17.999712 6791 remote_runtime.go:212] "RunPodSandbox from runtime service failed" err="rpc error: code = Unknown desc = failed pulling image \"k8s.gcr.io/pause:3.6\": Error response from daemon: Get \"https://k8s.gcr.io/v2/\": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)"
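One thing to watch with the sed command above is that it appends the flag every time it runs, so a second run would leave the option on the ExecStart line twice. A minimal sketch of an idempotent variant is shown here, assuming GNU sed; set_pause_image is a hypothetical helper name, and the demo edits a scratch file rather than the real unit file.

```shell
#!/bin/bash
# Sketch: append --pod-infra-container-image to ExecStart only once.
# set_pause_image is a hypothetical helper; unit path and image are arguments.
set_pause_image() {
    local unit="$1" image="$2"
    # Skip the edit when the flag is already present, so reruns do not duplicate it.
    if grep -q -- '--pod-infra-container-image' "$unit"; then
        return 0
    fi
    sed -ri "/^ExecStart=/s@\$@ --pod-infra-container-image ${image}@" "$unit"
}

# Demo on a scratch copy instead of /lib/systemd/system/cri-docker.service.
unit=$(mktemp)
echo 'ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://' > "$unit"
set_pause_image "$unit" harbor.raymonds.cc/google_containers/pause:3.8
set_pause_image "$unit" harbor.raymonds.cc/google_containers/pause:3.8
cat "$unit"
rm -f "$unit"
```

After editing the real unit file, `systemctl daemon-reload` and a restart of cri-docker are still needed, as in the commands above.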

4.6.2 Install cri-dockerd from the binary tarball

[root@k8s-master01 ~]# wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.5/cri-dockerd-0.2.5.amd64.tgz

[root@k8s-master01 ~]# tar xf cri-dockerd-0.2.5.amd64.tgz

[root@k8s-master01 ~]# mv cri-dockerd/* /usr/bin/

[root@k8s-master01 ~]# ll /usr/bin/cri-dockerd

-rwxr-xr-x 1 root root 52351080 Sep 3 07:00 /usr/bin/cri-dockerd*

[root@k8s-master01 ~]# cat > /usr/lib/systemd/system/cri-docker.service <<'EOF'

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.

# Both the old, and new location are accepted by systemd 229 and up, so using the old location

# to make them work for either version of systemd.

StartLimitBurst=3

# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.

# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make

# this option work for either version of systemd.

StartLimitInterval=60s

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not support it.

# Only systemd 226 and above support this option.

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

[root@k8s-master01 ~]# cat > /usr/lib/systemd/system/cri-docker.socket <<EOF

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

[root@k8s-master01 ~]# sed -ri '/ExecStart.*/s@(ExecStart.*)@\1 --pod-infra-container-image harbor.raymonds.cc/google_containers/pause:3.8@g' /lib/systemd/system/cri-docker.service

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now cri-docker

Install on master02, master03 and the nodes:

[root@k8s-master02 ~]# cat install_cri_dockerd_binary.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-09-03

#FileName: install_cri_dockerd_binary.sh

#URL: raymond.blog.csdn.net

#Description: install_docker_binary for centos 7/8 & ubuntu 18.04/20.04 & Rocky 8

#Copyright (C): 2021 All rights reserved

#*********************************************************************************************

SRC_DIR=/usr/local/src

COLOR="echo -e \\033[01;31m"

END='\033[0m'

#cri-dockerd download URL: https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.5/cri-dockerd-0.2.5.amd64.tgz

CRI_DOCKER_FILE=cri-dockerd-0.2.5.amd64.tgz

HARBOR_DOMAIN=harbor.raymonds.cc

check_file (){

cd ${SRC_DIR}

if [ ! -e ${CRI_DOCKER_FILE} ];then

${COLOR}"${CRI_DOCKER_FILE} is missing; for an offline install, place the file in ${SRC_DIR}"${END}

exit

else

${COLOR}"Required files are ready"${END}

fi

}

install(){

[ -f /usr/bin/cri-dockerd ] && {

${COLOR}"cri-dockerd already exists, installation aborted"${END};exit; }

${COLOR}"Installing cri-dockerd..."${END}

tar xf ${CRI_DOCKER_FILE}

mv cri-dockerd/* /usr/bin/

cat > /usr/lib/systemd/system/cri-docker.service <<-EOF

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image ${HARBOR_DOMAIN}/google_containers/pause:3.8

ExecReload=/bin/kill -s HUP \$MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.

# Both the old, and new location are accepted by systemd 229 and up, so using the old location

# to make them work for either version of systemd.

StartLimitBurst=3

# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.

# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make

# this option work for either version of systemd.

StartLimitInterval=60s

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not support it.

# Only systemd 226 and above support this option.

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

cat > /usr/lib/systemd/system/cri-docker.socket <<-EOF

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

systemctl daemon-reload

systemctl enable --now cri-docker &> /dev/null

systemctl is-active cri-docker &> /dev/null && ${COLOR}"cri-docker service started"${END} || {

${COLOR}"cri-docker failed to start"${END};exit; }

cri-dockerd --version && ${COLOR}"cri-dockerd installed successfully"${END} || ${COLOR}"cri-dockerd installation failed"${END}

}

main(){

check_file

install

}

main

[root@k8s-master02 ~]# bash install_cri_dockerd_binary.sh

[root@k8s-master03 ~]# bash install_cri_dockerd_binary.sh

[root@k8s-node01 ~]# bash install_cri_dockerd_binary.sh

[root@k8s-node02 ~]# bash install_cri_dockerd_binary.sh

[root@k8s-node03 ~]# bash install_cri_dockerd_binary.sh
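When a unit file is generated from a heredoc, the quoting of the delimiter matters: with an unquoted EOF the shell expands every $VARIABLE before the file is written, so $MAINPID (which systemd is meant to fill in) would be written out as an empty string. The sketch below shows one way to mix the two needs, expanding ${HARBOR_DOMAIN} while escaping $MAINPID; render_exec_lines is a hypothetical helper name and only two lines of the unit are reproduced.

```shell
#!/bin/bash
# Sketch: how heredoc quoting affects $MAINPID in a generated unit file.
# render_exec_lines is a hypothetical helper name.
HARBOR_DOMAIN=harbor.raymonds.cc

render_exec_lines() {
    # Unquoted delimiter: ${HARBOR_DOMAIN} is expanded by the shell,
    # while \$MAINPID is escaped so systemd receives it literally.
    cat <<EOF
ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image ${HARBOR_DOMAIN}/google_containers/pause:3.8
ExecReload=/bin/kill -s HUP \$MAINPID
EOF
}

render_exec_lines
```

When nothing in the body needs expanding, quoting the delimiter (<<'EOF') is the simpler choice, since it keeps the whole body literal.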

4.7 Prepare the images needed for Kubernetes initialization in advance

List the image versions:

[root@k8s-master01 ~]# kubeadm config images list --kubernetes-version v1.25.0

registry.k8s.io/kube-apiserver:v1.25.0

registry.k8s.io/kube-controller-manager:v1.25.0

registry.k8s.io/kube-scheduler:v1.25.0

registry.k8s.io/kube-proxy:v1.25.0

registry.k8s.io/pause:3.8

registry.k8s.io/etcd:3.5.4-0

registry.k8s.io/coredns/coredns:v1.9.3

#List the same images on a mirror registry reachable from China

[root@k8s-master01 ~]# kubeadm config images list --image-repository registry.aliyuncs.com/google_containers

registry.aliyuncs.com/google_containers/kube-apiserver:v1.25.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.25.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.25.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.25.0

registry.aliyuncs.com/google_containers/pause:3.8

registry.aliyuncs.com/google_containers/etcd:3.5.4-0

registry.aliyuncs.com/google_containers/coredns:v1.9.3

Download the images and push them to Harbor:

[root@k8s-master01 ~]# docker login harbor.raymonds.cc

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@k8s-master01 ~]# cat download_kubeadm_images_1.25.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_kubeadm_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

KUBEADM_VERSION=1.25.0

images=$(kubeadm config images list --kubernetes-version=v${KUBEADM_VERSION} | awk -F "/" '{print $NF}')

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Downloading Kubeadm images"${END}

for i in ${images};do

docker pull registry.aliyuncs.com/google_containers/$i

docker tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i

docker rmi registry.aliyuncs.com/google_containers/$i

docker push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"Kubeadm images downloaded"${END}

}

images_download

[root@k8s-master01 ~]# bash download_kubeadm_images_1.25.sh

[root@k8s-master01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

harbor.raymonds.cc/google_containers/kube-apiserver v1.25.0 4d2edfd10d3e 5 days ago 128MB

harbor.raymonds.cc/google_containers/kube-controller-manager v1.25.0 1a54c86c03a6 5 days ago 117MB

harbor.raymonds.cc/google_containers/kube-scheduler v1.25.0 bef2cf311509 5 days ago 50.6MB

harbor.raymonds.cc/google_containers/kube-proxy v1.25.0 58a9a0c6d96f 5 days ago 61.7MB

harbor.raymonds.cc/google_containers/pause 3.8 4873874c08ef 2 months ago 711kB

harbor.raymonds.cc/google_containers/etcd 3.5.4-0 a8a176a5d5d6 2 months ago 300MB

harbor.raymonds.cc/google_containers/coredns v1.9.3 5185b96f0bec 3 months ago 48.8MB
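Before running the download script against a real registry, it can help to see which commands it would issue. The following dry-run sketch only prints the pull, tag and push commands; mirror_plan is a hypothetical helper name, and the registry paths are passed in as arguments rather than taken from the script.

```shell
#!/bin/bash
# Sketch: dry run of the pull/tag/push loop. Prints commands, runs nothing.
# mirror_plan is a hypothetical helper; registries are passed as arguments.
mirror_plan() {
    local src_repo="$1" dst_repo="$2" ref name
    # Read image references (one per line) from stdin.
    while read -r ref; do
        [ -n "$ref" ] || continue
        name="${ref##*/}"                 # same result as awk -F/ '{print $NF}'
        echo "docker pull ${src_repo}/${name}"
        echo "docker tag ${src_repo}/${name} ${dst_repo}/${name}"
        echo "docker push ${dst_repo}/${name}"
    done
}

printf '%s\n' registry.k8s.io/pause:3.8 registry.k8s.io/coredns/coredns:v1.9.3 |
    mirror_plan registry.aliyuncs.com/google_containers harbor.raymonds.cc/google_containers
```

Keeping only the last path component is what turns registry.k8s.io/coredns/coredns:v1.9.3 into coredns:v1.9.3, matching the flat layout of the aliyun mirror shown earlier in this section.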

4.8 Initialize the highly available master from the command line

kubeadm init option reference

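--kubernetes-version: #Version of the Kubernetes components; it must match the version of the installed kubelet package.

--control-plane-endpoint: #Required for multi-master setups. Specifies a stable access address for the control plane, either an IP address or a DNS name. It is used as the API Server address in the kubeconfig files of cluster administrators and cluster components. Omit it for a single-master control plane. Note: kubeadm does not support converting a single control-plane cluster created without --control-plane-endpoint into a highly available cluster.

--pod-network-cidr: #Address range of the Pod network, in CIDR notation. The Flannel network plugin defaults to 10.244.0.0/16 and the Calico network plugin to 192.168.0.0/16.

--service-cidr: #Address range of the Service network, in CIDR notation; the default is 10.96.0.0/12. Usually only Flannel-style network plugins need it set manually.

--service-dns-domain string #Cluster DNS domain; the default is cluster.local, and it is resolved automatically by the cluster DNS service.

--apiserver-advertise-address: #The IP address the API server advertises that it is listening on. If unset, the default network interface is used. This is the address the apiserver announces to other components, normally the master node's IP for in-cluster communication; 0.0.0.0 means all available addresses on this node. Optional.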

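--image-repository string #Image registry to pull from. The default is registry.k8s.io in v1.25 (k8s.gcr.io in earlier releases), which may be unreachable from inside China; it can be pointed at a mirror that is reachable.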

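--token-ttl #Lifetime of the shared bootstrap token; the default is 24 hours, and 0 means it never expires. To limit the damage if a token leaks through insecure storage, setting an expiry is recommended. When this option is left unset and the token has expired, a new token and the matching join command can be generated with: kubeadm token create --print-join-command

--ignore-preflight-errors=Swap #If swap has not been disabled on the nodes, add this option so that kubeadm ignores the error.

--upload-certs #Upload the control-plane certificates to the kubeadm-certs Secret.

--cri-socket #Since v1.24, the path of the CRI socket to connect to. Note: each CRI uses a different socket file.

#For containerd, use --cri-socket unix:///run/containerd/containerd.sock

#For Docker (through cri-dockerd), use --cri-socket unix:///var/run/cri-dockerd.sock

#For CRI-O, use --cri-socket unix:///var/run/crio/crio.sock

#Note: CRI-O and containerd manage containers differently, so their image stores are not interchangeable.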
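The socket paths listed above can be kept in one place so that the init and join commands stay consistent. This is only a sketch; cri_socket is a hypothetical helper name, and the paths are the ones given in the option list.

```shell
#!/bin/bash
# Sketch: choose the --cri-socket value for a given runtime.
# cri_socket is a hypothetical helper; the paths come from the list above.
cri_socket() {
    case "$1" in
        containerd) echo unix:///run/containerd/containerd.sock ;;
        docker)     echo unix:///var/run/cri-dockerd.sock ;;
        crio)       echo unix:///var/run/crio/crio.sock ;;
        *)          echo "unknown runtime: $1" >&2; return 1 ;;
    esac
}

cri_socket docker
```

On most current distributions /var/run is a symlink to /run, so unix:///run/cri-dockerd.sock and unix:///var/run/cri-dockerd.sock normally refer to the same socket.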

Initialize the cluster:

[root@k8s-master01 ~]# kubeadm init --control-plane-endpoint="kubeapi.raymonds.cc" --kubernetes-version=v1.25.0 --pod-network-cidr=192.168.0.0/12 --service-cidr=10.96.0.0/12 --token-ttl=0 --cri-socket unix:///run/cri-dockerd.sock --image-repository harbor.raymonds.cc/google_containers --upload-certs

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join kubeapi.raymonds.cc:6443 --token ndloct.qmgzvq90q864dmqn \

--discovery-token-ca-cert-hash sha256:65848696b3ad7c838728b75ef484bcf68f5c5f16dc9e1f4c35d42c0b744eb1b2 \

--control-plane --certificate-key e488972b1ed8ccaa1916e1de397adae42151f7f67b61cac0f5e03f32a40240e0

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.raymonds.cc:6443 --token ndloct.qmgzvq90q864dmqn \

--discovery-token-ca-cert-hash sha256:65848696b3ad7c838728b75ef484bcf68f5c5f16dc9e1f4c35d42c0b744eb1b2
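The --pod-network-cidr and --service-cidr values are worth a quick sanity check before they are committed to a cluster. The sketch below uses plain bash arithmetic to print the network a CIDR actually falls in and to test two ranges for overlap; ip_to_int, int_to_ip, cidr_network and cidrs_overlap are hypothetical helper names, and only IPv4 is handled.

```shell
#!/bin/bash
# Sketch: sanity-check IPv4 CIDRs before passing them to kubeadm init.
# All function names here are hypothetical helpers.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print the network a CIDR actually falls in, e.g. 10.96.0.0/12.
cidr_network() {
    local ip="${1%/*}" len="${1#*/}" mask
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    echo "$(int_to_ip $(( $(ip_to_int "$ip") & mask )))/${len}"
}

# Succeed when two CIDRs share any address.
cidrs_overlap() {
    local len1="${1#*/}" len2="${2#*/}" len mask
    len=$(( len1 < len2 ? len1 : len2 ))
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "${1%/*}") & mask )) -eq $(( $(ip_to_int "${2%/*}") & mask )) ]
}

cidr_network 192.168.0.0/12   # pod CIDR from the init command
cidr_network 10.96.0.0/12     # service CIDR from the init command
cidrs_overlap 192.168.0.0/12 10.96.0.0/12 || echo "pod and service ranges do not overlap"
```

For the values used here, the check shows that 192.168.0.0/12 does not sit on a /12 boundary: the enclosing network is 192.160.0.0/12, which extends beyond the private 192.168.0.0/16 block. Whether that matters depends on the environment, but a boundary-aligned private range may be the safer choice.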

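4.9 Generate the kubeconfig file for kubectl

kubectl is the command-line client for kube-apiserver. It covers almost every management operation apart from deploying the system, and it is one of the commands Kubernetes administrators use most. kubectl must be authenticated and authorized by the API server before it can perform management operations. A cluster deployed with kubeadm comes with an administrator-level kubeconfig file, /etc/kubernetes/admin.conf, which kubectl loads from the default path "$HOME/.kube/config". A different location can also be given on the kubectl command line with the --kubeconfig option.

Copy the Kubernetes administrator kubeconfig into the home directory of the target user (for example, the current user root):

#The commands below can be copied from the kubeadm init output in 4.8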

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

4.10 Enable kubectl command completion

kubectl has a rich command set but does not enable command completion by default; it can be set up as follows:

#CentOS

[root@k8s-master01 ~]# yum -y install bash-completion

#Ubuntu

[root@k8s-master01 ~]# apt -y install bash-completion

[root@k8s-master01 ~]# source <(kubectl completion bash) # Enable completion in the current bash shell; the bash-completion package must be installed first.

[root@k8s-master01 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc # Enable completion permanently for your bash shell

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local NotReady control-plane 9m12s v1.25.0

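4.11 Highly available masters

If the cluster was initialized from a configuration file, no certificate key needs to be requested separately. With command-line initialization a certificate key is required: the current master generates it so that new control-plane nodes can be added. Here it was already printed by kubeadm init in 4.8, because --upload-certs was passed.

Add master02 and master03: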

kubeadm join kubeapi.raymonds.cc:6443 --token ndloct.qmgzvq90q864dmqn \

--discovery-token-ca-cert-hash sha256:65848696b3ad7c838728b75ef484bcf68f5c5f16dc9e1f4c35d42c0b744eb1b2 \

--control-plane --certificate-key e488972b1ed8ccaa1916e1de397adae42151f7f67b61cac0f5e03f32a40240e0 --cri-socket unix:///run/cri-dockerd.sock

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local NotReady control-plane 21m v1.25.0

k8s-master02.example.local NotReady control-plane 116s v1.25.0

k8s-master03.example.local NotReady control-plane 38s v1.25.0

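4.12 Highly available nodes

Node hosts mainly run the company's business applications. In production, running Pods other than system components on master nodes is not recommended; in a test environment, master nodes may run Pods to save resources.

Add the nodes: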

kubeadm join kubeapi.raymonds.cc:6443 --token ndloct.qmgzvq90q864dmqn \

--discovery-token-ca-cert-hash sha256:65848696b3ad7c838728b75ef484bcf68f5c5f16dc9e1f4c35d42c0b744eb1b2 --cri-socket unix:///run/cri-dockerd.sock

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local NotReady control-plane 74m v1.25.0

k8s-master02.example.local NotReady control-plane 60m v1.25.0

k8s-master03.example.local NotReady control-plane 105s v1.25.0

k8s-node01.example.local NotReady <none> 59m v1.25.0

k8s-node02.example.local NotReady <none> 59m v1.25.0

k8s-node03.example.local NotReady <none> 6s v1.25.0
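The join commands printed by kubeadm init do not carry the --cri-socket flag, so it has to be appended by hand on every host, as was done above. It is likely needed here because Docker CE installs containerd alongside Docker Engine, leaving more than one CRI endpoint on the host. A small sketch of appending the flag only when it is missing follows; with_cri_socket is a hypothetical helper name, and the token and hash in the demo are placeholders.

```shell
#!/bin/bash
# Sketch: append --cri-socket to a join command when it is missing.
# with_cri_socket is a hypothetical helper name.
with_cri_socket() {
    local cmd="$1" sock="${2:-unix:///run/cri-dockerd.sock}"
    case "$cmd" in
        *--cri-socket*) echo "$cmd" ;;                  # already set, leave untouched
        *)              echo "$cmd --cri-socket $sock" ;;
    esac
}

# Demo with a placeholder token and hash; on a control-plane node the input
# could come from: kubeadm token create --print-join-command
with_cri_socket "kubeadm join kubeapi.raymonds.cc:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>"
```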

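4.13 Deploy the flannel network plugin

Pod networking in Kubernetes is implemented by third-party plugins. There are dozens of them; the better known ones include flannel, calico, canal and kube-router. A simple, easy-to-use implementation is the flannel project, which originated at CoreOS. The commands below deploy flannel onto Kubernetes online:

First, download the flanneld build that matches the operating system and hardware platform to every node and place it in the /opt/bin/ directory. flanneld-amd64 is used here, and the latest version at the time of writing is v0.19.1, so the download has to be carried out on every node in the cluster.

Tip: flanneld can be downloaded from https://github.com/flannel-io/flannel

Then, on k8s-master01 (the first master that was initialized), run the following commands to deploy kube-flannel to Kubernetes.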

root@k8s-master01:~# wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

root@k8s-master01:~# grep '"Network":' kube-flannel.yml

"Network": "10.244.0.0/16",

root@k8s-master01:~# sed -ri '/"Network":/s@("Network": ).*@\1"192.168.0.0/12",@g' kube-flannel.yml

root@k8s-master01:~# grep '"Network":' kube-flannel.yml

"Network": "192.168.0.0/12",

root@k8s-master01:~# grep '[^#]image:' kube-flannel.yml

image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0

image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1

image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1

root@k8s-master01:~# cat download_flannel_images.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_flannel_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

images=$(awk -F "/" '/[^#]image:/{print $NF}' kube-flannel.yml |uniq)

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Downloading Flannel images"${END}

for i in ${images};do

docker pull registry.cn-beijing.aliyuncs.com/raymond9/$i

docker tag registry.cn-beijing.aliyuncs.com/raymond9/$i ${HARBOR_DOMAIN}/google_containers/$i

docker rmi registry.cn-beijing.aliyuncs.com/raymond9/$i

docker push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"Flannel images downloaded"${END}

}

images_download

root@k8s-master01:~# bash download_flannel_images.sh

root@k8s-master01:~# docker images |grep flannel

harbor.raymonds.cc/google_containers/mirrored-flannelcni-flannel v0.19.1 252b2c3ee6c8 3 weeks ago 62.3MB

harbor.raymonds.cc/google_containers/mirrored-flannelcni-flannel-cni-plugin v1.1.0 fcecffc7ad4a 3 months ago 8.09MB

root@k8s-master01:~# sed -ri 's@([^#]image:) docker.io/rancher(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' kube-flannel.yml

root@k8s-master01:~# grep '[^#]image:' kube-flannel.yml

image: harbor.raymonds.cc/google_containers/mirrored-flannelcni-flannel-cni-plugin:v1.1.0

image: harbor.raymonds.cc/google_containers/mirrored-flannelcni-flannel:v0.19.1

image: harbor.raymonds.cc/google_containers/mirrored-flannelcni-flannel:v0.19.1

root@k8s-master01:~# kubectl apply -f kube-flannel.yml

#Check the Pod status

root@k8s-master01:~# kubectl get pod -n kube-flannel

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-447kh 1/1 Running 0 113s

kube-flannel-ds-5b2cq 1/1 Running 0 113s

kube-flannel-ds-jgkdp 1/1 Running 0 113s

kube-flannel-ds-pksgj 1/1 Running 0 113s

kube-flannel-ds-wqcz6 1/1 Running 0 113s

kube-flannel-ds-z8hlk 1/1 Running 0 113s

#Check the cluster status

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local Ready control-plane 145m v1.25.0

k8s-master02.example.local Ready control-plane 131m v1.25.0

k8s-master03.example.local Ready control-plane 72m v1.25.0

k8s-node01.example.local Ready <none> 130m v1.25.0

k8s-node02.example.local Ready <none> 129m v1.25.0

k8s-node03.example.local Ready <none> 70m v1.25.0

Important: if keepalived and haproxy are installed, verify that keepalived is working correctly.

# Test the VIP

[root@k8s-master01 ~]# ping 172.31.3.188

PING 172.31.3.188 (172.31.3.188) 56(84) bytes of data.

64 bytes from 172.31.3.188: icmp_seq=1 ttl=64 time=0.526 ms

64 bytes from 172.31.3.188: icmp_seq=2 ttl=64 time=0.375 ms

^C

--- 172.31.3.188 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1015ms

rtt min/avg/max/mdev = 0.375/0.450/0.526/0.078 ms

[root@k8s-ha01 ~]# systemctl stop keepalived

[root@k8s-ha01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:05:9b:2a brd ff:ff:ff:ff:ff:ff

inet 172.31.3.104/21 brd 172.31.7.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe05:9b2a/64 scope link

valid_lft forever preferred_lft forever

[root@k8s-ha02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5e:d8:f8 brd ff:ff:ff:ff:ff:ff

inet 172.31.3.105/21 brd 172.31.7.255 scope global eth0

valid_lft forever preferred_lft forever

inet 172.31.3.188/32 scope global eth0:1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe5e:d8f8/64 scope link

valid_lft forever preferred_lft forever

[root@k8s-ha01 ~]# systemctl start keepalived

[root@k8s-ha01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:05:9b:2a brd ff:ff:ff:ff:ff:ff

inet 172.31.3.104/21 brd 172.31.7.255 scope global eth0

valid_lft forever preferred_lft forever

inet 172.31.3.188/32 scope global eth0:1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe05:9b2a/64 scope link

valid_lft forever preferred_lft forever

[root@k8s-master01 ~]# telnet 172.31.3.188 6443

Trying 172.31.3.188...

Connected to 172.31.3.188.

Escape character is '^]'.

Connection closed by foreign host.

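If the VIP does not answer ping, or telnet never prints the "Escape character is '^]'" line, treat the VIP as unusable. Do not continue; troubleshoot keepalived first: the firewall and SELinux, the state of haproxy and keepalived, the listening ports, and so on.

On all nodes, the firewall must be disabled and inactive: systemctl status firewalld

On all nodes, SELinux must be disabled: getenforce

On the master nodes, check the state of haproxy and keepalived: systemctl status keepalived haproxy

On the master nodes, check the listening ports: netstat -lntp

Check the haproxy status page: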

http://172.31.3.188:9999/haproxy-status
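The manual ping and telnet checks above can be scripted. The following is a minimal sketch, assuming bash (for its /dev/tcp pseudo-device) and the coreutils timeout command are available; the function names check_tcp and report are hypothetical helpers, not part of keepalived or haproxy.

```shell
#!/usr/bin/env bash

# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
check_tcp() {
  local host=$1 port=$2
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Run a check command quietly and print a one-line verdict.
report() {
  local label=$1
  shift
  if "$@" >/dev/null 2>&1; then
    echo "OK   ${label}"
  else
    echo "FAIL ${label}"
  fi
}

# Example (VIP and ports taken from this guide):
#   report "ping VIP"            ping -c 2 -W 2 172.31.3.188
#   report "apiserver via VIP"   check_tcp 172.31.3.188 6443
#   report "haproxy status page" check_tcp 172.31.3.188 9999
```

Any FAIL line means the troubleshooting list above should be worked through before continuing.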

4.14 Testing application orchestration and service access

demoapp is a web application. It can be run on the cluster as Pods and then accessed from outside the cluster:

root@k8s-master01:~# kubectl create deployment demoapp --image=registry.cn-hangzhou.aliyuncs.com/raymond9/demoapp:v1.0 --replicas=3

deployment.apps/demoapp created

root@k8s-master01:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-c4787f9fc-bcrlh 1/1 Running 0 28s 192.160.2.2 k8s-node01.example.local <none> <none>

demoapp-c4787f9fc-fbfnq 1/1 Running 0 28s 192.160.3.2 k8s-node02.example.local <none> <none>

demoapp-c4787f9fc-zv9mp 1/1 Running 0 28s 192.160.5.2 k8s-node03.example.local <none> <none>

root@k8s-master01:~# curl 192.160.2.2

raymond demoapp v1.0 !! ClientIP: 192.160.0.0, ServerName: demoapp-c4787f9fc-bcrlh, ServerIP: 192.160.2.2!

root@k8s-master01:~# curl 192.160.3.2

raymond demoapp v1.0 !! ClientIP: 192.160.0.0, ServerName: demoapp-c4787f9fc-fbfnq, ServerIP: 192.160.3.2!

root@k8s-master01:~# curl 192.160.5.2

raymond demoapp v1.0 !! ClientIP: 192.160.0.0, ServerName: demoapp-c4787f9fc-zv9mp, ServerIP: 192.160.5.2!

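# Create a NodePort Service for demoapp so it can be reached from outside the cluster. The --tcp format is <service port>:<Pod port>; the NodePort that was assigned is shown by kubectl get svc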

root@k8s-master01:~# kubectl create service nodeport demoapp --tcp=80:80

service/demoapp created

root@k8s-master01:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.102.135.254 <none> 80:30589/TCP 13s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 160m

root@k8s-master01:~# curl 10.102.135.254

raymond demoapp v1.0 !! ClientIP: 192.160.0.0, ServerName: demoapp-c4787f9fc-zv9mp, ServerIP: 192.160.5.2!

root@k8s-master01:~# curl 10.102.135.254

raymond demoapp v1.0 !! ClientIP: 192.160.0.0, ServerName: demoapp-c4787f9fc-bcrlh, ServerIP: 192.160.2.2!

root@k8s-master01:~# curl 10.102.135.254

raymond demoapp v1.0 !! ClientIP: 192.160.0.0, ServerName: demoapp-c4787f9fc-fbfnq, ServerIP: 192.160.3.2!

# From outside the cluster, demoapp is reachable at "http://NodeIP:30589", for example by opening "http://<kubernetes-node>:30589" in a browser.

[root@rocky8 ~]# curl http://172.31.3.101:30589

raymond demoapp v1.0 !! ClientIP: 192.160.0.0, ServerName: demoapp-c4787f9fc-bcrlh, ServerIP: 192.160.2.2!

[root@rocky8 ~]# curl http://172.31.3.102:30589

raymond demoapp v1.0 !! ClientIP: 192.160.1.0, ServerName: demoapp-c4787f9fc-fbfnq, ServerIP: 192.160.3.2!

[root@rocky8 ~]# curl http://172.31.3.103:30589

raymond demoapp v1.0 !! ClientIP: 192.160.4.0, ServerName: demoapp-c4787f9fc-zv9mp, ServerIP: 192.160.5.2!
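The NodePort used in the curl commands above comes from the PORT(S) column of kubectl get svc, where "80:30589/TCP" means service port 80 and node port 30589. A small sketch of extracting it in a script follows; node_port is a hypothetical helper name. On a live cluster, kubectl get svc demoapp -o jsonpath='{.spec.ports[0].nodePort}' returns the same value directly.

```shell
#!/usr/bin/env bash

# Extract the node port from a PORT(S) value such as "80:30589/TCP".
# Returns 1 and prints nothing when the value has no node port (e.g. "443/TCP").
node_port() {
  local spec=${1%%/*}          # drop the "/TCP" suffix
  [[ $spec == *:* ]] || return 1
  echo "${spec##*:}"           # keep the part after the colon
}

# Example:
#   node_port "80:30589/TCP"
```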

# Scale up

root@k8s-master01:~# kubectl scale deployment demoapp --replicas 5

deployment.apps/demoapp scaled

root@k8s-master01:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

demoapp-c4787f9fc-4snfh 1/1 Running 0 5s

demoapp-c4787f9fc-bcrlh 1/1 Running 0 9m47s

demoapp-c4787f9fc-fbfnq 1/1 Running 0 9m47s

demoapp-c4787f9fc-mgnfc 1/1 Running 0 5s

demoapp-c4787f9fc-zv9mp 1/1 Running 0 9m47s

# Scale down

root@k8s-master01:~# kubectl scale deployment demoapp --replicas 2

deployment.apps/demoapp scaled

# The Pods being removed show up as Terminating

root@k8s-master01:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

demoapp-c4787f9fc-4snfh 1/1 Terminating 0 34s

demoapp-c4787f9fc-bcrlh 1/1 Running 0 10m

demoapp-c4787f9fc-fbfnq 1/1 Running 0 10m

demoapp-c4787f9fc-mgnfc 1/1 Terminating 0 34s

demoapp-c4787f9fc-zv9mp 1/1 Terminating 0 10m

# Check again: the scale-down has completed

root@k8s-master01:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

demoapp-c4787f9fc-bcrlh 1/1 Running 0 11m

demoapp-c4787f9fc-fbfnq 1/1 Running 0 11m

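4.15 Initializing highly available masters from a configuration file

On Master01, create the kubeadm-config.yaml configuration file as follows:

Master01: (Note: for a non-HA cluster, replace the VIP endpoint, 172.31.3.188:6443 or kubeapi.raymonds.cc:6443 in the file below, with master01's address. Also set kubernetesVersion to the version that kubeadm version reports on your server, v1.25.0 here.)

Note

In the file below, the host network, podSubnet and serviceSubnet must not overlap.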

root@k8s-master01:~# kubeadm version

kubeadm version: &version.Info{

Major:"1", Minor:"25", GitVersion:"v1.25.0", GitCommit:"a866cbe2e5bbaa01cfd5e969aa3e033f3282a8a2", GitTreeState:"clean", BuildDate:"2022-08-23T17:43:25Z", GoVersion:"go1.19", Compiler:"gc", Platform:"linux/amd64"}
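The rule in the note above, that the host, Pod and Service networks must not overlap, can be checked with plain shell arithmetic. This is a minimal sketch for IPv4 only; all function names are illustrative. Two CIDR blocks overlap exactly when they agree on the first min(len1, len2) bits. The example values are the ones used in this guide: hosts in 172.31.0.0/21, podSubnet 192.168.0.0/12 and serviceSubnet 10.96.0.0/12.

```shell
#!/usr/bin/env bash

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted-quad form.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Netmask for a prefix length, as an integer.
prefix_mask() {
  echo $(( $1 == 0 ? 0 : (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# Print the network that an address/prefix actually belongs to.
cidr_network() {
  local ip=${1%/*} len=${1#*/}
  echo "$(int_to_ip $(( $(ip_to_int "$ip") & $(prefix_mask "$len") )))/${len}"
}

# Return 0 if the two CIDR blocks overlap, 1 otherwise.
cidr_overlap() {
  local len1=${1#*/} len2=${2#*/}
  local min=$(( len1 < len2 ? len1 : len2 ))
  [ "$(cidr_network "${1%/*}/${min}")" = "$(cidr_network "${2%/*}/${min}")" ]
}

# Example:
#   cidr_network 192.168.0.0/12                 # network that contains this address
#   cidr_overlap 192.168.0.0/12 10.96.0.0/12 || echo "no overlap"
```

cidr_network also explains the Pod addresses seen earlier in this guide: 192.168.0.0 with a /12 prefix belongs to the network 192.160.0.0/12, which is why Pods receive addresses such as 192.160.2.2.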

root@k8s-master01:~# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 7t2weq.bjbawausm0jaxury
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.31.3.101 # IP address of master01
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/cri-dockerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master01.example.local # hostname of master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - kubeapi.raymonds.cc # VIP address
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: kubeapi.raymonds.cc:6443 # endpoint proxied by haproxy
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: harbor.raymonds.cc/google_containers # Harbor image repository
kind: ClusterConfiguration
kubernetesVersion: v1.25.0 # change to your version
networking:
  dnsDomain: cluster.local # DNS domain
  podSubnet: 192.168.0.0/12 # Pod subnet
  serviceSubnet: 10.96.0.0/12 # Service subnet
scheduler: {}

Migrate the kubeadm configuration file to the current format:

root@k8s-master01:~# kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

root@k8s-master01:~# cat new.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 7t2weq.bjbawausm0jaxury
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.31.3.101
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/cri-dockerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master01.example.local
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - kubeapi.raymonds.cc
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: kubeapi.raymonds.cc:6443
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: harbor.raymonds.cc/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.25.0
networking:
  dnsDomain: cluster.local
  podSubnet: 192.168.0.0/12
  serviceSubnet: 10.96.0.0/12
scheduler: {}

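Initialize Master01. Initialization generates the certificates and configuration files under /etc/kubernetes; the other master nodes then join Master01:

# If the node has already been initialized, reset the cluster with the commands below and then initialize again

# Run on both master and worker nodes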

kubeadm reset -f --cri-socket unix:///run/cri-dockerd.sock

rm -rf /etc/cni/net.d/

rm -rf $HOME/.kube/config

reboot

root@k8s-master01:~# kubeadm init --config /root/new.yaml --upload-certs

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join kubeapi.raymonds.cc:6443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:f3c7558578e131d50c3aef5324635dfd1e8b768c74bafe844e68992a88494ad8 \

--control-plane --certificate-key e9b299145e9f51cdd3faf9a0a2eff12606105a4674f1e8fd05d128a5e4937a3b

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kubeapi.raymonds.cc:6443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:f3c7558578e131d50c3aef5324635dfd1e8b768c74bafe844e68992a88494ad8
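Before pasting a join command onto another node, the token and CA hash can be sanity-checked for shape. The sketch below assumes the documented bootstrap token format (6 lowercase alphanumeric characters, a dot, then 16 more) and a sha256: prefix followed by 64 hex digits; the function names are hypothetical. Note that the token in the configuration above has a ttl of 24h; once it has expired, kubeadm token create --print-join-command prints a fresh worker join command.

```shell
#!/usr/bin/env bash

# Return 0 if the argument looks like a kubeadm bootstrap token.
is_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Return 0 if the argument looks like a --discovery-token-ca-cert-hash value.
is_ca_cert_hash() {
  [[ $1 =~ ^sha256:[0-9a-f]{64}$ ]]
}

# Example:
#   is_bootstrap_token "7t2weq.bjbawausm0jaxury" && echo "token format ok"
```

These checks only catch copy-and-paste damage; they do not prove that the token is still valid on the cluster.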

Generate the kubeconfig file that authorizes kubectl, as in section 4.10:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local NotReady control-plane 3m8s v1.25.0

Join the remaining master nodes for high availability, see section 4.12

# Add master02 and master03

kubeadm join kubeapi.raymonds.cc:6443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:f3c7558578e131d50c3aef5324635dfd1e8b768c74bafe844e68992a88494ad8 \

--control-plane --certificate-key e9b299145e9f51cdd3faf9a0a2eff12606105a4674f1e8fd05d128a5e4937a3b --cri-socket unix:///run/cri-dockerd.sock

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local NotReady control-plane 5m53s v1.25.0

k8s-master02.example.local NotReady control-plane 93s v1.25.0

k8s-master03.example.local NotReady control-plane 38s v1.25.0

Join the worker nodes, see section 4.13

kubeadm join kubeapi.raymonds.cc:6443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:f3c7558578e131d50c3aef5324635dfd1e8b768c74bafe844e68992a88494ad8 --cri-socket unix:///run/cri-dockerd.sock

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local NotReady control-plane 8m6s v1.25.0

k8s-master02.example.local NotReady control-plane 3m46s v1.25.0

k8s-master03.example.local NotReady control-plane 2m51s v1.25.0

k8s-node01.example.local NotReady <none> 57s v1.25.0

k8s-node02.example.local NotReady <none> 39s v1.25.0

k8s-node03.example.local NotReady <none> 19s v1.25.0

4.16 Deploying the Calico network add-on

https://docs.projectcalico.org/maintenance/kubernetes-upgrade#upgrading-an-installation-that-uses-the-kubernetes-api-datastore

Calico installation: https://docs.projectcalico.org/getting-started/kubernetes/self-managed-onprem/onpremises

root@k8s-master01:~# curl https://docs.projectcalico.org/manifests/calico.yaml -O

root@k8s-master01:~# POD_SUBNET=`cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}'`

root@k8s-master01:~# echo $POD_SUBNET

192.168.0.0/12

root@k8s-master01:~# grep -E "(.*CALICO_IPV4POOL_CIDR.*|.*192.168.0.0.*)" calico.yaml

# - name: CALICO_IPV4POOL_CIDR

# value: "192.168.0.0/16"

root@k8s-master01:~# sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"${POD_SUBNET}"'@g' calico.yaml

root@k8s-master01:~# grep -E "(.*CALICO_IPV4POOL_CIDR.*|.*192.168.0.0.*)" calico.yaml

- name: CALICO_IPV4POOL_CIDR

value: 192.168.0.0/12
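The steps above read the Pod subnet from the controller-manager manifest and then uncomment CALICO_IPV4POOL_CIDR in calico.yaml. A sketch of the same idea as two reusable functions follows; get_pod_subnet and set_calico_cidr are illustrative names. It assumes the upstream layout in which the two commented lines read "# - name: CALICO_IPV4POOL_CIDR" and "#   value: "192.168.0.0/16"", removes exactly the "# " prefix so the YAML indentation stays aligned, and keeps the value quoted.

```shell
#!/usr/bin/env bash

# Print the value of --cluster-cidr from a kube-controller-manager manifest.
get_pod_subnet() {
  sed -n 's/.*--cluster-cidr=\([^[:space:]]*\).*/\1/p' "$1" | head -n 1
}

# Uncomment CALICO_IPV4POOL_CIDR and set its value.
# Reads a Calico manifest on stdin and writes the result to stdout.
set_calico_cidr() {
  local cidr=$1
  sed -E \
    -e 's@# (- name: CALICO_IPV4POOL_CIDR)@\1@' \
    -e 's@# ( *value: )"192\.168\.0\.0/16"@\1"'"${cidr}"'"@'
}

# Example (paths taken from this guide):
#   POD_SUBNET=$(get_pod_subnet /etc/kubernetes/manifests/kube-controller-manager.yaml)
#   set_calico_cidr "$POD_SUBNET" < calico.yaml > calico.new.yaml
```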

root@k8s-master01:~# grep "image:" calico.yaml

image: docker.io/calico/cni:v3.24.1

image: docker.io/calico/cni:v3.24.1

image: docker.io/calico/node:v3.24.1

image: docker.io/calico/node:v3.24.1

image: docker.io/calico/kube-controllers:v3.24.1

Download the Calico images and push them to Harbor:

root@k8s-master01:~# cat download_calico_images.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_calico_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

images=$(awk -F "/" '/image:/{print $NF}' calico.yaml |uniq)

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Starting Calico image download"${END}

for i in ${images};do

docker pull registry.cn-beijing.aliyuncs.com/raymond9/$i

docker tag registry.cn-beijing.aliyuncs.com/raymond9/$i ${HARBOR_DOMAIN}/google_containers/$i

docker rmi registry.cn-beijing.aliyuncs.com/raymond9/$i

docker push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"Calico image download complete"${END}

}

images_download

root@k8s-master01:~# bash download_calico_images.sh

root@k8s-master01:~# sed -ri 's@(.*image:) docker.io/calico(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' calico.yaml

root@k8s-master01:~# grep "image:" calico.yaml

image: harbor.raymonds.cc/google_containers/cni:v3.24.1

image: harbor.raymonds.cc/google_containers/cni:v3.24.1

image: harbor.raymonds.cc/google_containers/node:v3.24.1

image: harbor.raymonds.cc/google_containers/node:v3.24.1

image: harbor.raymonds.cc/google_containers/kube-controllers:v3.24.1

root@k8s-master01:~# kubectl apply -f calico.yaml

# Check Pod status

root@k8s-master01:~# kubectl get pod -n kube-system |grep calico

calico-kube-controllers-5477499cbc-sc47h 1/1 Running 0 63s

calico-node-75wtg 1/1 Running 0 63s

calico-node-bdqmk 1/1 Running 0 63s

calico-node-fhvl7 1/1 Running 0 63s

calico-node-j5tx4 1/1 Running 0 63s

calico-node-l5pnw 1/1 Running 0 63s

calico-node-zvztr 1/1 Running 0 63s

# Check cluster status

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local Ready control-plane 67m v1.25.0

k8s-master02.example.local Ready control-plane 63m v1.25.0

k8s-master03.example.local Ready control-plane 62m v1.25.0

k8s-node01.example.local Ready <none> 60m v1.25.0

k8s-node02.example.local Ready <none> 60m v1.25.0

k8s-node03.example.local Ready <none> 59m v1.25.0

Test application orchestration and service access, see section 4.14

root@k8s-master01:~# kubectl create deployment demoapp --image=registry.cn-hangzhou.aliyuncs.com/raymond9/demoapp:v1.0 --replicas=3

deployment.apps/demoapp created

root@k8s-master01:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-c4787f9fc-6kzzz 1/1 Running 0 11s 192.170.21.193 k8s-node03.example.local <none> <none>

demoapp-c4787f9fc-kbl7m 1/1 Running 0 11s 192.167.195.129 k8s-node02.example.local <none> <none>

demoapp-c4787f9fc-lb9nn 1/1 Running 0 11s 192.169.111.129 k8s-node01.example.local <none> <none>

root@k8s-master01:~# curl 192.170.21.193

raymond demoapp v1.0 !! ClientIP: 192.162.55.64, ServerName: demoapp-c4787f9fc-6kzzz, ServerIP: 192.170.21.193!

root@k8s-master01:~# curl 192.167.195.129

raymond demoapp v1.0 !! ClientIP: 192.162.55.64, ServerName: demoapp-c4787f9fc-kbl7m, ServerIP: 192.167.195.129!

root@k8s-master01:~# curl 192.169.111.129

raymond demoapp v1.0 !! ClientIP: 192.162.55.64, ServerName: demoapp-c4787f9fc-lb9nn, ServerIP: 192.169.111.129!

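# Create a NodePort Service for demoapp so it can be reached from outside the cluster. The --tcp format is <service port>:<Pod port>; the NodePort that was assigned is shown by kubectl get svc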

root@k8s-master01:~# kubectl create service nodeport demoapp --tcp=80:80

service/demoapp created

root@k8s-master01:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demoapp NodePort 10.106.167.169 <none> 80:31101/TCP 9s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 71m

root@k8s-master01:~# curl 10.106.167.169

raymond demoapp v1.0 !! ClientIP: 192.162.55.64, ServerName: demoapp-c4787f9fc-kbl7m, ServerIP: 192.167.195.129!

root@k8s-master01:~# curl 10.106.167.169

raymond demoapp v1.0 !! ClientIP: 192.162.55.64, ServerName: demoapp-c4787f9fc-6kzzz, ServerIP: 192.170.21.193!

root@k8s-master01:~# curl 10.106.167.169

raymond demoapp v1.0 !! ClientIP: 192.162.55.64, ServerName: demoapp-c4787f9fc-lb9nn, ServerIP: 192.169.111.129!

# From outside the cluster, demoapp is reachable at "http://NodeIP:31101", for example by opening "http://<kubernetes-node>:31101" in a browser.

[root@rocky8 ~]# curl http://172.31.3.101:31101

raymond demoapp v1.0 !! ClientIP: 192.162.55.64, ServerName: demoapp-c4787f9fc-6kzzz, ServerIP: 192.170.21.193!

[root@rocky8 ~]# curl http://172.31.3.102:31101

raymond demoapp v1.0 !! ClientIP: 192.171.30.64, ServerName: demoapp-c4787f9fc-6kzzz, ServerIP: 192.170.21.193!

[root@rocky8 ~]# curl http://172.31.3.103:31101

raymond demoapp v1.0 !! ClientIP: 192.165.109.64, ServerName: demoapp-c4787f9fc-6kzzz, ServerIP: 192.170.21.193!

# Scale up

root@k8s-master01:~# kubectl scale deployment demoapp --replicas 5

deployment.apps/demoapp scaled

root@k8s-master01:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

demoapp-c4787f9fc-6kzzz 1/1 Running 0 3m31s

demoapp-c4787f9fc-8l7n8 1/1 Running 0 9s

demoapp-c4787f9fc-kbl7m 1/1 Running 0 3m31s

demoapp-c4787f9fc-lb9nn 1/1 Running 0 3m31s

demoapp-c4787f9fc-rlljj 1/1 Running 0 9s

# Scale down

root@k8s-master01:~# kubectl scale deployment demoapp --replicas 2

deployment.apps/demoapp scaled

# The Pods being removed show up as Terminating

root@k8s-master01:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

demoapp-c4787f9fc-6kzzz 1/1 Terminating 0 3m50s

demoapp-c4787f9fc-8l7n8 1/1 Terminating 0 28s

demoapp-c4787f9fc-kbl7m 1/1 Running 0 3m50s

demoapp-c4787f9fc-lb9nn 1/1 Running 0 3m50s

demoapp-c4787f9fc-rlljj 1/1 Terminating 0 28s

# Check again: the scale-down has completed

root@k8s-master01:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

demoapp-c4787f9fc-kbl7m 1/1 Running 0 6m3s

demoapp-c4787f9fc-lb9nn 1/1 Running 0 6m3s

4.17 Deploying Metrics Server

In recent Kubernetes versions, system resource metrics are collected by Metrics Server, which reports the CPU and memory usage of nodes and Pods.

https://github.com/kubernetes-sigs/metrics-server

root@k8s-master01:~# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Copy front-proxy-ca.crt from Master01 to all worker nodes:

root@k8s-master01:~# for i in k8s-node01 k8s-node02 k8s-node03;do scp /etc/kubernetes/pki/front-proxy-ca.crt $i:/etc/kubernetes/pki/front-proxy-ca.crt ; done

Edit the following sections:

[root@k8s-master01 ~]# vim components.yaml

...

    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        # add the following lines
        - --kubelet-insecure-tls
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt # with kubeadm the CA file is front-proxy-ca.crt
        - --requestheader-username-headers=X-Remote-User
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
...
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
        # add the following lines
        - name: ca-ssl
          mountPath: /etc/kubernetes/pki
...
      volumes:
      - emptyDir: {}
        name: tmp-dir
      # add the following lines
      - name: ca-ssl
        hostPath:
          path: /etc/kubernetes/pki

...

Download the image and update the image address:

root@k8s-master01:~# grep "image:" components.yaml

image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1

root@k8s-master01:~# cat download_metrics_images.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_metrics_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

images=$(awk -F "/" '/image:/{print $NF}' components.yaml)

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Starting Metrics image download"${END}

for i in ${images};do

docker pull registry.aliyuncs.com/google_containers/$i

docker tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i

docker rmi registry.aliyuncs.com/google_containers/$i

docker push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"Metrics image download complete"${END}

}

images_download

root@k8s-master01:~# bash download_metrics_images.sh

root@k8s-master01:~# sed -ri 's@(.*image:) k8s.gcr.io/metrics-server(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' components.yaml

root@k8s-master01:~# grep "image:" components.yaml

image: harbor.raymonds.cc/google_containers/metrics-server:v0.6.1

root@k8s-master01:~# kubectl apply -f components.yaml

Check the status:

root@k8s-master01:~# kubectl get pod -n kube-system |grep metrics

metrics-server-6dcf48c9dc-pdghg 1/1 Running 0 35s

root@k8s-master01:~# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01.example.local 339m 16% 1658Mi 43%

k8s-master02.example.local 319m 15% 1177Mi 30%

k8s-master03.example.local 299m 14% 1318Mi 34%

k8s-node01.example.local 133m 6% 759Mi 19%

k8s-node02.example.local 136m 6% 723Mi 19%

k8s-node03.example.local 156m 7% 764Mi 20%
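Once kubectl top node returns data, its tabular output is easy to post-process. As a small sketch, the hypothetical helper below reads that output on stdin and prints the nodes whose MEMORY% column exceeds a threshold; it assumes the five-column layout shown above.

```shell
#!/usr/bin/env bash

# Print the names of nodes whose MEMORY% is above the given threshold.
# Expects `kubectl top node` output (header line plus five columns) on stdin.
nodes_over_memory() {
  awk -v limit="$1" 'NR > 1 { pct = $5; sub(/%/, "", pct); if (pct + 0 > limit) print $1 }'
}

# Example:
#   kubectl top node | nodes_over_memory 40
```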

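4.18 Deploying the Dashboard

The Dashboard displays the resources in the cluster. It can also show Pod logs in real time and run commands inside containers.

https://github.com/kubernetes/dashboard/releases

Check on the releases page which Kubernetes versions each Dashboard release is compatible with.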

root@k8s-master01:~# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.1/aio/deploy/recommended.yaml

[root@k8s-master01 ~]# vim recommended.yaml

...

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # add this line
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30005 # add this line
  selector:
    k8s-app: kubernetes-dashboard

...

root@k8s-master01:~# grep "image:" recommended.yaml

image: kubernetesui/dashboard:v2.6.1

image: kubernetesui/metrics-scraper:v1.0.8

root@k8s-master01:~# cat download_dashboard_images.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_dashboard_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

images=$(awk -F "/" '/image:/{print $NF}' recommended.yaml)

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Starting Dashboard image download"${END}

for i in ${images};do

docker pull registry.aliyuncs.com/google_containers/$i

docker tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i

docker rmi registry.aliyuncs.com/google_containers/$i

docker push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"Dashboard image download complete"${END}

}

images_download

root@k8s-master01:~# bash download_dashboard_images.sh

root@k8s-master01:~# docker images |grep -E "(dashboard|metrics-scraper)"

harbor.raymonds.cc/google_containers/dashboard v2.6.1 783e2b6d87ed 2 weeks ago 246MB

harbor.raymonds.cc/google_containers/metrics-scraper v1.0.8 115053965e86 3 months ago 43.8MB
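The download scripts in this chapter all repeat the same pull, tag, push and remove sequence and differ only in the source registry and the image list. One possible generalization is sketched below. The function name mirror_images and the DRY_RUN variable are assumptions made for this example; with DRY_RUN=1 the docker commands are printed rather than executed, which allows the plan to be reviewed first.

```shell
#!/usr/bin/env bash

# Mirror every image named on stdin (one "name:tag" per line)
# from the source registry path to the destination registry path.
# Set DRY_RUN=1 to print the docker commands instead of running them.
mirror_images() {
  local src=$1 dst=$2 image
  local -a run=()
  if [[ ${DRY_RUN:-0} == 1 ]]; then run=(echo); fi
  while read -r image; do
    [[ -n $image ]] || continue
    "${run[@]}" docker pull "${src}/${image}" || return 1
    "${run[@]}" docker tag "${src}/${image}" "${dst}/${image}" || return 1
    "${run[@]}" docker push "${dst}/${image}" || return 1
    "${run[@]}" docker rmi "${src}/${image}" || return 1
  done
}

# Example (registries taken from this guide):
#   awk -F "/" '/image:/{print $NF}' recommended.yaml |
#     DRY_RUN=1 mirror_images registry.aliyuncs.com/google_containers harbor.raymonds.cc/google_containers
```

Stopping at the first failed docker command avoids removing a source image whose push did not succeed.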

root@k8s-master01:~# sed -ri 's@(.*image:) kubernetesui(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' recommended.yaml

root@k8s-master01:~# grep "image:" recommended.yaml

image: harbor.raymonds.cc/google_containers/dashboard:v2.6.1

image: harbor.raymonds.cc/google_containers/metrics-scraper:v1.0.8

root@k8s-master01:~# kubectl apply -f recommended.yaml

Create the administrator account manifest admin.yaml:

root@k8s-master01:~# cat admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Apply the manifest so that the admin-user account exists before a token is requested for it:

root@k8s-master01:~# kubectl apply -f admin.yaml

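4.18.1 Logging in to the Dashboard

Add the following startup flags to the Chrome launcher to work around the certificate errors that otherwise block access to the Dashboard, see Figure 1-1:

--test-type --ignore-certificate-errors

Figure 1-1 Chrome configuration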

root@k8s-master01:~# kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.110.52.91 <none> 443:30005/TCP 13m

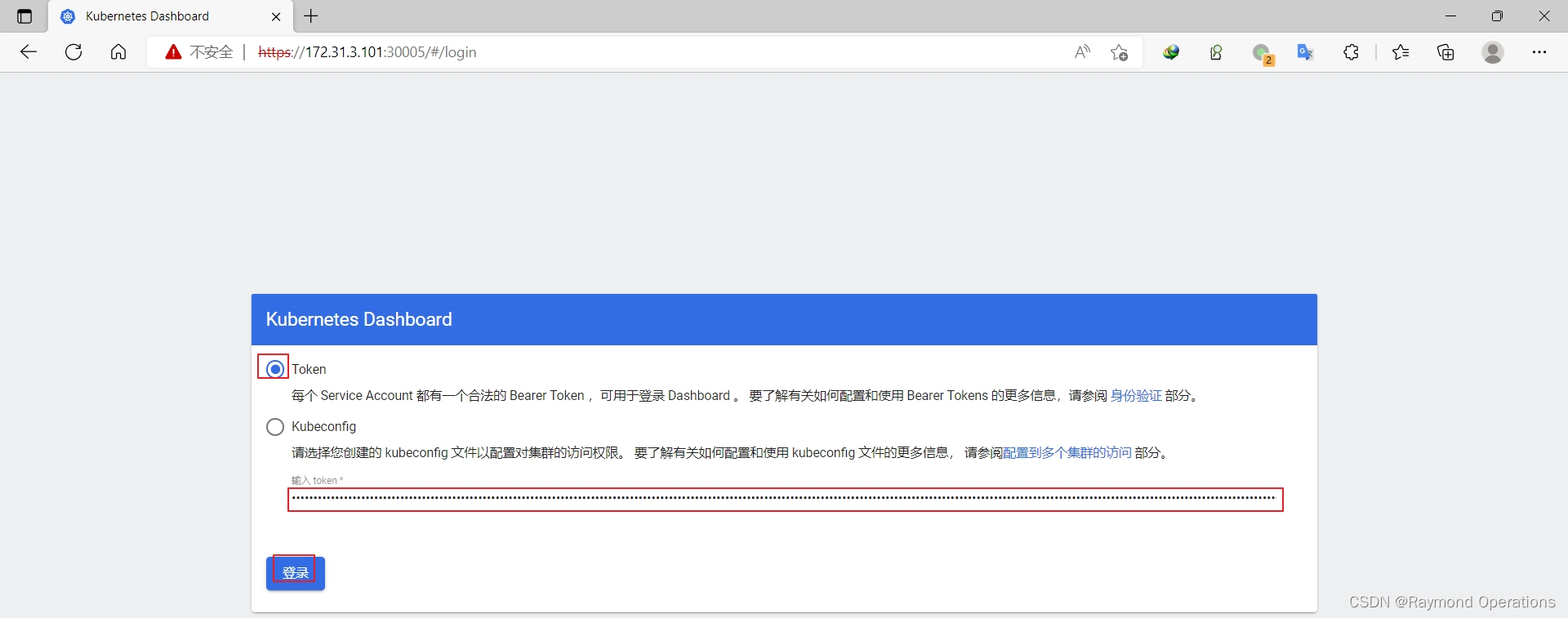

Open the Dashboard at https://172.31.3.101:30005, see Figure 1-2

Figure 1-2 Dashboard login options

4.18.2 Logging in with a token

Create a token:

root@k8s-master01:~# kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1NiIsImtpZCI6IlZ5QWEtZjkzVW51eWJkZlJESTA4ZGNvLUdXM0lIeTVkMktRakhzckxIZTQifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjYxODc5NTA1LCJpYXQiOjE2NjE4NzU5MDUsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiNmY0ZTk5N2MtNDlhNC00MDMwLWE4NTQtNWNjNGE5NzRmYWQ4In19LCJuYmYiOjE2NjE4NzU5MDUsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.mAm2OGxsRTLCL-NKJpWM3NanRLHGSE9zwf80HayF_spIkD_w5KWRWUplvTVfks6sSgcZT38IzVZAEtHWwOf_qvDZNfp5-aVq9t4eb_jnbtRFSZVpDarF-AeHNAlbZk--DI-U--nsc8xsl-YjmVjhAYqL5xrqAPjnZdo7ewTIuj94MWOcwN4I3OjJCq0vPoOqTf2r4pkgadjZJIV1Shvcn304Ol-Sxt0OBtKhwrXQDKGvJGGBCxQdq8LD8uFKRSf1-gjwOgK_f617UzoDjpZB0wy0JodS0Q0G8HOMs1pmpiqVIhi_azcd8-961Q4eynDuHAKO9Hgt3gRp5wxhqV5L1A
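The token printed above is a JWT, so its middle segment can be decoded to see which account it belongs to and when it expires. The sketch below assumes a base64 command that accepts -d; jwt_payload is a hypothetical helper name. Decoding the token above shows that exp minus iat is 3600 seconds, so it is valid for one hour; kubectl create token accepts a --duration flag to request a different lifetime.

```shell
#!/usr/bin/env bash

# Print the decoded payload (second segment) of a JWT.
jwt_payload() {
  local seg
  # JWTs use the URL-safe base64 alphabet without padding; convert it back.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  while (( ${#seg} % 4 )); do seg+="="; done
  printf '%s' "$seg" | base64 -d
}

# Example:
#   jwt_payload "$(kubectl -n kubernetes-dashboard create token admin-user)"
```

Only the payload is decoded here; the signature is not verified, so this is a reading aid and not a validity check.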

Paste the token into the Token field and click Sign in to access the Dashboard, see Figure 1-3:

4.18.3 Logging in to the Dashboard with a kubeconfig file

root@k8s-master01:~# cp /etc/kubernetes/admin.conf kubeconfig

Edit the copied file and add the token from section 4.18.2 as a token field under the kubernetes-admin user, as on the last line of the file shown here:

root@k8s-master01:~# cat kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeU1EZ3pNREV6TXpZeU5sb1hEVE15TURneU56RXpNell5Tmxvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTk5JCnRrSmoyRlUrcFRROG5LcjN6dlY1VXhqVGt1YUZNTk1yUG5ubXJOUlJRN1dFRWVIMG1GYkprWjRXbGpkYk0xZ1UKaFRUN2xLanpUQTdHUHdST3QxMytKVWdEdjlvQ0Z2NGRHTitRMXp5S1I0V2UzTzhIWUNyUUxjaUkwWGRXU1VDQwpWL3lMR1ByLzRHbEtmZlZzTjIrU1Fjc0FjaGFUc29aRHpGZ1lNN2RwTjRlSlR5NG1IZmREY3JSM2dGRURJczJMCkp4VHMyUzk4WU02YWhlb0dSM3diMzZvZm5IYldHVWN3ZWNoa29GUGJ3R1FuWUk0bU9PUmpPWnRWZURsaDZLVGgKZEQxNnN1bTdWWGc1MSsvbGJSTnZLMGxuN3Q1d3ZjV1JPRWJwOHhDbC8vcVYrWnZrd1BwdVphblkyM3B5UzJoZworY3VtekQza1VDQTF3TXNZeEEwQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZMUnNpb250SHk3UUlSbnl4QWtleWlLeVowSFVNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBRlBVUjRLNWJmL2UxeHA5UEc1TgpyRERyL3kyYU1sL1plYStNMkJsZjhJbWJDcEYxR2F1WXIxNjdRTWRXanl3SGVvL1RYcjhPMGtEWHJBNGEyWXkrClJhZzk1RG9FajBJWEo4czhQRHg2ZnEwMEJZUnJjZDc1QmZZZDYxRUJpblFZcXIrYjNMaHVFQVlndDRXMXpDQWsKQWJVdUxKTDh3N3A3ZTVlbHVNejRMNmxVeDZWbVZ1M3ZnUTFTT1REUWh1enNTNEpobTUxL2tPcDJHRkI0d0NJcApRWW5HZGkzanJYbndYWDFPSUdPNUdRRjB4bkxpSWkycStlRS9OelNQMG9FQ0NNZk9ISDk1aTAycHI2WHkyaVhUCmVsM0p6SDV2cHc4TWpsa2NzOWhCK1FRQU10blVRQmszR01zbmpsMnh5OTZCS3dERHRUS21KMGw2YVdXY2FjRFoKWWZVPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://kubeapi.raymonds.cc:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {

}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJVENDQWdtZ0F3SUJBZ0lJZStjUDgyY1dFcll3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TWpBNE16QXhNek0yTWpaYUZ3MHlNekE0TXpBeE16TTJNamhhTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXdKdlVBcEtTbnFkN1luVHgKSVFqTTNoaEFMUHM3ZlJKNzl5eDZGa29Fa0o4N1JqaXlkdVVhS3RWSzRoQTRMYW5STk5kd3JNa3A3RGFBbVN5ZwpGS1Y1d25TMDRYWkJXenJCRGVCNG5TczZLNlM3ekxRdzY2cmtyRHNyU3RGT2FkU2dhWW9HTml1UXcwUVljclNmClVLU0NYQlVMODkzQmlKaDllbWllMmdUYm9jT2hXOW5mSXdhemsxZ3V6UGJJMzkzQkNvTGF6WkNlNW54N3phZG8KZ1dSSGQxSVZydkxaUlVvVmhQdjFVd2UrWGxscUViU0paTUk4dVB6K2owT2NvNXFYcTJ1T2pOT1pVS0lPbnFSUApXdGxwSUROcXpPKzRIS0ZmUk1oT2hTcU94SjZMZnA4R284TVBGMkRLcWhDZWVFSlZpU1dKdG5CZHNXWFE2VWpSCkhPT1Yxd0lEQVFBQm8xWXdWREFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RBWURWUjBUQVFIL0JBSXdBREFmQmdOVkhTTUVHREFXZ0JTMGJJcUo3Ujh1MENFWjhzUUpIc29pc21kQgoxREFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBYlM4Y01ZTTF1eVVoUEhHQ0JFWW5xbnlVOXdaRTRCekEzRXlqCkduNFg2L09kUzUxR3BWOExSdGMxN1EzZjgvZFRURkxvWkptV0MxNFhhM2dQWnMrZ1lmL0w4ZWtQZ1MzenVJT0IKT2Y5ZXovY2xWSHdWcnFySzJQL01PU2tBRWlqNWR5UGR6aFZBRU44Rkd2M1JVVjZUNDNKaUx3K2R1b3JwRi9PcQowRWp0UlhEK2loeklVQm9xMzdSM0xTcmdzZXhiekIyUldEbm5YVllWdkFYZENkck1JUno1T0xzOENzYk5MOEtjCkwyS1p6bW02OTl4MUtYR09Lek51R2xkZWFUM01YVGxuWDVIWG4wRkdmOUliSW5EWmdMd3Y2RVhQZFhKa3k2MTMKOHBIU2hPa3Npby9sMU1BdVpBR0hkYVpOT1hHTk1vbVROWVpZM1dsRk15S0EySTFlcXc9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBd0p2VUFwS1NucWQ3WW5UeElRak0zaGhBTFBzN2ZSSjc5eXg2RmtvRWtKODdSaml5CmR1VWFLdFZLNGhBNExhblJOTmR3ck1rcDdEYUFtU3lnRktWNXduUzA0WFpCV3pyQkRlQjRuU3M2SzZTN3pMUXcKNjZya3JEc3JTdEZPYWRTZ2FZb0dOaXVRdzBRWWNyU2ZVS1NDWEJVTDg5M0JpSmg5ZW1pZTJnVGJvY09oVzluZgpJd2F6azFndXpQYkkzOTNCQ29MYXpaQ2U1bng3emFkb2dXUkhkMUlWcnZMWlJVb1ZoUHYxVXdlK1hsbHFFYlNKClpNSTh1UHorajBPY281cVhxMnVPak5PWlVLSU9ucVJQV3RscElETnF6Tys0SEtGZlJNaE9oU3FPeEo2TGZwOEcKbzhNUEYyREtxaENlZUVKVmlTV0p0bkJkc1dYUTZValJIT09WMXdJREFRQUJBb0lCQUJ0Ty9NUlFtOUU2MWRlagoxUHhtRHdYK1Vqc09jK1RMMWgrNWdxWGVZTDlRbEVya2h3a3Nlb1ZRTUluVTJ1SStqWmI4Wk5GYXhFTGxoMTR3CllaSUwxRE9wOEd0M0pOVVdnNERBTHRtNTQwbUUxY3UwVUt0WlU0ckg2TjkyeGJOam5rclljd0VETkVjN1JHd2YKQitlYks1QjZ1M01jSWZDSURtSm9xdjBtYXkySUhEUVdWLzJoTFVRdUxVOVJDNzFpcjFYTk5qTEdwNTQvY3U0SQpIallzQ1NwVUJBd1VxNkNxcS9tZmZwbklDbGsrQ2x4MXBYRk55SFg2L1g5UDlKbjlxY0YwajEzUURqT21rd1lJCmZ3VGhNVm8zejJiQUxVVUZYMDdTTGROZGIwQW92RUhwS1lUVGtBbWZqZmlvbng1bnNmemFZZ0UrSm9SV1NMU0sKK1lndTE2RUNnWUVBNkhZMldKUGJvR055L00zcFY4RVJLaGtGb0FRbDhEaUEvQkZpQ1dOclBLSlRpZ0NsM3hPMwpPQXVuYk5GRVNpeVA0Q1JTdzI4am0wMktkd0Y1VHZZN0orWEZzMk9BN0dRK2xHaDl0bWJCNlEyUm13ZnZrcENkCmJldzBva0MvNVI3aEozSXJGOGUwTFBLdTB4ZmxqQWdYRU1lZnpUcnAyQU9YRkxKRlFpa2x5T01DZ1lFQTFCeVAKUHdubXg2aytUdFFDT2l3dFU5RVRHdFFWTmFjUXZFNVF0emRCNTRDTCt0OVNLalI4WGIzSHpIZHhJUkVMNURscgozQWRpTU9qZmJIMnBJb2pWWEM0cWhGM254MkZLdCtoNHExSENDREJheS9PODdEVnZET1FwaDc5c1BtQnNSVHF5CjM5RTZmK3VmSWphZy9xRHBQeDdhZzJoMHlVWk9OZWtjRTR4NHRYMENnWUVBczlMbVhZVWJpNm9DeEk5aEo3SkIKWGVoM1VuNkMvcDRuSVZjdEdJZ2c1NG5HeCtXU2FzdXNteDFneWF2a2dPQ1I5OWtCY1E5all2c0wxdDE4QXRvMQpqcnFQUWlNQ0UxdkVrVGQzc0Fjemo5NGdPZVpjckd0VWJUa2d5amIrZXZaMVEvZHNZSHZxNUM1amtRWldXd25UCkZmYm1wbk1oZkNuaTBHN0xacysvMi9NQ2dZRUF4NWU4UDRCc3RqS09uQlNwcDkzTUpWUFdtMmM0TWcxc0ZSWEkKcEM4T0IrNlJTZGQ4OUpRQTl5RE84cHJ1VEVSRElWWGJKZWVZd1JkUXJrRXN0MzkwN2RIUFZsRWEraVdWN3FxRgphZ2g4QWNLbW5jWlVYeDBFeTJlam9NWkM4QXRCdG44K3RKZW9hWmpwWElOMVNVVlhWbnNNK1p5QVVLbWtqTncyCi9Eb3hsKzBDZ1lCSGxjaVhJTnV6azlMWER
CcDhmK2JiVk5yWWhtd1M1cWVhUS90WE1SYkpNZUdHVzlPNVhGRjgKR09iY3U3TUltSWVYV2JDNHkvUnRMc3FDUkJCa1U5aVovaTYrTTNSWXRPU2NVSXV2Um8zb25pL2lKVWNqdXpucQpFYVdxQ1o3eFBTTHIyRDVsZzNDUXpGOVZtaXpveENoMjY0cFlzUlBzUVZCUmhRZlFHVUhZY2c9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlZ5QWEtZjkzVW51eWJkZlJESTA4ZGNvLUdXM0lIeTVkMktRakhzckxIZTQifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjYxODc5NTA1LCJpYXQiOjE2NjE4NzU5MDUsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiNmY0ZTk5N2MtNDlhNC00MDMwLWE4NTQtNWNjNGE5NzRmYWQ4In19LCJuYmYiOjE2NjE4NzU5MDUsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.mAm2OGxsRTLCL-NKJpWM3NanRLHGSE9zwf80HayF_spIkD_w5KWRWUplvTVfks6sSgcZT38IzVZAEtHWwOf_qvDZNfp5-aVq9t4eb_jnbtRFSZVpDarF-AeHNAlbZk--DI-U--nsc8xsl-YjmVjhAYqL5xrqAPjnZdo7ewTIuj94MWOcwN4I3OjJCq0vPoOqTf2r4pkgadjZJIV1Shvcn304Ol-Sxt0OBtKhwrXQDKGvJGGBCxQdq8LD8uFKRSf1-gjwOgK_f617UzoDjpZB0wy0JodS0Q0G8HOMs1pmpiqVIhi_azcd8-961Q4eynDuHAKO9Hgt3gRp5wxhqV5L1A

5. Required configuration changes

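Switch kube-proxy to ipvs mode. The ipvs settings were commented out when the cluster was initialized, so kube-proxy is still running in iptables mode and the change has to be made by hand:

Run the following on the master01 node: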

root@k8s-master01:~# curl 127.0.0.1:10249/proxyMode

iptables

root@k8s-master01:~# kubectl edit cm kube-proxy -n kube-system

...

mode: "ipvs"
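The `kubectl edit` step above is interactive. For scripted setups, one possible non-interactive variant is sketched below. It assumes the kube-proxy ConfigMap contains exactly one line that starts (after indentation) with `mode:`, which is the usual shape of a kubeadm-generated ConfigMap but should be checked on your cluster first. The helper name `set_proxy_mode` is hypothetical, and the kubectl pipeline is left as a comment because it needs a live cluster.

```shell
#!/bin/bash
# Sketch: rewrite the `mode:` field of a kube-proxy configuration read from stdin.
# $1 is the desired proxy mode (for example: ipvs).
# Only lines whose first non-blank token is `mode:` are changed, so fields such as
# `detectLocalMode:` are left alone.
set_proxy_mode() {
  sed -E "s/^([[:space:]]*mode:).*/\1 \"$1\"/"
}

# Assumed usage against a live cluster (not executed here):
#   kubectl get cm kube-proxy -n kube-system -o yaml \
#     | set_proxy_mode ipvs \
#     | kubectl apply -f -
```

Running the function on a saved copy of the ConfigMap first and diffing the result is a cheap way to confirm that only the intended line changes.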

Restart the kube-proxy Pods so that they pick up the new configuration:

root@k8s-master01:~# kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system

daemonset.apps/kube-proxy patched
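The patch above works because adding a timestamp annotation changes the DaemonSet's pod template, which makes the controller roll out new kube-proxy Pods. The nested escaping is easy to get wrong, so the sketch below builds the same JSON with `printf`. The helper name `restart_patch` is hypothetical, and the kubectl call is shown only as a comment because it needs a live cluster.

```shell
#!/bin/bash
# Sketch: build the patch body that stamps the pod template with a value.
# $1 is the annotation value; the tutorial uses the current epoch time.
restart_patch() {
  printf '{"spec":{"template":{"metadata":{"annotations":{"date":"%s"}}}}}' "$1"
}

# Assumed usage, mirroring the command above (not executed here):
#   kubectl patch daemonset kube-proxy -n kube-system -p "$(restart_patch "$(date +%s)")"
```

On recent kubectl versions, `kubectl rollout restart daemonset kube-proxy -n kube-system` should achieve the same effect without a hand-written patch.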

Verify the kube-proxy mode:

root@k8s-master01:~# curl 127.0.0.1:10249/proxyMode

ipvs

root@k8s-master01:~# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.17.0.1:30005 rr

-> 192.169.111.132:8443 Masq 1 0 0

TCP 172.17.0.1:31101 rr

-> 192.167.195.129:80 Masq 1 0 0

-> 192.169.111.129:80 Masq 1 0 0

TCP 172.31.3.101:30005 rr

-> 192.169.111.132:8443 Masq 1 0 0

TCP 172.31.3.101:31101 rr

-> 192.167.195.129:80 Masq 1 0 0

-> 192.169.111.129:80 Masq 1 0 0

TCP 192.162.55.64:30005 rr

-> 192.169.111.132:8443 Masq 1 0 0

TCP 192.162.55.64:31101 rr

-> 192.167.195.129:80 Masq 1 0 0

-> 192.169.111.129:80 Masq 1 0 0

TCP 10.96.0.1:443 rr

-> 172.31.3.101:6443 Masq 1 0 0

-> 172.31.3.102:6443 Masq 1 0 0

-> 172.31.3.103:6443 Masq 1 1 0

TCP 10.96.0.10:53 rr

-> 192.162.55.65:53 Masq 1 0 0

-> 192.162.55.67:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 192.162.55.65:9153 Masq 1 0 0

-> 192.162.55.67:9153 Masq 1 0 0

TCP 10.101.15.4:8000 rr

-> 192.170.21.198:8000 Masq 1 0 0

TCP 10.102.2.33:443 rr

-> 192.170.21.197:4443 Masq 1 0 0

TCP 10.106.167.169:80 rr

-> 192.167.195.129:80 Masq 1 0 0

-> 192.169.111.129:80 Masq 1 0 0

TCP 10.110.52.91:443 rr

-> 192.169.111.132:8443 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 192.162.55.65:53 Masq 1 0 0

-> 192.162.55.67:53 Masq 1 0 0
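The `ipvsadm -ln` listing above is long, and the thing worth checking is that every virtual service has at least one real server behind it. As a rough aid, the sketch below summarizes the listing into one line per virtual service. The function name `ipvs_backend_count` is hypothetical, and the parsing assumes the default `ipvsadm -ln` layout shown above (service lines starting with `TCP` or `UDP`, backend lines starting with `->`).

```shell
#!/bin/bash
# Sketch: read `ipvsadm -ln` output on stdin and print
# "<protocol> <virtual address:port> <number of real servers>" per virtual service.
ipvs_backend_count() {
  awk '
    # A new virtual service starts: flush the previous one, then reset the counter.
    $1 == "TCP" || $1 == "UDP" {
      if (svc != "") print svc, n
      svc = $1 " " $2; n = 0; next
    }
    # Backend lines look like "-> 10.0.0.1:80 Masq 1 0 0"; the header line
    # "-> RemoteAddress:Port ..." is skipped because its address is not numeric.
    $1 == "->" && $2 ~ /^[0-9]/ { n++ }
    END { if (svc != "") print svc, n }
  '
}

# Assumed usage on a node (not executed here):
#   ipvsadm -ln | ipvs_backend_count
```

A service reported with a count of 0 has no ready endpoints, which is usually the first thing to look at when a Service address does not answer.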