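1. Background

Our company builds B2B (enterprise-facing) products, and upgrades for large enterprise customers almost always surface some problems. To reduce release risk, we decided to set up an internal test environment for important customers that are still running older versions. The environment depends on middleware such as RocketMQ and Redis, and requesting server resources is cumbersome, so we chose to run these services on Kubernetes (k8s). The overall deployment architecture is Jenkins + Kuboard + k8s.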

2. Writing the Dockerfile for a business service

Taking the user service as an example:

FROM hub.xx/imas/openjdk:8-jdk-alpine
#FROM hub.xx/imas/openjdk:8-jdk-alpine-swagent

# JVM startup options
ENV JAVA_OPTS=""

# Application settings
ENV BOOTSTRAP_NAME="bootstrap-prod"
ENV PROFILE="prod"

COPY target/imas-user.jar /opt/imas-user.jar

RUN apk add --update font-adobe-100dpi ttf-dejavu fontconfig

ENTRYPOINT java ${JAVA_OPTS} -Djava.security.egd=file:/dev/./urandom -jar /opt/imas-user.jar --spring.cloud.bootstrap.name=${BOOTSTRAP_NAME} --spring.cloud.config.profile=${PROFILE}
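Because the ENTRYPOINT is written in shell form, the ${JAVA_OPTS}, ${BOOTSTRAP_NAME} and ${PROFILE} placeholders are expanded by the shell when the container starts, so each environment can override them (for example with `docker run -e` or an `env` entry in the workload). The sketch below only reproduces that expansion in plain shell so the resulting command line can be inspected. The function name `render_entrypoint` and the `-Xmx512m` value are illustrative assumptions, not part of the original project.

```shell
#!/bin/bash
# Hypothetical helper: print the command line that the shell-form ENTRYPOINT
# expands to for the given environment values.
render_entrypoint() {
    local JAVA_OPTS="$1"
    local BOOTSTRAP_NAME="$2"
    local PROFILE="$3"
    # Left unquoted on purpose: an empty JAVA_OPTS simply disappears,
    # just as it does in the Dockerfile's shell-form ENTRYPOINT.
    echo java ${JAVA_OPTS} -Djava.security.egd=file:/dev/./urandom \
        -jar /opt/imas-user.jar \
        --spring.cloud.bootstrap.name=${BOOTSTRAP_NAME} \
        --spring.cloud.config.profile=${PROFILE}
}

# Defaults taken from the Dockerfile (JAVA_OPTS is empty).
render_entrypoint "" "bootstrap-prod" "prod"

# Example override (assumed value), as if JAVA_OPTS were set at run time.
render_entrypoint "-Xmx512m" "bootstrap-prod" "prod"
```

With the Dockerfile defaults, the first call prints the same command that section 4.7 lists for the user service, apart from the bootstrap name and profile values.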

3. Writing the Jenkinsfile

pipeline {
    agent any

    options {
        timestamps()
        disableConcurrentBuilds()
        timeout(time: 5, unit: 'MINUTES')
        buildDiscarder(logRotator(numToKeepStr: '3'))
    }

    environment {
        // Pieces used to build the image tag
        GIT_TAG = sh(returnStdout: true, script: 'git describe --tags --always | cut -d"-" -f1').trim()
        GIT_HASH = sh(returnStdout: true, script: 'git log --oneline -1 business-services/user-parent/imas-user | cut -d" " -f1').trim()
        BUILD_DATE = sh(returnStdout: true, script: 'date +%y%m%d%H%M').trim()
    }

    parameters {
        // Project path and project name
        string(name: 'PROJECT_PATH', defaultValue: 'business-services/user-parent/imas-user', description: 'Project path')
        string(name: 'PROJECT_NAME', defaultValue: 'imas-user', description: 'Project name')
    }

    stages {
        // Build the jar with Maven
        stage('Maven Build') {
            steps {
                sh 'mvn clean install -pl ${PROJECT_PATH} -am'
            }
        }

        // Build the Docker image and push it to the image registry
        stage('Deploy to PRO') {
            when {
                equals expected: 'origin/xxx', actual: GIT_BRANCH
            }
            environment {
                // Versioned image name and the "latest" alias
                IMAGE_NAME = sh(returnStdout: true, script: 'echo ${HARBOR_ADDR}/imas/xxx-${PROJECT_NAME}:${GIT_TAG}-${BUILD_DATE}-${GIT_HASH}').trim()
                IMAGE_NAME_PRO = sh(returnStdout: true, script: 'echo ${HARBOR_ADDR}/imas/xxx-${PROJECT_NAME}:latest').trim()
                // Image registry credentials
                HARBOR_CREDS = credentials('globalvpclubcrdes')
            }
            steps {
                // Double quotes so that Groovy interpolates IMAGE_NAME
                echo "Building image ${IMAGE_NAME}"
                sh 'docker login -u ${HARBOR_CREDS_USR} -p ${HARBOR_CREDS_PSW} ${HARBOR_ADDR}'
                sh 'docker build -t ${IMAGE_NAME} business-services/user-parent/imas-user'
                sh 'docker push ${IMAGE_NAME}'
                // Version used by the running environment
                sh 'docker tag ${IMAGE_NAME} ${IMAGE_NAME_PRO}'
                sh 'docker push ${IMAGE_NAME_PRO}'
                sh 'docker rmi ${IMAGE_NAME}'
                sh 'docker rmi ${IMAGE_NAME_PRO}'
            }
        }

        // Deploy to the PRO k8s cluster
        stage('Deploy to PRO_k8s') {
            when {
                equals expected: 'origin/xxx', actual: GIT_BRANCH
            }
            steps {
                sshPublisher(publishers: [sshPublisherDesc(configName: '5G-JENKINS-SLAVE154.3', transfers: [sshTransfer(cleanRemote: false, excludes: '', execCommand: 'sh /data/CICD/xxx//imas-user.sh', execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: ' /data/CICD/', remoteDirectorySDF: false, removePrefix: '', sourceFiles: 'pom.xml')], usePromotionTimestamp: false, useWorkspaceInPromotion: false, verbose: false)])
            }
        }
    }

    post {
        always {
            sh 'echo "Cleaning up the workspace"'
            deleteDir()
        }
    }
}

[Note]

The Jenkinsfile exists to release the service and to push the business service image to the company registry.
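The versioned image name in the pipeline is assembled from three values: the Git tag (cut at the first "-"), the build timestamp, and the short commit hash. The following is a minimal sketch of that naming logic in plain shell, so it can be tried without Jenkins. The function names and all sample values (including hub.example.com standing in for HARBOR_ADDR) are assumptions for illustration only.

```shell
#!/bin/bash
# Hypothetical helpers mirroring the naming logic in the Jenkinsfile.

# `git describe --tags --always` can print something like v1.2.0-5-gabc1234;
# the pipeline keeps only the text before the first "-".
strip_describe_suffix() {
    printf '%s\n' "$1" | cut -d"-" -f1
}

# Compose <registry>/imas/xxx-<project>:<tag>-<date>-<hash>, the same layout
# as IMAGE_NAME in the pipeline.
compose_image_name() {
    local harbor_addr="$1" project_name="$2" git_tag="$3" build_date="$4" git_hash="$5"
    printf '%s/imas/xxx-%s:%s-%s-%s\n' \
        "$harbor_addr" "$project_name" "$git_tag" "$build_date" "$git_hash"
}

# Sample values only; hub.example.com stands in for the real HARBOR_ADDR.
tag="$(strip_describe_suffix "v1.2.0-5-gabc1234")"
compose_image_name "hub.example.com" "imas-user" "$tag" "2206171030" "abc1234"
```

One consequence of cutting at the first "-" is that a Git tag which itself contains a hyphen (for example v1.2-rc1) is shortened to the part before it (v1.2).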

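4. Creating services and applications in Kuboard

4.1 Create a new namespace

Internally, each environment is isolated in its own namespace.

4.2 Create the configuration the project needs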

---
apiVersion: v1
data:
  ALLOWED_ORIGINS: 'http://xx.cn/'
  AUTH_HOST: imas-auth.xxx
  CLOUD_CONFIG_HOST: platform-config.xxx
  CLOUD_CONFIG_PORT: '9130'
  CLOUD_CONFIG_PROFILE: xxx
  CONFIG_URL: 'http://platform-config.xxx:9130'
  CONSUL_HOST: consul.xxx
  CONSUL_PORT: '8500'
  DB_HOST: 172.16.11.21
  DB_PORT: '3306'
  DB_PWD: riX$F7jK
  DB_USERNAME: root
  FALLBACK_DOMAIN: 'http://gbch5tst.imas.vpclub.cn'
  LOGSTASH_URL: '172.16.154.xxx:10007'
  REDIS_HOST: redis.xxx
  REDIS_PORT: '6379'
  REDIS_PWD: '123456'
  ROCKET_MQ_URL: 'mqnamesrv:9876'
  SSO_URL: 'https://media.imas.vpclub.cn/attachment/v1/file'
  TRACER_URL: '121.37.214.xx:22122'
  XXL_JOB_ACCESS_TOKEN: prod
  XXL_JOB_URL: 'http://xxxx.cn/xxl-job-admin/'
kind: ConfigMap
metadata:
  name: config
  namespace: xxx
  resourceVersion: '70545837'

4.3 Create the Redis service in k8s

ConfigMap-redis.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-conf
  namespace: guangben
data:
  redis.conf: |
    bind 0.0.0.0
    port 6379
    requirepass 123456
    appendonly yes
    pidfile /data/middleware-data/redis/log/redis-6379.pid
    cluster-config-file nodes-6379.conf
    dir /data/middleware-data/redis/data/
    logfile "/data/middleware-data/redis/log/redis-6379.log"
    cluster-node-timeout 5000
    protected-mode no

redis-StatefulSet.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis
  namespace: guangben
spec:
  replicas: 1
  serviceName: redis
  selector:
    matchLabels:
      name: redis
  template:
    metadata:
      labels:
        name: redis
    spec:
      initContainers:
        - name: init-redis
          image: busybox
          command: ['sh', '-c', 'mkdir -p /data/middleware-data/redis/log/;mkdir -p /data/middleware-data/redis/conf/;mkdir -p /data/middleware-data/redis/data/']
          volumeMounts:
            - name: data
              mountPath: /data/middleware-data/redis/
      containers:
        - name: redis
          image: redis:5.0.6
          imagePullPolicy: IfNotPresent
          command:
            - sh
            - -c
            - "exec redis-server /data/middleware-data/redis/conf/redis.conf"
          ports:
            - containerPort: 6379
              name: redis
              protocol: TCP
          volumeMounts:
            - name: redis-config
              mountPath: /data/middleware-data/redis/conf/
            - name: data
              mountPath: /data/middleware-data/redis/
      volumes:
        - name: redis-config
          configMap:
            name: redis-conf
        - name: data
          hostPath:
            path: /data/middleware-data/redis/

Service-redis.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    name: redis
  name: redis
  namespace: guangben
spec:
  type: NodePort
  ports:
    - name: redis
      port: 6379
      targetPort: 6379
      nodePort: 30020
  selector:
    name: redis

Run the create commands:

kubectl apply -f ConfigMap-redis.yaml
kubectl apply -f redis-StatefulSet.yaml
kubectl apply -f Service-redis.yaml

[Source files] https://download.csdn.net/download/qq_35008624/85684462

4.4 Create the RocketMQ service in k8s

(1) Prepare the pre-configured RocketMQ package

(2) Write the Dockerfile

FROM hub.xxn/imas/openjdk:8-jdk-alpine

RUN rm -f /etc/localtime \
    && ln -sv /usr/share/zoneinfo/Asia/Shanghai /etc/localtime \
    && echo "Asia/Shanghai" > /etc/timezone

ENV LANG en_US.UTF-8

ADD rocketmq4.7.tar.gz /usr/local/

RUN mkdir -p /data/imas/base_soft/rocketmq4.7/data/store

CMD ["/bin/bash"]

(3) Build and push the image

docker build -t hub.xx/imas/rocketmq-470 .
docker push hub.xx/imas/rocketmq-470:latest

[Source files] https://download.csdn.net/download/qq_35008624/85684462

4.5 Create the rocketmq-console service in k8s

(1) Prepare the rocketmq-console-ng-1.0.1.jar package

(2) Write the Dockerfile

FROM hub.xx/imas/openjdk:8-jdk-alpine

# JVM startup options
ENV JAVA_OPTS="-Drocketmq.config.namesrvAddr=mqnamesrv:9876 -Drocketmq.config.isVIPChannel=false"

COPY rocketmq-console-ng-1.0.1.jar /opt/rocketmq-console-ng-1.0.1.jar

ENTRYPOINT java ${JAVA_OPTS} -Djava.security.egd=file:/dev/./urandom -jar /opt/rocketmq-console-ng-1.0.1.jar --server.port=10081

(3) Build and push the image

docker build -t hub.xx/imas/rocketmq-console .
docker tag hub.xx/imas/rocketmq-console hub.xx/imas/rocketmq-console:1.0.1
docker push hub.xx/imas/rocketmq-console:latest
docker push hub.xx/imas/rocketmq-console:1.0.1

[Source files] https://download.csdn.net/download/qq_35008624/85684462

After deployment, the services appear in Kuboard as shown below:

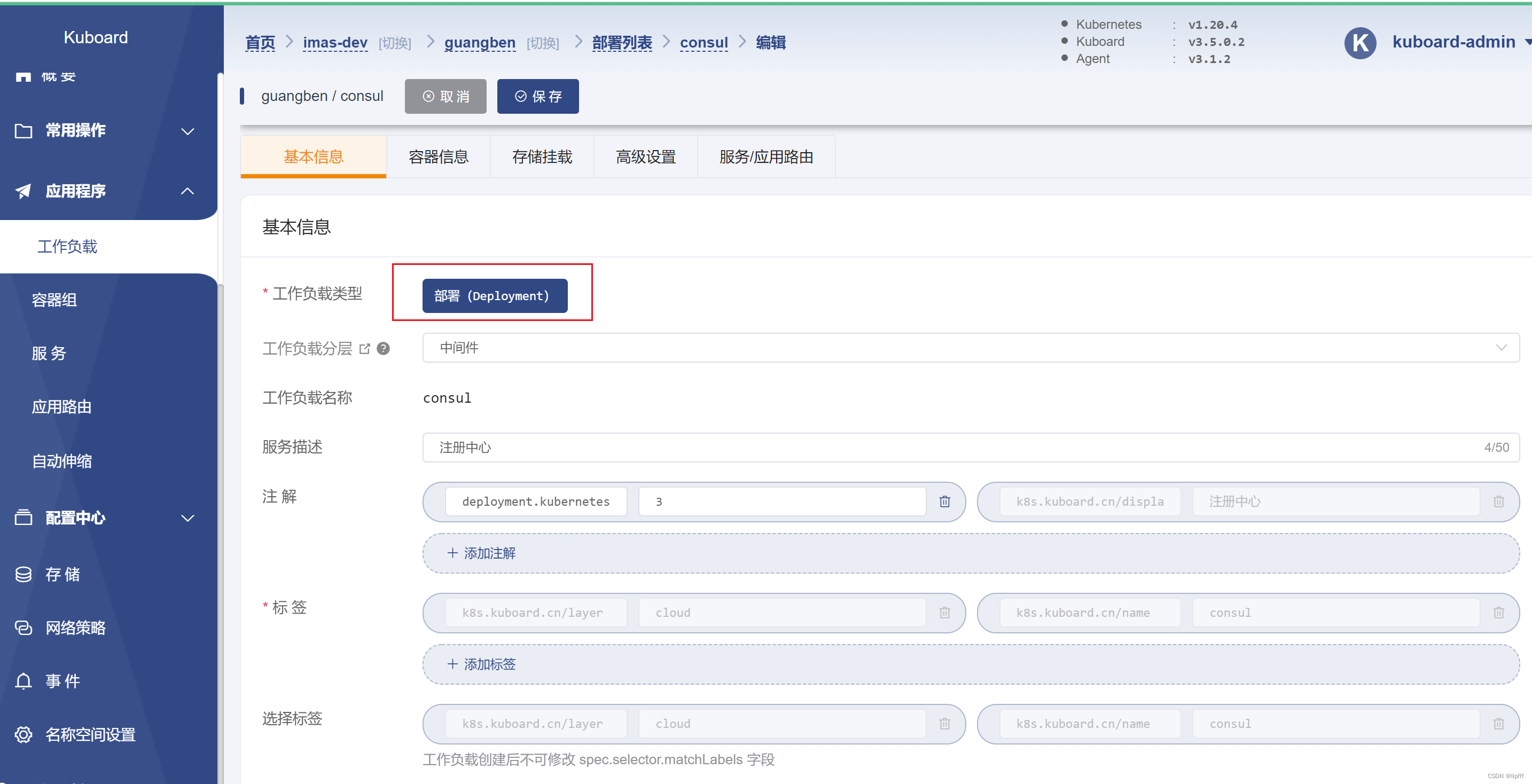

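4.6 Create the Consul registry in k8s (standalone, dev)

Use Deployment as the workload type.

[Note] The standalone version is simple and can be created directly in Kuboard. One thing to watch when configuring the Ingress domain: the domain must resolve to the public IP that fronts the internal server (the k8s cluster master). The nginx instance on the public side only needs to forward to the internal IP, proxying to ports 80 and 443 on the host where the ingress controller runs.

[Extension] For a clustered Consul deployment, use a StatefulSet workload.

Create it from the following YAML files.

(1) Create the NFS provisioner

k8s-serviceAccount.yaml

# Service account and RBAC rules for the nfs-client provisioner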

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: imas
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: imas
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: imas
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: imas
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: imas
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

k8s-serviceAccount.yaml

# Deployment of the nfs-client provisioner

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: imas
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.12.142
            - name: NFS_PATH
              value: /data/projects/consul/consul-data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.12.142
            path: /data/projects/consul/consul-data

(2) Create the StorageClass (needed when data is stored through a PVC)

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: consul-sc
  namespace: imas
provisioner: fuseim.pri/ifs
reclaimPolicy: Retain

(3) Create k8s-consul-statefulset-nfs.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: imas
  name: consul
spec:
  serviceName: consul
  selector:
    matchLabels:
      app: consul
      component: server
  replicas: 3
  template:
    metadata:
      labels:
        app: consul
        component: server
    spec:
      terminationGracePeriodSeconds: 10
      containers:
        - name: consul
          image: hub.vpclub.cn/imas/consul:1.9.1
          args:
            - "agent"
            - "-server"
            - "-bootstrap-expect=3"
            - "-ui"
            - "-data-dir=/consul/data"
            - "-bind=0.0.0.0"
            - "-client=0.0.0.0"
            - "-advertise=$(PODIP)"
            - "-retry-join=consul-0.consul.$(NAMESPACE).svc.cluster.local"
            - "-retry-join=consul-1.consul.$(NAMESPACE).svc.cluster.local"
            - "-retry-join=consul-2.consul.$(NAMESPACE).svc.cluster.local"
            - "-domain=cluster.local"
            - "-disable-host-node-id"
          env:
            - name: PODIP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - containerPort: 8500
              name: ui-port
            - containerPort: 8400
              name: alt-port
            - containerPort: 53
              name: udp-port
            - containerPort: 8443
              name: https-port
            - containerPort: 8080
              name: http-port
            - containerPort: 8301
              name: serflan
            - containerPort: 8302
              name: serfwan
            - containerPort: 8600
              name: consuldns
            - containerPort: 8300
              name: server
          volumeMounts:
            - name: consuldata
              mountPath: /consul/data
  volumeClaimTemplates:
    - metadata:
        name: consuldata
      spec:
        storageClassName: consul-sc
        accessModes: [ "ReadWriteMany" ]
        resources:
          requests:
            storage: 5Gi
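The -retry-join arguments in the StatefulSet rely on the stable DNS names that each Pod of a StatefulSet gets through its headless Service: <statefulset>-<ordinal>.<service>.<namespace>.svc.cluster.local. As a minimal sketch, the list can be generated for any replica count so that it stays in step with `replicas` and `-bootstrap-expect`. The function name `consul_retry_join_args` is hypothetical, and the sketch assumes the default cluster domain cluster.local.

```shell
#!/bin/bash
# Hypothetical helper: print one -retry-join argument per StatefulSet replica.
# Assumes the default cluster domain "cluster.local".
consul_retry_join_args() {
    local name="$1" service="$2" namespace="$3" replicas="$4"
    local i
    for ((i = 0; i < replicas; i++)); do
        # "--" stops printf from treating the leading "-" as an option.
        printf -- '-retry-join=%s-%d.%s.%s.svc.cluster.local\n' \
            "$name" "$i" "$service" "$namespace"
    done
}

# Values from the manifest: StatefulSet "consul", Service "consul",
# namespace "imas", 3 replicas.
consul_retry_join_args consul consul imas 3
```

In the manifest itself the namespace is not hard-coded; it comes from the NAMESPACE environment variable, which Kubernetes substitutes into the `$(NAMESPACE)` references in `args`.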

(4) Create k8s-consul-service.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: imas
  name: consul
  labels:
    name: consul
spec:
  type: ClusterIP
  clusterIP: None
  ports:
    - name: http
      port: 8500
      targetPort: 8500
    - name: rpc
      port: 8400
      targetPort: 8400
    - name: serflan-tcp
      protocol: "TCP"
      port: 8301
      targetPort: 8301
    - name: serflan-udp
      protocol: "UDP"
      port: 8301
      targetPort: 8301
    - name: serfwan-tcp
      protocol: "TCP"
      port: 8302
      targetPort: 8302
    - name: serfwan-udp
      protocol: "UDP"
      port: 8302
      targetPort: 8302
    - name: server
      port: 8300
      targetPort: 8300
    - name: consuldns
      port: 8600
      targetPort: 8600
  selector:
    app: consul

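(5) Create consul-ingress.yml

# Note: extensions/v1beta1 Ingress was removed in Kubernetes 1.22;
# newer clusters need networking.k8s.io/v1 instead.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  namespace: imas
  name: ingress-consul
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
    - host: consul.xxx.cn
      http:
        paths:
          - path:
            backend:
              serviceName: consul
              servicePort: 8500

[Note] Point the Ingress domain at the public IP of the cluster master.

Once created, check it in Kuboard: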

4.7 Create the user service in k8s

[Command executed] java -Djava.security.egd=file:/dev/./urandom -jar /opt/imas-user.jar --spring.cloud.bootstrap.name=bootstrap-xx --spring.cloud.config.profile=xx

4.8 Deploy the front-end application in k8s

(1) Create the Dockerfile

FROM nginx:1.13.6-alpine

MAINTAINER lipf

# Asia/Shanghai is the tz database zone for China Standard Time
ARG TZ="Asia/Shanghai"
ENV TZ ${TZ}

RUN apk upgrade --update \
    && apk add bash tzdata \
    && ln -sf /usr/share/zoneinfo/${TZ} /etc/localtime \
    && echo ${TZ} > /etc/timezone \
    && rm -rf /var/cache/apk/*

COPY dist /usr/share/nginx/html

CMD ["nginx", "-g", "daemon off;"]

(2) Release from Jenkins

#!/bin/bash

PROJECT_NAME=xx-imas-oms-web
VERSION=latest

npm install
npm run build

# Remove the previous local image, if there is one
OLD_IMAGE=$(docker images | grep ${PROJECT_NAME} | grep latest | awk '{print $3}' | head -n 1)
if [ -n "${OLD_IMAGE}" ]; then
    docker rmi -f "${OLD_IMAGE}"
fi

# Build and push the image
docker build -t hub.xx.cn/imas/xx-imas-oms-web:latest .
docker push hub.xx.cn/imas/xx-imas-oms-web:latest

# Restart the Pod so that it pulls the new image
curl -X PUT \
    -H "Content-Type: application/yaml" \
    -H "Cookie: KuboardUsername=admin; KuboardAccessKey=mn25z3ytbkxe.2fwn4behc5pj863rcf3ih674t6y2w7re" \
    -d '{"kind":"deployments","namespace":"xx","name":"imas-oms-web"}' \
    "http://172.16.11.xx:30080/kuboard-api/cluster/imas-dev/kind/CICDApi/admin/resource/restartWorkload"

[Note] Jenkins implements CI/CD here mainly by calling the CI/CD command that Kuboard generates.
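When several workloads are released this way, the restart request differs only in the three fields of its body: kind, namespace and name. The sketch below builds that body from arguments so one script can serve more than one workload. The function name `restart_payload` is hypothetical, and it does no JSON escaping, which is assumed to be acceptable because Kubernetes namespace and workload names cannot contain quotes.

```shell
#!/bin/bash
# Hypothetical helper: build the request body for the restartWorkload call.
# No JSON escaping is done; the arguments are assumed to be plain
# Kubernetes names.
restart_payload() {
    local kind="$1" namespace="$2" name="$3"
    printf '{"kind":"%s","namespace":"%s","name":"%s"}\n' \
        "$kind" "$namespace" "$name"
}

# Same values as in the release script.
restart_payload deployments xx imas-oms-web
```

In the release script, the literal string after `-d` could then be replaced with `-d "$(restart_payload deployments xx imas-oms-web)"`, leaving the headers and URL as Kuboard generated them.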