I. Installing Elasticsearch (ES)

1) I'm lazy, so I install from RPM packages and download everything the ELK stack needs in one go:

mkdir /source && cd /source

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.3.2-x86_64.rpm

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.3.2.rpm

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.3.2-x86_64.rpm

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.3.2-x86_64.rpm

yum install -y elasticsearch-7.3.2-x86_64.rpm
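
The four downloads differ only in product name and file suffix, so they can be generated from a single version variable. The sketch below is just a convenience: the names ELK_VERSION, elk_rpm_url and download_elk_rpms are my own, and the URLs it produces are the same ones listed in this step.

```shell
#!/bin/sh
# Version used throughout this article; change it in one place.
ELK_VERSION="${ELK_VERSION:-7.3.2}"

# Print the download URL for one product (hypothetical helper).
# The Logstash RPM has no architecture suffix; the others end in -x86_64.
elk_rpm_url() {
    base="https://artifacts.elastic.co/downloads"
    case "$1" in
        elasticsearch) echo "$base/elasticsearch/elasticsearch-$ELK_VERSION-x86_64.rpm" ;;
        kibana)        echo "$base/kibana/kibana-$ELK_VERSION-x86_64.rpm" ;;
        logstash)      echo "$base/logstash/logstash-$ELK_VERSION.rpm" ;;
        filebeat)      echo "$base/beats/filebeat/filebeat-$ELK_VERSION-x86_64.rpm" ;;
        *)             echo "unknown product: $1" >&2; return 1 ;;
    esac
}

# Download all four packages into the current directory (needs network access).
download_elk_rpms() {
    for product in elasticsearch kibana logstash filebeat; do
        wget "$(elk_rpm_url "$product")" || return 1
    done
}
```

Calling download_elk_rpms from /source fetches the same four files as the wget lines.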

2) Edit the configuration

vim /etc/elasticsearch/elasticsearch.yml

node.name: ES1

network.host: 0.0.0.0

http.port: 9200

cluster.initial_master_nodes: ["ES1"]

3) Fix the ownership

chown -R elasticsearch:elasticsearch /etc/elasticsearch/

4) Start ES

systemctl start elasticsearch

5) Test ES

[root@txvm2019 ~]# curl localhost:9200

{

"name" : "ES1",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "HJKFSDFd0234jflsdfj",

"version" : {

"number" : "7.2.0",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "508c38a",

"build_date" : "2019-06-20T15:54:18.811730Z",

"build_snapshot" : false,

"lucene_version" : "8.0.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

Note: cluster_uuid must have a real value, not _na_; otherwise the access control (ACL) setup later on cannot be deployed.
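
The cluster_uuid check can be scripted so that it does not depend on reading the JSON by eye. This is a minimal sketch that assumes the response is pretty-printed with one key per line, as in the output shown here; the helper names are hypothetical, and a real JSON parser such as jq would be more robust if it is installed.

```shell
#!/bin/sh
# Extract the cluster_uuid value from the JSON returned by `curl localhost:9200`.
# Reads the JSON on stdin and prints the first value found (nothing if absent).
cluster_uuid_from_json() {
    sed -n 's/.*"cluster_uuid"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed only if a UUID is present and it is not the placeholder _na_.
cluster_uuid_ok() {
    uuid=$(cluster_uuid_from_json)
    [ -n "$uuid" ] && [ "$uuid" != "_na_" ]
}
```

For example: curl -s localhost:9200 | cluster_uuid_ok && echo "cluster has formed"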

6) Set the passwords for the elastic user and the kibana user

Configure TLS and authentication. The elasticsearch-certutil command generates the certificate, so this step can be completed without the usual certificate headaches.

Step 1: generate a certificate (the nodes need it in order to communicate securely)

/usr/share/elasticsearch/bin/elasticsearch-certutil cert -out /etc/elasticsearch/elastic-certificates.p12 -pass ""

Next, edit the configuration file /etc/elasticsearch/elasticsearch.yml and add the following:

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: elastic-certificates.p12
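
Before restarting, it can help to confirm that all five settings actually made it into the file, since a missing line otherwise only shows up later as a startup or authentication failure. A small sketch: missing_security_keys is a hypothetical helper, and it only checks that each key starts a line, not that its value is valid.

```shell
#!/bin/sh
# Print every required security setting that is absent from the given file.
# Prints nothing and returns 0 when all five keys are present.
missing_security_keys() {
    file="$1"
    status=0
    for key in \
        xpack.security.enabled \
        xpack.security.transport.ssl.enabled \
        xpack.security.transport.ssl.verification_mode \
        xpack.security.transport.ssl.keystore.path \
        xpack.security.transport.ssl.truststore.path
    do
        # index() does a literal prefix match, so the dots are not wildcards.
        if ! awk -v k="$key:" 'index($0, k) == 1 { found = 1 } END { exit !found }' \
                "$file" 2>/dev/null; then
            echo "$key"
            status=1
        fi
    done
    return "$status"
}
```

For example: missing_security_keys /etc/elasticsearch/elasticsearch.yml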

The generated certificate file is placed in /etc/elasticsearch/, but it is owned by root, so change the ownership manually:

chown -R elasticsearch:elasticsearch /etc/elasticsearch/

Restart the service

systemctl restart elasticsearch

Set the passwords

Here I enter them by hand; to have them generated automatically, use elasticsearch-setup-passwords auto instead.

/usr/share/elasticsearch/bin/elasticsearch-setup-passwords interactive

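What the built-in users are for:

elastic: the superuser

kibana: the user Kibana uses to connect to and communicate with Elasticsearch

logstash_system: the user Logstash uses to store monitoring information in Elasticsearch

beats_system: the user Beats uses to store monitoring information in Elasticsearch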

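If you run a cluster, TLS (the certificate) must be configured on every Elasticsearch node.

The simplest way is to copy the whole configuration directory of the already configured master node into the configuration directory of each other node, then adjust node-specific settings such as node.name.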

II. Deploying Kibana

1) Install Kibana

yum install -y /source/kibana-7.3.2-x86_64.rpm

2) Configure Kibana

vim /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.hosts: ["http://localhost:9200"]

elasticsearch.preserveHost: true

kibana.index: ".kibana"

kibana.defaultAppId: "home"

elasticsearch.username: "kibana"

elasticsearch.password: "the password you just set"

elasticsearch.pingTimeout: 1500

elasticsearch.requestTimeout: 30000

elasticsearch.startupTimeout: 5000

logging.dest: /var/log/kibana.log

i18n.locale: "zh-CN"

3) Create the log file

touch /var/log/kibana.log; chmod 777 /var/log/kibana.log

4) Start the Kibana service, then check the process and the listening port:

[root@elk_master kibana]# systemctl start kibana

[root@elk_master kibana]# ps aux |grep kibana

kibana 5286 2.6 4.1 1768616 338700 ? Ssl 16:45 1:29 /usr/share/kibana/bin/../node/bin/node --no-warnings --max-http-header-size=65536 /usr/share/kibana/bin/../src/cli -c /etc/kibana/kibana.yml

root 6267 0.0 0.0 112664 2092 pts/0 S+ 17:41 0:00 grep --color=auto kibana

[root@elk_master kibana]# netstat -lntp |grep 5601

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 5286/node

Note: Kibana is written in Node.js, so the process name is node.

5) Configure a reverse proxy, for access by domain name and for added security

vim /opt/nginx/conf/hosts/elk.conf

server {

listen 80;

server_name elk.workbai.com;

location / {

proxy_pass http://192.168.1.247:5601;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

nginx -s reload

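Then open Kibana in a browser, for example: http://192.168.1.247:5601/

Because X-Pack access control is enabled, log in as the superuser elastic, not as the kibana user. The kibana user does not have enough privileges and is rejected with a 403.

After you log in, the Kibana page is displayed.

That completes the Kibana installation, which is quite simple. Next comes Logstash; without it Kibana has nothing to work with.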

III. Deploying Logstash

1) Install Logstash

yum install -y /source/logstash-7.3.2.rpm

2) Do not start the service right after installing. First configure Logstash to collect syslog messages as a test:

vim /etc/logstash/conf.d/syslog.conf

input {

# define the log source

syslog {

type => "system-syslog" # event type

port => 10514 # listening port

}

}

output {

# define where the logs go

stdout {

codec => rubydebug # print the events to the current terminal

}

}

3) Test the configuration

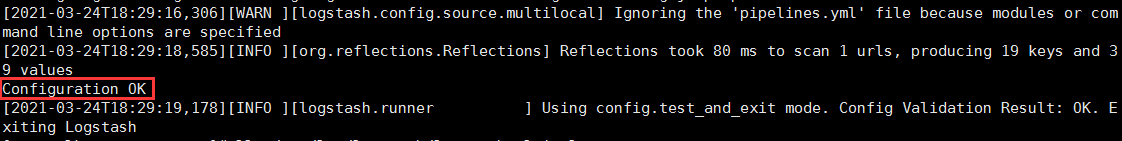

/usr/share/logstash/bin/logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

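If it reports OK, the configuration is fine.

What the options mean:

--path.settings: the directory that holds Logstash's settings files (it can be left out)

-f: the path of the configuration file to check

--config.test_and_exit: exit after checking; without it Logstash would simply start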

4) Edit the rsyslog configuration file and add the Logstash address as a forwarding target

vim /etc/rsyslog.conf

*.* @@192.168.1.247:10514

Restart rsyslog so the change takes effect

systemctl restart rsyslog
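
In the forwarding rule, *.* selects every facility and severity, and the doubled @@ tells rsyslog to forward over TCP; a single @ would forward over UDP. The sketch below only assembles such a rule from its parts; forward_rule is a hypothetical helper of mine, not part of rsyslog.

```shell
#!/bin/sh
# Build an rsyslog forwarding rule that matches all messages (selector *.*).
# Usage: forward_rule tcp|udp HOST PORT
forward_rule() {
    case "$1" in
        tcp) prefix="@@" ;;   # two @ signs: forward over TCP
        udp) prefix="@" ;;    # one @ sign: forward over UDP
        *)   echo "protocol must be tcp or udp" >&2; return 1 ;;
    esac
    printf '*.* %s%s:%s\n' "$prefix" "$2" "$3"
}
```

forward_rule tcp 192.168.1.247 10514 prints the line added to /etc/rsyslog.conf in this step. After the restart, running logger "test message" on the same machine writes a syslog entry that should then be forwarded.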

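5) Start Logstash with this configuration file and check whether the rsyslog messages are printed:

/usr/share/logstash/bin/logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf

The terminal will appear to hang at this point. Don't panic: Logstash is slow to start, so wait a while and the rsyslog messages will be printed straight to the terminal (that is what the configuration tells it to do).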

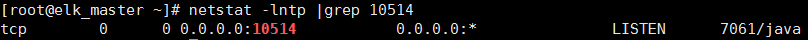

Then open another terminal; port 10514, configured earlier, should now be listening:

netstat -lntp |grep 10514

Next, log in over SSH from another machine and check whether log events show up in the terminal. The output looks like this:

{

"message" => "<86>Mar 24 18:18:22 elk_master sshd[7326]: Received disconnect from 186.147.129.110 port 33426:11: Bye Bye [preauth]\n",

"facility_label" => "kernel",

"tags" => [

[0] "_grokparsefailure_sysloginput"

],

"host" => "127.0.0.1",

"priority" => 0,

"@timestamp" => 2021-03-24T10:18:22.205Z,

"@version" => "1",

"type" => "system-syslog",

"severity" => 0,

"severity_label" => "Emergency",

"facility" => 0

}

{

"message" => "<86>Mar 24 18:18:22 elk_master sshd[7326]: Disconnected from 186.147.129.110 port 33426 [preauth]\n",

"facility_label" => "kernel",

"tags" => [

[0] "_grokparsefailure_sysloginput"

],

"host" => "127.0.0.1",

"priority" => 0,

"@timestamp" => 2021-03-24T10:18:22.206Z,

"@version" => "1",

"type" => "system-syslog",

"severity" => 0,

"severity_label" => "Emergency",

"facility" => 0

}

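As you can see, the collected logs are printed to the terminal as structured events (the rubydebug codec's format, which resembles JSON), so the test succeeded.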
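
The number in angle brackets at the start of each message, <86> here, is the syslog priority value, which encodes facility and severity as facility * 8 + severity. The sketch below decodes it with shell arithmetic; decode_pri is a hypothetical helper. For 86 it gives facility 10 (security/authorization) and severity 6 (informational). The events shown here carry facility 0 and severity 0 instead, which is presumably connected to the _grokparsefailure_sysloginput tag: the header was not parsed, so those fields were not taken from the message.

```shell
#!/bin/sh
# Split a syslog priority value into facility and severity.
# priority = facility * 8 + severity, so divide and take the remainder.
decode_pri() {
    pri="$1"
    echo "facility=$((pri / 8)) severity=$((pri % 8))"
}
```

For example: decode_pri 86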

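6) That was only a test configuration. Now change the configuration file again so that the collected logs are sent to the ES server instead of the current terminal: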

vim /etc/logstash/conf.d/syslog.conf

input {

syslog {

type => "system-syslog"

port => 10514

}

}

output {

elasticsearch {

hosts => ["127.0.0.1:9200"] # address of the ES server

index => "system-syslog-%{+YYYY.MM}" # index name, one index per month

workers => 1

user => "elastic"

password => "the password you set"

}

}

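Check this configuration file for errors as well:

/usr/share/logstash/bin/logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

You may see some odd messages; ignore them for now. Because Logstash was started as root earlier, some files now have the wrong owner, so fix the ownership: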

chown logstash:logstash /var/log/logstash/logstash-plain.log

chown -R logstash /var/lib/logstash/

7) Start Logstash

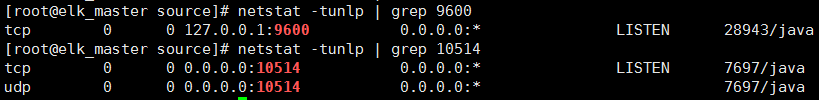

systemctl start logstash

Here too, the ports take a moment before they start listening:

netstat -tunlp | grep 9600

netstat -tunlp | grep 10514
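
Since Logstash needs a while before its ports start listening, a script that starts the service can poll instead of sleeping for a fixed time. A minimal sketch: retry_until and port_listening are hypothetical helpers; the first reruns any command until it succeeds or the attempts run out, the second wraps the netstat check used in this step.

```shell
#!/bin/sh
# Usage: retry_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND up to ATTEMPTS times, sleeping DELAY_SECONDS between tries.
# Returns 0 as soon as COMMAND succeeds, 1 if every attempt fails.
retry_until() {
    attempts="$1"
    delay="$2"
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        # Sleep only if another attempt follows.
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}

# Succeed when something is listening on the given TCP port.
port_listening() {
    netstat -lnt 2>/dev/null | grep -q ":$1[[:space:]]"
}
```

For example: retry_until 30 2 port_listening 10514 && echo "syslog input is up"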

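8) Verify that data is reaching the ES indices

Because security is enabled, curl needs the username and password, written before the host in the URL and separated from it by @:

[root@elk_master conf.d]# curl 'elastic:password@127.0.0.1:9200/_cat/indices?v'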

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

yellow open sfa-nginx-error-2021.03.29 UWfV9dSdTHCRVWxz9Ll15w 1 1 25 0 100.9kb 100.9kb

yellow open sfa-nginx-error-2021.03.26 lWsn0YTKR_ODPvU7kq2_Hg 1 1 22 0 59.6kb 59.6kb

yellow open sfa-nginx-error-2021.03.27 4jhxlRSDSe2ODptUQW3Nbg 1 1 27

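In a listing like this, the system-syslog index defined in the Logstash configuration should appear (the sample listing here happens to show sfa-nginx-error-* indices), which confirms that the configuration works and that Logstash and ES are communicating normally. The health is yellow because this is a single node, so replica shards cannot be assigned; a multi-node cluster would show green. It does not affect usage, it only means lower reliability.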
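
To look for one index family instead of scanning the whole listing, the _cat/indices output can be filtered by name. This sketch assumes the column layout shown in the listing, with health in column 1, the index name in column 3 and docs.count in column 7; indices_with_prefix is a hypothetical helper.

```shell
#!/bin/sh
# Read `_cat/indices?v` output on stdin and print "name health docs.count"
# for every index whose name starts with the given prefix.
# NR > 1 skips the header row; index() does a literal prefix match.
indices_with_prefix() {
    awk -v p="$1" 'NR > 1 && index($3, p) == 1 { print $3, $1, $7 }'
}
```

For example: curl -s 'elastic:password@127.0.0.1:9200/_cat/indices?v' | indices_with_prefix system-syslog-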

Get the details of a specific index:

[root@elk_master conf.d]# curl -XGET 'elastic:password@127.0.0.1:9200/sfa-nginx-error-2021.03.29?pretty'

{

"sfa-nginx-error-2021.03.29" : {

"aliases" : {

},

"mappings" : {

"properties" : {

"@timestamp" : {

"type" : "date"

},

"@version" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"agent" : {

"properties" : {

"ephemeral_id" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"hostname" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"id" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"type" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"version" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

},

"ecs" : {

"properties" : {

"version" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

},

"host" : {

"properties" : {

"name" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

},

"log" : {

"properties" : {

"file" : {

"properties" : {

"path" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

},

"offset" : {

"type" : "long"

}

}

},

"message" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"tags" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

},

"settings" : {

"index" : {

"creation_date" : "1616976824696",

"number_of_shards" : "1",

"number_of_replicas" : "1",

"uuid" : "UWfV9dSdTHCRVWxz9Ll15w",

"version" : {

"created" : "7030299"

},

"provided_name" : "sfa-nginx-error-2021.03.29"

}

}

}

}

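If you need to delete an index later, this command deletes the specified index:

curl -XDELETE 'elastic:password@127.0.0.1:9200/sfa-nginx-error-2021.03.29'

IV. Setting up index management in Kibana

Once ES and Logstash are communicating normally, Kibana can be configured. Open http://192.168.1.247:5601/ in a browser and configure the index pattern on the Kibana page.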

V. Cleaning up ES indices periodically

mkdir /scripts

vim /scripts/es_index_del.sh

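#!/bin/bash

# Delete the daily indices dated exactly 7 days ago, so that only about 7 days of indices are kept

DATE=$(date -d "7 days ago" "+%Y.%m.%d")

curl -s -XDELETE -u elastic:ES_PASSWORD "http://127.0.0.1:9200/*-${DATE}"

chmod +x /scripts/es_index_del.sh

crontab -e

# delete old ES indices

59 23 * * * /scripts/es_index_del.sh >/dev/null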
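
The cleanup script removes only the indices stamped with the date exactly seven days back, so a missed cron run leaves that day's indices behind permanently. One possible variation, sketched below, compares every index date with a cutoff instead. The helper names are hypothetical, and it assumes index names end in -YYYY.MM.DD; monthly indices such as system-syslog-%{+YYYY.MM} from the Logstash configuration do not match and are left alone.

```shell
#!/bin/sh
# Print the trailing YYYY.MM.DD of an index name, or nothing if there is none.
index_date() {
    echo "$1" | sed -n 's/.*-\([0-9]\{4\}\.[0-9]\{2\}\.[0-9]\{2\}\)$/\1/p'
}

# Succeed if the index is dated on or before the cutoff (YYYY.MM.DD).
# With the dots removed, the dates compare correctly as plain integers.
is_expired() {
    d=$(index_date "$1")
    [ -n "$d" ] || return 1
    [ "$(echo "$d" | tr -d .)" -le "$(echo "$2" | tr -d .)" ]
}

# Read index names on stdin, print the ones that are expired.
expired_indices() {
    while read -r name; do
        if is_expired "$name" "$1"; then
            echo "$name"
        fi
    done
}

# Possible use (needs GNU date and a reachable ES; left commented out here):
# CUTOFF=$(date -d "7 days ago" "+%Y.%m.%d")
# curl -s -u elastic:ES_PASSWORD "http://127.0.0.1:9200/_cat/indices?h=index" \
#   | expired_indices "$CUTOFF" \
#   | while read -r idx; do
#       curl -s -XDELETE -u elastic:ES_PASSWORD "http://127.0.0.1:9200/$idx"
#     done
```

Listing the index names first and deleting them one by one also avoids sending a wildcard DELETE.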

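That completes the single-node deployment. I will follow up with articles on filtering nginx logs with Logstash and Tomcat logs with Filebeat, and add the links here later.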