Common runtime errors after integrating Redis with Spring Boot, and how to fix them

1. io.lettuce.core.protocol.CommandHandler : null Unexpected exception during request: java.io.IOException: 远程主机强迫关闭了一个现有的连接。 (An existing connection was forcibly closed by the remote host.)

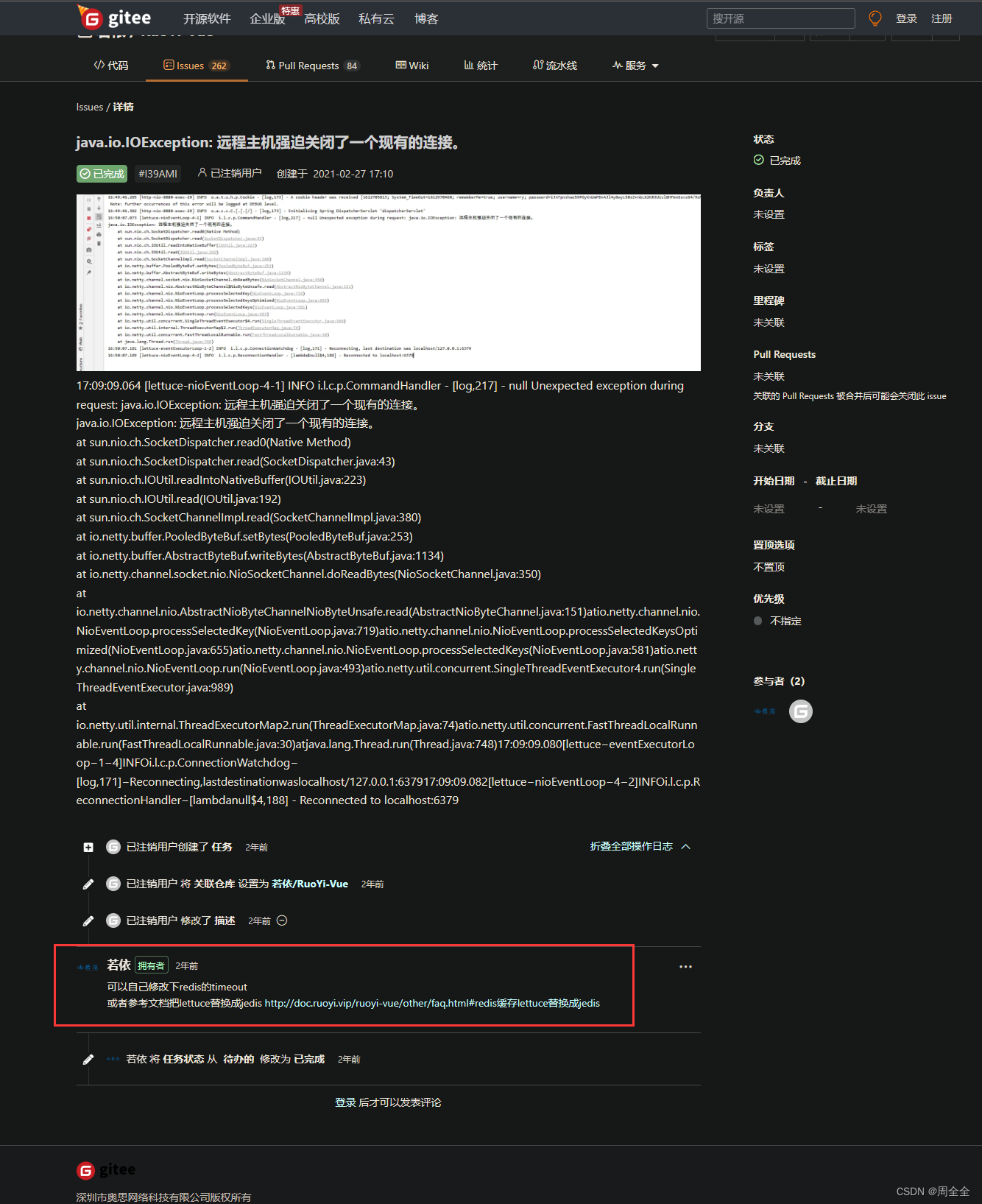

Error message

The project is built on the RuoYi framework and reported the following error in production:

io.lettuce.core.protocol.CommandHandler : null Unexpected exception during request: java.io.IOException: 远程主机强迫关闭了一个现有的连接。

The detailed log is as follows:

INFO 3352 --- [ioEventLoop-4-1] io.lettuce.core.protocol.CommandHandler : null Unexpected exception during request: java.io.IOException: 远程主机强迫关闭了一个现有的连接。

java.io.IOException: 远程主机强迫关闭了一个现有的连接。

at sun.nio.ch.SocketDispatcher.read0(Native Method) ~[na:1.8.0_221]

at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:43) ~[na:1.8.0_221]

at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223) ~[na:1.8.0_221]

at sun.nio.ch.IOUtil.read(IOUtil.java:192) ~[na:1.8.0_221]

at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:380) ~[na:1.8.0_221]

at io.netty.buffer.PooledByteBuf.setBytes(PooledByteBuf.java:253) ~[netty-buffer-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:1132) ~[netty-buffer-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:350) ~[netty-transport-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:151) ~[netty-transport-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:719) [netty-transport-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:655) [netty-transport-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:581) [netty-transport-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493) [netty-transport-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986) [netty-common-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) [netty-common-4.1.69.Final.jar!/:4.1.69.Final]

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30) [netty-common-4.1.69.Final.jar!/:4.1.69.Final]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_221]

2023-06-14 00:52:16.956 INFO 3352 --- [ionShutdownHook] org.quartz.core.QuartzScheduler : Scheduler TaskScheduler_$_iZj575z0jja92rZ1686469913890 paused.

2023-06-14 00:52:16.985 INFO 3352 --- [xecutorLoop-1-8] i.l.core.protocol.ConnectionWatchdog : Reconnecting, last destination was /172.17.151.173:6379

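Root cause analysis

The log makes it clear that the error is related to Redis. Spring Boot uses Lettuce as its pooled Redis client, and Lettuce communicates with the Redis server through Netty. The IOException is raised on the Netty read path when the other end closes a connection that the client still considers open.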

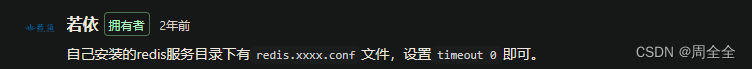

The same problem has been reported against the RuoYi framework on Gitee, and the maintainers have published a fix for it.

Solution

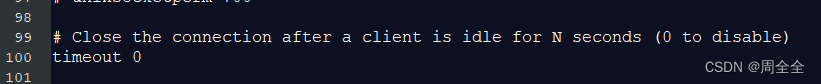

1. Modify the timeout parameter in the configuration file redis.windows.conf

# Close the connection after a client is idle for N seconds (0 to disable)

2. Check the Redis configuration in the Spring project

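redis:
  # Host address
  host: 192.168.0.1
  # Port, 6379 by default
  port: 6379
  # Database index
  database: 10
  # Password
  password: 147258
  # Connection timeout: 60s
  timeout: 60s
  lettuce:
    pool:
      # Minimum number of idle connections in the pool
      min-idle: 10
      # Maximum number of idle connections in the pool
      max-idle: 30
      # Maximum number of connections the pool can allocate
      max-active: 8
      # Maximum time to block waiting for a connection (a negative value means no limit)
      max-wait: -1ms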

3. Set tcp-keepalive to 60s in redis.windows.conf

# TCP keepalive.

#

# If non-zero, use SO_KEEPALIVE to send TCP ACKs to clients in absence

# of communication. This is useful for two reasons:

#

# 1) Detect dead peers.

# 2) Take the connection alive from the point of view of network

# equipment in the middle.

#

# On Linux, the specified value (in seconds) is the period used to send ACKs.

# Note that to close the connection the double of the time is needed.

# On other kernels the period depends on the kernel configuration.

#

# A reasonable value for this option is 60 seconds.

tcp-keepalive 60

After changing the configuration, restart the Redis service.
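To make the tcp-keepalive setting more concrete: it asks the operating system to send keepalive probes on otherwise idle TCP connections, so that dead peers are detected and network equipment in the middle keeps treating the connection as alive. The same socket option exists on the client side. The sketch below uses only the JDK (not Lettuce or Spring) to show SO_KEEPALIVE being switched on for a plain socket. The class name KeepAliveSketch is made up for illustration, and the probe interval still comes from the operating system or, on the server, from the Redis setting above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.Socket;

public class KeepAliveSketch {

    /** Enables SO_KEEPALIVE on an unconnected socket and returns the flag the socket reports back. */
    static boolean enableKeepAlive() {
        try (Socket socket = new Socket()) {
            // Ask the OS to probe the peer when the connection is idle.
            socket.setKeepAlive(true);
            return socket.getKeepAlive();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("SO_KEEPALIVE enabled: " + enableKeepAlive());
    }
}
```

This only illustrates the socket option itself; whether a Lettuce connection has it enabled depends on how the client is configured, which this post does not cover.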

2. io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 16777216 byte(s) of direct memory (used: 1073741824, max: 1073741824)

Error message

io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 16777216 byte(s) of direct memory (used: 1073741824, max: 1073741824)

at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:802) ~[netty-common-4.1.72.Final.jar:4.1.72.Final]

at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:731) ~[netty-common-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:648) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:623) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:202) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.PoolArena.tcacheAllocateSmall(PoolArena.java:172) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.PoolArena.allocate(PoolArena.java:134) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.PoolArena.allocate(PoolArena.java:126) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:395) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:188) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:179) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

at io.netty.buffer.AbstractByteBufAllocator.buffer(AbstractByteBufAllocator.java:116) ~[netty-buffer-4.1.72.Final.jar:4.1.72.Final]

...

Root cause analysis

The dependency currently declared in pom.xml:

<!-- Redis cache operations -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>

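Since Spring Boot 2.x, the default Redis client is Lettuce rather than Jedis. Lettuce is built on the Netty framework, and the error is raised by the incrementMemoryCounter method of the PlatformDependent class in the netty-common jar (netty-common-4.1.49.Final.jar when this was analysed; the stack trace above comes from 4.1.72.Final). The method adds the requested capacity to a counter of the direct (off-heap) memory already in use and checks whether the result exceeds the limit available to the service; if it does, it throws OutOfDirectMemoryError. In the error above, the limit of 1073741824 bytes (1 GiB) was already fully used, so a further request for 16777216 bytes (16 MiB) failed. This suggests that Spring's support for Lettuce is still not good enough in this respect.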

private static void incrementMemoryCounter(int capacity) {
    if (DIRECT_MEMORY_COUNTER != null) {
        long newUsedMemory = DIRECT_MEMORY_COUNTER.addAndGet(capacity);
        // Check whether the direct memory in use would exceed the limit available to the service;
        // if so, roll the counter back and throw OutOfDirectMemoryError (off-heap memory exhausted).
        if (newUsedMemory > DIRECT_MEMORY_LIMIT) {
            DIRECT_MEMORY_COUNTER.addAndGet(-capacity);
            throw new OutOfDirectMemoryError("failed to allocate " + capacity
                    + " byte(s) of direct memory (used: " + (newUsedMemory - capacity)
                    + ", max: " + DIRECT_MEMORY_LIMIT + ')');
        }
    }
}
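The check above can be replayed with the numbers from the error message. The following is a minimal standalone sketch, not Netty code: the class DirectMemoryCounterSketch and its methods tryReserve and release are hypothetical names, and a boolean return value stands in for the thrown OutOfDirectMemoryError. It shows why a 16777216-byte request fails while the counter sits at the limit, and succeeds again once the same amount has been released.

```java
import java.util.concurrent.atomic.AtomicLong;

public class DirectMemoryCounterSketch {

    // Limit and current usage taken from the error message: 1 GiB, fully used.
    static final long LIMIT = 1_073_741_824L;
    static final AtomicLong USED = new AtomicLong(1_073_741_824L);

    /** Reserves capacity against the budget; rolls back and returns false if LIMIT would be exceeded. */
    static boolean tryReserve(long capacity) {
        long newUsed = USED.addAndGet(capacity);
        if (newUsed > LIMIT) {
            // Undo the reservation, mirroring the rollback done before the error is thrown.
            USED.addAndGet(-capacity);
            return false;
        }
        return true;
    }

    /** Returns capacity to the budget, standing in for a buffer being released. */
    static void release(long capacity) {
        USED.addAndGet(-capacity);
    }

    public static void main(String[] args) {
        long chunk = 16_777_216L; // 16 MiB, the size named in the error message
        System.out.println("reserve while full: " + tryReserve(chunk));
        release(chunk);
        System.out.println("reserve after release: " + tryReserve(chunk));
    }
}
```

The point of the sketch is that the error reports an exhausted budget rather than a single oversized request: any allocation fails once the memory already reserved has reached the limit and nothing has been handed back.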

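Solution

- Increase the off-heap (direct) memory limit with -Dio.netty.maxDirectMemory; this treats the symptom but not the root cause

- Drop the Lettuce client and switch back to the Jedis client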

<!-- Include the Redis starter, excluding the Lettuce dependency -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
    <exclusions>
        <exclusion>
            <groupId>io.lettuce</groupId>
            <artifactId>lettuce-core</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<!-- Include the Jedis client -->
<dependency>
    <groupId>redis.clients</groupId>
    <artifactId>jedis</artifactId>
</dependency>
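For the first option, it can help to see which limit a given JVM would start from before changing it. The sketch below is a simplified illustration using only the JDK. It reads the io.netty.maxDirectMemory system property (a value in bytes) and, when no positive value is set, falls back to Runtime.maxMemory() as a rough stand-in for the default. That fallback is an assumption for illustration only: Netty's real resolution logic is more involved and gives zero and negative values special meanings that are not reproduced here. The class MaxDirectMemorySketch and the method resolveLimit are hypothetical names.

```java
public class MaxDirectMemorySketch {

    /**
     * Resolves a limit from a raw property value: a positive number is used as-is;
     * a missing, non-positive or unparsable value falls back to the given default.
     */
    static long resolveLimit(String rawProperty, long fallbackBytes) {
        if (rawProperty == null) {
            return fallbackBytes;
        }
        try {
            long value = Long.parseLong(rawProperty.trim());
            return value > 0 ? value : fallbackBytes;
        } catch (NumberFormatException e) {
            return fallbackBytes;
        }
    }

    public static void main(String[] args) {
        String raw = System.getProperty("io.netty.maxDirectMemory");
        // Assumption: the JVM's max heap size is used here only as an approximate default.
        long fallback = Runtime.getRuntime().maxMemory();
        long limit = resolveLimit(raw, fallback);
        System.out.printf("io.netty.maxDirectMemory=%s, assumed limit=%d bytes (%d MiB)%n",
                raw, limit, limit / (1024 * 1024));
    }
}
```

Starting the JVM with, for example, -Dio.netty.maxDirectMemory=2147483648 would make the sketch report 2 GiB. As noted above, raising the limit only postpones the error if direct memory keeps growing.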