今天我学习了DeepLearning.AI的 Building Systems with LLM 的在线课程,我想和大家一起分享一下该门课程的一些主要内容。之前我们已经学习了下面这些知识:

使用大型语言模(LLM)构建系统(一):分类

使用大型语言模(LLM)构建系统(二):内容审核、预防Prompt注入

使用大型语言模(LLM)构建系统(三):思维链推理

使用大型语言模(LLM)构建系统(四):链式提示

使用大型语言模(LLM)构建系统(五):输出结果检查

使用大型语言模(LLM)构建系统(六):构建端到端系统

使用大型语言模(LLM)构建系统(七):评估1

下面是我们访问LLM模型的主要代码:

import os

import openai

import sys

sys.path.append('../..')

import utils

from dotenv import load_dotenv, find_dotenv

_ = load_dotenv(find_dotenv()) # read local .env file

openai.api_key = os.environ["OPENAI_API_KEY"]

def get_completion_from_messages(messages,

model="gpt-3.5-turbo",

temperature=0,

max_tokens=500):

response = openai.ChatCompletion.create(

model=model,

messages=messages,

temperature=temperature,

max_tokens=max_tokens,

)

return response.choices[0].message["content"]通过运行端到端系统来回答用户查询

这里我们通过一个工具包utils来执行一系列的用户关于电子产品的问题的回复,utils中的函数在的原理在之前的博客中都有说明,这里不再赘述。

customer_msg = f"""

tell me about the smartx pro phone and the fotosnap camera, the dslr one.

Also, what TVs or TV related products do you have?"""

products_by_category = utils.get_products_from_query(customer_msg)

category_and_product_list = utils.read_string_to_list(products_by_category)

product_info = utils.get_mentioned_product_info(category_and_product_list)

assistant_answer = utils.answer_user_msg(user_msg=customer_msg,

product_info=product_info)这里我们把customer_msg翻译成中文,这样便于大家更好的理解其中的含义:

这里我们首先调用了utils.get_products_from_query方法来获取用户问题中所涉及的产品目录的清单(这个过程需要访问LLM)。由于LLM返回的产品目录清单是字符串型所以我们还需要调用utils.read_string_to_list方法将字符串转换成python的List格式,然后我们查询出所有产品目录清单中所包含的所有产品的具体信息(这个过程不需要访问LLM),最后我们将用户的问题和具体的产品信息一起发送给LLM,并生产最终的回复。下面我们分别看一下上面那些变量中的具体内容:

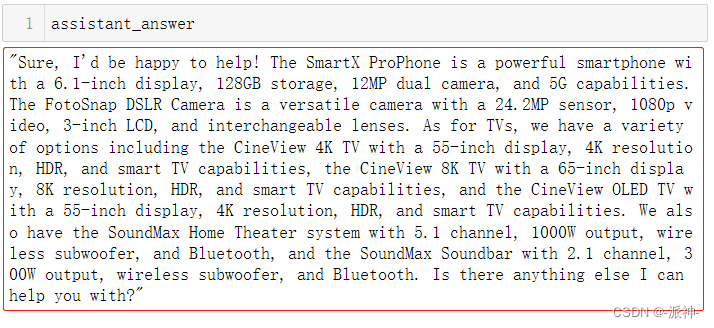

最后我们查看LLM的最终回复:

由于客户的问题中涉及了多个电子产品,因此LLM的回复的信息量有点多,我们如何来评估LLM的回复是否符合要求呢?

根据提取的产品信息,使用评分标准评估LLM对用户的回答

这里我们需要制定一个评分标准来评估LLM的最终回复是否合格,评估的依据来自于客户的问题,具体的产品信息以及LLM的最终回复这三部分内容。也就是说我们需要把这3部分内容整合在一起然后让LLM再来评估自己之前的回复是否合格,下面我们定义一个eval_with_rubric函数来实现这些功能:

def eval_with_rubric(test_set, assistant_answer):

cust_msg = test_set['customer_msg']

context = test_set['context']

completion = assistant_answer

system_message = """\

You are an assistant that evaluates how well the customer service agent \

answers a user question by looking at the context that the customer service \

agent is using to generate its response.

"""

user_message = f"""\

You are evaluating a submitted answer to a question based on the context \

that the agent uses to answer the question.

Here is the data:

[BEGIN DATA]

************

[Question]: {cust_msg}

************

[Context]: {context}

************

[Submission]: {completion}

************

[END DATA]

Compare the factual content of the submitted answer with the context. \

Ignore any differences in style, grammar, or punctuation.

Answer the following questions:

- Is the Assistant response based only on the context provided? (Y or N)

- Does the answer include information that is not provided in the context? (Y or N)

- Is there any disagreement between the response and the context? (Y or N)

- Count how many questions the user asked. (output a number)

- For each question that the user asked, is there a corresponding answer to it?

Question 1: (Y or N)

Question 2: (Y or N)

...

Question N: (Y or N)

- Of the number of questions asked, how many of these questions were addressed by the answer? (output a number)

"""

messages = [

{'role': 'system', 'content': system_message},

{'role': 'user', 'content': user_message}

]

response = get_completion_from_messages(messages)

return response这里我们将system_message和user_message翻译成中文,这样便于大家更好的理解:

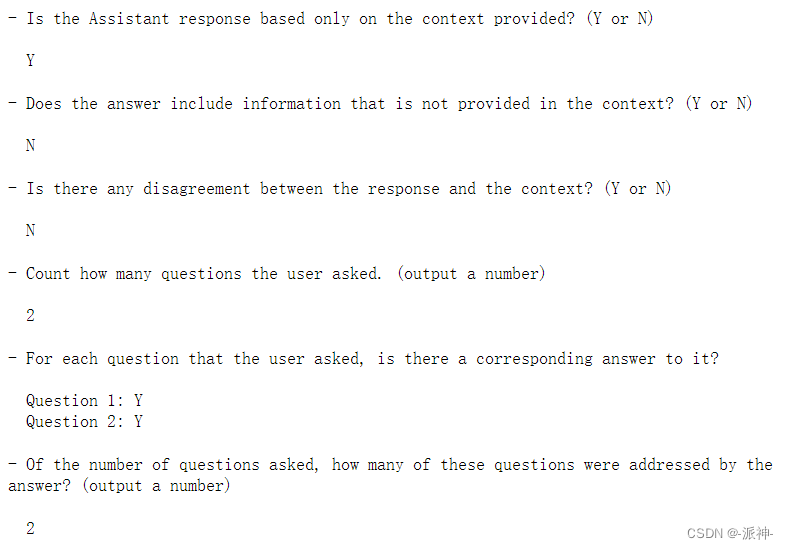

接下来我们来评估LLM先前的回复:

cust_prod_info = {

'customer_msg': customer_msg,

'context': product_info

}

evaluation_output = eval_with_rubric(cust_prod_info, assistant_answer)

print(evaluation_output)

这里我们定义了一个评估的标准,也就是让LLM来回答我们在user_message 中定义的6个问题,这6个问题也就是我们的评分标准。从上面的输出结果上看LLM正确回答了所有的6个问题。

根据“理想”/“专家”(人类生成的)答案评估 LLM 对用户的答案

要评价LLM的回答的效果,除了让LLM回答一下简单的问题(这些问题可能是一些简单的统计)以外,还需要评估它与人类生成的专家或者理想的答案之前的差异。下面我们定义一个理想/专家的答案:

test_set_ideal = {

'customer_msg': """\

tell me about the smartx pro phone and the fotosnap camera, the dslr one.

Also, what TVs or TV related products do you have?""",

'ideal_answer':"""\

Of course! The SmartX ProPhone is a powerful \

smartphone with advanced camera features. \

For instance, it has a 12MP dual camera. \

Other features include 5G wireless and 128GB storage. \

It also has a 6.1-inch display. The price is $899.99.

The FotoSnap DSLR Camera is great for \

capturing stunning photos and videos. \

Some features include 1080p video, \

3-inch LCD, a 24.2MP sensor, \

and interchangeable lenses. \

The price is 599.99.

For TVs and TV related products, we offer 3 TVs \

All TVs offer HDR and Smart TV.

The CineView 4K TV has vibrant colors and smart features. \

Some of these features include a 55-inch display, \

'4K resolution. It's priced at 599.

The CineView 8K TV is a stunning 8K TV. \

Some features include a 65-inch display and \

8K resolution. It's priced at 2999.99

The CineView OLED TV lets you experience vibrant colors. \

Some features include a 55-inch display and 4K resolution. \

It's priced at 1499.99.

We also offer 2 home theater products, both which include bluetooth.\

The SoundMax Home Theater is a powerful home theater system for \

an immmersive audio experience.

Its features include 5.1 channel, 1000W output, and wireless subwoofer.

It's priced at 399.99.

The SoundMax Soundbar is a sleek and powerful soundbar.

It's features include 2.1 channel, 300W output, and wireless subwoofer.

It's priced at 199.99

Are there any questions additional you may have about these products \

that you mentioned here?

Or may do you have other questions I can help you with?

"""

}用专家的答案评估LLM的答案

下面我们会使用 OpenAI evals 项目中的提示语来实现BLEU(bilingual evaluation understudy)评估

BLEU 分数:评估两段文本是否相似的另一种方法。

def eval_vs_ideal(test_set, assistant_answer):

cust_msg = test_set['customer_msg']

ideal = test_set['ideal_answer']

completion = assistant_answer

system_message = """\

You are an assistant that evaluates how well the customer service agent \

answers a user question by comparing the response to the ideal (expert) response

Output a single letter and nothing else.

"""

user_message = f"""\

You are comparing a submitted answer to an expert answer on a given question. Here is the data:

[BEGIN DATA]

************

[Question]: {cust_msg}

************

[Expert]: {ideal}

************

[Submission]: {completion}

************

[END DATA]

Compare the factual content of the submitted answer with the expert answer. Ignore any differences in style, grammar, or punctuation.

The submitted answer may either be a subset or superset of the expert answer, or it may conflict with it. Determine which case applies. Answer the question by selecting one of the following options:

(A) The submitted answer is a subset of the expert answer and is fully consistent with it.

(B) The submitted answer is a superset of the expert answer and is fully consistent with it.

(C) The submitted answer contains all the same details as the expert answer.

(D) There is a disagreement between the submitted answer and the expert answer.

(E) The answers differ, but these differences don't matter from the perspective of factuality.

choice_strings: ABCDE

"""

messages = [

{'role': 'system', 'content': system_message},

{'role': 'user', 'content': user_message}

]

response = get_completion_from_messages(messages)

return response我们将上面的翻译成中文,这样便于大家更好的理解:

接下来我们将比较LLM的答案和专家的(理想的)答案,并最终给出一个分数(A,B,C,D,E):

eval_vs_ideal(test_set_ideal, assistant_answer) ![]()

从评估结果上看,LLM给自己的答案的成绩打了A,也就是说LLM之前的答案是专家答案的子集,并且与其完全一致,从专家的答案中我们可知,专家对每一个产品都做了非常详细的说明,而这些说明有些是没有包含在系统的产品信息中的,所以LLM的答案是专家答案的一个子集,这个没有问题。因为LLM的回复来基于系统的产品信息,并且LLM不存在歪曲产品信息内容的可能性,因此它与专家答案中的部分内容是完全一致的。下面我们再尝试一个不正确的LLM回复,让它再和专家答案比较一下:

assistant_answer_2 = "life is like a box of chocolates"

eval_vs_ideal(test_set_ideal, assistant_answer_2)![]()

这里我们给出了一个和产品信息无关的LLM回复:“life is like a box of chocolates”,此时评估结果给出的得分是 D,就是说提交的答案与专家的答案存在分歧。

总结

今天我们学习了如何使用两种评估LLM回复的方法,第一种是设计一下简单问题让LLM来回答,第二种是使用一个人类专家的答案来评估LLM的答案与专家答案存在的差异并给出一个评分(A,B,C,D,E)。