Apache Kafka源码分析-模块简介

1 概述

一个开源分布式发布订阅消息系统,基于对磁盘文件的顺序存取实现在廉价硬件基础上提供高吞吐量、易扩展、随机消费等特点已被广泛使用。

2 目的

以源码入手对Kafka架构有比较深入的了解,不会展开具体模块的细节。

3 准备

需要从Kafka官网下载Kafka源码这里以最新版本0.10.2.1 为例下载地址:

http://mirrors.tuna.tsinghua.edu.cn/apache/kafka/0.10.2.1/kafka-0.10.2.1-src.tgz

Kafka项目基于Gradle构建需要自行安装Gradle构建工具并在eclipse中完成配置。Kafka源码导入完成后大致项目结构如图3-1所示:

图3-1 Kfka项目模块

4 分析

4.1 模块介绍

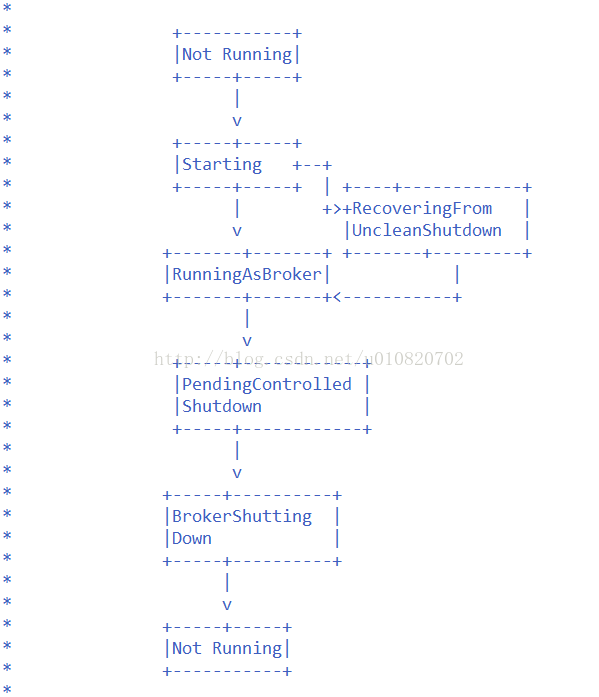

本节只关注Kafka core项目,展开core项目的包结构大致结构如图4.1-1所示,core项目即为Kafka项目的入口,其它独立项目多为多为客户端代码,Kafka.scala即为Kafka应用的main所在。

图4-1 core项目包结构

4.2 启动流程

1. Kafka.scala

Kafka.scala中main方法源码如下:

def main(args: Array[String]): Unit = {

try {

val serverProps= getPropsFromArgs(args)

val kafkaServerStartable= KafkaServerStartable.fromProps(serverProps)

// attach shutdown handler to catch control-c

Runtime.getRuntime().addShutdownHook(newThread() {

override defrun() = {

kafkaServerStartable.shutdown

}

})

kafkaServerStartable.startup

kafkaServerStartable.awaitShutdown

}

catch {

case e:Throwable =>

fatal(e)

System.exit(1)

}

System.exit(0)

}

据此得出Kafka启动程序由KafkaServerStartable对象负责完成,并且向JVM注册了钩子以在JVM卸载时停止Kafka进程,Kafka进程启动后后台运行直到用户发起关闭请求。

2. KafkaServerStartable.scala

KafkaServerStartable为一个标准的Scala Class 由其伴生对象负责创建源码如下

object KafkaServerStartable {

deffromProps(serverProps: Properties) = {

valreporters =KafkaMetricsReporter.startReporters(newVerifiableProperties(serverProps))

newKafkaServerStartable(KafkaConfig.fromProps(serverProps), reporters)

}

}

class KafkaServerStartable(val serverConfig:KafkaConfig, reporters: Seq[KafkaMetricsReporter]) extendsLogging {

privateval server= new KafkaServer(serverConfig, kafkaMetricsReporters = reporters)

defthis(serverConfig: KafkaConfig) = this(serverConfig, Seq.empty)

defstartup() {

try{

server.startup()

}

catch{

casee: Throwable =>

fatal("Fatalerror during KafkaServerStartable startup. Prepare to shutdown",e)

System.exit(1)

}

}

defshutdown() {

try{

server.shutdown()

}

catch{

casee: Throwable =>

fatal("Fatalerror during KafkaServerStable shutdown. Prepare to halt", e)

Runtime.getRuntime.halt(1)

}

}

defsetServerState(newState: Byte) {

server.brokerState.newState(newState)

}

defawaitShutdown() =

server.awaitShutdown

}

不难发现实际上启动Kafka应用的为KafkaServer对象的实例,KafkaServerStartable 只是作为KafkaServer的代理来启动KafkaServer实例。实际上一个KafkaServer的实例正是一个 Kafka Broker的存在与Kafka Broker生命周期息息相关。

3. KafkaServer.scala

对KafkaServer首先关注的是它的startup方法源码如下:

def startup() {

try{

info("starting")

if(isShuttingDown.get)

thrownew IllegalStateException("Kafka server is still shutting down, cannotre-start!")

if(startupComplete.get)

return

valcanStartup = isStartingUp.compareAndSet(false, true)

if(canStartup) {

brokerState.newState(Starting)

/* startscheduler */

kafkaScheduler.startup()

/* setup zookeeper*/

zkUtils= initZk()

/* Get orcreate cluster_id */

_clusterId= getOrGenerateClusterId(zkUtils)

info(s"ClusterID = $clusterId")

/* generatebrokerId */

config.brokerId = getBrokerId

this.logIdent = "[KafkaServer " + config.brokerId + "],"

/* create andconfigure metrics */

valreporters = config.getConfiguredInstances(KafkaConfig.MetricReporterClassesProp,classOf[MetricsReporter],

Map[String, AnyRef](KafkaConfig.BrokerIdProp -> (config.brokerId.toString)).asJava)

reporters.add(new JmxReporter(jmxPrefix))

valmetricConfig = KafkaServer.metricConfig(config)

metrics= new Metrics(metricConfig, reporters,time, true)

quotaManagers= QuotaFactory.instantiate(config, metrics, time)

notifyClusterListeners(kafkaMetricsReporters ++ reporters.asScala)

/* start logmanager */

logManager= createLogManager(zkUtils.zkClient, brokerState)

logManager.startup()

metadataCache= new MetadataCache(config.brokerId)

credentialProvider= new CredentialProvider(config.saslEnabledMechanisms)

socketServer= new SocketServer(config, metrics,time, credentialProvider)

socketServer.startup()

/* startreplica manager */

replicaManager= new ReplicaManager(config, metrics,time, zkUtils, kafkaScheduler,logManager,

isShuttingDown,quotaManagers.follower)

replicaManager.startup()

/* start kafkacontroller */

kafkaController= new KafkaController(config, zkUtils,brokerState, time, metrics,threadNamePrefix)

kafkaController.startup()

adminManager= new AdminManager(config, metrics,metadataCache, zkUtils)

/* startgroup coordinator */

// HardcodeTime.SYSTEM for now as some Streams tests fail otherwise, it would be good tofix the underlying issue

groupCoordinator= GroupCoordinator(config, zkUtils, replicaManager,Time.SYSTEM)

groupCoordinator.startup()

/* Get theauthorizer and initialize it if one is specified.*/

authorizer= Option(config.authorizerClassName).filter(_.nonEmpty).map{ authorizerClassName =>

valauthZ = CoreUtils.createObject[Authorizer](authorizerClassName)

authZ.configure(config.originals())

authZ

}

/* startprocessing requests */

apis =new KafkaApis(socketServer.requestChannel,replicaManager, adminManager,groupCoordinator,

kafkaController,zkUtils, config.brokerId, config,metadataCache, metrics,authorizer, quotaManagers,

clusterId, time)

requestHandlerPool= new KafkaRequestHandlerPool(config.brokerId,socketServer.requestChannel,apis, time,

config.numIoThreads)

Mx4jLoader.maybeLoad()

/* startdynamic config manager */

dynamicConfigHandlers= Map[String, ConfigHandler](ConfigType.Topic-> new TopicConfigHandler(logManager, config,quotaManagers),

ConfigType.Client -> newClientIdConfigHandler(quotaManagers),

ConfigType.User -> new UserConfigHandler(quotaManagers,credentialProvider),

ConfigType.Broker -> newBrokerConfigHandler(config, quotaManagers))

// Create theconfig manager. start listening to notifications

dynamicConfigManager= new DynamicConfigManager(zkUtils, dynamicConfigHandlers)

dynamicConfigManager.startup()

/* telleveryone we are alive */

vallisteners = config.advertisedListeners.map { endpoint =>

if(endpoint.port == 0)

endpoint.copy(port = socketServer.boundPort(endpoint.listenerName))

else

endpoint

}

kafkaHealthcheck= new KafkaHealthcheck(config.brokerId,listeners, zkUtils,config.rack,

config.interBrokerProtocolVersion)

kafkaHealthcheck.startup()

// Now that thebroker id is successfully registered via KafkaHealthcheck, checkpoint it

checkpointBrokerId(config.brokerId)

/* registerbroker metrics */

registerStats()

brokerState.newState(RunningAsBroker)

shutdownLatch= new CountDownLatch(1)

startupComplete.set(true)

isStartingUp.set(false)

AppInfoParser.registerAppInfo(jmxPrefix, config.brokerId.toString)

info("started")

}

}

catch{

casee: Throwable =>

fatal("Fatalerror during KafkaServer startup. Prepare to shutdown", e)

isStartingUp.set(false)

shutdown()

throwe

}

}

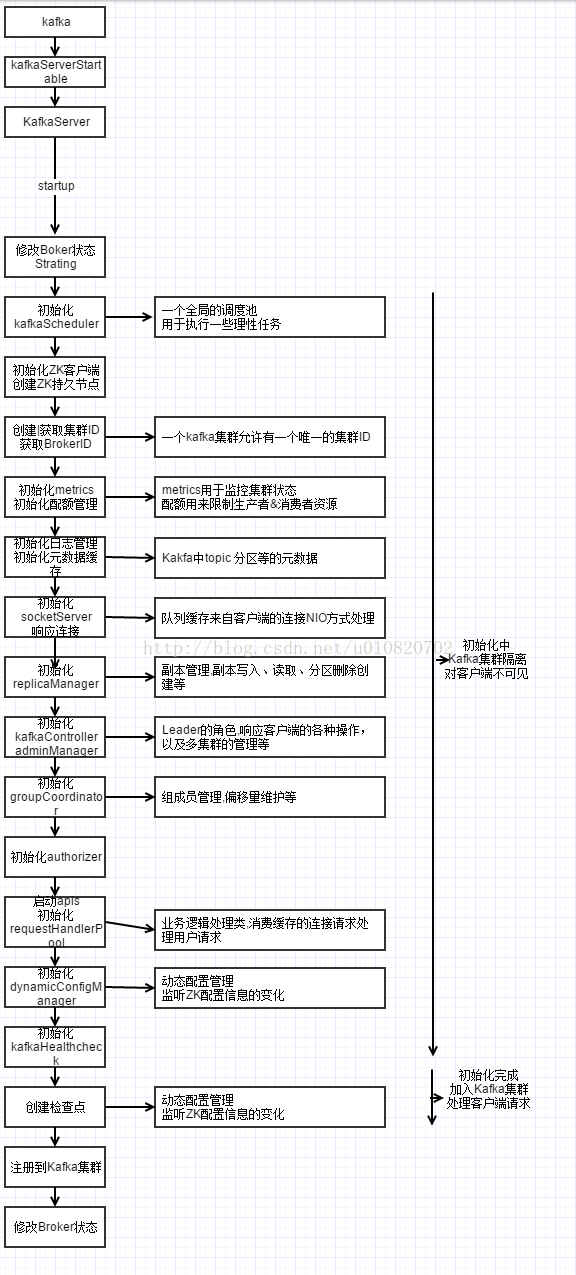

因该类代码较多因而只贴出代码方法部分,该方法中使用“外观模式”来逐一启动每一个模块,下面我们逐一来分析。

a) 首先前期有一定的校验是未了避免出现启动|关闭不一致的状态

b) brokerState成员定义了集群节点的不同状态

BrokerState源码中大致能看到一下类别的状态类型代表kafka节点当前状态

case object NotRunning extendsBrokerStates { val state: Byte = 0}

case object Starting extendsBrokerStates { val state: Byte = 1}

case object RecoveringFromUncleanShutdown extends BrokerStates { val state:Byte = 2 }

case object RunningAsBroker extends BrokerStates { val state:Byte = 3 }

case object PendingControlledShutdown extends BrokerStates { val state:Byte = 6 }

case object BrokerShuttingDown extends BrokerStates { val state:Byte = 7 }

大致的状态切换流程如图4.2-1 所示

图4.2-1 Kafka 状态切换流程

c) kafkaScheduler成员包装了一个全局的线程池供Kafka节点进行线程调度

该类中维护一个ScheduledThreadPoolExecutor 对象并进行一些定制的初始化及必要的包装。

d) zkUtils成员为Kafka对ZK操作的封装

initZk()主要完成安全检查[如果启用]、初始化在ZK中的节点以及返回一个可用的zkUtils对象,由ZKUtils源码得知初始化时需要检查一下持久节点是否存在

valAdminPath= "/admin" valBrokersPath = "/brokers" valClusterPath = "/cluster" valConfigPath= "/config" valControllerPath = "/controller" valControllerEpochPath = "/controller_epoch" valIsrChangeNotificationPath = "/isr_change_notification" valKafkaAclPath = "/kafka-acl" valKafkaAclChangesPath = "/kafka-acl-changes"

e) 创建或者获取一个唯一的集群ID

确保多个Broker拥有唯一的集群ID

f) 获取brokerId

这里做了一系列的验证确保每个Broker拥有唯一的ID

g) 创建和配置metrics模块、初始化配额管理

Kafka监控的各种指标进行初始化、创建quotaManagers对象该对象用于对生产者和消费者进行资源限制。

h) logManager为Kafka日志管理子系统的入口,负责日志的创建、检索、删除等

所有的读写操作都委托logManager完成。其startup()方法实质上启动了若干定制执行的调度线程,包括”kafka-log-retention”过期日志清理、” kafka-log-flusher”日志刷新、”kafka-recovery-point-checkpoint”日志恢复、” kafka-delete-logs”日志删除。

def startup() {

/* Schedule thecleanup task to delete old logs */

if(scheduler!= null) {

info("Startinglog cleanup with a period of %d ms.".format(retentionCheckMs))

scheduler.schedule("kafka-log-retention",

cleanupLogs,

delay = InitialTaskDelayMs,

period = retentionCheckMs,

TimeUnit.MILLISECONDS)

info("Startinglog flusher with a default period of %d ms.".format(flushCheckMs))

scheduler.schedule("kafka-log-flusher",

flushDirtyLogs,

delay = InitialTaskDelayMs,

period = flushCheckMs,

TimeUnit.MILLISECONDS)

scheduler.schedule("kafka-recovery-point-checkpoint",

checkpointRecoveryPointOffsets,

delay = InitialTaskDelayMs,

period = flushCheckpointMs,

TimeUnit.MILLISECONDS)

scheduler.schedule("kafka-delete-logs",

deleteLogs,

delay = InitialTaskDelayMs,

period = defaultConfig.fileDeleteDelayMs,

TimeUnit.MILLISECONDS)

}

if(cleanerConfig.enableCleaner)

cleaner.startup()

}

i) metadataCache分区缓存、credentialProvider 凭证管理

为所有Broker提供一致的分区缓存通过UpdateMetadataRequest 实现更新

j) socketServerNIO的请求响应模型

socketServer 使用一个接收线程(Accpeter)处理用户连接请求,N个处理线程(Processor)负责读写数据,M个处理线程(Handler)处理业务逻辑,Accpeter与Processor及Processor与Handler之间都使用队列缓存

k) replicaManager副本管理,数据写入等

startup方法启动调度ISR(in-sync replicas) 过期及ISR改变线程,在Leader失效时从ISR Broker中选举Leader

l) kafkaControllerKafka集群中的一个Broker会被选举为Controller

kafkaController负责与Produce和Consumer进行交互,包括Broker、Partition、Replica信息获取,主题管理、集群管理、Leader选举、分区管理等,大部分Kafka bin 目录下的脚本都由kafkaController 来响应处理

m) adminManager配合kafkaController完成Kafka 集群管理

n) groupCoordinatorkafka分组协调模块

管理组成员及Offset管理,每一个Kafka Server实例化一个GroupCoordinator

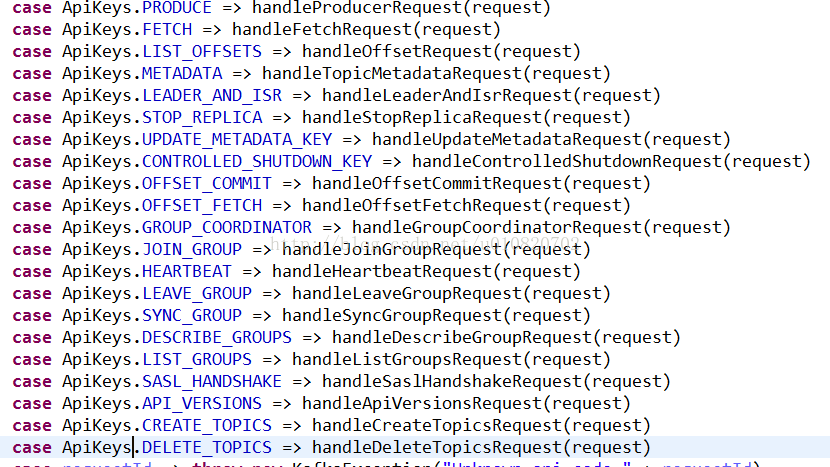

o) apis 请求处理模块, requestHandlerPool 请求处理池开始响应请求

KafkaApis 的实例用来处理kafka业务逻辑,支持的请求类别如图4.2-2所示

图4.2-2 KafkaApis 处理的请求类型

requestHandlerPool 作为请求缓冲池,在多线程模型下通过KafkaRequestHandler进行不同类别请求的处理。

p) dynamicConfigHandlers、dynamicConfigManager 动态配置管理

基于ZK节点(/config/)监听实现配置变化的通知。

q) kafkaHealthcheck向集群注册Broker节点

注册Broker到ZK零时节点(/brokers/ids/[0...N]),允许其它Broker进行失败检测

r) Broker正常启动执行checkpoint,注册broker metrics,更新Broker状态,修改启动状态

checkpointBrokerId(config.brokerId) registerStats() brokerState.newState(RunningAsBroker) shutdownLatch = new CountDownLatch(1) startupComplete.set(true) isStartingUp.set(false)

4.3 关闭流程

1. 关闭流程仍然在KafkaServer中完成源码如下

brokerState.newState(BrokerShuttingDown) if(socketServer!= null) CoreUtils.swallow(socketServer.shutdown()) if(requestHandlerPool!= null) CoreUtils.swallow(requestHandlerPool.shutdown()) CoreUtils.swallow(kafkaScheduler.shutdown()) if(apis!= null) CoreUtils.swallow(apis.close()) CoreUtils.swallow(authorizer.foreach(_.close())) if(replicaManager!= null) CoreUtils.swallow(replicaManager.shutdown()) if (adminManager!= null) CoreUtils.swallow(adminManager.shutdown()) if(groupCoordinator!= null) CoreUtils.swallow(groupCoordinator.shutdown()) if(logManager!= null) CoreUtils.swallow(logManager.shutdown()) if(kafkaController!= null) CoreUtils.swallow(kafkaController.shutdown()) if(zkUtils!= null) CoreUtils.swallow(zkUtils.close()) if (metrics!= null) CoreUtils.swallow(metrics.close()) brokerState.newState(NotRunning) startupComplete.set(false) isShuttingDown.set(false)

2. 可见关闭的顺序大致如下

a) 修改Broker状态为shutdown

b) 关闭socketServer 不在接收新的连接且关闭acceptors、processors

c) 关闭requestHandlerPool 不在处理请求,停止相关线程

d) 关闭kafkaScheduler 销毁线程池

e) 关闭apis 模块

f) 关闭authorizer 身份验证模块

g) 关闭replicaManager 副本管理模块

h) 关闭adminManager

i) 关闭groupCoordinator

j) 关闭logManager

k) 关闭kafkaController

l) 关闭zkUtils

m) 关闭metrics

n) 修改Broker状态为NotRunning