I. Download and import ZXing

Use zxing.unity.dll: add the downloaded DLL file to the Plugins folder of the project.

II. Unity settings

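In Project Settings > Player, check Allow 'unsafe' Code. The script in this article needs it because UpdateCameraImage is declared unsafe and calls GetUnsafePtr().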

III. Write the code

1. Receive camera frames through ARCameraManager

m_CameraManager.frameReceived += OnCameraFrameReceived;

2. Read the camera image

cameraManager.TryAcquireLatestCpuImage(out XRCpuImage image)

3. Create a Texture2D and convert the camera image into it

    var format = TextureFormat.RGBA32;

    if (m_CameraTexture == null || m_CameraTexture.width != image.width || m_CameraTexture.height != image.height)
    {
        m_CameraTexture = new Texture2D(image.width, image.height, format, false);
    }

    // Convert the image to format, flipping the image across the Y axis.
    // We can also get a sub rectangle, but we'll get the full image here.
    var conversionParams = new XRCpuImage.ConversionParams(image, format, m_Transformation);

    // Texture2D lets us write directly to the raw texture data.
    // This allows us to do the conversion in place without making any copies.
    var rawTextureData = m_CameraTexture.GetRawTextureData<byte>();
    try
    {
        image.Convert(conversionParams, new IntPtr(rawTextureData.GetUnsafePtr()), rawTextureData.Length);
    }
    finally
    {
        // We must dispose of the XRCpuImage after we're finished
        // with it to avoid leaking native resources.
        image.Dispose();
    }

    // Apply the updated texture data to our texture.
    m_CameraTexture.Apply();
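
The conversion in step 3 goes through a raw pointer, which is why the unsafe setting is required. As a minimal sketch, the same step can be written with the Convert overload that takes a NativeArray<byte>, the one the UpdateRawImage method in the full script uses. The class name CpuImageConverter and the method name CopyToTexture are hypothetical, and the sketch assumes an AR Foundation version that provides this overload.

```csharp
using UnityEngine;
using UnityEngine.XR.ARSubsystems;

// Hypothetical helper, not part of the original script.
public static class CpuImageConverter
{
    // Converts the CPU image into an RGBA32 texture without unsafe code.
    // The caller still owns the XRCpuImage and must Dispose() it afterwards.
    public static void CopyToTexture(
        XRCpuImage image,
        ref Texture2D texture,
        XRCpuImage.Transformation transformation)
    {
        const TextureFormat format = TextureFormat.RGBA32;

        // (Re)create the texture when it is missing or the image size changed.
        if (texture == null || texture.width != image.width || texture.height != image.height)
        {
            texture = new Texture2D(image.width, image.height, format, false);
        }

        var conversionParams = new XRCpuImage.ConversionParams(image, format, transformation);

        // NativeArray<byte> view over the texture's own pixel buffer.
        var rawTextureData = texture.GetRawTextureData<byte>();

        // Safe overload: writes the converted pixels straight into the buffer.
        image.Convert(conversionParams, rawTextureData);

        texture.Apply();
    }
}
```

A caller would wrap the call in try/finally and dispose of the image in the finally block, exactly as the pointer-based version does.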

4. Decode with ZXing

    // Create a reader with a custom luminance source.
    BarcodeReader barcodeReader = new BarcodeReader { AutoRotate = false, Options = new ZXing.Common.DecodingOptions { TryHarder = false } };

    …

    var result = barcodeReader.Decode(colors, width, height);

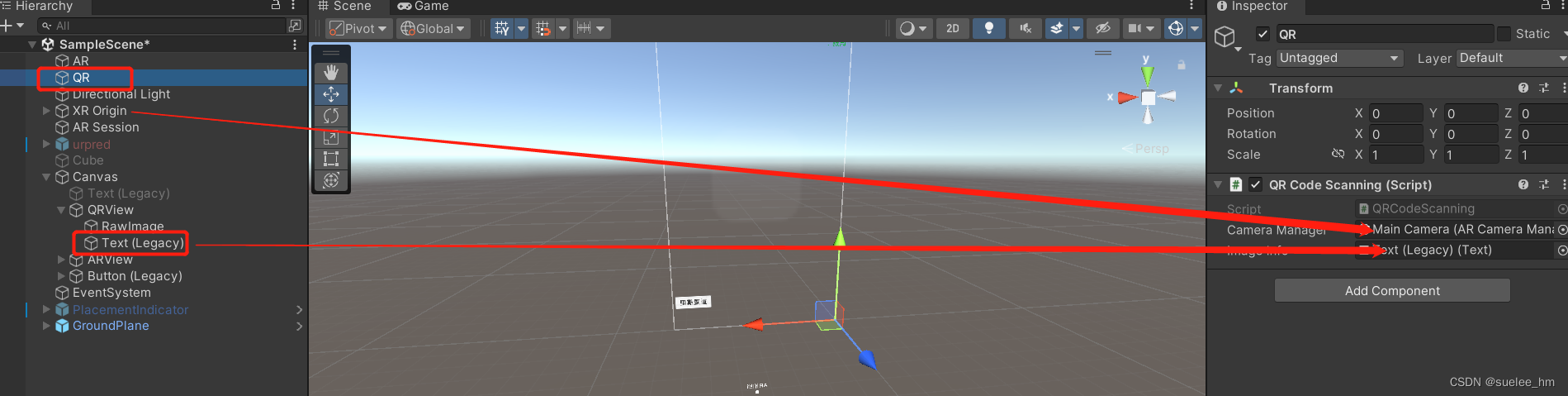
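
The reader above tries every barcode format it knows. If only QR codes are expected, one possible refinement is to restrict the reader through DecodingOptions.PossibleFormats. The sketch below is an assumption layered on top of the article, not the author's code: QrReaderFactory, CreateQrOnlyReader and TryDecode are hypothetical names, and the pixels are read with GetPixels32 as in the full script.

```csharp
using System.Collections.Generic;
using UnityEngine;
using ZXing;
using ZXing.Common;

// Hypothetical helper, not part of the original script.
public static class QrReaderFactory
{
    // Builds a reader with the same settings as the article,
    // plus a format filter so that only QR codes are attempted.
    public static BarcodeReader CreateQrOnlyReader()
    {
        return new BarcodeReader
        {
            AutoRotate = false,
            Options = new DecodingOptions
            {
                TryHarder = false,
                PossibleFormats = new List<BarcodeFormat> { BarcodeFormat.QR_CODE }
            }
        };
    }

    // Returns the decoded text, or null when no code was found in the texture.
    public static string TryDecode(BarcodeReader reader, Texture2D texture)
    {
        Result result = reader.Decode(texture.GetPixels32(), texture.width, texture.height);
        return result?.Text;
    }
}
```

Whether the filter gives a noticeable speedup depends on the device and image size, so it is worth measuring before adopting it.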

IV. Create the component in Unity and attach it

1. In the Hierarchy, create an empty GameObject named QR, and create a Text under the Canvas to display the result.

2.QRCodeScanning.cs

    using System;
    using Unity.Collections.LowLevel.Unsafe;
    using UnityEngine;
    using UnityEngine.UI;
    using UnityEngine.XR.ARFoundation;
    using UnityEngine.XR.ARSubsystems;
    using ZXing;

    namespace FrameworkDesign.Example
    {
        public class QRCodeScanning : MonoBehaviour
        {
            [SerializeField]
            [Tooltip("The ARCameraManager which will produce frame events.")]
            ARCameraManager m_CameraManager;

            /// <summary>
            /// Get or set the <c>ARCameraManager</c>.
            /// </summary>
            public ARCameraManager cameraManager
            {
                get => m_CameraManager;
                set => m_CameraManager = value;
            }

            /**
            [SerializeField]
            RawImage m_RawCameraImage;

            /// <summary>
            /// The UI RawImage used to display the image on screen.
            /// </summary>
            public RawImage rawCameraImage
            {
                get => m_RawCameraImage;
                set => m_RawCameraImage = value;
            }
            */

            [SerializeField]
            Text m_ImageInfo;

            /// <summary>
            /// The UI Text used to display information about the image on screen.
            /// </summary>
            public Text imageInfo
            {
                get => m_ImageInfo;
                set => m_ImageInfo = value;
            }

            /**
            [SerializeField]
            Button m_TransformationButton;

            /// <summary>
            /// The button that controls transformation selection.
            /// </summary>
            public Button transformationButton
            {
                get => m_TransformationButton;
                set => m_TransformationButton = value;
            }
            */

            XRCpuImage.Transformation m_Transformation = XRCpuImage.Transformation.MirrorY;

            Texture2D m_CameraTexture;

            // Create a reader with a custom luminance source.
            BarcodeReader barcodeReader = new BarcodeReader { AutoRotate = false, Options = new ZXing.Common.DecodingOptions { TryHarder = false } };

            private bool snaped = false;

            /**
            /// <summary>
            /// Cycles the image transformation to the next case.
            /// </summary>
            public void CycleTransformation()
            {
                m_Transformation = m_Transformation switch
                {
                    XRCpuImage.Transformation.None => XRCpuImage.Transformation.MirrorX,
                    XRCpuImage.Transformation.MirrorX => XRCpuImage.Transformation.MirrorY,
                    XRCpuImage.Transformation.MirrorY => XRCpuImage.Transformation.MirrorX | XRCpuImage.Transformation.MirrorY,
                    _ => XRCpuImage.Transformation.None
                };

                if (m_TransformationButton)
                {
                    m_TransformationButton.GetComponentInChildren<Text>().text = m_Transformation.ToString();
                }
            }
            */

            void OnEnable()
            {
                if (m_CameraManager != null)
                {
                    m_CameraManager.frameReceived += OnCameraFrameReceived;
                }
            }

            void OnDisable()
            {
                if (m_CameraManager != null)
                {
                    m_CameraManager.frameReceived -= OnCameraFrameReceived;
                }
            }

            unsafe void UpdateCameraImage()
            {
                // Attempt to get the latest camera image. If this method succeeds,
                // it acquires a native resource that must be disposed (see below).
                if (!cameraManager.TryAcquireLatestCpuImage(out XRCpuImage image))
                {
                    return;
                }

                // Display some information about the camera image.
                m_ImageInfo.text = string.Format(
                    "Image info:\n\twidth: {0}\n\theight: {1}\n\tplaneCount: {2}\n\ttimestamp: {3}\n\tformat: {4}",
                    image.width, image.height, image.planeCount, image.timestamp, image.format);

                // Once we have a valid XRCpuImage, we can access the individual image "planes"
                // (the separate channels in the image). XRCpuImage.GetPlane provides
                // low-overhead access to this data. This could then be passed to a
                // computer vision algorithm. Here, we convert the camera image
                // to an RGBA texture and hand its pixels to ZXing.

                // Choose an RGBA format.
                // See XRCpuImage.FormatSupported for a complete list of supported formats.
                var format = TextureFormat.RGBA32;

                if (m_CameraTexture == null || m_CameraTexture.width != image.width || m_CameraTexture.height != image.height)
                {
                    m_CameraTexture = new Texture2D(image.width, image.height, format, false);
                }

                // Convert the image to format, flipping the image across the Y axis.
                // We can also get a sub rectangle, but we'll get the full image here.
                var conversionParams = new XRCpuImage.ConversionParams(image, format, m_Transformation);

                // Texture2D lets us write directly to the raw texture data.
                // This allows us to do the conversion in place without making any copies.
                var rawTextureData = m_CameraTexture.GetRawTextureData<byte>();
                try
                {
                    image.Convert(conversionParams, new IntPtr(rawTextureData.GetUnsafePtr()), rawTextureData.Length);
                }
                finally
                {
                    // We must dispose of the XRCpuImage after we're finished
                    // with it to avoid leaking native resources.
                    image.Dispose();
                }

                // Apply the updated texture data to our texture.
                m_CameraTexture.Apply();

                // Set the RawImage's texture so we can visualize it.
                // m_RawCameraImage.texture = m_CameraTexture;

                // Decode the converted frame with ZXing.
                string res = DecodeQR(m_CameraTexture.GetPixels32(), m_CameraTexture.width, m_CameraTexture.height);
                if (!string.IsNullOrEmpty(res))
                {
                    imageInfo.text = "Recognized: " + res;
                    Debug.Log("DecodeQR=" + res);

                    // Stop receiving frames once a code has been decoded.
                    OnDisable();
                }
            }

            static void UpdateRawImage(RawImage rawImage, XRCpuImage cpuImage, XRCpuImage.Transformation transformation)
            {
                // Get the texture associated with the UI.RawImage that we wish to display on screen.
                var texture = rawImage.texture as Texture2D;

                // If the texture hasn't yet been created, or if its dimensions have changed, (re)create the texture.
                // Note: Although texture dimensions do not normally change frame-to-frame, they can change in response to
                // a change in the camera resolution (for camera images) or changes to the quality of the human depth
                // and human stencil buffers.
                if (texture == null || texture.width != cpuImage.width || texture.height != cpuImage.height)
                {
                    texture = new Texture2D(cpuImage.width, cpuImage.height, cpuImage.format.AsTextureFormat(), false);
                    rawImage.texture = texture;
                }

                // For display, we need to mirror about the vertical axis.
                var conversionParams = new XRCpuImage.ConversionParams(cpuImage, cpuImage.format.AsTextureFormat(), transformation);

                // Get the Texture2D's underlying pixel buffer.
                var rawTextureData = texture.GetRawTextureData<byte>();

                // Make sure the destination buffer is large enough to hold the converted data (they should be the same size).
                Debug.Assert(rawTextureData.Length == cpuImage.GetConvertedDataSize(conversionParams.outputDimensions, conversionParams.outputFormat),
                    "The Texture2D is not the same size as the converted data.");

                // Perform the conversion.
                cpuImage.Convert(conversionParams, rawTextureData);

                // "Apply" the new pixel data to the Texture2D.
                texture.Apply();

                // Make sure it's enabled.
                rawImage.enabled = true;
            }

            void OnCameraFrameReceived(ARCameraFrameEventArgs eventArgs)
            {
                UpdateCameraImage();
            }

            string DecodeQR(Color32[] colors, int width, int height)
            {
                try
                {
                    // Decode the current frame.
                    var result = barcodeReader.Decode(colors, width, height);
                    if (result != null)
                    {
                        return result.Text;
                    }
                }
                catch
                {
                    // Decoding errors are ignored; the frame is treated as containing no code.
                }

                return null;
            }
        }
    }
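
The script attempts a decode on every camera frame, and each attempt calls GetPixels32, which allocates a new Color32 array. One way to reduce that load is to limit how often a decode is attempted. The class below is a hypothetical, Unity-independent sketch of such a limiter; DecodeThrottle and ShouldDecode are illustrative names and the interval is an assumption to be tuned per device.

```csharp
using System;

// Hypothetical helper, not part of the original script: allows one decode
// attempt per interval and rejects the calls that fall in between.
public sealed class DecodeThrottle
{
    readonly double m_IntervalSeconds;
    double m_NextAllowedTime;

    public DecodeThrottle(double intervalSeconds)
    {
        if (intervalSeconds < 0)
        {
            throw new ArgumentOutOfRangeException(nameof(intervalSeconds));
        }

        m_IntervalSeconds = intervalSeconds;

        // The first call is always allowed.
        m_NextAllowedTime = double.NegativeInfinity;
    }

    // Returns true when enough time has passed since the last accepted call.
    public bool ShouldDecode(double nowSeconds)
    {
        if (nowSeconds < m_NextAllowedTime)
        {
            return false;
        }

        m_NextAllowedTime = nowSeconds + m_IntervalSeconds;
        return true;
    }
}
```

In the script, OnCameraFrameReceived could keep a DecodeThrottle field and call UpdateCameraImage() only when ShouldDecode(Time.realtimeSinceStartup) returns true.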

V. Common problems

VI. References

1. Unity manual: Unity - Manual: Unity User Manual 2021.3 (LTS)

2. AR Foundation samples

3. ARCore developer documentation