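Last time I covered using convolutional networks for image processing.

This time the notes get down to the real business of convolutional neural networks: using a CNN to recognize handwritten digits.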

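As I said before, a CNN's weights produce all kinds of image-processing effects, and those outputs can serve as image features. So if we feed a labeled training set into a CNN and append a fully connected network that produces the final classification, we get a recognizer.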
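To make that pipeline concrete, here is a minimal NumPy sketch of one forward pass: convolution kernels turn an image into feature maps, ReLU and 2×2 max pooling shrink them, and a fully connected layer with softmax turns the flattened features into 10 class scores. The kernels and weights are random placeholders (an assumption for illustration; in the real network they are learned during training), and the helper names are hypothetical.

```python
import numpy as np

def conv2d_valid(img, kernel):
    """Single-channel 'valid' cross-correlation, the operation a conv layer computes."""
    kh, kw = kernel.shape
    h, w = img.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(img[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2x2(x):
    """2x2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def tiny_cnn_forward(img, kernels, W, b):
    # Feature extraction: one feature map per kernel, then ReLU and pooling
    maps = [max_pool2x2(np.maximum(conv2d_valid(img, k), 0)) for k in kernels]
    features = np.concatenate([m.ravel() for m in maps])  # like Flatten
    return softmax(W @ features + b)  # fully connected classifier

rng = np.random.default_rng(0)
img = rng.random((28, 28))                # stand-in for one MNIST image
kernels = rng.standard_normal((4, 3, 3))  # 4 random 3x3 kernels (placeholders)
W = rng.standard_normal((10, 4 * 13 * 13)) * 0.01  # 28 -> 26 (valid conv) -> 13 (pooling)
b = np.zeros(10)
probs = tiny_cnn_forward(img, kernels, W, b)
print(probs.shape, probs.sum())
```

The output is a probability vector over the 10 digits; training is what makes the kernels useful feature detectors.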

While running it on my laptop, I recorded some intermediate results:

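```text
160s/epoch 3ms/step loss: 0.0350 - acc: 0.9893 - val_loss: 0.0294 - val_acc: 0.9908
Test score: 0.029372483089374873
Test accuracy: 0.9908
```

(The test score is the loss on the test set.) As you can see, convolution does better than a fully connected network; convolutional neural networks have a real advantage for image recognition. For comparison:

- Fully connected network 1: 784 - 784×2 - 10, epochs=12: about 88% accuracy
- Fully connected network 2: 784 - 3 × [784×2] - 5 × [784] - 10: 87% at epochs=12, 92% at epochs=30, 94% at epochs=100

So a convolutional network does much better than simply making a fully connected network more complex.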
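One way to see why "more fully connected layers" is the weaker option is to count trainable parameters. The sketch below assumes that "784×2" means a hidden layer of 1568 units and that network 2 has three hidden layers of 1568 units followed by five of 784; the CNN figures follow the model defined in the script below (32 filters of 3×3, 'same' then 'valid' padding, 2×2 pooling, a 128-unit dense layer). Biases are included, as Keras `Dense` and `Conv2D` layers use them by default.

```python
def dense_params(n_in, n_out):
    return n_in * n_out + n_out  # weight matrix + bias vector

def conv_params(k_h, k_w, c_in, n_filters):
    return k_h * k_w * c_in * n_filters + n_filters  # kernels + one bias per filter

def mlp_params(sizes):
    return sum(dense_params(a, b) for a, b in zip(sizes, sizes[1:]))

# Assumed readings of the two fully connected networks described above
fc1 = mlp_params([784, 784 * 2, 10])
fc2 = mlp_params([784] + [784 * 2] * 3 + [784] * 5 + [10])

# The CNN from the script: 28x28x1 -> conv(same) 28x28x32 -> conv(valid) 26x26x32
# -> 2x2 max pooling 13x13x32 -> Flatten 5408 -> Dense 128 -> Dense 10
cnn = (conv_params(3, 3, 1, 32)
       + conv_params(3, 3, 32, 32)
       + dense_params(13 * 13 * 32, 128)
       + dense_params(128, 10))

print(fc1, fc2, cnn)  # 1246570 9850970 703210
```

Under these assumptions the CNN gets higher accuracy with fewer parameters than either fully connected network, because each 3×3 kernel is shared across every position in the image.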

```python
import numpy as np
np.random.seed(1337)  # for reproducibility

from keras.datasets import mnist
from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation, Flatten
from keras.layers import Conv2D, MaxPooling2D
from keras.utils import to_categorical
from keras import backend as K

# Global settings
batch_size = 128
nb_classes = 10  # 10 handwritten digit classes, encoded as 10-dimensional one-hot vectors
epochs = 1

# input image dimensions
img_rows, img_cols = 28, 28
# number of convolutional filters to use
nb_filters = 32
# size of pooling area for max pooling
pool_size = (2, 2)
# convolution kernel size
kernel_size = (3, 3)

# Load the dataset. For an introduction to MNIST see
# https://blog.csdn.net/simple_the_best/article/details/75267863
(X_train, y_train), (X_test, y_test) = mnist.load_data()

# Choose the array layout according to the backend's image data format
# (older Keras exposed this as K.image_dim_ordering() == 'th')
if K.image_data_format() == 'channels_first':
    X_train = X_train.reshape(X_train.shape[0], 1, img_rows, img_cols)
    X_test = X_test.reshape(X_test.shape[0], 1, img_rows, img_cols)
    input_shape = (1, img_rows, img_cols)
else:
    X_train = X_train.reshape(X_train.shape[0], img_rows, img_cols, 1)
    X_test = X_test.reshape(X_test.shape[0], img_rows, img_cols, 1)
    input_shape = (img_rows, img_cols, 1)

X_train = X_train.astype('float32')
X_test = X_test.astype('float32')
X_train /= 255  # normalize to 0-1
X_test /= 255
print('X_train shape:', X_train.shape)
print(X_train.shape[0], 'train samples')
print(X_test.shape[0], 'test samples')

# Convert the labels to one-hot vectors
Y_train = to_categorical(y_train, nb_classes)
Y_test = to_categorical(y_test, nb_classes)

# Build the model
model = Sequential()
# Keras 1 syntax for the first layer was:
# Convolution2D(nb_filters, kernel_size[0], kernel_size[1], border_mode='same', input_shape=input_shape)
model.add(Conv2D(nb_filters, kernel_size, padding='same',
                 input_shape=input_shape))   # conv layer 1
model.add(Activation('relu'))                 # activation
model.add(Conv2D(nb_filters, kernel_size))    # conv layer 2
model.add(Activation('relu'))                 # activation
model.add(MaxPooling2D(pool_size=pool_size))  # pooling layer
model.add(Dropout(0.25))                      # randomly drop units
model.add(Flatten())                          # flatten to 1-D
model.add(Dense(128))                         # fully connected layer 1
model.add(Activation('relu'))                 # activation
model.add(Dropout(0.5))                       # randomly drop units
model.add(Dense(nb_classes))                  # fully connected layer 2, the output layer
model.add(Activation('softmax'))              # softmax scores

# Compile the model
# (newer Keras releases default Adadelta's learning rate to 0.001 instead of 1.0,
#  so results with 'adadelta' can differ between versions)
model.compile(loss='categorical_crossentropy',
              optimizer='adadelta',
              metrics=['accuracy'])

# Train the model
model.fit(X_train, Y_train, batch_size=batch_size, epochs=epochs,
          verbose=1, validation_data=(X_test, Y_test))

# Evaluate the model
score = model.evaluate(X_test, Y_test, verbose=0)
print('Test score:', score[0])  # test loss
print('Test accuracy:', score[1])

# Save
model.save('model_test_Keras_02(CNN-3-mnist).h5')
```
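The preprocessing steps in the script (adding a channel axis, scaling pixels to 0-1, one-hot labels) can be reproduced in plain NumPy, which is handy for checking shapes without loading Keras. This is a sketch: the function name `preprocess` is hypothetical, and `np.eye(num_classes)[labels]` stands in for `to_categorical`, producing the same one-hot matrix for integer labels.

```python
import numpy as np

def preprocess(images, labels, num_classes=10, channels_first=False):
    """images: (n, rows, cols) uint8; labels: (n,) integers in [0, num_classes)."""
    n, rows, cols = images.shape
    shape = (n, 1, rows, cols) if channels_first else (n, rows, cols, 1)
    x = images.reshape(shape).astype('float32') / 255.0  # scale to 0-1
    y = np.eye(num_classes, dtype='float32')[labels]      # one row per label with a single 1
    return x, y

rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(3, 28, 28)).astype(np.uint8)  # fake pixel data
fake_labels = np.array([0, 3, 9])
x, y = preprocess(fake_images, fake_labels)
print(x.shape, y.shape)  # (3, 28, 28, 1) (3, 10)
print(y[1])              # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
```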

Recognition results:

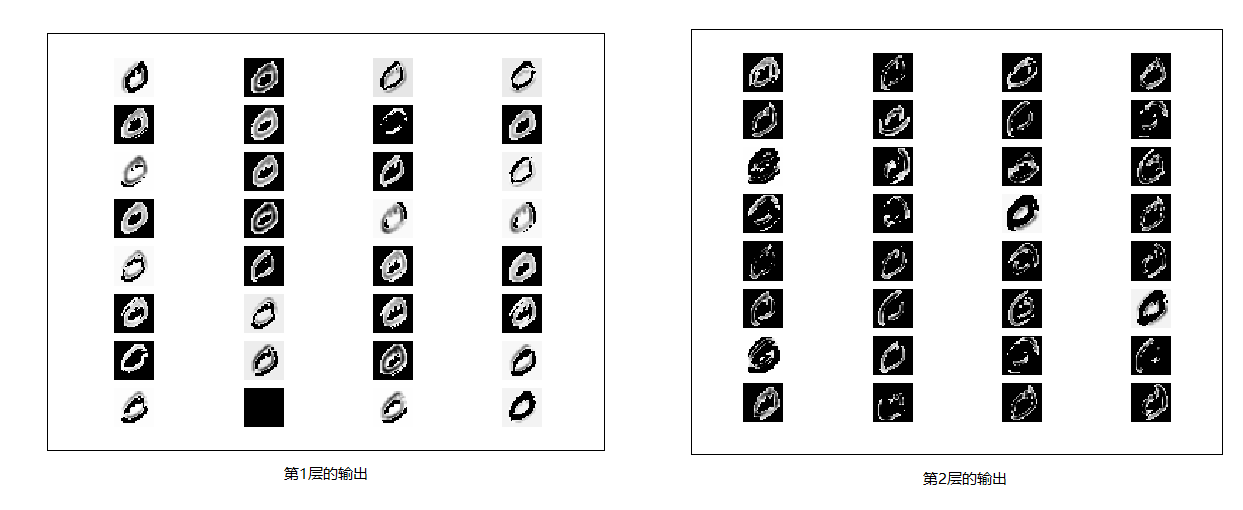

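Now let's look at the intermediate results. They show the effects of various filters, which illustrates where convolutional neural networks beat earlier image processing: the weights of every layer are learned automatically, and by adjusting those weights the network reproduces the effects of the many filters that researchers used to design by hand.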
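As an illustration of the kind of hand-designed filter a convolution layer can end up resembling, the sketch below applies a Sobel kernel (a classic vertical-edge detector, picked here as an example; the trained network's kernels are not guaranteed to look like it) to a synthetic image with a dark left half and a bright right half. It uses `numpy.lib.stride_tricks.sliding_window_view`, available in NumPy 1.20 and later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate2d_valid(img, kernel):
    """'valid' 2D cross-correlation, as computed by a convolution layer."""
    windows = sliding_window_view(img, kernel.shape)  # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum('ijkl,kl->ij', windows, kernel)  # weighted sum over each window

sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

img = np.zeros((8, 8))
img[:, 4:] = 1.0  # vertical edge between columns 3 and 4

edges = correlate2d_valid(img, sobel_x)
print(edges[0])
```

Every row of the response is 4 in the two middle columns, where the 3×3 window straddles the edge, and 0 elsewhere; a trained conv layer that learns a similar kernel produces the same kind of edge map.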

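Note that to get the second layer's output, its input must be the first layer's output. I felt the code I first wrote for this was rather clumsy and that there should be a better way to do it; if you know one, please leave a comment, thanks. On the 26th I updated the code: I seem to have found a more suitable way to get the output (or input) of a given layer, and running it confirms that it is more convenient.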
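The point about feeding one layer's output into the next can be shown with a toy stack of layers in NumPy. This is a sketch of the idea, not Keras code: the layer list and the `layer_output` helper are hypothetical. Building `Model(inputs=model.input, outputs=some_layer.output)` on a Sequential model amounts to the same chain, running the input through every layer up to the chosen one.

```python
import numpy as np

rng = np.random.default_rng(0)
W1 = rng.standard_normal((4, 6))
W2 = rng.standard_normal((6, 3))

# A toy "Sequential" model: linear -> ReLU -> linear
layers = [
    lambda x: x @ W1,            # layer 0
    lambda x: np.maximum(x, 0),  # layer 1 (activation)
    lambda x: x @ W2,            # layer 2
]

def layer_output(layers, x, index):
    """Output of layers[index]: run x through layers 0..index in order."""
    for layer in layers[:index + 1]:
        x = layer(x)
    return x

x = rng.standard_normal((2, 4))
out1 = layer_output(layers, x, 1)
# Layer 2's input is layer 1's output, so chaining by hand gives the same result
assert np.allclose(layers[2](out1), layer_output(layers, x, 2))
# Feeding layer 0's output straight into layer 2 would skip the ReLU in between
skipped = layers[2](layer_output(layers, x, 0))
```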

```python
'''
Load the CNN model and get intermediate results
'''
import numpy as np
np.random.seed(1337)  # for reproducibility

import matplotlib.pyplot as plt  # for displaying images
from keras.datasets import mnist
from keras.models import load_model, Model
from keras import backend as K


def deprocess_image(x):
    '''Convert float activations to 0-255 image values (values outside 0-1 are clipped).
    Returns an array of shape (filters, rows, cols, batch).'''
    if K.image_data_format() == 'channels_first':
        x = x.transpose(1, 2, 3, 0)
    elif K.image_data_format() == 'channels_last':
        x = x.transpose(3, 1, 2, 0)
    x = x * 255.
    x = np.clip(x, 0, 255).astype('uint8')
    return x


# Global settings
nb_classes = 10              # 10 handwritten digit classes
img_rows, img_cols = 28, 28  # input image dimensions

# Load the dataset. For an introduction to MNIST see
# https://blog.csdn.net/simple_the_best/article/details/75267863
(X_train, y_train), (X_test, y_test) = mnist.load_data()

# Choose the array layout according to the backend's image data format
if K.image_data_format() == 'channels_first':
    X_train = X_train.reshape(X_train.shape[0], 1, img_rows, img_cols)
    X_test = X_test.reshape(X_test.shape[0], 1, img_rows, img_cols)
else:
    X_train = X_train.reshape(X_train.shape[0], img_rows, img_cols, 1)
    X_test = X_test.reshape(X_test.shape[0], img_rows, img_cols, 1)
X_train = X_train.astype('float32') / 255  # normalize to 0-1
X_test = X_test.astype('float32') / 255
print('X_train shape:', X_train.shape)

# Load the model
model = load_model('E:/PythonWorks/TestDemos/models/model_test_Keras_02(CNN-3-mnist).h5')

# Compile the model. load_model normally restores the training configuration saved in
# the .h5 file, and predict() itself does not need a compiled model; compiling matters
# mainly if you want to evaluate or keep training.
model.compile(loss='categorical_crossentropy',
              optimizer='adadelta',
              metrics=['accuracy'])

# Use the loaded model to show one recognized image per digit: scan the test set and
# take the next image whose predicted label is the digit we are looking for (0, 1, ..., 9)
retImg = []
curIndex = 0
for i in range(0, 1000):
    ret = model.predict(X_test[i:i + 1])  # ret.shape = (1, 10): class probabilities
    retValue = np.argmax(ret, axis=1)[0]
    if retValue == curIndex:
        retImg.append(X_test[i])
        curIndex += 1
        if len(retImg) == 10:
            break

fig = plt.figure()
for i in range(0, 2):
    for j in range(0, 5):
        ax0 = fig.add_subplot(2, 5, i * 5 + j + 1)
        ax0.imshow(retImg[i * 5 + j].squeeze(), cmap='gray')  # drop the channel axis
        ax0.axis('off')
        ax0.set_title(str(i * 5 + j))
plt.show()
```
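The loop above calls `model.predict` once per image. A common alternative is to predict a whole slice in one call, e.g. `model.predict(X_test[:1000])`, and then pick images from the array of predicted labels. The sketch below shows only that selection step in NumPy, on a small example label array. Note that it is a slightly different rule from the loop: it takes the first image predicted as each digit independently, whereas the loop requires the digits to be found in the order 0, 1, ..., 9. The helper name is hypothetical.

```python
import numpy as np

def first_index_per_class(pred_labels, num_classes=10):
    """Index of the first sample predicted as each class, or -1 if there is none."""
    result = []
    for digit in range(num_classes):
        hits = np.flatnonzero(pred_labels == digit)
        result.append(int(hits[0]) if hits.size else -1)
    return result

# In the real script this would be something like:
#   probs = model.predict(X_test[:1000])
#   pred_labels = np.argmax(probs, axis=1)
# Here a small example array stands in for it.
pred_labels = np.array([3, 0, 1, 0, 2, 9, 4, 5, 6, 7, 8])
print(first_index_per_class(pred_labels))  # [1, 2, 4, 0, 6, 7, 8, 9, 10, 5]
```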

```python
# Get the intermediate results and show them as images

# The method below is cumbersome and not recommended; it only shows that this approach
# also works. (K.function and K.learning_phase() belong to the older backend API and
# may not exist in recent Keras versions.)
'''
# Define the backend functions
get_1_layer_output = K.function([model.layers[0].input, K.learning_phase()], [model.layers[0].output])
pic_len = 1
p_32 = get_1_layer_output([X_train[1:1+pic_len], 0])[0]  # output of the 1st layer
# Feed the first layer's output in as the second layer's input to get the second layer's output.
# (model.layers[2]'s real input is the output of model.layers[1], the ReLU, so this skips that activation.)
get_2_layer_output = K.function([model.layers[2].input, K.learning_phase()], [model.layers[2].output])
p_32 = get_2_layer_output([p_32, 0])[0]  # output of the 2nd layer
image_array = deprocess_image(p_32)
print('image_array.shape=', image_array.shape)
img = np.rollaxis(image_array, 3, 0)
ret = img[0]
print('ret.shape = ', ret.shape)
'''

# The method below is the proper way to get intermediate results:
# build a new functional Model whose output is the output of one layer.
# To get a particular layer's input or output it is best to name every layer;
# finding a layer by index is error-prone.
# index=1 is the ReLU activation after the first conv layer.
layer1_model = Model(inputs=model.input, outputs=model.get_layer(index=1).output)

# Use this model's prediction as the intermediate output
layer1_output = layer1_model.predict(X_test[0:1])  # (1, 28, 28, 32) for channels_last
image_array = deprocess_image(layer1_output)        # (32, 28, 28, 1)
# Already uint8 in 0-255; multiplying by 255 again would overflow uint8 and scramble the values
ret = image_array[..., 0]                           # (32, 28, 28)
print("shape of ret", ret.shape)

fig = plt.figure()
for i in range(0, 8):
    for j in range(0, 4):
        ax0 = fig.add_subplot(8, 4, i * 4 + j + 1)
        ax0.imshow(ret[i * 4 + j], cmap='gray')
        ax0.axis('off')
        # ax0.set_title(str(i * 4 + j))
plt.show()
```
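Instead of 32 separate subplots, the feature maps can also be tiled into one mosaic and shown with a single `imshow` call. A minimal NumPy sketch, assuming a channels_last activation array of shape (1, rows, cols, filters) such as the result of the sub-model's `predict` call; the scaling mirrors `deprocess_image` (multiply by 255 and clip), while the function name and the 1-pixel gap are illustrative choices.

```python
import numpy as np

def tile_feature_maps(activations, n_rows, n_cols, pad=1):
    """Tile a (1, H, W, C) channels_last activation array into one 2D uint8 image."""
    maps = np.moveaxis(activations[0], -1, 0)               # (C, H, W)
    maps = np.clip(maps * 255.0, 0, 255).astype(np.uint8)  # same scaling as deprocess_image
    c, h, w = maps.shape
    if c > n_rows * n_cols:
        raise ValueError("grid too small for the number of feature maps")
    grid = np.zeros((n_rows * (h + pad) - pad, n_cols * (w + pad) - pad), dtype=np.uint8)
    for k in range(c):
        r, col = divmod(k, n_cols)  # row-major placement, like the 8x4 subplot loop
        top, left = r * (h + pad), col * (w + pad)
        grid[top:top + h, left:left + w] = maps[k]
    return grid

# Example with fake activations: only feature map 5 is "on"
acts = np.zeros((1, 28, 28, 32), dtype=np.float32)
acts[0, :, :, 5] = 1.0
grid = tile_feature_maps(acts, n_rows=8, n_cols=4)
print(grid.shape)  # (231, 115)
# To display: plt.imshow(grid, cmap='gray'); plt.axis('off'); plt.show()
```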

Thanks to marsjhao for the first script; see the blog post at https://blog.csdn.net/marsjhao/article/details/68490105