This article implements a simple face-beautification filter (skin smoothing and eye enlargement) with OpenCV in Python.

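1 Skin smoothing

1.1 Guided filter

Skin smoothing is done with a guided filter. For an introduction to guided filtering, see my other article, "Guided Filtering and Its OpenCV Python Implementation" (导向滤波与opencv python实现).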
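For reference, the guided filter fits a local linear model q = a·I + b in every window and then averages the coefficients. Below is a minimal NumPy sketch of the standard guided filter for a single-channel float image in [0, 1]. It is an illustration, not the author's implementation. The helper names `box_mean` and `guided_filter_gray` are hypothetical. `r` is a window radius (window size 2r + 1), not the window size passed to `cv2.blur` in the full code. Borders use edge replication, which differs from `cv2.blur`'s default.

```python
import numpy as np


def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window, computed with an integral image.

    Borders use edge-replicate padding (an assumption of this sketch).
    """
    h, w = x.shape
    k = 2 * r + 1
    pad = np.pad(x.astype(np.float64), r, mode="edge")
    # Integral image with a leading row and column of zeros
    s = np.zeros((pad.shape[0] + 1, pad.shape[1] + 1))
    s[1:, 1:] = pad.cumsum(axis=0).cumsum(axis=1)
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def guided_filter_gray(I, p, r, eps):
    """Guided filter for 2-D float arrays I (guide) and p (input)."""
    mean_I = box_mean(I, r)
    mean_p = box_mean(p, r)
    var_I = box_mean(I * I, r) - mean_I * mean_I
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    a = cov_Ip / (var_I + eps)   # near 1 at strong edges, near 0 in flat areas
    b = mean_p - a * mean_I
    return box_mean(a, r) * I + box_mean(b, r)
```

With I = p (self-guided, as in `mopi`), `eps` sets the trade-off. Regions whose local variance is well below `eps` are flattened, while edges whose variance is well above `eps` are preserved. A typical call is `guided_filter_gray(gray, gray, 5, 1e-3)` on a grayscale image scaled to [0, 1].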

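1.2 Elliptical skin-color model

First, the skin region is extracted with an elliptical skin-color model in YCrCb space, which yields a mask. Bitwise operations then combine the filtered image with the original through this mask. Only the skin is smoothed, and the background is left untouched.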

```python
import cv2
import numpy as np


def YCrCb_ellipse_model(img):
    # Lookup table over (Cr, Cb): entries inside the filled ellipse count as skin
    skinCrCbHist = np.zeros((256, 256), dtype=np.uint8)
    cv2.ellipse(skinCrCbHist, (113, 155), (23, 25), 43, 0, 360, (255, 255, 255), -1)  # draw a filled ellipse
    YCrCb = cv2.cvtColor(img, cv2.COLOR_BGR2YCR_CB)  # convert to YCrCb space
    (Y, Cr, Cb) = cv2.split(YCrCb)  # split into the Y, Cr and Cb channels
    # Mask: 255 where (Cr, Cb) lies inside the ellipse, 0 elsewhere.
    # Vectorized equivalent of looping over every pixel and testing skinCrCbHist[Cr[i][j], Cb[i][j]] > 0
    skin = skinCrCbHist[Cr, Cb]
    res = cv2.bitwise_and(img, img, mask=skin)
    return skin, res
```
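The lookup table is easier to follow when its math is written out. For 8-bit images, OpenCV converts BGR to YCrCb as follows:

- Y = 0.299R + 0.587G + 0.114B
- Cr = (R − Y)·0.713 + 128
- Cb = (B − Y)·0.564 + 128

The filled ellipse is then the set of (Cb, Cr) points inside a rotated ellipse, with Cb as the column (x) axis and Cr as the row (y) axis. The sketch below evaluates that inequality analytically. `is_skin_bgr` is a hypothetical helper, and pixels right on the boundary may disagree with OpenCV's rasterized ellipse.

```python
import math


def is_skin_bgr(b, g, r, center=(113, 155), axes=(23, 25), angle_deg=43):
    """Analytic version of the elliptical skin test for one BGR pixel (0-255)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128
    cb = (b - y) * 0.564 + 128
    # In the lookup table, Cb is the x (column) axis and Cr the y (row) axis
    dx, dy = cb - center[0], cr - center[1]
    t = math.radians(angle_deg)
    # Rotate the offset into the ellipse's own axes, then apply the ellipse equation
    u = dx * math.cos(t) + dy * math.sin(t)
    v = -dx * math.sin(t) + dy * math.cos(t)
    return (u / axes[0]) ** 2 + (v / axes[1]) ** 2 <= 1.0
```

For example, a light skin tone with (B, G, R) = (138, 172, 224) maps to roughly (Cr, Cb) ≈ (157, 102) and lies inside the ellipse. Neutral gray (128, 128, 128) maps to (128, 128) and lies outside it.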

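Next, a morphological opening (an erosion followed by a dilation) is applied to the mask to remove small isolated regions.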
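To see why the opening removes speckles, here is a NumPy-only sketch of a 3×3 erosion followed by a 3×3 dilation. These are the same two operations `mopi` performs with `cv2.erode` and `cv2.dilate`. The helper names are hypothetical, and the edge-replicate padding is an assumption; OpenCV's default border handling differs slightly.

```python
import numpy as np


def _neighbourhoods(mask):
    """Stack the 9 shifted copies of mask that make up each pixel's 3x3 window."""
    h, w = mask.shape
    p = np.pad(mask, 1, mode="edge")
    return np.stack([p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def open3x3(mask):
    eroded = _neighbourhoods(mask).min(axis=0)   # erosion: keep a pixel only if its whole window is set
    return _neighbourhoods(eroded).max(axis=0)   # dilation: grow the surviving regions back


mask = np.zeros((10, 10), dtype=np.uint8)
mask[1, 1] = 255        # an isolated false-positive pixel
mask[4:9, 4:9] = 255    # a genuine 5x5 skin region
opened = open3x3(mask)
```

The erosion removes the isolated pixel, and the dilation cannot bring it back. The 5×5 block shrinks to 3×3 under erosion and is restored to 5×5 by dilation.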

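```python
import cv2
import numpy as np

import ellipse_skin_model
# guided_filter is defined alongside this function in beautify.py (see the complete code)


def mopi(img):
    skin, _ = ellipse_skin_model.YCrCb_ellipse_model(img)  # skin mask
    # Opening: erode, then dilate, to remove small speckles from the mask
    kernel = np.ones((3, 3), dtype=np.uint8)
    skin = cv2.erode(skin, kernel=kernel)
    skin = cv2.dilate(skin, kernel=kernel)
    # Self-guided filtering of the image scaled to [0, 1]
    img1 = guided_filter(img / 255.0, img / 255.0, 10, eps=0.001) * 255
    # Clip before casting: the filter output can overshoot [0, 255] slightly
    img1 = np.clip(img1, 0, 255).astype(np.uint8)
    img1 = cv2.bitwise_and(img1, img1, mask=skin)  # keep only the smoothed skin
    skin = cv2.bitwise_not(skin)
    img1 = cv2.add(img1, cv2.bitwise_and(img, img, mask=skin))  # add back the untouched background
    return img1
```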
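The last OpenCV calls (two `bitwise_and`s, a `bitwise_not` and an `add`) pick each pixel from either the smoothed image or the original, depending on the mask. Here is a minimal NumPy sketch of the same composition, assuming a binary 0/255 mask like the one produced above (`composite` is a hypothetical name):

```python
import numpy as np


def composite(smoothed, original, mask):
    """Take smoothed pixels where mask is 255 and original pixels where it is 0."""
    return np.where(mask[..., None] > 0, smoothed, original)
```

With a strictly binary mask, the two masked images never overlap. `cv2.add` therefore cannot saturate, and the result equals this `np.where` selection.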

The result is shown in the figure below.

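2 Facial keypoint detection

Eye enlargement, face slimming and similar operations first need the facial keypoints, because the keypoints define where the coordinate transformations are applied.

Here, Google's MediaPipe is used for detection. dlib would be the more usual choice, but I spent an hour trying to install it without success...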

```python
import cv2
import mediapipe as mp

mp_face_detection = mp.solutions.face_detection


def get_face_key_point(img):
    """Return the pixel coordinates (x, y) of both eyes of the first detected face, or None."""
    with mp_face_detection.FaceDetection(model_selection=1, min_detection_confidence=0.5) as face_detection:
        # MediaPipe expects RGB input; OpenCV loads images as BGR
        results = face_detection.process(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
        if not results.detections:
            return None
        h, w = img.shape[:2]
        detection = results.detections[0]  # only the first face is used
        left_eye = mp_face_detection.get_key_point(detection, mp_face_detection.FaceKeyPoint.LEFT_EYE)
        right_eye = mp_face_detection.get_key_point(detection, mp_face_detection.FaceKeyPoint.RIGHT_EYE)
        # Keypoints are normalized to [0, 1]; scale them to pixel coordinates
        left_eye_pos = (int(left_eye.x * w), int(left_eye.y * h))
        right_eye_pos = (int(right_eye.x * w), int(right_eye.y * h))
        return left_eye_pos, right_eye_pos
```
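MediaPipe returns keypoints normalized by the image width and height, so converting them to pixels is a multiplication. Points near the image border may land slightly outside [0, 1], so a defensive conversion clamps the result to the image. `to_pixel` below is a hypothetical helper, not part of MediaPipe.

```python
def to_pixel(norm_x, norm_y, width, height):
    """Convert normalized (x, y) to integer pixel coordinates inside the image."""
    x = min(max(int(norm_x * width), 0), width - 1)
    y = min(max(int(norm_y * height), 0), height - 1)
    return x, y
```

Note that x pairs with the width and y with the height. Mixing them up is an easy mistake when the image shape is unpacked as (rows, columns).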

3 Eye enlargement

3.1 Principle

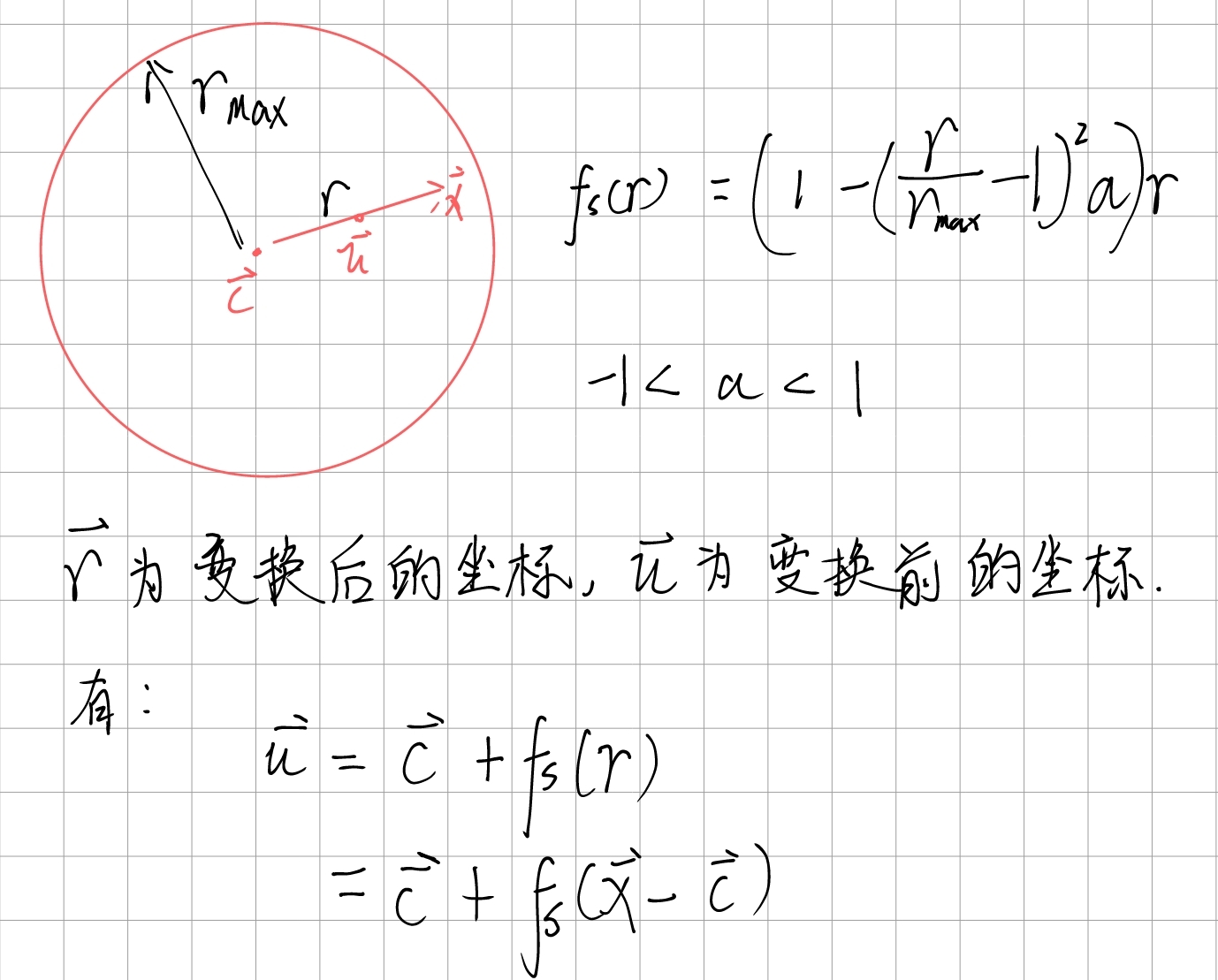

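This uses the local scaling algorithm from the paper Interactive Image Warping.

Local scaling formula: for an output pixel at distance $r$ from the center of a circle of radius $r_{max}$,

$$f_s(r) = \left(1 - \left(\frac{r}{r_{max}} - 1\right)^2 a\right) r, \qquad 0 \le r \le r_{max}$$

Here $f_s(r)$ is the distance from the center of the source point that this output pixel samples.

From the formula, when $r = r_{max}$ the expression equals $r$. Points on the boundary of the circle do not move, so the warped region joins the rest of the image without a jump.

If $a > 0$, $r$ is multiplied by a factor between $1 - a$ and $1$, which lies in $(0, 1]$ for $0 < a < 1$. Each output pixel samples a source point closer to the center, so the content appears pushed outward from the center. This is local enlargement.

If $a < 0$, $r$ is multiplied by a factor between $1$ and $1 - a$, that is, a factor of at least 1. Each output pixel samples a source point farther from the center, so the content appears pulled inward toward the center. This is local shrinking.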
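As a numeric check of the two cases, the hypothetical helper below evaluates the local scaling formula $f_s(r) = (1 - (r/r_{max} - 1)^2 a)\,r$ directly. It uses $r_{max} = 40$ and $a = 0.5$ (the GUI defaults), plus $a = -0.5$ for comparison.

```python
def source_radius(r, r_max, a):
    """f_s(r): distance from the center of the source point sampled at output radius r."""
    return (1 - (r / r_max - 1) ** 2 * a) * r


for r in (0, 10, 20, 30, 40):
    print(r, source_radius(r, 40, 0.5), source_radius(r, 40, -0.5))
```

With $a = 0.5$, the output pixel 20 px from the center samples the source at 17.5 px (enlargement). With $a = -0.5$, it samples at 22.5 px (shrinking). At $r = 40$ both calls return 40, so the edge of the circle stays put.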

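Applying this formula to every pixel inside the circle maps each output-pixel coordinate to a source-image coordinate. The source coordinate is generally not an integer, so the output pixel value is computed by bilinear interpolation.

3.2 Code

Local scaling function: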

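```python
import math

import numpy as np


def Local_scaling_warps(img, cx, cy, r_max, a):
    """Local scaling warp centered at (cx, cy): a > 0 enlarges, a < 0 shrinks."""
    img1 = np.copy(img)
    h, w = img.shape[:2]
    # Visit only rows and columns that lie inside both the circle and the image
    for y in range(max(cy - r_max, 0), min(cy + r_max, h - 1) + 1):
        d = int(math.sqrt(r_max ** 2 - (y - cy) ** 2))
        for x in range(max(cx - d, 0), min(cx + d, w - 1) + 1):
            r = math.sqrt((x - cx) ** 2 + (y - cy) ** 2)  # distance to the center
            f_s = 1 - ((r / r_max - 1) ** 2) * a
            # Source coordinate sampled by this output pixel (computed once, not per channel)
            ux, uy = cx + f_s * (x - cx), cy + f_s * (y - cy)
            for c in range(img.shape[2]):
                img1[y, x, c] = bilinear_interpolation(img, (ux, uy), c)
    return img1
```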
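The double Python loop above calls `bilinear_interpolation` once per pixel and channel, which is slow for large radii. As an alternative (a swapped-in vectorization, not the author's code), NumPy can apply the same mapping and bilinear sampling to the whole image at once. This sketch assumes an H×W×C `uint8` image; `local_scaling_warp_np` is a hypothetical name.

```python
import numpy as np


def local_scaling_warp_np(img, cx, cy, r_max, a):
    """Vectorized local scaling warp with bilinear sampling; img is H x W x C uint8."""
    h, w = img.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    dx, dy = xs - cx, ys - cy
    r = np.sqrt(dx ** 2 + dy ** 2)
    inside = r <= r_max
    # Scaling factor f_s inside the circle, identity outside
    f_s = np.where(inside, 1 - (r / r_max - 1) ** 2 * a, 1.0)
    # Source coordinates, clamped so the 2x2 neighbourhood stays in the image
    ux = np.clip(cx + f_s * dx, 0, w - 1)
    uy = np.clip(cy + f_s * dy, 0, h - 1)
    x1 = np.floor(ux).astype(np.intp)
    y1 = np.floor(uy).astype(np.intp)
    x2 = np.minimum(x1 + 1, w - 1)
    y2 = np.minimum(y1 + 1, h - 1)
    fx = (ux - x1)[..., None]
    fy = (uy - y1)[..., None]
    src = img.astype(np.float64)
    top = (1 - fx) * src[y1, x1] + fx * src[y1, x2]
    bottom = (1 - fx) * src[y2, x1] + fx * src[y2, x2]
    out = np.clip(np.rint((1 - fy) * top + fy * bottom), 0, 255).astype(np.uint8)
    out[~inside] = img[~inside]  # pixels outside the circle are copied unchanged
    return out
```

This trades memory (several full-size float arrays) for speed. Cropping to the bounding box of the circle before warping would reduce that cost.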

Bilinear interpolation:

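```python
import math


def bilinear_interpolation(img, vector_u, c):
    """Bilinearly sample channel c of img at the real-valued point (ux, uy)."""
    ux, uy = vector_u
    h, w = img.shape[:2]
    # Clamp to the image so the 2x2 neighbourhood is always valid
    ux = min(max(ux, 0.0), w - 1.0)
    uy = min(max(uy, 0.0), h - 1.0)
    x1, y1 = int(math.floor(ux)), int(math.floor(uy))
    x2, y2 = min(x1 + 1, w - 1), min(y1 + 1, h - 1)
    dx, dy = ux - x1, uy - y1  # fractional offsets in [0, 1)
    # Interpolate along x on rows y1 and y2 (img is indexed [row][column]), then along y
    f_x_y1 = (1 - dx) * float(img[y1, x1, c]) + dx * float(img[y1, x2, c])
    f_x_y2 = (1 - dx) * float(img[y2, x1, c]) + dx * float(img[y2, x2, c])
    f_x_y = (1 - dy) * f_x_y1 + dy * f_x_y2
    return int(round(f_x_y))
```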
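Written out as a single weighted sum, the function computes the following. Here $I(x, y)$ stands for `img[y][x][c]`, $\alpha = u_x - x_1$ and $\beta = u_y - y_1$ are the fractional offsets, and $x_2 = x_1 + 1$, $y_2 = y_1 + 1$:

$$
f(u_x, u_y) = (1-\alpha)(1-\beta)\,I(x_1, y_1) + \alpha(1-\beta)\,I(x_2, y_1) + (1-\alpha)\beta\,I(x_1, y_2) + \alpha\beta\,I(x_2, y_2)
$$

For example, take $\alpha = 0.25$, $\beta = 0.5$ and corner values $I(x_1, y_1) = 0$, $I(x_2, y_1) = 100$, $I(x_1, y_2) = 200$, $I(x_2, y_2) = 255$. The sum is $0 + 12.5 + 75 + 31.875 = 119.375$, which becomes 119 after conversion to an integer.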

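4 A simple GUI

Here, a simple interface is built with PyQt5.

The two sliders below the buttons set the scaling coefficient $a$ and the scaling radius $r_{max}$, respectively.

The left panel shows the original image and the right panel the processed result. The young lady's skin is smoother, and her eyes are larger.

Push the parameters a little further and the result gets rather creepy, haha.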

```python
# -*- coding: utf-8 -*-
# @Time : 2022/10/14 19:05
# @Author : shuoshuo
# @File : main.py
# @Project : 美颜
import sys

import cv2
import numpy as np
from PyQt5.QtCore import Qt
from PyQt5.QtGui import QImage, QPixmap
from PyQt5.QtWidgets import (QApplication, QHBoxLayout, QLabel, QPushButton,
                             QSlider, QVBoxLayout, QWidget)

import beautify
import face_detect


class My_win(QWidget):
    def __init__(self, img):
        super().__init__()
        self.initUI(img)

    def initUI(self, img):
        self.r_max = 40
        self.a = 0.5
        eyes = face_detect.get_face_key_point(img)
        if eyes is None:
            raise RuntimeError("No face detected in the input image")
        self.left_eye_pos, self.right_eye_pos = eyes
        self.img = img.copy()
        self.result = self.img.copy()  # lets "Save" work before any processing

        self.mopi_btn = QPushButton("磨皮", self)     # "Smooth skin"
        self.big_eye_btn = QPushButton("大眼", self)  # "Big eyes"
        self.save_btn = QPushButton("保存", self)     # "Save"

        self.a_slider = QSlider(Qt.Horizontal)
        self.a_slider.setRange(1, 99)
        self.a_slider.setSingleStep(5)
        self.a_slider.setValue(int(self.a * 100))  # start at the default a
        self.a_slider.setToolTip('大眼程度')  # enlargement strength
        self.r_slider = QSlider(Qt.Horizontal)
        self.r_slider.setRange(1, 99)
        self.r_slider.setSingleStep(5)
        self.r_slider.setValue(self.r_max)  # start at the default r_max
        self.r_slider.setToolTip('大眼范围')  # enlargement radius

        # Connect signals after setValue so that the initial values do not trigger a warp
        self.r_slider.valueChanged.connect(self.r_change)
        self.a_slider.valueChanged.connect(self.a_change)
        self.big_eye_btn.clicked.connect(self.big_eye)
        self.mopi_btn.clicked.connect(self.mopi)
        self.save_btn.clicked.connect(self.save)

        self.original_pic = QLabel(self)
        self.result_pic = QLabel(self)

        self.button_box = QHBoxLayout()
        self.button_box.addWidget(self.mopi_btn)
        self.button_box.addWidget(self.big_eye_btn)
        self.button_box.addWidget(self.save_btn)
        self.slider_box = QHBoxLayout()
        self.slider_box.addWidget(self.a_slider)
        self.slider_box.addWidget(self.r_slider)
        self.hbox = QHBoxLayout()
        self.hbox.addStretch(1)
        self.hbox.addWidget(self.original_pic)
        self.hbox.addWidget(self.result_pic)
        self.vbox = QVBoxLayout()
        self.vbox.addStretch(1)
        self.vbox.addLayout(self.button_box)
        self.vbox.addLayout(self.slider_box)
        self.vbox.addLayout(self.hbox)
        self.setLayout(self.vbox)

        self.show_img(img, frame=self.original_pic)
        self.show_img(self.img, frame=self.result_pic)
        self.setWindowTitle("拉闸美颜")
        self.show()

    def show_img(self, img, frame):
        # QImage reads rows of w*3 bytes, so the array must be C-contiguous
        img = np.ascontiguousarray(img)
        h, w = img.shape[:2]
        # rgbSwapped() converts OpenCV's BGR to RGB and returns a copy that owns its data
        image = QImage(img.data, w, h, w * 3, QImage.Format_RGB888).rgbSwapped()
        frame.setPixmap(QPixmap.fromImage(image))

    def big_eye(self):
        self.result = beautify.big_eye(self.img, r_max=self.r_max, a=self.a,
                                       left_eye_pos=self.left_eye_pos,
                                       right_eye_pos=self.right_eye_pos)
        self.result = np.array(self.result, dtype=np.uint8)
        self.show_img(self.result, frame=self.result_pic)

    def mopi(self):
        self.img = beautify.mopi(self.img)
        self.result = self.img
        self.show_img(self.img, frame=self.result_pic)

    def a_change(self):
        self.a = self.a_slider.value() / 100
        self.big_eye()

    def r_change(self):
        self.r_max = self.r_slider.value()
        self.big_eye()

    def save(self):
        cv2.imwrite('output.jpg', self.result)


if __name__ == '__main__':
    app = QApplication(sys.argv)
    img = cv2.imread('img.png')
    if img is None:
        sys.exit("Could not read img.png")
    ex = My_win(img)
    sys.exit(app.exec_())
```
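`show_img` relies on two details that are easy to get wrong. First, OpenCV stores pixels as BGR. Second, `QImage` reads the buffer row by row using the stride passed in (`w * 3` bytes here), which is only correct for a C-contiguous H×W×3 `uint8` array. If you prefer to swap channels in NumPy instead of calling `rgbSwapped()`, the sketch below prepares such a buffer. `to_rgb888` is a hypothetical helper; the returned values correspond to the data, width, height and bytes-per-line arguments used with `QImage.Format_RGB888` above.

```python
import numpy as np


def to_rgb888(bgr):
    """Return (rgb, width, height, bytes_per_line) for an H x W x 3 uint8 BGR image."""
    # Reversing the channel axis gives a strided view; copy it into contiguous memory
    rgb = np.ascontiguousarray(bgr[..., ::-1])
    h, w = rgb.shape[:2]
    return rgb, w, h, rgb.strides[0]
```

A `QImage` built directly on `rgb.data` does not copy the pixels. The array must therefore stay alive while the image is in use, or the image should be copied with `QImage.copy()`.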

5 Complete code

```python
# -*- coding: utf-8 -*-
# @Time : 2022/10/5 22:48
# @Author : shuoshuo
# @File : beautify.py
# @Project : 美颜
import math

import cv2
import numpy as np

import face_detect
import ellipse_skin_model


def bilinear_interpolation(img, vector_u, c):
    """Bilinearly sample channel c of img at the real-valued point (ux, uy)."""
    ux, uy = vector_u
    h, w = img.shape[:2]
    # Clamp to the image so the 2x2 neighbourhood is always valid
    ux = min(max(ux, 0.0), w - 1.0)
    uy = min(max(uy, 0.0), h - 1.0)
    x1, y1 = int(math.floor(ux)), int(math.floor(uy))
    x2, y2 = min(x1 + 1, w - 1), min(y1 + 1, h - 1)
    dx, dy = ux - x1, uy - y1  # fractional offsets in [0, 1)
    f_x_y1 = (1 - dx) * float(img[y1, x1, c]) + dx * float(img[y1, x2, c])
    f_x_y2 = (1 - dx) * float(img[y2, x1, c]) + dx * float(img[y2, x2, c])
    f_x_y = (1 - dy) * f_x_y1 + dy * f_x_y2
    return int(round(f_x_y))


def Local_scaling_warps(img, cx, cy, r_max, a):
    img1 = np.copy(img)
    h, w = img.shape[:2]
    for y in range(max(cy - r_max, 0), min(cy + r_max, h - 1) + 1):
        d = int(math.sqrt(r_max ** 2 - (y - cy) ** 2))
        for x in range(max(cx - d, 0), min(cx + d, w - 1) + 1):
            r = math.sqrt((x - cx) ** 2 + (y - cy) ** 2)  # radius of the current position
            f_s = 1 - ((r / r_max - 1) ** 2) * a
            ux, uy = cx + f_s * (x - cx), cy + f_s * (y - cy)  # source coordinate
            for c in range(img.shape[2]):
                img1[y, x, c] = bilinear_interpolation(img, (ux, uy), c)
    return img1


def big_eye(img, r_max, a, left_eye_pos=None, right_eye_pos=None):
    if left_eye_pos is None or right_eye_pos is None:
        eyes = face_detect.get_face_key_point(img)
        if eyes is None:
            return img.copy()  # no face found: leave the image unchanged
        left_eye_pos, right_eye_pos = eyes
    img = Local_scaling_warps(img, left_eye_pos[0], left_eye_pos[1], r_max=r_max, a=a)
    img = Local_scaling_warps(img, right_eye_pos[0], right_eye_pos[1], r_max=r_max, a=a)
    return img


def guided_filter(I, p, win_size, eps):
    # I (guide) and p (input) must be float images scaled to [0, 1]
    assert I.max() <= 1 and p.max() <= 1
    mean_I = cv2.blur(I, (win_size, win_size))
    mean_p = cv2.blur(p, (win_size, win_size))
    corr_I = cv2.blur(I * I, (win_size, win_size))
    corr_Ip = cv2.blur(I * p, (win_size, win_size))
    var_I = corr_I - mean_I * mean_I
    cov_Ip = corr_Ip - mean_I * mean_p
    a = cov_Ip / (var_I + eps)
    b = mean_p - a * mean_I
    mean_a = cv2.blur(a, (win_size, win_size))
    mean_b = cv2.blur(b, (win_size, win_size))
    q = mean_a * I + mean_b
    return q


def mopi(img):
    skin, _ = ellipse_skin_model.YCrCb_ellipse_model(img)  # skin mask
    # Opening: erode, then dilate, to remove small speckles
    kernel = np.ones((3, 3), dtype=np.uint8)
    skin = cv2.erode(skin, kernel=kernel)
    skin = cv2.dilate(skin, kernel=kernel)
    img1 = guided_filter(img / 255.0, img / 255.0, 10, eps=0.001) * 255
    img1 = np.clip(img1, 0, 255).astype(np.uint8)  # clip before casting to avoid wrap-around
    img1 = cv2.bitwise_and(img1, img1, mask=skin)  # keep only the smoothed skin
    skin = cv2.bitwise_not(skin)
    img1 = cv2.add(img1, cv2.bitwise_and(img, img, mask=skin))  # add back the untouched background
    return img1


if __name__ == '__main__':
    img = cv2.imread('img.png')
    img1 = mopi(img)
    result = big_eye(img1, r_max=40, a=0.8)
    cv2.imwrite('output.jpg', result)
```

```python
# -*- coding: utf-8 -*-
# @Time : 2022/10/18 21:54
# @Author : shuoshuo
# @File : ellipse_skin_model.py
# @Project : 美颜
import numpy as np
import cv2


def YCrCb_ellipse_model(img):
    # Lookup table over (Cr, Cb): entries inside the filled ellipse count as skin
    skinCrCbHist = np.zeros((256, 256), dtype=np.uint8)
    cv2.ellipse(skinCrCbHist, (113, 155), (23, 25), 43, 0, 360, (255, 255, 255), -1)  # draw a filled ellipse
    YCrCb = cv2.cvtColor(img, cv2.COLOR_BGR2YCR_CB)  # convert to YCrCb space
    (Y, Cr, Cb) = cv2.split(YCrCb)  # split into the Y, Cr and Cb channels
    # Mask: 255 where (Cr, Cb) lies inside the ellipse, 0 elsewhere (vectorized per-pixel lookup)
    skin = skinCrCbHist[Cr, Cb]
    res = cv2.bitwise_and(img, img, mask=skin)
    return skin, res
```

```python
# face_detect.py
import cv2
import mediapipe as mp

mp_face_detection = mp.solutions.face_detection


def get_face_key_point(img):
    """Return the pixel coordinates (x, y) of both eyes of the first detected face, or None."""
    with mp_face_detection.FaceDetection(model_selection=1, min_detection_confidence=0.5) as face_detection:
        # MediaPipe expects RGB input; OpenCV loads images as BGR
        results = face_detection.process(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
        if not results.detections:
            return None
        h, w = img.shape[:2]
        detection = results.detections[0]  # only the first face is used
        left_eye = mp_face_detection.get_key_point(detection, mp_face_detection.FaceKeyPoint.LEFT_EYE)
        right_eye = mp_face_detection.get_key_point(detection, mp_face_detection.FaceKeyPoint.RIGHT_EYE)
        # Keypoints are normalized to [0, 1]; scale them to pixel coordinates
        left_eye_pos = (int(left_eye.x * w), int(left_eye.y * h))
        right_eye_pos = (int(right_eye.x * w), int(right_eye.y * h))
        return left_eye_pos, right_eye_pos
```
