Introduction

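Every programmer knows the words "process" and "thread", yet describing them precisely is harder than it sounds, and it is not obvious where to begin. So this article uses one concrete question to walk through the concepts: after you type the path of a self-compiled executable such as a.out into a terminal, how does that program actually start running on a Linux system? If that question interests you, read on.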

My own understanding of processes and threads in the Linux kernel went through the following stages:

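- Knowing the two basic concepts: some theory about the resources a process or thread holds and how it is scheduled; that a process = program + data; and scattered notions such as the PC register, instructions, stack frames, and context switches.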

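- Knowing that processes and threads are both independently schedulable units; that a process has its own address space, while threads share their process's address space but each have their own stack; knowing the POSIX threads (pthread) interface and how to create threads with it.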

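- Knowing that a thread on Linux is essentially a process, and that threads fall into kernel threads, schedulable threads, and user-space threads. Kernel threads are daemon threads started by the kernel to do kernel-side work, such as kswapd and the writeback flusher threads; schedulable threads are threads the kernel scheduler is aware of, that is, ordinary native threads; user-space threads, such as goroutines or gevent greenlets, are invisible to the kernel scheduler and need their own scheduling policy.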

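- Knowing how processes and threads are scheduled in the kernel: the real-time scheduler, the Completely Fair Scheduler (CFS), and related policies; knowing that a schedulable thread has two stacks, a kernel stack and a user-mode stack, and that on a system call such as write or mmap execution switches from the user stack to the kernel stack so the kernel can run privileged code.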

- Knowing how the operating system gets a process running after a command is typed into bash in a terminal, and how threads are created.

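- Knowing how instructions are loaded into the CPU and begin executing, and how registers are saved and restored on a context switch. (I have not reached this stage, and unless you do kernel development there is little need to.)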

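So let's combine the Linux source code with some man pages to look more closely at how processes and threads are implemented, and use the example from the introduction to see how a Linux user process and its threads are created and run.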

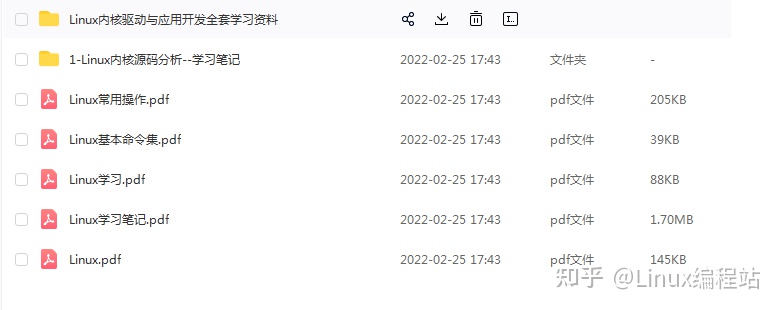

Processes & Threads

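Two data structures are central to how the kernel manages tasks: task_struct, which describes a process or thread, and mm_struct, which describes a process's virtual address space. The relevant kernel code follows.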

struct task_struct {

#ifdef CONFIG_THREAD_INFO_IN_TASK

/*

* For reasons of header soup (see current_thread_info()), this

* must be the first element of task_struct.

*/

struct thread_info thread_info;

#endif

/* -1 unrunnable, 0 runnable, >0 stopped: */

volatile long state; // run state

void *stack; // address of the kernel stack

atomic_t usage;

/* Per task flags (PF_*), defined further below: */

unsigned int flags;

unsigned int ptrace;

#ifdef CONFIG_SMP // scheduling related

struct llist_node wake_entry;

int on_cpu; // whether the task is currently running on a CPU

#ifdef CONFIG_THREAD_INFO_IN_TASK

/* Current CPU: */

unsigned int cpu; // the CPU the task is assigned to

...

#endif

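// scheduling related

int on_rq; // whether the task is on a runqueue (ready)

int prio; // priority

int static_prio; // static priority

int normal_prio; // normal priority

unsigned int rt_priority; // real-time priority

// the priorities above feed a simple algorithm that computes the task's weight, which determines its time slice

const struct sched_class *sched_class; // scheduling class, e.g. real-time or completely fair

struct sched_entity se; // associated scheduling entity: the unit the completely fair scheduler actually schedules

struct sched_rt_entity rt; // associated real-time scheduling entity: the unit the real-time scheduler actually schedules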

#ifdef CONFIG_CGROUP_SCHED

struct task_group *sched_task_group; // group scheduling (cgroup related)

#endif

struct sched_dl_entity dl; // scheduling entity of the deadline scheduler

...

struct mm_struct *mm; // virtual memory descriptor

struct mm_struct *active_mm;

...

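pid_t pid; // task ID (one per thread)

pid_t tgid; // thread group ID (the process ID seen from user space)

/* Real parent process: */

struct task_struct __rcu *real_parent; // the real parent process (needed when ptrace is in use)

/* Recipient of SIGCHLD, wait4() reports: */

struct task_struct __rcu *parent; // current parent process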

/*

* Children/sibling form the list of natural children:

*/

struct list_head children; // children (processes/threads forked by this task)

struct list_head sibling; // sibling processes

struct task_struct *group_leader;

/*

* 'ptraced' is the list of tasks this task is using ptrace() on.

*

* This includes both natural children and PTRACE_ATTACH targets.

* 'ptrace_entry' is this task's link on the p->parent->ptraced list.

*/

struct list_head ptraced;

struct list_head ptrace_entry;

/* PID/PID hash table linkage. */ // thread-related structures

struct pid *thread_pid;

struct hlist_node pid_links[PIDTYPE_MAX];

struct list_head thread_group;

struct list_head thread_node;

struct completion *vfork_done;

/* CLONE_CHILD_SETTID: */

int __user *set_child_tid;

/* CLONE_CHILD_CLEARTID: */

int __user *clear_child_tid;

u64 utime; // CPU time spent in user mode

u64 stime; // CPU time spent in kernel mode

...

/* Context switch counts: */

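unsigned long nvcsw; // voluntary context switches

unsigned long nivcsw; // involuntary context switches

/* Monotonic time in nsecs: */

u64 start_time; // creation time (monotonic clock)

/* Boot based time in nsecs: */

u64 real_start_time; // creation time (boot-based clock)

char comm[TASK_COMM_LEN]; // executable name, excluding the path

struct nameidata *nameidata; // helper structure for path lookup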

...

/* Filesystem information: */

struct fs_struct *fs; // filesystem information: root directory and current working directory

/* Open file information: */

struct files_struct *files; // open file descriptors

/* Namespaces: */

struct nsproxy *nsproxy;

/* Signal handlers: */

struct signal_struct *signal; // signal state shared by the thread group

struct sighand_struct *sighand; // signal handlers

...

/* CPU-specific state of this task: */

struct thread_struct thread; // architecture-specific thread state

};

struct mm_struct {

struct {

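... // data structures for the virtual address space (it is fairly complex, so it is not covered in detail here)

unsigned long task_size; /* size of task vm space */

unsigned long highest_vm_end; /* highest vma end address */

pgd_t * pgd; // page global directory (page tables)

atomic_t mm_users; // number of users sharing the user address space (threads share one mm_struct on Linux)

atomic_t mm_count; // number of references to this mm_struct (all mm_users together count as one)

#ifdef CONFIG_MMU

atomic_long_t pgtables_bytes; /* PTE page table pages: memory used by page tables, in bytes */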

#endif

int map_count; /* number of VMAs */

spinlock_t page_table_lock; /* Protects page tables and some

* counters

*/

struct rw_semaphore mmap_sem;

struct list_head mmlist; /* List of maybe swapped mm's. These

* are globally strung together off

* init_mm.mmlist, and are protected

* by mmlist_lock

*/

... // statistics

unsigned long stack_vm; /* VM_STACK */

unsigned long def_flags;

spinlock_t arg_lock; /* protect the below fields */

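unsigned long start_code, end_code, start_data, end_data; // bounds of the code segment and the data segment

unsigned long start_brk, brk, start_stack; // heap start, current heap top (program break), start of the process stack

unsigned long arg_start, arg_end, env_start, env_end; // bounds of the argument area and the environment-variable area

...

struct linux_binfmt *binfmt; // binary format handler used when the process was started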

...

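};

The process is the kernel's original scheduling concept; it has existed as long as the kernel has and is the entity that gets scheduled. Every process is identified by a unique process ID, and it has a separate ID in each PID namespace it is visible in; process IDs play a key role in IPC. A process owns resources such as a virtual address space, a kernel stack, a user-mode stack, a process group, namespaces, open files, and signals. Each process also carries scheduling data, chiefly its priority and its cgroup, which determine how much CPU time it receives while it runs. A process can be scheduled by different schedulers, mainly the Completely Fair Scheduler, the real-time scheduler, and the deadline scheduler. Conceptually, a process is in one of three states: blocked, ready, or running.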

When you run ps aux, however, you see many more state codes. Their meanings are:

PROCESS STATE CODES

Here are the different values that the s, stat and state output

specifiers (header "STAT" or "S") will display to describe the state of

a process:

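D uninterruptible sleep (usually IO) // uninterruptible blocking: IO involves device operations, and making it interruptible is complicated, so the task usually enters the D state to avoid races. (It does not handle signals and cannot be killed while in this state.)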

R running or runnable (on run queue)

S interruptible sleep (waiting for an event to complete) // interruptible blocking (can receive signals and can be killed)

T stopped by job control signal

t stopped by debugger during the tracing

W paging (not valid since the 2.6.xx kernel)

X dead (should never be seen)

Z defunct ("zombie") process, terminated but not reaped by

its parent

For BSD formats and when the stat keyword is used, additional

characters may be displayed:

< high-priority (not nice to other users)

N low-priority (nice to other users)

L has pages locked into memory (for real-time and custom IO)

s is a session leader

l is multi-threaded (using CLONE_THREAD, like NPTL pthreads

do)

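+ is in the foreground process group

A thread, by contrast, is a general concept defined by a standard rather than one that originated in the operating system; many operating systems were designed without threads at first. On Linux the notion comes from pthreads, the POSIX standard interface. Compared with plain processes, it specifies a scheduling model with lighter-weight resource allocation, easier communication, and closer cooperation, and it provides a set of interfaces for manipulating threads and for communication between them. Linux was modeled on Unix and was not designed with this in mind, so it had no dedicated data structure for threads, and the only schedulable structure was task_struct. The first pthread implementation on Linux was a library called LinuxThreads, but its conformance was poor because several kernel design choices made the standard's requirements hard to meet. To support pthreads better, the kernel changed the relevant data structures in task_struct and how they are managed, which led to the NPTL library. NPTL conforms far more closely to the POSIX requirements. Because a thread is still a task_struct underneath, a Linux thread is also called an LWP (light-weight process).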

LinuxThreads

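How it works:

A manager process creates the threads, and every thread operation, including creation and destruction, goes through it. Each thread is in essence an independent process, so many POSIX requirements cannot be met and the design has serious limitations.

Limitations of LinuxThreads:

- A single manager process coordinates all threads, so creating and destroying threads is expensive (the manager process is a bottleneck).

- The manager process itself needs context switches and can run on only one CPU at a time, so scalability and performance are poor.

- It does not conform to POSIX: each thread has its own process ID; signals cannot be delivered at process level; and user and group ID information is not shared by all threads of a process.

- The number of processes is capped, and this threading model can easily hit that cap.

- ps lists every thread.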

NPTL

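To conform to POSIX more closely, a new thread library, the Native POSIX Thread Library (NPTL), was developed on top of the Linux 2.6 kernel. It removes many of the LinuxThreads limitations, conforms to the POSIX standard, and greatly improves performance and stability.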

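From kernel 2.4 onward, creating a task_struct accepts a much richer set of parameters, which makes life easier for the library above and makes it possible to drop the manager process. The library can create tasks with different parameters, combined with a few other mechanisms, to implement threads and processes separately. With the thread-group concept in place, the relationships among threads and signal handling can be managed properly, which removes most of the LinuxThreads restrictions.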

How it works:

Linux exposes two main system calls for creating a process, fork and clone. Here are their prototypes:

pid_t fork(void);

int clone(int (*fn)(void *), void *stack, int flags, void *arg, ...

/* pid_t *parent_tid, void *tls, pid_t *child_tid */ );

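fork can only create a process because it takes no parameters that could make a difference. The flags argument of clone, in contrast, offers rich configuration and is the key to distinguishing a process from a thread. It lets a user-space library implement a thread library well, which makes the clone system call the natural interface for creating threads.

- Certain flags make the new task_struct share resources with its parent task_struct: the memory space, open files, the IO context, namespaces, and cgroups.

- A thread-group data structure identifies all threads of the same process, which makes process-level signal delivery possible.

- The pid and tgid fields distinguish processes from threads.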

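Let's look at the clone man page. clone uses flags to choose which resources are shared with the parent, for example CLONE_FILES for open files, CLONE_VM for the memory address space, and CLONE_FS or CLONE_IO for filesystem information and the IO context. The new task still has its own execution stack, passed as stack, and starts running at the function fn. The CLONE_NEW* flags, such as CLONE_NEWIPC, go the other way and give the child resources isolated from the parent (this is the basis of container namespace isolation).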

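By parameterizing the clone system call, NPTL can implement a POSIX-conforming thread library. The 2.6 kernel also introduced the futex system call, which lets threads synchronize from user space more efficiently.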

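As clone's signature shows, the caller passes the address of the function to run, fn, and also a stack address, stack. The stack of a thread created through clone therefore has to be allocated by user space. With pthreads the application does not do this itself: glibc allocates the stack and also reclaims it after the thread exits. The allocation in glibc looks like this: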

static int

allocate_stack (const struct pthread_attr *attr, struct pthread **pdp,

ALLOCATE_STACK_PARMS)

{

/* Get memory for the stack. */

if (__glibc_unlikely (attr->flags & ATTR_FLAG_STACKADDR))

{

uintptr_t adj;

char *stackaddr = (char *) attr->stackaddr;

/* Assume the same layout as the _STACK_GROWS_DOWN case, with struct

pthread at the top of the stack block. Later we adjust the guard

location and stack address to match the _STACK_GROWS_UP case. */

if (_STACK_GROWS_UP)

stackaddr += attr->stacksize;

/* If the user also specified the size of the stack make sure it

is large enough. */

if (attr->stacksize != 0

&& attr->stacksize < (__static_tls_size + MINIMAL_REST_STACK))

return EINVAL;

/* The user provided stack memory needs to be cleared. */

memset (pd, '\0', sizeof (struct pthread));

... // init pd

} else {

void *mem;

const int prot = (PROT_READ | PROT_WRITE

| ((GL(dl_stack_flags) & PF_X) ? PROT_EXEC : 0));

...

mem = mmap (NULL, size, prot,

MAP_PRIVATE | MAP_ANONYMOUS | MAP_STACK, -1, 0);

...

#if TLS_TCB_AT_TP

pd = (struct pthread *) ((char *) mem + size - coloring) - 1;

#elif TLS_DTV_AT_TP

pd = (struct pthread *) ((((uintptr_t) mem + size - coloring

- __static_tls_size)

& ~__static_tls_align_m1)

- TLS_PRE_TCB_SIZE);

#endif

}

}

As the code shows, glibc uses mmap to request an anonymous private mapping flagged MAP_STACK and uses it as the thread's stack. With that, a schedulable native thread has been created on Linux.

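So the key to understanding thread creation on Linux is the implementation of clone. fork and clone both end up in the kernel function _do_fork; the only difference is that fork passes default arguments while clone allows very detailed configuration. Here is the implementation of _do_fork: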

/*

* Ok, this is the main fork-routine.

*

* It copies the process, and if successful kick-starts

* it and waits for it to finish using the VM if required.

*/

long _do_fork(unsigned long clone_flags,

unsigned long stack_start,

unsigned long stack_size,

int __user *parent_tidptr,

int __user *child_tidptr,

unsigned long tls)

{

struct completion vfork; // completion the parent waits on for vfork (the child borrows the parent's address space instead of copying page tables)

struct pid *pid;

struct task_struct *p;

int trace = 0;

long nr;

/*

* Determine whether and which event to report to ptracer. When

* called from kernel_thread or CLONE_UNTRACED is explicitly

* requested, no event is reported; otherwise, report if the event

* for the type of forking is enabled.

*/

if (!(clone_flags & CLONE_UNTRACED)) { // ptrace related

if (clone_flags & CLONE_VFORK)

trace = PTRACE_EVENT_VFORK;

else if ((clone_flags & CSIGNAL) != SIGCHLD)

trace = PTRACE_EVENT_CLONE;

else

trace = PTRACE_EVENT_FORK;

if (likely(!ptrace_event_enabled(current, trace)))

trace = 0;

}

p = copy_process(clone_flags, stack_start, stack_size,

child_tidptr, NULL, trace, tls, NUMA_NO_NODE); // copy the task_struct, i.e. create a new process/thread

add_latent_entropy();

if (IS_ERR(p))

return PTR_ERR(p);

/*

* Do this prior waking up the new thread - the thread pointer

* might get invalid after that point, if the thread exits quickly.

*/

trace_sched_process_fork(current, p);

pid = get_task_pid(p, PIDTYPE_PID);

nr = pid_vnr(pid);

if (clone_flags & CLONE_PARENT_SETTID)

put_user(nr, parent_tidptr);

if (clone_flags & CLONE_VFORK) { // special handling for vfork

p->vfork_done = &vfork;

init_completion(&vfork);

get_task_struct(p);

}

wake_up_new_task(p); // wake the task: put it on the runqueue

/* forking complete and child started to run, tell ptracer */

if (unlikely(trace))

ptrace_event_pid(trace, pid);

if (clone_flags & CLONE_VFORK) {

if (!wait_for_vfork_done(p, &vfork))

ptrace_event_pid(PTRACE_EVENT_VFORK_DONE, pid);

}

put_pid(pid); // drop the reference taken by get_task_pid()

return nr;

}

/*

* This creates a new process as a copy of the old one,

* but does not actually start it yet.

*

* It copies the registers, and all the appropriate

* parts of the process environment (as per the clone

* flags). The actual kick-off is left to the caller.

*/

static __latent_entropy struct task_struct *copy_process(

unsigned long clone_flags,

unsigned long stack_start,

unsigned long stack_size,

int __user *child_tidptr,

struct pid *pid,

int trace,

unsigned long tls,

int node)

{

int retval;

struct task_struct *p;

struct multiprocess_signals delayed;

/*

* Don't allow sharing the root directory with processes in a different

* namespace

*/

if ((clone_flags & (CLONE_NEWNS|CLONE_FS)) == (CLONE_NEWNS|CLONE_FS))

return ERR_PTR(-EINVAL);

if ((clone_flags & (CLONE_NEWUSER|CLONE_FS)) == (CLONE_NEWUSER|CLONE_FS))

return ERR_PTR(-EINVAL);

/*

* Thread groups must share signals as well, and detached threads

* can only be started up within the thread group.

*/

if ((clone_flags & CLONE_THREAD) && !(clone_flags & CLONE_SIGHAND))

return ERR_PTR(-EINVAL);

/*

* Shared signal handlers imply shared VM. By way of the above,

* thread groups also imply shared VM. Blocking this case allows

* for various simplifications in other code.

*/

if ((clone_flags & CLONE_SIGHAND) && !(clone_flags & CLONE_VM))

return ERR_PTR(-EINVAL);

/*

* Siblings of global init remain as zombies on exit since they are

* not reaped by their parent (swapper). To solve this and to avoid

* multi-rooted process trees, prevent global and container-inits

* from creating siblings.

*/

if ((clone_flags & CLONE_PARENT) &&

current->signal->flags & SIGNAL_UNKILLABLE)

return ERR_PTR(-EINVAL);

/*

* If the new process will be in a different pid or user namespace

* do not allow it to share a thread group with the forking task.

*/

if (clone_flags & CLONE_THREAD) {

if ((clone_flags & (CLONE_NEWUSER | CLONE_NEWPID)) ||

(task_active_pid_ns(current) !=

current->nsproxy->pid_ns_for_children))

return ERR_PTR(-EINVAL);

}

/*

* Force any signals received before this point to be delivered

* before the fork happens. Collect up signals sent to multiple

* processes that happen during the fork and delay them so that

* they appear to happen after the fork.

*/

sigemptyset(&delayed.signal);

INIT_HLIST_NODE(&delayed.node);

spin_lock_irq(&current->sighand->siglock);

if (!(clone_flags & CLONE_THREAD))

hlist_add_head(&delayed.node, &current->signal->multiprocess);

recalc_sigpending();

spin_unlock_irq(&current->sighand->siglock);

retval = -ERESTARTNOINTR;

if (signal_pending(current))

goto fork_out;

retval = -ENOMEM;

p = dup_task_struct(current, node); // duplicate the task_struct

if (!p)

goto fork_out;

/*

* This _must_ happen before we call free_task(), i.e. before we jump

* to any of the bad_fork_* labels. This is to avoid freeing

* p->set_child_tid which is (ab)used as a kthread's data pointer for

* kernel threads (PF_KTHREAD).

*/

p->set_child_tid = (clone_flags & CLONE_CHILD_SETTID) ? child_tidptr : NULL; // where to store the child's thread ID in user memory

/*

* Clear TID on mm_release()?

*/

p->clear_child_tid = (clone_flags & CLONE_CHILD_CLEARTID) ? child_tidptr : NULL; // whether to clear the thread ID when the thread exits

ftrace_graph_init_task(p);

rt_mutex_init_task(p);

...

retval = -EAGAIN;

if (atomic_read(&p->real_cred->user->processes) >=

task_rlimit(p, RLIMIT_NPROC)) {

if (p->real_cred->user != INIT_USER &&

!capable(CAP_SYS_RESOURCE) && !capable(CAP_SYS_ADMIN))

goto bad_fork_free;

}

current->flags &= ~PF_NPROC_EXCEEDED;

retval = copy_creds(p, clone_flags); // credentials

if (retval < 0)

goto bad_fork_free;

/*

* If multiple threads are within copy_process(), then this check

* triggers too late. This doesn't hurt, the check is only there

* to stop root fork bombs.

*/

retval = -EAGAIN;

if (nr_threads >= max_threads) // thread limit exceeded

goto bad_fork_cleanup_count;

delayacct_tsk_init(p); /* Must remain after dup_task_struct() */

...

// initialize accounting statistics

p->utime = p->stime = p->gtime = 0;

#ifdef CONFIG_ARCH_HAS_SCALED_CPUTIME

p->utimescaled = p->stimescaled = 0;

#endif

prev_cputime_init(&p->prev_cputime);

#ifdef CONFIG_VIRT_CPU_ACCOUNTING_GEN

seqcount_init(&p->vtime.seqcount);

p->vtime.starttime = 0;

p->vtime.state = VTIME_INACTIVE;

#endif

#if defined(SPLIT_RSS_COUNTING)

memset(&p->rss_stat, 0, sizeof(p->rss_stat));

#endif

p->default_timer_slack_ns = current->timer_slack_ns;

#ifdef CONFIG_PSI

p->psi_flags = 0;

#endif

...

/* Perform scheduler related setup. Assign this task to a CPU. */

retval = sched_fork(clone_flags, p); // initialize the scheduling class, priorities and other scheduler state, and assign the task to a CPU (it is not put on a runqueue yet)

if (retval)

goto bad_fork_cleanup_policy;

retval = perf_event_init_task(p);

if (retval)

goto bad_fork_cleanup_policy;

retval = audit_alloc(p);

if (retval)

goto bad_fork_cleanup_perf;

/* copy all the process information */

shm_init_task(p);

retval = security_task_alloc(p, clone_flags);

if (retval)

goto bad_fork_cleanup_audit;

retval = copy_semundo(clone_flags, p);

if (retval)

goto bad_fork_cleanup_security;

retval = copy_files(clone_flags, p); // copy or share the open file table

if (retval)

goto bad_fork_cleanup_semundo;

retval = copy_fs(clone_flags, p); // copy or share filesystem info (root and working directory)

if (retval)

goto bad_fork_cleanup_files;

retval = copy_sighand(clone_flags, p); // copy or share the signal handlers

if (retval)

goto bad_fork_cleanup_fs;

retval = copy_signal(clone_flags, p); // copy or share the signal state

if (retval)

goto bad_fork_cleanup_sighand;

retval = copy_mm(clone_flags, p); // copy or share the address space

if (retval)

goto bad_fork_cleanup_signal;

retval = copy_namespaces(clone_flags, p); // copy or share the namespaces

if (retval)

goto bad_fork_cleanup_mm;

retval = copy_io(clone_flags, p); // copy or share the IO context

if (retval)

goto bad_fork_cleanup_namespaces;

retval = copy_thread_tls(clone_flags, stack_start, stack_size, p, tls); // set up the new task's stack frame and register context

if (retval)

goto bad_fork_cleanup_io;

...

stackleak_task_init(p); // record the kernel stack position for STACKLEAK tracking

if (pid != &init_struct_pid) {

pid = alloc_pid(p->nsproxy->pid_ns_for_children);

if (IS_ERR(pid)) {

retval = PTR_ERR(pid);

goto bad_fork_cleanup_thread;

}

}

...

/* ok, now we should be set up.. */

p->pid = pid_nr(pid);

if (clone_flags & CLONE_THREAD) { // thread: join the caller's thread group; exit_signal = -1 means no exit signal is sent to the parent

p->exit_signal = -1;

p->group_leader = current->group_leader;

p->tgid = current->tgid;

} else {

if (clone_flags & CLONE_PARENT)

p->exit_signal = current->group_leader->exit_signal;

else

p->exit_signal = (clone_flags & CSIGNAL);

p->group_leader = p;

p->tgid = p->pid;

}

... // cgroup related

/*

* From this point on we must avoid any synchronous user-space

* communication until we take the tasklist-lock. In particular, we do

* not want user-space to be able to predict the process start-time by

* stalling fork(2) after we recorded the start_time but before it is

* visible to the system.

*/

p->start_time = ktime_get_ns();

p->real_start_time = ktime_get_boot_ns();

/*

* Make it visible to the rest of the system, but dont wake it up yet.

* Need tasklist lock for parent etc handling!

*/

write_lock_irq(&tasklist_lock);

/* CLONE_PARENT re-uses the old parent */

if (clone_flags & (CLONE_PARENT|CLONE_THREAD)) { // set the parent

p->real_parent = current->real_parent;

p->parent_exec_id = current->parent_exec_id;

} else {

p->real_parent = current;

p->parent_exec_id = current->self_exec_id;

}

klp_copy_process(p);

spin_lock(&current->sighand->siglock);

/*

* Copy seccomp details explicitly here, in case they were changed

* before holding sighand lock.

*/

copy_seccomp(p); // Secure Computing

rseq_fork(p, clone_flags);

/* Don't start children in a dying pid namespace */

if (unlikely(!(ns_of_pid(pid)->pid_allocated & PIDNS_ADDING))) {

retval = -ENOMEM;

goto bad_fork_cancel_cgroup;

}

/* Let kill terminate clone/fork in the middle */

if (fatal_signal_pending(current)) {

retval = -EINTR;

goto bad_fork_cancel_cgroup;

}

... // PID attachment and statistics updates

return p;

...

}

static struct task_struct *dup_task_struct(struct task_struct *orig, int node)

{

struct task_struct *tsk;

unsigned long *stack;

struct vm_struct *stack_vm_area __maybe_unused;

int err;

if (node == NUMA_NO_NODE)

node = tsk_fork_get_node(orig); // pick the NUMA node to allocate from

tsk = alloc_task_struct_node(node); // allocate the task_struct from the slab cache

if (!tsk)

return NULL;

stack = alloc_thread_stack_node(tsk, node); // allocate the kernel stack

if (!stack)

goto free_tsk;

if (memcg_charge_kernel_stack(tsk)) // charge the kernel stack to the memory cgroup

goto free_stack;

stack_vm_area = task_stack_vm_area(tsk); // get the stack's vm area (when the stack is mapped through vmalloc)

err = arch_dup_task_struct(tsk, orig); // copy the original task's data into the new task

/*

* arch_dup_task_struct() clobbers the stack-related fields. Make

* sure they're properly initialized before using any stack-related

* functions again.

*/

tsk->stack = stack; // set the kernel stack address

#ifdef CONFIG_VMAP_STACK

tsk->stack_vm_area = stack_vm_area; // set the kernel stack's vm area

#endif

#ifdef CONFIG_THREAD_INFO_IN_TASK

atomic_set(&tsk->stack_refcount, 1);

#endif

if (err)

goto free_stack;

#ifdef CONFIG_SECCOMP

/*

* We must handle setting up seccomp filters once we're under

* the sighand lock in case orig has changed between now and

* then. Until then, filter must be NULL to avoid messing up

* the usage counts on the error path calling free_task.

*/

tsk->seccomp.filter = NULL;

#endif

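setup_thread_stack(tsk, orig); // initialize the new task's thread info by copying the original's

clear_user_return_notifier(tsk); // clear the user-return notifier

clear_tsk_need_resched(tsk); // clear the reschedule flag

set_task_stack_end_magic(tsk); // write a magic value at the end of the kernel stack to detect overflow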

#ifdef CONFIG_STACKPROTECTOR

tsk->stack_canary = get_random_canary();

#endif

/*

* One for us, one for whoever does the "release_task()" (usually

* parent)

*/

atomic_set(&tsk->usage, 2);

#ifdef CONFIG_BLK_DEV_IO_TRACE

tsk->btrace_seq = 0;

#endif

tsk->splice_pipe = NULL;

tsk->task_frag.page = NULL;

tsk->wake_q.next = NULL;

account_kernel_stack(tsk, 1); // account for the kernel stack

kcov_task_init(tsk);

#ifdef CONFIG_FAULT_INJECTION

tsk->fail_nth = 0;

#endif

#ifdef CONFIG_BLK_CGROUP

tsk->throttle_queue = NULL;

tsk->use_memdelay = 0;

#endif

#ifdef CONFIG_MEMCG

tsk->active_memcg = NULL;

#endif

return tsk;

free_stack:

free_thread_stack(tsk);

free_tsk:

free_task_struct(tsk);

return NULL;

}

/*

* wake_up_new_task - wake up a newly created task for the first time.

*

* This function will do some initial scheduler statistics housekeeping

* that must be done for every newly created context, then puts the task

* on the runqueue and wakes it.

*/

void wake_up_new_task(struct task_struct *p)

{

struct rq_flags rf;

struct rq *rq;

raw_spin_lock_irqsave(&p->pi_lock, rf.flags);

p->state = TASK_RUNNING; // set the state

#ifdef CONFIG_SMP

/*

* Fork balancing, do it here and not earlier because:

* - cpus_allowed can change in the fork path

* - any previously selected CPU might disappear through hotplug

*

* Use __set_task_cpu() to avoid calling sched_class::migrate_task_rq,

* as we're not fully set-up yet.

*/

p->recent_used_cpu = task_cpu(p); // record the most recently used CPU

__set_task_cpu(p, select_task_rq(p, task_cpu(p), SD_BALANCE_FORK, 0));

#endif

rq = __task_rq_lock(p, &rf);

update_rq_clock(rq); // update the runqueue clock

post_init_entity_util_avg(&p->se);

activate_task(rq, p, ENQUEUE_NOCLOCK); // put the task on the runqueue

p->on_rq = TASK_ON_RQ_QUEUED;

trace_sched_wakeup_new(p);

check_preempt_curr(rq, p, WF_FORK); // check whether the new task should preempt the current one

#ifdef CONFIG_SMP

if (p->sched_class->task_woken) {

/*

* Nothing relies on rq->lock after this, so its fine to

* drop it.

*/

rq_unpin_lock(rq, &rf);

p->sched_class->task_woken(rq, p);

rq_repin_lock(rq, &rf);

}

#endif

task_rq_unlock(rq, p, &rf);

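}

Reading the code above, creating a new thread or process mainly involves the following steps: dup_task_struct duplicates the parent's task_struct and allocates a new kernel stack; the copy_* helpers (copy_files, copy_fs, copy_sighand, copy_signal, copy_mm, copy_namespaces, copy_io) copy or share each resource according to clone_flags; copy_thread_tls sets up the stack and register context; alloc_pid assigns a PID and the thread-group fields are filled in; finally wake_up_new_task puts the task on a runqueue.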

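With that, a new process or thread has been created. Its stack frame and register context are already set up, so when the scheduler picks the task it resumes directly from that saved context. The crucial part is deciding from the flags whether to copy or share the address space and open files, setting up the user-space thread stack, and setting up the thread group.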