I. The problem

- The board is an RK3399 development board running Android 8.1.

- When an external USB microphone was first plugged in, it could not be used.

II. Analysis

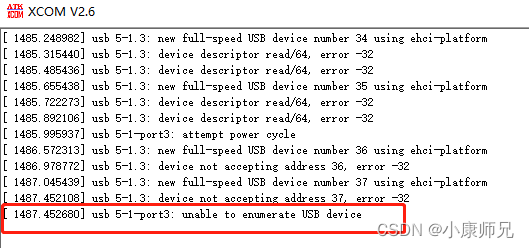

- Check the debug log printed while the USB microphone is plugged in and unplugged.

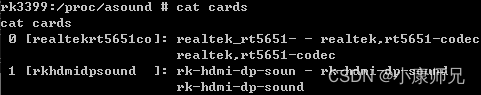

- After plugging in the USB microphone, query the current sound card information over adb:

cat /proc/asound/cards
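The check above can also be done programmatically. The following is a minimal sketch, assuming the usual ALSA layout of `/proc/asound/cards` (index, bracketed ID, driver name, then a description) and assuming that USB sound cards report the driver name `USB-Audio`. The class name `AsoundCards` and the sample text are hypothetical and only illustrate the parsing; they are not output from the board in this article.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class AsoundCards {
    // Matches a header line such as " 1 [Device         ]: USB-Audio - USB PnP Sound Device"
    private static final Pattern CARD_LINE =
            Pattern.compile("^\\s*(\\d+)\\s+\\[([^\\]]*)\\]:\\s*(\\S+)\\s+-\\s+(.*)$");

    /** Returns the driver name of every card header line found in the text. */
    static List<String> driverNames(String cardsText) {
        List<String> drivers = new ArrayList<>();
        for (String line : cardsText.split("\\R")) {
            Matcher m = CARD_LINE.matcher(line);
            if (m.matches()) {
                drivers.add(m.group(3));
            }
        }
        return drivers;
    }

    /** True if at least one listed card uses the USB audio driver. */
    static boolean hasUsbAudio(String cardsText) {
        for (String driver : driverNames(cardsText)) {
            if (driver.equalsIgnoreCase("USB-Audio")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Hypothetical sample text; real output depends on the board and the device.
        String sample =
                " 0 [rockchiprt5640c]: rockchip_rt5640 - rockchip,rt5640-codec\n"
              + "                      rockchip,rt5640-codec\n"
              + " 1 [Device         ]: USB-Audio - USB PnP Sound Device\n"
              + "                      USB PnP Sound Device at usb-xhci-hcd.7.auto-1, full speed\n";
        System.out.println("USB audio present: " + hasUsbAudio(sample));
    }
}
```

If the microphone was not enumerated, no line with the `USB-Audio` driver appears and `hasUsbAudio` returns false, which matches the symptom described in this article.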

III. Solution

- 通过分析发现,USB麦克风设备没有枚举出来,节点都没挂载上去

- 这时候就怀疑是硬件问题,

USB麦克风设备故障,或者USB供电不足,或者USB布线问题 - 通过交叉实验,

拔插不同的USB口,更换RK3399开发板,更换USB麦克风 - 最后确认是

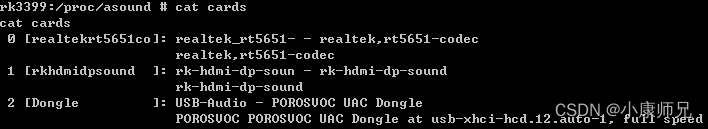

USB麦克风设备故障,更换USB麦克风后,再cat cards就能查询出USB-AUDIO设备

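- Using the USB microphone

  - First, the USB-AUDIO device must be recognized correctly.

  - Second, Android switches the MIC recording source automatically:

    - If no USB microphone is plugged in, Android uses the analog MIC on the mainboard.

    - If a USB microphone is plugged in, Android switches to the USB microphone.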

IV. Recording source code analysis

- audioSource: the recording source, for example MediaRecorder.AudioSource.CAMCORDER or MediaRecorder.AudioSource.MIC.

- sampleRateInHz: common values are 8000, 11025, 16000, 22050, 44100 and 96000; 44100 Hz is the only sample rate guaranteed to work on all Android devices.

- channelConfig: AudioFormat.CHANNEL_CONFIGURATION_DEFAULT; the commonly used values are CHANNEL_IN_MONO (mono) and CHANNEL_IN_STEREO (stereo).

- audioFormat: AudioFormat.ENCODING_PCM_16BIT, because the test captures PCM data.

- bufferSizeInBytes: obtained from AudioRecord.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat).

public AudioRecord(int audioSource, int sampleRateInHz, int channelConfig, int audioFormat, int bufferSizeInBytes)

AudioRecord.startRecording();

AudioRecord.stop();

AudioRecord.read(byte[] audioData, int offsetInBytes, int sizeInBytes);
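To get a feel for how these parameters relate to each other, the data rate and the time covered by a buffer can be worked out with simple arithmetic. This is a small sketch in plain Java; the class name `PcmMath` and the buffer size 7056 are hypothetical, and the real minimum buffer size has to come from `AudioRecord.getMinBufferSize` on the device.

```java
class PcmMath {
    /** Bytes in one frame, i.e. one sample for every channel. */
    static int bytesPerFrame(int channelCount, int bytesPerSample) {
        return channelCount * bytesPerSample;
    }

    /** Bytes produced per second of audio. */
    static int bytesPerSecond(int sampleRateInHz, int channelCount, int bytesPerSample) {
        return sampleRateInHz * bytesPerFrame(channelCount, bytesPerSample);
    }

    /** Duration in milliseconds covered by a buffer of the given size. */
    static double bufferDurationMs(int bufferSizeInBytes, int sampleRateInHz,
                                   int channelCount, int bytesPerSample) {
        return 1000.0 * bufferSizeInBytes
                / bytesPerSecond(sampleRateInHz, channelCount, bytesPerSample);
    }

    public static void main(String[] args) {
        // 44100 Hz, stereo, 16-bit PCM (2 bytes per sample)
        System.out.println(bytesPerSecond(44100, 2, 2));          // 176400
        // 7056 is a hypothetical buffer size, not a value reported by a device
        System.out.println(bufferDurationMs(7056, 44100, 2, 2));  // 40.0
    }
}
```

With the defaults used in this article (44100 Hz, stereo, 16-bit), one second of audio is 176400 bytes, so a buffer of a few thousand bytes holds only tens of milliseconds of sound.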

import android.media.AudioFormat;
import android.media.AudioRecord;
import android.media.MediaRecorder;
import android.os.SystemClock;
import android.util.Log;

// Captures raw PCM from the current recording source on a background thread.
// The app needs the android.permission.RECORD_AUDIO permission.
public class AudioCapturer {
    private static final String TAG = "AudioCapturer";
    private static final int DEFAULT_SOURCE = MediaRecorder.AudioSource.MIC;
    private static final int DEFAULT_SAMPLE_RATE = 44100;
    private static final int DEFAULT_CHANNEL_CONFIG = AudioFormat.CHANNEL_IN_STEREO;
    private static final int DEFAULT_AUDIO_FORMAT = AudioFormat.ENCODING_PCM_16BIT;

    private AudioRecord mAudioRecord;
    private int mMinBufferSize = 0;
    private Thread mCaptureThread;
    private boolean mIsCaptureStarted = false;
    private volatile boolean mIsLoopExit = false;
    private OnAudioFrameCapturedListener mAudioFrameCapturedListener;

    // Callback invoked with every captured buffer
    public interface OnAudioFrameCapturedListener {
        public void onAudioFrameCaptured(byte[] audioData);
    }

    public boolean isCaptureStarted() {
        return mIsCaptureStarted;
    }

    public void setOnAudioFrameCapturedListener(OnAudioFrameCapturedListener listener) {
        mAudioFrameCapturedListener = listener;
    }

    public boolean startCapture() {
        return startCapture(DEFAULT_SOURCE, DEFAULT_SAMPLE_RATE, DEFAULT_CHANNEL_CONFIG,
                DEFAULT_AUDIO_FORMAT);
    }

    public boolean startCapture(int audioSource, int sampleRateInHz, int channelConfig, int audioFormat) {
        if (mIsCaptureStarted) {
            Log.e(TAG, "Capture already started !");
            return false;
        }
        mMinBufferSize = AudioRecord.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat);
        if (mMinBufferSize == AudioRecord.ERROR_BAD_VALUE) {
            Log.e(TAG, "Invalid parameter !");
            return false;
        }
        Log.d(TAG, "getMinBufferSize = " + mMinBufferSize + " bytes !");
        mAudioRecord = new AudioRecord(audioSource, sampleRateInHz, channelConfig, audioFormat, mMinBufferSize);
        if (mAudioRecord.getState() == AudioRecord.STATE_UNINITIALIZED) {
            Log.e(TAG, "AudioRecord initialize fail !");
            return false;
        }
        mAudioRecord.startRecording();
        // Start the reader thread only after recording has started
        mIsLoopExit = false;
        mCaptureThread = new Thread(new AudioCaptureRunnable());
        mCaptureThread.start();
        mIsCaptureStarted = true;
        Log.d(TAG, "Start audio capture success !");
        return true;
    }

    public void stopCapture() {
        if (!mIsCaptureStarted) {
            return;
        }
        // Ask the reader thread to exit and wait up to one second for it
        mIsLoopExit = true;
        try {
            mCaptureThread.interrupt();
            mCaptureThread.join(1000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        if (mAudioRecord.getRecordingState() == AudioRecord.RECORDSTATE_RECORDING) {
            mAudioRecord.stop();
        }
        mAudioRecord.release();
        mIsCaptureStarted = false;
        mAudioFrameCapturedListener = null;
        Log.d(TAG, "Stop audio capture success !");
    }

    private class AudioCaptureRunnable implements Runnable {
        @Override
        public void run() {
            while (!mIsLoopExit) {
                byte[] buffer = new byte[mMinBufferSize];
                // Blocking read of up to mMinBufferSize bytes
                int ret = mAudioRecord.read(buffer, 0, mMinBufferSize);
                if (ret == AudioRecord.ERROR_INVALID_OPERATION) {
                    Log.e(TAG, "Error ERROR_INVALID_OPERATION");
                } else if (ret == AudioRecord.ERROR_BAD_VALUE) {
                    Log.e(TAG, "Error ERROR_BAD_VALUE");
                } else if (ret < 0) {
                    // Any other negative return value is also an error code
                    Log.e(TAG, "Error " + ret);
                } else {
                    if (mAudioFrameCapturedListener != null) {
                        mAudioFrameCapturedListener.onAudioFrameCaptured(buffer);
                    }
                    Log.d(TAG, "OK, Captured " + ret + " bytes !");
                }
                SystemClock.sleep(10);
            }
        }
    }
}
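A quick way to confirm that the microphone is really delivering sound, and not just silence, is to look at the level of the captured buffers. The sketch below is plain Java and could be called from `onAudioFrameCaptured`. It assumes 16-bit PCM in little-endian byte order, which is the native order on ARM boards such as the RK3399; the class name `PcmLevel` and its methods are hypothetical helpers, not part of the Android API.

```java
class PcmLevel {
    /** Decodes the first 'length' bytes of little-endian 16-bit PCM into signed samples. */
    static short[] toSamples(byte[] pcm, int length) {
        short[] samples = new short[length / 2];
        for (int i = 0; i < samples.length; i++) {
            int lo = pcm[2 * i] & 0xFF;   // low byte, treated as unsigned
            int hi = pcm[2 * i + 1];      // high byte, keeps the sign
            samples[i] = (short) ((hi << 8) | lo);
        }
        return samples;
    }

    /** Largest absolute sample value; 0 means the buffer is pure digital silence. */
    static int peak(short[] samples) {
        int peak = 0;
        for (short s : samples) {
            peak = Math.max(peak, Math.abs((int) s));
        }
        return peak;
    }

    /** Root mean square of the samples, a rough measure of loudness. */
    static double rms(short[] samples) {
        if (samples.length == 0) {
            return 0.0;
        }
        double sumOfSquares = 0.0;
        for (short s : samples) {
            sumOfSquares += (double) s * s;
        }
        return Math.sqrt(sumOfSquares / samples.length);
    }

    public static void main(String[] args) {
        // Hand-written bytes for illustration: samples 0, 32767, -32768
        byte[] pcm = {0x00, 0x00, (byte) 0xFF, 0x7F, 0x00, (byte) 0x80};
        short[] samples = toSamples(pcm, pcm.length);
        System.out.println("peak=" + peak(samples) + " rms=" + rms(samples));
    }
}
```

A peak that stays at 0 across many buffers suggests that the selected source is not producing audio, which is worth checking before looking at the playback side.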

V. Playback source code analysis

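- streamType: the audio stream type:

  - AudioManager.STREAM_VOICE_CALL: phone call audio
  - AudioManager.STREAM_SYSTEM: system sounds
  - AudioManager.STREAM_RING: ringtone
  - AudioManager.STREAM_MUSIC: music
  - AudioManager.STREAM_ALARM: alarms
  - AudioManager.STREAM_NOTIFICATION: notifications

- sampleRateInHz: common values are 8000, 11025, 16000, 22050, 44100 and 96000; 44100 Hz is the only sample rate guaranteed to work on all Android devices.

- channelConfig: AudioFormat.CHANNEL_CONFIGURATION_DEFAULT; for playback the commonly used values are CHANNEL_OUT_MONO (mono) and CHANNEL_OUT_STEREO (stereo).

- audioFormat: AudioFormat.ENCODING_PCM_16BIT, matching the PCM data captured in the test.

- bufferSizeInBytes: obtained from AudioTrack.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat).

- mode: AudioTrack.MODE_STATIC writes all the data into the playback buffer in one go, which is simple and efficient; AudioTrack.MODE_STREAM keeps writing audio data continuously at regular intervals.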

public AudioTrack(int streamType, int sampleRateInHz, int channelConfig, int audioFormat, int bufferSizeInBytes, int mode)

AudioTrack.play();

AudioTrack.stop();
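In MODE_STREAM the data is written piece by piece, so a long PCM buffer has to be cut into chunks first. The following is a minimal sketch of one way to do that in plain Java, keeping every chunk a whole number of frames. The class name `StreamChunker` is hypothetical; each returned pair is meant to be used as the `offsetInBytes` and `sizeInBytes` arguments of `AudioTrack.write(byte[], int, int)`.

```java
import java.util.ArrayList;
import java.util.List;

class StreamChunker {
    /**
     * Splits the range [0, totalBytes) into {offset, length} pairs of at most
     * chunkBytes each. Every chunk is a whole number of frames; a trailing
     * partial frame, if any, is dropped.
     */
    static List<int[]> chunks(int totalBytes, int chunkBytes, int frameBytes) {
        if (frameBytes <= 0 || chunkBytes < frameBytes) {
            throw new IllegalArgumentException("a chunk must hold at least one frame");
        }
        int alignedChunk = chunkBytes - (chunkBytes % frameBytes);
        int usableBytes = totalBytes - (totalBytes % frameBytes);
        List<int[]> result = new ArrayList<>();
        for (int offset = 0; offset < usableBytes; offset += alignedChunk) {
            result.add(new int[] {offset, Math.min(alignedChunk, usableBytes - offset)});
        }
        return result;
    }

    public static void main(String[] args) {
        // 1000 bytes of stereo 16-bit PCM (4 bytes per frame), at most 300 bytes per write
        for (int[] c : chunks(1000, 300, 4)) {
            System.out.println("offset=" + c[0] + " length=" + c[1]);
        }
    }
}
```

For stereo 16-bit PCM a frame is 4 bytes, so passing 4 as `frameBytes` keeps the left and right samples of a frame together in the same write.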

import android.media.AudioFormat;
import android.media.AudioManager;
import android.media.AudioTrack;
import android.util.Log;

// Plays raw PCM through an AudioTrack in streaming mode.
public class AudioPlayer {
    private static final String TAG = "AudioPlayer";
    private static final int DEFAULT_STREAM_TYPE = AudioManager.STREAM_MUSIC;
    private static final int DEFAULT_SAMPLE_RATE = 44100;
    // Playback takes an output channel mask (CHANNEL_OUT_*), not an input one
    private static final int DEFAULT_CHANNEL_CONFIG = AudioFormat.CHANNEL_OUT_STEREO;
    private static final int DEFAULT_AUDIO_FORMAT = AudioFormat.ENCODING_PCM_16BIT;
    private static final int DEFAULT_PLAY_MODE = AudioTrack.MODE_STREAM;

    private boolean mIsPlayStarted = false;
    private int mMinBufferSize = 0;
    private AudioTrack mAudioTrack;

    public boolean startPlayer() {
        return startPlayer(DEFAULT_STREAM_TYPE, DEFAULT_SAMPLE_RATE, DEFAULT_CHANNEL_CONFIG, DEFAULT_AUDIO_FORMAT);
    }

    public boolean startPlayer(int streamType, int sampleRateInHz, int channelConfig, int audioFormat) {
        if (mIsPlayStarted) {
            Log.e(TAG, "Player already started !");
            return false;
        }
        mMinBufferSize = AudioTrack.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat);
        if (mMinBufferSize == AudioTrack.ERROR_BAD_VALUE) {
            Log.e(TAG, "Invalid parameter !");
            return false;
        }
        Log.d(TAG, "getMinBufferSize = " + mMinBufferSize + " bytes !");
        mAudioTrack = new AudioTrack(streamType, sampleRateInHz, channelConfig, audioFormat, mMinBufferSize, DEFAULT_PLAY_MODE);
        if (mAudioTrack.getState() == AudioTrack.STATE_UNINITIALIZED) {
            Log.e(TAG, "AudioTrack initialize fail !");
            return false;
        }
        mIsPlayStarted = true;
        Log.d(TAG, "Start audio player success !");
        return true;
    }

    public int getMinBufferSize() {
        return mMinBufferSize;
    }

    public void stopPlayer() {
        if (!mIsPlayStarted) {
            return;
        }
        if (mAudioTrack.getPlayState() == AudioTrack.PLAYSTATE_PLAYING) {
            mAudioTrack.stop();
        }
        mAudioTrack.release();
        mIsPlayStarted = false;
        Log.d(TAG, "Stop audio player success !");
    }

    public boolean play(byte[] audioData, int offsetInBytes, int sizeInBytes) {
        if (!mIsPlayStarted) {
            Log.e(TAG, "Player not started !");
            return false;
        }
        // Buffers shorter than the minimum buffer size are rejected
        if (sizeInBytes < mMinBufferSize) {
            Log.e(TAG, "audio data is not enough !");
            return false;
        }
        if (mAudioTrack.write(audioData, offsetInBytes, sizeInBytes) != sizeInBytes) {
            Log.e(TAG, "Could not write all the samples to the audio device !");
        }
        mAudioTrack.play();
        Log.d(TAG, "OK, Played " + sizeInBytes + " bytes !");
        return true;
    }
}
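To test the playback path without depending on the microphone, a generated tone can be fed to the player. The sketch below produces a sine tone as interleaved stereo 16-bit PCM in plain Java. It assumes little-endian byte order and the 44100 Hz stereo defaults used in this article; the class name `ToneGenerator` and its parameters are hypothetical.

```java
class ToneGenerator {
    /**
     * Generates a sine tone as interleaved stereo 16-bit little-endian PCM.
     * amplitude is a fraction of full scale, from 0.0 to 1.0.
     */
    static byte[] sineStereo16(double frequencyHz, int sampleRateInHz,
                               int durationMs, double amplitude) {
        int frames = (int) ((long) sampleRateInHz * durationMs / 1000);
        byte[] pcm = new byte[frames * 4]; // 2 channels x 2 bytes per sample
        for (int i = 0; i < frames; i++) {
            double phase = 2.0 * Math.PI * frequencyHz * i / sampleRateInHz;
            short value = (short) Math.round(Math.sin(phase) * amplitude * Short.MAX_VALUE);
            byte lo = (byte) (value & 0xFF);
            byte hi = (byte) ((value >> 8) & 0xFF);
            int base = i * 4;
            pcm[base] = lo;      // left channel, low byte
            pcm[base + 1] = hi;  // left channel, high byte
            pcm[base + 2] = lo;  // right channel, low byte
            pcm[base + 3] = hi;  // right channel, high byte
        }
        return pcm;
    }

    public static void main(String[] args) {
        // One second of a 440 Hz tone at half of full scale
        byte[] tone = sineStereo16(440.0, 44100, 1000, 0.5);
        System.out.println(tone.length + " bytes generated");
    }
}
```

On the device, the resulting array could be passed to `play(tone, 0, tone.length)` after `startPlayer()` succeeds. Note that `play` rejects buffers shorter than the minimum buffer size, so very short tones may be refused.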
