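I. Introduction:

The previous post covered building ijkplayer and running its demo. This post starts from the application layer and traces how ijkplayer calls down into the JNI layer.

II. Java-layer code analysis:

When a stream is selected for playback, the app jumps to VideoActivity. Here is its onCreate: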

onCreate@ijkplayer\android\ijkplayer\ijkplayer-example\src\main\java\tv\danmaku\ijk\media\example\activities\VideoActivity.java

protected void onCreate(Bundle savedInstanceState) {

...

/* 1. Load the JNI-layer libraries */

// init player

IjkMediaPlayer.loadLibrariesOnce(null);

IjkMediaPlayer.native_profileBegin("libijkplayer.so");

/* 2. Set up the view and the playback path */

mVideoView = (IjkVideoView) findViewById(R.id.video_view);

mVideoView.setMediaController(mMediaController);

mVideoView.setHudView(mHudView);

// prefer mVideoPath

if (mVideoPath != null)

mVideoView.setVideoPath(mVideoPath);

else if (mVideoUri != null)

mVideoView.setVideoURI(mVideoUri);

else {

Log.e(TAG, "Null Data Source\n");

finish();

return;

}

mVideoView.start();

}

Note 1:

public static void loadLibrariesOnce(IjkLibLoader libLoader) {

synchronized (IjkMediaPlayer.class) {

if (!mIsLibLoaded) {

if (libLoader == null)

libLoader = sLocalLibLoader;

/* Three JNI-layer libraries are loaded here */

libLoader.loadLibrary("ijkffmpeg");

libLoader.loadLibrary("ijksdl");

libLoader.loadLibrary("ijkplayer");

mIsLibLoaded = true;

}

}

}

Note 2:

Taking setVideoPath as an example, let's follow the call:

IjkVideoView.java@ijkplayer\android\ijkplayer\ijkplayer-example\src\main\java\tv\danmaku\ijk\media\example\widget\media

public void setVideoPath(String path) {

if (path.contains("adaptationSet")){

mManifestString = path;

setVideoURI(Uri.EMPTY);

} else {

setVideoURI(Uri.parse(path));

}

}

Next, follow it into setVideoURI:

private void setVideoURI(Uri uri, Map<String, String> headers) {

mUri = uri;

mHeaders = headers;

mSeekWhenPrepared = 0;

/* */

openVideo();

requestLayout();

invalidate();

}

openVideo() creates the player, so let's keep following:

private void openVideo() {

...

try {

/* 1. Create the player */

mMediaPlayer = createPlayer(mSettings.getPlayer());

...

/* 2. setDataSource */

mMediaPlayer.setDataSource(mUri.toString());

...

/* 3. prepare */

mMediaPlayer.prepareAsync();

}

}

First, look at how the player is created:

public IMediaPlayer createPlayer(int playerType) {

IMediaPlayer mediaPlayer = null;

switch (playerType) {

case Settings.PV_PLAYER__IjkExoMediaPlayer:

...

case Settings.PV_PLAYER__AndroidMediaPlayer:

...

case Settings.PV_PLAYER__IjkMediaPlayer:

default: {

IjkMediaPlayer ijkMediaPlayer = null;

if (mUri != null) {

/* Instantiate ijkplayer */

ijkMediaPlayer = new IjkMediaPlayer();

ijkMediaPlayer.native_setLogLevel(IjkMediaPlayer.IJK_LOG_DEBUG);

...

/* Pass player options down to the native layer */

ijkMediaPlayer.setOption(...);

...

mediaPlayer = ijkMediaPlayer;

}

}

...

}

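mediaPlayer is an IMediaPlayer interface, which supports the native Android player (the system player), ExoPlayer (the application-level player Google strongly recommends), and ijkplayer. By default, that is, without changing the setting, an ijkplayer instance is created. Focusing on the ijkplayer case: after the player is instantiated, some preset options are passed to the native implementation, which a later part of the analysis covers. Here the key part is the instantiation of the player: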

IjkMediaPlayer.java@ijkplayer\android\ijkplayer\ijkplayer-java\src\main\java\tv\danmaku\ijk\media\player:

private void initPlayer(IjkLibLoader libLoader) {

loadLibrariesOnce(libLoader);

initNativeOnce();

Looper looper;

if ((looper = Looper.myLooper()) != null) {

mEventHandler = new EventHandler(this, looper);

} else if ((looper = Looper.getMainLooper()) != null) {

mEventHandler = new EventHandler(this, looper);

} else {

mEventHandler = null;

}

/*

* Native setup requires a weak reference to our object. It's easier to

* create it here than in C++.

*/

native_setup(new WeakReference<IjkMediaPlayer>(this));

}

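As you can see, the last step calls a JNI method to enter the native layer. setDataSource, prepare, and the earlier mVideoView.start() work the same way. The JNI methods they finally reach are: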

native_setup

_setDataSource

_prepareAsync

_start
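To make the Java-to-native hop more concrete, here is a minimal sketch of the idea behind a JNI method table: each Java-side method name is paired with the C function that implements it. This is not ijkplayer's actual table and it deliberately avoids jni.h so it can be compiled anywhere; `NativeMethod`, `demo_find_native`, and the `demo_*` functions are all hypothetical names invented for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for a JNI method-table entry: the Java-side
 * method name paired with the C function that implements it. */
typedef int (*native_fn)(void);

typedef struct {
    const char *java_name;
    native_fn   fn;
} NativeMethod;

/* Placeholder implementations; each returns a distinct id. */
static int demo_native_setup(void)    { return 1; }
static int demo_set_data_source(void) { return 2; }
static int demo_prepare_async(void)   { return 3; }
static int demo_start(void)           { return 4; }

static const NativeMethod demo_methods[] = {
    { "native_setup",   demo_native_setup },
    { "_setDataSource", demo_set_data_source },
    { "_prepareAsync",  demo_prepare_async },
    { "_start",         demo_start },
};

/* Look up the C implementation registered for a Java method name.
 * Returns NULL when the name is not in the table. */
static native_fn demo_find_native(const char *java_name)
{
    assert(java_name);
    size_t count = sizeof(demo_methods) / sizeof(demo_methods[0]);
    for (size_t i = 0; i < count; i++) {
        if (strcmp(demo_methods[i].java_name, java_name) == 0)
            return demo_methods[i].fn;
    }
    return NULL;
}
```

In a real JNI library the table entries also carry a type signature and are handed to the VM at load time, so the lookup is done by the runtime rather than by hand.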

Extra:

Call site for the IjkMediaPlayer path:

src\main\java\tv\danmaku\ijk\media\player\IjkMediaPlayer.java

Call site for the ExoPlayer path:

src\main\java\tv\danmaku\ijk\media\exo\IjkExoMediaPlayer.java

III. JNI layer: creating the player:

Using the JNI methods listed above, let's see how the native layer creates the player:

1. native_setup, which lands in ijkplayer_jni.c:

static void

IjkMediaPlayer_native_setup(JNIEnv *env, jobject thiz, jobject weak_this)

{

MPTRACE("%s\n", __func__);

/* Create the native-layer player */

IjkMediaPlayer *mp = ijkmp_android_create(message_loop);

JNI_CHECK_GOTO(mp, env, "java/lang/OutOfMemoryError", "mpjni: native_setup: ijkmp_create() failed", LABEL_RETURN);

jni_set_media_player(env, thiz, mp);

ijkmp_set_weak_thiz(mp, (*env)->NewGlobalRef(env, weak_this));

ijkmp_set_inject_opaque(mp, ijkmp_get_weak_thiz(mp));

ijkmp_set_ijkio_inject_opaque(mp, ijkmp_get_weak_thiz(mp));

ijkmp_android_set_mediacodec_select_callback(mp, mediacodec_select_callback, ijkmp_get_weak_thiz(mp));

LABEL_RETURN:

ijkmp_dec_ref_p(&mp);

}
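The `ijkmp_dec_ref_p(&mp)` at LABEL_RETURN only makes sense with reference counting in mind: the local pointer gives up its reference, while whoever stored the player keeps it alive. Below is a minimal sketch of that pattern, assuming the storing side takes its own reference (as the inc/dec pairing suggests). `DemoPlayer` and the `demo_*` helpers are hypothetical, and the counter is not atomic here, which real multi-threaded code would need.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct DemoPlayer {
    int ref_count;
} DemoPlayer;

static int demo_destroyed = 0; /* counts freed players, for illustration only */

/* Create a player that starts with one reference owned by the caller. */
static DemoPlayer *demo_create(void)
{
    DemoPlayer *p = (DemoPlayer *)calloc(1, sizeof(*p));
    if (p)
        p->ref_count = 1;
    return p;
}

/* Take an additional reference (e.g. when storing the pointer somewhere). */
static void demo_inc_ref(DemoPlayer *p)
{
    assert(p);
    p->ref_count++;
}

/* Drop one reference and clear the caller's pointer.
 * The object is freed only when the last reference goes away. */
static void demo_dec_ref_p(DemoPlayer **pp)
{
    if (!pp || !*pp)
        return;
    if (--(*pp)->ref_count == 0) {
        free(*pp);
        demo_destroyed++;
    }
    *pp = NULL;
}
```

With this shape, a function can always release its local reference on the way out, on both the success and the failure path, without caring whether someone else still holds the object.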

Following further:

IjkMediaPlayer *ijkmp_android_create(int(*msg_loop)(void*))

{

/* 1. Create the player */

IjkMediaPlayer *mp = ijkmp_create(msg_loop);

if (!mp)

goto fail;

/* 2. Create the vout */

mp->ffplayer->vout = SDL_VoutAndroid_CreateForAndroidSurface();

if (!mp->ffplayer->vout)

goto fail;

/* 3. Create the pipeline, used to configure the a/v codecs and the aout */

mp->ffplayer->pipeline = ffpipeline_create_from_android(mp->ffplayer);

if (!mp->ffplayer->pipeline)

goto fail;

ffpipeline_set_vout(mp->ffplayer->pipeline, mp->ffplayer->vout);

return mp;

fail:

ijkmp_dec_ref_p(&mp);

return NULL;

}

Note 1:

IjkMediaPlayer *ijkmp_create(int (*msg_loop)(void*))

{

/* Allocate the IjkMediaPlayer struct */

IjkMediaPlayer *mp = (IjkMediaPlayer *) mallocz(sizeof(IjkMediaPlayer));

if (!mp)

goto fail;

/* Create the FFPlayer */

mp->ffplayer = ffp_create();

if (!mp->ffplayer)

goto fail;

/* Bind the message loop */

mp->msg_loop = msg_loop;

ijkmp_inc_ref(mp);

pthread_mutex_init(&mp->mutex, NULL);

return mp;

fail:

ijkmp_destroy_p(&mp);

return NULL;

}
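The create functions above share one habit worth copying: every failure jumps to a single `fail:` label that tears down whatever was built so far. Here is a minimal sketch of that staged-construction pattern. `DemoOuter`, `DemoInner`, and the `fail_inner` switch (used only to simulate an allocation failure) are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct DemoInner { int dummy; } DemoInner;
typedef struct DemoOuter { DemoInner *inner; } DemoOuter;

/* Destroy through a pointer-to-pointer so the caller's pointer is cleared.
 * Safe to call on a partially constructed or NULL object. */
static void demo_outer_destroy_p(DemoOuter **pp)
{
    if (!pp || !*pp)
        return;
    free((*pp)->inner); /* free(NULL) is a no-op */
    free(*pp);
    *pp = NULL;
}

/* Build the object in stages; any failure funnels into one cleanup path.
 * fail_inner != 0 simulates the inner allocation failing. */
static DemoOuter *demo_outer_create(int fail_inner)
{
    DemoOuter *outer = (DemoOuter *)calloc(1, sizeof(*outer));
    if (!outer)
        goto fail;

    outer->inner = fail_inner ? NULL
                              : (DemoInner *)calloc(1, sizeof(*outer->inner));
    if (!outer->inner)
        goto fail;

    return outer;

fail:
    demo_outer_destroy_p(&outer);
    return NULL;
}
```

Because the destroy helper tolerates half-built objects, the create function never needs a separate cleanup branch per stage.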

The IjkMediaPlayer struct holds all player-related state in the native layer. Here are its members:

struct IjkMediaPlayer {

volatile int ref_count;

pthread_mutex_t mutex;

/* The FFPlayer struct */

FFPlayer *ffplayer;

/* Function pointer for the message loop */

int (*msg_loop)(void*);

SDL_Thread *msg_thread;

SDL_Thread _msg_thread;

int mp_state;

char *data_source;

void *weak_thiz;

int restart;

int restart_from_beginning;

int seek_req;

long seek_msec;

};

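Two things in this struct deserve attention. The first is the msg_loop function pointer, which handles sending and receiving ijkplayer messages; the message mechanism will be covered separately later. The second is the FFPlayer member: since ijkplayer is a wrapper around FFmpeg, this member records all the FFmpeg-related state. Right after IjkMediaPlayer is allocated, the inner FFPlayer struct is initialized: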

FFPlayer *ffp_create()

{

av_log(NULL, AV_LOG_INFO, "av_version_info: %s\n", av_version_info());

av_log(NULL, AV_LOG_INFO, "ijk_version_info: %s\n", ijk_version_info());

/* Allocate and zero the FFPlayer struct */

FFPlayer* ffp = (FFPlayer*) av_mallocz(sizeof(FFPlayer));

if (!ffp)

return NULL;

/* Initialize the message queue */

msg_queue_init(&ffp->msg_queue);

ffp->af_mutex = SDL_CreateMutex();

ffp->vf_mutex = SDL_CreateMutex();

ffp_reset_internal(ffp);

ffp->av_class = &ffp_context_class;

ffp->meta = ijkmeta_create();

av_opt_set_defaults(ffp);

las_stat_init(&ffp->las_player_statistic);

return ffp;

}

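Note the av_mallocz call: it not only allocates the memory but also zeroes it. If you later meet a field whose value is unclear, trace back here to confirm its initial value:

av_mallocz source: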

void *av_mallocz(size_t size)

{

void *ptr = av_malloc(size); // allocate memory with av_malloc

if (ptr)

memset(ptr, 0, size); // set every byte of the allocated block to 0

return ptr;

}
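A quick way to see why this matters: a struct allocated this way starts with every field at zero, so an untouched int reads 0 and an untouched pointer reads NULL (on mainstream platforms, where all-zero bits represent a null pointer). The sketch below uses plain malloc in place of av_malloc; `DemoState` and `demo_mallocz` are hypothetical names.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical state struct with a mix of field types. */
typedef struct DemoState {
    int   paused;
    long  seek_msec;
    char *data_source;
} DemoState;

/* malloc + memset, mirroring the shape of the av_mallocz source above. */
static void *demo_mallocz(size_t size)
{
    void *ptr = malloc(size);
    if (ptr)
        memset(ptr, 0, size);
    return ptr;
}
```

So when a field such as `paused` is read before any code has visibly assigned it, zero is the value to expect unless a later reset function overrides it.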

Note 2:

mp->ffplayer->vout = SDL_VoutAndroid_CreateForAndroidSurface();

if (!mp->ffplayer->vout)

goto fail;

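This creates the vout. After ijkplayer decodes a video frame, it calls the vout to render it; rendering can go through MediaCodec or OpenGL. How the rendering works in detail is left for a later post.

Note 3: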

mp->ffplayer->pipeline = ffpipeline_create_from_android(mp->ffplayer);

if (!mp->ffplayer->pipeline)

goto fail;

Let's step into this function:

IJKFF_Pipeline *ffpipeline_create_from_android(FFPlayer *ffp)

{

...

pipeline->func_destroy = func_destroy;

pipeline->func_open_video_decoder = func_open_video_decoder;

pipeline->func_open_audio_output = func_open_audio_output;

pipeline->func_init_video_decoder = func_init_video_decoder;

pipeline->func_config_video_decoder = func_config_video_decoder;

...

}

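This function mainly assigns function pointers, including those that select the audio/video codecs and the aout. Combined with Note 2 above, the picture is clear: ijkmp_android_create fixes the function pointers for the audio/video decoders and their outputs, preparing for the later decoding and rendering work.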
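The function-pointer assignment described above is essentially a hand-rolled virtual table: generic player code calls through the pointers and never names the platform-specific function. Here is a minimal sketch of that arrangement. `DemoPipeline`, the `android_*` placeholders, and the returned label strings are hypothetical and only stand in for real decoder/output objects.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

typedef struct DemoPipeline DemoPipeline;

/* Hypothetical pipeline: generic code only sees these function pointers. */
struct DemoPipeline {
    const char *(*func_open_video_decoder)(DemoPipeline *pipeline);
    const char *(*func_open_audio_output)(DemoPipeline *pipeline);
};

/* Platform-specific implementations (placeholders that return a label). */
static const char *android_open_video_decoder(DemoPipeline *pipeline)
{
    (void)pipeline;
    return "android-video-decoder";
}

static const char *android_open_audio_output(DemoPipeline *pipeline)
{
    (void)pipeline;
    return "android-audio-output";
}

/* Factory: allocate the pipeline and fill in the function pointers. */
static DemoPipeline *demo_pipeline_create_from_android(void)
{
    DemoPipeline *pipeline = (DemoPipeline *)calloc(1, sizeof(*pipeline));
    if (!pipeline)
        return NULL;
    pipeline->func_open_video_decoder = android_open_video_decoder;
    pipeline->func_open_audio_output  = android_open_audio_output;
    return pipeline;
}

/* Generic wrapper: dispatches through the pointer set by the factory. */
static const char *demo_pipeline_open_audio_output(DemoPipeline *pipeline)
{
    assert(pipeline && pipeline->func_open_audio_output);
    return pipeline->func_open_audio_output(pipeline);
}
```

Swapping platforms then means swapping the factory, while every caller of the generic wrapper stays unchanged.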

IV. JNI layer: setDataSource:

The native method called from the Java layer is _setDataSource; its native implementation is in ijkmedia\ijkplayer\android\ijkplayer_jni.c:

static void

IjkMediaPlayer_setDataSourceCallback(JNIEnv *env, jobject thiz, jobject callback)

{

...

retval = ijkmp_set_data_source(mp, uri);

...

}

Following it all the way down into ijkplayer.c:

static int ijkmp_set_data_source_l(IjkMediaPlayer *mp, const char *url)

{

assert(mp);

assert(url);

// MPST_RET_IF_EQ(mp->mp_state, MP_STATE_IDLE);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_INITIALIZED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ASYNC_PREPARING);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PREPARED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STARTED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PAUSED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_COMPLETED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STOPPED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ERROR);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_END);

freep((void**)&mp->data_source);

mp->data_source = strdup(url);

if (!mp->data_source)

return EIJK_OUT_OF_MEMORY;

ijkmp_change_state_l(mp, MP_STATE_INITIALIZED);

return 0;

}

In practice this interface exists to match the APIs of Android's MediaPlayer and ExoPlayer: all the native layer does here is save the URL and set the player state to MP_STATE_INITIALIZED.
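The long run of MPST_RET_IF_EQ lines is a state guard: the function returns early for every state in which the call is illegal, and the commented-out line marks the one state where it is allowed. The sketch below reproduces that idea with a reduced, hypothetical set of states; `DemoPlayer`, `DEMO_RET_IF_EQ`, and the error codes are invented for illustration and are not ijkplayer's real definitions.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical subset of player states and hypothetical error codes. */
enum { DEMO_IDLE, DEMO_INITIALIZED, DEMO_ASYNC_PREPARING, DEMO_STOPPED };
#define DEMO_ERR_INVALID_STATE (-1)
#define DEMO_ERR_OUT_OF_MEMORY (-2)

/* Return early from the calling function when the state is forbidden. */
#define DEMO_RET_IF_EQ(real, forbidden) \
    do { if ((real) == (forbidden)) return DEMO_ERR_INVALID_STATE; } while (0)

typedef struct DemoPlayer {
    int   state;
    char *data_source;
} DemoPlayer;

/* Allowed only in DEMO_IDLE: keep a copy of the URL, then advance state. */
static int demo_set_data_source(DemoPlayer *mp, const char *url)
{
    assert(mp && url);
    DEMO_RET_IF_EQ(mp->state, DEMO_INITIALIZED);
    DEMO_RET_IF_EQ(mp->state, DEMO_ASYNC_PREPARING);
    DEMO_RET_IF_EQ(mp->state, DEMO_STOPPED);

    size_t len = strlen(url) + 1;
    char *copy = (char *)malloc(len);
    if (!copy)
        return DEMO_ERR_OUT_OF_MEMORY;
    memcpy(copy, url, len);

    free(mp->data_source);
    mp->data_source = copy;
    mp->state = DEMO_INITIALIZED;
    return 0;
}

/* Allowed once a data source is set (DEMO_INITIALIZED) or after a stop. */
static int demo_prepare_async(DemoPlayer *mp)
{
    assert(mp);
    DEMO_RET_IF_EQ(mp->state, DEMO_IDLE);
    DEMO_RET_IF_EQ(mp->state, DEMO_ASYNC_PREPARING);
    mp->state = DEMO_ASYNC_PREPARING;
    return 0;
}
```

Calling prepare before a data source is set, or setting the data source twice, returns the invalid-state code instead of corrupting the player.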

V. JNI layer: prepareAsync:

Enter ijkplayer_jni.c:

static void

IjkMediaPlayer_prepareAsync(JNIEnv *env, jobject thiz)

{

MPTRACE("%s\n", __func__);

int retval = 0;

IjkMediaPlayer *mp = jni_get_media_player(env, thiz);

JNI_CHECK_GOTO(mp, env, "java/lang/IllegalStateException", "mpjni: prepareAsync: null mp", LABEL_RETURN);

retval = ijkmp_prepare_async(mp);

IJK_CHECK_MPRET_GOTO(retval, env, LABEL_RETURN);

LABEL_RETURN:

ijkmp_dec_ref_p(&mp);

}

Following it down into ijkplayer.c:

static int ijkmp_prepare_async_l(IjkMediaPlayer *mp)

{

assert(mp);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_IDLE);

// MPST_RET_IF_EQ(mp->mp_state, MP_STATE_INITIALIZED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ASYNC_PREPARING);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PREPARED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STARTED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PAUSED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_COMPLETED);

// MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STOPPED);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ERROR);

MPST_RET_IF_EQ(mp->mp_state, MP_STATE_END);

assert(mp->data_source);

ijkmp_change_state_l(mp, MP_STATE_ASYNC_PREPARING);

/* 1. Start the message queue */

msg_queue_start(&mp->ffplayer->msg_queue);

// released in msg_loop

ijkmp_inc_ref(mp);

/* 2. Create the message-handling thread */

mp->msg_thread = SDL_CreateThreadEx(&mp->_msg_thread, ijkmp_msg_loop, mp, "ff_msg_loop");

// msg_thread is detached inside msg_loop

// TODO: 9 release weak_thiz if pthread_create() failed;

/* 3. Call into ff_ffplay.c for the remaining work */

int retval = ffp_prepare_async_l(mp->ffplayer, mp->data_source);

if (retval < 0) {

ijkmp_change_state_l(mp, MP_STATE_ERROR);

return retval;

}

return 0;

}
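The ordering above (start the queue first, then run a loop that drains it) can be sketched in a few lines. This is a single-threaded toy, assuming a queue that rejects traffic until it is started; it leaves out the mutex, condition variable, and dedicated thread a real player needs. `DemoMsgQueue`, `demo_msg_loop`, and `demo_sum_handler` are hypothetical names.

```c
#include <assert.h>
#include <string.h>

#define DEMO_QUEUE_CAP 8

/* Hypothetical fixed-size message queue holding integer message ids. */
typedef struct DemoMsgQueue {
    int what[DEMO_QUEUE_CAP];
    int head;
    int count;
    int abort_request; /* non-zero: queue refuses puts and gets */
} DemoMsgQueue;

typedef void (*demo_handler)(int what, void *opaque);

/* After init the queue exists but is not accepting messages yet. */
static void demo_queue_init(DemoMsgQueue *q)
{
    memset(q, 0, sizeof(*q));
    q->abort_request = 1;
}

/* Starting the queue is what lets messages flow. */
static void demo_queue_start(DemoMsgQueue *q)
{
    q->abort_request = 0;
}

static int demo_queue_put(DemoMsgQueue *q, int what)
{
    if (q->abort_request || q->count == DEMO_QUEUE_CAP)
        return -1;
    q->what[(q->head + q->count) % DEMO_QUEUE_CAP] = what;
    q->count++;
    return 0;
}

static int demo_queue_get(DemoMsgQueue *q, int *what)
{
    if (q->abort_request || q->count == 0)
        return -1;
    *what = q->what[q->head];
    q->head = (q->head + 1) % DEMO_QUEUE_CAP;
    q->count--;
    return 0;
}

/* Drain the queue, handing each message to the handler.
 * Returns the number of messages dispatched. */
static int demo_msg_loop(DemoMsgQueue *q, demo_handler handler, void *opaque)
{
    int dispatched = 0;
    int what;
    assert(q && handler);
    while (demo_queue_get(q, &what) == 0) {
        handler(what, opaque);
        dispatched++;
    }
    return dispatched;
}

/* Example handler: accumulates message ids into an int. */
static void demo_sum_handler(int what, void *opaque)
{
    *(int *)opaque += what;
}
```

In a threaded version the loop would block on an empty queue instead of returning, and an abort request would be the signal that ends the thread.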

ijkmp_prepare_async_l does two main things: it gets the message mechanism fully running, and it calls into ff_ffplay.c, which is ijkplayer's rewrite of ffplay.c from FFmpeg. Here is what ff_ffplay.c does:

int ffp_prepare_async_l(FFPlayer *ffp, const char *file_name)

{

assert(ffp);

assert(!ffp->is);

assert(file_name);

if (av_stristart(file_name, "rtmp", NULL) ||

av_stristart(file_name, "rtsp", NULL)) {

// There is total different meaning for 'timeout' option in rtmp

av_log(ffp, AV_LOG_WARNING, "remove 'timeout' option for rtmp.\n");

av_dict_set(&ffp->format_opts, "timeout", NULL, 0);

}

/* there is a length limit in avformat */

if (strlen(file_name) + 1 > 1024) {

av_log(ffp, AV_LOG_ERROR, "%s too long url\n", __func__);

if (avio_find_protocol_name("ijklongurl:")) {

av_dict_set(&ffp->format_opts, "ijklongurl-url", file_name, 0);

file_name = "ijklongurl:";

}

}

/* 1. Print version information */

av_log(NULL, AV_LOG_INFO, "===== versions =====\n");

ffp_show_version_str(ffp, "ijkplayer", ijk_version_info());

ffp_show_version_str(ffp, "FFmpeg", av_version_info());

ffp_show_version_int(ffp, "libavutil", avutil_version());

ffp_show_version_int(ffp, "libavcodec", avcodec_version());

ffp_show_version_int(ffp, "libavformat", avformat_version());

ffp_show_version_int(ffp, "libswscale", swscale_version());

ffp_show_version_int(ffp, "libswresample", swresample_version());

av_log(NULL, AV_LOG_INFO, "===== options =====\n");

ffp_show_dict(ffp, "player-opts", ffp->player_opts);

ffp_show_dict(ffp, "format-opts", ffp->format_opts);

ffp_show_dict(ffp, "codec-opts ", ffp->codec_opts);

ffp_show_dict(ffp, "sws-opts ", ffp->sws_dict);

ffp_show_dict(ffp, "swr-opts ", ffp->swr_opts);

av_log(NULL, AV_LOG_INFO, "===================\n");

av_opt_set_dict(ffp, &ffp->player_opts);

/* 2. Open the aout */

if (!ffp->aout) {

ffp->aout = ffpipeline_open_audio_output(ffp->pipeline, ffp);

if (!ffp->aout)

return -1;

}

#if CONFIG_AVFILTER

if (ffp->vfilter0) {

GROW_ARRAY(ffp->vfilters_list, ffp->nb_vfilters);

ffp->vfilters_list[ffp->nb_vfilters - 1] = ffp->vfilter0;

}

#endif

/* 3. Open the audio/video streams and create the related threads */

VideoState *is = stream_open(ffp, file_name, NULL);

if (!is) {

av_log(NULL, AV_LOG_WARNING, "ffp_prepare_async_l: stream_open failed OOM");

return EIJK_OUT_OF_MEMORY;

}

ffp->is = is;

ffp->input_filename = av_strdup(file_name);

return 0;

}

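Note 1 prints version and other basic information. Pay attention to the application's player configuration: once the stored options have been read out, they are applied to the player.

Now follow Note 2: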

SDL_Aout *ffpipeline_open_audio_output(IJKFF_Pipeline *pipeline, FFPlayer *ffp)

{

return pipeline->func_open_audio_output(pipeline, ffp);

}

This calls back into ffpipeline_android.c:

static SDL_Aout *func_open_audio_output(IJKFF_Pipeline *pipeline, FFPlayer *ffp)

{

SDL_Aout *aout = NULL;

if (ffp->opensles) {

aout = SDL_AoutAndroid_CreateForOpenSLES();

} else {

aout = SDL_AoutAndroid_CreateForAudioTrack();

}

if (aout)

SDL_AoutSetStereoVolume(aout, pipeline->opaque->left_volume, pipeline->opaque->right_volume);

return aout;

}

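Depending on the application-layer setting, audio is output through either OpenSL ES or Android's AudioTrack. On Android I recommend the latter. In a real project I ran into audio that always started a few seconds late and then never got back in sync. After a long search it turned out the app developers had selected OpenSL ES for audio output, so the A/V sync logic kept dropping frames from the very start and finally gave up on sync altogether.

Back to Note 3 above, let's look at the key function stream_open: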

static VideoState *stream_open(FFPlayer *ffp, const char *filename, AVInputFormat *iformat)

{

assert(!ffp->is);

VideoState *is;

/* 1. Allocate the VideoState struct */

is = av_mallocz(sizeof(VideoState));

if (!is)

return NULL;

is->filename = av_strdup(filename);

if (!is->filename)

goto fail;

is->iformat = iformat;

is->ytop = 0;

is->xleft = 0;

#if defined(__ANDROID__)

if (ffp->soundtouch_enable) {

is->handle = ijk_soundtouch_create();

}

#endif

/* 2. Initialize the a/v/s frame queues */

/* start video display */

if (frame_queue_init(&is->pictq, &is->videoq, ffp->pictq_size, 1) < 0)

goto fail;

if (frame_queue_init(&is->subpq, &is->subtitleq, SUBPICTURE_QUEUE_SIZE, 0) < 0)

goto fail;

if (frame_queue_init(&is->sampq, &is->audioq, SAMPLE_QUEUE_SIZE, 1) < 0)

goto fail;

/* 3. Initialize the a/v/s packet queues */

if (packet_queue_init(&is->videoq) < 0 ||

packet_queue_init(&is->audioq) < 0 ||

packet_queue_init(&is->subtitleq) < 0)

goto fail;

if (!(is->continue_read_thread = SDL_CreateCond())) {

av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());

goto fail;

}

if (!(is->video_accurate_seek_cond = SDL_CreateCond())) {

av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());

ffp->enable_accurate_seek = 0;

}

if (!(is->audio_accurate_seek_cond = SDL_CreateCond())) {

av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());

ffp->enable_accurate_seek = 0;

}

/* 4. Initialize the video, audio, and external clocks */

init_clock(&is->vidclk, &is->videoq.serial);

init_clock(&is->audclk, &is->audioq.serial);

init_clock(&is->extclk, &is->extclk.serial);

is->audio_clock_serial = -1;

if (ffp->startup_volume < 0)

av_log(NULL, AV_LOG_WARNING, "-volume=%d < 0, setting to 0\n", ffp->startup_volume);

if (ffp->startup_volume > 100)

av_log(NULL, AV_LOG_WARNING, "-volume=%d > 100, setting to 100\n", ffp->startup_volume);

ffp->startup_volume = av_clip(ffp->startup_volume, 0, 100);

ffp->startup_volume = av_clip(SDL_MIX_MAXVOLUME * ffp->startup_volume / 100, 0, SDL_MIX_MAXVOLUME);

is->audio_volume = ffp->startup_volume;

is->muted = 0;

is->av_sync_type = ffp->av_sync_type;

is->play_mutex = SDL_CreateMutex();

is->accurate_seek_mutex = SDL_CreateMutex();

ffp->is = is;

is->pause_req = !ffp->start_on_prepared;

/* 5. Create the video frame rendering thread */

is->video_refresh_tid = SDL_CreateThreadEx(&is->_video_refresh_tid, video_refresh_thread, ffp, "ff_vout");

if (!is->video_refresh_tid) {

av_freep(&ffp->is);

return NULL;

}

/* 6. Create the read thread */

is->initialized_decoder = 0;

is->read_tid = SDL_CreateThreadEx(&is->_read_tid, read_thread, ffp, "ff_read");

if (!is->read_tid) {

av_log(NULL, AV_LOG_FATAL, "SDL_CreateThread(): %s\n", SDL_GetError());

goto fail;

}

if (ffp->async_init_decoder && !ffp->video_disable && ffp->video_mime_type && strlen(ffp->video_mime_type) > 0

&& ffp->mediacodec_default_name && strlen(ffp->mediacodec_default_name) > 0) {

if (ffp->mediacodec_all_videos || ffp->mediacodec_avc || ffp->mediacodec_hevc || ffp->mediacodec_mpeg2) {

decoder_init(&is->viddec, NULL, &is->videoq, is->continue_read_thread);

ffp->node_vdec = ffpipeline_init_video_decoder(ffp->pipeline, ffp);

}

}

is->initialized_decoder = 1;

return is;

fail:

is->initialized_decoder = 1;

is->abort_request = true;

if (is->video_refresh_tid)

SDL_WaitThread(is->video_refresh_tid, NULL);

stream_close(ffp);

return NULL;

}

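stream_open is somewhat complex; it mainly performs decoding-related initialization.

Note 1:

VideoState records everything the playback code needs about the audio, video, and subtitle streams, in particular the source data and the decoded frames;

Note 2:

This initializes the frame queues for decoded audio, video, and subtitles. Every frame decoded by the lower layer is stored in these queues. Note that the queues are not running yet at this point;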
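As a mental model for such a frame queue, think of a fixed-size ring buffer with a read index and a write index. The sketch below shows only that core, without the locking, condition variables, or "keep last frame" behavior a real player needs. `DemoFrame`, `DemoFrameQueue`, and the capacity constant are hypothetical.

```c
#include <assert.h>
#include <string.h>

#define DEMO_FRAME_QUEUE_CAP 16

/* Hypothetical decoded frame: only a presentation timestamp. */
typedef struct DemoFrame {
    double pts;
} DemoFrame;

/* Ring buffer: rindex is the next frame to read, windex the next slot to fill. */
typedef struct DemoFrameQueue {
    DemoFrame queue[DEMO_FRAME_QUEUE_CAP];
    int rindex;
    int windex;
    int size;
    int max_size;
} DemoFrameQueue;

/* max_size is clamped to the compile-time capacity. */
static int demo_frame_queue_init(DemoFrameQueue *f, int max_size)
{
    assert(f);
    if (max_size <= 0)
        return -1;
    memset(f, 0, sizeof(*f));
    f->max_size = max_size < DEMO_FRAME_QUEUE_CAP ? max_size
                                                  : DEMO_FRAME_QUEUE_CAP;
    return 0;
}

/* Producer side (decoder): fails when the queue is full. */
static int demo_frame_queue_push(DemoFrameQueue *f, DemoFrame frame)
{
    if (f->size >= f->max_size)
        return -1;
    f->queue[f->windex] = frame;
    f->windex = (f->windex + 1) % f->max_size;
    f->size++;
    return 0;
}

/* Consumer side (renderer): fails when the queue is empty. */
static int demo_frame_queue_pop(DemoFrameQueue *f, DemoFrame *out)
{
    if (f->size == 0)
        return -1;
    *out = f->queue[f->rindex];
    f->rindex = (f->rindex + 1) % f->max_size;
    f->size--;
    return 0;
}
```

A small max_size is deliberate in this kind of design: a full queue makes the decoder wait, which keeps decoded frames from piling up in memory.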

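Note 3:

This initializes the packet queues holding audio/video data waiting to be decoded. Note that these queues are not running yet either;

Note 4:

This initializes the video, audio, and external clocks, which the later synchronization relies on;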
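To give a feel for what such a clock does, here is a minimal sketch modeled on the ffplay-style design: the clock stores the last pts together with the time it was set, extrapolates from there, and becomes invalid (NaN) when its serial no longer matches the packet queue's serial, for example after a seek. Playback speed and pause handling are left out, and the current time is passed in explicitly to keep the sketch deterministic. `DemoClock` and the `demo_*` functions are hypothetical.

```c
#include <assert.h>
#include <math.h>

/* Hypothetical clock: pts_drift = pts - (time the pts was set). */
typedef struct DemoClock {
    double pts;
    double pts_drift;
    double last_updated;
    int    serial;        /* serial of the data this clock is based on */
    int   *queue_serial;  /* points at the current serial of the packet queue */
} DemoClock;

/* Record a new pts as observed at wall-clock time `time` (seconds). */
static void demo_set_clock_at(DemoClock *c, double pts, int serial, double time)
{
    c->pts = pts;
    c->last_updated = time;
    c->pts_drift = pts - time;
    c->serial = serial;
}

/* A fresh clock is bound to a queue serial and starts out invalid. */
static void demo_init_clock(DemoClock *c, int *queue_serial)
{
    assert(c && queue_serial);
    c->queue_serial = queue_serial;
    demo_set_clock_at(c, NAN, -1, 0.0);
}

/* Current clock value at time `now`; NaN when the clock is stale. */
static double demo_get_clock_at(const DemoClock *c, double now)
{
    if (*c->queue_serial != c->serial)
        return NAN;
    return c->pts_drift + now;
}
```

Synchronization then boils down to comparing two such clocks, for instance the video clock against the audio clock, and delaying or dropping frames based on the difference.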

Note 5:

is->video_refresh_tid = SDL_CreateThreadEx(&is->_video_refresh_tid, video_refresh_thread, ffp, "ff_vout");

if (!is->video_refresh_tid) {

av_freep(&ffp->is);

return NULL;

}

This thread renders video frames; audio/video synchronization is also done inside it;

Note 6:

is->read_tid = SDL_CreateThreadEx(&is->_read_tid, read_thread, ffp, "ff_read");

if (!is->read_tid) {

av_log(NULL, AV_LOG_FATAL, "SDL_CreateThread(): %s\n", SDL_GetError());

goto fail;

}

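read_thread is more involved. Besides initializing the relevant decoders, it also creates three threads: the audio decoding, video decoding, and audio playback threads. The detailed analysis is left for a later post.

At this point the whole ijkplayer initialization is complete; once the application calls start, playback can begin.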

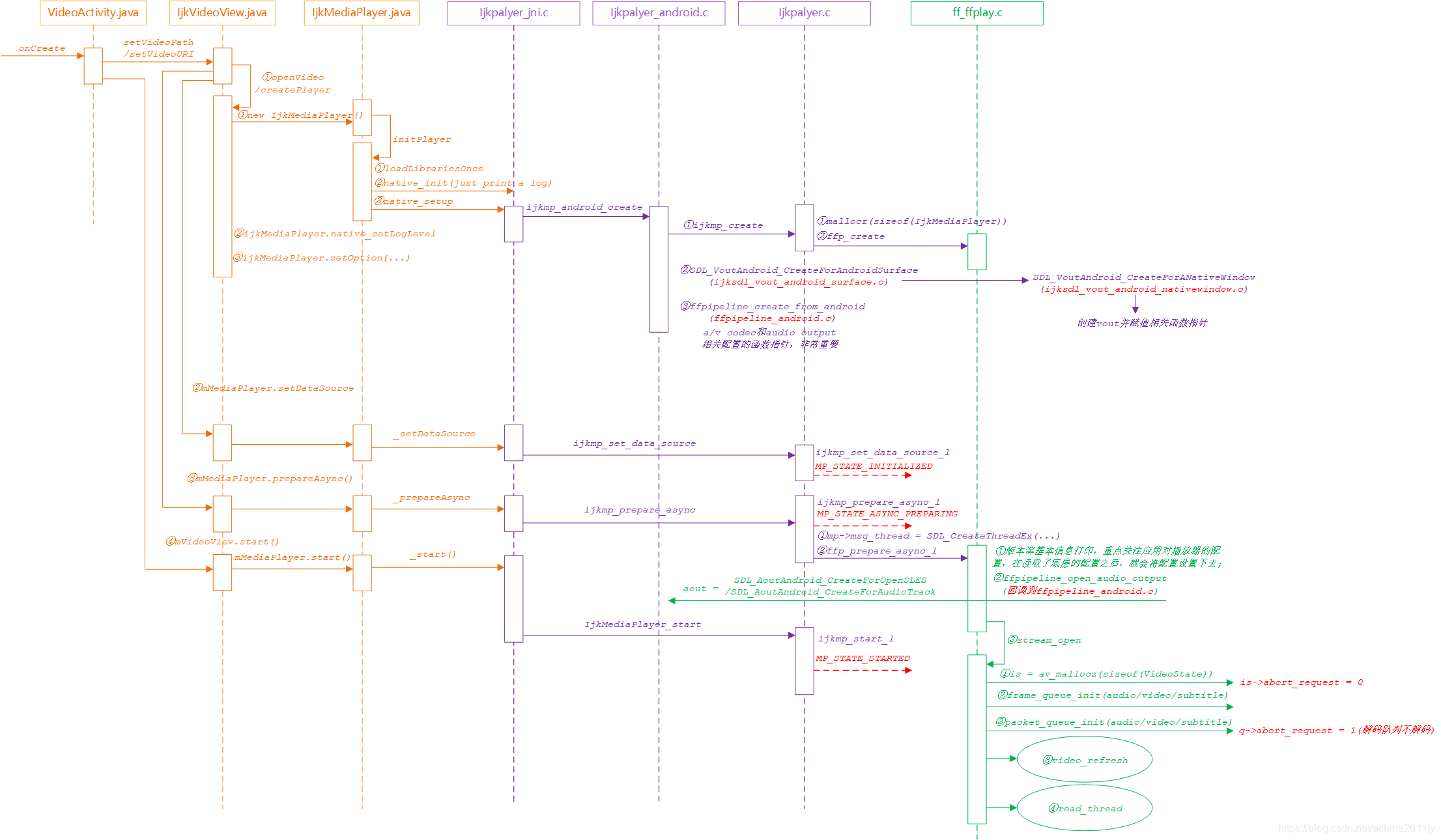

VI. Summary:

The overall logic flow is shown below:

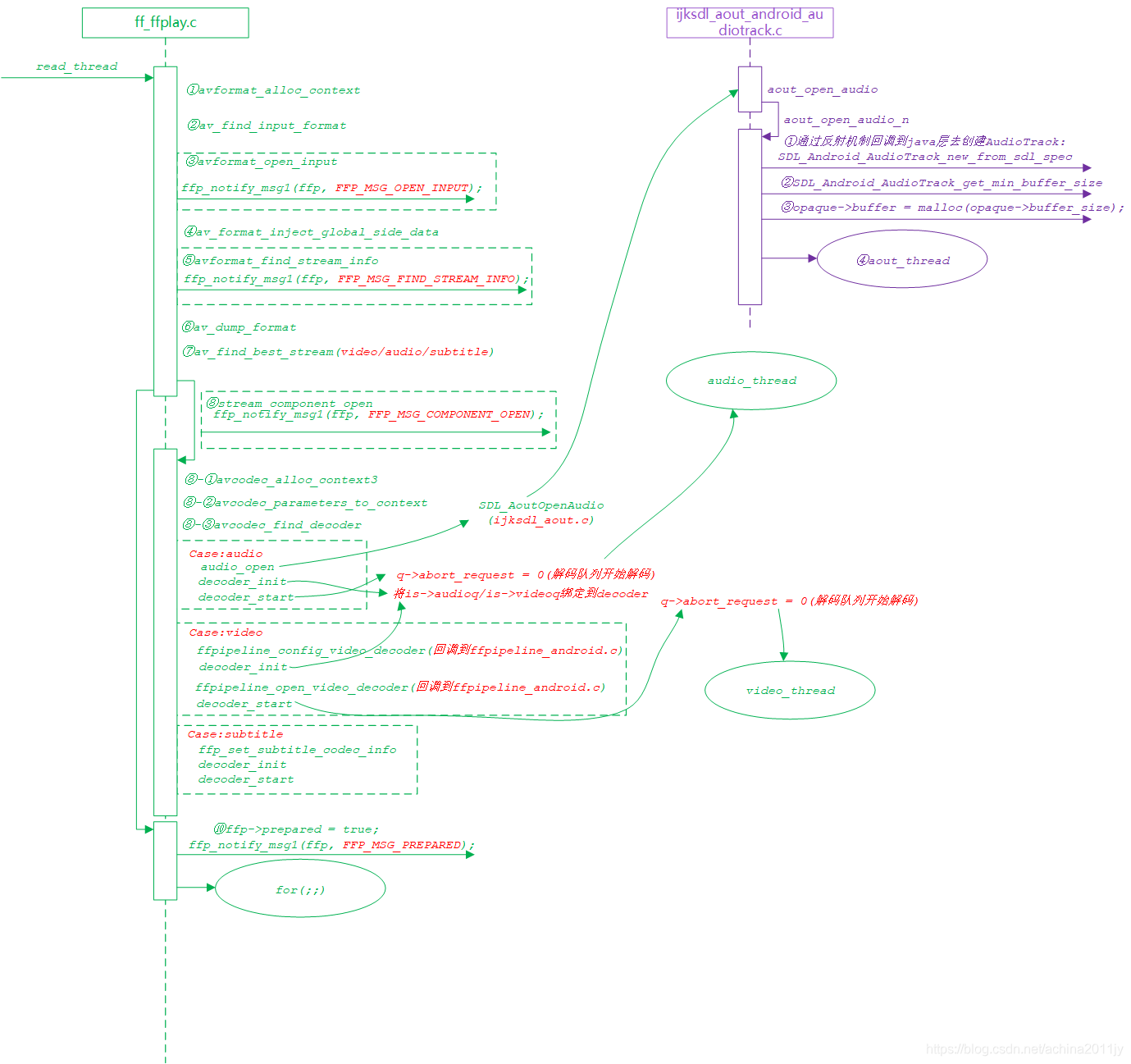

The logic of read_thread is shown below: