K8s Platform Setup Manual

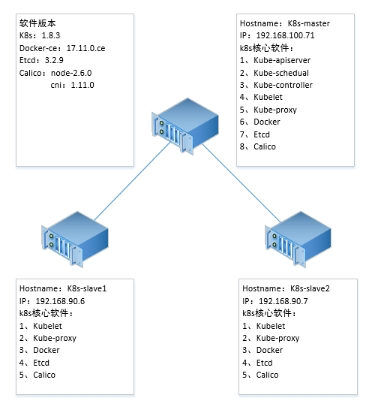

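1 Environment Overview

2 Installation Steps

2.1 Initialize the Environment

Run on every server.

# Edit /etc/hosts on every server so the nodes can reach each other by hostname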

vi /etc/hosts

192.168.100.71 k8s-master.doone.com

192.168.90.6 k8s-slave1.doone.com

192.168.90.7 k8s-slave2.doone.com
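The host entries above can also be added by a script, so that running it twice does not create duplicates. This is a minimal sketch rather than part of the original procedure: the add_host helper and the HOSTS_FILE variable are assumptions introduced for illustration, and HOSTS_FILE defaults to a scratch file so the snippet can be tried without touching /etc/hosts.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not there yet.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"   # assumption: set HOSTS_FILE=/etc/hosts on a real server

add_host() {
    ip="$1"; name="$2"
    # -q quiet, -w whole-word match, -F fixed string (dots are matched literally)
    if ! grep -qwF "$name" "$HOSTS_FILE" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
    fi
}

add_host 192.168.100.71 k8s-master.doone.com
add_host 192.168.90.6   k8s-slave1.doone.com
add_host 192.168.90.7   k8s-slave2.doone.com
cat "$HOSTS_FILE"
```

Calling add_host again with a name that is already present leaves the file unchanged.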

2.2 Disable the Firewall

Run on every server.

systemctl stop firewalld.service # stop firewalld

systemctl disable firewalld.service # keep firewalld from starting at boot

firewall-cmd --state # check the firewall state ("not running" when stopped, "running" when active)

2.3 Disable SELinux

Run on every server.

$ setenforce 0

$ vim /etc/selinux/config

SELINUX=disabled
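The manual edit of /etc/selinux/config can be replaced by a one-line sed. The sketch below is an illustration only: disable_selinux_in is a hypothetical helper, and the demonstration runs on a scratch file rather than the real configuration.

```shell
# Hypothetical helper: set SELINUX=disabled in the given SELinux config file.
disable_selinux_in() {
    cfg="$1"
    # Rewrite the value after SELINUX= at the start of a line; a .bak copy is kept.
    # SELINUXTYPE= is not touched because the pattern requires "=" right after SELINUX.
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Demonstration on a scratch file; on a real server the path is /etc/selinux/config.
demo_cfg="$(mktemp)"
printf '# sample\nSELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo_cfg"
disable_selinux_in "$demo_cfg"
cat "$demo_cfg"
```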

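2.4 Disable Swap

Run on every server.

K8s expects to run on physical memory only, with swap turned off.

$ swapoff -a

$ vim /etc/fstab

Comment out the swap partition entry so that swap stays off after a reboot.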
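Commenting out the swap entry in /etc/fstab can also be scripted. This is a sketch under assumptions: comment_out_swap is a hypothetical helper, the sample fstab lines are invented for the demonstration, and the pattern assumes the filesystem type column is the word swap surrounded by whitespace.

```shell
# Hypothetical helper: prefix "#" to every uncommented fstab line that has a "swap" field.
comment_out_swap() {
    fstab="$1"
    # -E enables extended regular expressions; a .bak copy of the file is kept.
    sed -i.bak -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Demonstration on a scratch file with made-up entries.
demo_fstab="$(mktemp)"
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
```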

2.5 Install the Go Environment (optional)

https://golang.org/dl/

Download the Linux build of Go, unpack it, and set the environment variables.

vi /etc/profile

export GOROOT=/usr/local/go

export PATH=$GOROOT/bin:$PATH

$ source /etc/profile

2.6 Create the K8s Cluster Certificates

2.6.1 Install cfssl

CloudFlare's PKI toolkit cfssl is used here to generate the Certificate Authority (CA) certificate and key files.

cd /usr/local/bin

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

mv cfssl_linux-amd64 cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

mv cfssljson_linux-amd64 cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 cfssl-certinfo

chmod +x cfssl cfssljson cfssl-certinfo

2.6.2 Create the CA Certificate Configuration

mkdir /opt/ssl

cd /opt/ssl

# config.json file

vi config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

# csr.json file

vi csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "k8s",

"OU": "System"

}

]

}

2.6.3 Generate the CA Certificate and Private Key

cd /opt/ssl

cfssl gencert -initca csr.json | cfssljson -bare ca

This produces three files: ca.csr, ca-key.pem, and ca.pem.

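2.6.4 Distribute the Certificates

# Create the certificate directory

mkdir -p /etc/kubernetes/ssl

# Copy all the files into the directory

cp *.pem /etc/kubernetes/ssl

# The files must be copied to every k8s machine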

scp *.pem 192.168.90.6:/etc/kubernetes/ssl/

scp *.pem 192.168.90.7:/etc/kubernetes/ssl/
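The same copy step recurs for every certificate in this manual, so it can be wrapped in a loop. The following is only a sketch: the distribute helper and the DRY_RUN switch are assumptions introduced here, and by default the script prints the scp commands instead of running them.

```shell
# Node IPs and target directory as used in this manual.
NODES="192.168.90.6 192.168.90.7"
DEST=/etc/kubernetes/ssl
DRY_RUN="${DRY_RUN:-1}"   # assumption: 1 prints the commands, anything else runs scp

# Hypothetical helper: copy the given files to every node in NODES.
distribute() {
    for node in $NODES; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "scp $* $node:$DEST/"
        else
            scp "$@" "$node:$DEST/"
        fi
    done
}

distribute ca.pem ca-key.pem
```

Setting DRY_RUN=0 before calling distribute performs the actual copy, which requires SSH access to the nodes.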

2.7 Install Docker

Run on every server.

2.7.1 Add the yum Repository

# Install yum-config-manager

yum -y install yum-utils

# Add the repository

yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

# Refresh the repo cache

yum makecache

2.7.2 Install

yum install docker-ce -y

2.7.3 Change the Docker Configuration

# Edit the unit file

vi /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS $DOCKER_OPTS $DOCKER_DNS_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

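# Additional options

mkdir -p /usr/lib/systemd/system/docker.service.d/

vi /usr/lib/systemd/system/docker.service.d/docker-options.conf

# Add the following (note: the Environment value must stay on a single line; a line break prevents it from loading)

# --iptables=false leaves containers started with docker run without network access; it is set to false here because some advanced Calico features need to restrict container-to-container traffic.

# Recommendation: in general do not add --iptables=false; add it only when using Calico.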

[Service]

Environment="DOCKER_OPTS=--insecure-registry=10.254.0.0/16 --graph=/opt/docker --registry-mirror=http://b438f72b.m.daocloud.io --disable-legacy-registry --iptables=false"

2.7.4 Reload the Configuration and Start Docker

systemctl daemon-reload

systemctl start docker

systemctl enable docker

2.8 Install the etcd Cluster

etcd is a foundational component of a k8s cluster.

2.8.1 Install etcd

Run on every server.

yum -y install etcd

2.8.2 Create the etcd Certificate

cd /opt/ssl/

vi etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.100.71",

"192.168.90.6",

"192.168.90.7"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "k8s",

"OU": "System"

}

]

}

# Generate the etcd certificate and key

cfssl gencert -ca=/opt/ssl/ca.pem \

-ca-key=/opt/ssl/ca-key.pem \

-config=/opt/ssl/config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

# Check the generated files

[root@k8s-master ssl]# ls etcd*

etcd.csr etcd-csr.json etcd-key.pem etcd.pem

# Copy to the etcd servers

# etcd-1

cp etcd*.pem /etc/kubernetes/ssl/

# etcd-2

scp etcd*.pem 192.168.90.6:/etc/kubernetes/ssl/

# etcd-3

scp etcd*.pem 192.168.90.7:/etc/kubernetes/ssl/

# If etcd runs as a non-root user, reading the key fails with a permission error

chmod 644 /etc/kubernetes/ssl/etcd-key.pem

2.8.3 Modify the etcd Configuration

Edit the etcd unit file /usr/lib/systemd/system/etcd.service

# etcd-1

vi /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

User=etcd

# set GOMAXPROCS to number of processors

ExecStart=/usr/bin/etcd \

--name=etcd1 \

--cert-file=/etc/kubernetes/ssl/etcd.pem \

--key-file=/etc/kubernetes/ssl/etcd-key.pem \

--peer-cert-file=/etc/kubernetes/ssl/etcd.pem \

--peer-key-file=/etc/kubernetes/ssl/etcd-key.pem \

--trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--initial-advertise-peer-urls=https://192.168.100.71:2380 \

--listen-peer-urls=https://192.168.100.71:2380 \

--listen-client-urls=https://192.168.100.71:2379,http://127.0.0.1:2379 \

--advertise-client-urls=https://192.168.100.71:2379 \

--initial-cluster-token=k8s-etcd-cluster \

--initial-cluster=etcd1=https://192.168.100.71:2380,etcd2=https://192.168.90.6:2380,etcd3=https://192.168.90.7:2380 \

--initial-cluster-state=new \

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# etcd-2

vi /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

User=etcd

# set GOMAXPROCS to number of processors

ExecStart=/usr/bin/etcd \

--name=etcd2 \

--cert-file=/etc/kubernetes/ssl/etcd.pem \

--key-file=/etc/kubernetes/ssl/etcd-key.pem \

--peer-cert-file=/etc/kubernetes/ssl/etcd.pem \

--peer-key-file=/etc/kubernetes/ssl/etcd-key.pem \

--trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--initial-advertise-peer-urls=https://192.168.90.6:2380 \

--listen-peer-urls=https://192.168.90.6:2380 \

--listen-client-urls=https://192.168.90.6:2379,http://127.0.0.1:2379 \

--advertise-client-urls=https://192.168.90.6:2379 \

--initial-cluster-token=k8s-etcd-cluster \

--initial-cluster=etcd1=https://192.168.100.71:2380,etcd2=https://192.168.90.6:2380,etcd3=https://192.168.90.7:2380 \

--initial-cluster-state=new \

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# etcd-3

vi /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

User=etcd

# set GOMAXPROCS to number of processors

ExecStart=/usr/bin/etcd \

--name=etcd3 \

--cert-file=/etc/kubernetes/ssl/etcd.pem \

--key-file=/etc/kubernetes/ssl/etcd-key.pem \

--peer-cert-file=/etc/kubernetes/ssl/etcd.pem \

--peer-key-file=/etc/kubernetes/ssl/etcd-key.pem \

--trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--initial-advertise-peer-urls=https://192.168.90.7:2380 \

--listen-peer-urls=https://192.168.90.7:2380 \

--listen-client-urls=https://192.168.90.7:2379,http://127.0.0.1:2379 \

--advertise-client-urls=https://192.168.90.7:2379 \

--initial-cluster-token=k8s-etcd-cluster \

--initial-cluster=etcd1=https://192.168.100.71:2380,etcd2=https://192.168.90.6:2380,etcd3=https://192.168.90.7:2380 \

--initial-cluster-state=new \

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target
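The three unit files above differ only in the member name and the member IP. As a sketch of how they could be produced from one template, the hypothetical helper render_etcd_unit below prints a unit for a given name and IP; the helper name and the CLUSTER and SSL_DIR variables are assumptions introduced here, while the flags and values are the ones listed above.

```shell
# Values taken from this manual; the variable and helper names are hypothetical.
CLUSTER="etcd1=https://192.168.100.71:2380,etcd2=https://192.168.90.6:2380,etcd3=https://192.168.90.7:2380"
SSL_DIR=/etc/kubernetes/ssl

# Print an etcd.service unit for one member. $1 = member name, $2 = member IP.
render_etcd_unit() {
    name="$1"; ip="$2"
    # Unquoted heredoc: variables expand, and "\\" is written out as a single "\".
    cat <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
User=etcd
ExecStart=/usr/bin/etcd \\
  --name=${name} \\
  --cert-file=${SSL_DIR}/etcd.pem \\
  --key-file=${SSL_DIR}/etcd-key.pem \\
  --peer-cert-file=${SSL_DIR}/etcd.pem \\
  --peer-key-file=${SSL_DIR}/etcd-key.pem \\
  --trusted-ca-file=${SSL_DIR}/ca.pem \\
  --peer-trusted-ca-file=${SSL_DIR}/ca.pem \\
  --initial-advertise-peer-urls=https://${ip}:2380 \\
  --listen-peer-urls=https://${ip}:2380 \\
  --listen-client-urls=https://${ip}:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls=https://${ip}:2379 \\
  --initial-cluster-token=k8s-etcd-cluster \\
  --initial-cluster=${CLUSTER} \\
  --initial-cluster-state=new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}

# Print the unit for the second member; on a real host, redirect the output
# to /usr/lib/systemd/system/etcd.service.
render_etcd_unit etcd2 192.168.90.6
```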

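2.8.4 Start etcd

Start the etcd service on every node.

systemctl enable etcd

systemctl start etcd

systemctl status etcd

# If an error occurs, use the following to locate the problem:

journalctl -f -t etcd or journalctl -u etcd

2.8.5 Verify the etcd Cluster State

Check the etcd cluster health: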

etcdctl --endpoints=https://192.168.100.71:2379 \

--cert-file=/etc/kubernetes/ssl/etcd.pem \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--key-file=/etc/kubernetes/ssl/etcd-key.pem \

cluster-health

member 29262d49176888f5 is healthy: got healthy result from https://192.168.100.71:2379

member d4ba1a2871bfa2b0 is healthy: got healthy result from https://192.168.90.6:2379

member eca58ebdf44f63b6 is healthy: got healthy result from https://192.168.90.7:2379

cluster is healthy

List the etcd cluster members:

etcdctl --endpoints=https://192.168.100.71:2379 \

--cert-file=/etc/kubernetes/ssl/etcd.pem \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--key-file=/etc/kubernetes/ssl/etcd-key.pem \

member list

29262d49176888f5: name=etcd3 peerURLs=https://192.168.100.71:2380 clientURLs=https://192.168.100.71:2379 isLeader=false

d4ba1a2871bfa2b0: name=etcd1 peerURLs=https://192.168.90.6:2380 clientURLs=https://192.168.90.6:2379 isLeader=true

eca58ebdf44f63b6: name=etcd2 peerURLs=https://192.168.90.7:2380 clientURLs=https://192.168.90.7:2379 isLeader=false

2.9 Install the kubectl Tool

Master node: 192.168.100.71

2.9.1 Install kubectl on the Master

# First install kubectl

wget https://dl.k8s.io/v1.8.0/kubernetes-client-linux-amd64.tar.gz

(If the download fails, fetch the binaries directly from GitHub.)

tar -xzvf kubernetes-client-linux-amd64.tar.gz

cp kubernetes/client/bin/* /usr/local/bin/

chmod a+x /usr/local/bin/kube*

# Verify the installation

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.3", GitCommit:"f0efb3cb883751c5ffdbe6d515f3cb4fbe7b7acd", GitTreeState:"clean", BuildDate:"2017-11-08T18:39:33Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.3", GitCommit:"f0efb3cb883751c5ffdbe6d515f3cb4fbe7b7acd", GitTreeState:"clean", BuildDate:"2017-11-08T18:27:48Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

2.9.2 Create the admin Certificate

kubectl talks to the secure port of kube-apiserver, so a TLS certificate and key are needed for that connection.

cd /opt/ssl/

vi admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "system:masters",

"OU": "System"

}

]

}

# Generate the admin certificate and private key

cd /opt/ssl/

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/ssl/config.json \

-profile=kubernetes admin-csr.json | cfssljson -bare admin

# Check the generated files

[root@k8s-master ssl]# ls admin*

admin.csr admin-csr.json admin-key.pem admin.pem

cp admin*.pem /etc/kubernetes/ssl/

scp admin*.pem 192.168.90.6:/etc/kubernetes/ssl/

scp admin*.pem 192.168.90.7:/etc/kubernetes/ssl/

2.9.3 Configure the kubectl kubeconfig File

Set server to the local machine's IP, so that each host connects to its own API server.

# Configure the kubernetes cluster

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.100.71:6443

# Configure client authentication

kubectl config set-credentials admin \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--embed-certs=true \

--client-key=/etc/kubernetes/ssl/admin-key.pem

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=admin

kubectl config use-context kubernetes

2.9.4 kubectl config File

# The kubeconfig file is located at:

/root/.kube

2.10 Deploy the Kubernetes Master Node

2.10.1 Deploy the Master Part of the Master Node

The Master needs three components: kube-apiserver, kube-scheduler, and kube-controller-manager. kube-scheduler decides which node each pod is assigned to; in short, it handles resource scheduling. kube-controller-manager runs the control loops for the deployment controller, replication controller, endpoints controller, namespace controller, serviceaccounts controller, and others, and interacts with kube-apiserver.

2.10.2 Install the Master Node Components

# Download the release binaries

cd /tmp

wget https://dl.k8s.io/v1.8.3/kubernetes-server-linux-amd64.tar.gz

tar -xzvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes

cp -r server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kube-proxy,kubelet} /usr/local/bin/

2.10.3 Create the kubernetes Certificate

cd /opt/ssl

vi kubernetes-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.100.71",

"10.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "k8s",

"OU": "System"

}

]

}

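# The hosts field contains three IPs: 127.0.0.1 is the local host; 192.168.100.71 is the Master's IP (list every Master when there is more than one); 10.254.0.1 is the IP of the kubernetes Service, normally the first IP of the service network. Once the cluster is up, it can be seen with kubectl get svc.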
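As a worked example of "the first IP of the service network", the sketch below derives 10.254.0.1 from the service range 10.254.0.0/16 used in this manual. The first_ip helper is hypothetical and handles IPv4 only.

```shell
# Hypothetical helper: print the first address after the network address of an IPv4 CIDR.
first_ip() (
    base="${1%/*}"          # network part, e.g. 10.254.0.0
    bits="${1#*/}"          # prefix length, e.g. 16
    IFS=.                   # split the dotted quad on "." (subshell, so IFS does not leak)
    set -- $base
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
    first=$(( ($addr & $mask) + 1 ))
    echo "$(( ($first >> 24) & 255 )).$(( ($first >> 16) & 255 )).$(( ($first >> 8) & 255 )).$(( $first & 255 ))"
)

first_ip 10.254.0.0/16   # prints 10.254.0.1
```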

2.10.4 Generate the kubernetes Certificate and Private Key

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/ssl/config.json \

-profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

# Check the generated files

[root@k8s-master-25 ssl]# ls -l kubernetes*

kubernetes.csr

kubernetes-key.pem

kubernetes.pem

kubernetes-csr.json

# Copy to the certificate directory

cp -r kubernetes*.pem /etc/kubernetes/ssl/

scp -r kubernetes*.pem 192.168.90.6:/etc/kubernetes/ssl/

scp -r kubernetes*.pem 192.168.90.7:/etc/kubernetes/ssl/

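2.10.5 Configure kube-apiserver

On first start, kubelet sends a TLS bootstrapping request to kube-apiserver. kube-apiserver checks whether the token in the request matches the token it is configured with; if it matches, a certificate and key are issued for the kubelet once its signing request is approved (see the TLS approval step later in this section).

# Generate the token

[root@k8s-master ssl]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

d59a702004f33c659640bf8dd2717b64 (record this value)

# Create the token.csv file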

cd /opt/ssl

vi token.csv

d59a702004f33c659640bf8dd2717b64,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

# Copy

cp token.csv /etc/kubernetes/

scp token.csv 192.168.90.6:/etc/kubernetes/

scp token.csv 192.168.90.7:/etc/kubernetes/
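Generating the token and writing token.csv can be combined so the value never has to be copied by hand. This is a minimal sketch: the BOOTSTRAP_TOKEN and OUT_DIR variables are assumptions introduced here, and OUT_DIR defaults to a scratch directory instead of /opt/ssl.

```shell
# Generate a 128-bit random token as 32 hex characters (same pipeline as above,
# with newlines stripped as well as spaces).
BOOTSTRAP_TOKEN="$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')"

# Hypothetical output directory; the manual itself writes /opt/ssl/token.csv.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# Columns: token, user name, user id, group.
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$BOOTSTRAP_TOKEN" > "$OUT_DIR/token.csv"
cat "$OUT_DIR/token.csv"
```

The same value must later be passed to --token when the bootstrap.kubeconfig file is created, so keeping it in a variable avoids mismatches.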

2.10.5.1. Create the kube-apiserver.service File

# New in 1.8: the Node authorizer, --authorization-mode=Node,RBAC

# Custom systemd service files usually live under /etc/systemd/system/

# Set the addresses to each machine's own local IP

vi /etc/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

User=root

ExecStart=/usr/local/bin/kube-apiserver \

--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--advertise-address=192.168.100.71 \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/lib/audit.log \

--authorization-mode=Node,RBAC \

--bind-address=192.168.100.71 \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--enable-swagger-ui=true \

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/etcd.pem \

--etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem \

--etcd-servers=https://192.168.100.71:2379,https://192.168.90.6:2379,https://192.168.90.7:2379 \

--event-ttl=1h \

--kubelet-https=true \

--insecure-bind-address=192.168.100.71 \

--runtime-config=rbac.authorization.k8s.io/v1alpha1 \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-cluster-ip-range=10.254.0.0/16 \

--service-node-port-range=30000-32000 \

--tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \

--enable-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/token.csv \

--v=2

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

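# Pay attention to --service-node-port-range=30000-32000

# This is the port range used when mapping external (NodePort) ports: randomly assigned ports come from this range, and explicitly requested ports must also fall inside it.

2.10.5.2. Start kube-apiserver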
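To illustrate the range rule, the sketch below checks whether a requested port falls inside 30000-32000. The in_node_port_range helper is hypothetical; kube-apiserver performs its own validation, so this only mirrors the rule for a quick check before writing a Service manifest.

```shell
# Hypothetical helper: succeed if port $1 lies inside range $2 (default 30000-32000).
in_node_port_range() {
    port="$1"; range="${2:-30000-32000}"
    lo="${range%-*}"; hi="${range#*-}"
    [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}

for p in 30080 32500; do
    if in_node_port_range "$p"; then
        echo "$p: allowed"
    else
        echo "$p: outside --service-node-port-range"
    fi
done
```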

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl start kube-apiserver

systemctl status kube-apiserver

2.10.6 Configure kube-controller-manager

Set --master to each machine's own local IP.

2.10.6.1. Create the kube-controller-manager.service File

vi /etc/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--address=127.0.0.1 \

--master=http://192.168.100.71:8080 \

--allocate-node-cidrs=true \

--service-cluster-ip-range=10.254.0.0/16 \

--cluster-cidr=10.233.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--leader-elect=true \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

2.10.6.2. Start kube-controller-manager

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl start kube-controller-manager

systemctl status kube-controller-manager

2.10.7 Configure kube-scheduler

Set --master to each machine's own local IP.

2.10.7.1. Create the kube-scheduler.service File

vi /etc/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--address=127.0.0.1 \

--master=http://192.168.100.71:8080 \

--leader-elect=true \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

2.10.7.2. Start kube-scheduler

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl start kube-scheduler

systemctl status kube-scheduler

2.10.8 Verify the Master Node

[root@k8s-master ~]# kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

etcd-2 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

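2.10.9 Deploy the Node Part of the Master Node

The Node part needs these components: docker, calico, kubectl, kubelet, and kube-proxy.

2.10.10 Configure kubelet

When kubelet starts, it sends a TLS bootstrapping request to kube-apiserver. The kubelet-bootstrap user from the bootstrap token file must first be bound to the system:node-bootstrapper role; only then is kubelet permitted to create certificate signing requests (certificatesigningrequests).

2.10.10.1. Authorize Certificate Signing Requests First

# user is the user configured in token.csv on the master

# This only needs to be done once

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

2.10.10.2. Create the kubelet kubeconfig File

Set server to the master's own IP.

# Configure the cluster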

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.100.71:6443 \

--kubeconfig=bootstrap.kubeconfig

# Configure client authentication

kubectl config set-credentials kubelet-bootstrap \

--token=d59a702004f33c659640bf8dd2717b64 \

--kubeconfig=bootstrap.kubeconfig

# Configure the context

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# Move the generated bootstrap.kubeconfig file into place

mv bootstrap.kubeconfig /etc/kubernetes/

2.10.10.3. Create the kubelet.service File

# Create the kubelet directory

# Set the addresses to the node's own IP

mkdir /var/lib/kubelet

vi /etc/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/local/bin/kubelet \

--address=192.168.100.71 \

--hostname-override=192.168.100.71 \

--pod-infra-container-image=jicki/pause-amd64:3.0 \

--experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--cert-dir=/etc/kubernetes/ssl \

--cluster_dns=10.254.0.2 \

--cluster_domain=doone.com. \

--hairpin-mode promiscuous-bridge \

--allow-privileged=true \

--fail-swap-on=false \

--serialize-image-pulls=false \

--logtostderr=true \

--max-pods=512 \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

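# Notes on the configuration above:

192.168.100.71 is the local machine's IP

10.254.0.2 is the pre-allocated DNS address

doone.com. is the Kubernetes cluster domain set by --cluster_domain above (many deployments use cluster.local. instead)

jicki/pause-amd64:3.0 is the pod infrastructure image, i.e. gcr.io/google_containers/pause-amd64:3.0 from gcr; pulling it and pushing it to your own registry makes downloads faster.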

2.10.10.4. Start kubelet

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet

systemctl status kubelet

# If an error occurs, use the following to locate the problem:

journalctl -f -t kubelet or journalctl -u kubelet

2.10.10.5. Approve the TLS Certificate Request

# List the CSR names

[root@k8s-master]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-pf-Bb5Iqx6ccvVA67gLVT-G4Zl3Zl5FPUZS4d7V6rk4 1h kubelet-bootstrap Pending

# Approve the request

[root@k8s-master]# kubectl certificate approve node-csr-pf-Bb5Iqx6ccvVA67gLVT-G4Zl3Zl5FPUZS4d7V6rk4

certificatesigningrequest "node-csr-pf-Bb5Iqx6ccvVA67gLVT-G4Zl3Zl5FPUZS4d7V6rk4" approved

[root@k8s-master]#

2.10.10.6. Verify the Nodes

[root@k8s-master]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.100.71 Ready <none> 22s v1.8.3

# On success, the configuration file and keys are generated automatically

# Configuration file

ls /etc/kubernetes/kubelet.kubeconfig

/etc/kubernetes/kubelet.kubeconfig

# Key files

ls /etc/kubernetes/ssl/kubelet*

/etc/kubernetes/ssl/kubelet-client.crt /etc/kubernetes/ssl/kubelet.crt

/etc/kubernetes/ssl/kubelet-client.key /etc/kubernetes/ssl/kubelet.key

2.10.11 Configure kube-proxy

2.10.11.1. Create the kube-proxy Certificate

# cfssl is not installed on the nodes,

# so create the certificate on the master and copy it over

[root@k8s-master ~]# cd /opt/ssl

vi kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "k8s",

"OU": "System"

}

]

}

2.10.11.2. Generate the kube-proxy Certificate and Private Key

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/ssl/config.json \

-profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# Check the generated files

ls kube-proxy*

kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem

# Copy to the certificate directory

cp kube-proxy*.pem /etc/kubernetes/ssl/

scp kube-proxy*.pem 192.168.90.6:/etc/kubernetes/ssl/

scp kube-proxy*.pem 192.168.90.7:/etc/kubernetes/ssl/

2.10.11.3. Create the kube-proxy kubeconfig File

Set server to each machine's own IP.

# Configure the cluster

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.100.71:6443 \

--kubeconfig=kube-proxy.kubeconfig

# Configure client authentication

kubectl config set-credentials kube-proxy \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

# Configure the context

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Move into the configuration directory

mv kube-proxy.kubeconfig /etc/kubernetes/

2.10.11.4. Create the kube-proxy.service File

Set the addresses to each machine's own IP.

# Create the kube-proxy directory

mkdir -p /var/lib/kube-proxy

vi /etc/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \

--bind-address=192.168.100.71 \

--hostname-override=192.168.100.71 \

--cluster-cidr=10.233.0.0/16 \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \

--logtostderr=true \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

2.10.11.5. Start kube-proxy

systemctl daemon-reload

systemctl enable kube-proxy

systemctl start kube-proxy

systemctl status kube-proxy

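# If an error occurs, use the following to locate the problem:

journalctl -f -t kube-proxy or journalctl -u kube-proxy

2.11 Deploy the Kubernetes Node Machines

The Node machines (192.168.90.6 and 192.168.90.7) use Nginx to load-balance the API servers, which provides Master HA.

# Apart from the API server, the master components elect a leader through etcd; the API server needs no special handling by default. An nginx instance runs on every node and reverse-proxies all API servers. kubelet and kube-proxy on the node connect to the local nginx proxy port. When nginx finds it cannot reach a backend, it automatically drops the failed API server, which gives the API server HA.

2.11.1 Install the Node Components

# Download the release binaries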

cd /tmp

wget https://dl.k8s.io/v1.8.3/kubernetes-server-linux-amd64.tar.gz

tar -xzvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes

cp -r server/bin/{kube-proxy,kubelet} /usr/local/bin/

# On all nodes

mkdir -p /etc/kubernetes/ssl/

scp ca.pem kube-proxy.pem kube-proxy-key.pem 192.168.90.6:/etc/kubernetes/ssl/

scp ca.pem kube-proxy.pem kube-proxy-key.pem 192.168.90.7:/etc/kubernetes/ssl/

2.11.2 Create the kubelet kubeconfig File

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=bootstrap.kubeconfig

# Configure client authentication

kubectl config set-credentials kubelet-bootstrap \

--token=d59a702004f33c659640bf8dd2717b64 \

--kubeconfig=bootstrap.kubeconfig

# Configure the context

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# Move the generated bootstrap.kubeconfig file into place

mv bootstrap.kubeconfig /etc/kubernetes/

2.11.3 Create the kubelet.service File

See the Master node section.

2.11.4 Start kubelet

See the Master node section.

2.11.5 Create the kube-proxy kubeconfig File

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-proxy.kubeconfig

# Configure client authentication

kubectl config set-credentials kube-proxy \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

# Configure the context

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

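# Set the default context

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Move into the configuration directory

mv kube-proxy.kubeconfig /etc/kubernetes/

2.11.6 Create the kube-proxy.service File

See the Master node section.

2.11.7 Start kube-proxy

See the Master node section.

2.12 Create the Nginx Proxy

An Nginx proxy must be created on every node. Note in particular that a Master that also acts as a Node does not need the Nginx proxy.

# Create the configuration directory

mkdir -p /etc/nginx

# Write the proxy configuration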

cat << EOF > /etc/nginx/nginx.conf

error_log stderr notice;

worker_processes auto;

events {

multi_accept on;

use epoll;

worker_connections 1024;

}

stream {

upstream kube_apiserver {

least_conn;

server 192.168.100.71:6443;

}

server {

listen 0.0.0.0:6443;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

EOF

# Run Nginx as a docker container and let systemd start it

cat << EOF > /etc/systemd/system/nginx-proxy.service

[Unit]

Description=kubernetes apiserver docker wrapper

Wants=docker.socket

After=docker.service

[Service]

User=root

PermissionsStartOnly=true

ExecStart=/usr/bin/docker run -p 127.0.0.1:6443:6443 \\

-v /etc/nginx:/etc/nginx \\

--name nginx-proxy \\

--net=host \\

--restart=on-failure:5 \\

--memory=512M \\

nginx:1.13.5-alpine

ExecStartPre=-/usr/bin/docker rm -f nginx-proxy

ExecStop=/usr/bin/docker stop nginx-proxy

Restart=always

RestartSec=15s

TimeoutStartSec=30s

[Install]

WantedBy=multi-user.target

EOF

# Start Nginx

systemctl daemon-reload

systemctl start nginx-proxy

systemctl enable nginx-proxy

systemctl status nginx-proxy

# Restart kubelet and kube-proxy on the Node

systemctl restart kubelet

systemctl status kubelet

systemctl restart kube-proxy

systemctl status kube-proxy

2.13 Approve the TLS Certificate Requests on the Master

# List the CSR names

[root@k8s-master]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-pf-Bb5Iqx6ccvVA67gLVT-G4Zl3Zl5FPUZS4d7V6rk4 1h kubelet-bootstrap Pending

# Approve the request

[root@k8s-master]# kubectl certificate approve NAME
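When several nodes join at once, the names of the pending requests can be extracted from the kubectl get csr output instead of being copied one at a time. This is a sketch only: pending_csrs is a hypothetical helper, and it assumes the column layout shown above, with CONDITION as the last column.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and
# print the names of requests whose last column is "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Demonstration with the sample output shown in this manual.
pending_csrs <<'EOF'
NAME AGE REQUESTOR CONDITION
node-csr-pf-Bb5Iqx6ccvVA67gLVT-G4Zl3Zl5FPUZS4d7V6rk4 1h kubelet-bootstrap Pending
EOF

# On a live cluster the filter could feed the approve command, for example:
#   kubectl get csr | pending_csrs | xargs kubectl certificate approve
```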

2.14 Deploy the Calico Network

2.14.1 Modify kubelet.service

On every node:

vi /etc/systemd/system/kubelet.service

# Add the following option

--network-plugin=cni \

# Reload the configuration

systemctl daemon-reload

systemctl restart kubelet.service

systemctl status kubelet.service

2.14.2 Get the Calico Configuration

Calico is still deployed in a "hybrid" way: systemd controls calico node, while cni and the rest are installed by a Kubernetes DaemonSet.

# Get the Calico controller manifest

$ export CALICO_CONF_URL="https://kairen.github.io/files/manual-v1.8/network"

$ wget "${CALICO_CONF_URL}/calico-controller.yml.conf" -O calico-controller.yml

$ kubectl apply -f calico-controller.yml

$ kubectl -n kube-system get po -l k8s-app=calico-policy

NAME READY STATUS RESTARTS AGE

calico-policy-controller-5ff8b4549d-tctmm 0/1 Pending 0 5s

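Note: the etcd cluster IP addresses inside the YAML file must be changed to match this cluster before the file is applied.

2.14.3 Download Calico on All Nodes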

$ export CALICO_URL="https://github.com/projectcalico/cni-plugin/releases/download/v1.11.0"

$ wget -N -P /opt/cni/bin ${CALICO_URL}/calico

$ wget -N -P /opt/cni/bin ${CALICO_URL}/calico-ipam

$ chmod +x /opt/cni/bin/calico /opt/cni/bin/calico-ipam

2.14.4 Download the CNI Plugin Configuration File on All Nodes

$ mkdir -p /etc/cni/net.d

$ export CALICO_CONF_URL="https://kairen.github.io/files/manual-v1.8/network"

$ wget "${CALICO_CONF_URL}/10-calico.conf" -O /etc/cni/net.d/10-calico.conf

vi /etc/cni/net.d/10-calico.conf

{

"name": "calico-k8s-network",

"cniVersion": "0.1.0",

"type": "calico",

"etcd_endpoints": "https://192.168.100.71:2379,https://192.168.90.6:2379,https://192.168.90.7:2379",

"etcd_ca_cert_file": "/etc/kubernetes/ssl/ca.pem",

"etcd_cert_file": "/etc/kubernetes/ssl/etcd.pem",

"etcd_key_file": "/etc/kubernetes/ssl/etcd-key.pem",

"log_level": "info",

"ipam": {

"type": "calico-ipam"

},

"policy": {

"type": "k8s"

},

"kubernetes": {

"kubeconfig": "/etc/kubernetes/kubelet.kubeconfig"

}

}

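2.14.5 Create the calico-node.service File

The previous step commented out the Calico Node section of calico.yaml. To avoid problems with automatic IP detection, Calico Node is moved to systemd. The systemd service configuration is shown below; the calico-node service must be installed on every node, with the IP adjusted for each node.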

cat > /usr/lib/systemd/system/calico-node.service <<EOF

[Unit]

Description=calico node

After=docker.service

Requires=docker.service

[Service]

User=root

PermissionsStartOnly=true

ExecStart=/usr/bin/docker run --net=host --privileged --name=calico-node \

-e ETCD_ENDPOINTS=https://192.168.100.71:2379,https://192.168.90.6:2379,https://192.168.90.7:2379 \

-e ETCD_CA_CERT_FILE=/etc/kubernetes/ssl/ca.pem \

-e ETCD_CERT_FILE=/etc/kubernetes/ssl/etcd.pem \

-e ETCD_KEY_FILE=/etc/kubernetes/ssl/etcd-key.pem \

-e NODENAME=${HOSTNAME} \

-e IP= \

-e NO_DEFAULT_POOLS= \

-e AS= \

-e CALICO_LIBNETWORK_ENABLED=true \

-e IP6= \

-e CALICO_NETWORKING_BACKEND=bird \

-e FELIX_DEFAULTENDPOINTTOHOSTACTION=ACCEPT \

-e FELIX_HEALTHENABLED=true \

-e CALICO_IPV4POOL_CIDR=10.233.0.0/16 \

-e CALICO_IPV4POOL_IPIP=always \

-e IP_AUTODETECTION_METHOD=interface=em1 \

-e IP6_AUTODETECTION_METHOD=interface=em1 \

-v /etc/kubernetes/ssl:/etc/kubernetes/ssl \

-v /var/run/calico:/var/run/calico \

-v /lib/modules:/lib/modules \

-v /run/docker/plugins:/run/docker/plugins \

-v /var/run/docker.sock:/var/run/docker.sock \

-v /var/log/calico:/var/log/calico \

jicki/node:v2.6.0

ExecStop=/usr/bin/docker rm -f calico-node

Restart=on-failure

RestartSec=10

[Install]

WantedBy=multi-user.target

EOF

2.14.6 Start Calico Node

Calico Node is started through systemd. After the systemd service is configured on each node, adjust the IP and node name in that node's calico-node.service, then start it.

systemctl daemon-reload

systemctl restart calico-node

systemctl restart kubelet

2.14.7 Install calicoctl

cd /usr/local/bin/

wget -c https://github.com/projectcalico/calicoctl/releases/download/v1.6.1/calicoctl

chmod +x calicoctl

## Create the calicoctl configuration file

# Configuration file, on the machines where the calico network is installed

mkdir /etc/calico

vi /etc/calico/calicoctl.cfg

apiVersion: v1

kind: calicoApiConfig

metadata:

spec:

  datastoreType: "etcdv2"

  etcdEndpoints: "https://192.168.100.71:2379,https://192.168.90.6:2379,https://192.168.90.7:2379"

  etcdKeyFile: "/etc/kubernetes/ssl/etcd-key.pem"

  etcdCertFile: "/etc/kubernetes/ssl/etcd.pem"

  etcdCACertFile: "/etc/kubernetes/ssl/ca.pem"

# Check the calico status

[root@k8s-master~]# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+------------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+-------------------+-------+------------+-------------+

| 192.168.90.6 | node-to-node mesh | up | 2017-11-24 | Established |

| 192.168.90.7 | node-to-node mesh | up | 2017-11-24 | Established |

+--------------+-------------------+-------+------------+-------------+

IPv6 BGP status

No IPv6 peers found.

2.15 Deploy KubeDNS

2.15.1 Download the kubeDNS Images

# Official images

gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

# Mirrored images for use inside China

jicki/k8s-dns-sidecar-amd64:1.14.7

jicki/k8s-dns-kube-dns-amd64:1.14.7

jicki/k8s-dns-dnsmasq-nanny-amd64:1.14.7

2.15.2 Download the YAML File

curl -O https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/kube-dns.yaml.base

# Rename the file to drop the .base suffix

mv kube-dns.yaml.base kube-dns.yaml

2.15.3 System-predefined ClusterRoleBinding

The predefined ClusterRoleBinding system:kube-dns binds the kube-dns ServiceAccount in the kube-system namespace to the system:kube-dns ClusterRole, which grants access to the DNS-related APIs of kube-apiserver:

[root@k8s-master kubedns]# kubectl get clusterrolebindings system:kube-dns -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: 2017-09-29T04:12:29Z
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-dns
  resourceVersion: "78"
  selfLink: /apis/rbac.authorization.k8s.io/v1/clusterrolebindings/system%3Akube-dns
  uid: 688927eb-a4cc-11e7-9f6b-44a8420b9988
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-dns
subjects:
- kind: ServiceAccount
  name: kube-dns
  namespace: kube-system

2.15.4 kube-dns yaml file template

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.254.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists

---

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      volumes:
      - name: kube-dns-config
        configMap:
          name: kube-dns
          optional: true
      containers:
      - name: kubedns
        image: jicki/k8s-dns-kube-dns-amd64:1.14.7
        resources:
          # TODO: Set memory limits when we've profiled the container for large
          # clusters, then set request = limit to keep this container in
          # guaranteed class. Currently, this container falls into the
          # "burstable" category so the kubelet doesn't backoff from restarting it.
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        livenessProbe:
          httpGet:
            path: /healthcheck/kubedns
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          # we poll on pod startup for the Kubernetes master service and
          # only setup the /readiness HTTP server once that's available.
          initialDelaySeconds: 3
          timeoutSeconds: 5
        args:
        - --domain=doone.com.
        - --dns-port=10053
        - --config-dir=/kube-dns-config
        - --v=2
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
        volumeMounts:
        - name: kube-dns-config
          mountPath: /kube-dns-config
      - name: dnsmasq
        image: jicki/k8s-dns-dnsmasq-nanny-amd64:1.14.7
        livenessProbe:
          httpGet:
            path: /healthcheck/dnsmasq
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - -v=2
        - -logtostderr
        - -configDir=/etc/k8s/dns/dnsmasq-nanny
        - -restartDnsmasq=true
        - --
        - -k
        - --cache-size=1000
        - --no-negcache
        - --log-facility=-
        - --server=/doone.com./127.0.0.1#10053
        - --server=/in-addr.arpa/127.0.0.1#10053
        - --server=/ip6.arpa/127.0.0.1#10053
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
        resources:
          requests:
            cpu: 150m
            memory: 20Mi
        volumeMounts:
        - name: kube-dns-config
          mountPath: /etc/k8s/dns/dnsmasq-nanny
      - name: sidecar
        image: jicki/k8s-dns-sidecar-amd64:1.14.7
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.doone.com.,5,SRV
        - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.doone.com.,5,SRV
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 20Mi
            cpu: 10m
      dnsPolicy: Default  # Don't use cluster DNS.
      serviceAccountName: kube-dns

2.15.5 Apply the yaml file

[root@k8s-master kubedns]# kubectl create -f kube-dns.yaml

service "kube-dns" created

serviceaccount "kube-dns" created

configmap "kube-dns" created

deployment "kube-dns" created

2.15.6 Check the kubedns service

[root@k8s-master kube-dns]# kubectl get all --namespace=kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/calico-policy-controller 1 1 1 1 6d

deploy/default-http-backend 1 1 1 1 2d

deploy/kube-dns 1 1 1 1 3d

deploy/kubernetes-dashboard 1 1 1 1 2d

deploy/nginx-ingress-controller 1 1 1 1 2d

NAME DESIRED CURRENT READY AGE

rs/calico-policy-controller-6dfdc6c556 1 1 1 6d

rs/default-http-backend-7f47b7d69b 1 1 1 2d

rs/kube-dns-fb8bf5848 1 1 1 3d

rs/kubernetes-dashboard-c8f5ff7f8 1 1 1 2d

rs/nginx-ingress-controller-5759c8464f 1 1 1 7h

rs/nginx-ingress-controller-6ccd8cfdb5 0 0 0 2d

rs/nginx-ingress-controller-745695d6cf 0 0 0 2d

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/calico-policy-controller 1 1 1 1 6d

deploy/default-http-backend 1 1 1 1 2d

deploy/kube-dns 1 1 1 1 3d

deploy/kubernetes-dashboard 1 1 1 1 2d

deploy/nginx-ingress-controller 1 1 1 1 2d

NAME DESIRED CURRENT READY AGE

rs/calico-policy-controller-6dfdc6c556 1 1 1 6d

rs/default-http-backend-7f47b7d69b 1 1 1 2d

rs/kube-dns-fb8bf5848 1 1 1 3d

rs/kubernetes-dashboard-c8f5ff7f8 1 1 1 2d

rs/nginx-ingress-controller-5759c8464f 1 1 1 7h

rs/nginx-ingress-controller-6ccd8cfdb5 0 0 0 2d

rs/nginx-ingress-controller-745695d6cf 0 0 0 2d

NAME READY STATUS RESTARTS AGE

po/calico-policy-controller-6dfdc6c556-qp29z 1/1 Running 3 6d

po/default-http-backend-7f47b7d69b-fcwdw 1/1 Running 0 2d

po/kube-dns-fb8bf5848-jfzrs 3/3 Running 0 3d

po/kubernetes-dashboard-c8f5ff7f8-f9pfp 1/1 Running 0 2d

po/nginx-ingress-controller-5759c8464f-hhkkz 1/1 Running 0 7h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/default-http-backend ClusterIP 10.254.194.21 <none> 80/TCP 2d

svc/kube-dns ClusterIP 10.254.0.2 <none> 53/UDP,53/TCP 3d

svc/kubernetes-dashboard ClusterIP 10.254.4.173 <none> 80/TCP 2d
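
Once the kube-dns Pod is running, a Service is resolvable under a name of the form <service>.<namespace>.svc.<domain>, which is the pattern the sidecar probes in the template use (kubernetes.default.svc.doone.com.). The sketch below only builds such a name. svc_fqdn is a hypothetical helper, and the default domain doone.com is taken from the --domain argument in the template.

```shell
# Hypothetical helper: build the cluster DNS name of a Service.
# Arguments: service name, namespace (default: default), cluster domain (default: doone.com).
svc_fqdn() {
  local name=$1 namespace=${2:-default} domain=${3:-doone.com}
  # ${domain%.} drops a trailing dot so "doone.com." and "doone.com" behave the same.
  printf '%s.%s.svc.%s\n' "$name" "$namespace" "${domain%.}"
}

# Usage, assuming a running Pod whose image ships nslookup (an assumption):
#   kubectl exec <pod-name> -- nslookup "$(svc_fqdn kubernetes)"
```

If the lookup returns the ClusterIP of the kubernetes Service, cluster DNS is working for that Pod.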

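2.16 Deploy Ingress

Kubernetes currently offers only three ways to expose a service: LoadBalancer Service, NodePort Service, and Ingress. What is Ingress? Ingress exposes Kubernetes services through a load balancer such as Nginx or HAProxy.

2.16.1 Configure the scheduling node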

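# The ingress controller can be deployed in two ways:

# 1. Deployment: scheduled freely, with an adjustable number of replicas

# 2. DaemonSet: scheduled globally, one Pod on every node

# With a Deployment, the controller has to be constrained to specific nodes, so those nodes need a label

# Default state: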

[root@k8s-master dashboard]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.100.71 Ready <none> 9d v1.8.3

192.168.90.6 Ready <none> 9d v1.8.3

192.168.90.7 Ready <none> 9d v1.8.3

# Label node 192.168.100.71

kubectl label nodes 192.168.100.71 ingress=proxy

# After labeling

[root@k8s-master dashboard]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

192.168.100.71 Ready <none> 9d v1.8.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=proxy,kubernetes.io/hostname=192.168.100.71

192.168.90.6 Ready <none> 9d v1.8.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=192.168.90.6

192.168.90.7 Ready <none> 9d v1.8.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=192.168.90.7
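
The label can be confirmed directly with `kubectl get nodes -l ingress=proxy`. For saved output like the listing above, the following minimal sketch does the same filtering. nodes_with_label is a hypothetical helper, and it assumes LABELS is the last column, as it is with --show-labels.

```shell
# Hypothetical helper: print the names of nodes that carry an exact label.
# Reads the output of `kubectl get nodes --show-labels` on stdin.
nodes_with_label() {
  local label=$1
  awk -v want="$label" '
    NR > 1 {                            # skip the header row
      n = split($NF, labels, ",")       # LABELS is the last column
      for (i = 1; i <= n; i++)
        if (labels[i] == want) { print $1; break }
    }
  '
}

# Usage:
#   kubectl get nodes --show-labels | nodes_with_label ingress=proxy
```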

2.16.2 Download the Ingress images

# Official images

gcr.io/google_containers/defaultbackend:1.0

gcr.io/google_containers/nginx-ingress-controller:0.9.0-beta.17

# Mirror images (reachable from mainland China)

jicki/defaultbackend:1.0

jicki/nginx-ingress-controller:0.9.0-beta.17

2.16.3 Download the yaml files

# Nginx default backend: serves a fixed page for requests whose host name matches no Ingress rule

curl -O https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/default-backend.yaml

# Ingress RBAC authorization

curl -O https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/rbac.yaml

# Ingress Controller component

curl -O https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/with-rbac.yaml

2.16.4 Ingress yaml file templates

#default-backend.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app: default-http-backend
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        image: jicki/defaultbackend:1.4
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: kube-system
  labels:
    app: default-http-backend
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: default-http-backend

#rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "extensions"
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - "extensions"
  resources:
  - ingresses/status
  verbs:
  - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: kube-system
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  resourceNames:
  # Defaults to "<election-id>-<ingress-class>"
  # Here: "<ingress-controller-leader>-<nginx>"
  # This has to be adapted if you change either parameter
  # when launching the nginx-ingress-controller.
  - "ingress-controller-leader-nginx"
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - endpoints
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount
  namespace: kube-system

#with-rbac.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ingress-nginx
  template:
    metadata:
      labels:
        app: ingress-nginx
      annotations:
        prometheus.io/port: '10254'
        prometheus.io/scrape: 'true'
    spec:
      hostNetwork: true
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        ingress: proxy
      containers:
      - name: nginx-ingress-controller
        image: jicki/nginx-ingress-controller:0.9.0-beta.17
        args:
        - /nginx-ingress-controller
        - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
        - --apiserver-host=http://192.168.100.71:8080
        # - --configmap=$(POD_NAMESPACE)/nginx-configuration
        # - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
        # - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: KUBERNETES_MASTER
          value: http://192.168.100.71:8080
        ports:
        - name: http
          containerPort: 80
        - name: https
          containerPort: 443
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1

2.16.5 Apply the yaml files

[root@k8s-master ingress]# kubectl apply -f default-backend.yaml

deployment "default-http-backend" created

service "default-http-backend" created

[root@k8s-master ingress]# kubectl apply -f rbac.yaml

namespace "nginx-ingress" created

serviceaccount "nginx-ingress-serviceaccount" created

clusterrole "nginx-ingress-clusterrole" created

role "nginx-ingress-role" created

rolebinding "nginx-ingress-role-nisa-binding" created

clusterrolebinding "nginx-ingress-clusterrole-nisa-binding" created

[root@k8s-master ingress]# kubectl apply -f with-rbac.yaml

deployment "nginx-ingress-controller" created

2.16.6 Check the ingress service

[root@k8s-master ingress]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default-http-backend ClusterIP 10.254.194.21 <none> 80/TCP 2d

kube-dns ClusterIP 10.254.0.2 <none> 53/UDP,53/TCP 3d

kubernetes-dashboard ClusterIP 10.254.4.173 <none> 80/TCP 2d

[root@k8s-master ingress]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-policy-controller-6dfdc6c556-qp29z 1/1 Running 3 6d

default-http-backend-7f47b7d69b-fcwdw 1/1 Running 0 2d

kube-dns-fb8bf5848-jfzrs 3/3 Running 0 3d

kubernetes-dashboard-c8f5ff7f8-f9pfp 1/1 Running 0 2d

nginx-ingress-controller-5759c8464f-hhkkz 1/1 Running 0 7h
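
With the controller running, a Service is exposed by creating an Ingress rule that maps a host name to it. The sketch below prints such a rule using the extensions/v1beta1 API, the same API group the RBAC rules above grant access to. make_ingress is a hypothetical helper, and the host and service names in the usage line are placeholders, not values from this setup.

```shell
# Hypothetical helper: print a minimal Ingress manifest on stdout.
# Arguments: rule name, host name, backend service, service port, namespace (default: default).
make_ingress() {
  local name=$1 host=$2 service=$3 port=$4 namespace=${5:-default}
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  rules:
  - host: ${host}
    http:
      paths:
      - backend:
          serviceName: ${service}
          servicePort: ${port}
EOF
}

# Usage (placeholder host and service names):
#   make_ingress demo demo.example.com demo-service 80 | kubectl apply -f -
```

Because the controller uses hostNetwork and is pinned to the node labeled ingress=proxy, the host name would have to resolve to 192.168.100.71 for requests to reach it.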

2.17 Deploy Dashboard

2.17.1 Download the dashboard image

# Official image

gcr.io/google_containers/kubernetes-dashboard-amd64:v1.6.3

# Mirror image (reachable from mainland China)

jicki/kubernetes-dashboard-amd64:v1.6.3

2.17.2 Download the yaml files

curl -O https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dashboard/dashboard-controller.yaml

curl -O https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dashboard/dashboard-service.yaml

# RBAC is enabled, so an RBAC authorization must be created for the dashboard

vi dashboard-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: dashboard
subjects:
- kind: ServiceAccount
  name: dashboard
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

2.17.3 Dashboard yaml file templates

#dashboard-controller.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: dashboard
      containers:
      - name: kubernetes-dashboard
        image: jicki/kubernetes-dashboard-amd64:v1.6.3
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 100m
            memory: 100Mi
        ports:
        - containerPort: 9090
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

#dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

2.17.4 Apply the yaml files

[root@k8s-master dashboard]# kubectl apply -f .

deployment "kubernetes-dashboard" created

serviceaccount "dashboard" created

clusterrolebinding "dashboard" created

service "kubernetes-dashboard" created

2.17.5 Check the Dashboard service

[root@k8s-master dashboard]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default-http-backend ClusterIP 10.254.194.21 <none> 80/TCP 2d

kube-dns ClusterIP 10.254.0.2 <none> 53/UDP,53/TCP 3d

kubernetes-dashboard ClusterIP 10.254.4.173 <none> 80/TCP 2d

[root@k8s-master dashboard]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-policy-controller-6dfdc6c556-qp29z 1/1 Running 3 6d

default-http-backend-7f47b7d69b-fcwdw 1/1 Running 0 2d

kube-dns-fb8bf5848-jfzrs 3/3 Running 0 3d

kubernetes-dashboard-c8f5ff7f8-f9pfp 1/1 Running 0 2d

nginx-ingress-controller-5759c8464f-hhkkz 1/1 Running 0 7h
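
A Pod listing like the one above can be scanned for problems with a small filter. This is a minimal sketch under the assumption that the columns are NAME, READY, STATUS, RESTARTS, AGE, as shown. pods_not_ready is a hypothetical helper name.

```shell
# Hypothetical helper: print the names of Pods that are not fully ready.
# Reads the output of `kubectl get pods -n <namespace>` on stdin.
pods_not_ready() {
  awk '
    NR > 1 {                      # skip the header row
      split($2, r, "/")           # READY column looks like "3/3"
      if (r[1] != r[2] || $3 != "Running") print $1
    }
  '
}

# Usage:
#   kubectl get pods -n kube-system | pods_not_ready
```

Empty output means every listed Pod is Running with all of its containers ready. Pods that finished normally (status Completed) would also be printed by this simple check.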