Our company recently needed OpenCV to decode video through a specific decoder module, so I took the time to study how that works.

The official OpenCV documentation is here: https://docs.opencv.org/3.4.8/examples.html

How do you build OpenCV with FFmpeg? There are two ways to get FFmpeg into OpenCV: the static (built-in) way and the plugin way.

I. Static loading

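Reference article: "opencv+ffmpeg编译打包全解指南" (a complete guide to building and packaging OpenCV + FFmpeg)

That route is rather cumbersome. There is a simpler one, which I describe here.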

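Also note that OpenCV still depends on libavresample, but that library stopped being maintained long ago. For details see:

http://ffmpeg.org/pipermail/ffmpeg-devel/2012-April/123746.html

So we will not use that library here either.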

Let's get started.

Versions used: OpenCV 4.x, FFmpeg 5.x (the paths below point at an FFmpeg 5.0 build)

The configure command is:

cmake .. \
  -D CMAKE_BUILD_TYPE=Debug \
  -D PKG_CONFIG_PATH="/workspace/depends/simple-x86-omx-ffmpeg5.0/lib/pkgconfig" \
  -D CMAKE_INSTALL_PREFIX="/workspace/depends/opencv3.4-ffmpeg" \
  -D BUILD_TESTS=OFF \
  -D BUILD_PERF_TESTS=OFF \
  -D WITH_CUDA=OFF \
  -D WITH_VTK=OFF \
  -D WITH_MATLAB=OFF \
  -D BUILD_DOCS=ON \
  -D BUILD_opencv_python3=ON \
  -D BUILD_opencv_python2=ON \
  -D WITH_IPP=OFF \
  -D BUILD_SHARED_LIBS=ON \
  -D BUILD_opencv_apps=ON \
  -D WITH_OPENCL=OFF \
  -D BUILD_JAVA=OFF \
  -D BUILD_FAT_JAVA_LIB=OFF \
  -D WITH_PROTOBUF=OFF \
  -D WITH_QUIRC=OFF \
  -D WITH_FFMPEG=ON

During the build, the FFmpeg path passed through -D PKG_CONFIG_PATH above was not picked up. The fix is to export it by hand as an environment variable:

export PKG_CONFIG_PATH="/workspace/depends/simple-x86-omx-ffmpeg5.0/lib/pkgconfig"

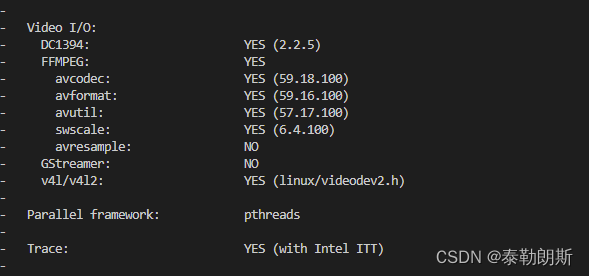

Run the build again, and this time FFmpeg is found:

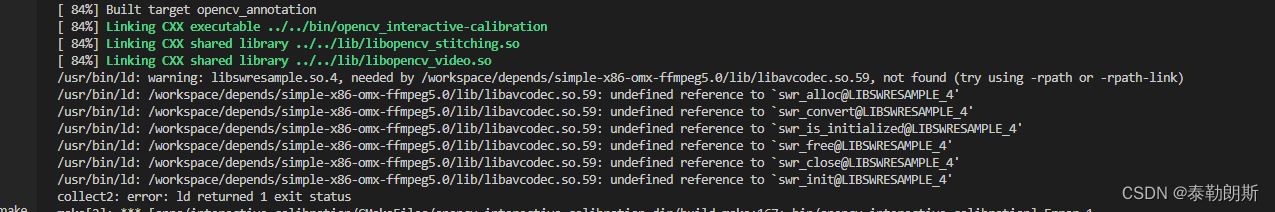

The compile step then fails with an error.

To get past it, run the following command:

export LD_LIBRARY_PATH=/workspace/depends/simple-x86-omx-ffmpeg5.0/lib

At last the build succeeds.

Finally, verify the result:

ldd libopencv_videoio.so

The build is good, and the example later in this post runs successfully as well.

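II. Dynamic loading (plugin), OpenCV 4.0 and later

1. Build OpenCV without FFmpeg

2. Build the opencv_ffmpeg plugin

In detail:

- In the downloaded OpenCV source tree, first create a mybuild directory

- Enter the mybuild directory and run: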

cmake .. \
  -D CMAKE_BUILD_TYPE=Debug \
  -D CMAKE_INSTALL_PREFIX="/workspace/depends/opencv4.0-ffmpeg-camrm" \
  -D BUILD_TESTS=OFF \
  -D BUILD_PERF_TESTS=OFF \
  -D WITH_CUDA=OFF \
  -D WITH_VTK=OFF \
  -D WITH_MATLAB=OFF \
  -D BUILD_DOCS=ON \
  -D BUILD_opencv_python3=ON \
  -D BUILD_opencv_python2=ON \
  -D WITH_IPP=OFF \
  -D BUILD_SHARED_LIBS=ON \
  -D BUILD_opencv_apps=ON \
  -D WITH_OPENCL=OFF \
  -D BUILD_JAVA=OFF \
  -D BUILD_FAT_JAVA_LIB=OFF \
  -D WITH_PROTOBUF=OFF \
  -D WITH_QUIRC=OFF \
  -D WITH_FFMPEG=OFF \
  -D OPENCV_GENERATE_PKGCONFIG=ON

- Go to the /opencv/modules/videoio/misc/plugin_ffmpeg directory. It contains a script for building the opencv_ffmpeg plugin on its own, build-standalone…sh

#!/bin/bash

set -e

# Before running, adjust three paths in each cmake command below:
#   PKG_CONFIG_PATH: FFmpeg's pkgconfig directory
#   OpenCV_DIR:      the mybuild directory from the OpenCV build above
#   last argument:   this plugin_ffmpeg source directory

mkdir -p build_shared && pushd build_shared

PKG_CONFIG_PATH=/workspace/depends/simple-x86-omx-ffmpeg5.0/lib/pkgconfig \
cmake -GNinja \
  -DOpenCV_DIR=/workspace/opencv3.4.0/mybuild \
  -DOPENCV_PLUGIN_NAME=opencv_videoio_ffmpeg_shared_$2 \
  -DOPENCV_PLUGIN_DESTINATION=$1 \
  -DCMAKE_BUILD_TYPE=$3 \
  /workspace/opencv3.4.0/modules/videoio/misc/plugin_ffmpeg

ninja

popd

mkdir -p build_static && pushd build_static

PKG_CONFIG_PATH=/workspace/depends/simple-x86-omx-ffmpeg5.0/lib/pkgconfig \
cmake -GNinja \
  -DOpenCV_DIR=/workspace/opencv3.4.0/mybuild \
  -DOPENCV_PLUGIN_NAME=opencv_videoio_ffmpeg_static_$2 \
  -DOPENCV_PLUGIN_DESTINATION=$1 \
  -DCMAKE_MODULE_LINKER_FLAGS=-Wl,-Bsymbolic \
  -DCMAKE_BUILD_TYPE=$3 \
  /workspace/opencv3.4.0/modules/videoio/misc/plugin_ffmpeg

ninja

popd

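- Run ./build-standalone.sh. It creates two directories under the current one, build_shared and build_static. Copy libopencv_videoio_ffmpeg_shared_.so from there into the lib directory of the OpenCV install prefix, and that is all.

Overall this approach is very simple, and a good deal easier than the method described on Zhihu.

So how do dynamic loading and static loading differ? Look at the following code from videoio_registry.cpp: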

#if OPENCV_HAVE_FILESYSTEM_SUPPORT && defined(ENABLE_PLUGINS)

#define DECLARE_DYNAMIC_BACKEND(cap, name, mode) \
{ \
    cap, (BackendMode)(mode), 1000, name, createPluginBackendFactory(cap, name) \
},

#else

#define DECLARE_DYNAMIC_BACKEND(cap, name, mode) /* nothing */

#endif

#define DECLARE_STATIC_BACKEND(cap, name, mode, createCaptureFile, createCaptureCamera, createWriter) \
{ \
    cap, (BackendMode)(mode), 1000, name, createBackendFactory(createCaptureFile, createCaptureCamera, createWriter) \
},

/** Ordering guidelines:

- modern optimized, multi-platform libraries: ffmpeg, gstreamer, Media SDK

- platform specific universal SDK: WINRT, AVFOUNDATION, MSMF/DSHOW, V4L/V4L2

- RGB-D: OpenNI/OpenNI2, REALSENSE, OBSENSOR

- special OpenCV (file-based): "images", "mjpeg"

- special camera SDKs, including stereo: other special SDKs: FIREWIRE/1394, XIMEA/ARAVIS/GIGANETIX/PVAPI(GigE)/uEye

- other: XINE, gphoto2, etc

*/

// builtin_backends is a global (file-scope) table

static const struct VideoBackendInfo builtin_backends[] =

{

#ifdef HAVE_FFMPEG

// Static loading: FFmpeg is compiled into OpenCV

DECLARE_STATIC_BACKEND(CAP_FFMPEG, "FFMPEG", MODE_CAPTURE_BY_FILENAME | MODE_WRITER, cvCreateFileCapture_FFMPEG_proxy, 0, cvCreateVideoWriter_FFMPEG_proxy)

#elif defined(ENABLE_PLUGINS) || defined(HAVE_FFMPEG_WRAPPER)

// Dynamic loading: FFmpeg is provided by a plugin

DECLARE_DYNAMIC_BACKEND(CAP_FFMPEG, "FFMPEG", MODE_CAPTURE_BY_FILENAME | MODE_WRITER)

#endif

...

}
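To make the compile-time switch easier to see, here is a minimal stand-alone sketch. It is not OpenCV code: `BackendEntry`, `kBackends`, `describe`, and the two-argument macros are simplified stand-ins invented for illustration. It assumes only what the excerpt above shows, namely that `HAVE_FFMPEG` selects the built-in entry and `ENABLE_PLUGINS` selects the plugin entry.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for VideoBackendInfo; only the fields needed here.
struct BackendEntry {
    int id;
    std::string name;
    bool viaPlugin;  // true: implementation comes from a plugin library at run time
};

// Build-time switches. Define HAVE_FFMPEG to mimic the static build;
// leave only ENABLE_PLUGINS defined to mimic the plugin build.
// #define HAVE_FFMPEG
#define ENABLE_PLUGINS

#define DECLARE_STATIC_BACKEND(id, name) { id, name, false },

#if defined(ENABLE_PLUGINS)
#define DECLARE_DYNAMIC_BACKEND(id, name) { id, name, true },
#else
#define DECLARE_DYNAMIC_BACKEND(id, name) /* nothing */
#endif

// The table is fixed when the library is compiled; only one branch survives.
static const std::vector<BackendEntry> kBackends = {
#ifdef HAVE_FFMPEG
    DECLARE_STATIC_BACKEND(1900, "FFMPEG")
#elif defined(ENABLE_PLUGINS)
    DECLARE_DYNAMIC_BACKEND(1900, "FFMPEG")
#endif
};

// Human-readable summary of how an entry is provided.
std::string describe(const BackendEntry& e) {
    return e.name + (e.viaPlugin ? " (plugin)" : " (built-in)");
}
```

With the switches as written, `kBackends` holds a single plugin-backed FFMPEG entry. Uncommenting `HAVE_FFMPEG` turns the same entry into a built-in one, which mirrors the difference between the two builds described above.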

2. Example

#include <cstdio>

#include <iostream>

#include "opencv2/core/utils/logger.hpp"

#include "opencv2/opencv.hpp"

using namespace cv;

#define CV_FOURCC(c1, c2, c3, c4) \

(((c1)&255) + (((c2)&255) << 8) + (((c3)&255) << 16) + (((c4)&255) << 24))

int main() {

cv::utils::logging::setLogLevel(utils::logging::LOG_LEVEL_VERBOSE);

VideoCapture capture;

Mat frame;

// char *infile = "/workspace/videos/codec-videos/zzsin_1920x1080_30fps_60s.mp4";

const char *infile = "/workspace/videos/codec-videos/image_all/ocr_1600-1600.mp4";

if (!capture.open(infile, CAP_FFMPEG)) {

printf("can not open infile %s ...\n", infile);

return -1;

}

cv::Size sWH = cv::Size(

(int)capture.get(CAP_PROP_FRAME_WIDTH), (int)capture.get(CAP_PROP_FRAME_HEIGHT));

const char *outfile = "./test.mp4";

VideoWriter outputVideo;

if (!outputVideo.open(outfile, CAP_FFMPEG, CV_FOURCC('a', 'v', 'c', '1'), 25.0, sWH)) {

printf("can not open outfile %s ...\n", outfile);

return -1;

}

while (capture.read(frame)) {

if (frame.empty())

break;

outputVideo << frame;

}

printf("end of stream!\n");

capture.release();

outputVideo.release();

return 0;

}
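The `CV_FOURCC` macro in the example packs four characters into one 32-bit integer, lowest byte first. The sketch below does the same arithmetic as a function and adds the inverse, which is handy when reading codes back from a log. The names `fourcc` and `fourccToString` are my own and are not part of OpenCV.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Pack four characters into a 32-bit code, first character in the lowest byte.
// Equivalent to the CV_FOURCC macro above (bitwise OR instead of +, since the
// four bytes never overlap).
constexpr std::uint32_t fourcc(char c1, char c2, char c3, char c4) {
    return (static_cast<std::uint32_t>(c1) & 255u)
         | ((static_cast<std::uint32_t>(c2) & 255u) << 8)
         | ((static_cast<std::uint32_t>(c3) & 255u) << 16)
         | ((static_cast<std::uint32_t>(c4) & 255u) << 24);
}

// Unpack a code back into its four characters.
inline std::string fourccToString(std::uint32_t code) {
    std::string s(4, '\0');
    for (int i = 0; i < 4; ++i)
        s[i] = static_cast<char>((code >> (8 * i)) & 255u);
    return s;
}
```

For the `'a', 'v', 'c', '1'` tag used in the example, the bytes are 0x61, 0x76, 0x63, 0x31, so the packed value is 0x31637661.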

The CMakeLists.txt file:

# Minimum required CMake version
cmake_minimum_required (VERSION 3.5)

# Project information
project (OPENCV4-Demo1)

set(EXECUTABLE_OUTPUT_PATH ${PROJECT_BINARY_DIR}/bin)
set(CMAKE_BUILD_TYPE "Debug")
set(CMAKE_CXX_FLAGS_DEBUG "$ENV{CXXFLAGS} -O0 -Wall -g -fpermissive")
set(CMAKE_CXX_FLAGS_RELEASE "$ENV{CXXFLAGS} -O3 -Wall -fpermissive")
set(root_path /workspace/depends)

# Dependencies
set(ENV{PKG_CONFIG_PATH} "/workspace/depends/opencv4.0-ffmpeg/lib/pkgconfig")
find_package(PkgConfig)
pkg_check_modules(OPENCV4 REQUIRED opencv4)
message(STATUS "=== OPENCV4_LIBRARIES: ${OPENCV4_LIBRARIES}")
message(STATUS "=== OPENCV4_INCLUDE_DIRS: ${OPENCV4_INCLUDE_DIRS}")
message(STATUS "=== OPENCV4_LIBRARY_DIRS: ${OPENCV4_LIBRARY_DIRS}")
include_directories(${OPENCV4_INCLUDE_DIRS})

# If OpenCV was built the static way, FFmpeg's lib directory is needed as well
link_directories(${OPENCV4_LIBRARY_DIRS} /path/to/ffmpeg/lib)

set(SOURCES main.cpp)

# Build target
add_executable(Demo ${SOURCES})
target_link_libraries(Demo ${OPENCV4_LIBRARIES})

Before running the program, set the following:

# Adjust this path to match your own setup

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/workspace/depends/simple-x86-omx-ffmpeg5.0/lib

# OPENCV_VIDEOIO_PLUGIN_PATH is the environment variable OpenCV reads to locate videoio plugins

export OPENCV_VIDEOIO_PLUGIN_PATH=/workspace/depends/opencv4.0-ffmpeg/lib

The resulting log shows that execution does reach the decoder inside FFmpeg.

Also, if you want to pick a specific FFmpeg codec, four files in OpenCV are worth reading closely:

- cap_ffmpeg_hw.hpp

- cap_ffmpeg_impl.hpp

- cap_ffmpeg.cpp

- ffmpeg_codecs.hpp

For the decoder, you can choose it through an environment variable, like this:

export OPENCV_FFMPEG_CAPTURE_OPTIONS="video_codec;h264"
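The value format is easier to remember once you see how it is split. In the `CvCapture_FFMPEG::open()` listing later in this post, the string is handed to `av_dict_parse_string(&dict, options, ";", "|", 0)`, so `;` separates a key from its value and `|` separates pairs. The sketch below imitates that split with the standard library only. `parseCaptureOptions` is a hypothetical helper of mine, and it ignores the escaping rules that the real FFmpeg function supports.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Split "key;value|key;value" into a map.
// Simplified: no escaping or quoting, entries without ';' are skipped.
std::map<std::string, std::string> parseCaptureOptions(const std::string& options) {
    std::map<std::string, std::string> result;
    std::size_t start = 0;
    while (start <= options.size()) {
        std::size_t end = options.find('|', start);
        if (end == std::string::npos)
            end = options.size();
        const std::string pair = options.substr(start, end - start);
        const std::size_t sep = pair.find(';');
        if (sep != std::string::npos && sep > 0)
            result[pair.substr(0, sep)] = pair.substr(sep + 1);
        start = end + 1;
    }
    return result;
}
```

Under these assumptions, `"video_codec;h264"` yields a single entry whose key is `video_codec`, which is the key the decoder lookup reads, and several options can be chained as `"video_codec;h264|rtsp_transport;tcp"`.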

For the encoder there is currently no good way, because the code only contains:

codec = avcodec_find_encoder(codec_id);

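So the only option is to build FFmpeg with just the encoder you need enabled. If you use hardware (hw) acceleration, however, it can still be configured through an environment variable: OPENCV_FFMPEG_WRITER_OPTIONS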

3. Walkthrough

videoio.hpp defines:

- VideoCapture

- VideoWriter

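These two classes are the reader and writer we need.

The constructors are shown below. Pay particular attention to apiPreference = CAP_ANY: this parameter selects which backend does the decoding.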

CV_WRAP VideoCapture();

CV_WRAP explicit VideoCapture(const String& filename, int apiPreference = CAP_ANY);

...

It is implemented in the file cap.cpp:

VideoCapture::VideoCapture(const String& filename, int apiPreference) : throwOnFail(false)

{

CV_TRACE_FUNCTION();

open(filename, apiPreference);

}

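As you can see, the constructor mostly just calls open(), so everything involved in opening the decoder happens there. Let's keep tracing into open() (the code is simplified):

// Start here, with apiPreference = CAP_ANY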

bool VideoCapture::open(int cameraNum, int apiPreference, const std::vector<int>& params)

...

const VideoCaptureParameters parameters(params);

// The capture backends (plugins) are looked up here

const std::vector<VideoBackendInfo> backends = cv::videoio_registry::getAvailableBackends_CaptureByIndex();

for (size_t i = 0; i < backends.size(); i++)

{

const VideoBackendInfo& info = backends[i];

if (apiPreference == CAP_ANY || apiPreference == info.id)

{

if (!info.backendFactory)

{

CV_LOG_DEBUG(NULL, "VIDEOIO(" << info.name << "): factory is not available (plugins require filesystem support)");

continue;

}

CV_Assert(!info.backendFactory.empty());

// Get the backend

const Ptr<IBackend> backend = info.backendFactory->getBackend();

icap = backend->createCapture(cameraNum, parameters);

// Succeed only if icap actually opened

if (icap->isOpened()) return true;

}

} // for

...

return false;

}
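Stripped of OpenCV types, the selection logic in open() is a first-match loop. The sketch below reproduces that shape with hypothetical names (`Backend`, `kAny`, `openWithPreference`). An empty `tryOpen` stands in for a missing `backendFactory`, and the callable stands in for `createCapture()` followed by `isOpened()`.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical stand-in for one registered backend.
struct Backend {
    int id;
    std::string name;
    std::function<bool(const std::string&)> tryOpen;  // empty: factory unavailable
};

constexpr int kAny = 0;  // plays the role of CAP_ANY

// Walk the backends in order and return the name of the first one that
// matches the preference and manages to open the source; "" if none does.
std::string openWithPreference(const std::vector<Backend>& backends,
                               const std::string& source,
                               int apiPreference) {
    for (const Backend& b : backends) {
        if (apiPreference != kAny && apiPreference != b.id)
            continue;  // caller asked for a different backend
        if (!b.tryOpen)
            continue;  // no factory, nothing to try
        if (b.tryOpen(source))
            return b.name;
    }
    return std::string();
}
```

Passing `kAny` tries every backend in list order, whereas passing a specific id (as the example does with `CAP_FFMPEG`) restricts the loop to that one backend and fails if it cannot open the source.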

// The params argument used above is defined as follows

class VideoParameters

{

public:

struct VideoParameter {

VideoParameter() = default;

VideoParameter(int key_, int value_) : key(key_), value(value_) {

}

// key and value are both of type int

int key{-1};

int value{-1};

mutable bool isConsumed{false};

};

// Constructor taking the flat parameter vector

explicit VideoParameters(const std::vector<int>& params)

{

const auto count = params.size();

if (count % 2 != 0)

{

CV_Error_(Error::StsVecLengthErr,

("Vector of VideoWriter parameters should have even length"));

}

params_.reserve(count / 2);

for (std::size_t i = 0; i < count; i += 2)

{

add(params[i], params[i + 1]);

}

}

void add(int key, int value)

{

params_.emplace_back(key, value);

}

private:

// Stored as key=value pairs, with both sides of type int

std::vector<VideoParameter> params_;

};

// The two classes below simply inherit from it

class VideoWriterParameters : public VideoParameters

{

public:

using VideoParameters::VideoParameters; // reuse constructors

};

class VideoCaptureParameters : public VideoParameters

{

public:

using VideoParameters::VideoParameters; // reuse constructors

};
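The constructor above turns a flat `{key, value, key, value, ...}` vector into pairs and rejects odd lengths. A stand-alone sketch of the same idea follows. `toKeyValuePairs` is a name I made up, and it throws `std::invalid_argument` where OpenCV raises `CV_Error_`.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <utility>
#include <vector>

// Convert {k0, v0, k1, v1, ...} into a list of (key, value) pairs.
// Throws if the vector has an odd number of elements.
std::vector<std::pair<int, int>> toKeyValuePairs(const std::vector<int>& params) {
    if (params.size() % 2 != 0)
        throw std::invalid_argument("parameter vector must have even length");
    std::vector<std::pair<int, int>> pairs;
    pairs.reserve(params.size() / 2);
    for (std::size_t i = 0; i < params.size(); i += 2)
        pairs.emplace_back(params[i], params[i + 1]);
    return pairs;
}
```

Because both sides are plain ints, only numeric properties can be passed this way. String-valued settings such as a decoder name have to go through the environment variables described earlier.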

Next, let's look at how the plugins get registered, in videoio_registry.cpp:

#if OPENCV_HAVE_FILESYSTEM_SUPPORT && defined(ENABLE_PLUGINS)

#define DECLARE_DYNAMIC_BACKEND(cap, name, mode) \
{ \
    cap, (BackendMode)(mode), 1000, name, createPluginBackendFactory(cap, name) \
},

#else

#define DECLARE_DYNAMIC_BACKEND(cap, name, mode) /* nothing */

#endif

#define DECLARE_STATIC_BACKEND(cap, name, mode, createCaptureFile, createCaptureCamera, createWriter) \
{ \
    cap, (BackendMode)(mode), 1000, name, createBackendFactory(createCaptureFile, createCaptureCamera, createWriter) \
},

/** Ordering guidelines:

- modern optimized, multi-platform libraries: ffmpeg, gstreamer, Media SDK

- platform specific universal SDK: WINRT, AVFOUNDATION, MSMF/DSHOW, V4L/V4L2

- RGB-D: OpenNI/OpenNI2, REALSENSE, OBSENSOR

- special OpenCV (file-based): "images", "mjpeg"

- special camera SDKs, including stereo: other special SDKs: FIREWIRE/1394, XIMEA/ARAVIS/GIGANETIX/PVAPI(GigE)/uEye

- other: XINE, gphoto2, etc

*/

// This is the global table where every backend is added

static const struct VideoBackendInfo builtin_backends[] =

{

#ifdef HAVE_FFMPEG

DECLARE_STATIC_BACKEND(CAP_FFMPEG, "FFMPEG", MODE_CAPTURE_BY_FILENAME | MODE_WRITER, cvCreateFileCapture_FFMPEG_proxy, 0, cvCreateVideoWriter_FFMPEG_proxy)

#elif defined(ENABLE_PLUGINS) || defined(HAVE_FFMPEG_WRAPPER)

DECLARE_DYNAMIC_BACKEND(CAP_FFMPEG, "FFMPEG", MODE_CAPTURE_BY_FILENAME | MODE_WRITER)

#endif

#ifdef HAVE_GSTREAMER

DECLARE_STATIC_BACKEND(CAP_GSTREAMER, "GSTREAMER", MODE_CAPTURE_ALL | MODE_WRITER, createGStreamerCapture_file, createGStreamerCapture_cam, create_GStreamer_writer)

#elif defined(ENABLE_PLUGINS)

DECLARE_DYNAMIC_BACKEND(CAP_GSTREAMER, "GSTREAMER", MODE_CAPTURE_ALL | MODE_WRITER)

#endif

...

}

DECLARE_DYNAMIC_BACKEND(CAP_FFMPEG, "FFMPEG", MODE_CAPTURE_BY_FILENAME | MODE_WRITER) expands to an initializer for a VideoBackendInfo struct.

The struct is defined as follows:

struct VideoBackendInfo {

VideoCaptureAPIs id;

BackendMode mode;

int priority; // 1000-<index*10> - default builtin priority

// 0 - disabled (OPENCV_VIDEOIO_PRIORITY_<name> = 0)

// >10000 - prioritized list (OPENCV_VIDEOIO_PRIORITY_LIST)

const char* name;

Ptr<IBackendFactory> backendFactory;

};

The expansion looks like this:

// cap = CAP_FFMPEG

// name = "FFMPEG"

// mode = MODE_CAPTURE_BY_FILENAME | MODE_WRITER

{

cap, (BackendMode)(mode), 1000, name, createPluginBackendFactory(cap, name)

},

cap is one of the values of the following enum:

enum VideoCaptureAPIs {

CAP_ANY = 0, //!< Auto detect == 0

CAP_VFW = 200, //!< Video For Windows (obsolete, removed)

CAP_V4L = 200, //!< V4L/V4L2 capturing support

CAP_V4L2 = CAP_V4L, //!< Same as CAP_V4L

CAP_FIREWIRE = 300, //!< IEEE 1394 drivers

CAP_FIREWARE = CAP_FIREWIRE, //!< Same value as CAP_FIREWIRE

CAP_IEEE1394 = CAP_FIREWIRE, //!< Same value as CAP_FIREWIRE

CAP_DC1394 = CAP_FIREWIRE, //!< Same value as CAP_FIREWIRE

CAP_CMU1394 = CAP_FIREWIRE, //!< Same value as CAP_FIREWIRE

CAP_QT = 500, //!< QuickTime (obsolete, removed)

CAP_UNICAP = 600, //!< Unicap drivers (obsolete, removed)

CAP_DSHOW = 700, //!< DirectShow (via videoInput)

CAP_PVAPI = 800, //!< PvAPI, Prosilica GigE SDK

CAP_OPENNI = 900, //!< OpenNI (for Kinect)

CAP_OPENNI_ASUS = 910, //!< OpenNI (for Asus Xtion)

CAP_ANDROID = 1000, //!< Android - not used

CAP_XIAPI = 1100, //!< XIMEA Camera API

CAP_AVFOUNDATION = 1200, //!< AVFoundation framework for iOS (OS X Lion will have the same API)

CAP_GIGANETIX = 1300, //!< Smartek Giganetix GigEVisionSDK

CAP_MSMF = 1400, //!< Microsoft Media Foundation (via videoInput)

CAP_WINRT = 1410, //!< Microsoft Windows Runtime using Media Foundation

CAP_INTELPERC = 1500, //!< RealSense (former Intel Perceptual Computing SDK)

CAP_REALSENSE = 1500, //!< Synonym for CAP_INTELPERC

CAP_OPENNI2 = 1600, //!< OpenNI2 (for Kinect)

CAP_OPENNI2_ASUS = 1610, //!< OpenNI2 (for Asus Xtion and Occipital Structure sensors)

CAP_OPENNI2_ASTRA= 1620, //!< OpenNI2 (for Orbbec Astra)

CAP_GPHOTO2 = 1700, //!< gPhoto2 connection

CAP_GSTREAMER = 1800, //!< GStreamer

CAP_FFMPEG = 1900, //!< Open and record video file or stream using the FFMPEG library

CAP_IMAGES = 2000, //!< OpenCV Image Sequence (e.g. img_%02d.jpg)

CAP_ARAVIS = 2100, //!< Aravis SDK

CAP_OPENCV_MJPEG = 2200, //!< Built-in OpenCV MotionJPEG codec

CAP_INTEL_MFX = 2300, //!< Intel MediaSDK

CAP_XINE = 2400, //!< XINE engine (Linux)

CAP_UEYE = 2500, //!< uEye Camera API

CAP_OBSENSOR = 2600, //!< For Orbbec 3D-Sensor device/module (Astra+, Femto)

};

Once the backends are registered, they are retrieved with the statement below. How does it find a usable plugin?

const std::vector<VideoBackendInfo> backends = cv::videoio_registry::getAvailableBackends_CaptureByIndex();

std::vector<VideoBackendInfo> getAvailableBackends_CaptureByIndex()

{

// This is a singleton

const std::vector<VideoBackendInfo> result = VideoBackendRegistry::getInstance().getAvailableBackends_CaptureByIndex();

return result;

}

// The registry

class VideoBackendRegistry

{

protected:

std::vector<VideoBackendInfo> enabledBackends;

VideoBackendRegistry()

{

const int N = sizeof(builtin_backends)/sizeof(builtin_backends[0]);

enabledBackends.assign(builtin_backends, builtin_backends + N);

for (int i = 0; i < N; i++)

{

VideoBackendInfo& info = enabledBackends[i];

info.priority = 1000 - i * 10;

}

CV_LOG_DEBUG(NULL, "VIDEOIO: Builtin backends(" << N << "): " << dumpBackends());

if (readPrioritySettings())

{

CV_LOG_INFO(NULL, "VIDEOIO: Updated backends priorities: " << dumpBackends());

}

int enabled = 0;

for (int i = 0; i < N; i++)

{

VideoBackendInfo& info = enabledBackends[enabled];

if (enabled != i)

info = enabledBackends[i];

size_t param_priority = utils::getConfigurationParameterSizeT(cv::format("OPENCV_VIDEOIO_PRIORITY_%s", info.name).c_str(), (size_t)info.priority);

CV_Assert(param_priority == (size_t)(int)param_priority); // overflow check

if (param_priority > 0)

{

info.priority = (int)param_priority;

enabled++;

}

else

{

CV_LOG_INFO(NULL, "VIDEOIO: Disable backend: " << info.name);

}

}

enabledBackends.resize(enabled);

CV_LOG_DEBUG(NULL, "VIDEOIO: Available backends(" << enabled << "): " << dumpBackends());

// Re-sort by priority

std::sort(enabledBackends.begin(), enabledBackends.end(), sortByPriority);

CV_LOG_INFO(NULL, "VIDEOIO: Enabled backends(" << enabled << ", sorted by priority): " << dumpBackends());

}

// Collect the backends that can be used

inline std::vector<VideoBackendInfo> getAvailableBackends_CaptureByIndex() const

{

std::vector<VideoBackendInfo> result;

for (size_t i = 0; i < enabledBackends.size(); i++)

{

const VideoBackendInfo& info = enabledBackends[i];

if (info.mode & MODE_CAPTURE_BY_INDEX)

result.push_back(info);

}

return result;

}
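The registry constructor does three things: it assigns a default priority of 1000 - i * 10 by table position, lets `OPENCV_VIDEOIO_PRIORITY_<name>` override it (0 disables the backend), and sorts what is left. The sketch below models this with the standard library. `BackendInfo`, `buildEnabledBackends`, and the `overrides` map are hypothetical. The map stands in for the environment variables, and I assume `sortByPriority` (not shown in the excerpt) orders higher priorities first.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

struct BackendInfo {
    std::string name;
    int priority;
};

// builtinNames: backends in table order.
// overrides: stand-in for OPENCV_VIDEOIO_PRIORITY_<name>; 0 disables a backend.
std::vector<BackendInfo> buildEnabledBackends(
        const std::vector<std::string>& builtinNames,
        const std::map<std::string, int>& overrides) {
    std::vector<BackendInfo> enabled;
    for (std::size_t i = 0; i < builtinNames.size(); ++i) {
        int priority = 1000 - static_cast<int>(i) * 10;  // default by position
        const auto it = overrides.find(builtinNames[i]);
        if (it != overrides.end())
            priority = it->second;
        if (priority > 0)
            enabled.push_back({builtinNames[i], priority});
    }
    // Assumption: higher priority is tried first.
    std::stable_sort(enabled.begin(), enabled.end(),
                     [](const BackendInfo& a, const BackendInfo& b) {
                         return a.priority > b.priority;
                     });
    return enabled;
}
```

With the table order FFMPEG, GSTREAMER, V4L2 and no overrides, FFMPEG gets 1000 and is tried first, which matches the "Ordering guidelines" comment in the registry source. Setting FFMPEG's override to 0 removes it from the list entirely.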

Continuing from above, let's see how the capture object is obtained.

struct VideoBackendInfo {

VideoCaptureAPIs id;

BackendMode mode;

int priority; // 1000-<index*10> - default builtin priority

// 0 - disabled (OPENCV_VIDEOIO_PRIORITY_<name> = 0)

// >10000 - prioritized list (OPENCV_VIDEOIO_PRIORITY_LIST)

const char* name;

Ptr<IBackendFactory> backendFactory;

};

// The backends were already obtained earlier, here

const std::vector<VideoBackendInfo> backends = cv::videoio_registry::getAvailableBackends_CaptureByIndex();

for (size_t i = 0; i < backends.size(); i++)

{

const VideoBackendInfo& info = backends[i];

// Obtain the IBackend from its IBackendFactory

const Ptr<IBackend> backend = info.backendFactory->getBackend();

// For FFmpeg this ultimately calls:

//ffmpegCapture = cvCreateFileCaptureWithParams_FFMPEG(filename.c_str(), params);

icap = backend->createCapture(cameraNum, parameters);

if (icap->isOpened()) return true;

}

Next, let's see how the IBackendFactory is created:

// cap = CAP_FFMPEG

// name = "FFMPEG"

// mode = MODE_CAPTURE_BY_FILENAME | MODE_WRITER

//{

// cap, (BackendMode)(mode), 1000, name, createPluginBackendFactory(cap, name)

//},

// This is the FFmpeg entry declared earlier

Ptr<IBackendFactory> createPluginBackendFactory(VideoCaptureAPIs id, const char* baseName)

{

return makePtr<impl::PluginBackendFactory>(id, baseName); //.staticCast<IBackendFactory>();

}

// The factory itself

PluginBackendFactory(VideoCaptureAPIs id, const char* baseName) :

id_(id), baseName_(baseName),

initialized(false){

// nothing, plugins are loaded on demand

}

Ptr<IBackend> getBackend() const CV_OVERRIDE{

initBackend();

return backend.staticCast<IBackend>();

}

inline void initBackend() const{

if (!initialized){

const_cast<PluginBackendFactory*>(this)->initBackend_();

}

}

void initBackend_(){

AutoLock lock(getInitializationMutex());

loadPlugin();

...

}

void PluginBackendFactory::loadPlugin(){

// baseName_ is the "FFMPEG" name set earlier

for (const FileSystemPath_t& plugin : getPluginCandidates(baseName_))

{

// Load the shared library

auto lib = makePtr<cv::plugin::impl::DynamicLib>(plugin);

if (!lib->isLoaded())

continue;

// Wrap the plugin in a smart pointer

Ptr<PluginBackend> pluginBackend = makePtr<PluginBackend>(lib);

backend = pluginBackend;

return;

...

}

static

std::vector<FileSystemPath_t> getPluginCandidates(const std::string& baseName)

{

using namespace cv::utils;

using namespace cv::utils::fs;

const std::string baseName_l = toLowerCase(baseName);

const std::string baseName_u = toUpperCase(baseName);

const FileSystemPath_t baseName_l_fs = toFileSystemPath(baseName_l);

std::vector<FileSystemPath_t> paths;

const std::vector<std::string> paths_ = getConfigurationParameterPaths("OPENCV_VIDEOIO_PLUGIN_PATH", std::vector<std::string>());

if (paths_.size() != 0)

{

for (size_t i = 0; i < paths_.size(); i++)

{

paths.push_back(toFileSystemPath(paths_[i]));

}

}

else

{

FileSystemPath_t binaryLocation;

if (getBinLocation(binaryLocation))

{

binaryLocation = getParent(binaryLocation);

paths.push_back(binaryLocation);

}

}

const std::string default_expr = libraryPrefix() + "opencv_videoio_" + baseName_l + "*" + librarySuffix();

const std::string plugin_expr = getConfigurationParameterString((std::string("OPENCV_VIDEOIO_PLUGIN_") + baseName_u).c_str(), default_expr.c_str());

std::vector<FileSystemPath_t> results;

CV_LOG_INFO(NULL, "VideoIO plugin (" << baseName << "): glob is '" << plugin_expr << "', " << paths.size() << " location(s)");

for (const std::string& path : paths)

{

if (path.empty())

continue;

std::vector<std::string> candidates;

cv::glob(utils::fs::join(path, plugin_expr), candidates);

// Prefer candisates with higher versions

// TODO: implemented accurate versions-based comparator

std::sort(candidates.begin(), candidates.end(), std::greater<std::string>());

CV_LOG_INFO(NULL, " - " << path << ": " << candidates.size());

copy(candidates.begin(), candidates.end(), back_inserter(results));

}

CV_LOG_INFO(NULL, "Found " << results.size() << " plugin(s) for " << baseName);

return results;

}
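This function explains why copying libopencv_videoio_ffmpeg_shared_.so into the plugin path is enough: the default glob is built from the lower-cased backend name. The sketch below rebuilds that pattern and checks a file name against it. The `"lib"` prefix and `".so"` suffix are my assumption for what `libraryPrefix()` and `librarySuffix()` return on Linux, and `matchesSingleStar` is a deliberately minimal matcher of my own, not `cv::glob`.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>

std::string toLower(std::string s) {
    std::transform(s.begin(), s.end(), s.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return s;
}

// Default plugin glob: prefix + "opencv_videoio_" + lower-cased name + "*" + suffix.
// The prefix and suffix defaults are assumptions for a Linux build.
std::string defaultPluginGlob(const std::string& baseName,
                              const std::string& prefix = "lib",
                              const std::string& suffix = ".so") {
    return prefix + "opencv_videoio_" + toLower(baseName) + "*" + suffix;
}

// Minimal matcher for a pattern that contains at most one '*'.
bool matchesSingleStar(const std::string& pattern, const std::string& fileName) {
    const std::size_t star = pattern.find('*');
    if (star == std::string::npos)
        return pattern == fileName;
    const std::string head = pattern.substr(0, star);
    const std::string tail = pattern.substr(star + 1);
    return fileName.size() >= head.size() + tail.size()
        && fileName.compare(0, head.size(), head) == 0
        && fileName.compare(fileName.size() - tail.size(), tail.size(), tail) == 0;
}
```

Under these assumptions the pattern for "FFMPEG" is `libopencv_videoio_ffmpeg*.so`, so the shared plugin produced by the build script matches. As the listing shows, the pattern can be replaced through `OPENCV_VIDEOIO_PLUGIN_FFMPEG` and the search directories through `OPENCV_VIDEOIO_PLUGIN_PATH`.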

Next, let's see how the capture is created:

// The backends were already obtained earlier, here

const std::vector<VideoBackendInfo> backends = cv::videoio_registry::getAvailableBackends_CaptureByIndex();

for (size_t i = 0; i < backends.size(); i++)

{

const VideoBackendInfo& info = backends[i];

// Obtain the IBackend from its IBackendFactory

const Ptr<IBackend> backend = info.backendFactory->getBackend();

// For FFmpeg this ultimately calls:

//ffmpegCapture = cvCreateFileCaptureWithParams_FFMPEG(filename.c_str(), params);

icap = backend->createCapture(cameraNum, parameters); // the capture is created here

if (icap->isOpened()) return true;

}

Ptr<IVideoCapture> PluginBackend::createCapture(const std::string &filename, const VideoCaptureParameters& params) const

{

if (capture_api_)

return PluginCapture::create(capture_api_, filename, 0, params); //.staticCast<IVideoCapture>();

if (plugin_api_){

Ptr<IVideoCapture> cap = legacy::PluginCapture::create(plugin_api_, filename, 0); //.staticCast<IVideoCapture>();

return cap;

}

return Ptr<IVideoCapture>();

}

static

Ptr<PluginCapture> create(const OpenCV_VideoIO_Capture_Plugin_API* plugin_api,

const std::string &filename, int camera, const VideoCaptureParameters& params)

{

CV_Assert(plugin_api);

CV_Assert(plugin_api->v0.Capture_release);

CvPluginCapture capture = NULL;

if (plugin_api->api_header.api_version >= 1 && plugin_api->v1.Capture_open_with_params)

{

std::vector<int> vint_params = params.getIntVector();

int* c_params = vint_params.data();

unsigned n_params = (unsigned)(vint_params.size() / 2);

// The call goes through this plugin callback

if (CV_ERROR_OK == plugin_api->v1.Capture_open_with_params(

filename.empty() ? 0 : filename.c_str(), camera, c_params, n_params, &capture))

{

CV_Assert(capture);

return makePtr<PluginCapture>(plugin_api, capture);

}

}

else if (plugin_api->v0.Capture_open)

{

if (CV_ERROR_OK == plugin_api->v0.Capture_open(filename.empty() ? 0 : filename.c_str(), camera, &capture))

{

CV_Assert(capture);

Ptr<PluginCapture> cap = makePtr<PluginCapture>(plugin_api, capture);

if (cap && !params.empty())

{

applyParametersFallback(cap, params);

}

return cap;

}

}

return Ptr<PluginCapture>();

}

Which finally lands here:

static

CvCapture_FFMPEG* cvCreateFileCaptureWithParams_FFMPEG(const char* filename, const VideoCaptureParameters& params)

{

// FIXIT: remove unsafe malloc() approach

CvCapture_FFMPEG* capture = (CvCapture_FFMPEG*)malloc(sizeof(*capture));

if (!capture)

return 0;

capture->init();

if (capture->open(filename, params))

return capture;

capture->close();

free(capture);

return 0;

}

bool CvCapture_FFMPEG::open(const char *_filename, const VideoCaptureParameters &params) {

InternalFFMpegRegister::init();

AutoLock lock(_mutex);

unsigned i;

bool valid = false;

int nThreads = 0;

close();

if (!params.empty()) {

if (params.has(CAP_PROP_FORMAT)) {

int value = params.get<int>(CAP_PROP_FORMAT);

if (value == -1) {

CV_LOG_INFO(NULL, "VIDEOIO/FFMPEG: enabled demuxer only mode: '"

<< (_filename ? _filename : "<NULL>") << "'");

rawMode = true;

} else {

CV_LOG_ERROR(NULL,

"VIDEOIO/FFMPEG: CAP_PROP_FORMAT parameter value is invalid/unsupported: "

<< value);

return false;

}

}

if (params.has(CAP_PROP_HW_ACCELERATION)) {

va_type = params.get<VideoAccelerationType>(CAP_PROP_HW_ACCELERATION);

#if !USE_AV_HW_CODECS

if (va_type != VIDEO_ACCELERATION_NONE && va_type != VIDEO_ACCELERATION_ANY) {

CV_LOG_ERROR(NULL,

"VIDEOIO/FFMPEG: FFmpeg backend is build without acceleration support. "

"Can't handle CAP_PROP_HW_ACCELERATION parameter. Bailout");

return false;

}

#endif

}

if (params.has(CAP_PROP_HW_DEVICE)) {

hw_device = params.get<int>(CAP_PROP_HW_DEVICE);

if (va_type == VIDEO_ACCELERATION_NONE && hw_device != -1) {

CV_LOG_ERROR(NULL,

"VIDEOIO/FFMPEG: Invalid usage of CAP_PROP_HW_DEVICE without requested "

"H/W acceleration. Bailout");

return false;

}

if (va_type == VIDEO_ACCELERATION_ANY && hw_device != -1) {

CV_LOG_ERROR(NULL,

"VIDEOIO/FFMPEG: Invalid usage of CAP_PROP_HW_DEVICE with 'ANY' H/W "

"acceleration. Bailout");

return false;

}

}

if (params.has(CAP_PROP_HW_ACCELERATION_USE_OPENCL)) {

use_opencl = params.get<int>(CAP_PROP_HW_ACCELERATION_USE_OPENCL);

}

#if USE_AV_INTERRUPT_CALLBACK

if (params.has(CAP_PROP_OPEN_TIMEOUT_MSEC)) {

open_timeout = params.get<int>(CAP_PROP_OPEN_TIMEOUT_MSEC);

}

if (params.has(CAP_PROP_READ_TIMEOUT_MSEC)) {

read_timeout = params.get<int>(CAP_PROP_READ_TIMEOUT_MSEC);

}

#endif

if (params.has(CAP_PROP_N_THREADS)) {

nThreads = params.get<int>(CAP_PROP_N_THREADS);

}

if (params.warnUnusedParameters()) {

CV_LOG_ERROR(NULL,

"VIDEOIO/FFMPEG: unsupported parameters in .open(), see logger INFO channel "

"for details. Bailout");

return false;

}

}

#if USE_AV_INTERRUPT_CALLBACK

/* interrupt callback */

interrupt_metadata.timeout_after_ms = open_timeout;

get_monotonic_time(&interrupt_metadata.value);

ic = avformat_alloc_context();

ic->interrupt_callback.callback = _opencv_ffmpeg_interrupt_callback;

ic->interrupt_callback.opaque = &interrupt_metadata;

#endif

#ifndef NO_GETENV

char *options = getenv("OPENCV_FFMPEG_CAPTURE_OPTIONS");

if (options == NULL) {

#if LIBAVFORMAT_VERSION_MICRO >= 100 && LIBAVFORMAT_BUILD >= CALC_FFMPEG_VERSION(55, 48, 100)

av_dict_set(&dict, "rtsp_flags", "prefer_tcp", 0);

#else

av_dict_set(&dict, "rtsp_transport", "tcp", 0);

#endif

} else {

#if LIBAVUTIL_BUILD >= (LIBAVUTIL_VERSION_MICRO >= 100 ? CALC_FFMPEG_VERSION(52, 17, 100) \

: CALC_FFMPEG_VERSION(52, 7, 0))

av_dict_parse_string(&dict, options, ";", "|", 0);

#else

av_dict_set(&dict, "rtsp_transport", "tcp", 0);

#endif

}

#else

av_dict_set(&dict, "rtsp_transport", "tcp", 0);

#endif

CV_FFMPEG_FMT_CONST AVInputFormat *input_format = NULL;

AVDictionaryEntry * entry = av_dict_get(dict, "input_format", NULL, 0);

if (entry != 0) {

input_format = av_find_input_format(entry->value);

}

int err = avformat_open_input(&ic, _filename, input_format, &dict);

if (err < 0) {

CV_WARN("Error opening file");

CV_WARN(_filename);

goto exit_func;

}

err = avformat_find_stream_info(ic, NULL);

if (err < 0) {

CV_LOG_WARNING(NULL, "Unable to read codec parameters from stream ("

<< _opencv_ffmpeg_get_error_string(err) << ")");

goto exit_func;

}

for (i = 0; i < ic->nb_streams; i++) {

#ifndef CV_FFMPEG_CODECPAR

context = ic->streams[i]->codec;

AVCodecID codec_id = context->codec_id;

AVMediaType codec_type = context->codec_type;

#else

AVCodecParameters *par = ic->streams[i]->codecpar;

AVCodecID codec_id = par->codec_id;

AVMediaType codec_type = par->codec_type;

#endif

if (AVMEDIA_TYPE_VIDEO == codec_type && video_stream < 0) {

// backup encoder' width/height

#ifndef CV_FFMPEG_CODECPAR

int enc_width = context->width;

int enc_height = context->height;

#else

int enc_width = par->width;

int enc_height = par->height;

#endif

CV_LOG_DEBUG(NULL, "FFMPEG: stream["

<< i << "] is video stream with codecID=" << (int)codec_id

<< " width=" << enc_width << " height=" << enc_height);

#if !USE_AV_HW_CODECS

va_type = VIDEO_ACCELERATION_NONE;

#endif

// find and open decoder, try HW acceleration types specified in 'hw_acceleration'

// list (in order)

const AVCodec *codec = NULL;

err = -1;

#if USE_AV_HW_CODECS

HWAccelIterator accel_iter(va_type, false /*isEncoder*/, dict);

while (accel_iter.good()) {

#else

do {

#endif

#if USE_AV_HW_CODECS

accel_iter.parse_next();

AVHWDeviceType hw_type = accel_iter.hw_type();

if (hw_type != AV_HWDEVICE_TYPE_NONE) {

CV_LOG_DEBUG(NULL, "FFMPEG: trying to configure H/W acceleration: '"

<< accel_iter.hw_type_device_string() << "'");

AVPixelFormat hw_pix_fmt = AV_PIX_FMT_NONE;

codec = hw_find_codec(codec_id, hw_type, av_codec_is_decoder,

accel_iter.disabled_codecs().c_str(), &hw_pix_fmt);

if (codec) {

#ifdef CV_FFMPEG_CODECPAR

context = avcodec_alloc_context3(codec);

#endif

CV_Assert(context);

context->get_format = avcodec_default_get_format;

if (context->hw_device_ctx) {

av_buffer_unref(&context->hw_device_ctx);

}

if (hw_pix_fmt != AV_PIX_FMT_NONE)

context->get_format =

hw_get_format_callback; // set callback to select HW pixel

// format, not SW format

context->hw_device_ctx = hw_create_device(

hw_type, hw_device, accel_iter.device_subname(), use_opencl != 0);

if (!context->hw_device_ctx) {

context->get_format = avcodec_default_get_format;

CV_LOG_DEBUG(NULL, "FFMPEG: ... can't create H/W device: '"

<< accel_iter.hw_type_device_string()

<< "'");

codec = NULL;

}

}

} else if (hw_type == AV_HWDEVICE_TYPE_NONE)

#endif // USE_AV_HW_CODECS

{

AVDictionaryEntry *video_codec_param =

av_dict_get(dict, "video_codec", NULL, 0);

if (video_codec_param == NULL) {

codec = avcodec_find_decoder(codec_id);

if (!codec) {

CV_LOG_ERROR(

NULL, "Could not find decoder for codec_id=" << (int)codec_id);

}

} else {

CV_LOG_DEBUG(NULL,

"FFMPEG: Using video_codec='" << video_codec_param->value << "'");

codec = avcodec_find_decoder_by_name(video_codec_param->value);

if (!codec) {

CV_LOG_ERROR(NULL,

"Could not find decoder '" << video_codec_param->value << "'");

}

}

if (codec) {

#ifdef CV_FFMPEG_CODECPAR

context = avcodec_alloc_context3(codec);

#endif

CV_Assert(context);

}

}

if (!codec) {

#ifdef CV_FFMPEG_CODECPAR

avcodec_free_context(&context);

#endif

continue;

}

context->thread_count = nThreads;

fill_codec_context(context, dict);

#ifdef CV_FFMPEG_CODECPAR

avcodec_parameters_to_context(context, par);

#endif

err = avcodec_open2(context, codec, NULL);

if (err >= 0) {

#if USE_AV_HW_CODECS

va_type = hw_type_to_va_type(hw_type);

if (hw_type != AV_HWDEVICE_TYPE_NONE && hw_device < 0)

hw_device = 0;

#endif

break;

} else {

CV_LOG_ERROR(

NULL, "Could not open codec " << codec->name << ", error: " << err);

}

#if USE_AV_HW_CODECS

} // while (accel_iter.good())

#else

} while (0);

#endif

if (err < 0) {

CV_LOG_ERROR(NULL, "VIDEOIO/FFMPEG: Failed to initialize VideoCapture");

goto exit_func;

}

// checking width/height (since decoder can sometimes alter it, eg. vp6f)

if (enc_width && (context->width != enc_width))

context->width = enc_width;

if (enc_height && (context->height != enc_height))

context->height = enc_height;

video_stream = i;

video_st = ic->streams[i];

#if LIBAVCODEC_BUILD >= (LIBAVCODEC_VERSION_MICRO >= 100 ? CALC_FFMPEG_VERSION(55, 45, 101) \

: CALC_FFMPEG_VERSION(55, 28, 1))

picture = av_frame_alloc();

#else

picture = avcodec_alloc_frame();

#endif

frame.width = context->width;

frame.height = context->height;

frame.cn = 3;

frame.step = 0;

frame.data = NULL;

get_rotation_angle();

break;

}

}

if (video_stream >= 0)

valid = true;

exit_func:

#if USE_AV_INTERRUPT_CALLBACK

// deactivate interrupt callback

interrupt_metadata.timeout_after_ms = 0;

#endif

if (!valid)

close();

return valid;

}

Below is the definition of the apiPreference values. Note that FFmpeg is among them.

/** Select preferred API for a capture object.

To be used in the VideoCapture::VideoCapture() constructor or VideoCapture::open()

@note Backends are available only if they have been built with your OpenCV binaries.

See @ref videoio_overview for more information.

*/

enum VideoCaptureAPIs {

CAP_ANY = 0, //!< Auto detect == 0

CAP_VFW = 200, //!< Video For Windows (obsolete, removed)

CAP_V4L = 200, //!< V4L/V4L2 capturing support

CAP_V4L2 = CAP_V4L, //!< Same as CAP_V4L

CAP_FIREWIRE = 300, //!< IEEE 1394 drivers

CAP_FIREWARE = CAP_FIREWIRE, //!< Same value as CAP_FIREWIRE

CAP_IEEE1394 = CAP_FIREWIRE, //!< Same value as CAP_FIREWIRE

CAP_DC1394 = CAP_FIREWIRE, //!< Same value as CAP_FIREWIRE

CAP_CMU1394 = CAP_FIREWIRE, //!< Same value as CAP_FIREWIRE

CAP_QT = 500, //!< QuickTime (obsolete, removed)

CAP_UNICAP = 600, //!< Unicap drivers (obsolete, removed)

CAP_DSHOW = 700, //!< DirectShow (via videoInput)

CAP_PVAPI = 800, //!< PvAPI, Prosilica GigE SDK

CAP_OPENNI = 900, //!< OpenNI (for Kinect)

CAP_OPENNI_ASUS = 910, //!< OpenNI (for Asus Xtion)

CAP_ANDROID = 1000, //!< Android - not used

CAP_XIAPI = 1100, //!< XIMEA Camera API

CAP_AVFOUNDATION = 1200, //!< AVFoundation framework for iOS (OS X Lion will have the same API)

CAP_GIGANETIX = 1300, //!< Smartek Giganetix GigEVisionSDK

CAP_MSMF = 1400, //!< Microsoft Media Foundation (via videoInput)

CAP_WINRT = 1410, //!< Microsoft Windows Runtime using Media Foundation

CAP_INTELPERC = 1500, //!< RealSense (former Intel Perceptual Computing SDK)

CAP_REALSENSE = 1500, //!< Synonym for CAP_INTELPERC

CAP_OPENNI2 = 1600, //!< OpenNI2 (for Kinect)

CAP_OPENNI2_ASUS = 1610, //!< OpenNI2 (for Asus Xtion and Occipital Structure sensors)

CAP_OPENNI2_ASTRA= 1620, //!< OpenNI2 (for Orbbec Astra)

CAP_GPHOTO2 = 1700, //!< gPhoto2 connection

CAP_GSTREAMER = 1800, //!< GStreamer

CAP_FFMPEG = 1900, //!< Open and record video file or stream using the FFMPEG library

CAP_IMAGES = 2000, //!< OpenCV Image Sequence (e.g. img_%02d.jpg)

CAP_ARAVIS = 2100, //!< Aravis SDK

CAP_OPENCV_MJPEG = 2200, //!< Built-in OpenCV MotionJPEG codec

CAP_INTEL_MFX = 2300, //!< Intel MediaSDK

CAP_XINE = 2400, //!< XINE engine (Linux)

CAP_UEYE = 2500, //!< uEye Camera API

CAP_OBSENSOR = 2600, //!< For Orbbec 3D-Sensor device/module (Astra+, Femto)

};
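To confirm that a capture really went through FFmpeg, the value reported by `CAP_PROP_BACKEND` can be compared against this enum. A minimal sketch follows, written without OpenCV headers so it stands alone. The enum values are copied from the listing above, while `BackendId`, `backendLabel` and `isFFmpegBackend` are hypothetical names used only for illustration.

```cpp
#include <string>

// Values copied from the VideoCaptureAPIs listing above; redeclared here so
// the sketch compiles without OpenCV.
enum BackendId {
    kCapAny       = 0,
    kCapV4L       = 200,
    kCapGStreamer = 1800,
    kCapFFmpeg    = 1900,
    kCapImages    = 2000
};

// Hypothetical helper: turn the numeric value reported for CAP_PROP_BACKEND
// into a readable label. Only a few backends are covered.
inline std::string backendLabel(double propValue)
{
    switch (static_cast<int>(propValue)) {
        case kCapAny:       return "ANY";
        case kCapV4L:       return "V4L";
        case kCapGStreamer: return "GSTREAMER";
        case kCapFFmpeg:    return "FFMPEG";
        case kCapImages:    return "IMAGES";
        default:            return "OTHER";
    }
}

// True when the reported backend is the FFmpeg one.
inline bool isFFmpegBackend(double propValue)
{
    return static_cast<int>(propValue) == kCapFFmpeg;
}
```

With a real capture object the argument would be `cap.get(cv::CAP_PROP_BACKEND)`. `cv::VideoCapture::getBackendName()` reports the same information as a string.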

/** Reading / writing properties involves many layers. Some unexpected results might happen along this chain.

Effective behaviour depends on device hardware, driver and API backend.

@sa videoio_flags_others, VideoCapture::get(), VideoCapture::set()

*/

enum VideoCaptureProperties {

CAP_PROP_POS_MSEC =0, //!< Current position of the video file in milliseconds.

CAP_PROP_POS_FRAMES =1, //!< 0-based index of the frame to be decoded/captured next.

CAP_PROP_POS_AVI_RATIO =2, //!< Relative position of the video file: 0=start of the film, 1=end of the film.

CAP_PROP_FRAME_WIDTH =3, //!< Width of the frames in the video stream.

CAP_PROP_FRAME_HEIGHT =4, //!< Height of the frames in the video stream.

CAP_PROP_FPS =5, //!< Frame rate.

CAP_PROP_FOURCC =6, //!< 4-character code of codec. see VideoWriter::fourcc .

CAP_PROP_FRAME_COUNT =7, //!< Number of frames in the video file.

CAP_PROP_FORMAT =8, //!< Format of the %Mat objects (see Mat::type()) returned by VideoCapture::retrieve().

//!< Set value -1 to fetch undecoded RAW video streams (as Mat 8UC1).

CAP_PROP_MODE =9, //!< Backend-specific value indicating the current capture mode.

CAP_PROP_BRIGHTNESS =10, //!< Brightness of the image (only for those cameras that support).

CAP_PROP_CONTRAST =11, //!< Contrast of the image (only for cameras).

CAP_PROP_SATURATION =12, //!< Saturation of the image (only for cameras).

CAP_PROP_HUE =13, //!< Hue of the image (only for cameras).

CAP_PROP_GAIN =14, //!< Gain of the image (only for those cameras that support).

CAP_PROP_EXPOSURE =15, //!< Exposure (only for those cameras that support).

CAP_PROP_CONVERT_RGB =16, //!< Boolean flags indicating whether images should be converted to RGB. <br/>

//!< *GStreamer note*: The flag is ignored in case if custom pipeline is used. It's user responsibility to interpret pipeline output.

CAP_PROP_WHITE_BALANCE_BLUE_U =17, //!< Currently unsupported.

CAP_PROP_RECTIFICATION =18, //!< Rectification flag for stereo cameras (note: only supported by DC1394 v 2.x backend currently).

CAP_PROP_MONOCHROME =19,

CAP_PROP_SHARPNESS =20,

CAP_PROP_AUTO_EXPOSURE =21, //!< DC1394: exposure control done by camera, user can adjust reference level using this feature.

CAP_PROP_GAMMA =22,

CAP_PROP_TEMPERATURE =23,

CAP_PROP_TRIGGER =24,

CAP_PROP_TRIGGER_DELAY =25,

CAP_PROP_WHITE_BALANCE_RED_V =26,

CAP_PROP_ZOOM =27,

CAP_PROP_FOCUS =28,

CAP_PROP_GUID =29,

CAP_PROP_ISO_SPEED =30,

CAP_PROP_BACKLIGHT =32,

CAP_PROP_PAN =33,

CAP_PROP_TILT =34,

CAP_PROP_ROLL =35,

CAP_PROP_IRIS =36,

CAP_PROP_SETTINGS =37, //!< Pop up video/camera filter dialog (note: only supported by DSHOW backend currently. The property value is ignored)

CAP_PROP_BUFFERSIZE =38,

CAP_PROP_AUTOFOCUS =39,

CAP_PROP_SAR_NUM =40, //!< Sample aspect ratio: num/den (num)

CAP_PROP_SAR_DEN =41, //!< Sample aspect ratio: num/den (den)

CAP_PROP_BACKEND =42, //!< Current backend (enum VideoCaptureAPIs). Read-only property

CAP_PROP_CHANNEL =43, //!< Video input or Channel Number (only for those cameras that support)

CAP_PROP_AUTO_WB =44, //!< enable/ disable auto white-balance

CAP_PROP_WB_TEMPERATURE=45, //!< white-balance color temperature

CAP_PROP_CODEC_PIXEL_FORMAT =46, //!< (read-only) codec's pixel format. 4-character code - see VideoWriter::fourcc . Subset of [AV_PIX_FMT_*](https://github.com/FFmpeg/FFmpeg/blob/master/libavcodec/raw.c) or -1 if unknown

CAP_PROP_BITRATE =47, //!< (read-only) Video bitrate in kbits/s

CAP_PROP_ORIENTATION_META=48, //!< (read-only) Frame rotation defined by stream meta (applicable for FFmpeg back-end only)

CAP_PROP_ORIENTATION_AUTO=49, //!< if true - rotates output frames of CvCapture considering video file's metadata (applicable for FFmpeg back-end only) (https://github.com/opencv/opencv/issues/15499)

CAP_PROP_HW_ACCELERATION=50, //!< (**open-only**) Hardware acceleration type (see #VideoAccelerationType). Setting supported only via `params` parameter in cv::VideoCapture constructor / .open() method. Default value is backend-specific.

CAP_PROP_HW_DEVICE =51, //!< (**open-only**) Hardware device index (select GPU if multiple available). Device enumeration is acceleration type specific.

CAP_PROP_HW_ACCELERATION_USE_OPENCL=52, //!< (**open-only**) If non-zero, create new OpenCL context and bind it to current thread. The OpenCL context created with Video Acceleration context attached it (if not attached yet) for optimized GPU data copy between HW accelerated decoder and cv::UMat.

CAP_PROP_OPEN_TIMEOUT_MSEC=53, //!< (**open-only**) timeout in milliseconds for opening a video capture (applicable for FFmpeg back-end only)

CAP_PROP_READ_TIMEOUT_MSEC=54, //!< (**open-only**) timeout in milliseconds for reading from a video capture (applicable for FFmpeg back-end only)

CAP_PROP_STREAM_OPEN_TIME_USEC =55, //!< (read-only) time in microseconds since Jan 1 1970 when stream was opened. Applicable for FFmpeg backend only. Useful for RTSP and other live streams

CAP_PROP_VIDEO_TOTAL_CHANNELS = 56, //!< (read-only) Number of video channels

CAP_PROP_VIDEO_STREAM = 57, //!< (**open-only**) Specify video stream, 0-based index. Use -1 to disable video stream from file or IP cameras. Default value is 0.

CAP_PROP_AUDIO_STREAM = 58, //!< (**open-only**) Specify stream in multi-language media files, -1 - disable audio processing or microphone. Default value is -1.

CAP_PROP_AUDIO_POS = 59, //!< (read-only) Audio position is measured in samples. Accurate audio sample timestamp of previous grabbed fragment. See CAP_PROP_AUDIO_SAMPLES_PER_SECOND and CAP_PROP_AUDIO_SHIFT_NSEC.

CAP_PROP_AUDIO_SHIFT_NSEC = 60, //!< (read-only) Contains the time difference between the start of the audio stream and the video stream in nanoseconds. Positive value means that audio is started after the first video frame. Negative value means that audio is started before the first video frame.

CAP_PROP_AUDIO_DATA_DEPTH = 61, //!< (open, read) Alternative definition to bits-per-sample, but with clear handling of 32F / 32S

CAP_PROP_AUDIO_SAMPLES_PER_SECOND = 62, //!< (open, read) determined from file/codec input. If not specified, then selected audio sample rate is 44100

CAP_PROP_AUDIO_BASE_INDEX = 63, //!< (read-only) Index of the first audio channel for .retrieve() calls. That audio channel number continues enumeration after video channels.

CAP_PROP_AUDIO_TOTAL_CHANNELS = 64, //!< (read-only) Number of audio channels in the selected audio stream (mono, stereo, etc)

CAP_PROP_AUDIO_TOTAL_STREAMS = 65, //!< (read-only) Number of audio streams.

CAP_PROP_AUDIO_SYNCHRONIZE = 66, //!< (open, read) Enables audio synchronization.

CAP_PROP_LRF_HAS_KEY_FRAME = 67, //!< FFmpeg back-end only - Indicates whether the Last Raw Frame (LRF), output from VideoCapture::read() when VideoCapture is initialized with VideoCapture::open(CAP_FFMPEG, {CAP_PROP_FORMAT, -1}) or VideoCapture::set(CAP_PROP_FORMAT,-1) is called before the first call to VideoCapture::read(), contains encoded data for a key frame.

CAP_PROP_CODEC_EXTRADATA_INDEX = 68, //!< Positive index indicates that returning extra data is supported by the video back end. This can be retrieved as cap.retrieve(data, <returned index>). E.g. When reading from a h264 encoded RTSP stream, the FFmpeg backend could return the SPS and/or PPS if available (if sent in reply to a DESCRIBE request), from calls to cap.retrieve(data, <returned index>).

CAP_PROP_FRAME_TYPE = 69, //!< (read-only) FFmpeg back-end only - Frame type ascii code (73 = 'I', 80 = 'P', 66 = 'B' or 63 = '?' if unknown) of the most recently read frame.

CAP_PROP_N_THREADS = 70, //!< (**open-only**) Set the maximum number of threads to use. Use 0 to use as many threads as CPU cores (applicable for FFmpeg back-end only).

#ifndef CV_DOXYGEN

CV__CAP_PROP_LATEST

#endif

};
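Several of these properties come back from `VideoCapture::get()` as a `double` that encodes something else: `CAP_PROP_FOURCC` packs four characters into an integer, and `CAP_PROP_FRAME_TYPE` is an ASCII code. A minimal sketch of decoding both follows, written without OpenCV headers so it stands alone. The helper names are hypothetical, and the byte order assumes the packing used by `VideoWriter::fourcc` (first character in the lowest byte).

```cpp
#include <string>

// Pack four characters into one integer, first character in the lowest byte.
// Unsigned arithmetic avoids shifting into the sign bit.
inline int makeFourcc(char c1, char c2, char c3, char c4)
{
    const unsigned int v = (c1 & 255u)
                         | ((c2 & 255u) << 8)
                         | ((c3 & 255u) << 16)
                         | ((c4 & 255u) << 24);
    return static_cast<int>(v);
}

// Unpack the value reported for CAP_PROP_FOURCC into its four characters.
inline std::string fourccToString(double propValue)
{
    const unsigned int code =
        static_cast<unsigned int>(static_cast<int>(propValue));
    std::string s(4, ' ');
    for (int i = 0; i < 4; ++i)
        s[i] = static_cast<char>((code >> (8 * i)) & 0xFF);
    return s;
}

// Map the ASCII code reported for CAP_PROP_FRAME_TYPE to a frame-type letter.
// The codes come from the comment in the listing above.
inline char frameTypeLetter(double propValue)
{
    switch (static_cast<int>(propValue)) {
        case 73: return 'I';
        case 80: return 'P';
        case 66: return 'B';
        default: return '?';  // 63, or anything unexpected
    }
}
```

With a real capture object, `fourccToString(cap.get(cv::CAP_PROP_FOURCC))` gives a quick way to see which codec the FFmpeg backend detected.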